How the host connects to the NIC

1. Linux PCI DMA

These platforms support the PCI DMA interface, but it is mostly a false front. There are no mapping registers in the bus interface, so scatterlists cannot be combined and virtual addresses cannot be used. There is no bounce buffer support, so mapping of high-memory addresses cannot be done. The mapping functions on the ARM architecture can sleep, which is not the case for the other platforms.

Summary: PCI DMA mapping is effectively unsupported on these platforms (no mapping registers, no bounce buffers).
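To make the two limitations concrete, here is a toy C model contrasting a bus with mapping registers against one without. Everything is hypothetical (`struct seg`, `struct platform`, `model_map_sg`); no kernel API is used. It assumes a 32-bit device DMA mask and a mapping window starting at an arbitrary `iova_base`. Without mapping registers the bus address is just the physical address, so each scatterlist entry stays a separate segment, and memory above the mask cannot be mapped because there is no bounce buffer to copy through.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* One physically contiguous chunk of a scatterlist (hypothetical type). */
struct seg {
    uint64_t phys; /* CPU physical address */
    size_t len;    /* length in bytes, assumed > 0 */
};

/* Hypothetical description of a platform's bus interface. */
struct platform {
    bool has_mapping_regs; /* IOMMU-style mapping registers present? */
    uint64_t dma_mask;     /* highest bus address the device can reach */
    uint64_t iova_base;    /* start of the mapping window (mapping regs only) */
};

/*
 * Map n scatterlist entries, writing each entry's bus address to bus[].
 * Returns the number of segments the device must be programmed with,
 * or -1 if an entry is unreachable and cannot be mapped.
 */
static int model_map_sg(const struct platform *p, const struct seg *sg,
                        size_t n, uint64_t *bus)
{
    uint64_t next = p->iova_base;

    for (size_t i = 0; i < n; i++) {
        if (p->has_mapping_regs) {
            /* Mapping registers make scattered chunks appear back to back. */
            bus[i] = next;
            next += sg[i].len;
        } else {
            /* No translation: bus address == physical address. With no
             * bounce buffer, memory above the mask is simply unmappable. */
            if (sg[i].phys + sg[i].len - 1 > p->dma_mask)
                return -1;
            bus[i] = sg[i].phys;
        }
    }
    if (n == 0)
        return 0;
    /* One combined range with mapping registers, else one per entry. */
    return p->has_mapping_regs ? 1 : (int)n;
}
```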

2. In FlexTOE

Our x86 and BlueField ports currently only support applications running on the same platform as FlexTOE. Hence, context queues always use shared memory rather than DMA. The corresponding DMA pipeline stage executes the payload copies in software using shared memory, rather than leveraging a DMA engine.

Summary: context queues use shared memory instead of DMA.
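A minimal sketch of a shared-memory context queue with a software payload copy, assuming a single producer and a single consumer that map the same memory. This is not FlexTOE's code; `struct ctx_queue`, `ctxq_push`, `ctxq_pop`, and the slot sizes are hypothetical. The `memcpy` stands in for the transfer a DMA engine would otherwise perform, and the release/acquire atomics on `head` and `tail` make the copied payload visible before the index update that publishes it.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define CTXQ_SLOTS 8       /* must be a power of two */
#define CTXQ_SLOT_BYTES 64 /* max payload per entry */

/* Hypothetical context-queue layout living in memory shared by both sides. */
struct ctx_queue {
    _Atomic uint32_t head; /* next slot the consumer will read */
    _Atomic uint32_t tail; /* next slot the producer will write */
    uint32_t len[CTXQ_SLOTS];
    unsigned char data[CTXQ_SLOTS][CTXQ_SLOT_BYTES];
};

static void ctxq_init(struct ctx_queue *q)
{
    atomic_init(&q->head, 0);
    atomic_init(&q->tail, 0);
}

/* Producer: copy the payload in software, then publish it. */
static bool ctxq_push(struct ctx_queue *q, const void *buf, uint32_t n)
{
    uint32_t tail = atomic_load_explicit(&q->tail, memory_order_relaxed);
    uint32_t head = atomic_load_explicit(&q->head, memory_order_acquire);

    if (tail - head == CTXQ_SLOTS || n > CTXQ_SLOT_BYTES)
        return false; /* queue full or payload too large */
    uint32_t slot = tail & (CTXQ_SLOTS - 1);
    memcpy(q->data[slot], buf, n); /* the "DMA" is a plain memcpy */
    q->len[slot] = n;
    /* Release: the payload is visible before the new tail is. */
    atomic_store_explicit(&q->tail, tail + 1, memory_order_release);
    return true;
}

/* Consumer: returns payload length, -1 if empty, -2 if cap is too small. */
static int ctxq_pop(struct ctx_queue *q, void *buf, uint32_t cap)
{
    uint32_t head = atomic_load_explicit(&q->head, memory_order_relaxed);
    uint32_t tail = atomic_load_explicit(&q->tail, memory_order_acquire);

    if (head == tail)
        return -1;
    uint32_t slot = head & (CTXQ_SLOTS - 1);
    uint32_t n = q->len[slot];
    if (n > cap)
        return -2;
    memcpy(buf, q->data[slot], n);
    /* Release: the slot may be reused only after we finished reading it. */
    atomic_store_explicit(&q->head, head + 1, memory_order_release);
    return (int)n;
}
```

Publishing `tail` only after the copy is what keeps the consumer from ever reading a half-written slot.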

3. BlueField buffer sharing

The dma-buf subsystem provides the framework for sharing buffers for hardware (DMA) access across multiple device drivers and subsystems, and for synchronizing asynchronous hardware access.

This is used, for example, by drm "prime" multi-GPU support, but is of course not limited to GPU use cases.

The three main components of this are: (1) dma-buf, representing a sg_table and exposed to userspace as a file descriptor to allow passing between devices, (2) fence, which provides a mechanism to signal when one device has finished access, and (3) reservation, which manages the shared or exclusive fence(s) associated with the buffer.

Summary: a dma-buf is exposed to userspace as a file descriptor.
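The fence and reservation roles can be illustrated with a toy model. The names are hypothetical and this is not the kernel's `dma_resv` API; it assumes the exclusive/shared scheme described above, where the exclusive fence belongs to the last writer and shared fences belong to readers. A reader then waits only for the exclusive fence, while a writer waits for all of them.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define TOY_MAX_SHARED 4

/* Hypothetical fence: signaled once a device has finished its access. */
struct toy_fence {
    bool signaled;
};

/* Hypothetical reservation object attached to one shared buffer. */
struct toy_resv {
    struct toy_fence *excl;                   /* last writer, or NULL */
    struct toy_fence *shared[TOY_MAX_SHARED]; /* current readers */
    size_t n_shared;
};

static void toy_fence_signal(struct toy_fence *f) { f->signaled = true; }

/* Readers only have to wait for the last writer. */
static bool toy_can_read(const struct toy_resv *r)
{
    return r->excl == NULL || r->excl->signaled;
}

/* Writers have to wait for the last writer and for every reader. */
static bool toy_can_write(const struct toy_resv *r)
{
    if (!toy_can_read(r))
        return false;
    for (size_t i = 0; i < r->n_shared; i++)
        if (!r->shared[i]->signaled)
            return false;
    return true;
}

/* A new reader adds a shared fence. */
static bool toy_add_shared(struct toy_resv *r, struct toy_fence *f)
{
    if (r->n_shared == TOY_MAX_SHARED)
        return false;
    r->shared[r->n_shared++] = f;
    return true;
}

/* A new writer installs the exclusive fence. Older reader fences are
 * dropped because the writer already waited for them (the caller is
 * expected to have checked toy_can_write first). */
static void toy_set_excl(struct toy_resv *r, struct toy_fence *f)
{
    r->excl = f;
    r->n_shared = 0;
}
```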

4. BlueField DMA engine controller

Linux kernel with support for Mellanox BlueField SoCs

Linux kernel v5.4

Current: Mellanox BlueField OFED kernel 5.2, Fastswap 4.11, Leap 4.4.125

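- client: a driver that requests a DMA channel and submits transfers to it
- provider: the DMA controller driver that registers channels with the dmaengine framework
- async-tx-api: an offload API for bulk memory operations (memcpy, xor, etc.) built on dmaengine channels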

https://github.com/Mellanox/bluefield-linux/blob/master/Documentation/crypto/async-tx-api.txt
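The async_tx document describes clients whose operations are offloaded to a provider's channel when one is available and otherwise performed synchronously in software, with the completion callback run in both cases. A toy model of that fallback follows; all `toy_*` names are hypothetical, and the real entry points such as `async_memcpy()` take pages, offsets, and a `struct async_submit_ctl` instead.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define TOY_CHAN_DEPTH 8

typedef void (*toy_done_fn)(void *arg);

/* Hypothetical descriptor for one queued copy. */
struct toy_desc {
    void *dst;
    const void *src;
    size_t len;
    toy_done_fn cb;
    void *arg;
};

/* Hypothetical stand-in for a provider's DMA channel. */
struct toy_chan {
    size_t n;
    struct toy_desc q[TOY_CHAN_DEPTH];
};

/* Provider side: the "engine" runs queued copies, then completes them. */
static void toy_issue_pending(struct toy_chan *ch)
{
    for (size_t i = 0; i < ch->n; i++) {
        struct toy_desc *d = &ch->q[i];
        memcpy(d->dst, d->src, d->len);
        if (d->cb)
            d->cb(d->arg);
    }
    ch->n = 0;
}

/* Client side, in the spirit of async_memcpy(): offload when a channel
 * exists, otherwise copy synchronously and complete at once.
 * Returns true if queued, false if it ran synchronously. */
static bool toy_async_memcpy(struct toy_chan *ch, void *dst, const void *src,
                             size_t len, toy_done_fn cb, void *arg)
{
    if (ch == NULL) {
        memcpy(dst, src, len);
        if (cb)
            cb(arg);
        return false;
    }
    if (ch->n == TOY_CHAN_DEPTH)
        toy_issue_pending(ch); /* simplification: drain a full channel */
    ch->q[ch->n++] = (struct toy_desc){ dst, src, len, cb, arg };
    return true;
}

/* Example callback: counts completions. */
static void toy_count_done(void *arg) { ++*(int *)arg; }
```

The client code is identical in both paths; only completion timing differs, which is the point of the fallback design.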

5. InfiniBand

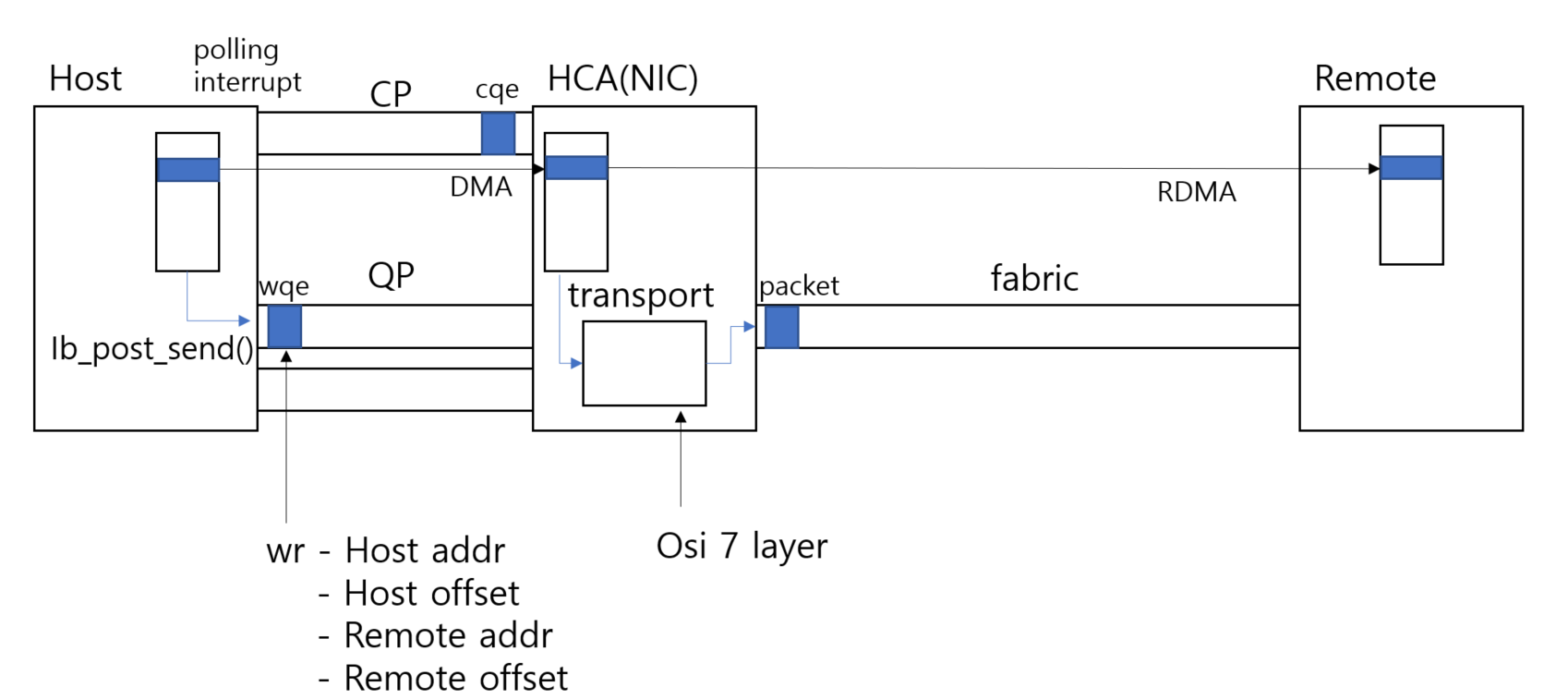

Structure

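- Host: the user (consumer)
- CA: Channel Adapter, i.e. the NIC (HCA: Host Channel Adapter; TCA: Target Channel Adapter)
- QP: Queue Pair (a send queue and a receive queue)
- CQ: Completion Queue
- Fabric: the InfiniBand network
- Transport: the transport layer (as in the OSI 7-layer model)
- Switch: forwards packets from one link to another
- WR: Work Request
- WQE: Work Queue Element (a WR as placed on a QP)
- CQE: Completion Queue Element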

Operation sequence

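- ib_post_send()
- WR is created
- WQE is passed to the HCA
- DMA (the HCA fetches the data from host memory)
- Transport-layer processing
- Packet is sent to the fabric
- RDMA
- CQE is generated in the CQ
- Host detects completion by polling the CQ or via an interrupt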
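The operation sequence can be simulated end to end in a few lines. This is a hypothetical single-threaded model, not the verbs API: `toy_post_send` plays the role of `ib_post_send`, `toy_hca_process` collapses the HCA's DMA, transport, fabric, and RDMA steps into one `memcpy` into a remote buffer (an RDMA-write-like operation), and `toy_poll_cq` is the polling path.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define TOY_QDEPTH 16 /* power of two, so unsigned wraparound is safe */

/* Hypothetical, heavily simplified types; not the verbs API. */
struct toy_wr {
    uint64_t wr_id;    /* returned unchanged in the CQE */
    const void *local; /* source buffer in host memory */
    void *remote;      /* destination buffer on the remote node */
    size_t len;
};

struct toy_cqe {
    uint64_t wr_id;
    int status; /* 0 = success */
};

struct toy_cq {
    struct toy_cqe e[TOY_QDEPTH];
    unsigned head, tail;
};

struct toy_qp {
    struct toy_wr sq[TOY_QDEPTH]; /* send queue of WQEs */
    unsigned head, tail;
    struct toy_cq *send_cq;
};

/* Post a WR; it is stored on the send queue as a WQE. */
static int toy_post_send(struct toy_qp *qp, const struct toy_wr *wr)
{
    if (qp->tail - qp->head == TOY_QDEPTH)
        return -1; /* send queue full */
    qp->sq[qp->tail++ % TOY_QDEPTH] = *wr;
    return 0;
}

/* HCA: fetch each WQE, move the payload (DMA, transport, fabric and the
 * remote write collapsed into one memcpy), then write a CQE. */
static void toy_hca_process(struct toy_qp *qp)
{
    struct toy_cq *cq = qp->send_cq;

    while (qp->head != qp->tail && cq->tail - cq->head != TOY_QDEPTH) {
        const struct toy_wr *w = &qp->sq[qp->head++ % TOY_QDEPTH];
        memcpy(w->remote, w->local, w->len);
        cq->e[cq->tail++ % TOY_QDEPTH] = (struct toy_cqe){ w->wr_id, 0 };
    }
}

/* Host: poll up to max completions (the alternative is an interrupt). */
static int toy_poll_cq(struct toy_cq *cq, int max, struct toy_cqe *out)
{
    int n = 0;
    while (n < max && cq->head != cq->tail)
        out[n++] = cq->e[cq->head++ % TOY_QDEPTH];
    return n;
}
```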

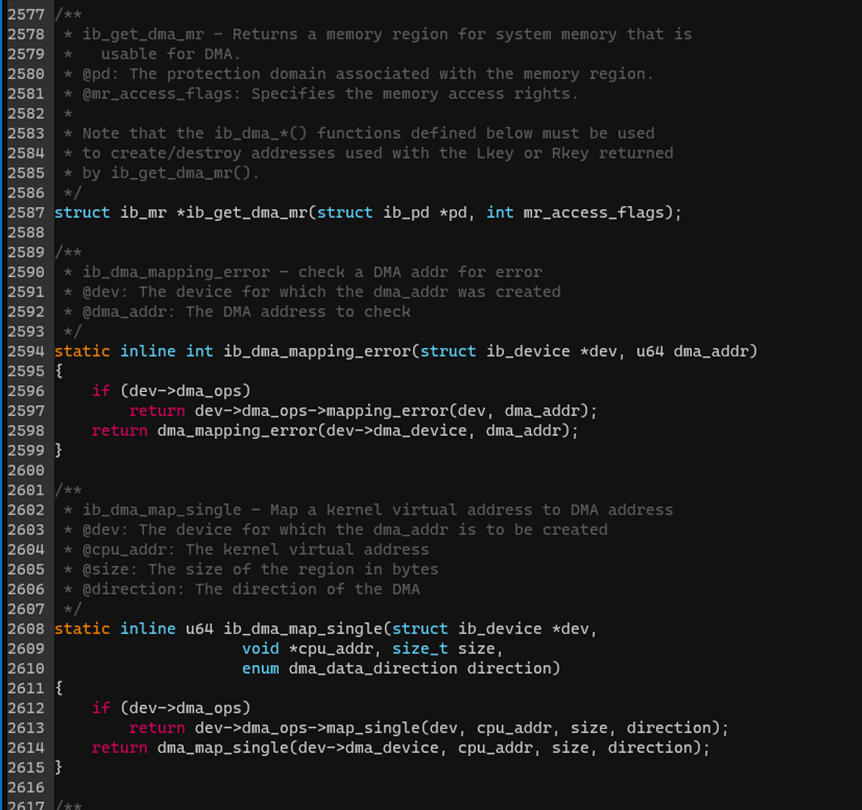

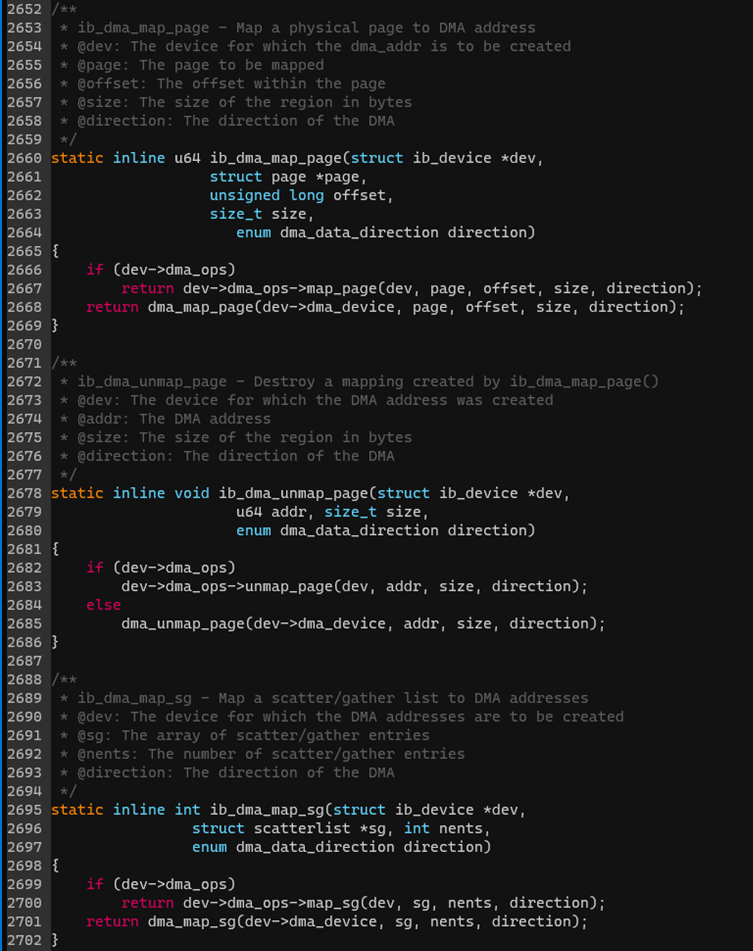

DMA-related methods

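- ib_sg_dma_address: DMA address of a scatterlist entry
- ib_sg_dma_len: DMA length of a scatterlist entry
- ib_dma_sync_single_for_cpu: give a streaming DMA buffer back to the CPU before the CPU accesses it
- ib_dma_sync_single_for_device: give the buffer back to the device once the CPU is done with it
- ib_dma_alloc_coherent: allocate coherent (consistent) DMA memory
- ib_dma_free_coherent: free memory obtained from ib_dma_alloc_coherent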
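The two sync calls decide who owns a streaming DMA buffer at any moment, while coherent memory needs no syncing. The following hypothetical model captures only that ownership rule; the real functions also take a device, a DMA address, a size, and a direction, and may perform cache maintenance, none of which is modeled here.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

enum toy_owner { TOY_OWNER_CPU, TOY_OWNER_DEVICE };

/* Hypothetical model of one DMA buffer. */
struct toy_dma_buf {
    bool coherent;        /* allocated like *_alloc_coherent: never needs sync */
    enum toy_owner owner; /* only meaningful for streaming mappings */
    unsigned char data[64];
};

/* Role of ib_dma_sync_single_for_cpu(): give the buffer to the CPU. */
static void toy_sync_for_cpu(struct toy_dma_buf *b) { b->owner = TOY_OWNER_CPU; }

/* Role of ib_dma_sync_single_for_device(): give the buffer to the device. */
static void toy_sync_for_device(struct toy_dma_buf *b) { b->owner = TOY_OWNER_DEVICE; }

static bool toy_owned_by(const struct toy_dma_buf *b, enum toy_owner who)
{
    return b->coherent || b->owner == who;
}

/* Device side, e.g. the HCA writing a received packet. */
static bool toy_device_write(struct toy_dma_buf *b, const void *src, size_t len)
{
    if (!toy_owned_by(b, TOY_OWNER_DEVICE) || len > sizeof b->data)
        return false;
    memcpy(b->data, src, len);
    return true;
}

/* CPU side: reading is only safe while the CPU owns the buffer. */
static bool toy_cpu_read(const struct toy_dma_buf *b, void *dst, size_t len)
{
    if (!toy_owned_by(b, TOY_OWNER_CPU) || len > sizeof b->data)
        return false;
    memcpy(dst, b->data, len);
    return true;
}
```

A typical receive path in this model is: device writes, `toy_sync_for_cpu`, CPU reads, `toy_sync_for_device`, and the buffer is ready to be reposted.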