Introduction

Between other projects (which I plan to post about in the future), classes, and teaching assistant duties, I haven't made much progress recently. In this post, I revisit the work I have done so far and review the current status of each project.

Approach

Title & Musical Features

What relationships exist between a tune's title and its musical elements? Simple examples include genre, artist, melody, and key. My research explores these relationships using deep learning methods.

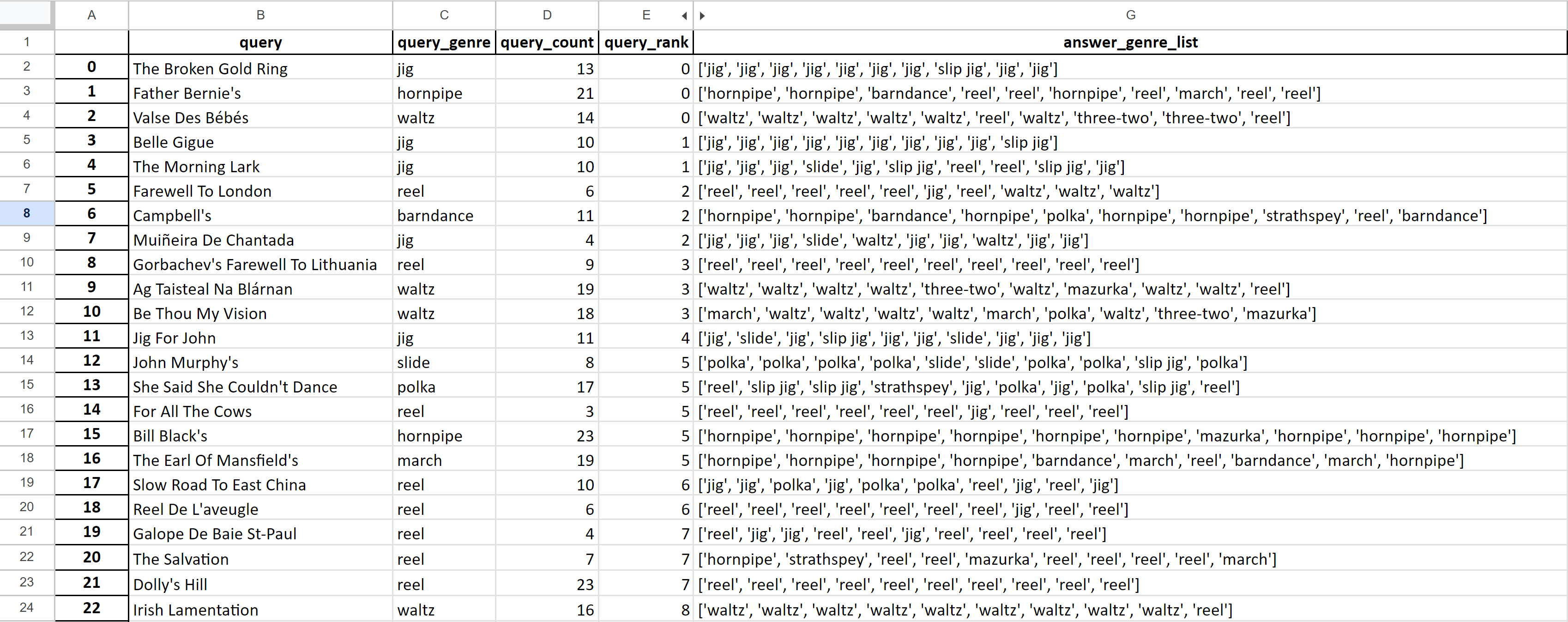

Dataset

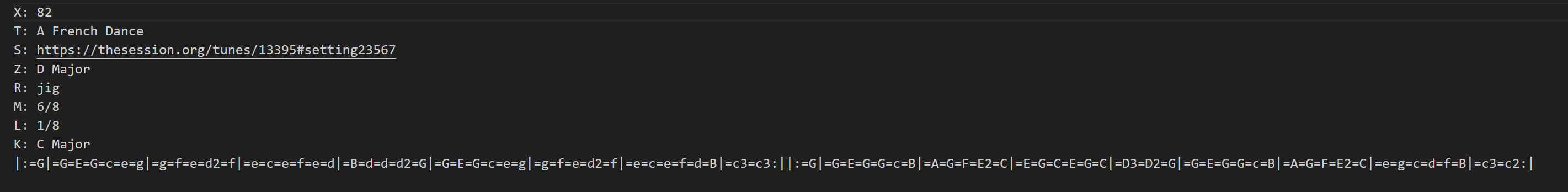

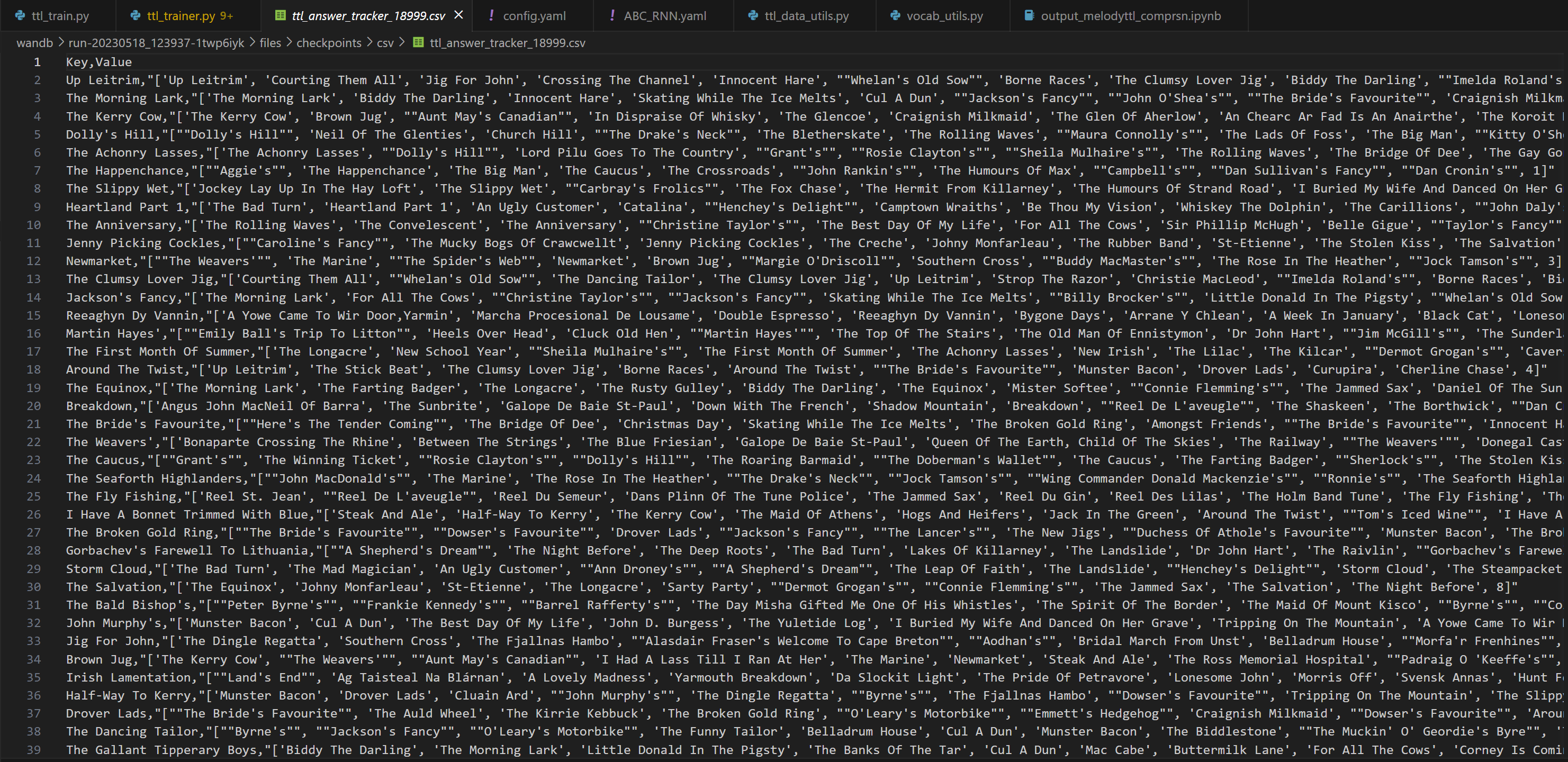

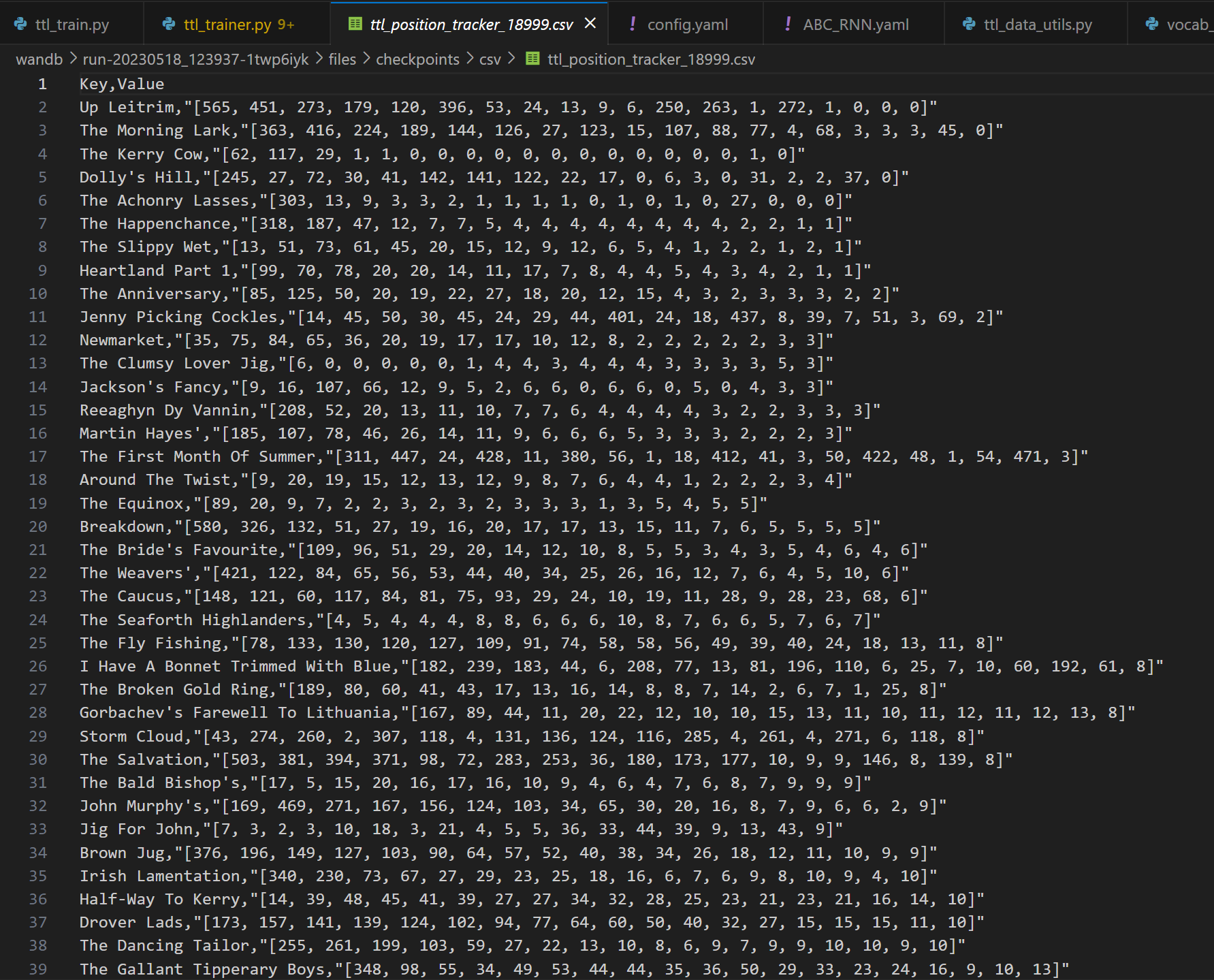

I used the dataset provided for the 2022 AI Music Generation Challenge, in the preprocessed form our lab created when we took part in the challenge. Our lab won first place in the melody generation task, which used symbolic tokens. However, the results in the title generation task, in which I participated directly, were weaker. The dataset contained little noise, but my limited background in Irish folk music made the results difficult to analyze.

- The 350 tunes published in F. O’Neill, “The Dance Music of Ireland: O’Neill’s 1001” (1907)

- Symbolic token data

- Limited background knowledge of Irish folk music

Methodology

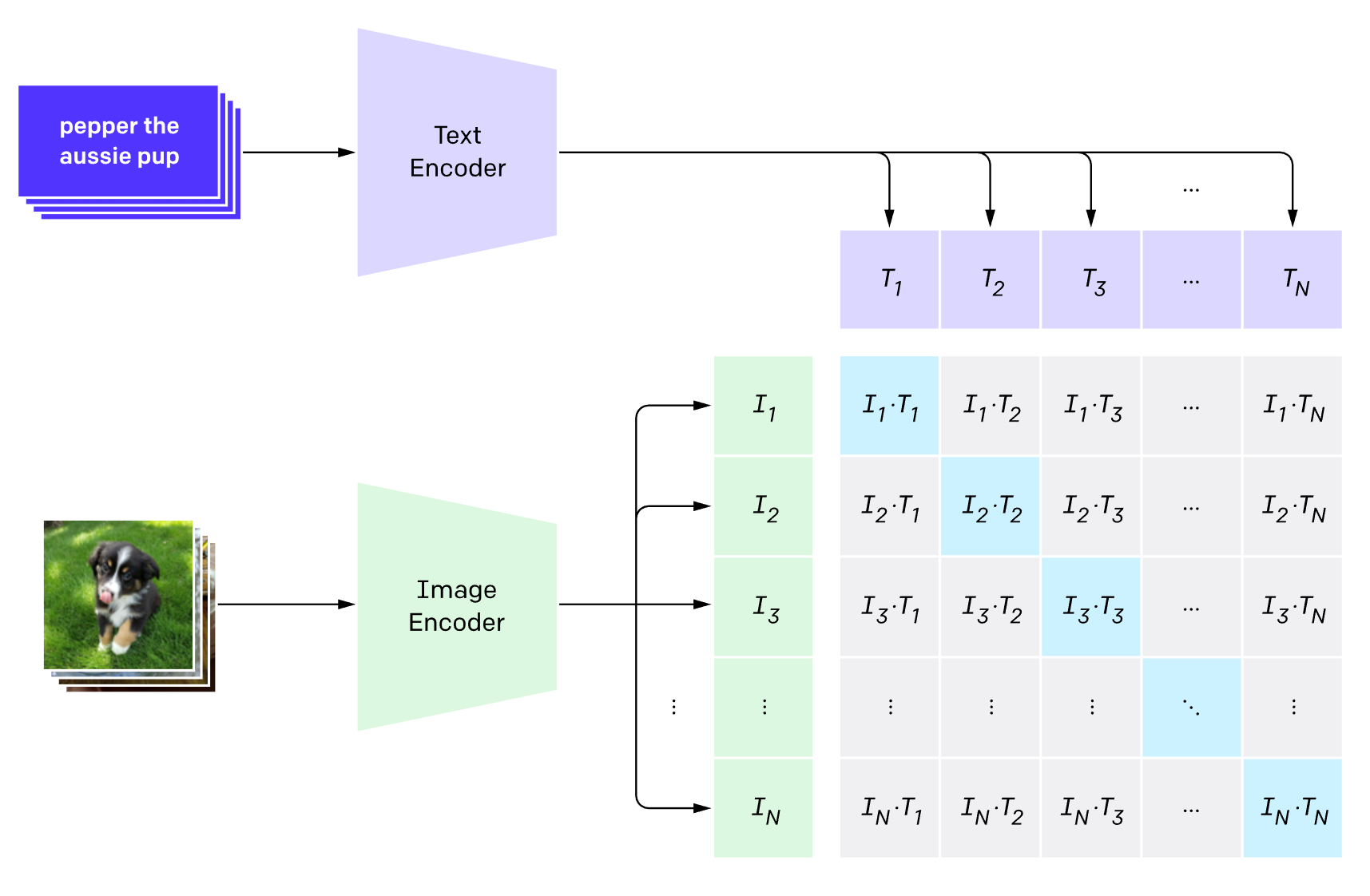

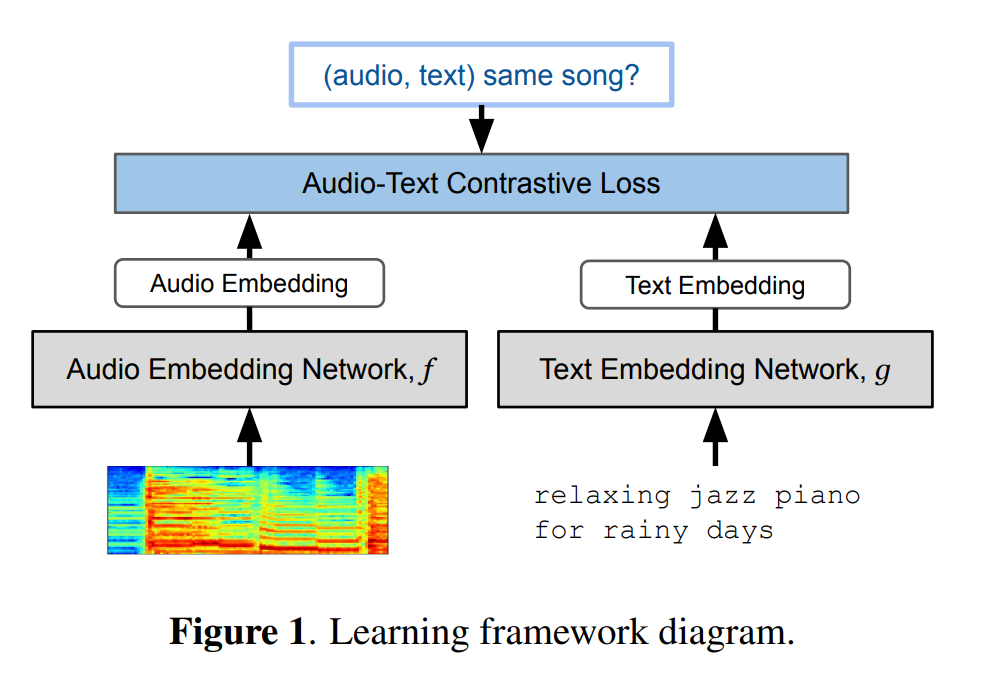

For training, I built a two-tower embedding model, with one tower for melodies and one for titles, and trained it with contrastive learning. Learning the relationship between melody and title from such a small dataset was difficult, so I experimented with several architectures, including a CNN, an RNN, and an RNN with attention. Across these experiments, the best results came from transferring the parameters of the melody generation model our lab had previously developed into the melody tower and training it together with the title embedding model.

from CLIP (Contrastive Language–Image Pre-training), https://openai.com/research/clip

from MuLan: A Joint Embedding of Music Audio and Natural Language, https://arxiv.org/abs/2208.12415
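The contrastive objective behind two-tower models such as CLIP and MuLan can be sketched as a symmetric InfoNCE loss over a batch of paired embeddings. This is a minimal pure-Python sketch, assuming each batch holds matched (melody, title) pairs at the same index and that both towers have already produced embedding vectors. The function names and the temperature value are illustrative assumptions, not the exact setup used in this project.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def cross_entropy_diag(logits: List[List[float]]) -> float:
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def symmetric_info_nce(melody_emb: List[Vector], title_emb: List[Vector],
                       temperature: float = 0.07) -> float:
    """CLIP-style contrastive loss for a batch of matched (melody, title) pairs.

    Pair i is the positive for row/column i; every other item in the batch
    serves as a negative.
    """
    mel = [l2_normalize(v) for v in melody_emb]
    tit = [l2_normalize(v) for v in title_emb]
    # logits[i][j] = cosine similarity of melody i and title j, scaled by 1/temperature
    logits = [[sum(a * b for a, b in zip(m, t)) / temperature for t in tit]
              for m in mel]
    transposed = [list(col) for col in zip(*logits)]
    # Average the melody->title and title->melody directions
    return 0.5 * (cross_entropy_diag(logits) + cross_entropy_diag(transposed))
```

Correctly aligned pairs, e.g. `symmetric_info_nce([[1, 0], [0, 1]], [[1, 0], [0, 1]])`, give a loss near zero, while swapping the titles drives it up sharply. Using the other items in the batch as negatives is what lets a small paired dataset still provide many training signals per step.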

Metrics

For evaluation, I used Mean Reciprocal Rank (MRR) and Discounted Cumulative Gain (DCG). To see how the model actually behaves, I also logged its predictions at specific iterations during training so I could track how they changed.
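Both metrics score a ranked list of candidates. A minimal sketch, assuming that for each query (for example, a melody) the model ranks candidate titles by similarity and exactly one title is correct; the helper names are hypothetical. MRR averages 1/rank of the correct item, and DCG sums relevance scores discounted by log2(rank + 1), using the linear-gain variant.

```python
import math
from typing import Optional, Sequence


def reciprocal_rank(ranked_ids: Sequence[str], relevant_id: str) -> float:
    """1 / (1-based rank of the relevant item), or 0.0 if it was not retrieved."""
    for rank, item in enumerate(ranked_ids, start=1):
        if item == relevant_id:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(rankings: Sequence[Sequence[str]],
                         relevant_ids: Sequence[str]) -> float:
    """Average reciprocal rank over all queries."""
    scores = [reciprocal_rank(r, rel) for r, rel in zip(rankings, relevant_ids)]
    return sum(scores) / len(scores)


def dcg(relevances: Sequence[float], k: Optional[int] = None) -> float:
    """Discounted cumulative gain: sum of rel_i / log2(i + 1) over 1-based positions i."""
    if k is not None:
        relevances = relevances[:k]  # only score the top-k positions
    return sum(rel / math.log2(i + 1) for i, rel in enumerate(relevances, start=1))
```

For example, if the correct title is ranked second for one melody and first for another, the MRR is (0.5 + 1.0) / 2 = 0.75. With a single relevant title, DCG reduces to 1 / log2(rank + 1), so it penalizes low ranks more gently than MRR does.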

Genre

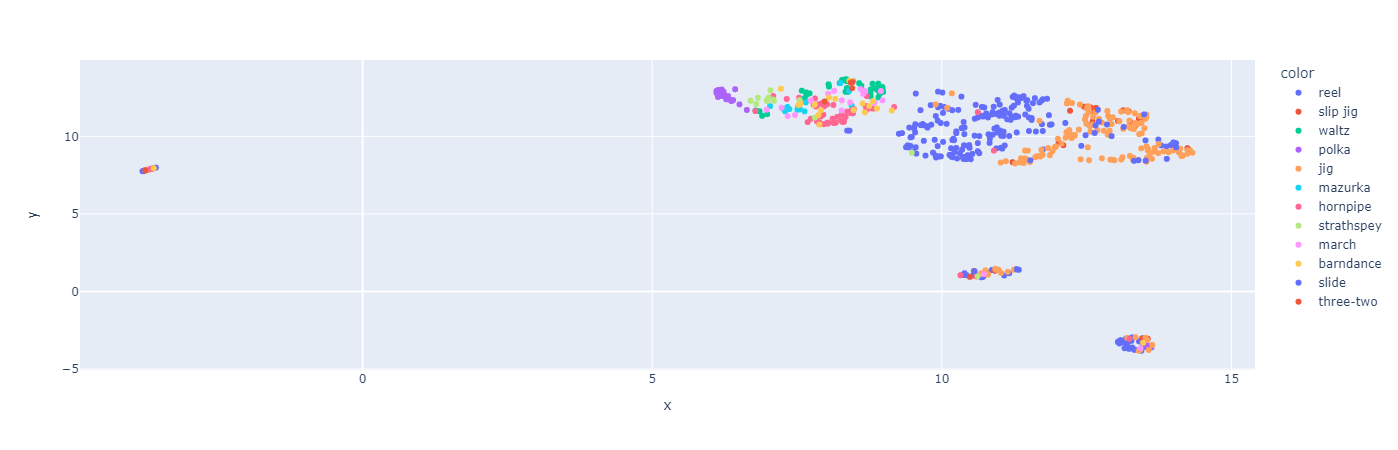

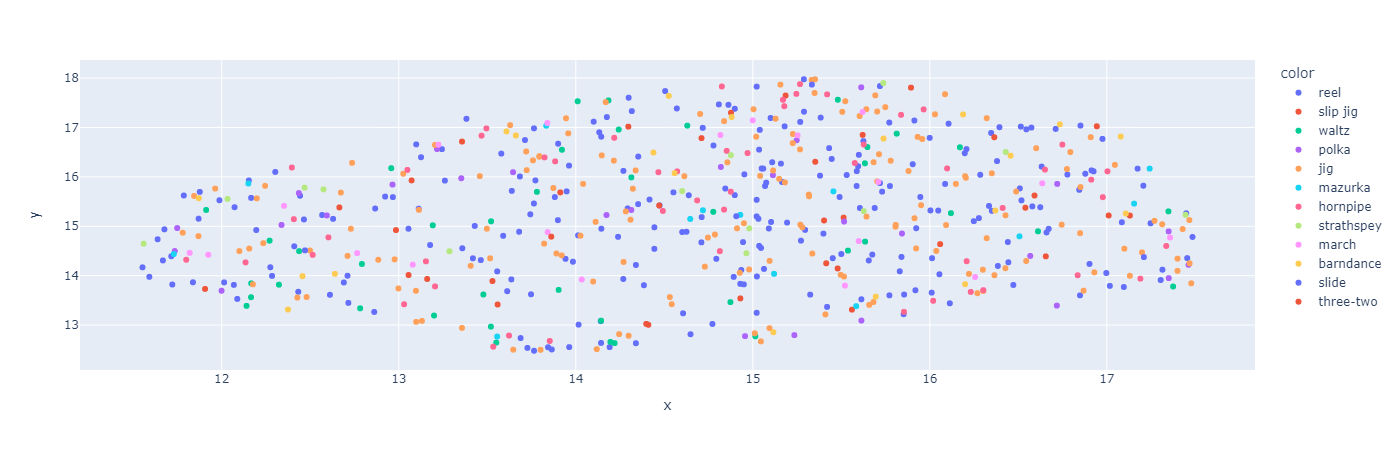

As expected, the model trained to embed musical information distinguishes genres well: when the embeddings are plotted, tunes form clear clusters by genre. I am now investigating which musical elements contribute most to distinguishing titles. To find out, I plan to retrain the model with specific pieces of previously included information removed and analyze the results.
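One way to set up the planned ablation is to strip a chosen category of tokens from every tune before retraining. The sketch below assumes, hypothetically, that tunes are stored as token lists in a folk-rnn-style ABC vocabulary where meter and key tokens carry `M:` and `K:` prefixes. The real token format of our lab's preprocessed dataset may differ, and `ablate_tokens` is an illustrative helper name.

```python
from typing import Iterable, List, Sequence


def ablate_tokens(tune: Sequence[str], drop_prefixes: Iterable[str]) -> List[str]:
    """Return a copy of `tune` without tokens that start with any of `drop_prefixes`.

    Hypothetical helper: the prefixes depend on how the dataset encodes each field
    (here assumed to be ABC-style header tokens such as "M:6/8" or "K:Cmaj").
    """
    prefixes = tuple(drop_prefixes)  # str.startswith accepts a tuple of prefixes
    return [tok for tok in tune if not tok.startswith(prefixes)]


def ablate_dataset(tunes: Iterable[Sequence[str]],
                   drop_prefixes: Iterable[str]) -> List[List[str]]:
    """Apply the same ablation to every tune in the dataset."""
    prefixes = tuple(drop_prefixes)
    return [ablate_tokens(t, prefixes) for t in tunes]
```

Training one model per ablated variant (for example, without key tokens, or without meter tokens) and comparing its MRR and DCG against the full-input model would give one rough indication of how much each field contributes to distinguishing titles.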
