Overview

Motivation

- Most modern applications are multithreaded

- Threads run within application

- Multiple tasks with the application can be implemented by separate threads

- Update display

- Fetch data

- Spell checking

- Answer a network request

- Process creation is heavy-weight while thread creation is light-weight

- Can simplify code, increase efficiency

- Kernels are generally multithreaded

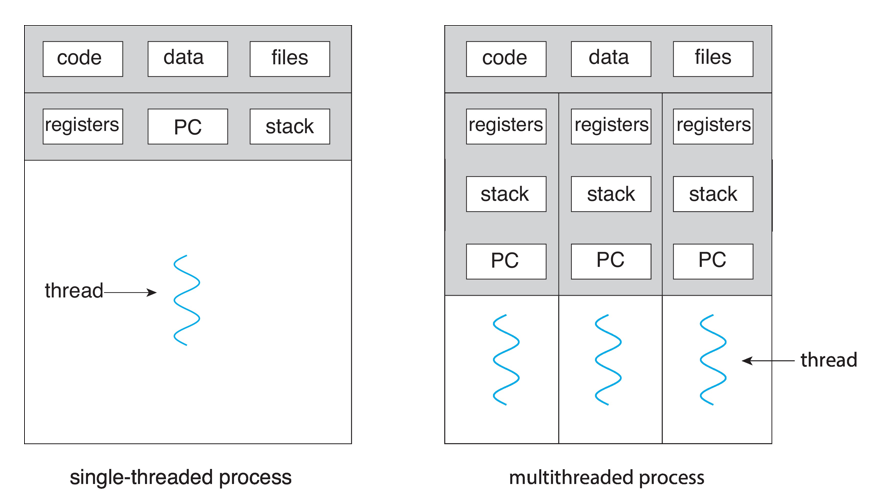

Single and Multithreaded Processes

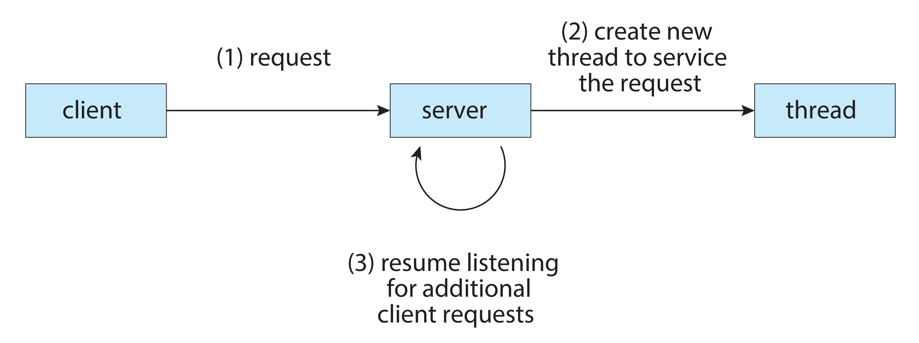

Multithreaded Server Architecture

Benefits

- Responsiveness – may allow continued execution if part of process is blocked, especially important for user interfaces

- Resource Sharing – threads share resources of process, easier than shared memory or message passing

- Economy – cheaper than process creation, thread switching lower overhead than context switching

- Scalability – process can take advantage of multicore architectures

Multicore Programming

- Multicore or multiprocessor systems putting pressure on programmers, challenges include:

- Dividing activities

- Balance

- Data splitting

- Data dependency

- Testing and debugging

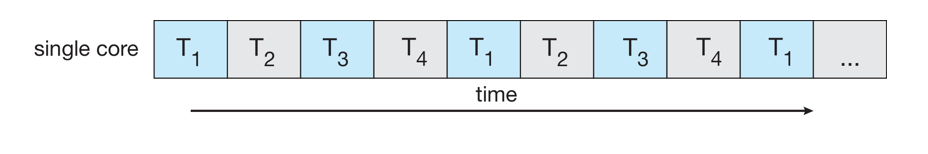

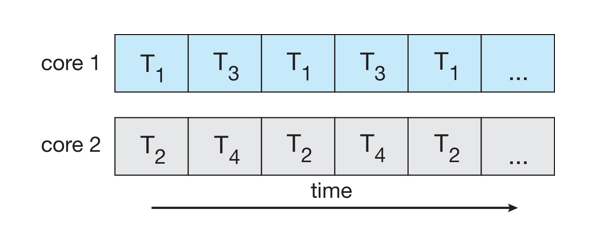

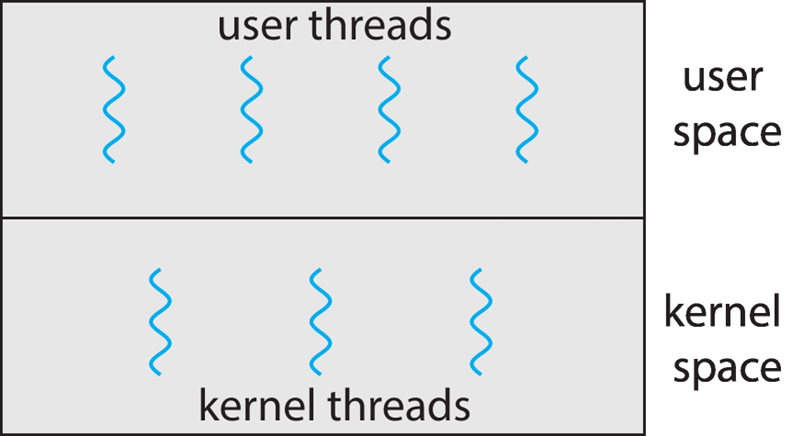

- Parallelism implies a system can perform more than one task simultaneously

- Concurrency supports more than one task making progress

Single processor / core, scheduler providing concurrency

Concurrency vs. Parallelism

-

Concurrent execution on single-core system:

-

Parallelism on a multi-core system:

Multicore Programming

Multicore Programming

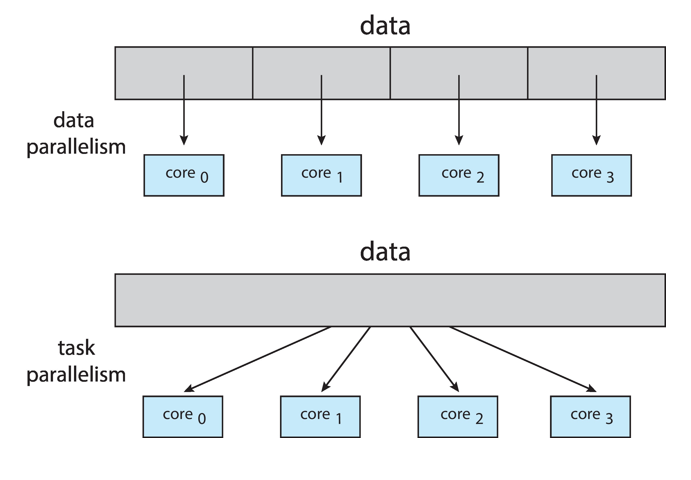

- Types of parallelism

- Data parallelism – distributes subsets of the same data across multiple cores, same operation on each

- Task parallelism – distributing threads across cores, each thread performing unique operation

Data and Task Parallelism

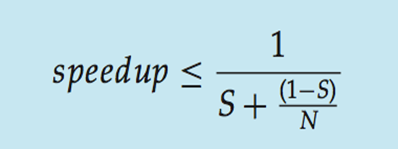

Amdahl's Law

- Identifies performance gains from adding additional cores to an application that has both serial and parallel components

- S is serial portion

- N processing cores

- That is, if application is 75% parallel / 25% serial, moving from 1 to 2 cores results in speedup of 1.6 times

- As N approaches infinity, speedup approaches 1 / S

- Serial portion of an application has disproportionate effect on performance gained by adding additional cores

- But does the law take into account contemporary multicore systems?

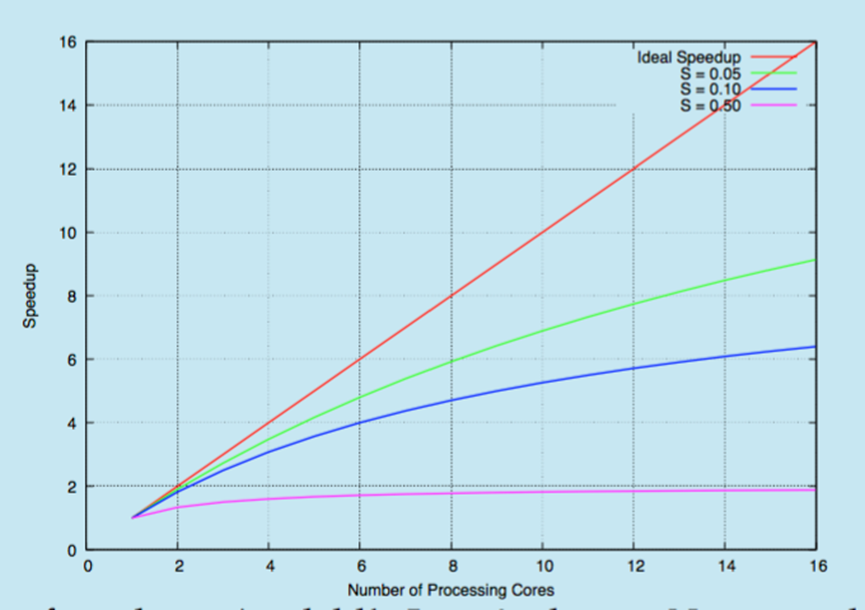

User Threads and Kernel Threads

- User threads - management done by user-level threads library

- Three primary thread libraries:

- POSIX Pthreads

- Windows threads

- Java threads

- Kernel threads - Supported by the Kernel

- Examples – virtually all general purpose operating systems, including:

- Windows

- Linux

- Mac OS X

- iOS

- Android

User and Kernel Threads

Multithreading Models

Multithreading Models

- Many-to-One

- One-to-One

- Many-to-Many

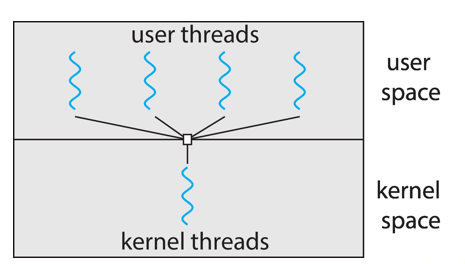

Many-to-One

- Many user-level threads mapped to single kernel thread

- One thread blocking causes all to block

- Multiple threads may not run in parallel on muticore system because only one may be in kernel at a time

- Few systems currently use this model

- Examples:

- Solaris Green Threads

- GNU Portable Threads

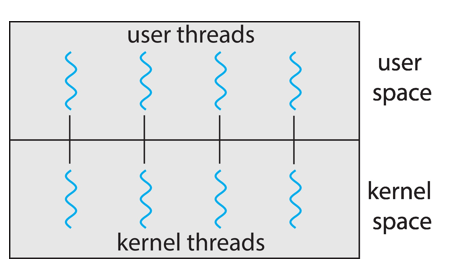

One-to-One

- Each user-level thread maps to kernel thread

- Creating a user-level thread creates a kernel thread

- More concurrency than many-to-one

- Number of threads per process sometimes restricted due to overhead

- Examples

- Windows

- Linux

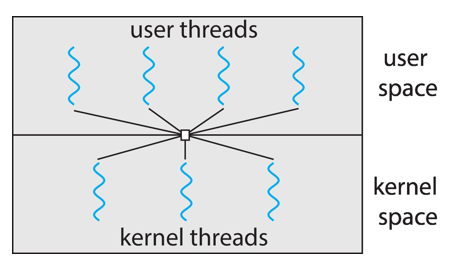

Many-to-Many Model

- Allows many user level threads to be mapped to many kernel threads

- Allows the operating system to create a sufficient number of kernel threads

- Windows with the ThreadFiber package

- Otherwise not very common

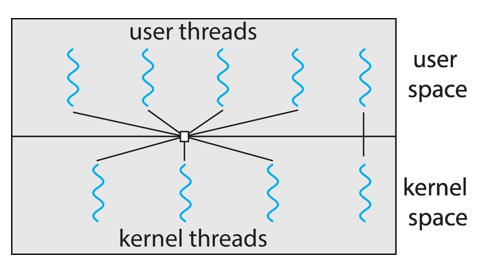

Two-level Model

- Similar to M:M, except that it allows a user thread to be bound to kernel thread

Thread Libraries

Thread Libraries

- Thread library provides programmer with API for creating and managing threads

- Two primary ways of implementing

- Library entirely in user space

- Kernel-level library supported by the OS

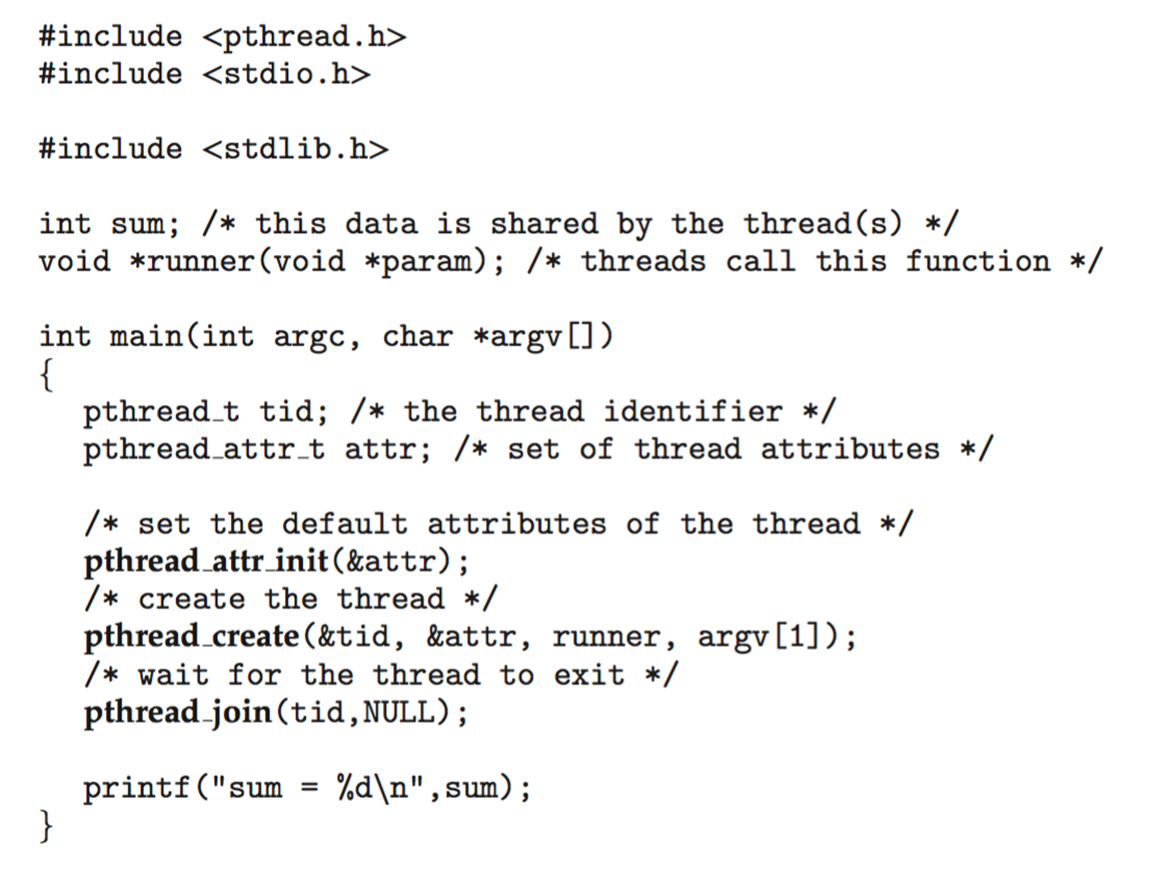

Pthreads

- May be provided either as user-level or kernel-level

- A POSIX standard (IEEE 1003.1c) API for thread creation and synchronization

- Specification, not implementation

- API specifies behavior of the thread library, implementation is up to development of the library

- Common in UNIX operating systems (Linux & Mac OS X)

Pthreads Example

Pthreads Code for Joining 10 Threads

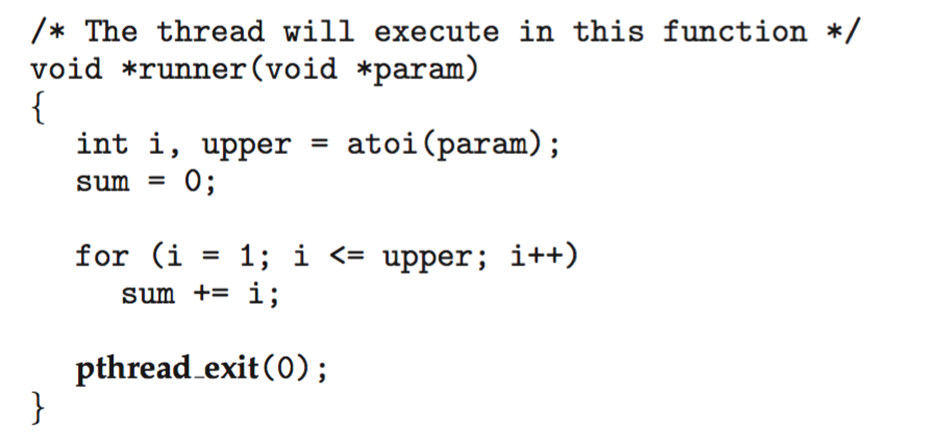

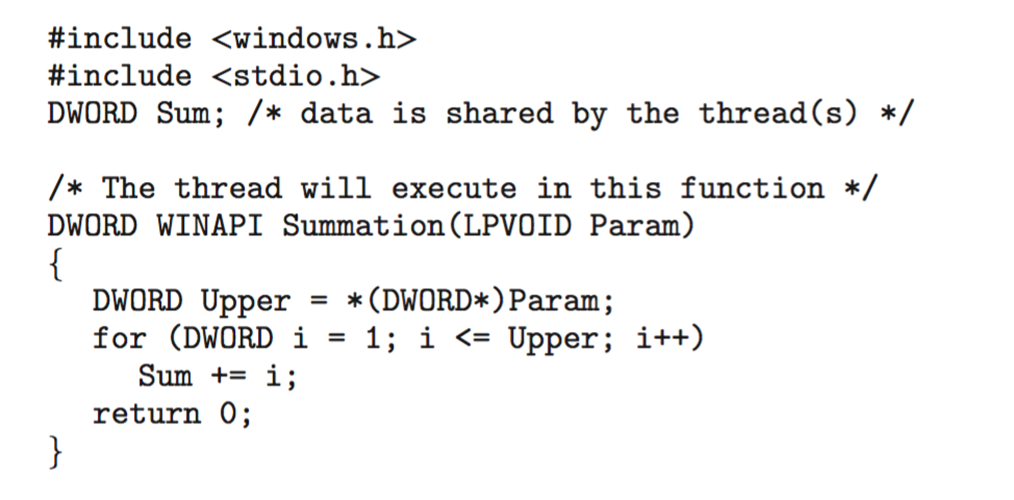

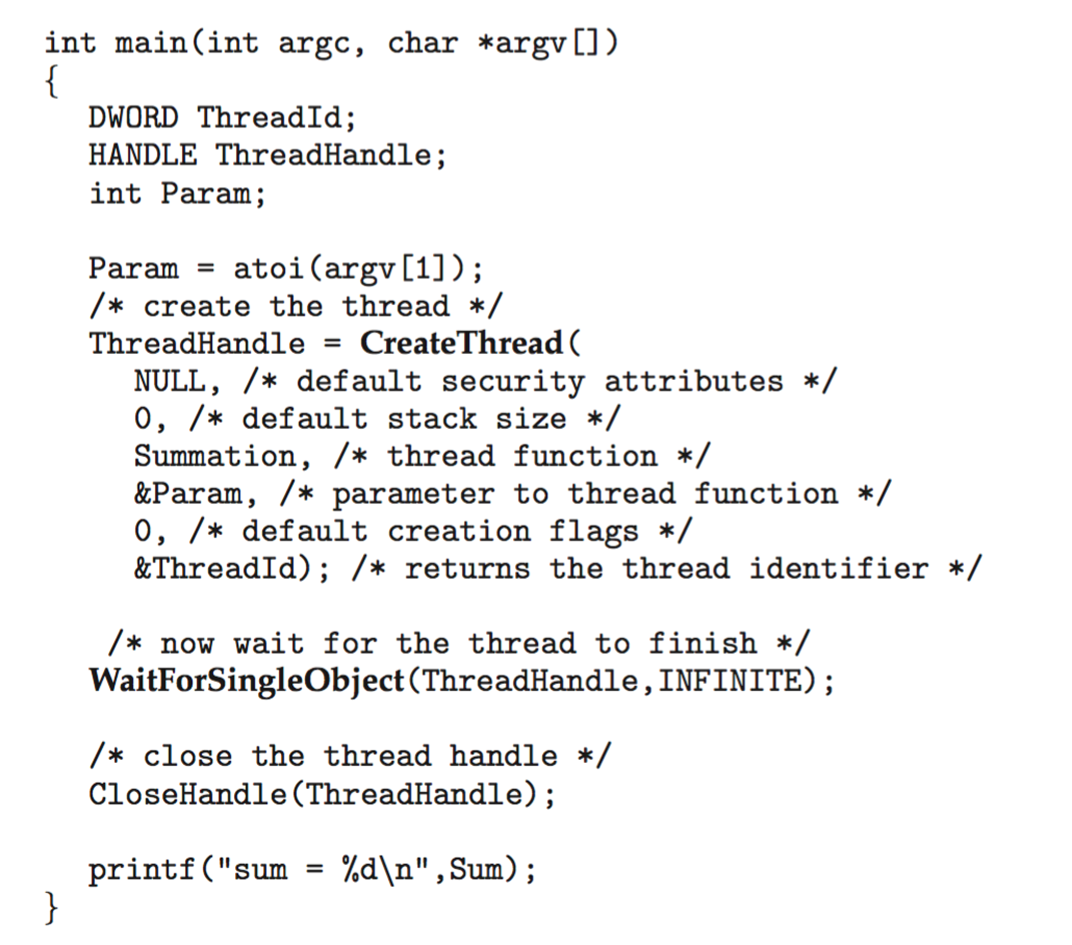

Windows Multithreaded C Program

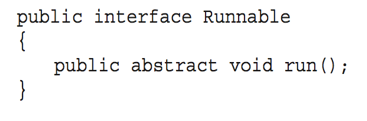

Java Threads

- Java threads are managed by the JVM

- Typically implemented using the threads model provided by underlying OS

- Java threads may be created by:

- Extending Thread class

- Implementing the Runnable interface

- prStandardactice is to implement Runnable interface

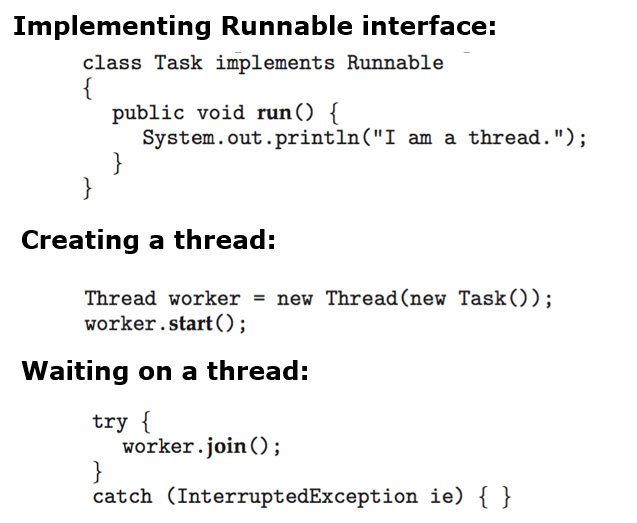

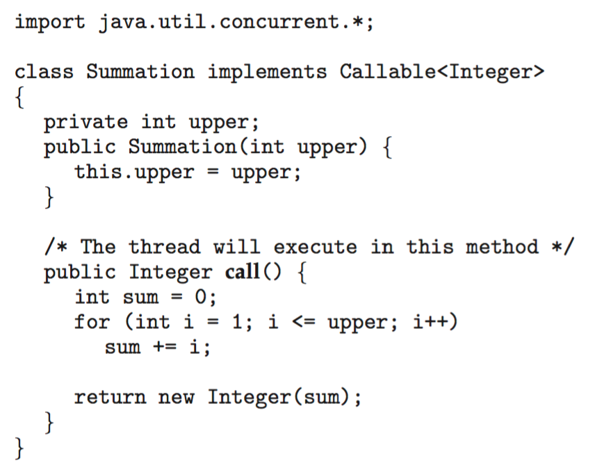

Java Executor Framework

Implicit Threading

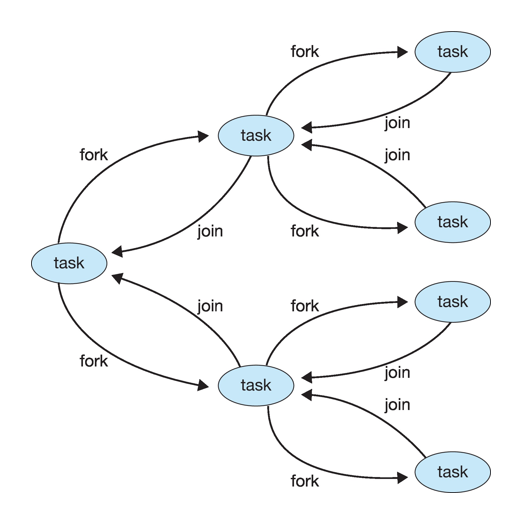

Implicit Threading

- Growing in popularity as numbers of threads increase, program correctness more difficult with explicit threads

- Creation and management of threads done by compilers and run-time libraries rather than programmers

- Five methods explored

- Thread Pools

- Fork-Join

- OpenMP

- Grand Central Dispatch

- Intel Threading Building Blocks

Thread Pools

- Create a number of threads in a pool where they await work

- Advantages:

- Usually slightly faster to service a request with an existing thread than create a new thread

- Allows the number of threads in the application(s) to be bound to the size of the pool

- Separating task to be performed from mechanics of creating task allows different strategies for running task

- i.e.Tasks could be scheduled to run periodically

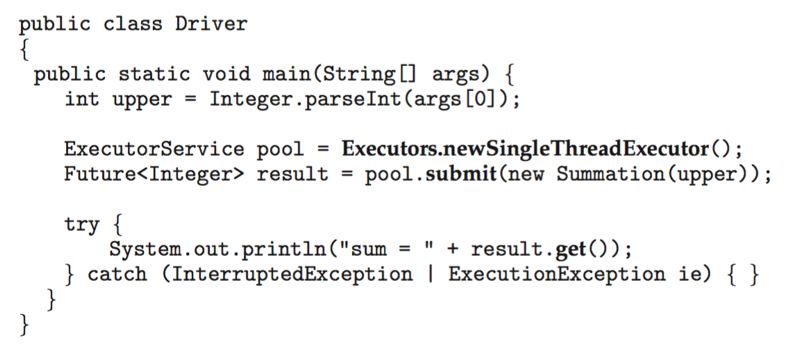

- Windows API supports thread pools:

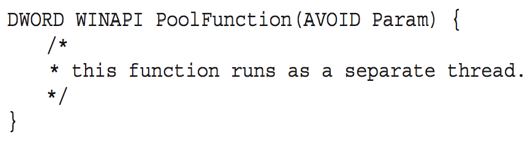

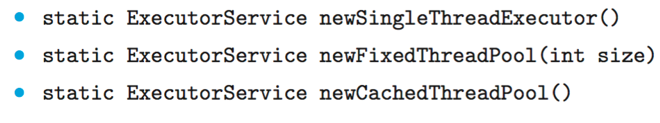

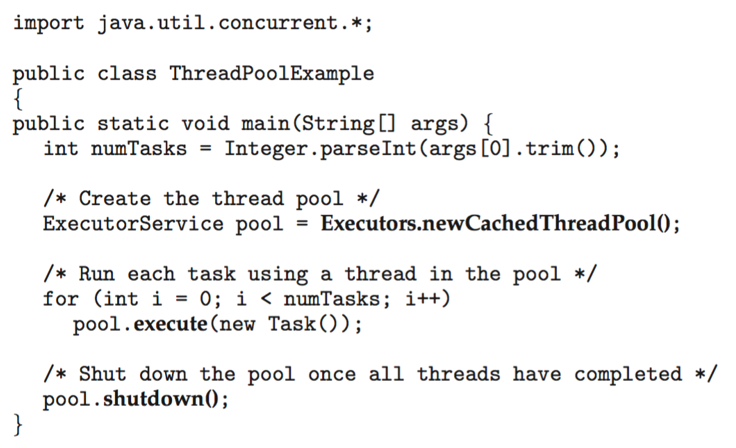

Java Thread Pools

- Three factory methods for creating thread pools in Executors class:

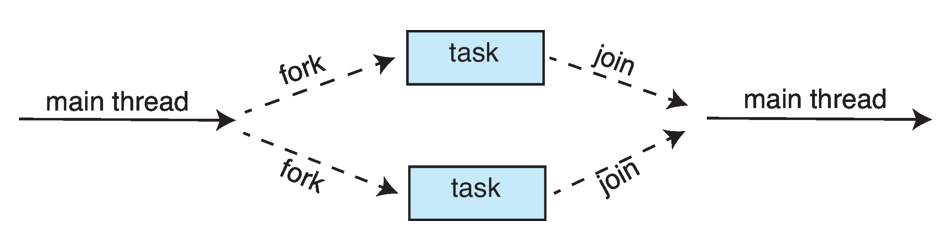

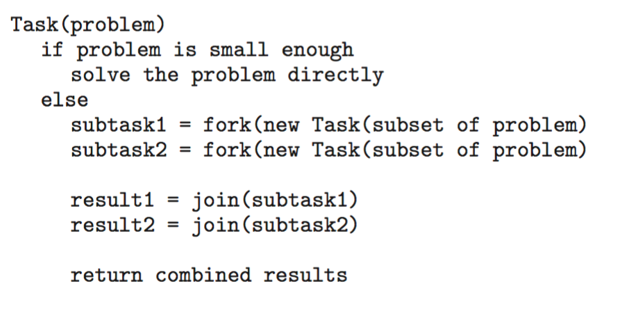

Fork-Join Parallelism

-

Multiple threads (tasks) are forked, and then joined.

-

General algorithm for fork-join strategy:

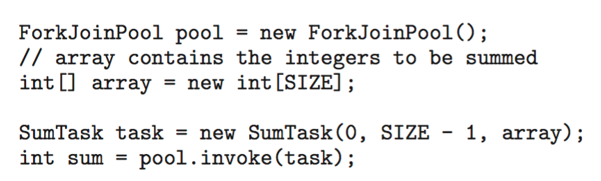

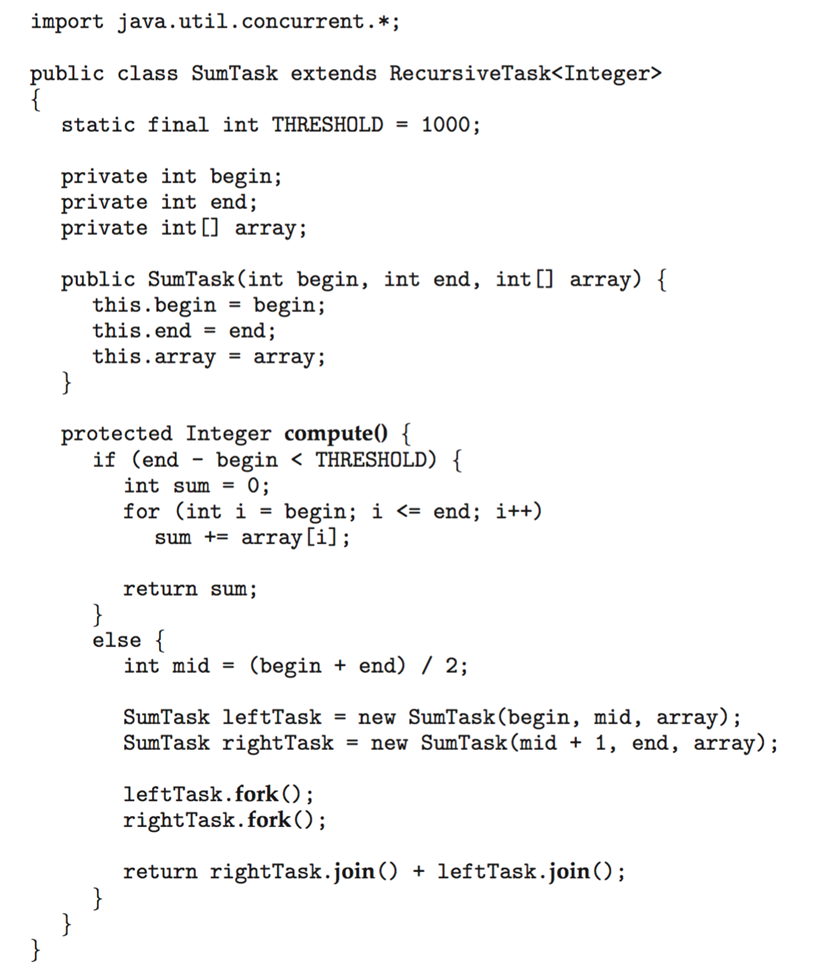

Fork-Join Parallelism in Java

- The ForkJoinTask is an abstract base class

- RecursiveTask and RecursiveAction classes extend ForkJoinTask

- RecursiveTask returns a result (via the return value from the compute() method)

- RecursiveAction does not return a result

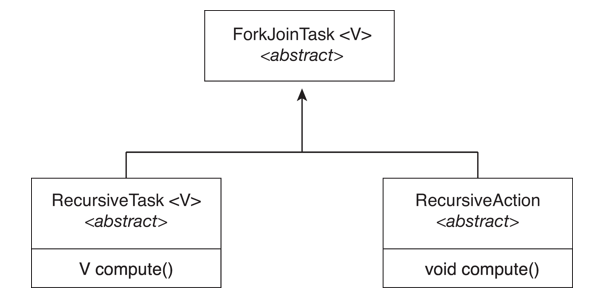

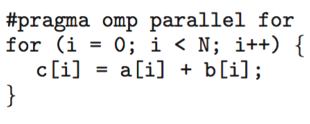

OpenMP

-

Set of compiler directives and an API for C, C++, FORTRAN

-

Provides support for parallel programming in shared-memory environments

-

Identifies parallel regions – blocks of code that can run in parallel

- #pragma omp parallel

-

Create as many threads as there are cores

-

Run the for loop in parallel

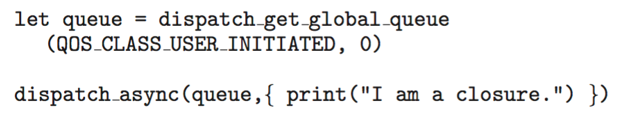

Grand Central Dispatch

-

Apple technology for macOS and iOS operating systems

-

Extensions to C, C++ and Objective-C languages, API, and run-time library

-

Allows identification of parallel sections

-

Manages most of the details of threading

-

Block is in “^{ }” :

- ˆ{ printf("I am a block"); }

-

Blocks placed in dispatch queue

- Assigned to available thread in thread pool when removed from queue

-

Two types of dispatch queues:

- serial – blocks removed in FIFO order, queue is per process, called main queue

- Programmers can create additional serial queues within program

- concurrent – removed in FIFO order but several may be removed at a time

- Four system wide queues divided by quality of service:

- QOS_CLASS_USER_INTERACTIVE

- QOS_CLASS_USER_INITIATED

- QOS_CLASS_USER_UTILITY

- QOS_CLASS_USER_BACKGROUND

- serial – blocks removed in FIFO order, queue is per process, called main queue

-

For the Swift language a task is defined as a closure – similar to a block, minus the caret

-

Closures are submitted to the queue using the dispatch_async() function:

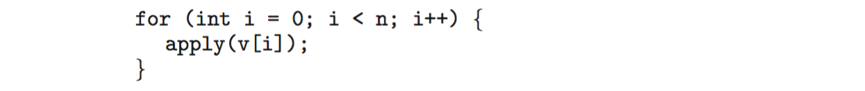

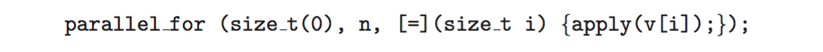

Intel Threading Building Blocks (TBB)

-

Template library for designing parallel C++ programs

-

A serial version of a simple for loop

-

The same for loop written using TBB with parallel_for statement![]

Threading Issues

Threading Issues

- Semantics of fork() and exec() system calls

- Signal handling

- Synchronous and asynchronous

- Thread cancellation of target thread

- Asynchronous or deferred

- Thread-local storage

- Scheduler Activations

Semantics of fork() and exec()

- Does fork()duplicate only the calling thread or all threads?

- Some UNIXes have two versions of fork

- exec() usually works as normal – replace the running process including all threads

Signal Handling

-

Signals are used in UNIX systems to notify a process that a particular event has occurred.

-

A signal handler is used to process signals

- Signal is generated by particular event

- Signal is delivered to a process

- Signal is handled by one of two signal handlers:

- default

- user-defined

-

Every signal has default handler that kernel runs when handling signal

- User-defined signal handler can override default

- For single-threaded, signal delivered to process

-

Where should a signal be delivered for multi-threaded?

- Deliver the signal to the thread to which the signal applies

- Deliver the signal to every thread in the process

- Deliver the signal to certain threads in the process

- Assign a specific thread to receive all signals for the process

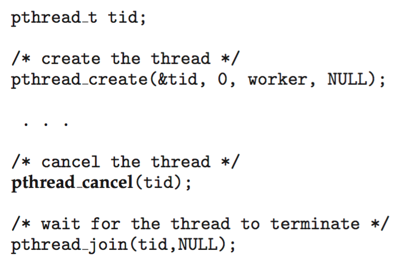

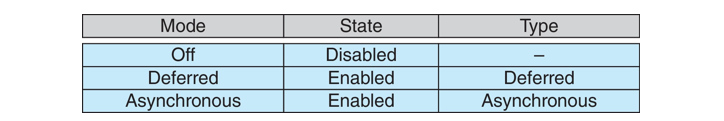

Thread Cancellation

- Terminating a thread before it has finished

- Thread to be canceled is target thread

- Two general approaches:

- Asynchronous cancellation terminates the target thread immediately

- Deferred cancellation allows the target thread to periodically check if it should be cancelled

- Pthread code to create and cancel a thread:

- Invoking thread cancellation requests cancellation, but actual cancellation depends on thread state

- If thread has cancellation disabled, cancellation remains pending until thread enables it

- Default type is deferred

- Cancellation only occurs when thread reaches cancellation point

- I.e. pthread_testcancel()

- Then cleanup handler is invoked

- Cancellation only occurs when thread reaches cancellation point

- On Linux systems, thread cancellation is handled through signals

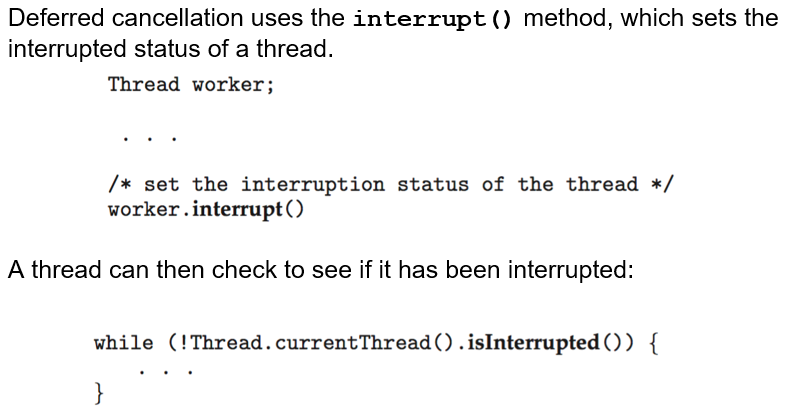

Thread Cancellation in Java

Thread-Local Storage

- Thread-local storage (TLS) allows each thread to have its own copy of data

- Useful when you do not have control over the thread creation process (i.e., when using a thread pool)

- Different from local variables

- Local variables visible only during single function invocation

- TLS visible across function invocations

- Similar to static data

- TLS is unique to each thread

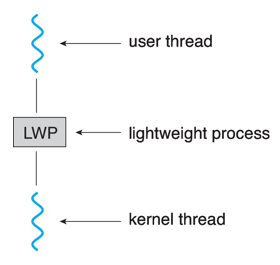

Scheduler Activations

- Both M:M and Two-level models require communication to maintain the appropriate number of kernel threads allocated to the application

- Typically use an intermediate data structure between user and kernel threads – lightweight process (LWP)

- Appears to be a virtual processor on which process can schedule user thread to run

- Each LWP attached to kernel thread

- How many LWPs to create?

- Scheduler activations provide upcalls - a communication mechanism from the kernel to the upcall handler in the thread library

- This communication allows an application to maintain the correct number kernel threads

Operating System Examples

Operating System Examples

- Windows Threads

- Linux Threads

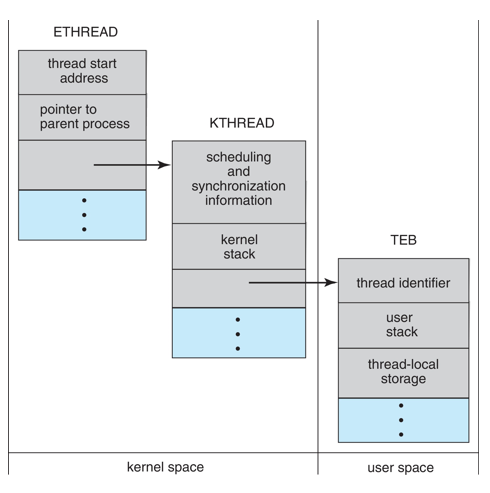

Windows Threads

-

Windows API – primary API for Windows applications

-

Implements the one-to-one mapping, kernel-level

-

Each thread contains

- A thread id

- Register set representing state of processor

- Separate user and kernel stacks for when thread runs in user mode or kernel mode

- Private data storage area used by run-time libraries and dynamic link libraries (DLLs)

-

The register set, stacks, and private storage area are known as the context of the thread

-

The primary data structures of a thread include:

- ETHREAD (executive thread block) – includes pointer to process to which thread belongs and to KTHREAD, in kernel space

- KTHREAD (kernel thread block) – scheduling and synchronization info, kernel-mode stack, pointer to TEB, in kernel space

- TEB (thread environment block) – thread id, user-mode stack, thread-local storage, in user space

Windows Threads Data Structures

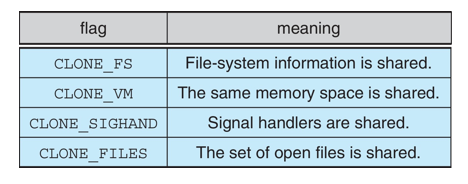

Linux Threads

- Linux refers to them as tasks rather than threads

- Thread creation is done through clone() system call

- clone() allows a child task to share the address space of the parent task (process)

- Flags control behavior

- Flags control behavior

- struct task_struct points to process data structures (shared or unique)