푸🤗항🤗항

저번 게시글에서 작업한 크롤링을 수행하는 파이썬 코드에 몇 가지 크롤링 코드를 좀 더 추가하여

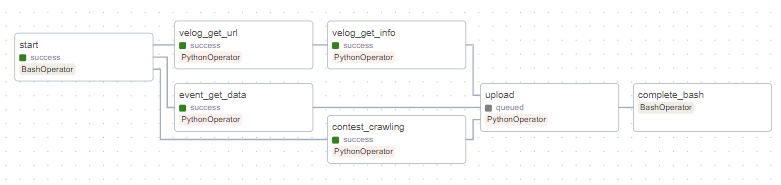

dag을 구성했다. dag 구성은 다음과 같았다(이미 S3 업로드까지 완성된 dag파일이다..). (이 링크에 방문하면 dag 파일을 확인할 수 있다.)

- start: start

- velog_get_url: get the list of URLs collected from velog

- velog_get_info: get the details from each collected velog URL

- event_get_data: get developer event information

- contest_crawling: get developer contest information

- upload: upload the collected data to S3

- complete_bash: end

DAG configuration

# datetime is needed by upload_to_s3() and by start_date below
from datetime import datetime, timedelta
import os
import sys

from airflow import DAG
from airflow.operators.bash import BashOperator
from airflow.operators.python import PythonOperator
from airflow.utils.trigger_rule import TriggerRule
from airflow.providers.amazon.aws.hooks.s3 import S3Hook  # added

# Make the crawler modules in the working directory importable
sys.path.append(os.getcwd())
from crawling_event import *
from crawling_velog import *
from crawling_contest import *


def upload_to_s3():
    # Date stamp shared by the local file names and the S3 keys
    date = datetime.now().strftime("%Y%m%d")
    hook = S3Hook('de-project')  # connection ID

    # Local CSV files written by the crawlers
    event_filename = f'/home/ubuntu/airflow/airflow/data/event_{date}.csv'
    velog_filename = f'/home/ubuntu/airflow/airflow/data/velog_{date}.csv'
    contest_filename = f'/home/ubuntu/airflow/airflow/data/contest_{date}.csv'

    # Destination keys inside the bucket
    event_key = f'data/event_{date}.csv'
    velog_key = f'data/velog_{date}.csv'
    contest_key = f'data/contest_{date}.csv'
    bucket_name = 'de-project-airflow'

    # replace=True overwrites an existing object with the same key
    hook.load_file(filename=event_filename, key=event_key, bucket_name=bucket_name, replace=True)
    hook.load_file(filename=velog_filename, key=velog_key, bucket_name=bucket_name, replace=True)
    hook.load_file(filename=contest_filename, key=contest_key, bucket_name=bucket_name, replace=True)


default_args = {
    'owner': 'owner-name',
    'depends_on_past': False,
    'email': ['youremail@gmail.com'],
    'email_on_failure': False,
    'email_on_retry': False,
    'retries': 1,
    'retry_delay': timedelta(minutes=30),
}

dag_args = dict(
    dag_id="crawling-upload",
    default_args=default_args,
    description='description',
    schedule_interval=timedelta(days=1),
    start_date=datetime(2023, 11, 29),
    tags=['de-project'],
)

with DAG(**dag_args) as dag:
    start = BashOperator(
        task_id='start',
        bash_command='echo "start"',
    )

    upload = PythonOperator(
        task_id='upload',
        python_callable=upload_to_s3,
    )

    # -------- velog -------- #
    velog_get_url_task = PythonOperator(
        task_id='velog_get_url',
        python_callable=velog_get_url,
    )

    velog_get_info_task = PythonOperator(
        task_id='velog_get_info',
        python_callable=velog_get_info,
    )

    # -------- event -------- #
    event_get_data_task = PythonOperator(
        task_id="event_get_data",
        python_callable=event_get_data,
    )

    # -------- contest -------- #
    contest_task = PythonOperator(
        task_id='contest_crawling',
        python_callable=contest_crawling,
    )

    complete = BashOperator(
        task_id='complete_bash',
        bash_command='echo "complete"',
    )

    # All three crawling branches feed into upload
    start >> event_get_data_task >> upload >> complete
    start >> velog_get_url_task >> velog_get_info_task >> upload >> complete
    start >> contest_task >> upload >> complete

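The code above does the following.

- Import the required libraries

- Upload the extracted data, saved as CSV files, to S3

- Define the DAG

- Declare the task dependencies

The other steps were covered in the previous post, so this post only explains the S3-related parts.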

Connecting to S3

Creating the S3 bucket

Open the AWS Console and go to S3 → Buckets.

- 버킷 생성

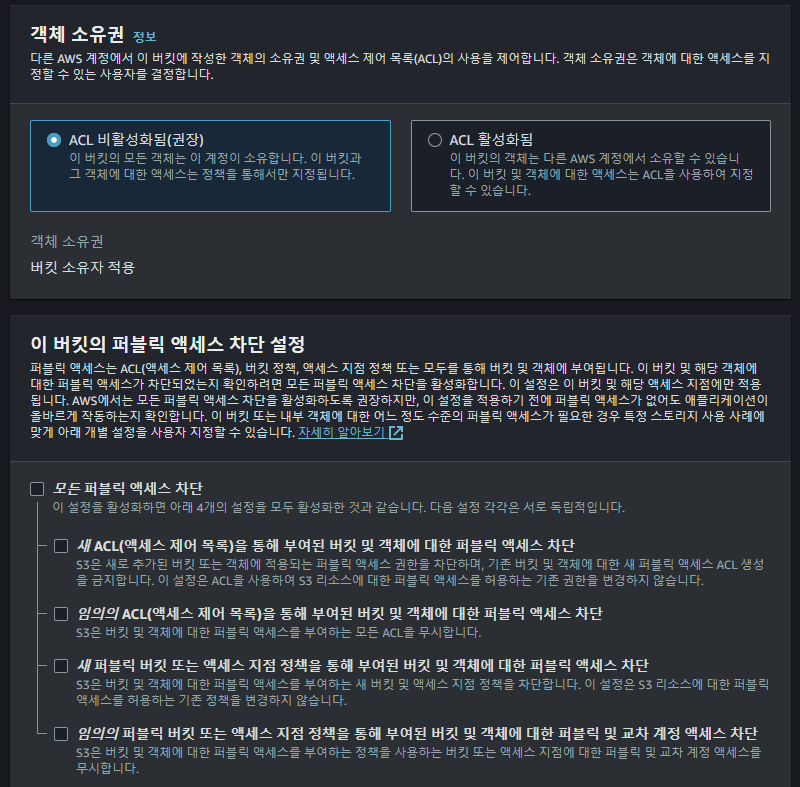

버킷 이름지정객체 소유권: ACL 비활성화됨(권장) 선택퍼블릭 액세스 차단 설정: 모든 퍼블릭 액세스 차단 해제- 나머지 설정 기본값

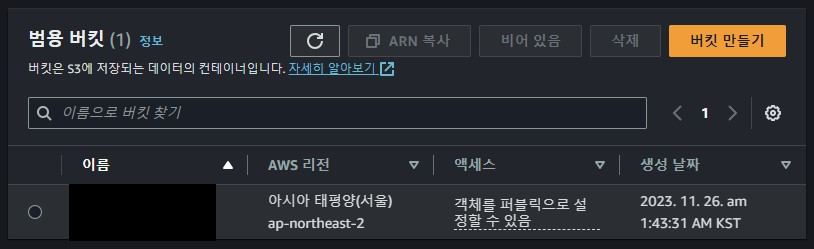

Once the bucket shows up as below, you are done.

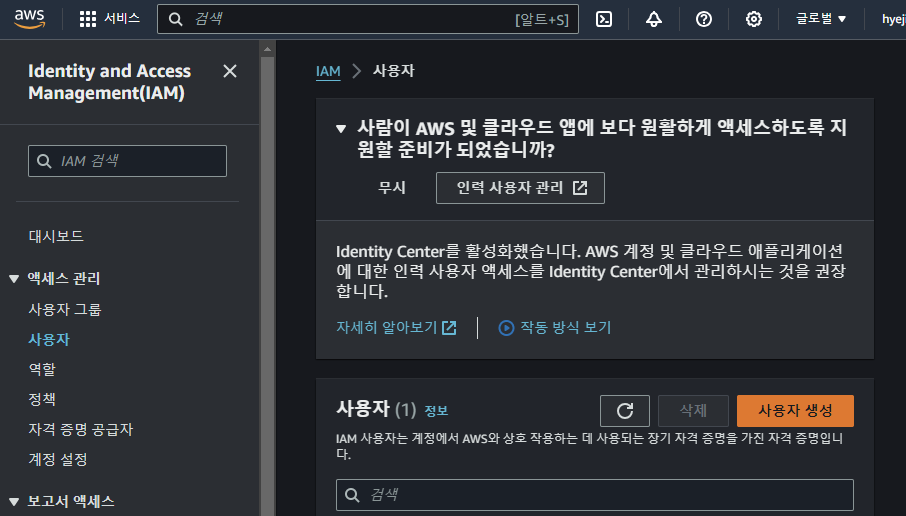

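Creating the IAM user

Open the AWS Console and go to IAM.

- Select Users in the left-hand list

- Click Create user

- Enter a user name

- Leave the remaining settings at their defaults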

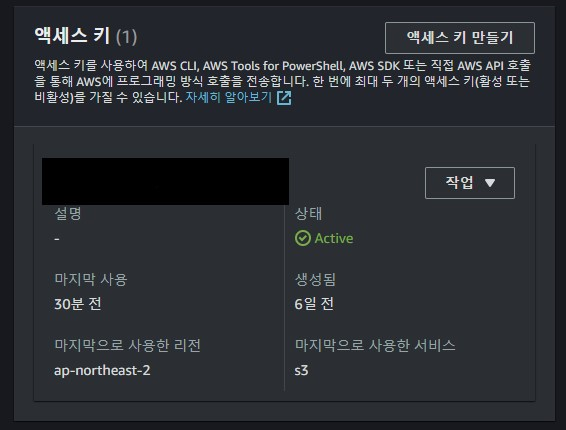

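After creating the user, open the user's details and go to Security credentials. Further down, in the Access keys section, select Create access key.

(I already have a key because I created one earlier; a newly created user will not have any.)

- Because we will run this on an AWS compute service, select the third option, Application running on an AWS compute service.

- After you set the tag value, the access key is created.

You get an access key and a secret access key. The secret access key cannot be viewed again or recovered later, so store it somewhere safe.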

Connecting S3 to Airflow

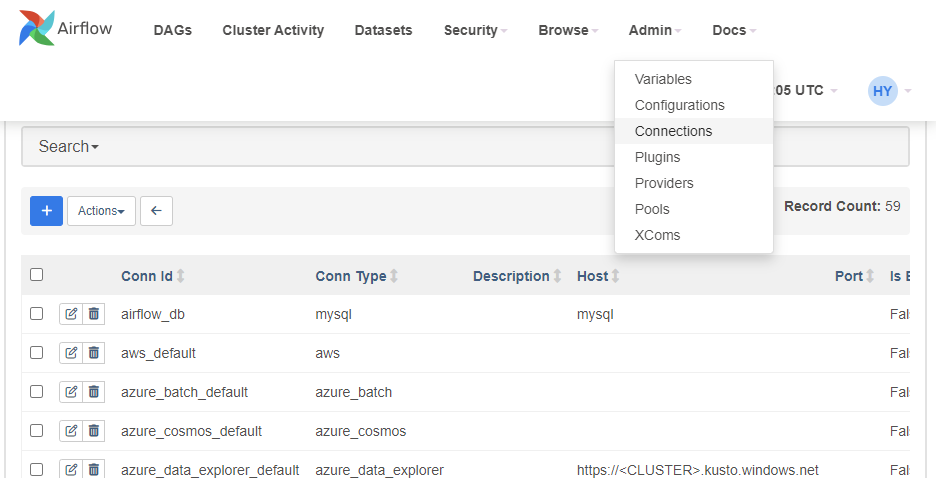

In the Airflow web UI, go to Admin → Connections.

Click the + button at the top left.

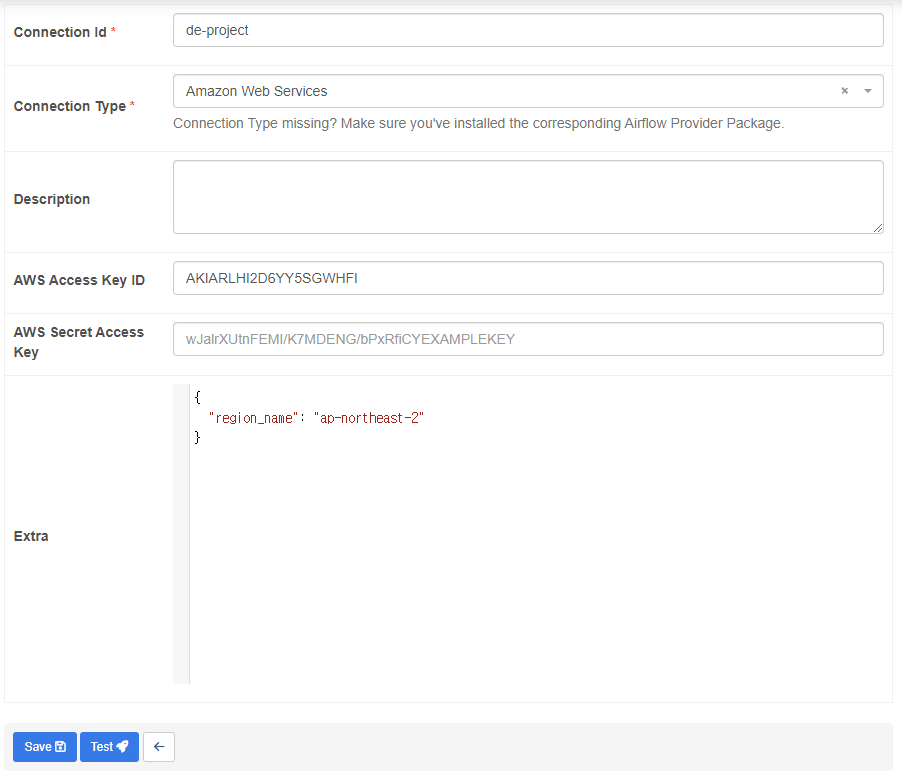

- Connection ID: enter an ID

- Connection Type: Amazon Web Services

- AWS Access Key ID: the access key you saved when creating the IAM user

- AWS Secret Access Key: the secret access key you saved when creating the IAM user

- Extra: set the default region as shown below.

{
  "region_name": "ap-northeast-2"
}

If Amazon Web Services does not appear under Connection Type, run pip install apache-airflow-providers-amazon. Then stop the webserver and start it again; only after the restart does Amazon Web Services show up in the Connection Type list.
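The Extra field has to be valid JSON, and small slips such as single quotes are easy to make when typing it into the form. As a rough sketch, the value can be checked locally before pasting it in. The helper name `parse_extra` is hypothetical and not part of Airflow.

```python
import json


def parse_extra(extra_text):
    """Parse the Extra field and return the region, raising ValueError if it is unusable."""
    try:
        extra = json.loads(extra_text)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Extra is not valid JSON: {exc}") from exc
    if not isinstance(extra, dict) or "region_name" not in extra:
        raise ValueError('Extra should be a JSON object with a "region_name" key')
    return extra["region_name"]


# The value used in this post
region = parse_extra('{"region_name": "ap-northeast-2"}')
print(region)
```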

Test Connection

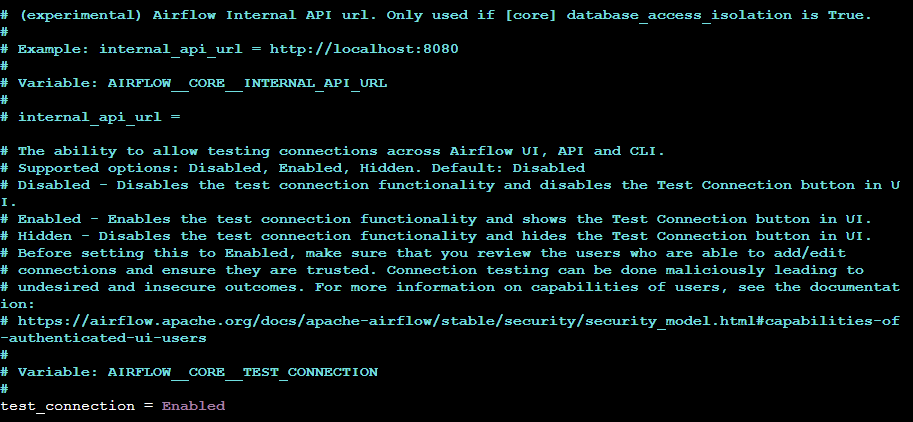

The Test button may be disabled. In that case, test_connection in the airflow.cfg file is probably set to Disabled; change it to Enabled.

The Test button should then be active. Click it; if a green banner appears at the top, the connection works.
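To check the setting without opening the file by hand, a small script along these lines could read it. This is only a sketch: it assumes the option sits in the `[core]` section (as in recent Airflow 2.x releases), the config text is a made-up excerpt, and `is_test_connection_enabled` is a hypothetical helper name.

```python
import configparser

# Hypothetical excerpt of airflow.cfg; the real file has many more options.
SAMPLE_CFG = """
[core]
test_connection = Disabled
"""


def is_test_connection_enabled(cfg_text):
    """Return True if [core] test_connection is set to Enabled."""
    # interpolation=None so that '%' characters elsewhere in the file are left alone
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    value = parser.get("core", "test_connection", fallback="Disabled")
    return value.strip().lower() == "enabled"


before = is_test_connection_enabled(SAMPLE_CFG)
after = is_test_connection_enabled(SAMPLE_CFG.replace("Disabled", "Enabled"))
print(before, after)
```

For a real installation, the text of your own airflow.cfg would be read from disk and passed in instead of `SAMPLE_CFG`.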

Modifying the DAG file

First, have each of the three crawler files (crawling_contest, crawling_event, crawling_velog) save the data it collects as a CSV file.
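The crawler code itself is not shown in this post, so the following is only a minimal sketch of the CSV step using the standard library. It assumes each crawler ends up with a list of dicts; `save_rows_to_csv` and the sample rows are hypothetical. The file name follows the `<name>_<YYYYMMDD>.csv` pattern that the upload step expects.

```python
import csv
import tempfile
from datetime import datetime
from pathlib import Path


def save_rows_to_csv(rows, data_dir, name, date=None):
    """Write a list of dicts to <data_dir>/<name>_<YYYYMMDD>.csv and return the path."""
    date = date or datetime.now().strftime("%Y%m%d")
    path = Path(data_dir) / f"{name}_{date}.csv"
    path.parent.mkdir(parents=True, exist_ok=True)
    # Use the keys of the first row as the header; assumes all rows share the same keys.
    fieldnames = list(rows[0].keys()) if rows else []
    with path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
    return path


# Hypothetical sample rows; the real crawlers return their own fields.
sample_rows = [
    {"title": "Sample event", "url": "https://example.com/event/1"},
    {"title": "Another event", "url": "https://example.com/event/2"},
]
csv_path = save_rows_to_csv(sample_rows, tempfile.mkdtemp(), "event", date="20231129")
print(csv_path)
```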

Importing the S3 library

from airflow.providers.amazon.aws.hooks.s3 import S3Hook

Adding a task that uploads files to S3

Add a function for the task that uploads the CSV files containing the collected data to S3.

def upload_to_s3():
    # Date stamp shared by the local file names and the S3 keys
    date = datetime.now().strftime("%Y%m%d")
    hook = S3Hook('de-project')  # connection ID

    # Local CSV files written by the crawlers
    event_filename = f'/home/ubuntu/airflow/airflow/data/event_{date}.csv'
    velog_filename = f'/home/ubuntu/airflow/airflow/data/velog_{date}.csv'
    contest_filename = f'/home/ubuntu/airflow/airflow/data/contest_{date}.csv'

    # Destination keys inside the bucket
    event_key = f'data/event_{date}.csv'
    velog_key = f'data/velog_{date}.csv'
    contest_key = f'data/contest_{date}.csv'
    bucket_name = 'de-project-airflow'

    # replace=True overwrites an existing object with the same key
    hook.load_file(filename=event_filename, key=event_key, bucket_name=bucket_name, replace=True)
    hook.load_file(filename=velog_filename, key=velog_key, bucket_name=bucket_name, replace=True)
    hook.load_file(filename=contest_filename, key=contest_key, bucket_name=bucket_name, replace=True)

- Pass the connection ID as the argument to S3Hook.

- Set the arguments of hook.load_file as follows.

  - filename: local path of the CSV file to upload to S3

  - key: path (object key) under which the file is stored in S3

  - bucket_name: name of the S3 bucket
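The three filename/key pairs above differ only in the dataset name, so they can also be generated in a loop. The following is a sketch of that idea rather than the code used in this post; `build_upload_plan` and the module-level constants are hypothetical names, while the paths and prefix are the ones from the function above.

```python
from datetime import datetime

DATA_DIR = "/home/ubuntu/airflow/airflow/data"  # local directory used in this post
S3_PREFIX = "data"                               # key prefix used in this post
DATASETS = ("event", "velog", "contest")


def build_upload_plan(date, data_dir=DATA_DIR, prefix=S3_PREFIX, datasets=DATASETS):
    """Return the filename/key pair for each dataset for the given YYYYMMDD date."""
    return [
        {"filename": f"{data_dir}/{name}_{date}.csv", "key": f"{prefix}/{name}_{date}.csv"}
        for name in datasets
    ]


plan = build_upload_plan(datetime(2023, 11, 29).strftime("%Y%m%d"))
for item in plan:
    print(item["filename"], "->", item["key"])
```

Inside `upload_to_s3`, each item could then be passed on as `hook.load_file(bucket_name=bucket_name, replace=True, **item)`. Adding a fourth crawler would only require a new entry in `DATASETS`.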

Modifying the DAG object

with DAG(**dag_args) as dag:
    ...
    upload = PythonOperator(
        task_id='upload',
        python_callable=upload_to_s3,
    )
    ...

Modifying the task dependencies

start >> event_get_data_task >> upload >> complete
start >> velog_get_url_task >> velog_get_info_task >> upload >> complete
start >> contest_task >> upload >> complete

I updated the task dependencies so that each crawler first collects its data and saves a CSV file, and only then are the saved CSV files uploaded to S3.
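To see what these three lines add up to, the same dependencies can be modeled outside Airflow with the standard library's `graphlib`. This is only an illustration of the resulting graph, using the task IDs from the DAG; it does not run any Airflow code.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it (its upstream tasks).
upstream = {
    "start": set(),
    "event_get_data": {"start"},
    "velog_get_url": {"start"},
    "velog_get_info": {"velog_get_url"},
    "contest_crawling": {"start"},
    "upload": {"event_get_data", "velog_get_info", "contest_crawling"},
    "complete_bash": {"upload"},
}

# One valid execution order; the three crawling branches may appear in any relative order.
order = list(TopologicalSorter(upstream).static_order())
print(order)
```

Whatever order the branches run in, upload always comes after all three of them. With Airflow's default trigger rule (all_success), upload runs only once every upstream crawling task has succeeded.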

Next time, I will connect Airflow to Slack and build a Slack bot that automatically sends the crawled data to a specific workspace.