Keras API : https://keras.io/api/

import tensorflow as tf

from tensorflow import keras

import numpy as np

import matplotlib.pyplot as plt

1. Models API

The Sequential class

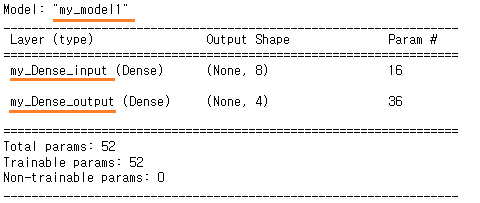

model = keras.Sequential([

#keras.layers.InputLayer(input_shape=(1,)),

keras.layers.Dense(units=8, input_shape=(1,), name = 'my_Dense_input'),

# input_shape is given only for the first layer.

keras.layers.Dense(units=4, name = 'my_Dense_output')

], name = 'my_model1')

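model.summary()

(Input layer: the model's entry point. It declares the shape of the incoming data (from NumPy arrays, pandas DataFrames, etc.) that TensorFlow feeds through the model.)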
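To make the shapes concrete, here is a NumPy sketch of what the two Dense layers above compute on a batch. It assumes Keras' documented Dense behaviour `output = activation(dot(input, kernel) + bias)` with the default linear activation. The normally distributed weights and the batch of 3 samples are illustrative only; Keras itself initializes the kernel with glorot_uniform and the bias with zeros.

```python
import numpy as np

rng = np.random.default_rng(0)

def dense(x, kernel, bias):
    # Dense layer with no activation: x @ kernel + bias
    return x @ kernel + bias

# Kernel shapes mirror my_Dense_input (1 -> 8) and my_Dense_output (8 -> 4)
k1, b1 = rng.normal(size=(1, 8)), np.zeros(8)
k2, b2 = rng.normal(size=(8, 4)), np.zeros(4)

x = np.array([[1.0], [2.0], [3.0]])  # batch of 3 samples, 1 feature each
h = dense(x, k1, b1)                 # shape (3, 8)
y = dense(h, k2, b2)                 # shape (3, 4)
print(h.shape, y.shape)              # (3, 8) (3, 4)
```

In `model.summary()` the batch dimension appears as `None`, because the model accepts any batch size.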

How to access the layers inside a model

: the layers are stored inside the model as a list (model.layers).

model.layers[0].name

model.get_layer(index=0).name

model.get_layer(name='my_Dense_input')

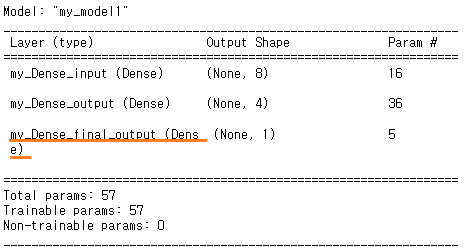

add method

: adds a layer at the end of the model.

Adds a layer instance on top of the layer stack.

model.add(keras.layers.Dense(units=1, name = 'my_Dense_final_output'))

model.summary()

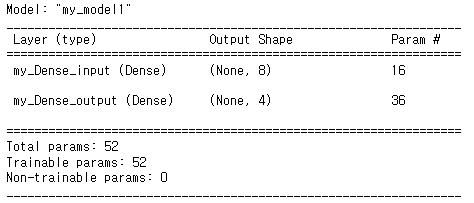

pop method

: removes the layer that was added last.

Removes the last layer in the model.

model.pop()

model.summary()
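The "Param #" column of `model.summary()` follows from the kernel and bias shapes. A Dense layer with n inputs and m units has n*m + m parameters. The sketch below tracks the total as the stack grows with `add` and shrinks with `pop`. The plain Python list is only a stand-in for the model's layer list, not how Keras stores it.

```python
def dense_params(n_in, units):
    # kernel of shape (n_in, units) plus a bias of shape (units,)
    return n_in * units + units

def total_params(input_dim, units_stack):
    # walk the stack, feeding each layer's width into the next layer
    total, n_in = 0, input_dim
    for units in units_stack:
        total += dense_params(n_in, units)
        n_in = units
    return total

stack = [8, 4]                 # my_Dense_input, my_Dense_output
print(total_params(1, stack))  # 52  (16 + 36)
stack.append(1)                # like model.add(Dense(units=1))
print(total_params(1, stack))  # 57  (+ 4*1 + 1)
stack.pop()                    # like model.pop()
print(total_params(1, stack))  # 52
```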

< Building a Sequential model, method 2: using add >

model2 = keras.Sequential()

layer1 = keras.layers.Dense(10, input_shape=(1,))

layer2 = keras.layers.Dense(5)

model2.add(layer1)

model2.add(layer2)

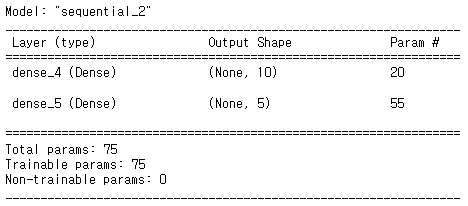

model2.summary()

< Building a model, method 3: the functional API >

Call each layer like a function: pass in an input, take the output, and pass that output in as the next layer's input.

1.

input_layer = keras.layers.Input(shape=(1,))

hidden_layer1 = keras.layers.Dense(1, name = 'hidden1')(input_layer)

hidden_layer2 = keras.layers.Dense(1, name = 'hidden2')(input_layer)

output_layer = keras.layers.Dense(1, name = 'output')(hidden_layer2)

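model = keras.Model(inputs=[input_layer], outputs=[hidden_layer1, output_layer])

2. Create the layer objects first, then call them step by step

hidden_layer1 = keras.layers.Dense(1, name='hidden1')

hidden_layer2 = keras.layers.Dense(1, name='hidden2')

output_layer = keras.layers.Dense(1, name='output')

# chained: input -> hidden1 -> hidden2 -> output

step1 = hidden_layer1(input_layer)

step2 = hidden_layer2(step1)

step3 = output_layer(step2)

model = keras.Model(inputs=[input_layer], outputs=[step3])

# ------------------------------------------------------ #

# branched, same topology as example 1 (calling the same layer objects again shares their weights)

step1 = hidden_layer1(input_layer)

step2 = hidden_layer2(input_layer)

step3 = output_layer(step2)

model = keras.Model(inputs=[input_layer], outputs=[step1, step3])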


2. Layers API

Layer weight initializers

https://keras.io/api/layers/initializers/

: Initializers define the way to set the initial random weights of Keras layers.

(1) Ones class : every weight starts at 1.

(2) Zeros class : every weight starts at 0.

(3) RandomNormal class : draws the initial weights from a normal distribution.

(4) RandomUniform class : draws the initial weights uniformly at random from a fixed range.

(5) TruncatedNormal class

(6) GlorotNormal class

(7) GlorotUniform class

(8) HeNormal class

(9) HeUniform class

(10) Identity class

(11) Orthogonal class

(12) Constant class

(13) VarianceScaling class
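The Glorot and He initializers differ only in how they scale the random values. The scale depends on the layer's fan-in (number of inputs) and fan-out (number of units). Below is a NumPy sketch of the two uniform variants, using the formulas given in the Keras initializer docs:

- GlorotUniform samples from [-limit, limit] with limit = sqrt(6 / (fan_in + fan_out)).
- HeUniform uses limit = sqrt(6 / fan_in).

The seed and the 8 -> 4 layer size are arbitrary.

```python
import numpy as np

def glorot_uniform(fan_in, fan_out, rng):
    # limit shrinks as the layer gets wider on either side
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out)), limit

def he_uniform(fan_in, fan_out, rng):
    # depends on fan_in only
    limit = np.sqrt(6.0 / fan_in)
    return rng.uniform(-limit, limit, size=(fan_in, fan_out)), limit

rng = np.random.default_rng(42)
w_g, lim_g = glorot_uniform(8, 4, rng)  # kernel for an 8 -> 4 Dense layer
w_h, lim_h = he_uniform(8, 4, rng)
print(round(lim_g, 4), round(lim_h, 4))  # 0.7071 0.866
```

GlorotUniform is the default `kernel_initializer` of `keras.layers.Dense`. The He variants are commonly paired with ReLU activations.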

Ones class : every weight starts at 1.

model = keras.Sequential([

keras.layers.Dense(units=1, input_shape=(1,), name = 'my_Dense_input',

kernel_initializer = keras.initializers.Ones()),

# Layer weight initializers

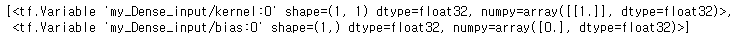

], name = 'my_model1')layer1 = model.get_layer(index=0)

layer1.weights # Returns the list of all layer variables/weights.

# Returns the current weights of the layer, as NumPy arrays.

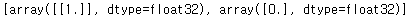

layer1.get_weights() # a 2-D kernel ndarray and a 1-D bias ndarray

Setting the weights to values of your choice

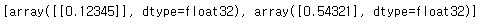

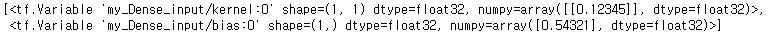

layer1.set_weights([np.array([[0.12345]]), np.array([0.54321])])

layer1.get_weights()
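A Dense(1) layer computes y = x * kernel + bias. Keras' default bias initializer is zeros, so the Ones-initialized layer starts out as the identity function. After the `set_weights` call above it computes 0.12345 * x + 0.54321. A NumPy check of both states:

```python
import numpy as np

def dense_1x1(x, weights):
    kernel, bias = weights  # same order as layer1.get_weights()
    return x @ kernel + bias

x = np.array([[1.0], [2.0]])

initial = [np.ones((1, 1)), np.zeros(1)]                 # Ones kernel, zero bias
updated = [np.array([[0.12345]]), np.array([0.54321])]  # values from set_weights

print(dense_1x1(x, initial).ravel())
print(dense_1x1(x, updated).ravel())
```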

The base Layer class

trainable weights

: weights that are updated during training

layer1.trainable_weights

non-trainable weights

# trainable = False

# Use this when you want to keep a specific layer's weights fixed at values you chose while the rest of the model trains.

layer1.trainable = False

layer1.trainable_weights #[ ]

layer1.non_trainable_weights
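Freezing changes only which variables the optimizer updates. Below is a plain-NumPy sketch of one gradient-descent step on the 1-unit layer. It assumes a mean-squared-error loss and uses a hypothetical `sgd_step` helper, not a Keras function. With `trainable=False` the kernel and bias come back unchanged, which is the effect of moving them to `non_trainable_weights` in Keras.

```python
import numpy as np

def sgd_step(w, b, x, y, lr=0.1, trainable=True):
    # Hypothetical helper: one SGD step on mean((x*w + b - y)**2)
    if not trainable:
        return w, b                  # frozen: weights stay fixed
    err = x * w + b - y
    grad_w = 2 * np.mean(err * x)
    grad_b = 2 * np.mean(err)
    return w - lr * grad_w, b - lr * grad_b

x = np.array([1.0, 2.0, 3.0])
y = 2 * x                            # target: y = 2x

print(sgd_step(0.12345, 0.54321, x, y, trainable=True))
print(sgd_step(0.12345, 0.54321, x, y, trainable=False))  # (0.12345, 0.54321)
```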

add_weight method

: Adds a new variable to the layer.

# add_weight signature with its default values (tf.keras, TF 2.x), listed for reference

layer1.add_weight(

name=None,

shape=None,

dtype=None,

initializer=None,

regularizer=None,

trainable=None,

constraint=None,

use_resource=None,

synchronization=tf.VariableSynchronization.AUTO,

aggregation=tf.VariableAggregation.NONE,

**kwargs

)

get_weights method

: Returns the current weights of the layer, as NumPy arrays.

layer1.get_weights()

set_weights method

: Sets the weights of the layer, from NumPy arrays.

layer1.set_weights([np.array([[0.12345]]), np.array([0.54321])])

print(layer1.get_weights())

# [array([[0.12345]], dtype=float32), array([0.54321], dtype=float32)]

print(layer1.weights)

# [<tf.Variable 'my_Dense_input/kernel:0' shape=(1, 1) dtype=float32, numpy=array([[0.12345]], dtype=float32)>,

# <tf.Variable 'my_Dense_input/bias:0' shape=(1,) dtype=float32, numpy=array([0.54321], dtype=float32)>]

get_config method

add_loss method

Activation layers

- ReLU layer

- Softmax layer

- LeakyReLU layer

- PReLU layer

- ELU layer

- ThresholdedReLU layer
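Each of these activation layers applies a fixed element-wise formula. PReLU is LeakyReLU with a learned slope. Below is a NumPy sketch of the others. The default arguments follow the Keras docs as I understand them (LeakyReLU slope 0.3, ELU alpha 1.0, ThresholdedReLU theta 1.0), so treat them as assumptions and check them against your Keras version.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def leaky_relu(x, slope=0.3):
    # negative inputs are scaled instead of zeroed
    return np.where(x > 0, x, slope * x)

def elu(x, alpha=1.0):
    # smooth exponential curve for negative inputs
    return np.where(x > 0, x, alpha * (np.exp(x) - 1.0))

def thresholded_relu(x, theta=1.0):
    # passes x only where it exceeds theta
    return np.where(x > theta, x, 0.0)

def softmax(x, axis=-1):
    z = np.exp(x - np.max(x, axis=axis, keepdims=True))  # subtract max for stability
    return z / np.sum(z, axis=axis, keepdims=True)

x = np.array([-2.0, -0.5, 0.5, 2.0])
print(relu(x))
print(leaky_relu(x))
print(elu(x))
print(thresholded_relu(x))
print(softmax(x))
```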

# Ways to apply an activation function.

# 1. pass the name as a string

model = keras.Sequential([

keras.layers.Dense(units=1, input_shape=(1,),

activation='relu'), # ReLU's own parameters cannot be set this way.

])

# 2. pass the function object itself (no parentheses)

model = keras.Sequential([

keras.layers.Dense(units=1, input_shape=(1,),

activation=keras.activations.relu)

])

# 3. add a separate Activation layer, given a string

model = keras.Sequential([

keras.layers.Dense(units=1, input_shape=(1,)),

keras.layers.Activation('relu')

])

# 4. add a separate Activation layer, given a function

model = keras.Sequential([

keras.layers.Dense(units=1, input_shape=(1,)),

keras.layers.Activation(keras.activations.relu)

])

# 5. add a dedicated ReLU layer (accepts arguments such as max_value)

model = keras.Sequential([

keras.layers.Dense(units=1, input_shape=(1,)),

keras.layers.ReLU()

])

Layer weight constraints

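Constraints are applied to a layer's weights after each gradient update. For example, they can cap the norm of the weights (MaxNorm) or force the weights to be non-negative (NonNeg).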

: MaxNorm class / MinMaxNorm class / NonNeg class / UnitNorm class / RadialConstraint class
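Here is a NumPy sketch of two of these projections, following the formulas in the Keras constraint source as I understand them. MaxNorm rescales each weight vector along `axis` so its L2 norm is at most `max_value` (Keras defaults: `max_value=2`, `axis=0`). NonNeg zeroes out negative entries. The epsilon of 1e-7 is assumed to match Keras' backend default.

```python
import numpy as np

EPS = 1e-7  # assumed to match Keras' backend epsilon

def max_norm(w, max_value=2.0, axis=0):
    # rescale each column (axis=0) so its L2 norm is at most max_value
    norms = np.sqrt(np.sum(np.square(w), axis=axis, keepdims=True))
    desired = np.clip(norms, 0, max_value)
    return w * (desired / (EPS + norms))

def non_neg(w):
    # zero out negative weights
    return w * (w >= 0)

w = np.array([[3.0, 0.3],
              [4.0, -0.4]])  # column norms: 5.0 and 0.5

w_max = max_norm(w)
print(np.linalg.norm(w_max, axis=0))  # approximately [2.0, 0.5]
print(non_neg(w))
```

Columns whose norm is already below `max_value` are left almost unchanged. Only the oversized column is shrunk.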

Layers API overview

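- Convolution layers : CNN

- Pooling layers : CNN

- Recurrent layers : RNN (time-series data)

- Preprocessing layers, Normalization layers : do work similar to preprocessing in scikit-learn. Preprocessing layers have no trainable weights, although normalization layers such as BatchNormalization do learn a scale and an offset.

- Regularization layers : layers that prevent overfitting; the Dropout layer is the most commonly used.

- Attention layers : layers used when building a Transformer.

- Reshaping layers

- Merging layers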
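Dropout is the regularization layer singled out in the list above. Here is a NumPy sketch of its behaviour as described in the Keras docs. During training each input is set to 0 with probability `rate`, and the surviving inputs are scaled by 1 / (1 - rate) so the expected sum is unchanged. At inference the layer passes its inputs through untouched. The `dropout` function and the seed are illustrative.

```python
import numpy as np

def dropout(x, rate, training, rng):
    if not training or rate == 0.0:
        return x                                   # inference: identity
    keep = rng.random(x.shape) >= rate             # keep each entry with prob 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)   # scale the survivors up

rng = np.random.default_rng(0)
x = np.ones(10)
y_train = dropout(x, 0.5, training=True, rng=rng)
y_infer = dropout(x, 0.5, training=False, rng=rng)
print(y_train)  # each entry is 0.0 or 2.0
print(y_infer)  # unchanged ones
```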