This post is based on the AWS EKS Workshop Study run by Gasida (서종호) of the CloudNet@ team.

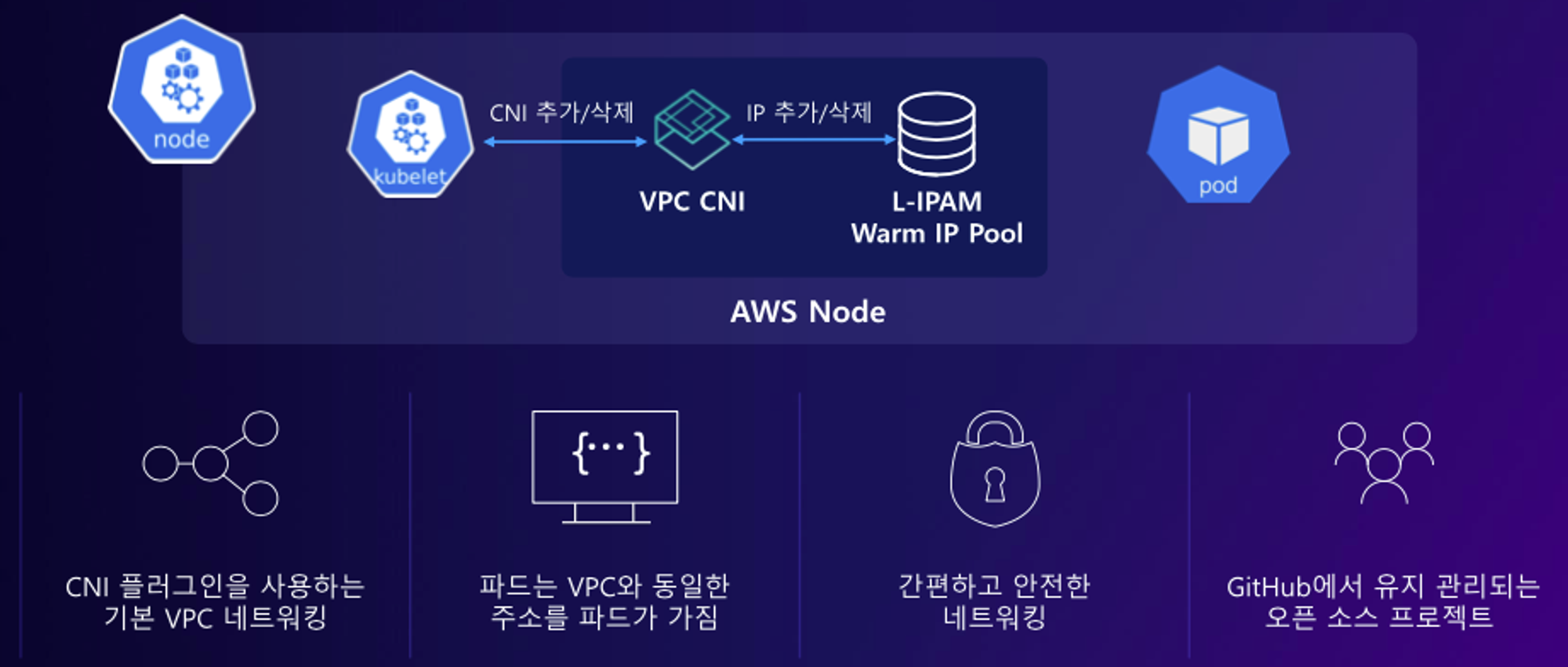

1. AWS VPC CNI

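A Kubernetes CNI (Container Network Interface) plugin builds the cluster's network, and many different CNI plugins exist.

Among them, the AWS VPC CNI gives pods IP addresses from the same VPC range as the nodes, so pods and nodes can communicate with each other directly.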

- Integrated with the VPC, so VPC Flow Logs, routing policies, and security groups can be used

- Pods can use IPs pre-allocated to the VPC ENIs (the Local-IPAM Warm IP Pool)
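Because pod IPs come straight from the VPC subnet, the "same range" claim can be checked with a plain CIDR membership test. A minimal bash sketch, assuming IPv4 only; `ip_to_int` and `ip_in_cidr` are hypothetical helper names, and the sample values (node subnet 192.168.3.0/24, pod IP 192.168.3.95) are taken from node 3 later in this post.

```bash
#!/usr/bin/env bash
# Minimal sketch (IPv4 only): check whether an address falls inside a CIDR.
# ip_to_int / ip_in_cidr are hypothetical helpers, not part of any AWS tool.

# Convert a dotted-quad IPv4 address (e.g. 192.168.3.95) to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# ip_in_cidr IP CIDR -> exit status 0 if IP is inside CIDR, 1 otherwise.
ip_in_cidr() {
  local ip=$1 net=${2%/*} len=${2#*/}
  # Build the netmask; shifting by 32 is avoided for a /0 prefix.
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$ip") & mask ) == ( $(ip_to_int "$net") & mask ) ))
}

# Sample values from node 3 in this post: the pod IP lies in the node subnet.
if ip_in_cidr 192.168.3.95 192.168.3.0/24; then
  echo "pod IP 192.168.3.95 is inside node subnet 192.168.3.0/24"
fi
```

With an overlay CNI the same check would typically fail, because pod IPs come from a separate pod CIDR rather than from the node's subnet.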

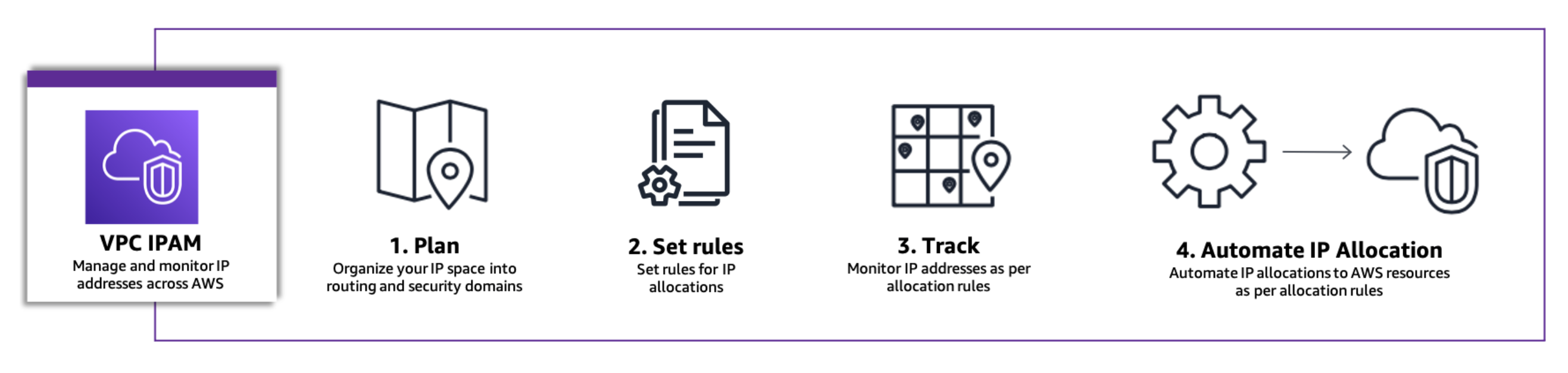

IPAM

AWS VPC IPAM (IP Address Manager) is a feature that uses automated workflows to make it easier to plan, track, and monitor the IP addresses of your workloads.

Core components of IPAM

- scope: the highest-level container in IPAM. IPAM includes two default scopes, a private scope and a public scope, each representing the IP space of a single network. Pools are created inside a scope.

- pool: a collection of contiguous IP address ranges (CIDRs). Pools let you organize IP addresses according to routing and security requirements, and CIDRs from a pool can be allocated to AWS resources.

- allocation: the assignment of a CIDR from a pool to an AWS resource or to another pool.
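At its core, the pool-to-allocation relationship means carving child CIDRs out of a parent range. The bash sketch below is only a conceptual model under that assumption: `allocate` is a hypothetical helper that hands out blocks sequentially, and it neither calls nor mirrors the real AWS IPAM API (which also tracks existing allocations, overlaps, and compliance).

```bash
#!/usr/bin/env bash
# Conceptual sketch only: model "pool -> allocation" as carving fixed-size
# child CIDRs out of a parent pool CIDR. This is NOT the AWS IPAM API.

ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# allocate POOL_CIDR CHILD_PREFIX_LEN COUNT
# Prints up to COUNT consecutive /CHILD_PREFIX_LEN blocks from the pool.
allocate() {
  local base len=${1#*/} child=$2 count=$3 i
  base=$(ip_to_int "${1%/*}")
  if (( child < len )); then
    echo "child prefix must be >= pool prefix" >&2
    return 1
  fi
  local size=$(( 1 << (32 - child) ))   # addresses per child block
  local max=$(( 1 << (child - len) ))   # child blocks that fit in the pool
  for (( i = 0; i < count && i < max; i++ )); do
    echo "$(int_to_ip $(( base + i * size )))/$child"
  done
}

# Hypothetical pool 192.168.0.0/16: hand out the first three /24 allocations.
allocate 192.168.0.0/16 24 3
```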

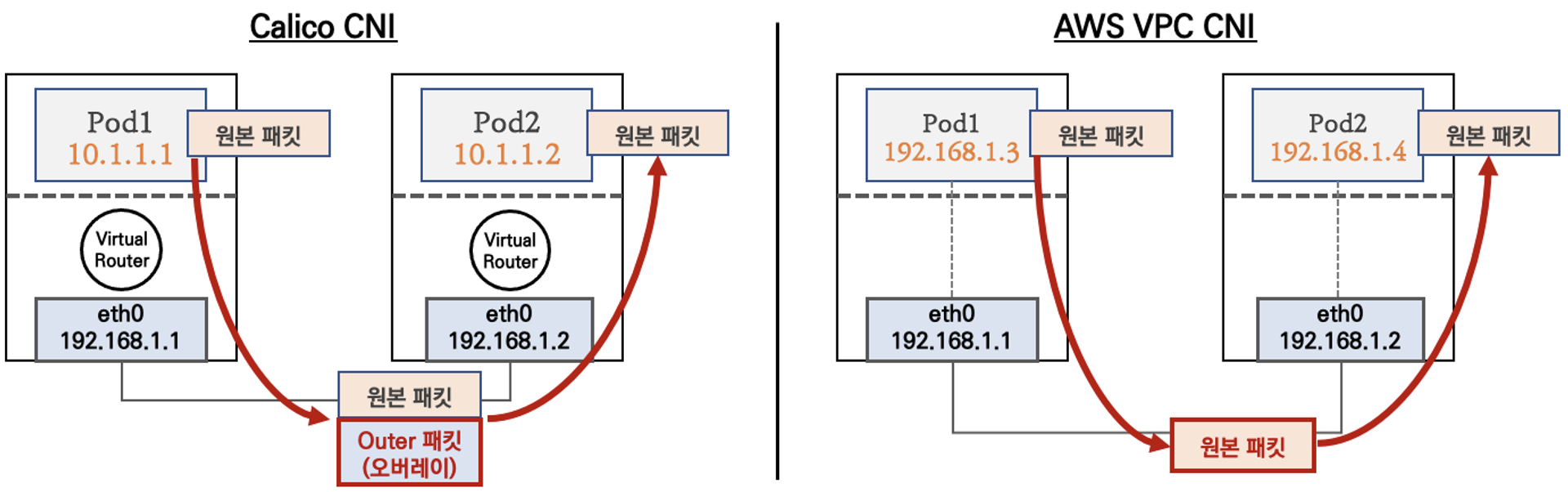

Calico CNI vs AWS VPC CNI

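The AWS VPC CNI puts nodes and pods in the same network range to optimize communication.

For pod-to-pod traffic, Kubernetes CNIs typically use an overlay (VXLAN, IP-in-IP, etc.), whereas the AWS VPC CNI communicates directly within the same range without encapsulation, so its overhead is lower.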
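To make the overhead difference concrete, here is the per-packet header arithmetic for two common overlay modes, measured against the 9001-byte MTU that the nodes' eth0 shows later in this post. It assumes IPv4 outer headers without options and counts only encapsulation bytes, not the CPU cost of encapsulating and decapsulating.

```bash
#!/usr/bin/env bash
# Worked arithmetic: encapsulation overhead per packet and the MTU left for
# pod traffic on a 9001-byte (jumbo frame) ENI. Assumes IPv4, no IP options.

NODE_MTU=9001   # MTU reported for eth0 on the EKS nodes in this post

# VXLAN wraps the whole inner Ethernet frame:
# outer IPv4(20) + UDP(8) + VXLAN header(8) + inner Ethernet header(14)
VXLAN_OVERHEAD=$(( 20 + 8 + 8 + 14 ))
# IP-in-IP adds one extra outer IPv4 header
IPIP_OVERHEAD=20
# AWS VPC CNI: pod packets are ordinary VPC packets, no encapsulation
VPC_CNI_OVERHEAD=0

for mode in VXLAN IPIP VPC_CNI; do
  overhead_var="${mode}_OVERHEAD"
  overhead=${!overhead_var}   # bash indirect expansion
  echo "$mode: overhead=${overhead}B pod_mtu=$(( NODE_MTU - overhead ))"
done
```

This prints a pod MTU of 8951 for VXLAN, 8981 for IP-in-IP, and the full 9001 for the VPC CNI.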

2. Checking basic network information

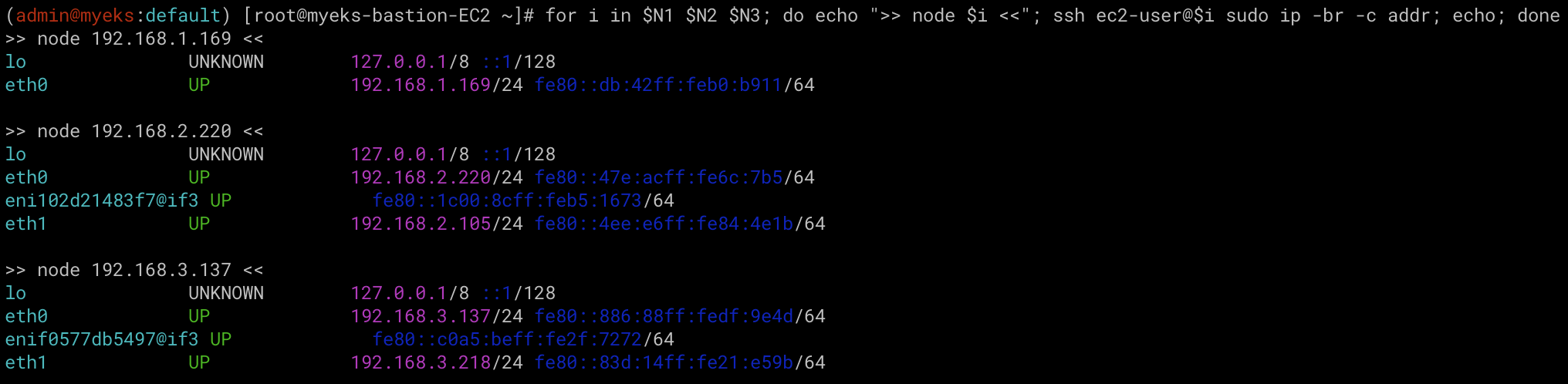

# Check node network information

for i in $N1 $N2 $N3; do echo ">> node $i <<"; ssh ec2-user@$i sudo ip -br -c addr; echo; done

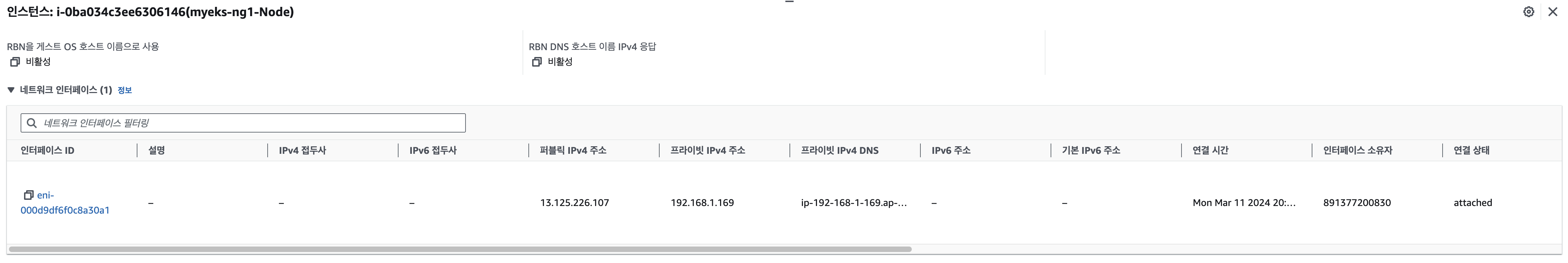

Node 1 has only one ENI, so only eth0 is listed.

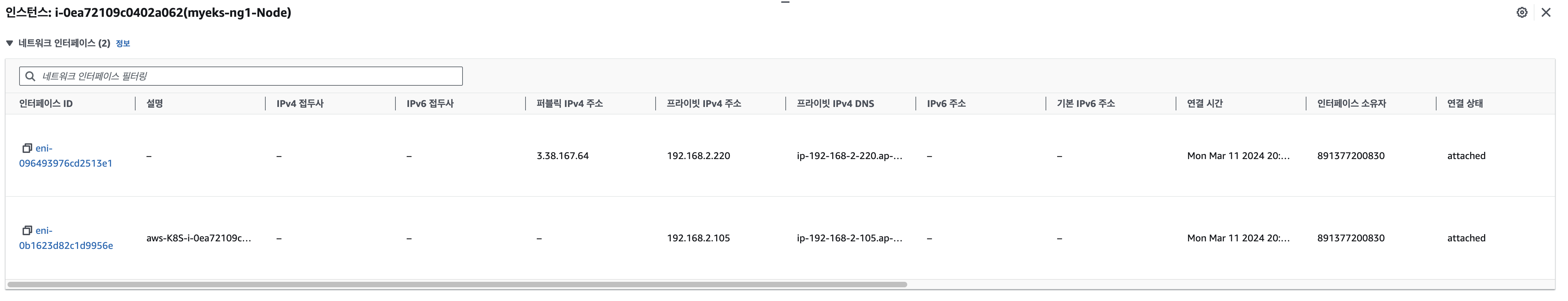

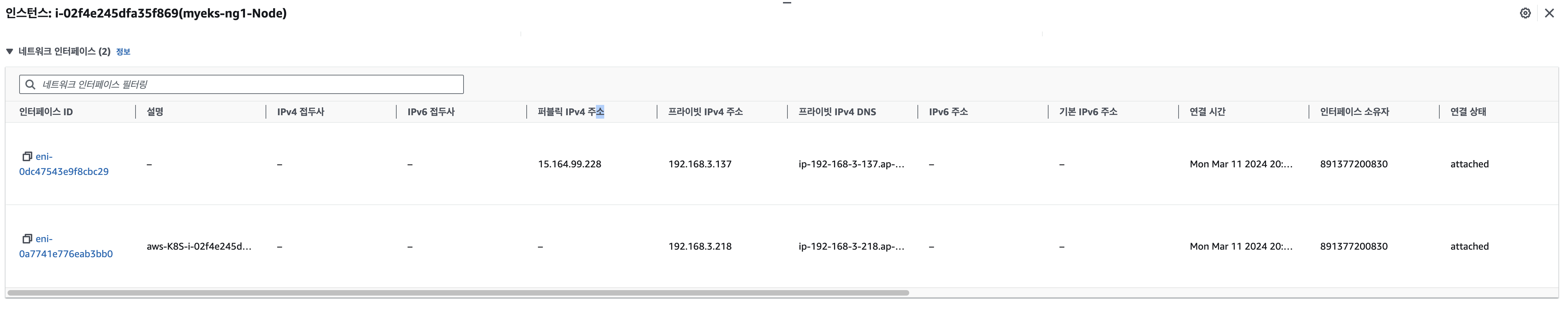

Nodes 2 and 3 each have two ENIs, so both eth0 and eth1 are listed.
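The ENI count per node can also be read directly from `ip -br addr` output. A minimal sketch, assuming the ENIs are named `eth<N>` as on these nodes and that the output is captured without `-c` (color escape codes would break the match); `count_enis` is a hypothetical helper, and the sample is copied from node 3 later in this post.

```bash
#!/usr/bin/env bash
# Minimal sketch: count ENI-backed interfaces (eth0, eth1, ...) in
# `ip -br addr` output. Pod-side veths (eni...) and lo are not counted.

count_enis() {
  # $1: text captured from `ip -br addr` (without the -c color flag)
  awk '$1 ~ /^eth[0-9]+$/ { n++ } END { print n + 0 }' <<< "$1"
}

# Sample copied from node 3 in this post
sample='lo UNKNOWN 127.0.0.1/8 ::1/128
eth0 UP 192.168.3.137/24 fe80::886:88ff:fedf:9e4d/64
enif0577db5497@if3 UP fe80::c0a5:beff:fe2f:7272/64
eth1 UP 192.168.3.218/24 fe80::83d:14ff:fe21:e59b/64
enib0a4f5f75f7@if3 UP fe80::40c3:1ff:fee7:d412/64'

count_enis "$sample"   # node 3: two ENIs
```

Against a live node you could pass it `"$(ssh ec2-user@$N3 ip -br addr)"` instead of the sample.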

Checking instance network information

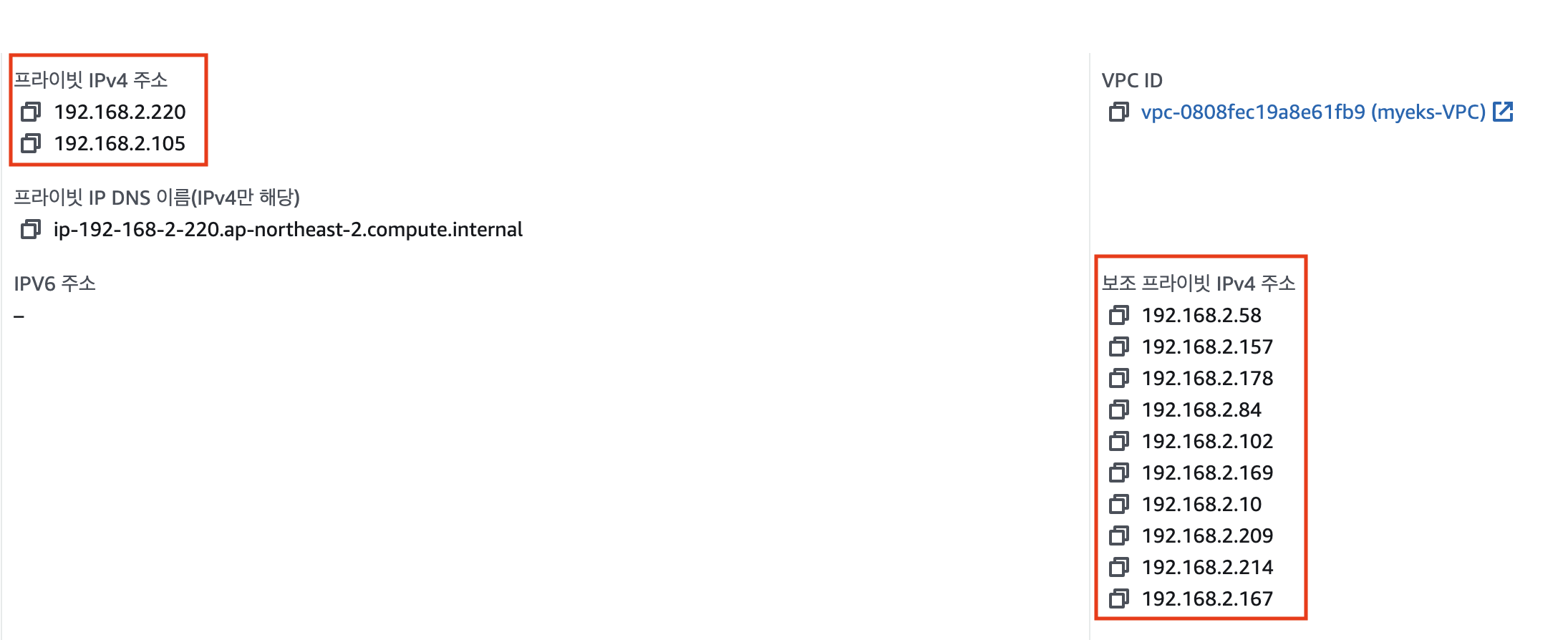

On a t3.medium instance, each ENI can hold 5 secondary private IPs in addition to its own primary IP.
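The ENI and per-ENI IP limits determine how many pods a node can run when the VPC CNI assigns one secondary IP per pod. The sketch below follows the formula AWS uses for its default max-pods values without prefix delegation; `max_pods` is a hypothetical helper, and the t3.medium limits (3 ENIs, 6 IPv4 addresses per ENI) are the published instance-type limits.

```bash
#!/usr/bin/env bash
# Default pod limit when each pod consumes one secondary IP (no prefix
# delegation):
#   max_pods = ENIs * (IPv4 addresses per ENI - 1) + 2
# "- 1" skips each ENI's primary IP; "+ 2" covers host-network pods such as
# aws-node and kube-proxy, which do not consume a secondary IP.

max_pods() {
  local enis=$1 ips_per_eni=$2
  echo $(( enis * (ips_per_eni - 1) + 2 ))
}

# t3.medium: 3 ENIs x 6 IPv4 addresses per ENI (1 primary + 5 secondary)
max_pods 3 6   # 3 * 5 + 2 = 17
```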

Checking whether pods use the secondary private IPs

- The coredns pods are using secondary private IPs of the nodes' ENIs.

kubectl get pod -n kube-system -l k8s-app=kube-dns -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-55474bf7b9-6jm6f 1/1 Running 0 62m 192.168.2.58 ip-192-168-2-220.ap-northeast-2.compute.internal <none> <none>

coredns-55474bf7b9-fsd6c 1/1 Running 0 62m 192.168.3.235 ip-192-168-3-137.ap-northeast-2.compute.internal <none> <none>

Creating pods and checking their network information

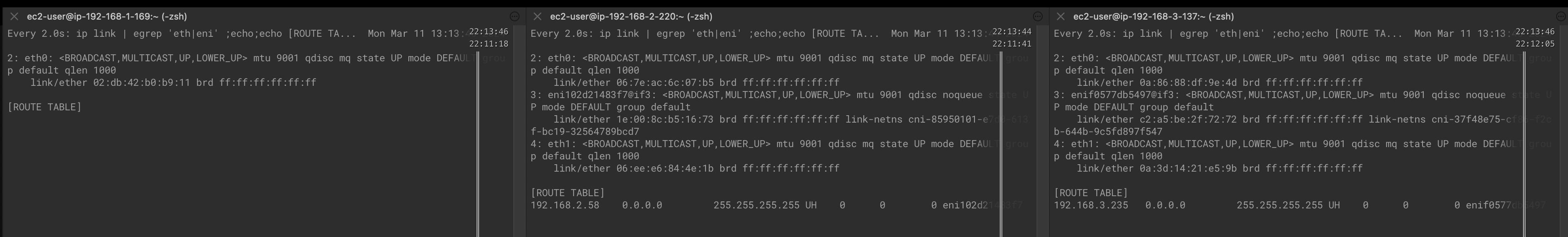

# [Terminals 1-3] Monitor each node

ssh ec2-user@$N1

watch -d "ip link | grep -E 'eth|eni'; echo; echo '[ROUTE TABLE]'; route -n | grep eni"

ssh ec2-user@$N2

watch -d "ip link | grep -E 'eth|eni'; echo; echo '[ROUTE TABLE]'; route -n | grep eni"

ssh ec2-user@$N3

watch -d "ip link | grep -E 'eth|eni'; echo; echo '[ROUTE TABLE]'; route -n | grep eni"

- Create the pods

# Create the test pods (netshoot-pod Deployment, 3 replicas)

cat <<EOF | kubectl create -f -

apiVersion: apps/v1

kind: Deployment

metadata:

  name: netshoot-pod

spec:

  replicas: 3

  selector:

    matchLabels:

      app: netshoot-pod

  template:

    metadata:

      labels:

        app: netshoot-pod

    spec:

      containers:

      - name: netshoot-pod

        image: nicolaka/netshoot

        command: ["tail"]

        args: ["-f", "/dev/null"]

      terminationGracePeriodSeconds: 0

EOF

# Store the pod names in variables (quote the jsonpath so the shell does not glob [0])

PODNAME1=$(kubectl get pod -l app=netshoot-pod -o jsonpath='{.items[0].metadata.name}')

PODNAME2=$(kubectl get pod -l app=netshoot-pod -o jsonpath='{.items[1].metadata.name}')

PODNAME3=$(kubectl get pod -l app=netshoot-pod -o jsonpath='{.items[2].metadata.name}')

# Check the pods

kubectl get pod -o wide

kubectl get pod -o=custom-columns=NAME:.metadata.name,IP:.status.podIP

NAME IP

netshoot-pod-79b47d6c48-dc2x8 192.168.1.80

netshoot-pod-79b47d6c48-h66hc 192.168.3.95

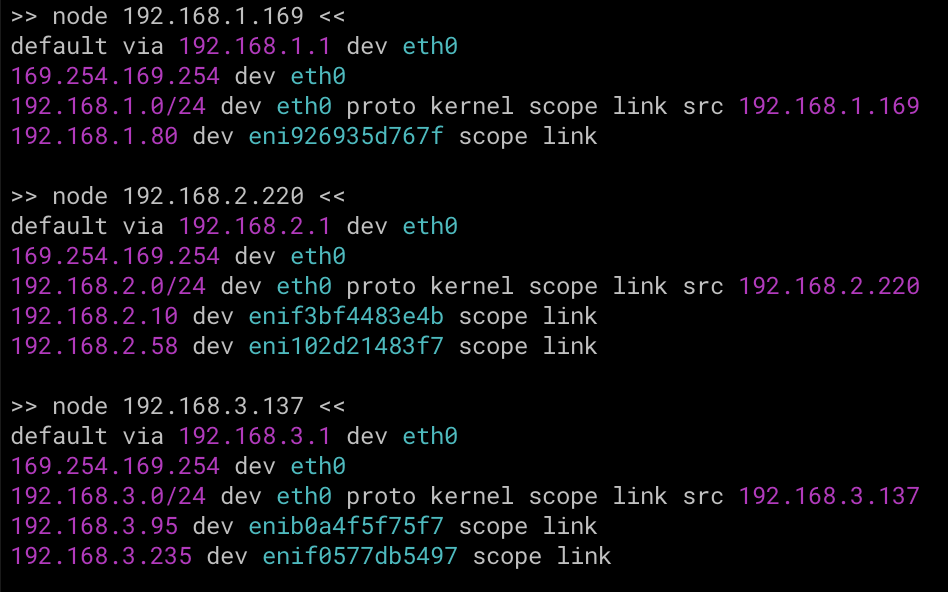

netshoot-pod-79b47d6c48-mns58 192.168.2.10- node 라우팅 정보 확인

for i in $N1 $N2 $N3; do echo ">> node $i <<"; ssh ec2-user@$i sudo ip -c route; echo; done

One netshoot pod was created on each node, and each node gained a host route to that pod's IP via the pod's veth (eni...) interface.
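These per-pod host routes are how a node delivers traffic to its local pods: the kernel picks the most specific route that matches. The bash sketch below replays a longest-prefix match over the node 3 `ip route` output shown later in this section. It is a simplification: the real kernel also weighs metrics, multiple routing tables, and `ip rule` policy rules (which the VPC CNI uses for pods whose IPs live on secondary ENIs). `lookup` and the `ROUTES` format are hypothetical.

```bash
#!/usr/bin/env bash
# Minimal sketch: longest-prefix-match route lookup over node 3's main table.
# Ignores metrics, extra routing tables and ip rule policy routing.

ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# "<prefix> <device>" per line; host routes are /32, the default route is /0
ROUTES='0.0.0.0/0 eth0
169.254.169.254/32 eth0
192.168.3.0/24 eth0
192.168.3.95/32 enib0a4f5f75f7
192.168.3.235/32 enif0577db5497'

# lookup DEST_IP -> prints the device of the most specific matching route
lookup() {
  local dst best_len=-1 best_dev="" prefix dev net len mask
  dst=$(ip_to_int "$1")
  while read -r prefix dev; do
    net=$(ip_to_int "${prefix%/*}"); len=${prefix#*/}
    mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    if (( (dst & mask) == (net & mask) && len > best_len )); then
      best_len=$len; best_dev=$dev
    fi
  done <<< "$ROUTES"
  echo "$best_dev"
}

lookup 192.168.3.95   # netshoot pod on this node -> enib0a4f5f75f7
lookup 192.168.3.10   # another host in the subnet -> eth0
lookup 192.168.2.10   # pod on another node -> default route, eth0
```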

- Check the pods' eniY interface information

ssh ec2-user@$N3

----------------

ip -br -c addr show

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 192.168.3.137/24 fe80::886:88ff:fedf:9e4d/64

enif0577db5497@if3 UP fe80::c0a5:beff:fe2f:7272/64

eth1 UP 192.168.3.218/24 fe80::83d:14ff:fe21:e59b/64

enib0a4f5f75f7@if3 UP fe80::40c3:1ff:fee7:d412/64

ip -c link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether 0a:86:88:df:9e:4d brd ff:ff:ff:ff:ff:ff

3: enif0577db5497@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP mode DEFAULT group default

link/ether c2:a5:be:2f:72:72 brd ff:ff:ff:ff:ff:ff link-netns cni-37f48e75-cf86-f2cb-644b-9c5fd897f547

4: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether 0a:3d:14:21:e5:9b brd ff:ff:ff:ff:ff:ff

5: enib0a4f5f75f7@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP mode DEFAULT group default

link/ether 42:c3:01:e7:d4:12 brd ff:ff:ff:ff:ff:ff link-netns cni-3244f266-d2d4-e4df-5681-460204df3ff7

ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP group default qlen 1000

link/ether 0a:86:88:df:9e:4d brd ff:ff:ff:ff:ff:ff

inet 192.168.3.137/24 brd 192.168.3.255 scope global dynamic eth0

valid_lft 2619sec preferred_lft 2619sec

inet6 fe80::886:88ff:fedf:9e4d/64 scope link

valid_lft forever preferred_lft forever

3: enif0577db5497@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP group default

link/ether c2:a5:be:2f:72:72 brd ff:ff:ff:ff:ff:ff link-netns cni-37f48e75-cf86-f2cb-644b-9c5fd897f547

inet6 fe80::c0a5:beff:fe2f:7272/64 scope link

valid_lft forever preferred_lft forever

4: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP group default qlen 1000

link/ether 0a:3d:14:21:e5:9b brd ff:ff:ff:ff:ff:ff

inet 192.168.3.218/24 brd 192.168.3.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::83d:14ff:fe21:e59b/64 scope link

valid_lft forever preferred_lft forever

5: enib0a4f5f75f7@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP group default

link/ether 42:c3:01:e7:d4:12 brd ff:ff:ff:ff:ff:ff link-netns cni-3244f266-d2d4-e4df-5681-460204df3ff7

inet6 fe80::40c3:1ff:fee7:d412/64 scope link

valid_lft forever preferred_lft forever

ip route # or: route -n

default via 192.168.3.1 dev eth0

169.254.169.254 dev eth0

192.168.3.0/24 dev eth0 proto kernel scope link src 192.168.3.137

192.168.3.95 dev enib0a4f5f75f7 scope link

192.168.3.235 dev enif0577db5497 scope link

# Print the PID of the last-created network namespace (-t net: network namespace type)

sudo lsns -o PID,COMMAND -t net | awk 'NR>2 {print $1}' | tail -n 1

36255

# Store that network namespace PID in a variable

MyPID=$(sudo lsns -o PID,COMMAND -t net | awk 'NR>2 {print $1}' | tail -n 1)

# Use the PID to inspect the pod's network from inside its namespace

sudo nsenter -t $MyPID -n ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

3: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP group default

link/ether 36:25:1f:33:88:50 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.3.95/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::3425:1fff:fe33:8850/64 scope link

valid_lft forever preferred_lft forever

sudo nsenter -t $MyPID -n ip -c route

default via 169.254.1.1 dev eth0

169.254.1.1 dev eth0 scope link
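The `@ifN` suffixes tie the two ends of each veth pair together. Inside the pod namespace, `eth0@if5` means the peer is interface index 5 on the host, which is `enib0a4f5f75f7`, the same device that the host route for 192.168.3.95 points at. A minimal sketch of that lookup, assuming plain `ip link` output without `-c`; `peer_index` and `host_ifname` are hypothetical helpers, and the samples are trimmed copies of the node 3 output above.

```bash
#!/usr/bin/env bash
# Minimal sketch: find the host-side veth paired with a pod's eth0.
# `ip link` prints a veth as "<ifindex>: <name>@if<peer ifindex>: ...".

peer_index() {
  # $1: e.g. "3: eth0@if5: <BROADCAST,...>" -> prints 5
  sed -n 's/^[0-9]*: [^@]*@if\([0-9]*\):.*/\1/p' <<< "$1"
}

host_ifname() {
  # $1: host `ip link` text, $2: ifindex -> prints that interface's name
  awk -v idx="$2" -F': ' '$1 == idx { split($2, a, "@"); print a[1] }' <<< "$1"
}

# Trimmed samples from node 3 (host view and pod namespace view)
HOST_LINKS='1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001
3: enif0577db5497@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001
4: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001
5: enib0a4f5f75f7@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001'
POD_ETH0='3: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001'

host_ifname "$HOST_LINKS" "$(peer_index "$POD_ETH0")"   # enib0a4f5f75f7
```

On the node itself, `host_ifname "$(ip link)" "$(peer_index "$(sudo nsenter -t $MyPID -n ip link show eth0)")"` should print the same name for the namespace selected above.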

-----------------

Connecting to a pod and checking its network information

# Exec into a test pod and start a shell

kubectl exec -it $PODNAME1 -- zsh

# From here on, run inside the pod-1 shell: check network information

----------------------------

ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

3: eth0@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP group default

link/ether ea:8b:bc:bb:5a:bd brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.1.80/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::e88b:bcff:febb:5abd/64 scope link

valid_lft forever preferred_lft forever

ip -c route

default via 169.254.1.1 dev eth0

169.254.1.1 dev eth0 scope link

route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 169.254.1.1 0.0.0.0 UG 0 0 0 eth0

169.254.1.1 0.0.0.0 255.255.255.255 UH 0 0 0 eth0

ping -c 1 192.168.2.10 # <pod-2 IP>

PING 192.168.2.10 (192.168.2.10) 56(84) bytes of data.

64 bytes from 192.168.2.10: icmp_seq=1 ttl=125 time=1.44 ms

--- 192.168.2.10 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 1.437/1.437/1.437/0.000 ms

ps

PID USER TIME COMMAND

1 root 0:00 tail -f /dev/null

78 root 0:00 zsh

172 root 0:00 ps

cat /etc/resolv.conf

search default.svc.cluster.local svc.cluster.local cluster.local ap-northeast-2.compute.internal

nameserver 10.100.0.10

options ndots:5

----------------------------
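`options ndots:5` controls how the `search` list in this resolv.conf is applied. A minimal sketch of the glibc-style rule, assuming the search list and ndots value shown above; `query_order` is a hypothetical helper that only prints candidate names and sends no queries. Other resolvers differ in details: musl libc (used by Alpine-based images), for example, does not apply the search list at all once a name has ndots or more dots.

```bash
#!/usr/bin/env bash
# Minimal sketch of glibc-style search/ndots handling:
# - trailing dot: fully qualified, tried as-is only
# - fewer dots than ndots: each search domain first, then the name as-is
# - ndots or more dots: the name as-is first, then each search domain

SEARCH="default.svc.cluster.local svc.cluster.local cluster.local ap-northeast-2.compute.internal"
NDOTS=5

query_order() {
  local name=$1 dots d
  if [[ $name == *. ]]; then
    echo "$name"; return
  fi
  dots=${name//[^.]/}               # keep only the dots
  if (( ${#dots} >= NDOTS )); then
    echo "$name"
  fi
  for d in $SEARCH; do              # word-split the search list on purpose
    echo "$name.$d"
  done
  if (( ${#dots} < NDOTS )); then
    echo "$name"
  fi
}

query_order google.com   # four search-domain candidates, then google.com
```

Because `google.com` has only one dot, every search domain is tried before the bare name, which is why external lookups from a pod can generate several extra queries to the cluster DNS at 10.100.0.10.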