This post is based on the AWS EKS Workshop Study led by Gasida (서종호) of the CloudNet@ team.

1. Service & AWS LoadBalancer Controller

Service types

-

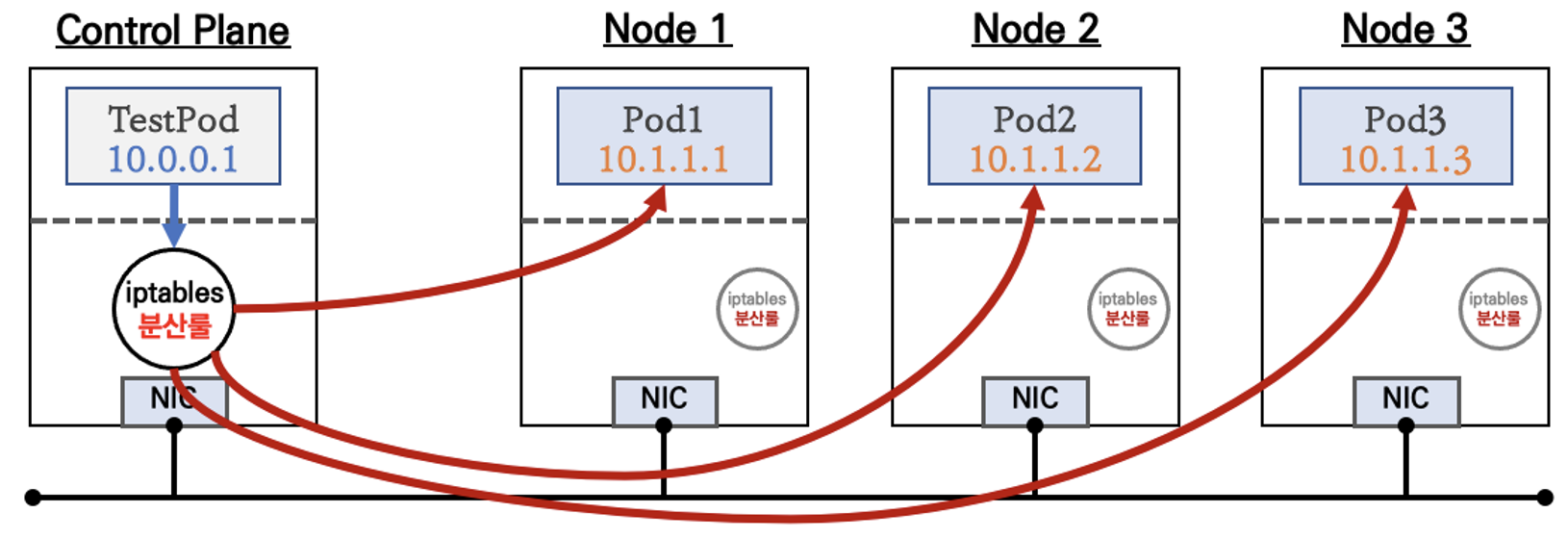

Cluster IP

When a client (TestPod) connects to the CLUSTER-IP, the iptables rules on that node (random distribution) apply DNAT, and the traffic is delivered to a destination (backend) pod.

-

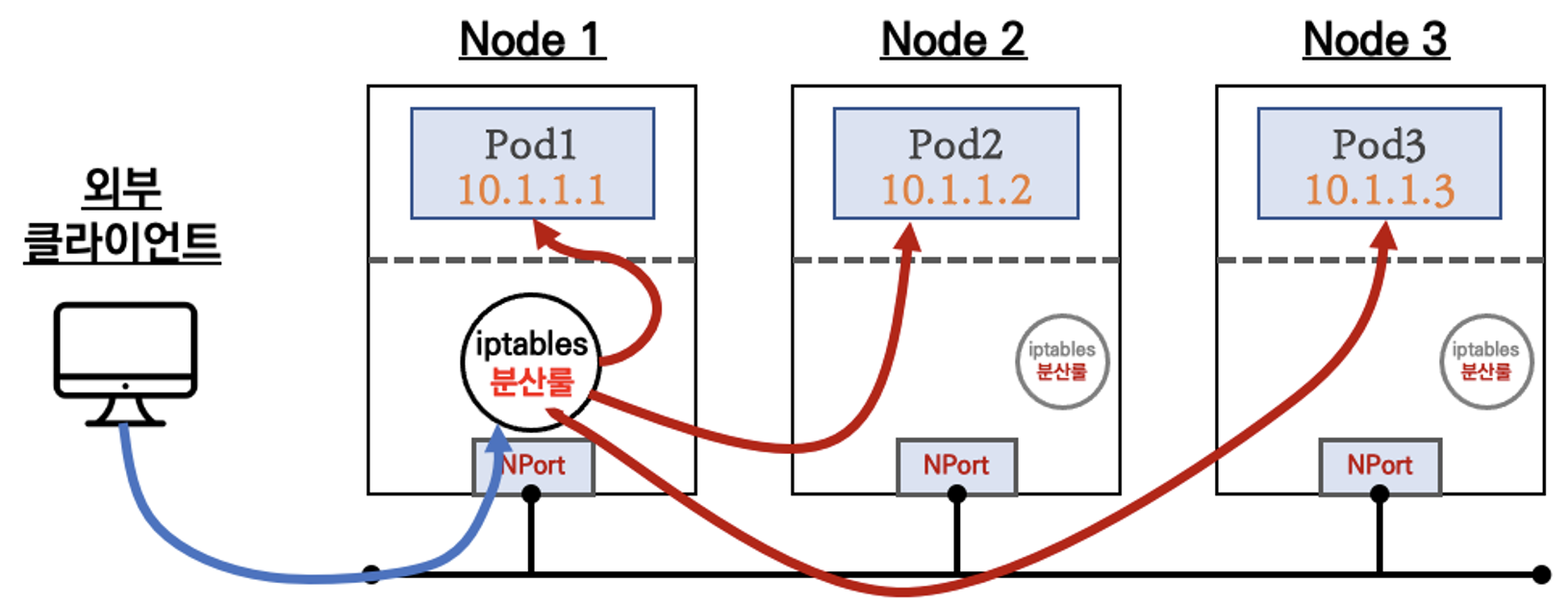

NodePort

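When an external client connects to 'NodeIP:NodePort', the iptables rules on that node apply SNAT/DNAT and forward the traffic to a destination pod. Return traffic leaves through the node that first received the connection.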

-

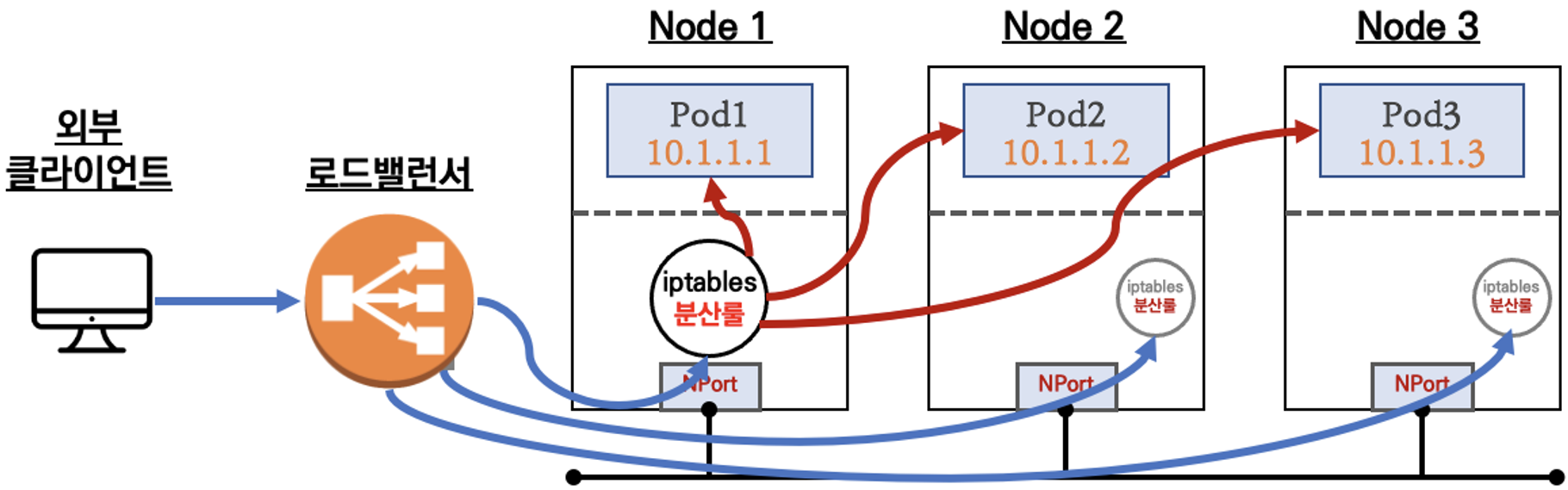

LoadBalancer

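When an external client connects to the load balancer, traffic is distributed across the nodes, and each node's iptables rules then forward it to a destination pod.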

-

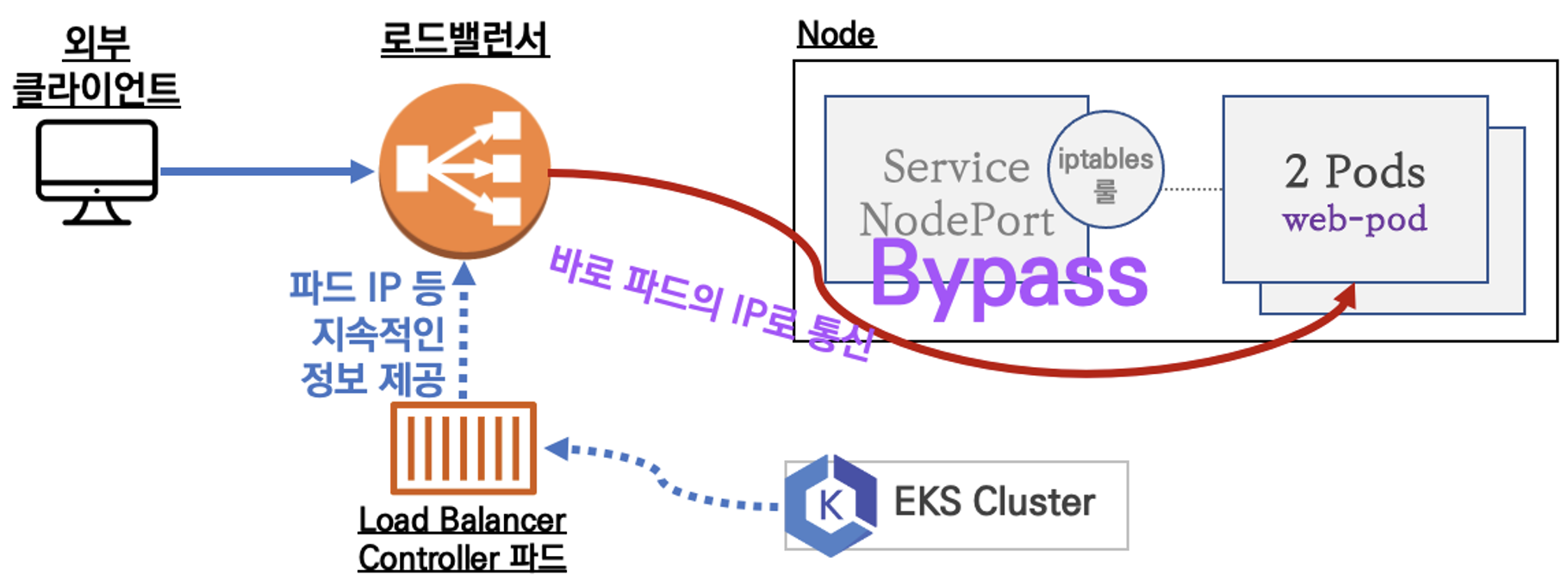

LoadBalancer Controller

The LoadBalancer Controller continuously supplies the load balancer with the pods' network information, so the load balancer sends traffic directly to the pods.

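NLB mode summary

-

Instance type

externalTrafficPolicy: Cluster

Traffic is balanced twice (by the NLB, then by iptables) and SNAT is applied, so the client IP cannot be seen (default mode for the LoadBalancer type)

externalTrafficPolicy: Local

Traffic is balanced once and the client IP is preserved; the worker node's iptables rules are still used

-

IP type (requires the AWS Load Balancer Controller pod and its IAM policy)

Proxy Protocol v2 disabled

Traffic goes straight from the NLB to the pod, but the source address is SNATed to the NLB's address, so the client IP cannot be seen

Proxy Protocol v2 enabled

Traffic goes straight from the NLB to the pod and the client IP is available (the application must be configured to understand PPv2)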
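The modes summarized above map onto Service settings roughly as follows. This is a minimal sketch rather than a manifest from the study material: the Service names, the `app: demo` selector, and the ports are hypothetical, while the annotations are the ones the AWS Load Balancer Controller reads.

```yaml
# Instance target type, keeping the client IP with externalTrafficPolicy: Local
apiVersion: v1
kind: Service
metadata:
  name: svc-nlb-instance-local      # hypothetical name
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: instance
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
spec:
  type: LoadBalancer
  loadBalancerClass: service.k8s.aws/nlb
  externalTrafficPolicy: Local      # the default is Cluster
  selector:
    app: demo                       # hypothetical label
  ports:
    - port: 80
      targetPort: 8080
      protocol: TCP
---
# IP target type with Proxy Protocol v2 enabled
apiVersion: v1
kind: Service
metadata:
  name: svc-nlb-ip-ppv2             # hypothetical name
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
    service.beta.kubernetes.io/aws-load-balancer-proxy-protocol: "*"
spec:
  type: LoadBalancer
  loadBalancerClass: service.k8s.aws/nlb
  selector:
    app: demo                       # hypothetical label
  ports:
    - port: 80
      targetPort: 8080
      protocol: TCP
```

With the second Service, the backend must be able to parse the PPv2 header; an application that does not expect it will typically reject the connection.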

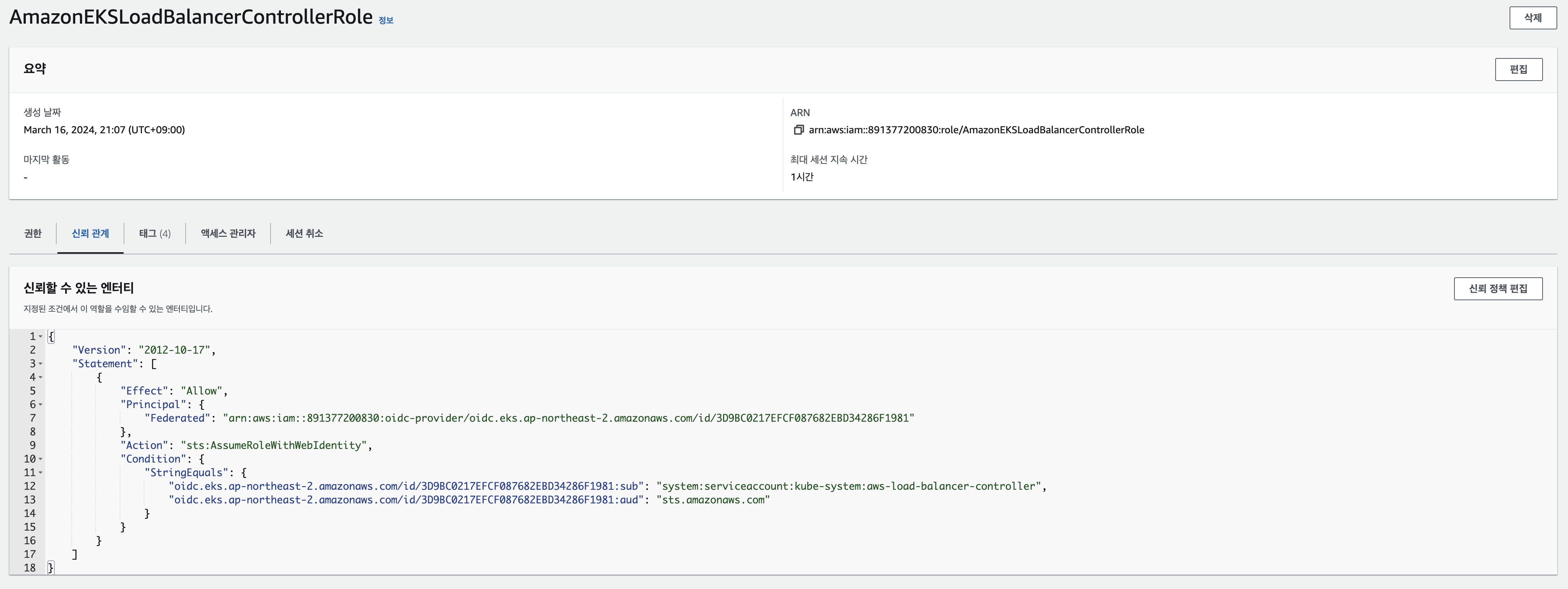

Deploying the AWS Load Balancer Controller with IRSA

# Check the OIDC provider

aws eks describe-cluster --name $CLUSTER_NAME --query "cluster.identity.oidc.issuer" --output text

aws iam list-open-id-connect-providers | jq

# Create the IAM policy (AWSLoadBalancerControllerIAMPolicy)

curl -O https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.5.4/docs/install/iam_policy.json

aws iam create-policy --policy-name AWSLoadBalancerControllerIAMPolicy --policy-document file://iam_policy.json

# If the IAM policy already exists but is outdated, publish a new policy version as below

# aws iam create-policy-version ~~~

# Check the ARN of the created IAM policy

aws iam list-policies --scope Local | jq

aws iam get-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy | jq

aws iam get-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy --query 'Policy.Arn'

# Create a ServiceAccount for the AWS Load Balancer Controller >> the matching IAM role is created automatically through CloudFormation

# This creates the IAM role, creates a Kubernetes service account named aws-load-balancer-controller in the kube-system namespace, and annotates that service account with the IAM role's ARN

eksctl create iamserviceaccount --cluster=$CLUSTER_NAME --namespace=kube-system --name=aws-load-balancer-controller --role-name AmazonEKSLoadBalancerControllerRole \

--attach-policy-arn=arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy --override-existing-serviceaccounts --approve

## Check the IRSA information

eksctl get iamserviceaccount --cluster $CLUSTER_NAME

## Check the service account

kubectl get serviceaccounts -n kube-system aws-load-balancer-controller -o yaml | yh
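The part of that output worth checking is the role annotation, which is what links the service account to the IAM role. A sketch of the relevant fields, assuming the role name passed to eksctl above; `<ACCOUNT_ID>` is a placeholder and other metadata (labels, timestamps) is omitted:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: aws-load-balancer-controller
  namespace: kube-system
  annotations:
    # <ACCOUNT_ID> is a placeholder for your own AWS account ID
    eks.amazonaws.com/role-arn: arn:aws:iam::<ACCOUNT_ID>:role/AmazonEKSLoadBalancerControllerRole
```

If the annotation is missing, the controller pods fall back to the node's IAM role and usually fail with access-denied errors when calling the ELB APIs.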

# Install the Helm chart

helm repo add eks https://aws.github.io/eks-charts

helm repo update

helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \

--set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

## Verify the installation : aws-load-balancer-controller:v2.7.1

kubectl get crd

kubectl get deployment -n kube-system aws-load-balancer-controller

kubectl describe deploy -n kube-system aws-load-balancer-controller

kubectl describe deploy -n kube-system aws-load-balancer-controller | grep 'Service Account'

Service Account: aws-load-balancer-controller

# Check the ClusterRoleBinding and ClusterRole

kubectl describe clusterrolebindings.rbac.authorization.k8s.io aws-load-balancer-controller-rolebinding

kubectl describe clusterroles.rbac.authorization.k8s.io aws-load-balancer-controller-role

...

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

targetgroupbindings.elbv2.k8s.aws [] [] [create delete get list patch update watch]

events [] [] [create patch]

ingresses [] [] [get list patch update watch]

services [] [] [get list patch update watch]

ingresses.extensions [] [] [get list patch update watch]

services.extensions [] [] [get list patch update watch]

ingresses.networking.k8s.io [] [] [get list patch update watch]

services.networking.k8s.io [] [] [get list patch update watch]

endpoints [] [] [get list watch]

namespaces [] [] [get list watch]

nodes [] [] [get list watch]

pods [] [] [get list watch]

endpointslices.discovery.k8s.io [] [] [get list watch]

ingressclassparams.elbv2.k8s.aws [] [] [get list watch]

ingressclasses.networking.k8s.io [] [] [get list watch]

ingresses/status [] [] [update patch]

pods/status [] [] [update patch]

services/status [] [] [update patch]

targetgroupbindings/status [] [] [update patch]

ingresses.elbv2.k8s.aws/status [] [] [update patch]

pods.elbv2.k8s.aws/status [] [] [update patch]

services.elbv2.k8s.aws/status [] [] [update patch]

targetgroupbindings.elbv2.k8s.aws/status [] [] [update patch]

ingresses.extensions/status [] [] [update patch]

pods.extensions/status [] [] [update patch]

services.extensions/status [] [] [update patch]

targetgroupbindings.extensions/status [] [] [update patch]

ingresses.networking.k8s.io/status [] [] [update patch]

pods.networking.k8s.io/status [] [] [update patch]

services.networking.k8s.io/status [] [] [update patch]

targetgroupbindings.networking.k8s.io/status [] [] [update patch]

- Check the trust relationship of the created IAM role

Service/pod deployment test with NLB

# Monitoring

watch -d kubectl get pod,svc,ep

# Bastion EC2 - create the Deployment & Service

curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/2/echo-service-nlb.yaml

cat echo-service-nlb.yaml | yh

kubectl apply -f echo-service-nlb.yaml

# Verify

kubectl get deploy,pod

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/deploy-echo 2/2 2 2 10s

NAME READY STATUS RESTARTS AGE

pod/deploy-echo-7f579ff9d7-gp67t 1/1 Running 0 10s

pod/deploy-echo-7f579ff9d7-rdct9 1/1 Running 0 10s

kubectl get svc,ep,ingressclassparams,targetgroupbindings

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 76m

service/svc-nlb-ip-type LoadBalancer 10.100.180.98 k8s-default-svcnlbip-088e7c2435-82dca2e656c7458d.elb.ap-northeast-2.amazonaws.com 80:31883/TCP 45s

NAME ENDPOINTS AGE

endpoints/kubernetes 192.168.1.143:443,192.168.2.205:443 76m

endpoints/svc-nlb-ip-type 192.168.1.132:8080,192.168.3.164:8080 45s

NAME GROUP-NAME SCHEME IP-ADDRESS-TYPE AGE

ingressclassparams.elbv2.k8s.aws/alb 4m52s

NAME SERVICE-NAME SERVICE-PORT TARGET-TYPE AGE

targetgroupbinding.elbv2.k8s.aws/k8s-default-svcnlbip-9db68e0f86 svc-nlb-ip-type 80 ip 41s

kubectl get targetgroupbindings -o json | jq

{

"apiVersion": "v1",

"items": [

{

"apiVersion": "elbv2.k8s.aws/v1beta1",

"kind": "TargetGroupBinding",

"metadata": {

"creationTimestamp": "2024-03-16T12:12:15Z",

"finalizers": [

"elbv2.k8s.aws/resources"

],

"generation": 1,

"labels": {

"service.k8s.aws/stack-name": "svc-nlb-ip-type",

"service.k8s.aws/stack-namespace": "default"

},

"name": "k8s-default-svcnlbip-9db68e0f86",

"namespace": "default",

"resourceVersion": "14865",

"uid": "4ef37f6a-3eef-4443-ab48-fd6f7da545fb"

},

"spec": {

"ipAddressType": "ipv4",

"networking": {

"ingress": [

{

"from": [

{

"securityGroup": {

"groupID": "sg-0a18efd205568b412"

}

}

],

"ports": [

{

"port": 8080,

"protocol": "TCP"

}

]

}

]

},

"serviceRef": {

"name": "svc-nlb-ip-type",

"port": 80

},

"targetGroupARN": "arn:aws:elasticloadbalancing:ap-northeast-2:891377200830:targetgroup/k8s-default-svcnlbip-9db68e0f86/e3db5d04de01f5e1",

"targetType": "ip"

},

"status": {

"observedGeneration": 1

}

}

],

"kind": "List",

"metadata": {

"resourceVersion": ""

}

}

# (Optional) For a faster lab, shorten the deregistration delay (draining interval) : the default is 300 seconds

vi echo-service-nlb.yaml

..

apiVersion: v1

kind: Service

metadata:

name: svc-nlb-ip-type

annotations:

service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip

service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing

service.beta.kubernetes.io/aws-load-balancer-healthcheck-port: "8080"

service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"

service.beta.kubernetes.io/aws-load-balancer-target-group-attributes: deregistration_delay.timeout_seconds=60

...

:wq!

kubectl apply -f echo-service-nlb.yaml

# Check the AWS ELB (NLB) information

aws elbv2 describe-load-balancers | jq

aws elbv2 describe-load-balancers --query 'LoadBalancers[*].State.Code' --output text

ALB_ARN=$(aws elbv2 describe-load-balancers --query 'LoadBalancers[?contains(LoadBalancerName, `k8s-default-svcnlbip`) == `true`].LoadBalancerArn' | jq -r '.[0]')

aws elbv2 describe-target-groups --load-balancer-arn $ALB_ARN | jq

TARGET_GROUP_ARN=$(aws elbv2 describe-target-groups --load-balancer-arn $ALB_ARN | jq -r '.TargetGroups[0].TargetGroupArn')

aws elbv2 describe-target-health --target-group-arn $TARGET_GROUP_ARN | jq

{

"TargetHealthDescriptions": [

{

"Target": {

"Id": "192.168.1.132",

"Port": 8080,

"AvailabilityZone": "ap-northeast-2a"

},

"HealthCheckPort": "8080",

"TargetHealth": {

"State": "healthy"

}

},

{

"Target": {

"Id": "192.168.3.164",

"Port": 8080,

"AvailabilityZone": "ap-northeast-2c"

},

"HealthCheckPort": "8080",

"TargetHealth": {

"State": "healthy"

}

}

]

}

# Get the web access URL

kubectl get svc svc-nlb-ip-type -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' | awk '{ print "Pod Web URL = http://"$1 }'

# Monitor pod logs

kubectl logs -l app=deploy-websrv -f

# Verify load distribution

NLB=$(kubectl get svc svc-nlb-ip-type -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')

curl -s $NLB

for i in {1..100}; do curl -s $NLB | grep Hostname ; done | sort | uniq -c | sort -nr

52 Hostname: deploy-echo-7f579ff9d7-gp67t

48 Hostname: deploy-echo-7f579ff9d7-rdct9

# Continuous connection attempts : useful when inspecting the detailed behavior below (packet dumps, etc.)

while true; do curl -s --connect-timeout 1 $NLB | egrep 'Hostname|client_address'; echo "----------" ; date "+%Y-%m-%d %H:%M:%S" ; sleep 1; done

Hostname: deploy-echo-7f579ff9d7-rdct9

client_address=192.168.3.48

----------

2024-03-16 21:19:29

Hostname: deploy-echo-7f579ff9d7-gp67t

client_address=192.168.1.189

----------

2024-03-16 21:19:30

Hostname: deploy-echo-7f579ff9d7-gp67t

client_address=192.168.3.48

----------

2024-03-16 21:19:31

Hostname: deploy-echo-7f579ff9d7-rdct9

client_address=192.168.2.65

----------

2024-03-16 21:19:32

Hostname: deploy-echo-7f579ff9d7-rdct9

client_address=192.168.1.189

----------

2024-03-16 21:19:33

Hostname: deploy-echo-7f579ff9d7-gp67t

client_address=192.168.3.48

----------

2024-03-16 21:19:34

Hostname: deploy-echo-7f579ff9d7-gp67t

client_address=192.168.2.65

----------

2024-03-16 21:19:35

Hostname: deploy-echo-7f579ff9d7-rdct9

client_address=192.168.1.189

----------

2024-03-16 21:19:36

Hostname: deploy-echo-7f579ff9d7-gp67t

client_address=192.168.3.48

----------

Verify auto discovery behavior

while true; do aws elbv2 describe-target-health --target-group-arn $TARGET_GROUP_ARN --output text; echo; done

# Bastion EC2 - scale to 1 pod

kubectl scale deployment deploy-echo --replicas=1

# Verify

kubectl get deploy,pod,svc,ep

curl -s $NLB

for i in {1..100}; do curl -s --connect-timeout 1 $NLB | grep Hostname ; done | sort | uniq -c | sort -nr

# Bastion EC2 - scale to 3 pods

kubectl scale deployment deploy-echo --replicas=3

# Verify : see what happens when you connect 100 times while the new NLB targets are still in the initial state

kubectl get deploy,pod,svc,ep

curl -s $NLB

for i in {1..100}; do curl -s --connect-timeout 1 $NLB | grep Hostname ; done | sort | uniq -c | sort -nr

#

kubectl describe deploy -n kube-system aws-load-balancer-controller | grep -i 'Service Account'

Service Account: aws-load-balancer-controller

# [AWS LB Ctrl] Check the ClusterRoleBinding

kubectl describe clusterrolebindings.rbac.authorization.k8s.io aws-load-balancer-controller-rolebinding

# [AWS LB Ctrl] Check the ClusterRole

kubectl describe clusterroles.rbac.authorization.k8s.io aws-load-balancer-controller-role

2. Ingress

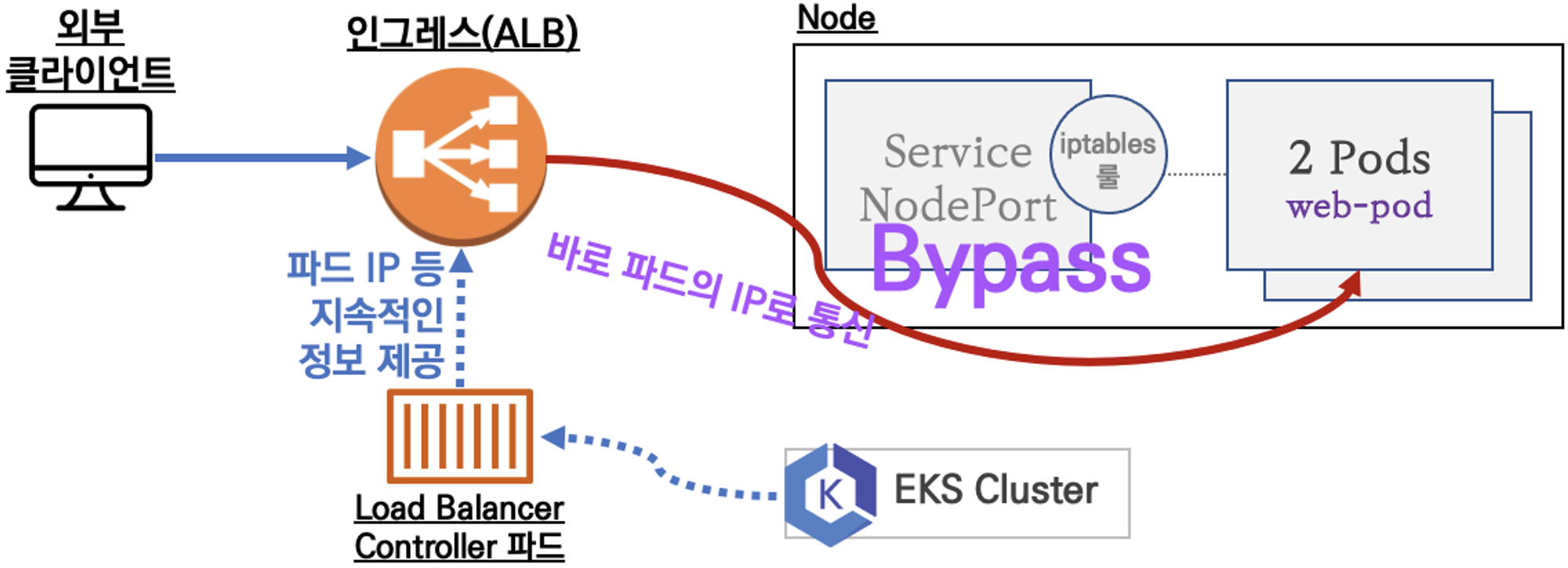

Exposes services inside the cluster (ClusterIP, NodePort, LoadBalancer) to the outside over HTTP/HTTPS, acting as a web proxy

AWS Load Balancer Controller + Ingress (ALB) in IP mode with the AWS VPC CNI

Service/pod deployment test with Ingress (ALB)

# Deploy the game pods, Service, and Ingress

curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/ingress1.yaml

cat ingress1.yaml | yh

kubectl apply -f ingress1.yaml
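The contents of ingress1.yaml are not reproduced in this post. As a rough sketch of what an ALB Ingress in IP mode looks like, the fragment below reuses the resource names that appear in the output further down (game-2048, ingress-2048, service-2048, IngressClass alb); the path rule is an assumption, so treat `cat ingress1.yaml` as the source of truth.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: game-2048
  name: ingress-2048
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    # ip: the ALB registers pod IPs as targets (instance: node IP + NodePort)
    alb.ingress.kubernetes.io/target-type: ip
spec:
  ingressClassName: alb
  rules:
    - http:
        paths:
          - path: /              # assumed catch-all rule
            pathType: Prefix
            backend:
              service:
                name: service-2048
                port:
                  number: 80
```

With `target-type: ip`, the target group lists pod IPs directly, which is why the TargetGroupBinding below reports TARGET-TYPE ip.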

# Monitoring

watch -d kubectl get pod,ingress,svc,ep -n game-2048

# Verify creation

kubectl get-all -n game-2048

kubectl get ingress,svc,ep,pod -n game-2048

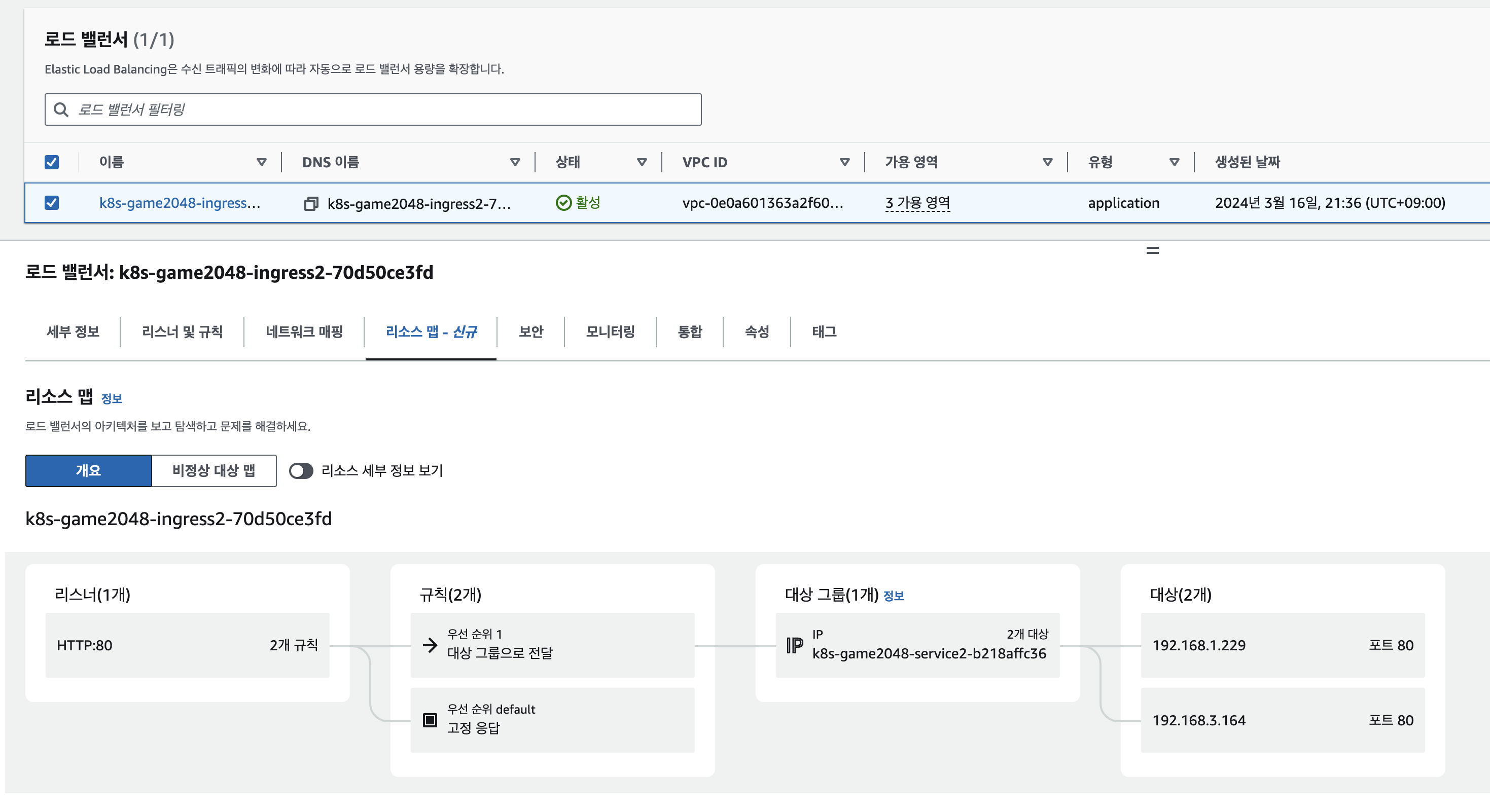

kubectl get targetgroupbindings -n game-2048

NAME SERVICE-NAME SERVICE-PORT TARGET-TYPE AGE

k8s-game2048-service2-e48050abac service-2048 80 ip 87s

# Verify that the ALB was created

aws elbv2 describe-load-balancers --query 'LoadBalancers[?contains(LoadBalancerName, `k8s-game2048`) == `true`]' | jq

ALB_ARN=$(aws elbv2 describe-load-balancers --query 'LoadBalancers[?contains(LoadBalancerName, `k8s-game2048`) == `true`].LoadBalancerArn' | jq -r '.[0]')

aws elbv2 describe-target-groups --load-balancer-arn $ALB_ARN

TARGET_GROUP_ARN=$(aws elbv2 describe-target-groups --load-balancer-arn $ALB_ARN | jq -r '.TargetGroups[0].TargetGroupArn')

aws elbv2 describe-target-health --target-group-arn $TARGET_GROUP_ARN | jq

# Check the Ingress

kubectl describe ingress -n game-2048 ingress-2048

kubectl get ingress -n game-2048 ingress-2048 -o jsonpath="{.status.loadBalancer.ingress[*].hostname}{'\n'}"

# Access the game : open the ALB address in a browser

kubectl get ingress -n game-2048 ingress-2048 -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' | awk '{ print "Game URL = http://"$1 }'

Game URL = http://k8s-game2048-ingress2-70d50ce3fd-734993885.ap-northeast-2.elb.amazonaws.com

# Check the pod IPs

kubectl get pod -n game-2048 -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-2048-75db5866dd-268cv 1/1 Running 0 93s 192.168.3.164 ip-192-168-3-215.ap-northeast-2.compute.internal <none> <none>

deployment-2048-75db5866dd-q7wqz 1/1 Running 0 93s 192.168.1.229 ip-192-168-1-158.ap-northeast-2.compute.internal <none> <none>

-

Check the ALB target group

-

Verify access

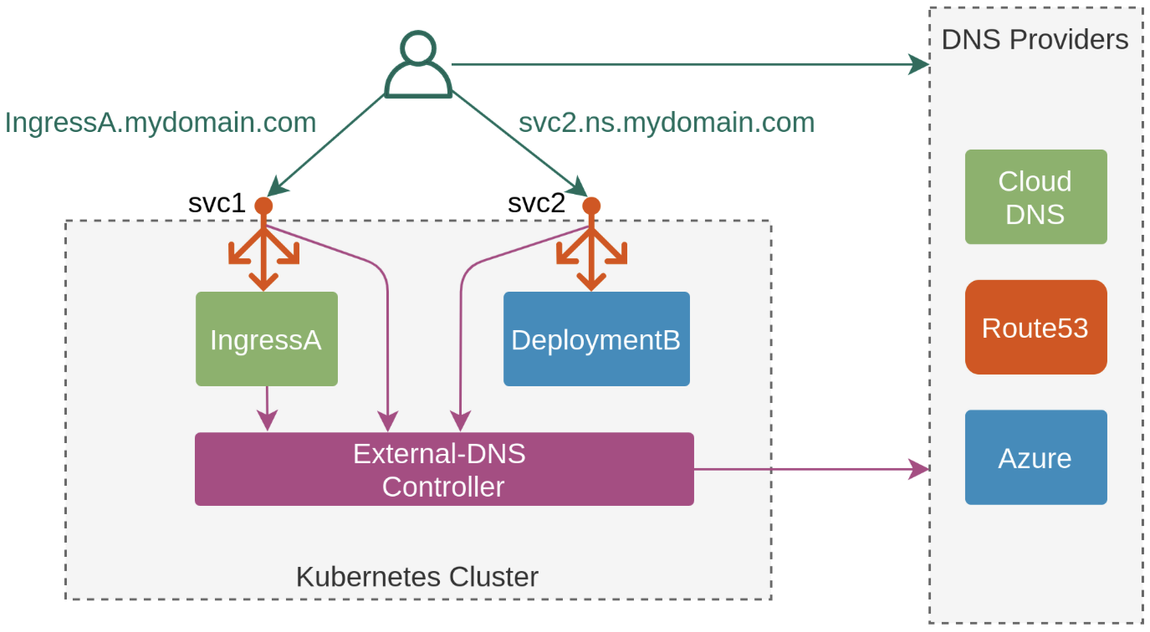

3. ExternalDNS

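When a domain is configured on a Kubernetes Service or Ingress, ExternalDNS automatically creates and deletes the corresponding A record (along with a TXT record) in AWS (Route 53), Azure (DNS), or GCP (Cloud DNS).

Check AWS Route 53 information & set variables

# Set your domain as a variable

MyDomain=<your-domain>

MyDomain=tkops.click

echo "export MyDomain=tkops.click" >> /etc/profile

# Look up your Route 53 hosted zone ID and store it in a variable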

aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." | jq

aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Name"

aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text

MyDnzHostedZoneId=`aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text`

echo $MyDnzHostedZoneId

# (Optional) Query the first NS record set

aws route53 list-resource-record-sets --output json --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'NS']" | jq -r '.[0].ResourceRecords[].Value'

# (Optional) Query all A records

aws route53 list-resource-record-sets --output json --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'A']"

# Query A records

aws route53 list-resource-record-sets --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'A']" | jq

aws route53 list-resource-record-sets --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'A'].Name" | jq

aws route53 list-resource-record-sets --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'A'].Name" --output text

# Query A records repeatedly

while true; do aws route53 list-resource-record-sets --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'A']" | jq ; date ; echo ; sleep 1; done

Installing ExternalDNS

# The node IAM role was already configured when the EKS cluster was deployed

# eksctl create cluster ... --external-dns-access ...

#

MyDomain=<your-domain>

MyDomain=tkops.click

# Look up your Route 53 hosted zone ID and store it in a variable

MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)

# Check the variables

echo $MyDomain, $MyDnzHostedZoneId

# Deploy ExternalDNS

curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml

sed -i "s/0.13.4/0.14.0/g" externaldns.yaml

cat externaldns.yaml | yh

MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -
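The `envsubst` step above fills `${MyDomain}` and `${MyDnzHostedZoneId}` into the manifest before it is applied. The file itself is not shown in this post, so the fragment below is only a sketch of how an ExternalDNS container commonly consumes those two values. The flags are real ExternalDNS options, but which ones externaldns.yaml actually sets is an assumption.

```yaml
# Fragment of a Deployment pod spec; NOT the actual contents of externaldns.yaml
containers:
  - name: external-dns
    image: registry.k8s.io/external-dns/external-dns:v0.14.0
    args:
      - --source=service                       # watch Services
      - --source=ingress                       # watch Ingresses
      - --domain-filter=${MyDomain}            # only manage records in this domain
      - --provider=aws
      - --policy=sync                          # sync also deletes records; upsert-only never deletes
      - --aws-zone-type=public
      - --registry=txt                         # track ownership with TXT records
      - --txt-owner-id=${MyDnzHostedZoneId}    # assumed use of the hosted zone ID
```

The TXT registry is the reason a TXT record appears next to every A record that ExternalDNS creates.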

# Verify and monitor the logs

kubectl get pod -l app.kubernetes.io/name=external-dns -n kube-system

NAME READY STATUS RESTARTS AGE

external-dns-5ddbfb674-g48j2 1/1 Running 0 8s

kubectl logs deploy/external-dns -n kube-system -f

Service (NLB) + domain integration (ExternalDNS)

# Terminal 1 (monitoring)

watch -d 'kubectl get pod,svc'

kubectl logs deploy/external-dns -n kube-system -f

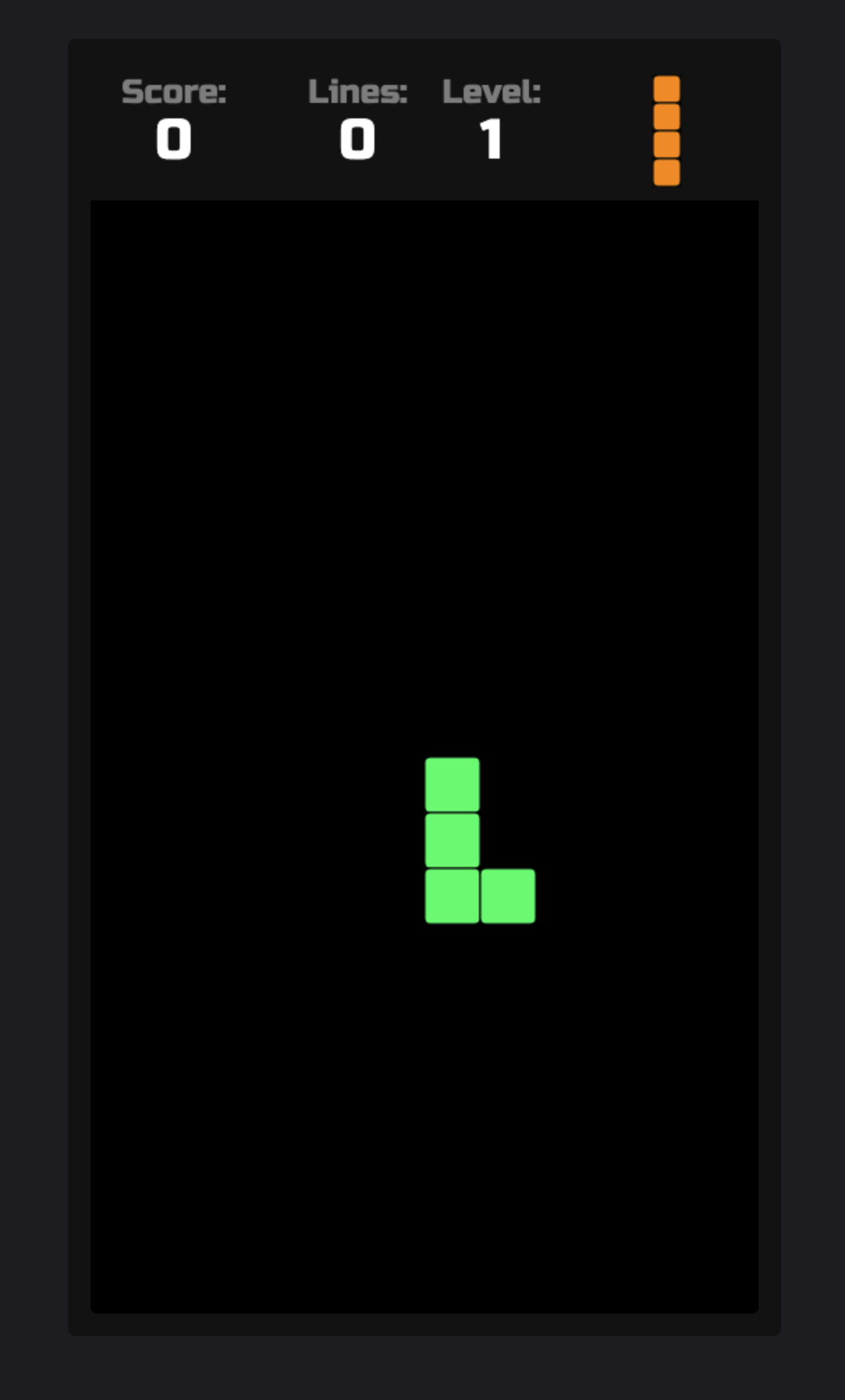

# Deploy the Tetris Deployment

cat <<EOF | kubectl create -f -

apiVersion: apps/v1

kind: Deployment

metadata:

name: tetris

labels:

app: tetris

spec:

replicas: 1

selector:

matchLabels:

app: tetris

template:

metadata:

labels:

app: tetris

spec:

containers:

- name: tetris

image: bsord/tetris

---

apiVersion: v1

kind: Service

metadata:

name: tetris

annotations:

service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip

service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing

service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"

service.beta.kubernetes.io/aws-load-balancer-backend-protocol: "http"

#service.beta.kubernetes.io/aws-load-balancer-healthcheck-port: "80"

spec:

selector:

app: tetris

ports:

- port: 80

protocol: TCP

targetPort: 80

type: LoadBalancer

loadBalancerClass: service.k8s.aws/nlb

EOF

# Verify the deployment

kubectl get deploy,svc,ep tetris

# Attach a domain to the NLB with ExternalDNS

kubectl annotate service tetris "external-dns.alpha.kubernetes.io/hostname=tetris.$MyDomain"
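The same hostname can also be declared in the manifest instead of being added afterwards with `kubectl annotate`. A minimal sketch based on the tetris Service above; `tetris.example.com` is a placeholder for `tetris.$MyDomain`, and the remaining fields are copied from the manifest in this section.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: tetris
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
    service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"
    # placeholder hostname; must fall under the domain ExternalDNS manages
    external-dns.alpha.kubernetes.io/hostname: tetris.example.com
spec:
  type: LoadBalancer
  loadBalancerClass: service.k8s.aws/nlb
  selector:
    app: tetris
  ports:
    - port: 80
      protocol: TCP
      targetPort: 80
```

Keeping the annotation in the manifest means the DNS record is recreated whenever the Service is redeployed.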

while true; do aws route53 list-resource-record-sets --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'A']" | jq ; date ; echo ; sleep 1; done

# Check the A record in Route 53

aws route53 list-resource-record-sets --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'A']" | jq

aws route53 list-resource-record-sets --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'A'].Name" | jq .[]

# Verify

dig +short tetris.$MyDomain @8.8.8.8

dig +short tetris.$MyDomain

# Check domain propagation

echo -e "My Domain Checker = https://www.whatsmydns.net/#A/tetris.$MyDomain"

# Get the web access URL and connect

echo -e "Tetris Game URL = http://tetris.$MyDomain"

- Verify access

4. Network Policies with VPC CNI

Prerequisites and basic information

# Network Policy is disabled by default and must be enabled : the lab environment already has it enabled

tail -n 11 myeks.yaml | yh

addons:

- name: vpc-cni # no version is specified so it deploys the default version

version: latest # auto discovers the latest available

attachPolicyARNs:

- arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy

configurationValues: |-

enableNetworkPolicy: "true"

- name: kube-proxy

version: latest

- name: coredns

version: latest

# Check the node agent : requires AWS VPC CNI version 1.14 or later

kubectl get ds aws-node -n kube-system -o yaml | k neat | yh

...

- args:

- --enable-ipv6=false

- --enable-network-policy=true

...

volumeMounts:

- mountPath: /host/opt/cni/bin

name: cni-bin-dir

- mountPath: /sys/fs/bpf

name: bpf-pin-path

- mountPath: /var/log/aws-routed-eni

name: log-dir

- mountPath: /var/run/aws-node

name: run-dir

...

kubectl get ds aws-node -n kube-system -o yaml | grep -i image:

kubectl get pod -n kube-system -l k8s-app=aws-node

kubectl get ds -n kube-system aws-node -o jsonpath='{.spec.template.spec.containers[*].name}{"\n"}'

aws-node aws-eks-nodeagent

# Verify EKS version 1.25 or later

kubectl get node

# Verify OS kernel 5.10 or later

ssh ec2-user@$N1 uname -r

5.10.210-201.852.amzn2.x86_64

# Check the loaded eBPF programs

ssh ec2-user@$N1 sudo /opt/cni/bin/aws-eks-na-cli ebpf progs

Programs currently loaded :

Type : 26 ID : 6 Associated maps count : 1

========================================================================================

Type : 26 ID : 8 Associated maps count : 1

========================================================================================

# Verify that the BPF filesystem is mounted on each node

ssh ec2-user@$N1 mount | grep -i bpf

none on /sys/fs/bpf type bpf (rw,nosuid,nodev,noexec,relatime,mode=700)

ssh ec2-user@$N1 df -a | grep -i bpf

none 0 0 0 - /sys/fs/bpf

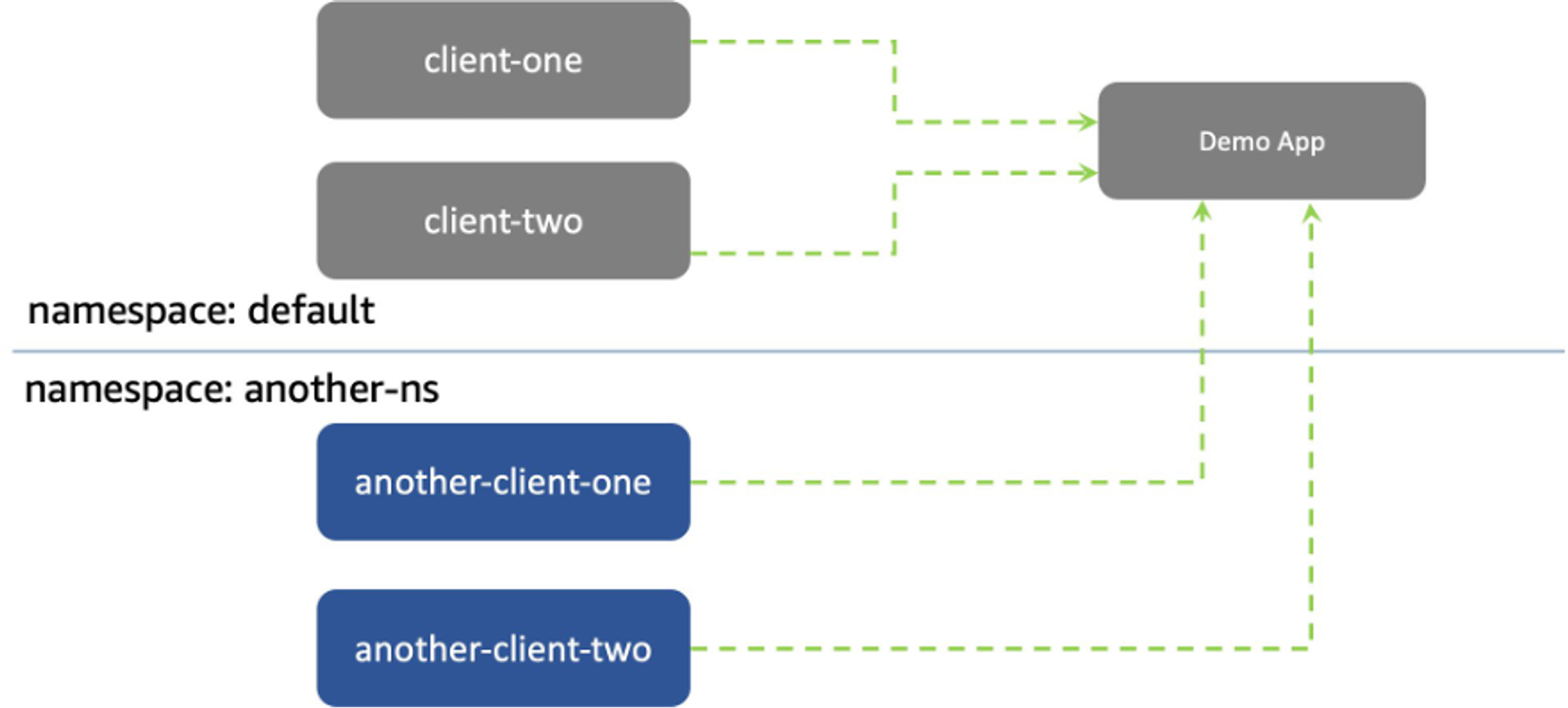

Applying Network Policies

-

Deploy the sample application and verify connectivity

#

git clone https://github.com/aws-samples/eks-network-policy-examples.git

cd eks-network-policy-examples

tree advanced/manifests/

kubectl apply -f advanced/manifests/

# Verify

kubectl get pod,svc

kubectl get pod,svc -n another-ns

# Verify connectivity

kubectl exec -it client-one -- curl demo-app

kubectl exec -it client-two -- curl demo-app

kubectl exec -it another-client-one -n another-ns -- curl demo-app

kubectl exec -it another-client-one -n another-ns -- curl demo-app.default

kubectl exec -it another-client-two -n another-ns -- curl demo-app.default.svc

-

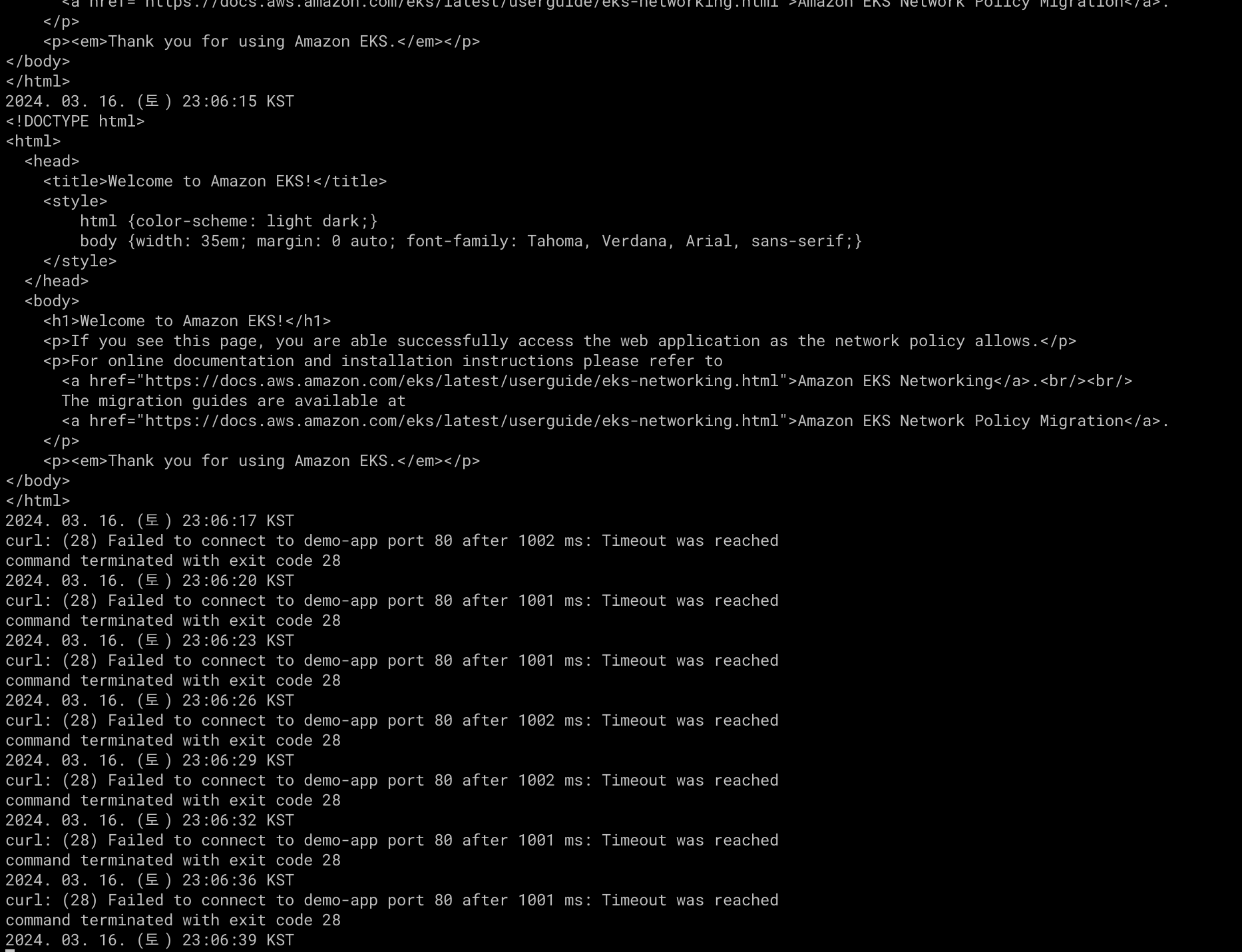

Deny all traffic

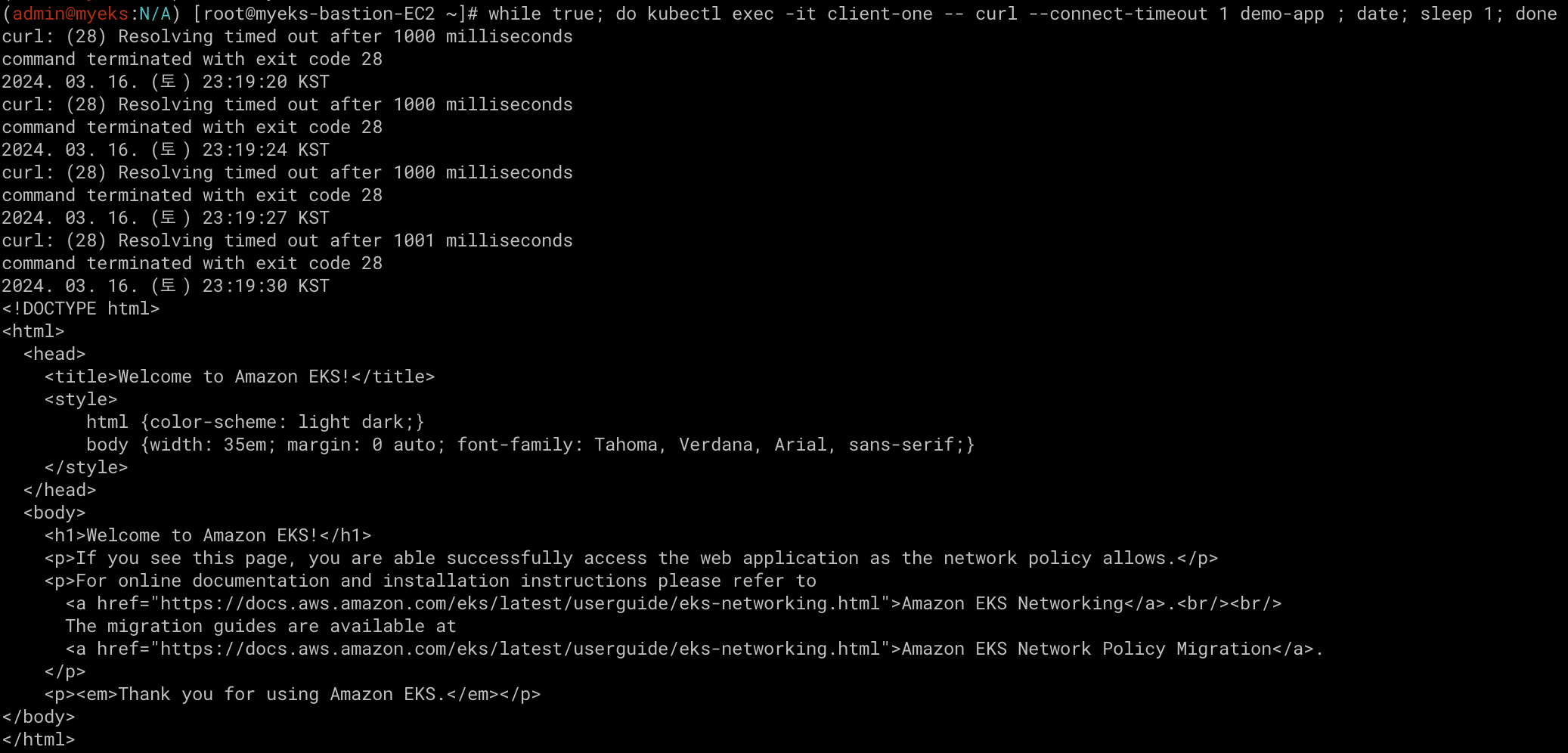

# 01-deny-all-ingress.yaml

kind: NetworkPolicy

apiVersion: networking.k8s.io/v1

metadata:

  name: demo-app-deny-all

spec:

  podSelector:

    matchLabels:

      app: demo-app

  policyTypes:

  - Ingress

# Monitoring

# kubectl exec -it client-one -- curl demo-app

while true; do kubectl exec -it client-one -- curl --connect-timeout 1 demo-app ; date; sleep 1; done

# Apply the policy

cat advanced/policies/01-deny-all-ingress.yaml | yh

kubectl apply -f advanced/policies/01-deny-all-ingress.yaml

kubectl get networkpolicy
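The policy above only isolates pods labeled `app: demo-app`. For comparison, a common variation is a namespace-wide default deny, where an empty `podSelector` matches every pod in the namespace. This sketch is not part of the sample repository, and the policy name is hypothetical.

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: default-deny-ingress   # hypothetical name
  namespace: default
spec:
  podSelector: {}              # empty selector: applies to all pods in the namespace
  policyTypes:
  - Ingress                    # no ingress rules listed, so all ingress is denied
```

NetworkPolicies are additive, so any later allow policy that selects a pod reopens only the traffic it lists.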

-

Allow ingress from client-one in the same namespace

# 03-allow-ingress-from-samens-client-one.yaml

kind: NetworkPolicy

apiVersion: networking.k8s.io/v1

metadata:

  name: demo-app-allow-samens-client-one

spec:

  podSelector:

    matchLabels:

      app: demo-app

  ingress:

  - from:

    - podSelector:

        matchLabels:

          app: client-one

#

cat advanced/policies/03-allow-ingress-from-samens-client-one.yaml | yh

kubectl apply -f advanced/policies/03-allow-ingress-from-samens-client-one.yaml

kubectl get networkpolicy

# Check access from client-two (still blocked)

kubectl exec -it client-two -- curl --connect-timeout 1 demo-app

curl: (28) Failed to connect to demo-app port 80 after 1001 ms: Timeout was reached

command terminated with exit code 28

-

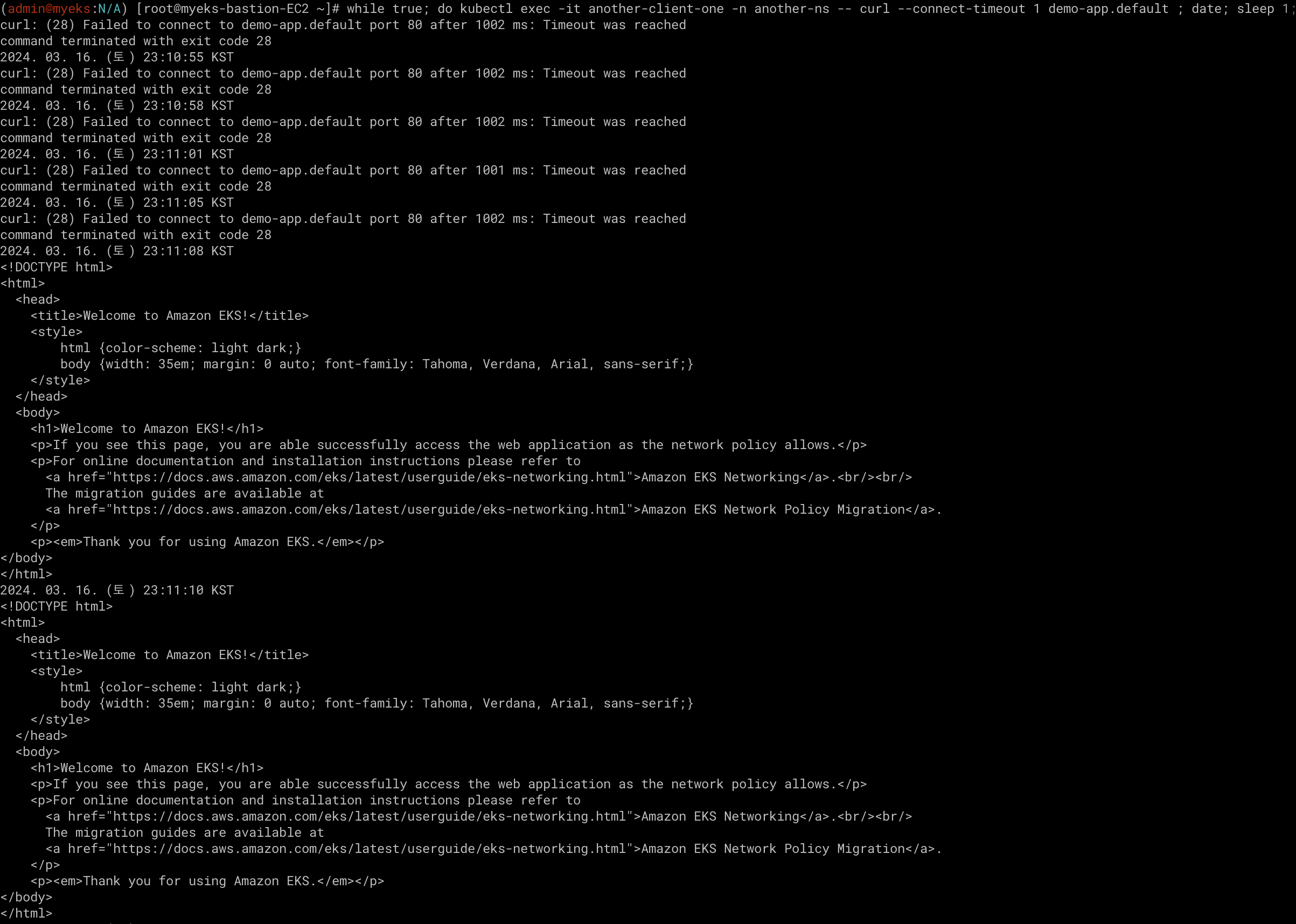

Allow ingress from the another-ns namespace

# 04-allow-ingress-from-xns.yaml

kind: NetworkPolicy

apiVersion: networking.k8s.io/v1

metadata:

  name: demo-app-allow-another-ns

spec:

  podSelector:

    matchLabels:

      app: demo-app

  ingress:

  - from:

    - namespaceSelector:

        matchLabels:

          kubernetes.io/metadata.name: another-ns

# Monitoring

# kubectl exec -it another-client-one -n another-ns -- curl --connect-timeout 1 demo-app.default

while true; do kubectl exec -it another-client-one -n another-ns -- curl --connect-timeout 1 demo-app.default ; date; sleep 1; done

#

cat advanced/policies/04-allow-ingress-from-xns.yaml | yh

kubectl apply -f advanced/policies/04-allow-ingress-from-xns.yaml

kubectl get networkpolicy

#

kubectl exec -it another-client-two -n another-ns -- curl --connect-timeout 1 demo-app.default
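The policy above admits every pod in another-ns. To narrow it to a single client, `namespaceSelector` and `podSelector` can be placed in the same `from` element, which Kubernetes evaluates as a logical AND. This sketch is not in the sample repository; the policy name and the `app: another-client-one` label are assumptions about how the sample pods are labeled.

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: demo-app-allow-another-client-one   # hypothetical name
spec:
  podSelector:
    matchLabels:
      app: demo-app
  ingress:
  - from:
    # One list element with both selectors: namespace AND pod label must match
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: another-ns
      podSelector:
        matchLabels:
          app: another-client-one           # assumed label
```

Writing the two selectors as separate list elements (each with its own leading `-`) would instead mean OR, allowing all of another-ns plus any matching pod in the policy's own namespace.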

-

Check eBPF information

# Check the loaded eBPF programs

for i in $N1 $N2 $N3; do echo ">> node $i <<"; ssh ec2-user@$i sudo /opt/cni/bin/aws-eks-na-cli ebpf progs; echo; done

>> node 192.168.1.158 <<

Programs currently loaded :

Type : 26 ID : 6 Associated maps count : 1

========================================================================================

Type : 26 ID : 8 Associated maps count : 1

========================================================================================

>> node 192.168.2.139 <<

Programs currently loaded :

Type : 26 ID : 6 Associated maps count : 1

========================================================================================

Type : 26 ID : 8 Associated maps count : 1

========================================================================================

Type : 3 ID : 9 Associated maps count : 3

========================================================================================

Type : 3 ID : 10 Associated maps count : 3

========================================================================================

>> node 192.168.3.215 <<

Programs currently loaded :

Type : 26 ID : 6 Associated maps count : 1

========================================================================================

Type : 26 ID : 8 Associated maps count : 1

========================================================================================

# Check the eBPF logs

for i in $N1 $N2 $N3; do echo ">> node $i <<"; ssh ec2-user@$i sudo cat /var/log/aws-routed-eni/ebpf-sdk.log; echo; done

for i in $N1 $N2 $N3; do echo ">> node $i <<"; ssh ec2-user@$i sudo cat /var/log/aws-routed-eni/network-policy-agent; echo; done

-

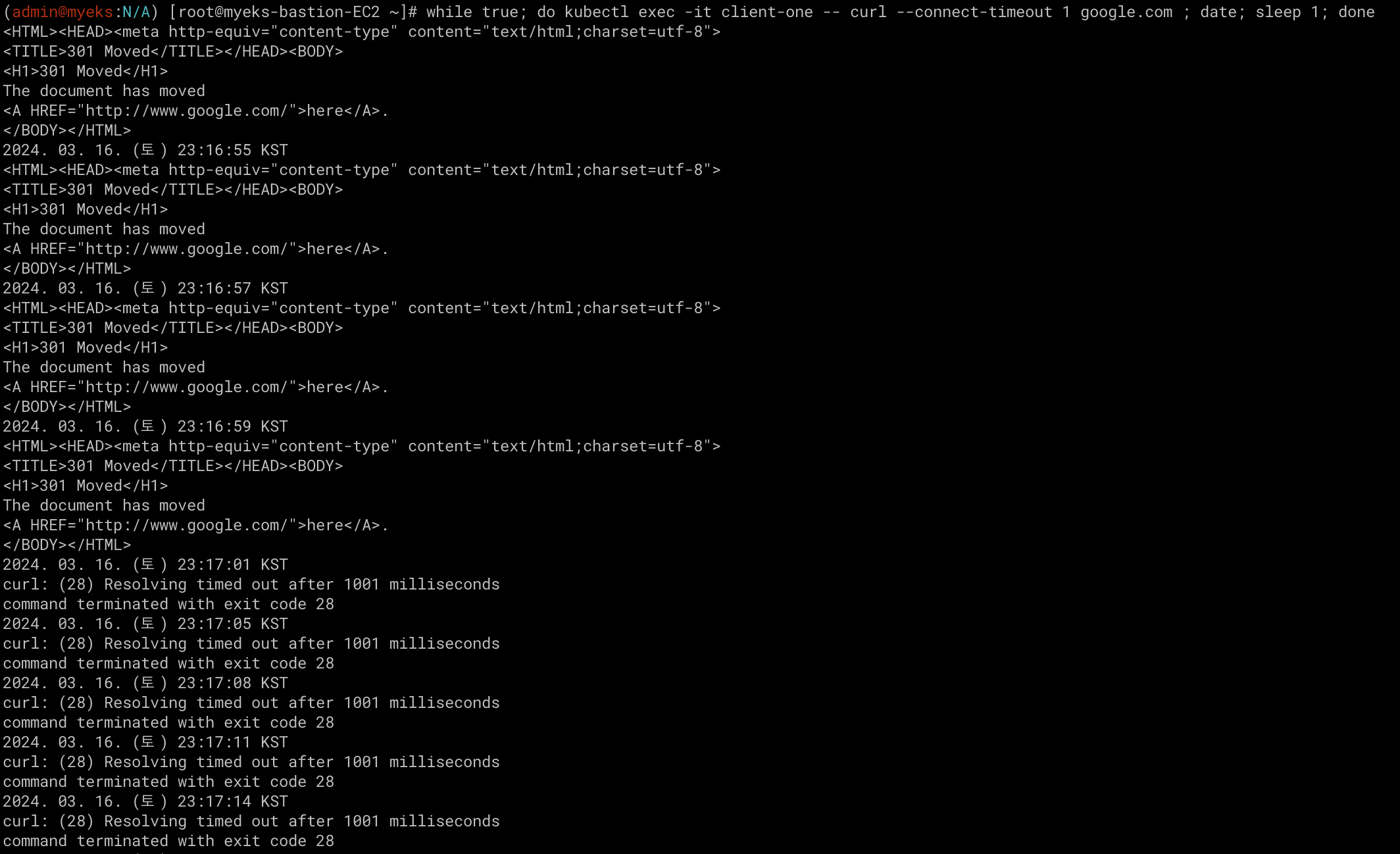

Deny egress traffic

Apply full egress isolation to the client-one pod in the default namespace

# 06-deny-egress-from-client-one.yaml

kind: NetworkPolicy

apiVersion: networking.k8s.io/v1

metadata:

  name: client-one-deny-egress

spec:

  podSelector:

    matchLabels:

      app: client-one

  egress: []

  policyTypes:

  - Egress

# Monitoring

while true; do kubectl exec -it client-one -- curl --connect-timeout 1 google.com ; date; sleep 1; done

#

cat advanced/policies/06-deny-egress-from-client-one.yaml | yh

kubectl apply -f advanced/policies/06-deny-egress-from-client-one.yaml

kubectl get networkpolicy

# DNS lookups fail as well, because egress to kube-dns is also blocked

kubectl exec -it client-one -- nslookup demo-app

;; connection timed out; no servers could be reached

command terminated with exit code 1

-

Allow egress traffic

# 08-allow-egress-to-demo-app.yaml

kind: NetworkPolicy

apiVersion: networking.k8s.io/v1

metadata:

  name: client-one-allow-egress-demo-app

spec:

  podSelector:

    matchLabels:

      app: client-one

  egress:

  - to:

    - namespaceSelector:

        matchLabels:

          kubernetes.io/metadata.name: kube-system

      podSelector:

        matchLabels:

          k8s-app: kube-dns

    ports:

    - port: 53

      protocol: UDP

  - to:

    - podSelector:

        matchLabels:

          app: demo-app

    ports:

    - port: 80

      protocol: TCP

# Monitoring

while true; do kubectl exec -it client-one -- curl --connect-timeout 1 demo-app ; date; sleep 1; done

#

cat advanced/policies/08-allow-egress-to-demo-app.yaml | yh

kubectl apply -f advanced/policies/08-allow-egress-to-demo-app.yaml

kubectl get networkpolicy