This post is based on the AWS EKS Workshop Study led by Gasida (서종호) of the CloudNet@ team.

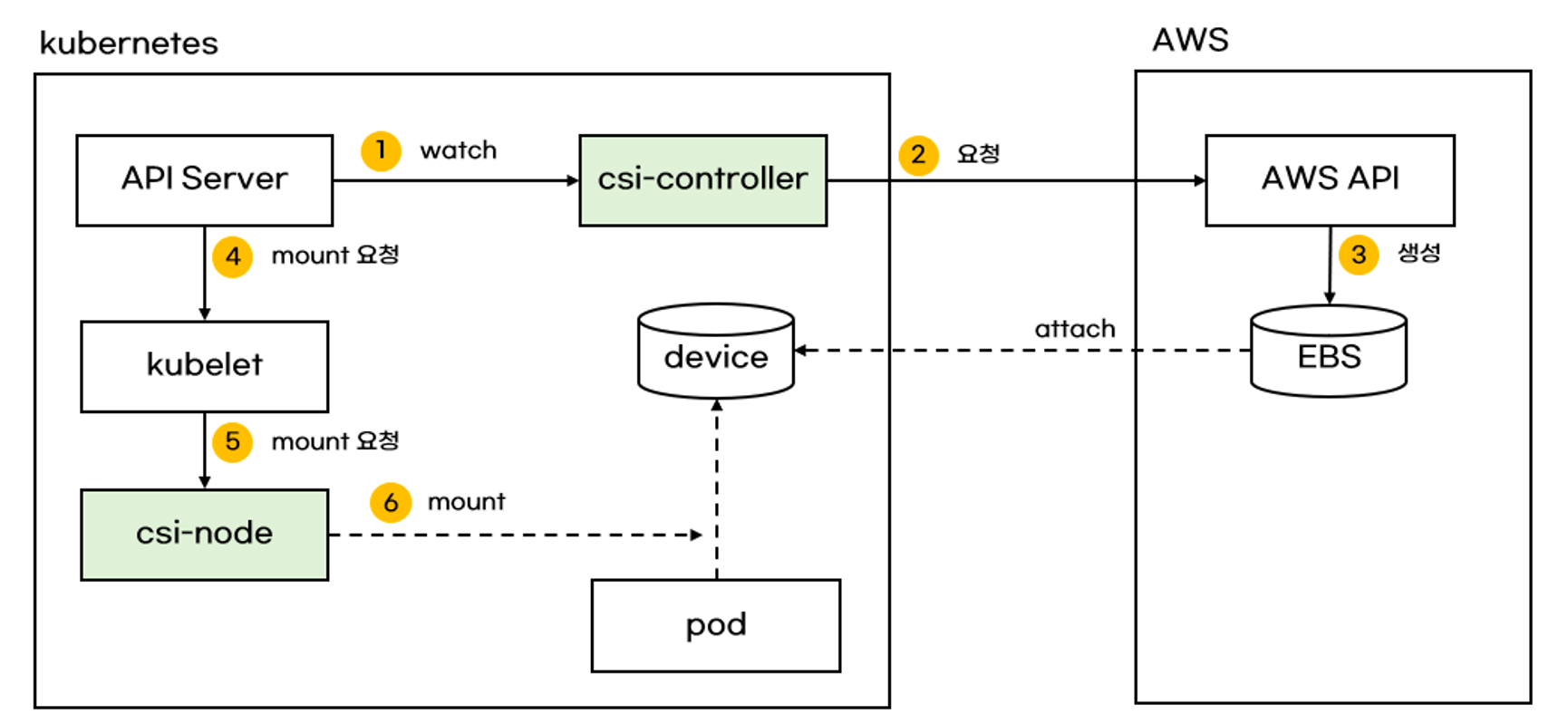

1. AWS EBS Controller

ebs-csi-controller

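1-3. At the request of the Kubernetes API server, the CSI controller (ebs-csi-controller) calls the AWS API to create an EBS volume.

- The Kubernetes API server asks the kubelet on the node to mount the volume.
- The kubelet asks csi-node to perform the mount.
- csi-node mounts the EBS volume created in step 3 onto the node.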
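To see the chain above on a live cluster, the helper below follows one PVC to its PV, to the EBS volume ID the controller created through the AWS API, and to the node recorded in the matching VolumeAttachment. It is a minimal sketch: the function name trace_pvc is hypothetical, and it assumes kubectl points at the cluster and the PVC is already bound.

```bash
# Hypothetical helper: follow one PVC through the CSI flow described above.
# PVC -> PV (provisioned by csi-provisioner) -> EBS volume ID (volumeHandle)
# -> VolumeAttachment (attach request) -> node name.
trace_pvc() {
  local pvc="$1" ns="${2:-default}" pv vol node
  # Name of the PV bound to the claim
  pv=$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.volumeName}')
  # For ebs.csi.aws.com the volumeHandle is the EBS volume ID (vol-...)
  vol=$(kubectl get pv "$pv" -o jsonpath='{.spec.csi.volumeHandle}')
  # VolumeAttachments are cluster-scoped; select the one that references this PV
  node=$(kubectl get volumeattachment \
    -o jsonpath="{.items[?(@.spec.source.persistentVolumeName==\"$pv\")].spec.nodeName}")
  echo "pvc=$pvc pv=$pv volume=$vol node=$node"
}

# Usage (against a real cluster, after the PVC/pod test):
#   trace_pvc ebs-claim
```

The node it prints should match the NODE column of kubectl get VolumeAttachment in the PVC/pod test.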

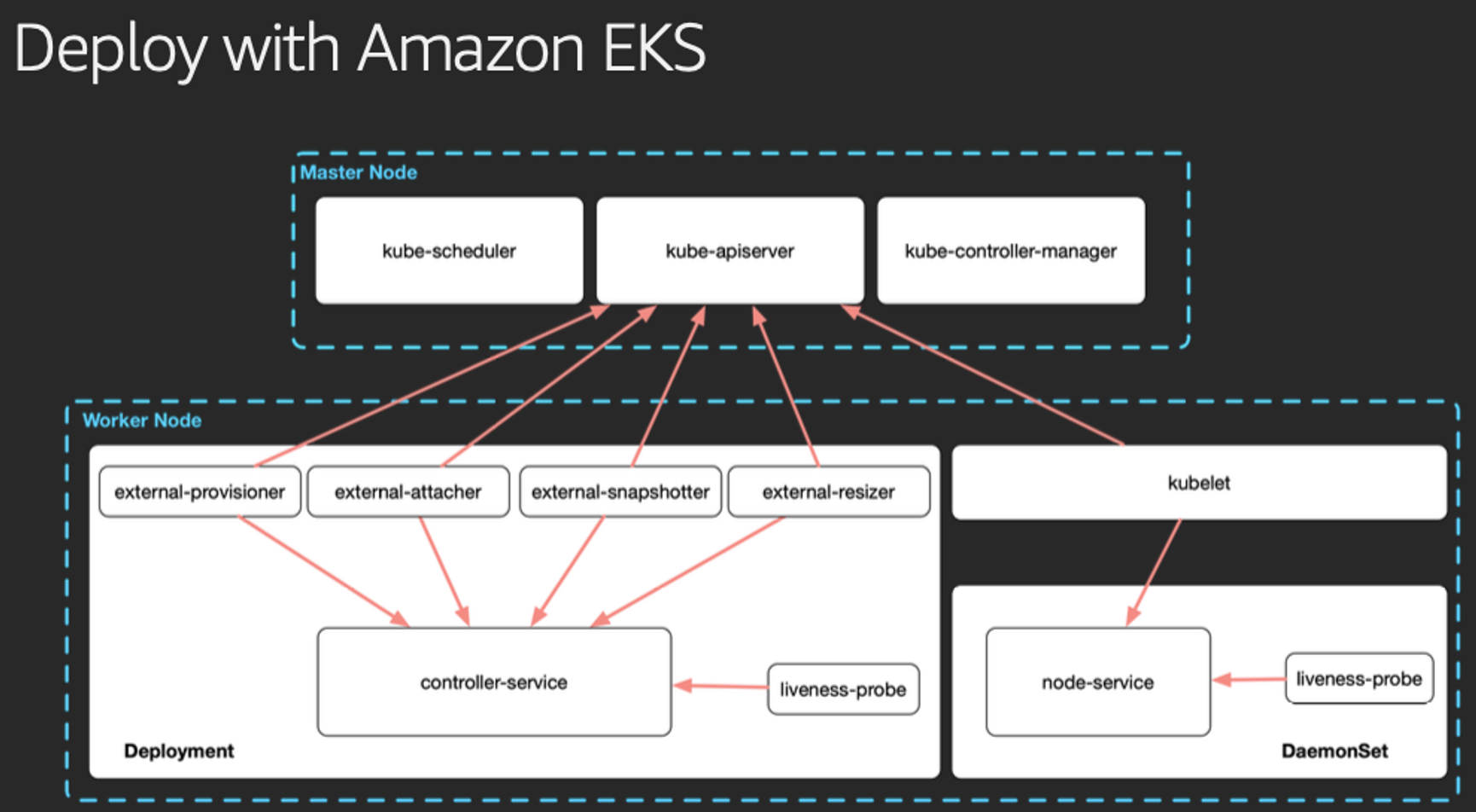

Deploy with Amazon EKS

- Install the Amazon EBS CSI driver as an Amazon EKS add-on

```bash
# List every aws-ebs-csi-driver version and which one is the default (True)
aws eks describe-addon-versions \
  --addon-name aws-ebs-csi-driver \
  --kubernetes-version 1.28 \
  --query "addons[].addonVersions[].[addonVersion, compatibilities[].defaultVersion]" \
  --output text

# IRSA setup: use the AWS-managed policy AmazonEBSCSIDriverPolicy
eksctl create iamserviceaccount \
  --name ebs-csi-controller-sa \
  --namespace kube-system \
  --cluster ${CLUSTER_NAME} \
  --attach-policy-arn arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy \
  --approve \
  --role-only \
  --role-name AmazonEKS_EBS_CSI_DriverRole

# Check IRSA
eksctl get iamserviceaccount --cluster myeks
NAMESPACE     NAME                           ROLE ARN
kube-system   aws-load-balancer-controller   arn:aws:iam::891377200830:role/eksctl-myeks-addon-iamserviceaccount-kube-sys-Role1-RR2HibQuTVFy
kube-system   ebs-csi-controller-sa          arn:aws:iam::891377200830:role/AmazonEKS_EBS_CSI_DriverRole
...

# Add the Amazon EBS CSI driver add-on
eksctl create addon --name aws-ebs-csi-driver --cluster ${CLUSTER_NAME} --service-account-role-arn arn:aws:iam::${ACCOUNT_ID}:role/AmazonEKS_EBS_CSI_DriverRole --force
kubectl get sa -n kube-system ebs-csi-controller-sa -o yaml | head -5

# Verify
eksctl get addon --cluster ${CLUSTER_NAME}
kubectl get deploy,ds -l=app.kubernetes.io/name=aws-ebs-csi-driver -n kube-system
kubectl get pod -n kube-system -l 'app in (ebs-csi-controller,ebs-csi-node)'
kubectl get pod -n kube-system -l app.kubernetes.io/component=csi-driver

# The ebs-csi-controller pod runs 6 containers
kubectl get pod -n kube-system -l app=ebs-csi-controller -o jsonpath='{.items[0].spec.containers[*].name}' ; echo
ebs-plugin csi-provisioner csi-attacher csi-snapshotter csi-resizer liveness-probe

# Check csinodes
kubectl get csinodes

# Create the gp3 StorageClass
kubectl get sc
cat <<EOT > gp3-sc.yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: gp3
allowVolumeExpansion: true
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer
parameters:
  type: gp3
  #iops: "5000"
  #throughput: "250"
  allowAutoIOPSPerGBIncrease: 'true'
  encrypted: 'true'
  fsType: xfs # the default is ext4
EOT
kubectl apply -f gp3-sc.yaml
kubectl get sc
NAME            PROVISIONER             RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
gp2 (default)   kubernetes.io/aws-ebs   Delete          WaitForFirstConsumer   false                  78m
gp3             ebs.csi.aws.com         Delete          WaitForFirstConsumer   true                   32s
local-path      rancher.io/local-path   Delete          WaitForFirstConsumer   false                  40m

kubectl describe sc gp3 | grep Parameters
Parameters:  allowAutoIOPSPerGBIncrease=true,encrypted=true,fsType=xfs,type=gp3
```
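The gp3 class above leaves iops and throughput commented out, so its volumes get the gp3 baseline of 3,000 IOPS and 125 MiB/s. The sketch below renders a gp3 StorageClass with both values set explicitly so they can be tuned per class. The function name gp3_storageclass and the class name gp3-fast in the usage line are hypothetical, and the values you pass must stay within the gp3 limits for the volume size.

```bash
# Hypothetical generator for a gp3 StorageClass with explicit performance settings.
# Arguments: class name, IOPS (default 3000), throughput in MiB/s (default 125).
gp3_storageclass() {
  local name="$1" iops="${2:-3000}" throughput="${3:-125}"
  # StorageClass parameters are strings, so the numbers are quoted in the YAML
  cat <<EOF
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: ${name}
allowVolumeExpansion: true
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer
parameters:
  type: gp3
  iops: "${iops}"
  throughput: "${throughput}"
  encrypted: "true"
  fsType: xfs
EOF
}

# Usage (against a real cluster):
#   gp3_storageclass gp3-fast 5000 250 | kubectl apply -f -
```

allowAutoIOPSPerGBIncrease is left out here on purpose: it only affects classes that size IOPS with iopsPerGB, which this sketch does not use.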

- PVC/PV and pod test

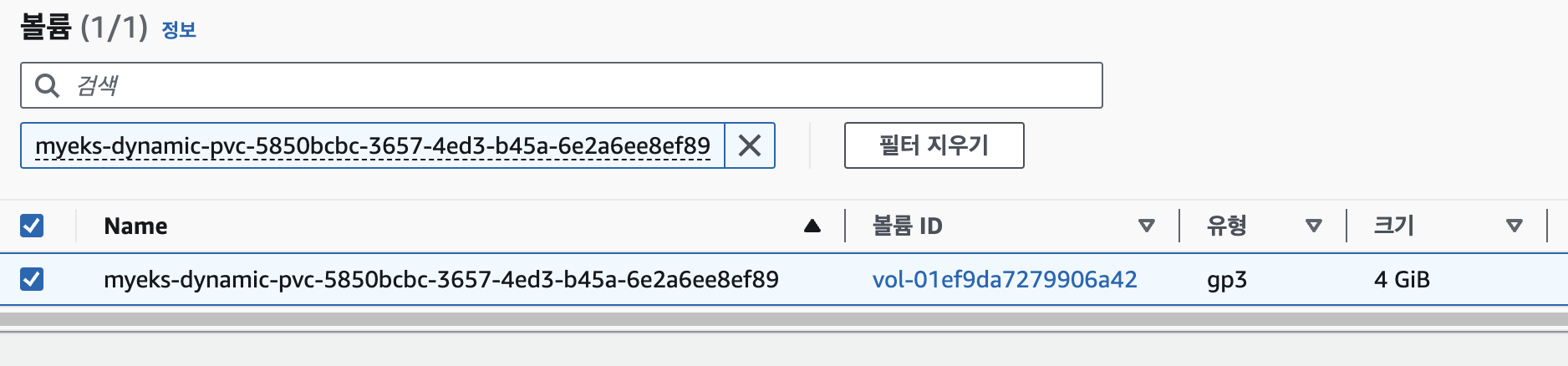

```bash
# Check the worker nodes' EBS volumes: filter by tag (key/value)
aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --output table
aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Volumes[*].Attachments" | jq
aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Volumes[*].{ID:VolumeId,Tag:Tags}" | jq
aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Volumes[].[VolumeId, VolumeType, Attachments[].[InstanceId, State][]][]" | jq
aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" | jq

# Check the EBS volumes attached to worker nodes for pods
aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --output table
aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --query "Volumes[*].{ID:VolumeId,Tag:Tags}" | jq
aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" | jq

# Monitor the EBS volumes attached for pods
while true; do aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" --output text; date; sleep 1; done

# Create the PVC
cat <<EOT > awsebs-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi
  storageClassName: gp3
EOT
kubectl apply -f awsebs-pvc.yaml
kubectl get pvc
NAME                              STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/ebs-claim   Pending                                      gp3            6s

# Create the pod
cat <<EOT > awsebs-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  terminationGracePeriodSeconds: 3
  containers:
  - name: app
    image: centos
    command: ["/bin/sh"]
    args: ["-c", "while true; do echo \$(date -u) >> /data/out.txt; sleep 5; done"]
    volumeMounts:
    - name: persistent-storage
      mountPath: /data
  volumes:
  - name: persistent-storage
    persistentVolumeClaim:
      claimName: ebs-claim
EOT
kubectl apply -f awsebs-pod.yaml

# Check the PVC, PV, and pod
kubectl get pvc,pv,pod
NAME                              STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/ebs-claim   Bound    pvc-5850bcbc-3657-4ed3-b45a-6e2a6ee8ef89   4Gi        RWO            gp3            104s

NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM               STORAGECLASS   REASON   AGE
persistentvolume/pvc-5850bcbc-3657-4ed3-b45a-6e2a6ee8ef89   4Gi        RWO            Delete           Bound    default/ebs-claim   gp3                     46s

NAME      READY   STATUS    RESTARTS   AGE
pod/app   1/1     Running   0          50s

kubectl get VolumeAttachment
NAME                                                                   ATTACHER          PV                                         NODE                                               ATTACHED   AGE
csi-3413d48f272e2b5250fecd391c5fa1b7cc876b9fa4d726b82bb6013fb249284a   ebs.csi.aws.com   pvc-5850bcbc-3657-4ed3-b45a-6e2a6ee8ef89   ip-192-168-2-201.ap-northeast-2.compute.internal   true       101s

# Details of the newly added EBS volume
aws ec2 describe-volumes --volume-ids $(kubectl get pv -o jsonpath="{.items[0].spec.csi.volumeHandle}") | jq

# Inspect the PV
kubectl get pv -o yaml | yh
...
    nodeAffinity:
      required:
        nodeSelectorTerms:
        - matchExpressions:
          - key: topology.ebs.csi.aws.com/zone
            operator: In
            values:
            - ap-northeast-2b
...
kubectl get node --label-columns=topology.ebs.csi.aws.com/zone,topology.kubernetes.io/zone
kubectl describe node | more

# Confirm that data keeps being appended to the file
kubectl exec app -- tail -f /data/out.txt
Sat Mar 23 10:56:15 UTC 2024
Sat Mar 23 10:56:20 UTC 2024
Sat Mar 23 10:56:25 UTC 2024
Sat Mar 23 10:56:30 UTC 2024

# The command below takes a while to return
kubectl df-pv
PV NAME                                    PVC NAME    NAMESPACE   NODE NAME                                          POD NAME   VOLUME MOUNT NAME    SIZE   USED   AVAILABLE   %USED   IUSED   IFREE     %IUSED
pvc-5850bcbc-3657-4ed3-b45a-6e2a6ee8ef89   ebs-claim   default     ip-192-168-2-201.ap-northeast-2.compute.internal   app        persistent-storage   3Gi    60Mi   3Gi         1.50    4       2097148   0.00

## Check the volume from inside the pod
kubectl exec -it app -- sh -c 'df -hT --type=overlay'
Filesystem     Type     Size  Used Avail Use% Mounted on
overlay        overlay   30G  3.8G   27G  13% /

kubectl exec -it app -- sh -c 'df -hT --type=xfs'
Filesystem     Type  Size  Used Avail Use% Mounted on
/dev/nvme1n1   xfs   4.0G   61M  3.9G   2% /data
/dev/nvme0n1p1 xfs    30G  3.8G   27G  13% /etc/hosts
```
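Because the gp3 class uses volumeBindingMode: WaitForFirstConsumer, ebs-claim stays Pending until a pod that uses it is scheduled, which is why it showed Pending right after creation. A small polling helper, sketched below, makes that transition explicit in scripts. The name wait_pvc_bound is hypothetical; it only reads .status.phase through kubectl.

```bash
# Hypothetical helper: poll a PVC until it is Bound or the timeout expires.
# Arguments: pvc name, timeout in seconds (default 120), poll interval (default 2).
wait_pvc_bound() {
  local pvc="$1" timeout="${2:-120}" interval="${3:-2}" waited=0 phase=""
  while [ "$waited" -lt "$timeout" ]; do
    phase=$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}')
    if [ "$phase" = "Bound" ]; then
      echo "$pvc is Bound after ${waited}s"
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "timed out: $pvc is still ${phase:-unknown}" >&2
  return 1
}

# Usage (against a real cluster, after creating the pod):
#   wait_pvc_bound ebs-claim 120
```

Recent kubectl versions can do the same with kubectl wait --for=jsonpath='{.status.phase}'=Bound pvc/ebs-claim --timeout=120s; the loop above just keeps the behavior visible.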

-

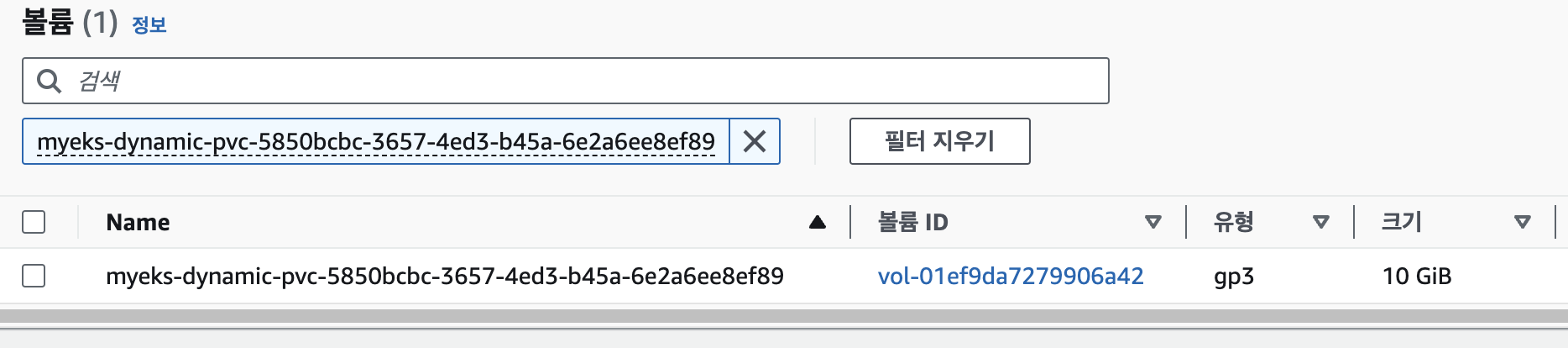

볼륨 용량 증가

Check the volume size before the increase (4 GiB):

```bash
kubectl get pvc ebs-claim -o jsonpath={.spec.resources.requests.storage} ; echo
4Gi
```

Increase the volume size to 10 GiB:

```bash
# Patch the PVC
kubectl patch pvc ebs-claim -p '{"spec":{"resources":{"requests":{"storage":"10Gi"}}}}'

# Confirm the new volume size
kubectl exec -it app -- sh -c 'df -hT --type=xfs'
Filesystem     Type  Size  Used Avail Use% Mounted on
/dev/nvme1n1   xfs    10G  105M  9.9G   2% /data
/dev/nvme0n1p1 xfs    30G  3.8G   27G  13% /etc/hosts

kubectl df-pv
PV NAME                                    PVC NAME    NAMESPACE   NODE NAME                                          POD NAME   VOLUME MOUNT NAME    SIZE   USED    AVAILABLE   %USED   IUSED   IFREE     %IUSED
pvc-5850bcbc-3657-4ed3-b45a-6e2a6ee8ef89   ebs-claim   default     ip-192-168-2-201.ap-northeast-2.compute.internal   app        persistent-storage   9Gi    104Mi   9Gi         1.02    4       5242876   0.00
```
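The resize above works because the gp3 class sets allowVolumeExpansion: true: the csi-resizer sidecar modifies the EBS volume, and the file system is then grown on the node. The helper below patches the request and waits until the PVC status reports the new capacity. It is a sketch with a hypothetical name (expand_pvc); it compares the quantity string literally, so pass the size in the form Kubernetes reports (for example 10Gi). It cannot shrink a volume, since only growing is supported, and AWS also enforces a waiting period between successive modifications of the same EBS volume.

```bash
# Hypothetical helper: grow a PVC and wait until its status shows the new size.
# Arguments: pvc name, new size (e.g. 10Gi), max polls (default 60, every 2s).
expand_pvc() {
  local pvc="$1" size="$2" tries="${3:-60}" i=0 cap=""
  kubectl patch pvc "$pvc" \
    -p "{\"spec\":{\"resources\":{\"requests\":{\"storage\":\"$size\"}}}}" >/dev/null || return 1
  while [ "$i" -lt "$tries" ]; do
    # status.capacity is updated once the resize, including the file system step, completes
    cap=$(kubectl get pvc "$pvc" -o jsonpath='{.status.capacity.storage}')
    if [ "$cap" = "$size" ]; then
      echo "$pvc resized to $cap"
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "$pvc still reports ${cap:-unknown}" >&2
  return 1
}

# Usage (against a real cluster):
#   expand_pvc ebs-claim 10Gi
```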

2. AWS Volume Snapshots Controller

Installing the VolumeSnapshots controller

```bash
# (Note) The EBS CSI driver already appears to include snapshot support (its controller pod runs a csi-snapshotter container)
kubectl describe pod -n kube-system -l app=ebs-csi-controller

# Install Snapshot CRDs
curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshots.yaml
curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshotclasses.yaml
curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshotcontents.yaml
kubectl apply -f snapshot.storage.k8s.io_volumesnapshots.yaml,snapshot.storage.k8s.io_volumesnapshotclasses.yaml,snapshot.storage.k8s.io_volumesnapshotcontents.yaml

kubectl get crd | grep snapshot
volumesnapshotclasses.snapshot.storage.k8s.io    2024-03-23T11:16:29Z
volumesnapshotcontents.snapshot.storage.k8s.io   2024-03-23T11:16:29Z
volumesnapshots.snapshot.storage.k8s.io          2024-03-23T11:16:28Z

kubectl api-resources | grep snapshot
volumesnapshotclasses    vsclass,vsclasses   snapshot.storage.k8s.io/v1   false   VolumeSnapshotClass
volumesnapshotcontents   vsc,vscs            snapshot.storage.k8s.io/v1   false   VolumeSnapshotContent
volumesnapshots          vs                  snapshot.storage.k8s.io/v1   true    VolumeSnapshot

# Install Common Snapshot Controller
curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/deploy/kubernetes/snapshot-controller/rbac-snapshot-controller.yaml
curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/deploy/kubernetes/snapshot-controller/setup-snapshot-controller.yaml
kubectl apply -f rbac-snapshot-controller.yaml,setup-snapshot-controller.yaml

kubectl get deploy -n kube-system snapshot-controller
NAME                  READY   UP-TO-DATE   AVAILABLE   AGE
snapshot-controller   2/2     2            0           11s

kubectl get pod -n kube-system -l app.kubernetes.io/name=snapshot-controller
snapshot-controller-749cb47b55-4z9zs   1/1     Running   0          82s
snapshot-controller-749cb47b55-tl5ns   1/1     Running   0          82s

# Install Snapshotclass
curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-ebs-csi-driver/master/examples/kubernetes/snapshot/manifests/classes/snapshotclass.yaml
kubectl apply -f snapshotclass.yaml
kubectl get vsclass # or volumesnapshotclasses
NAME          DRIVER            DELETIONPOLICY   AGE
csi-aws-vsc   ebs.csi.aws.com   Delete           7s
```

Using the VolumeSnapshots controller
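Before using the controller, it helps to confirm that all three snapshot CRDs installed above exist: VolumeSnapshot objects cannot be created without them, and the snapshot-controller works on those same resources. The check below is a minimal sketch with a hypothetical function name; it relies only on kubectl get crd returning a non-zero exit code for a CRD that does not exist.

```bash
# Hypothetical pre-check: are all three snapshot.storage.k8s.io CRDs installed?
check_snapshot_crds() {
  local missing=0 crd
  for crd in volumesnapshotclasses volumesnapshotcontents volumesnapshots; do
    if kubectl get crd "${crd}.snapshot.storage.k8s.io" >/dev/null 2>&1; then
      echo "ok      ${crd}.snapshot.storage.k8s.io"
    else
      echo "missing ${crd}.snapshot.storage.k8s.io"
      missing=$((missing + 1))
    fi
  done
  # Succeeds only when nothing is missing
  [ "$missing" -eq 0 ]
}

# Usage (against a real cluster):
#   check_snapshot_crds && kubectl get deploy -n kube-system snapshot-controller
```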

- Create the PVC and pod

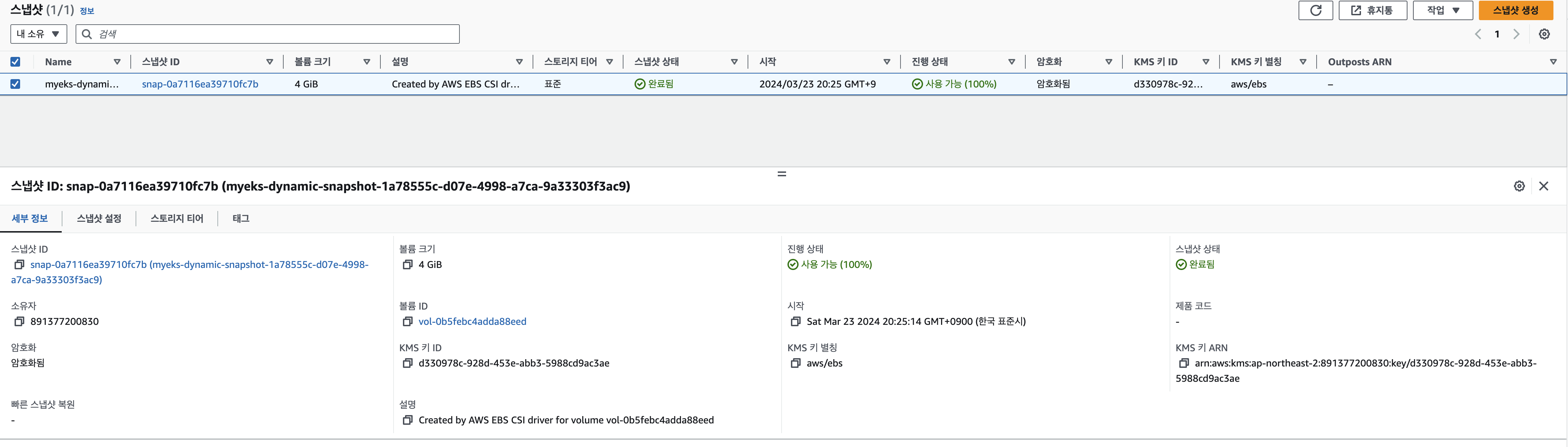

```bash
# Create the PVC
kubectl apply -f awsebs-pvc.yaml

# Create the pod
kubectl apply -f awsebs-pod.yaml

# Confirm that data keeps being appended to the file
kubectl exec app -- tail -f /data/out.txt
Sat Mar 23 11:24:38 UTC 2024
Sat Mar 23 11:24:43 UTC 2024

# Create a VolumeSnapshot referencing the PersistentVolumeClaim name >> check the EBS snapshot
curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/ebs-volume-snapshot.yaml
cat ebs-volume-snapshot.yaml | yh
kubectl apply -f ebs-volume-snapshot.yaml

# Check the VolumeSnapshot
kubectl get volumesnapshot
NAME                  READYTOUSE   SOURCEPVC   SOURCESNAPSHOTCONTENT   RESTORESIZE   SNAPSHOTCLASS   SNAPSHOTCONTENT                                    CREATIONTIME   AGE
ebs-volume-snapshot   false        ebs-claim                           4Gi           csi-aws-vsc     snapcontent-1a78555c-d07e-4998-a7ca-9a33303f3ac9   6s             6s

kubectl get volumesnapshot ebs-volume-snapshot -o jsonpath={.status.boundVolumeSnapshotContentName} ; echo
snapcontent-1a78555c-d07e-4998-a7ca-9a33303f3ac9

kubectl describe volumesnapshot.snapshot.storage.k8s.io ebs-volume-snapshot

kubectl get volumesnapshotcontents
NAME                                               READYTOUSE   RESTORESIZE   DELETIONPOLICY   DRIVER            VOLUMESNAPSHOTCLASS   VOLUMESNAPSHOT        VOLUMESNAPSHOTNAMESPACE   AGE
snapcontent-1a78555c-d07e-4998-a7ca-9a33303f3ac9   true         4294967296    Delete           ebs.csi.aws.com   csi-aws-vsc           ebs-volume-snapshot   default                   77s

# Check the EBS snapshot ID (snapshotHandle)
kubectl get volumesnapshotcontents -o jsonpath='{.items[*].status.snapshotHandle}' ; echo
snap-0a7116ea39710fc7b

# Check the AWS EBS snapshot
aws ec2 describe-snapshots --owner-ids self | jq
aws ec2 describe-snapshots --owner-ids self --query 'Snapshots[]' --output table

# Delete the app pod and PVC (deliberately simulate a failure)
kubectl delete pod app && kubectl delete pvc ebs-claim
```
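The steps above apply ebs-volume-snapshot.yaml from the study repository without showing its contents. A VolumeSnapshot for this setup generally looks like the manifest generated below, pointing at the csi-aws-vsc class and the ebs-claim PVC; treat it as an assumed equivalent rather than the exact file. The second function polls .status.readyToUse, which was still false a few seconds after creation above. Both function names are hypothetical.

```bash
# Hypothetical generator: a VolumeSnapshot of an existing PVC (snapshot.storage.k8s.io/v1).
# Arguments: snapshot name, source PVC, snapshot class (default csi-aws-vsc).
volumesnapshot_manifest() {
  local name="$1" pvc="$2" class="${3:-csi-aws-vsc}"
  cat <<EOF
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: ${name}
spec:
  volumeSnapshotClassName: ${class}
  source:
    persistentVolumeClaimName: ${pvc}
EOF
}

# Hypothetical helper: poll until the snapshot reports readyToUse=true.
wait_snapshot_ready() {
  local name="$1" tries="${2:-60}" i=0 ready=""
  while [ "$i" -lt "$tries" ]; do
    ready=$(kubectl get volumesnapshot "$name" -o jsonpath='{.status.readyToUse}')
    if [ "$ready" = "true" ]; then
      echo "$name is ready"
      return 0
    fi
    i=$((i + 1))
    sleep 5
  done
  echo "$name not ready (readyToUse=${ready:-unset})" >&2
  return 1
}

# Usage (against a real cluster):
#   volumesnapshot_manifest ebs-volume-snapshot ebs-claim | kubectl apply -f -
#   wait_snapshot_ready ebs-volume-snapshot
```

Waiting for readyToUse before deleting the source PVC avoids simulating the failure while the EBS snapshot is still being taken.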

- Restore from the snapshot

```bash
# Restore a PVC from the snapshot
kubectl get pvc,pv
cat <<EOT > ebs-snapshot-restored-claim.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-snapshot-restored-claim
spec:
  storageClassName: gp3
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi
  dataSource:
    name: ebs-volume-snapshot
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
EOT
cat ebs-snapshot-restored-claim.yaml | yh
kubectl apply -f ebs-snapshot-restored-claim.yaml

# Verify
kubectl get pvc,pv
NAME                                                STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/ebs-snapshot-restored-claim   Pending                                      gp3            6s

# Create the pod
curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/ebs-snapshot-restored-pod.yaml
cat ebs-snapshot-restored-pod.yaml | yh
kubectl apply -f ebs-snapshot-restored-pod.yaml

# Check the file contents: the records written before the pod was deleted are still there,
# and new records keep being written after the pod is recreated
kubectl exec app -- cat /data/out.txt
...
Sat Mar 23 11:24:38 UTC 2024
Sat Mar 23 11:24:43 UTC 2024
Sat Mar 23 11:33:21 UTC 2024
Sat Mar 23 11:33:26 UTC 2024
...

# Clean up
kubectl delete pod app && kubectl delete pvc ebs-snapshot-restored-claim && kubectl delete volumesnapshots ebs-volume-snapshot
```
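The restored claim requests 4Gi, and the VolumeSnapshotContent reported a RESTORESIZE of 4294967296 bytes, which is exactly 4 GiB (4 × 1024³). A restored PVC should request at least the snapshot's restore size; a smaller request is expected to fail provisioning. The sketch below converts the binary-suffix quantities used in this post to bytes and compares them. to_bytes and restore_size_ok are hypothetical names, and decimal suffixes such as G or M are deliberately rejected rather than guessed.

```bash
# Hypothetical helper: convert a Kubernetes quantity with a binary suffix
# (Ki, Mi, Gi, Ti) or a plain byte count to bytes.
to_bytes() {
  local q="$1" num mult
  case "$q" in
    *Ki) num="${q%Ki}"; mult=1024 ;;
    *Mi) num="${q%Mi}"; mult=1048576 ;;
    *Gi) num="${q%Gi}"; mult=1073741824 ;;
    *Ti) num="${q%Ti}"; mult=1099511627776 ;;
    *)   num="$q"; mult=1 ;;
  esac
  # Only whole numbers are accepted; anything else (e.g. "4G", "1.5Gi") is rejected
  case "$num" in
    ''|*[!0-9]*) echo "unsupported quantity: $q" >&2; return 1 ;;
  esac
  echo $((num * mult))
}

# Hypothetical check: does the PVC request cover the snapshot's restore size?
restore_size_ok() {
  local req snap
  req=$(to_bytes "$1") || return 1
  snap=$(to_bytes "$2") || return 1
  [ "$req" -ge "$snap" ]
}

# Usage: compare the request with RESTORESIZE from kubectl get volumesnapshotcontents
#   restore_size_ok 4Gi 4294967296 && echo "request covers the snapshot"
```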

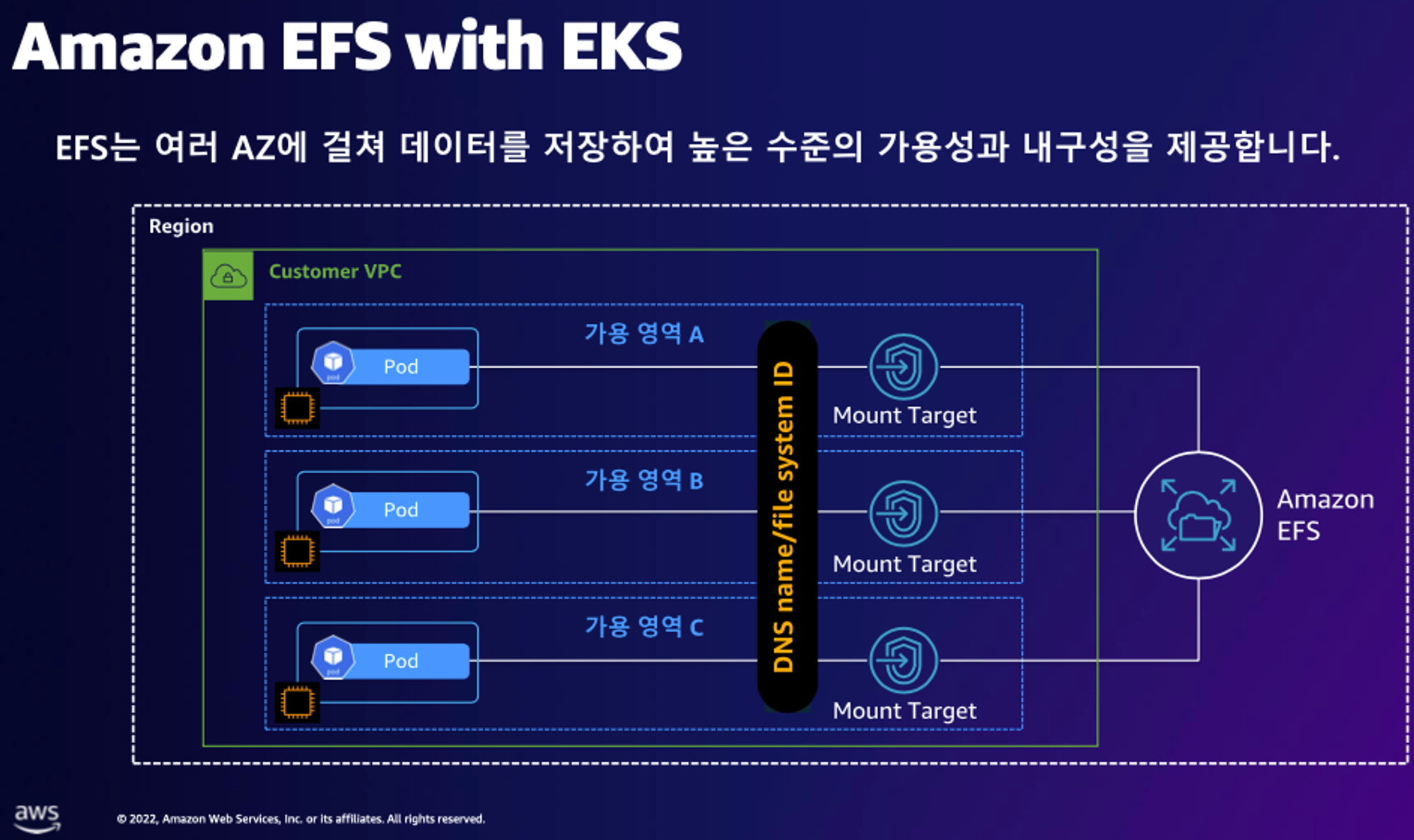

3. AWS EFS Controller

Architecture

Check the EFS file system and install the EFS controller

# EFS 정보 확인

aws efs describe-file-systems --query "FileSystems[*].FileSystemId" --output text

fs-09b53f34b4aa7db46

# IAM 정책 생성

curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/docs/iam-policy-example.json

aws iam create-policy --policy-name AmazonEKS_EFS_CSI_Driver_Policy --policy-document file://iam-policy-example.json

# ISRA 설정 : 고객관리형 정책 AmazonEKS_EFS_CSI_Driver_Policy 사용

eksctl create iamserviceaccount \

--name efs-csi-controller-sa \

--namespace kube-system \

--cluster ${CLUSTER_NAME} \

--attach-policy-arn arn:aws:iam::${ACCOUNT_ID}:policy/AmazonEKS_EFS_CSI_Driver_Policy \

--approve

# ISRA 확인

kubectl get sa -n kube-system efs-csi-controller-sa -o yaml | head -5

eksctl get iamserviceaccount --cluster myeks

NAMESPACE NAME ROLE ARN

kube-system aws-load-balancer-controller arn:aws:iam::891377200830:role/eksctl-myeks-addon-iamserviceaccount-kube-sys-Role1-RR2HibQuTVFy

kube-system ebs-csi-controller-sa arn:aws:iam::891377200830:role/AmazonEKS_EBS_CSI_DriverRole

kube-system efs-csi-controller-sa arn:aws:iam::891377200830:role/eksctl-myeks-addon-iamserviceaccount-kube-sys-Role1-9AuSGuIgMZr4

# EFS Controller 설치

helm repo add aws-efs-csi-driver https://kubernetes-sigs.github.io/aws-efs-csi-driver/

helm repo update

helm upgrade -i aws-efs-csi-driver aws-efs-csi-driver/aws-efs-csi-driver \

--namespace kube-system \

--set image.repository=602401143452.dkr.ecr.${AWS_DEFAULT_REGION}.amazonaws.com/eks/aws-efs-csi-driver \

--set controller.serviceAccount.create=false \

--set controller.serviceAccount.name=efs-csi-controller-sa

# 확인

helm list -n kube-system

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

aws-efs-csi-driver kube-system 1 2024-03-23 20:41:52.997463648 +0900 KST deployed aws-efs-csi-driver-2.5.6 1.7.6

kubectl get pod -n kube-system -l "app.kubernetes.io/name=aws-efs-csi-driver,app.kubernetes.io/instance=aws-efs-csi-driver"

NAME READY STATUS RESTARTS AGE

efs-csi-controller-789c8bf7bf-h7rns 3/3 Running 0 34s

efs-csi-controller-789c8bf7bf-n4v7z 3/3 Running 0 34s

efs-csi-node-4ng95 3/3 Running 0 34s

efs-csi-node-flbmm 3/3 Running 0 34s

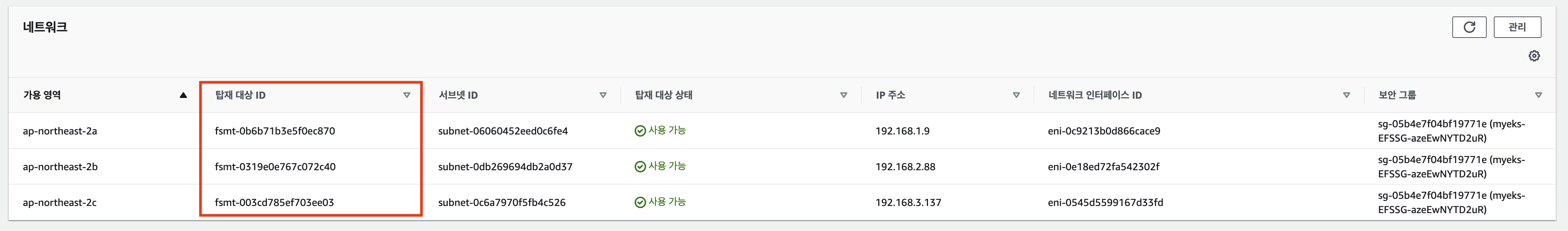

efs-csi-node-pwmc5 3/3 Running 0 34s- EFS 네트워크 탑재 대상ID 확인

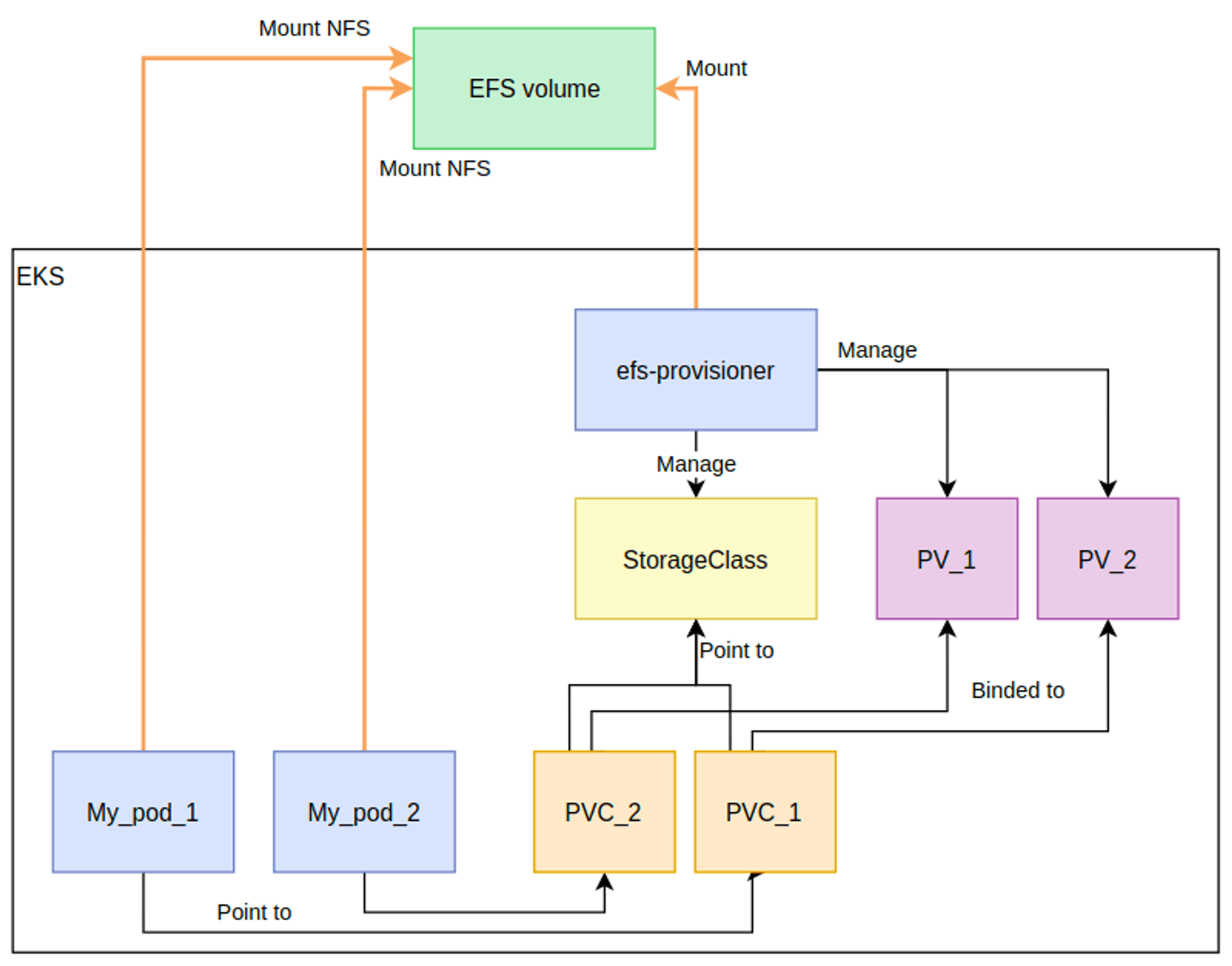
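The console screenshot above shows the mount targets. The same check can be scripted with aws efs describe-mount-targets: EFS clients normally reach the file system through a mount target in their own Availability Zone, so the sketch below counts mount targets in the available state and compares that with the number you expect (for example, one per Availability Zone that hosts worker nodes). The function name and the expected count are assumptions for illustration.

```bash
# Hypothetical helper: check that an EFS file system has the expected number
# of mount targets in the "available" state.
efs_mount_targets_ready() {
  local fs_id="$1" expected="$2" count
  count=$(aws efs describe-mount-targets --file-system-id "$fs_id" \
    --query "length(MountTargets[?LifeCycleState=='available'])" --output text) || return 1
  echo "$fs_id: $count/$expected mount targets available"
  [ "$count" -eq "$expected" ]
}

# Usage (3 is only an example of one mount target per AZ):
#   aws efs describe-mount-targets --file-system-id fs-09b53f34b4aa7db46 \
#     --query 'MountTargets[].[MountTargetId,AvailabilityZoneName,IpAddress,LifeCycleState]' --output table
#   efs_mount_targets_ready fs-09b53f34b4aa7db46 3
```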

Multiple pods sharing the EFS file system

- Add empty StorageClasses from static example - Workshop

```bash
# Monitoring
watch 'kubectl get sc efs-sc; echo; kubectl get pv,pvc,pod'

# Clone the example code
git clone https://github.com/kubernetes-sigs/aws-efs-csi-driver.git /root/efs-csi
cd /root/efs-csi/examples/kubernetes/multiple_pods/specs && tree

# Create and check the EFS StorageClass
cat storageclass.yaml | yh
kubectl apply -f storageclass.yaml
kubectl get sc efs-sc
NAME     PROVISIONER       RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
efs-sc   efs.csi.aws.com   Delete          Immediate           false                  1s

# Create and check the PV: change volumeHandle to your own EFS file system ID
EfsFsId=$(aws efs describe-file-systems --query "FileSystems[*].FileSystemId" --output text)
sed -i "s/fs-4af69aab/$EfsFsId/g" pv.yaml
cat pv.yaml | yh
apiVersion: v1
kind: PersistentVolume
metadata:
  name: efs-pv
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: efs-sc
  csi:
    driver: efs.csi.aws.com
    volumeHandle: fs-05699d3c12ef609e2

kubectl apply -f pv.yaml
kubectl get pv; kubectl describe pv
NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
efs-pv   5Gi        RWX            Retain           Available           efs-sc                  6s
Name:            efs-pv
Labels:          <none>
Annotations:     <none>
Finalizers:      [kubernetes.io/pv-protection]
StorageClass:    efs-sc
Status:          Available
Claim:
Reclaim Policy:  Retain
Access Modes:    RWX
VolumeMode:      Filesystem
Capacity:        5Gi
Node Affinity:   <none>
Message:
Source:
    Type:              CSI (a Container Storage Interface (CSI) volume source)
    Driver:            efs.csi.aws.com
    FSType:
    VolumeHandle:      fs-09b53f34b4aa7db46
    ReadOnly:          false
    VolumeAttributes:  <none>
Events:            <none>

# Create and check the PVC
cat claim.yaml | yh
kubectl apply -f claim.yaml
kubectl get pvc
NAME        STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
efs-claim   Bound    efs-pv   5Gi        RWX            efs-sc         3s

# Create the pods: /data inside each pod is backed by EFS
cat pod1.yaml pod2.yaml | yh
kubectl apply -f pod1.yaml,pod2.yaml
kubectl df-pv

# Check the pods
# EFS is an elastic file system, so df reports a size of 8.0E.
# The PV capacity (5Gi) is required by Kubernetes but is not enforced by EFS,
# so the pods are not actually limited to 5Gi.
kubectl get pods
kubectl exec -ti app1 -- sh -c "df -hT -t nfs4"
Filesystem    Type   Size   Used   Available   Use%   Mounted on
127.0.0.1:/   nfs4   8.0E   0      8.0E        0%     /data
kubectl exec -ti app2 -- sh -c "df -hT -t nfs4"
Filesystem    Type   Size   Used   Available   Use%   Mounted on
127.0.0.1:/   nfs4   8.0E   0      8.0E        0%     /data

# Confirm that writes land on the shared storage
tree /mnt/myefs # run on the work EC2 instance
/mnt/myefs
├── out1.txt
└── out2.txt

tail -f /mnt/myefs/out1.txt # run on the work EC2 instance
Sat Mar 23 12:23:00 UTC 2024
Sat Mar 23 12:23:05 UTC 2024
Sat Mar 23 12:23:10 UTC 2024
Sat Mar 23 12:23:15 UTC 2024
Sat Mar 23 12:23:21 UTC 2024

kubectl exec -ti app1 -- tail -f /data/out1.txt
Sat Mar 23 12:23:21 UTC 2024
Sat Mar 23 12:23:26 UTC 2024
Sat Mar 23 12:23:31 UTC 2024
Sat Mar 23 12:23:36 UTC 2024
Sat Mar 23 12:23:41 UTC 2024

kubectl exec -ti app2 -- tail -f /data/out2.txt
Sat Mar 23 12:23:51 UTC 2024
Sat Mar 23 12:23:56 UTC 2024
Sat Mar 23 12:24:01 UTC 2024
Sat Mar 23 12:24:06 UTC 2024
Sat Mar 23 12:24:11 UTC 2024
```
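The sed step above assumes the account has exactly one EFS file system. If describe-file-systems returns several IDs, $EfsFsId holds a tab-separated list and pv.yaml ends up with an invalid volumeHandle. The hypothetical generator below renders the same static PV directly and refuses anything that does not look like a single fs-... ID. The ID check is a heuristic (lowercase hex after fs-), not an official validation rule.

```bash
# Hypothetical generator for the static EFS PV used above.
# Arguments: EFS file system ID, PV name (default efs-pv).
efs_static_pv() {
  local fs_id="$1" name="${2:-efs-pv}"
  case "$fs_id" in
    fs-*) ;;
    *) echo "not an EFS file system ID: '$fs_id'" >&2; return 1 ;;
  esac
  # Reject empty suffixes and anything that is not one hex ID (e.g. a tab-separated list)
  case "${fs_id#fs-}" in
    ''|*[!0-9a-f]*) echo "expected exactly one fs-<hex> ID, got: '$fs_id'" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: 5Gi # required by Kubernetes; EFS does not enforce it
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: efs-sc
  csi:
    driver: efs.csi.aws.com
    volumeHandle: ${fs_id}
EOF
}

# Usage (against a real cluster):
#   efs_static_pv "$EfsFsId" | kubectl apply -f -
```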

-

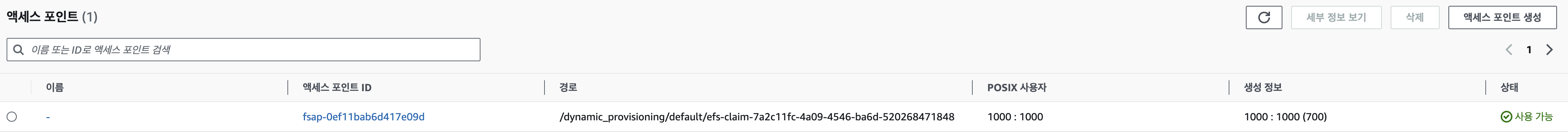

Dynamic provisioning using EFS (Fargate node는 현재 미지원) - Workshop

```bash
# Monitoring
watch 'kubectl get sc efs-sc; echo; kubectl get pv,pvc,pod'

# Create and check the EFS StorageClass
curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/examples/kubernetes/dynamic_provisioning/specs/storageclass.yaml
cat storageclass.yaml | yh
sed -i "s/fs-92107410/$EfsFsId/g" storageclass.yaml
kubectl apply -f storageclass.yaml
kubectl get sc efs-sc
NAME     PROVISIONER       RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
efs-sc   efs.csi.aws.com   Delete          Immediate           false                  7s

# Create and check the PVC and pod
curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/examples/kubernetes/dynamic_provisioning/specs/pod.yaml
cat pod.yaml | yh
kubectl apply -f pod.yaml
kubectl get pvc,pv,pod
NAME                              STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/efs-claim   Bound    pvc-58e3b82f-7f15-4c01-9e63-37510f3c859f   5Gi        RWX            efs-sc         7s

NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM               STORAGECLASS   REASON   AGE
persistentvolume/pvc-58e3b82f-7f15-4c01-9e63-37510f3c859f   5Gi        RWX            Delete           Bound    default/efs-claim   efs-sc                  6s

NAME          READY   STATUS    RESTARTS   AGE
pod/efs-app   1/1     Running   0          7s

# Check the PVC/PV creation logs
kubectl logs -n kube-system -l app=efs-csi-controller -c csi-provisioner -f

# Check the pod
kubectl exec -it efs-app -- sh -c "df -hT -t nfs4"
Filesystem    Type   Size   Used   Avail   Use%   Mounted on
127.0.0.1:/   nfs4   8.0E   0      8.0E    0%     /data

# Confirm that writes land on the shared storage
tree /mnt/myefs # run on the work EC2 instance
/mnt/myefs
├── dynamic_provisioning
│   └── default
│       └── efs-claim-7a2c11fc-4a09-4546-ba6d-520268471848
│           └── out
├── out1.txt
└── out2.txt

kubectl exec efs-app -- bash -c "cat data/out"
Sat Mar 23 12:28:04 UTC 2024
Sat Mar 23 12:28:09 UTC 2024
Sat Mar 23 12:28:14 UTC 2024
Sat Mar 23 12:28:20 UTC 2024
Sat Mar 23 12:28:25 UTC 2024
Sat Mar 23 12:28:30 UTC 2024
```

- Check the EFS access points
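With the dynamic StorageClass, the driver creates an EFS access point per PVC, and the dynamic_provisioning/default/efs-claim-... directory in the tree output appears to be that access point's root directory. The PV's volumeHandle then refers to both the file system and the access point. The sketch below assumes the handle has the form <FileSystemId>::<AccessPointId>, as used for access-point-based provisioning; check your own PV before relying on it. Both function names are hypothetical.

```bash
# Hypothetical helper: extract the access point ID from an EFS CSI volumeHandle
# of the assumed form <FileSystemId>::<AccessPointId>.
ap_from_handle() {
  case "$1" in
    *::*) echo "${1##*::}" ;;
    *) echo "no access point in handle: $1" >&2; return 1 ;;
  esac
}

# Hypothetical helper: show the EFS access point behind a dynamically provisioned PV.
describe_pv_access_point() {
  local pv="$1" handle ap
  handle=$(kubectl get pv "$pv" -o jsonpath='{.spec.csi.volumeHandle}')
  ap=$(ap_from_handle "$handle") || return 1
  aws efs describe-access-points --access-point-id "$ap" \
    --query 'AccessPoints[].[AccessPointId,RootDirectory.Path,LifeCycleState]' --output text
}

# Usage (against a real cluster):
#   describe_pv_access_point pvc-58e3b82f-7f15-4c01-9e63-37510f3c859f
```

The RootDirectory.Path it prints should correspond to the efs-claim-... directory seen from the work EC2 instance.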