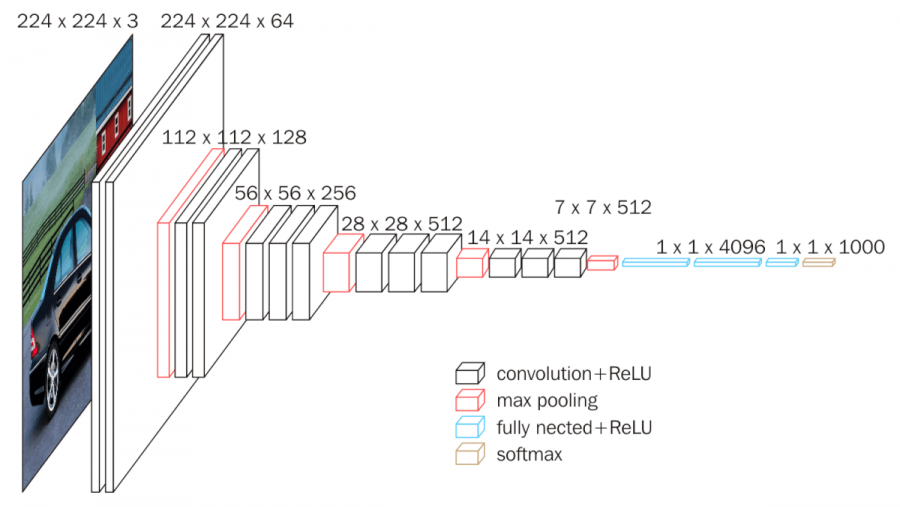

VGGNet is a neural network architecture proposed in 2014 by the VGG (Visual Geometry Group) research group.

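It follows the same basic design as LeNet and AlexNet (convolution and pooling layers followed by fully connected layers), but it has more layers.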

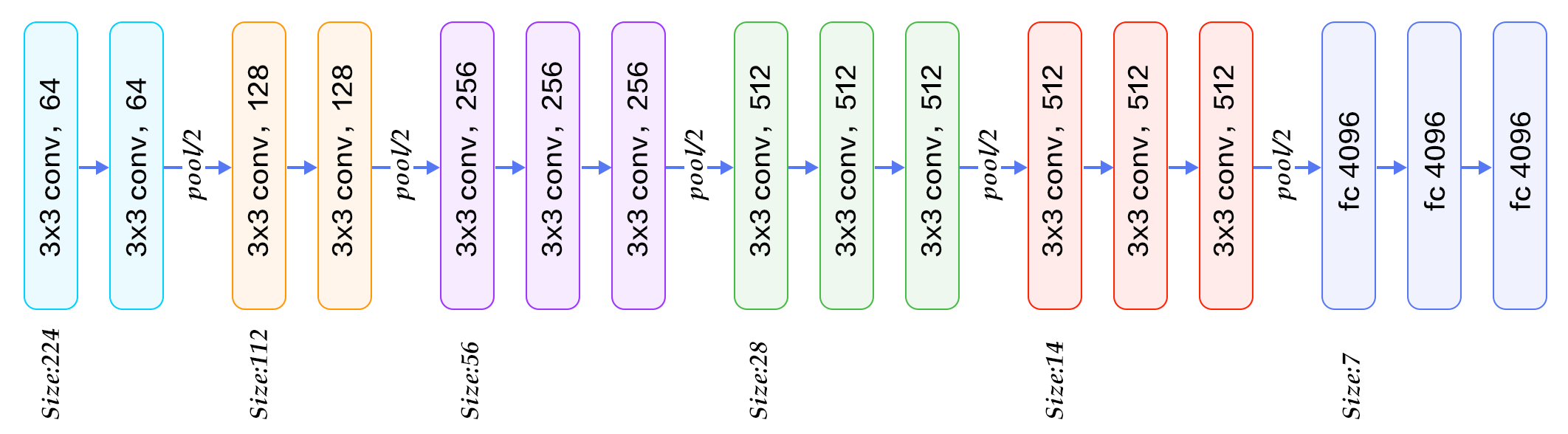

VGG16 consists of 16 layers with weights: 13 convolutional layers and 3 fully connected layers. Pooling layers are not counted.

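Every convolutional layer uses a 3x3 filter, smaller than the filters in AlexNet's early layers, so that the network can extract finer-grained features than AlexNet.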
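One way to make the 3x3 choice concrete is to compare a stack of 3x3 layers with a single larger filter that covers the same input window. The sketch below is not part of the original notebook: the helper names `conv_weights` and `receptive_field` and the channel count of 64 are assumptions chosen for illustration, and biases are ignored.

```python
def conv_weights(kernel_size: int, channels: int, num_layers: int = 1) -> int:
    """Weights (biases ignored) in num_layers stacked square convolutions,
    each mapping `channels` input channels to `channels` output channels."""
    return num_layers * kernel_size * kernel_size * channels * channels


def receptive_field(kernel_size: int, num_layers: int) -> int:
    """Width of the input window seen by num_layers stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel_size - 1)


channels = 64  # illustrative value, not taken from the source

# Two 3x3 layers see the same 5x5 window as one 5x5 layer.
print(receptive_field(3, 2), receptive_field(5, 1))
# Three 3x3 layers see the same 7x7 window as one 7x7 layer.
print(receptive_field(3, 3), receptive_field(7, 1))

# Weight counts for the stacked 3x3 layers versus the single larger layer.
print(conv_weights(3, channels, num_layers=2), conv_weights(5, channels))
print(conv_weights(3, channels, num_layers=3), conv_weights(7, channels))
```

Under these assumptions the stacked 3x3 layers cover the same window with fewer weights, and each extra layer adds another ReLU non-linearity.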

VGG16 architecture

https://www.kaggle.com/code/blurredmachine/vggnet-16-architecture-a-complete-guide

```python
# Imports assume the Keras API bundled with TensorFlow.
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPool2D, Flatten, Dense, Dropout

model = Sequential()

# first block: two 64-filter conv layers, then 2x2 max pooling
model.add(Conv2D(filters=64, kernel_size=(3,3), strides=(1,1), activation='relu', padding='same', input_shape=(224, 224, 3)))
model.add(Conv2D(filters=64, kernel_size=(3,3), strides=(1,1), activation='relu', padding='same'))
model.add(MaxPool2D(pool_size=(2,2), strides=(2,2)))

# second block: two 128-filter conv layers
model.add(Conv2D(filters=128, kernel_size=(3,3), strides=(1,1), activation='relu', padding='same'))
model.add(Conv2D(filters=128, kernel_size=(3,3), strides=(1,1), activation='relu', padding='same'))
model.add(MaxPool2D(pool_size=(2,2), strides=(2,2)))

# third block: three 256-filter conv layers
model.add(Conv2D(filters=256, kernel_size=(3,3), strides=(1,1), activation='relu', padding='same'))
model.add(Conv2D(filters=256, kernel_size=(3,3), strides=(1,1), activation='relu', padding='same'))
model.add(Conv2D(filters=256, kernel_size=(3,3), strides=(1,1), activation='relu', padding='same'))
model.add(MaxPool2D(pool_size=(2,2), strides=(2,2)))

# fourth block: three 512-filter conv layers
model.add(Conv2D(filters=512, kernel_size=(3,3), strides=(1,1), activation='relu', padding='same'))
model.add(Conv2D(filters=512, kernel_size=(3,3), strides=(1,1), activation='relu', padding='same'))
model.add(Conv2D(filters=512, kernel_size=(3,3), strides=(1,1), activation='relu', padding='same'))
model.add(MaxPool2D(pool_size=(2,2), strides=(2,2)))

# fifth block: three 512-filter conv layers
model.add(Conv2D(filters=512, kernel_size=(3,3), strides=(1,1), activation='relu', padding='same'))
model.add(Conv2D(filters=512, kernel_size=(3,3), strides=(1,1), activation='relu', padding='same'))
model.add(Conv2D(filters=512, kernel_size=(3,3), strides=(1,1), activation='relu', padding='same'))
model.add(MaxPool2D(pool_size=(2,2), strides=(2,2)))

# sixth block (classifier): flatten, two 4096-unit dense layers with dropout, 1000-way softmax
model.add(Flatten())
model.add(Dense(4096, activation='relu'))
model.add(Dropout(0.5))
model.add(Dense(4096, activation='relu'))
model.add(Dropout(0.5))
model.add(Dense(1000, activation='softmax'))

model.summary()
```
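The parameter counts that `model.summary()` reports can be reproduced by hand, which is a quick sanity check on the layer definitions. The following is a minimal sketch in plain Python with no Keras dependency. The names `BLOCKS`, `DENSE_UNITS`, and `vgg16_param_counts` are hypothetical helpers introduced here, and the filter and unit counts are read off the model definition above.

```python
# Filters per conv layer in each of the five blocks, read off the model definition.
BLOCKS = [[64, 64], [128, 128], [256, 256, 256], [512, 512, 512], [512, 512, 512]]
# Units in the three fully connected layers.
DENSE_UNITS = [4096, 4096, 1000]


def vgg16_param_counts(height=224, width=224, channels=3):
    """Return the parameter count of every conv and dense layer, in order."""
    counts = []
    for block in BLOCKS:
        for filters in block:
            # One 3x3 kernel per (input channel, filter) pair, plus one bias per filter.
            counts.append(3 * 3 * channels * filters + filters)
            channels = filters
        # 'same' padding keeps the spatial size; each 2x2, stride-2 pool halves it.
        height, width = height // 2, width // 2
    features = height * width * channels  # vector length after Flatten
    for units in DENSE_UNITS:
        # One weight per (input feature, unit) pair, plus one bias per unit.
        counts.append(features * units + units)
        features = units
    return counts


counts = vgg16_param_counts()
print(len(counts))   # layers that carry weights
print(counts[13])    # first dense layer
print(sum(counts))   # total parameters
```

If the sketch is right, it should report 16 weighted layers and a total of 138,357,544 parameters, matching the summary output. The first dense layer alone accounts for 102,764,544 of them, because it connects all 7 x 7 x 512 = 25,088 flattened features to 4,096 units.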

```text
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 224, 224, 64)      1792
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 224, 224, 64)      36928
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 112, 112, 64)      0
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 112, 112, 128)     73856
_________________________________________________________________
conv2d_4 (Conv2D)            (None, 112, 112, 128)     147584
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 56, 56, 128)       0
_________________________________________________________________
conv2d_5 (Conv2D)            (None, 56, 56, 256)       295168
_________________________________________________________________
conv2d_6 (Conv2D)            (None, 56, 56, 256)       590080
_________________________________________________________________
conv2d_7 (Conv2D)            (None, 56, 56, 256)       590080
_________________________________________________________________
max_pooling2d_3 (MaxPooling2 (None, 28, 28, 256)       0
_________________________________________________________________
conv2d_8 (Conv2D)            (None, 28, 28, 512)       1180160
_________________________________________________________________
conv2d_9 (Conv2D)            (None, 28, 28, 512)       2359808
_________________________________________________________________
conv2d_10 (Conv2D)           (None, 28, 28, 512)       2359808
_________________________________________________________________
max_pooling2d_4 (MaxPooling2 (None, 14, 14, 512)       0
_________________________________________________________________
conv2d_11 (Conv2D)           (None, 14, 14, 512)       2359808
_________________________________________________________________
conv2d_12 (Conv2D)           (None, 14, 14, 512)       2359808
_________________________________________________________________
conv2d_13 (Conv2D)           (None, 14, 14, 512)       2359808
_________________________________________________________________
max_pooling2d_5 (MaxPooling2 (None, 7, 7, 512)         0
_________________________________________________________________
flatten_1 (Flatten)          (None, 25088)             0
_________________________________________________________________
dense_1 (Dense)              (None, 4096)              102764544
_________________________________________________________________
dropout_1 (Dropout)          (None, 4096)              0
_________________________________________________________________
dense_2 (Dense)              (None, 4096)              16781312
_________________________________________________________________
dropout_2 (Dropout)          (None, 4096)              0
_________________________________________________________________
dense_3 (Dense)              (None, 1000)              4097000
=================================================================
Total params: 138,357,544
Trainable params: 138,357,544
Non-trainable params: 0
_________________________________________________________________
```

[References]

https://www.hanbit.co.kr/store/books/look.php?p_code=B6566099029