AlexNet won the 2012 ILSVRC (ImageNet Large Scale Visual Recognition Challenge) image classification competition.

AlexNet's shortcomings motivated the design of later networks such as VGGNet and ResNet.

LeNet has about 60,000 parameters, whereas AlexNet has about 650,000 neurons and 60 million parameters.

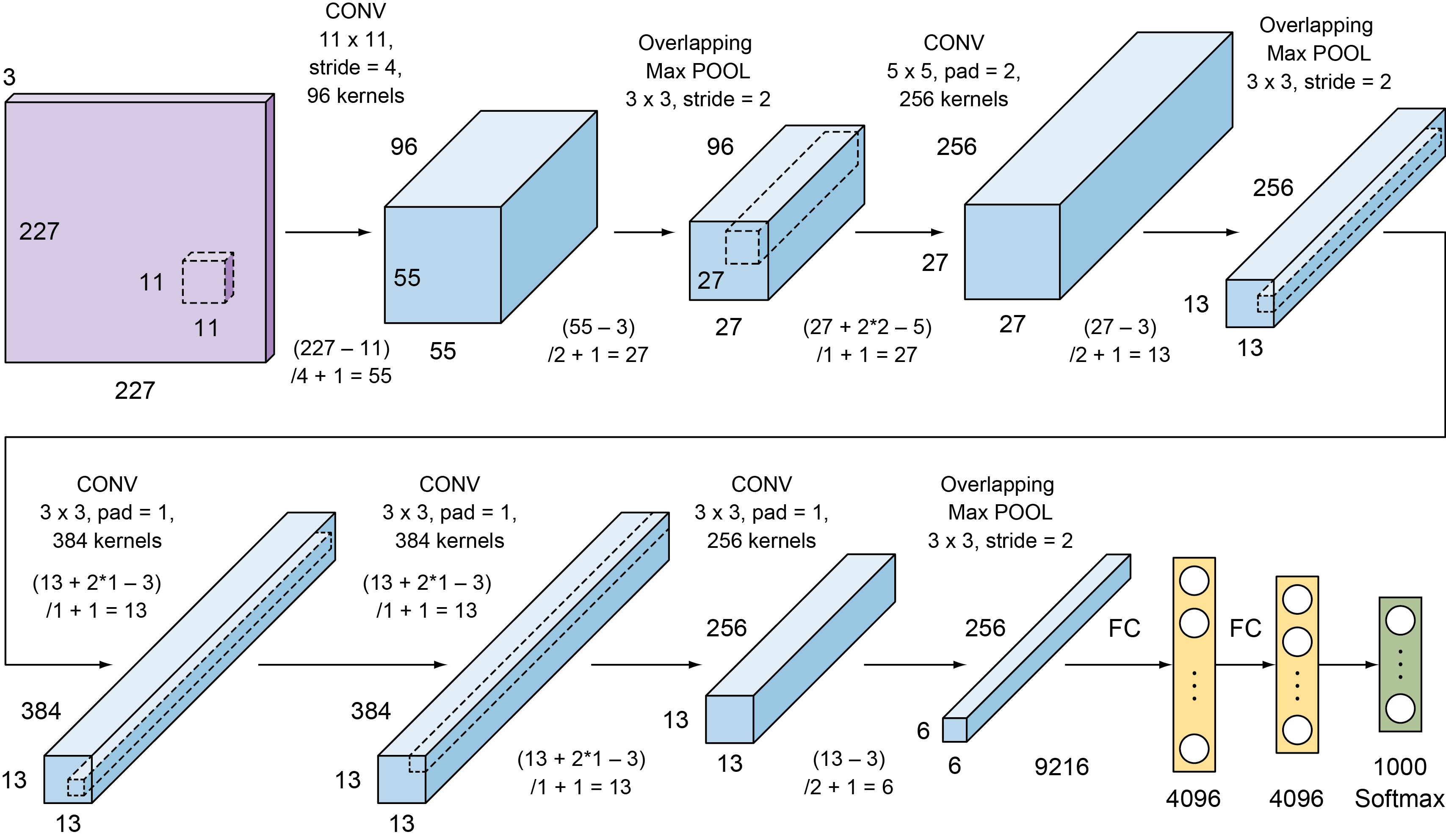

AlexNet architecture

Input image -> CONV1 -> POOL2 -> CONV3 -> POOL4 -> CONV5 -> CONV6 -> CONV7 -> POOL8 -> FC9 -> FC10 -> SOFTMAX11
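As a sanity check on the flow above, the spatial size after each convolution or pooling step can be computed with the usual formula out = ⌊(in − kernel) / stride⌋ + 1 for `'valid'` padding, while `'same'` padding with stride 1 leaves the size unchanged. This is a minimal sketch, assuming a 227×227 input and the kernel sizes, strides, and padding used in the Keras implementation in this post; the helper names `out_size` and `trace` are hypothetical.

```python
# Sketch: trace the height/width of the feature maps through the AlexNet-style stack.
# Assumes a 227x227 input and the kernel/stride/padding values of the Keras code in this post.

def out_size(size: int, kernel: int, stride: int, padding: str) -> int:
    """Output height/width of a conv or pooling layer (hypothetical helper)."""
    if padding == "same":
        # Keras 'same' padding yields ceil(size / stride)
        return -(-size // stride)
    # 'valid' padding: floor((size - kernel) / stride) + 1
    return (size - kernel) // stride + 1

# (name, kernel, stride, padding) for each spatial layer
LAYERS = [
    ("CONV1", 11, 4, "valid"),
    ("POOL2", 3, 2, "valid"),
    ("CONV3", 5, 1, "same"),
    ("POOL4", 3, 2, "valid"),
    ("CONV5", 3, 1, "same"),
    ("CONV6", 3, 1, "same"),
    ("CONV7", 3, 1, "same"),
    ("POOL8", 3, 2, "valid"),
]

def trace(input_size: int = 227) -> list:
    """Return (layer name, output size) pairs for the stack above."""
    size = input_size
    shapes = []
    for name, kernel, stride, padding in LAYERS:
        size = out_size(size, kernel, stride, padding)
        shapes.append((name, size))
    return shapes

if __name__ == "__main__":
    for name, size in trace():
        print(f"{name}: {size}x{size}")
```

It traces 55 → 27 → 27 → 13 → 13 → 13 → 13 → 6, and 6 × 6 × 256 = 9216 is the length of the flattened vector fed to the first fully connected layer.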

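- Convolutional filter sizes: 11×11, 5×5, and 3×3

- Max pooling (3×3 windows with stride 2, so neighboring windows overlap)

- Dropout (rate 0.5) in the fully connected layers to prevent overfitting

- ReLU activation in the hidden layers and softmax activation in the output layer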
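The building blocks in this list are simple element-wise or window-wise operations. The pure-Python sketch below illustrates each one on tiny inputs; it is not how Keras implements them internally. The function names are hypothetical, `max_pool2d` assumes `'valid'` padding with the 3×3, stride-2 windows used in this post, and `dropout` follows the inverted-dropout convention Keras uses at training time (surviving units are scaled by 1/(1 − rate)).

```python
import math
import random

def relu(xs):
    """ReLU: max(0, x) element-wise (hidden layers)."""
    return [max(0.0, x) for x in xs]

def softmax(logits):
    """Softmax: exponentiate and normalize so the outputs sum to 1 (output layer)."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def max_pool2d(grid, size=3, stride=2):
    """Max pooling with 'valid' padding; size=3, stride=2 gives overlapping windows."""
    h, w = len(grid), len(grid[0])
    out = []
    for i in range(0, h - size + 1, stride):
        row = []
        for j in range(0, w - size + 1, stride):
            window = (grid[r][c] for r in range(i, i + size) for c in range(j, j + size))
            row.append(max(window))
        out.append(row)
    return out

def dropout(xs, rate=0.5, rng=random):
    """Inverted dropout (training only): zero each unit with probability `rate`,
    scale the survivors by 1 / (1 - rate) so the expected sum is unchanged."""
    keep = 1.0 - rate
    return [x / keep if rng.random() < keep else 0.0 for x in xs]

if __name__ == "__main__":
    print(relu([-2.0, 0.5, 3.0]))
    print(softmax([1.0, 2.0, 3.0]))
    grid = [[r * 5 + c for c in range(5)] for r in range(5)]
    print(max_pool2d(grid))
    print(dropout([1.0] * 8, rng=random.Random(0)))
```

On the 5×5 grid holding 0 to 24, the overlapping 3×3, stride-2 pooling returns `[[12, 14], [22, 24]]`: the windows starting at columns 0 and 2 share column 2.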

```python
from keras.models import Sequential
from keras.layers import Conv2D, Flatten, Dense, Activation, MaxPool2D, BatchNormalization, Dropout
from keras.regularizers import l2

# Instantiate an empty sequential model
model = Sequential(name="Alexnet")

# 1st layer (conv + pool + batchnorm)
model.add(Conv2D(filters=96, kernel_size=(11, 11), strides=(4, 4), padding='valid',
                 kernel_regularizer=l2(0.0005), input_shape=(227, 227, 3)))
model.add(Activation('relu'))  # <---- activation function can be added on its own layer or within the Conv2D function
model.add(MaxPool2D(pool_size=(3, 3), strides=(2, 2), padding='valid'))
model.add(BatchNormalization())

# 2nd layer (conv + pool + batchnorm)
model.add(Conv2D(filters=256, kernel_size=(5, 5), strides=(1, 1), padding='same', kernel_regularizer=l2(0.0005)))
model.add(Activation('relu'))
model.add(MaxPool2D(pool_size=(3, 3), strides=(2, 2), padding='valid'))
model.add(BatchNormalization())

# layer 3 (conv + batchnorm) <--- note that the authors did not add a POOL layer here
model.add(Conv2D(filters=384, kernel_size=(3, 3), strides=(1, 1), padding='same', kernel_regularizer=l2(0.0005)))
model.add(Activation('relu'))
model.add(BatchNormalization())

# layer 4 (conv + batchnorm) <--- similar to layer 3
model.add(Conv2D(filters=384, kernel_size=(3, 3), strides=(1, 1), padding='same', kernel_regularizer=l2(0.0005)))
model.add(Activation('relu'))
model.add(BatchNormalization())

# layer 5 (conv + batchnorm + pool)
model.add(Conv2D(filters=256, kernel_size=(3, 3), strides=(1, 1), padding='same', kernel_regularizer=l2(0.0005)))
model.add(Activation('relu'))
model.add(BatchNormalization())
model.add(MaxPool2D(pool_size=(3, 3), strides=(2, 2), padding='valid'))

# Flatten the CNN output to feed it with fully connected layers
model.add(Flatten())

# layer 6 (Dense layer + dropout)
model.add(Dense(units=4096, activation='relu'))
model.add(Dropout(0.5))

# layer 7 (Dense layer + dropout)
model.add(Dense(units=4096, activation='relu'))
model.add(Dropout(0.5))

# layer 8 (softmax output layer)
model.add(Dense(units=1000, activation='softmax'))

# print the model summary
model.summary()
```
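Every convolutional layer above passes `kernel_regularizer=l2(0.0005)`. In Keras, an L2 regularizer adds `factor * sum(w ** 2)` over that layer's kernel to the training loss, which pushes the weights toward small values (weight decay). The minimal pure-Python illustration below uses hypothetical helper names and toy weights in place of real layer kernels.

```python
# Minimal sketch of the penalty an L2 kernel regularizer adds to the loss.
# Helper names and toy weights are hypothetical; Keras computes this internally.

def l2_penalty(weights, factor=0.0005):
    """factor * sum of squared weights, i.e. what l2(0.0005) contributes for one kernel."""
    return factor * sum(w * w for w in weights)

def regularized_loss(data_loss, kernels, factor=0.0005):
    """Data loss plus one L2 term per regularized kernel (one per Conv2D layer above)."""
    return data_loss + sum(l2_penalty(k, factor) for k in kernels)

if __name__ == "__main__":
    toy_kernels = [[1.0, -2.0, 2.0], [0.5, 0.5]]  # stand-ins for flattened kernels
    print(l2_penalty(toy_kernels[0]))             # 0.0005 * (1 + 4 + 4)
    print(regularized_loss(1.2, toy_kernels))
```

Note that the dense layers in this model carry no regularizer, so only the five convolution kernels contribute a penalty term.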

Output of `model.summary()`:

```
Model: "Alexnet"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 55, 55, 96)        34944
_________________________________________________________________
activation_1 (Activation)    (None, 55, 55, 96)        0
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 27, 27, 96)        0
_________________________________________________________________
batch_normalization_1 (Batch (None, 27, 27, 96)        384
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 27, 27, 256)       614656
_________________________________________________________________
activation_2 (Activation)    (None, 27, 27, 256)       0
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 13, 13, 256)       0
_________________________________________________________________
batch_normalization_2 (Batch (None, 13, 13, 256)       1024
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 13, 13, 384)       885120
_________________________________________________________________
activation_3 (Activation)    (None, 13, 13, 384)       0
_________________________________________________________________
batch_normalization_3 (Batch (None, 13, 13, 384)       1536
_________________________________________________________________
conv2d_4 (Conv2D)            (None, 13, 13, 384)       1327488
_________________________________________________________________
activation_4 (Activation)    (None, 13, 13, 384)       0
_________________________________________________________________
batch_normalization_4 (Batch (None, 13, 13, 384)       1536
_________________________________________________________________
conv2d_5 (Conv2D)            (None, 13, 13, 256)       884992
_________________________________________________________________
activation_5 (Activation)    (None, 13, 13, 256)       0
_________________________________________________________________
batch_normalization_5 (Batch (None, 13, 13, 256)       1024
_________________________________________________________________
max_pooling2d_3 (MaxPooling2 (None, 6, 6, 256)         0
_________________________________________________________________
flatten_1 (Flatten)          (None, 9216)              0
_________________________________________________________________
dense_1 (Dense)              (None, 4096)              37752832
_________________________________________________________________
dropout_1 (Dropout)          (None, 4096)              0
_________________________________________________________________
dense_2 (Dense)              (None, 4096)              16781312
_________________________________________________________________
dropout_2 (Dropout)          (None, 4096)              0
_________________________________________________________________
dense_3 (Dense)              (None, 1000)              4097000
=================================================================
Total params: 62,383,848
Trainable params: 62,381,096
Non-trainable params: 2,752
_________________________________________________________________
```
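The parameter counts in this summary follow from simple formulas: a Conv2D layer has k × k × C_in × C_out weights plus C_out biases, a Dense layer has n_in × n_out weights plus n_out biases, and BatchNormalization stores 4 values per channel (trainable γ and β, plus a non-trainable moving mean and moving variance). The sketch below, with hypothetical helper names, reproduces the totals for the model defined above.

```python
# Recompute the Keras summary totals by hand (helper names are hypothetical).

def conv_params(k, c_in, c_out):
    """k x k kernel over c_in channels for each of c_out filters, plus one bias per filter."""
    return k * k * c_in * c_out + c_out

def dense_params(n_in, n_out):
    """Fully connected weights plus one bias per output unit."""
    return n_in * n_out + n_out

def bn_params(channels):
    """gamma, beta (trainable) + moving mean, moving variance (non-trainable)."""
    return 4 * channels

convs = [conv_params(11, 3, 96), conv_params(5, 96, 256), conv_params(3, 256, 384),
         conv_params(3, 384, 384), conv_params(3, 384, 256)]
denses = [dense_params(6 * 6 * 256, 4096), dense_params(4096, 4096), dense_params(4096, 1000)]
bns = [bn_params(c) for c in (96, 256, 384, 384, 256)]

total = sum(convs) + sum(denses) + sum(bns)
non_trainable = sum(bns) // 2  # moving mean and variance are half of each BN layer's values

print(f"Total params: {total:,}")                        # Total params: 62,383,848
print(f"Trainable params: {total - non_trainable:,}")    # Trainable params: 62,381,096
print(f"Non-trainable params: {non_trainable:,}")        # Non-trainable params: 2,752
```

The three dense layers alone account for about 58.6 million of the roughly 62.4 million parameters, which is why the fully connected part dominates AlexNet's size.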

[References]

https://www.hanbit.co.kr/store/books/look.php?p_code=B6566099029