k -n eeapbh get all

k delete resourcequotas sample-resourcequotas

k delete ns eeapbh

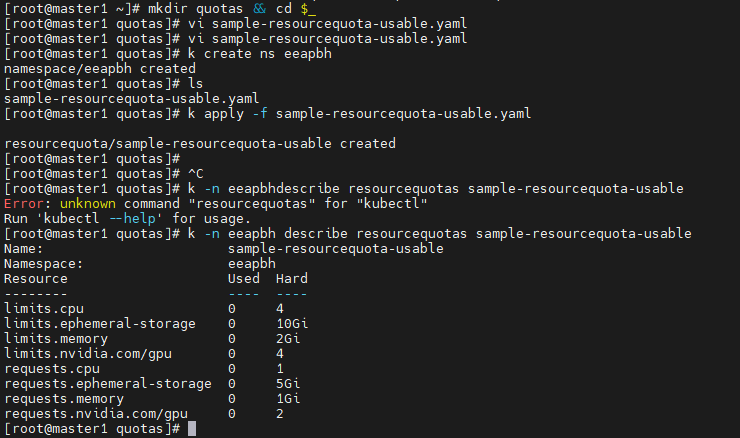

mkdir quotas && cd $_

vi sample-resourcequota-usable.yaml

apiVersion: v1
kind: ResourceQuota
metadata:
  name: sample-resourcequota-usable
  namespace: eeapbh
spec:
  hard:
    requests.cpu: 1
    requests.memory: 1Gi
    requests.ephemeral-storage: 5Gi # ephemeral storage
    requests.nvidia.com/gpu: 2
    limits.cpu: 4
    limits.memory: 2Gi
    limits.ephemeral-storage: 10Gi
    limits.nvidia.com/gpu: 4

k create ns eeapbh

k apply -f sample-resourcequota-usable.yaml

k -n eeapbh describe resourcequotas sample-resourcequota-usable

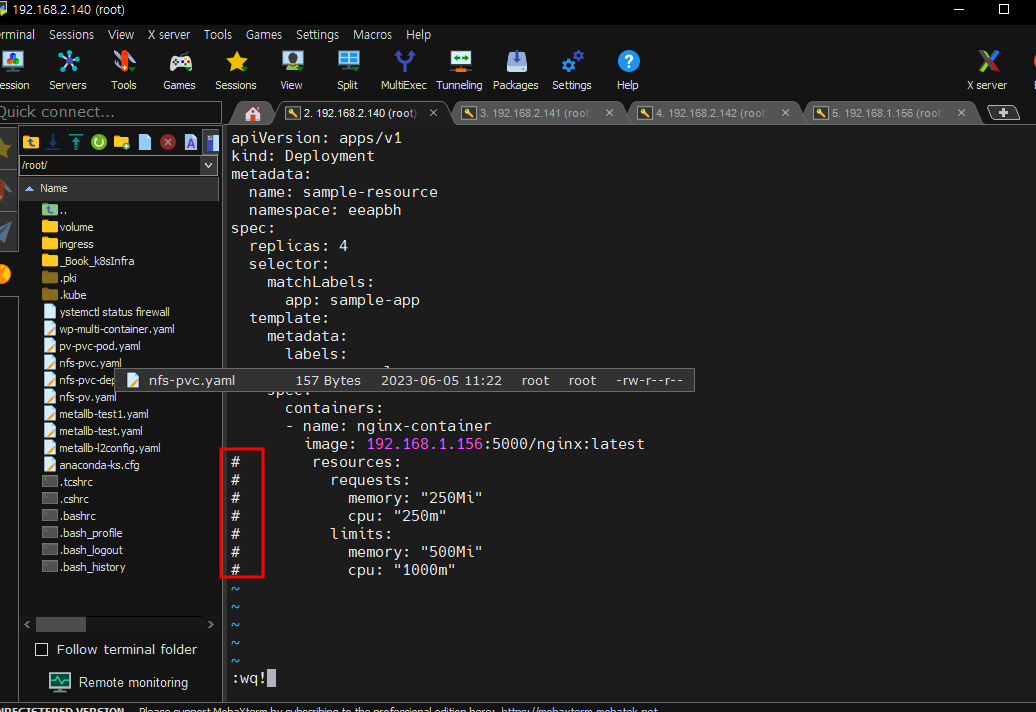

vi sample-resource.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-resource
  namespace: eeapbh
spec:
  replicas: 4
  selector:
    matchLabels:
      app: sample-app
  template:
    metadata:
      labels:
        app: sample-app
    spec:
      containers:
      - name: nginx-container
        image: 192.168.1.156:5000/nginx:latest
        resources:
          requests:
            memory: "250Mi"
            cpu: "250m"
          limits:
            memory: "500Mi"
            cpu: "1000m"
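
Before applying, it can help to check whether four replicas fit inside the quota from sample-resourcequota-usable.yaml. The following is a minimal sketch of that arithmetic in plain shell, not something from the original notes. The variable names are made up for illustration, and the quota values are converted by hand (1 CPU = 1000m, 1Gi = 1024Mi).

```shell
# Per-pod values from sample-resource.yaml (hypothetical variable names)
replicas=4
req_cpu_m=250;  req_mem_mi=250
lim_cpu_m=1000; lim_mem_mi=500

# Quota from sample-resourcequota-usable.yaml, converted by hand
quota_req_cpu_m=1000; quota_req_mem_mi=1024   # requests.cpu: 1, requests.memory: 1Gi
quota_lim_cpu_m=4000; quota_lim_mem_mi=2048   # limits.cpu: 4,   limits.memory: 2Gi

# Totals across all replicas
total_req_cpu_m=$((replicas * req_cpu_m))
total_req_mem_mi=$((replicas * req_mem_mi))
total_lim_cpu_m=$((replicas * lim_cpu_m))
total_lim_mem_mi=$((replicas * lim_mem_mi))

# The Deployment fits only if every total stays within its quota
fits=yes
[ "$total_req_cpu_m" -le "$quota_req_cpu_m" ] || fits=no
[ "$total_req_mem_mi" -le "$quota_req_mem_mi" ] || fits=no
[ "$total_lim_cpu_m" -le "$quota_lim_cpu_m" ] || fits=no
[ "$total_lim_mem_mi" -le "$quota_lim_mem_mi" ] || fits=no

echo "requests.cpu    ${total_req_cpu_m}m / ${quota_req_cpu_m}m"
echo "requests.memory ${total_req_mem_mi}Mi / ${quota_req_mem_mi}Mi"
echo "limits.cpu      ${total_lim_cpu_m}m / ${quota_lim_cpu_m}m"
echo "limits.memory   ${total_lim_mem_mi}Mi / ${quota_lim_mem_mi}Mi"
echo "fits: $fits"
```

With these numbers the four replicas use up requests.cpu and limits.cpu exactly, so any further pod in the namespace that requests CPU would exceed the quota.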

k apply -f sample-resource.yaml

vi sample-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: sample-pod
  namespace: eeapbh
spec:
  containers:
  - name: nginx-container
    image: 192.168.1.156:5000/nginx:latest

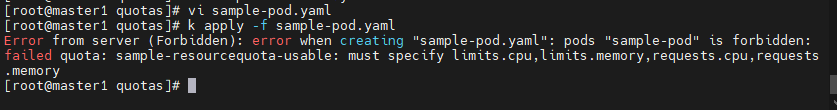

k apply -f sample-pod.yaml

- With this ResourceQuota in the namespace, limits.cpu, limits.memory, requests.cpu, and requests.memory must be defined (sample-pod.yaml sets none of them).

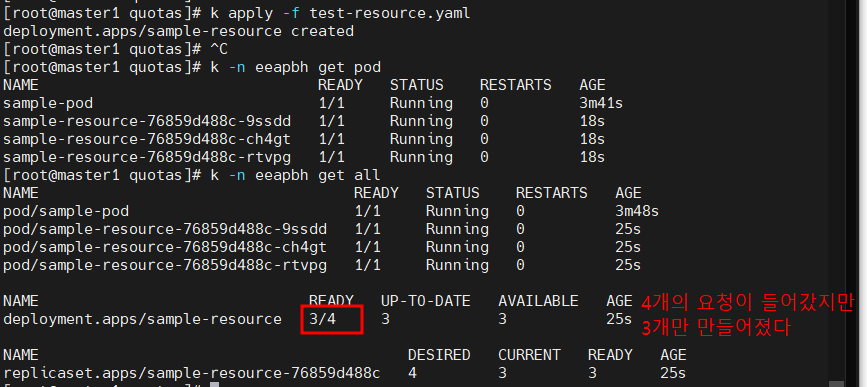

k -n eeapbh get all

k delete -f sample-resource.yaml

LimitRange

vi sample-limitrange-container.yaml

apiVersion: v1
kind: LimitRange
metadata:
  name: sample-limitrange-container
  namespace: eeapbh
spec:
  limits:
  - type: Container
    default: # limit
      memory: 500Mi
      cpu: 1000m
    defaultRequest: # request
      memory: 250Mi
      cpu: 250m
    max:
      memory: 500Mi
      cpu: 1000m
    min:
      memory: 250Mi
      cpu: 250m
    maxLimitRequestRatio: # maximum allowed limit/request ratio
      memory: 2
      cpu: 4
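
The maxLimitRequestRatio field caps how far a container's limit may exceed its request. As a rough sketch (the variable names are hypothetical and not part of the notes), the defaults in this LimitRange can be checked against the ratio with shell arithmetic:

```shell
# Values a container receives from the LimitRange defaults (m and Mi)
req_cpu_m=250;  lim_cpu_m=1000
req_mem_mi=250; lim_mem_mi=500

# maxLimitRequestRatio from the same file
max_ratio_cpu=4
max_ratio_mem=2

# limit <= request * ratio is the same test as limit/request <= ratio,
# written this way to avoid fractional division in the shell
cpu_ok=no
mem_ok=no
[ "$lim_cpu_m" -le $((req_cpu_m * max_ratio_cpu)) ] && cpu_ok=yes
[ "$lim_mem_mi" -le $((req_mem_mi * max_ratio_mem)) ] && mem_ok=yes

echo "cpu ratio ok: $cpu_ok, memory ratio ok: $mem_ok"
```

Both defaults sit exactly on the allowed ratio (1000m / 250m = 4 and 500Mi / 250Mi = 2), so a container that relies on the defaults passes the check.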

k apply -f sample-limitrange-container.yaml

k -n eeapbh apply -f sample-pod.yaml

k -n eeapbh describe pod sample-pod

cp sample-resource.yaml test-resource.yaml

vi test-resource.yaml

k apply -f test-resource.yaml

k -n eeapbh get pod

k -n eeapbh get all

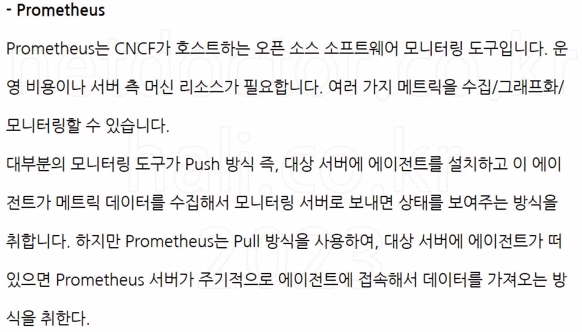

Prometheus

First, let's delete everything we created today.

k delete -f .

# Reads every YAML file in the current directory and deletes whatever resources it can

k delete ns eeapbh

k delete ns test-namespace

Chapter 3

@@ Pod scheduling (automatic placement)

k config set-context kubernetes-admin@kubernetes --namespace=default

k delete deployments.apps mysql-deploy

k delete deployments.apps wordpress-deploy

mkdir schedule && cd $_

# vi pod-schedule.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-schedule-metadata
  labels:
    app: pod-schedule-labels
spec:
  containers:
  - name: pod-schedule-containers
    image: 192.168.1.156:5000/nginx:latest
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: pod-schedule-service
spec:
  type: NodePort
  selector:
    app: pod-schedule-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

@@ Pod nodeName (manual placement)

# vi pod-nodename.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-nodename-metadata
  labels:
    app: pod-nodename-labels
spec:
  containers:
  - name: pod-nodename-containers
    image: 192.168.1.156:5000/nginx
    ports:
    - containerPort: 80
  nodeName: worker2
---
apiVersion: v1
kind: Service
metadata:
  name: pod-nodename-service
spec:
  type: NodePort
  selector:
    app: pod-nodename-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

k apply -f pod-nodename.yaml

- To list only the pods that carry a specific label:

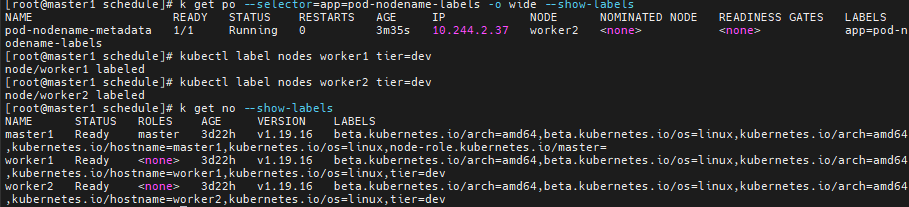

k get po --selector=app=pod-nodename-labels -o wide --show-labels

@@ Node selector (manual placement)

# kubectl label nodes worker1 tier=dev

# kubectl get nodes --show-labels

# vi pod-nodeselector.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-nodeselector-metadata
  labels:
    app: pod-nodeselector-labels
spec:
  containers:
  - name: pod-nodeselector-containers
    image: 192.168.1.156:5000/nginx
    ports:
    - containerPort: 80
  nodeSelector:
    tier: dev
---
apiVersion: v1
kind: Service
metadata:
  name: pod-nodeselector-service
spec:
  type: NodePort
  selector:
    app: pod-nodeselector-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

k apply -f pod-nodeselector.yaml

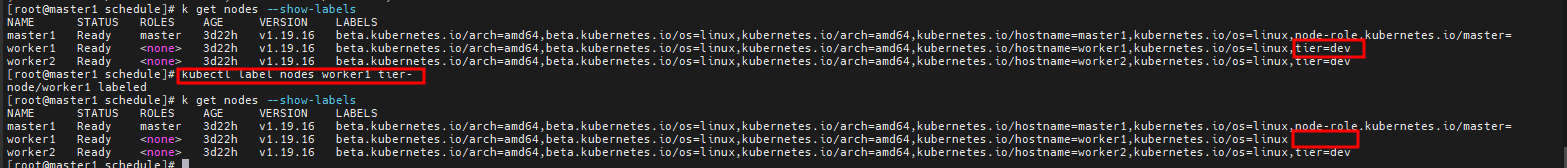

# kubectl label nodes worker1 tier- # remove the label

# kubectl get nodes --show-labels

@@ Taints and tolerations

# kubectl taint node worker1 tier=dev:NoSchedule # pods without a matching toleration are not scheduled onto worker1

# kubectl describe nodes worker1

# vi pod-taint.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-taint-metadata
  labels:
    app: pod-taint-labels
spec:
  containers:
  - name: pod-taint-containers
    image: 192.168.1.156:5000/nginx
    ports:
    - containerPort: 80
  tolerations:
  - key: "tier"
    operator: "Equal"
    value: "dev"
    effect: "NoSchedule"
---
apiVersion: v1
kind: Service
metadata:
  name: pod-taint-service
spec:
  type: NodePort
  selector:
    app: pod-taint-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
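
To make the matching rule between the taint on worker1 and the toleration in pod-taint.yaml more concrete, here is a simplified sketch in shell. The function `tolerates` is hypothetical and exists only for illustration. It models the Equal and Exists operators and leaves out the special case of an empty toleration key.

```shell
# tolerates TAINT_KEY TAINT_VALUE TAINT_EFFECT TOL_KEY TOL_OPERATOR TOL_VALUE TOL_EFFECT
# Returns 0 when the toleration matches the taint, 1 otherwise.
# Simplified model: an empty toleration key (match-all) is not handled.
tolerates() {
  [ "$1" = "$4" ] || return 1                  # keys must be identical
  [ -z "$7" ] || [ "$3" = "$7" ] || return 1   # an empty toleration effect matches any effect
  case "$5" in
    Exists) return 0 ;;                        # Exists ignores the value
    Equal)  [ "$2" = "$6" ] ;;                 # Equal also compares the value
    *)      return 1 ;;
  esac
}

# Taint tier=dev:NoSchedule against the toleration from pod-taint.yaml
if tolerates tier dev NoSchedule tier Equal dev NoSchedule; then
  echo "pod-taint-metadata tolerates tier=dev:NoSchedule"
fi

# A different value does not match under Equal
if ! tolerates tier dev NoSchedule tier Equal prod NoSchedule; then
  echo "a toleration for tier=prod does not match"
fi
```

A matching toleration only allows the pod onto the tainted node. It does not force the pod there, so the scheduler may still pick another node.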

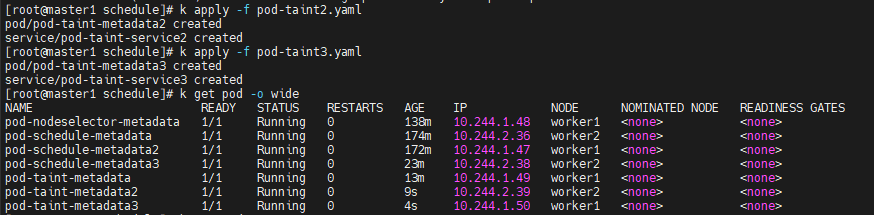

k apply -f pod-taint.yaml

cp pod-taint.yaml pod-taint2.yaml

cp pod-taint.yaml pod-taint3.yaml

k apply -f pod-taint2.yaml

k apply -f pod-taint3.yaml

# kubectl taint node worker1 tier=dev:NoSchedule-

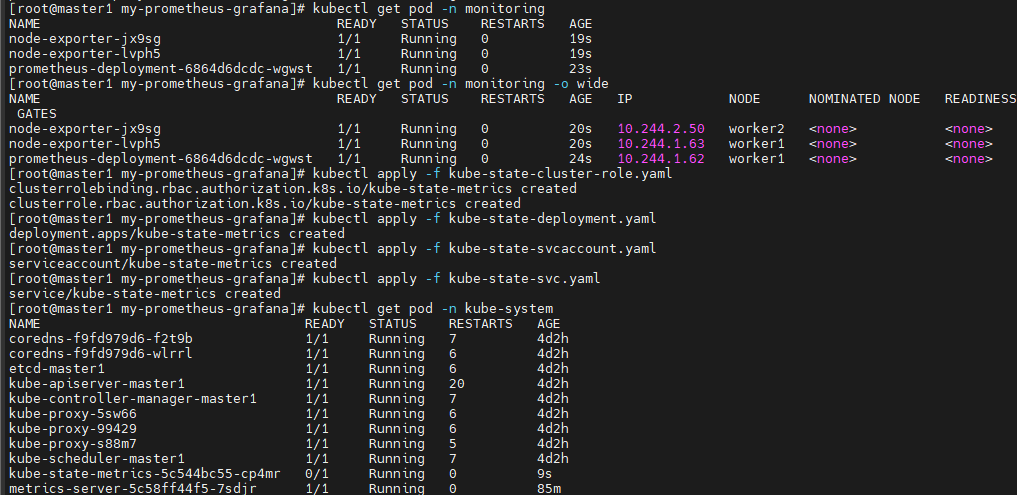

Kubernetes, Prometheus, Grafana, and autoscaling

kubectl top node

kubectl top pod

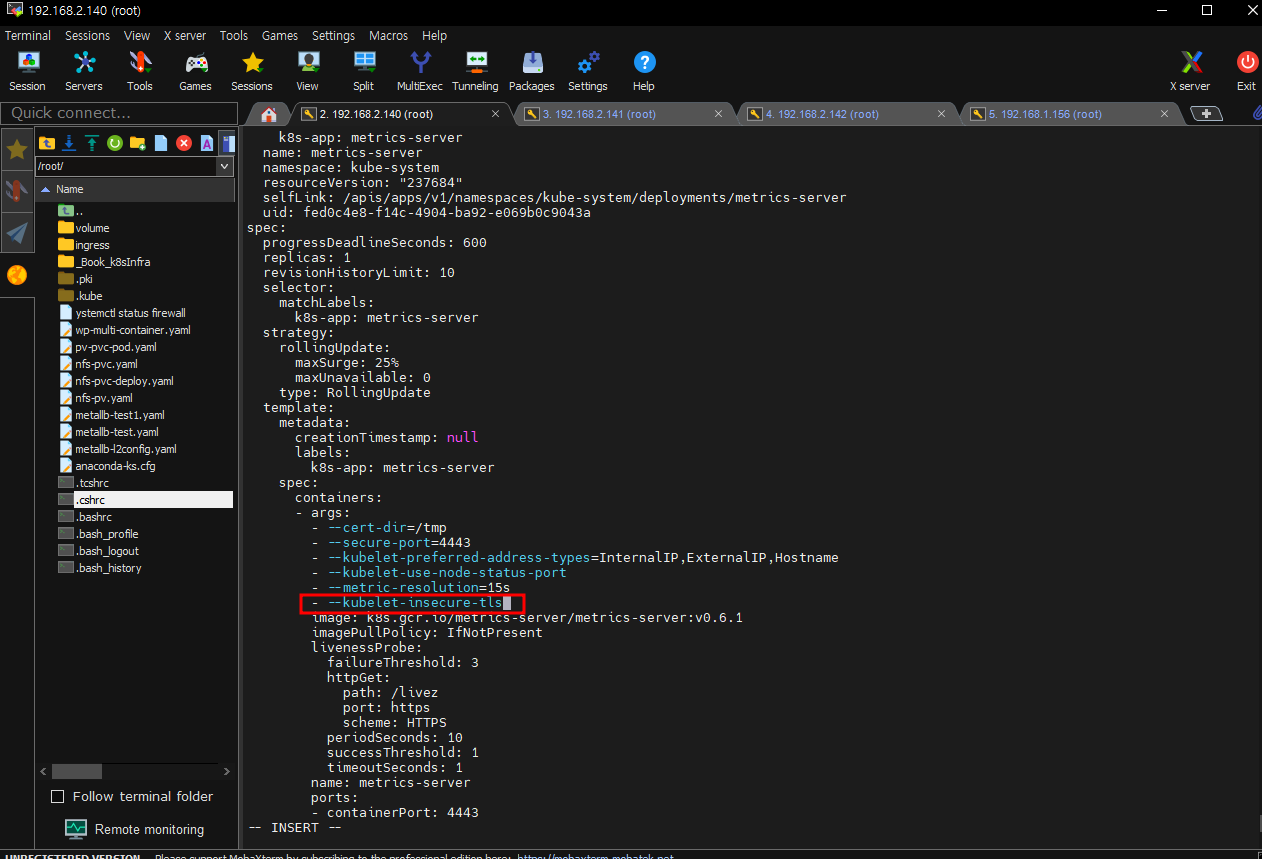

--- metric-server

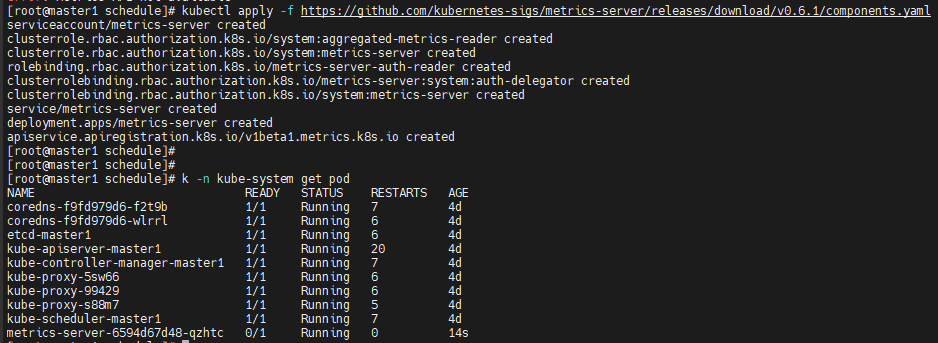

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.1/components.yaml

# A feature of the edit command: changes are applied as soon as they are saved

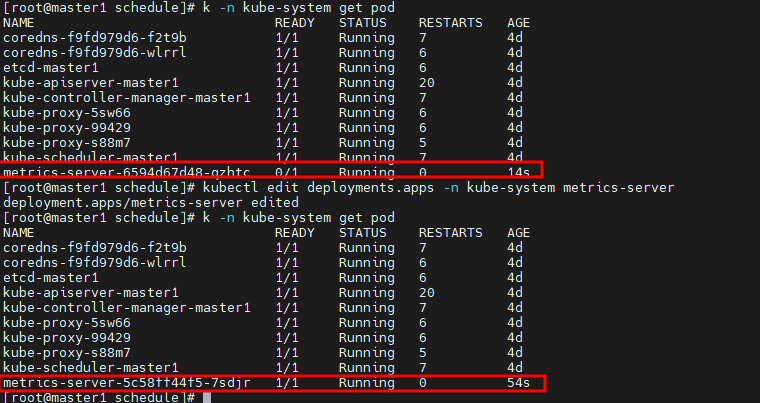

kubectl edit deployments.apps -n kube-system metrics-server

--kubelet-insecure-tls

k -n kube-system get pod

kubectl top node

kubectl top pod

- Add the flag above

- The existing pod is deleted and a new one is created

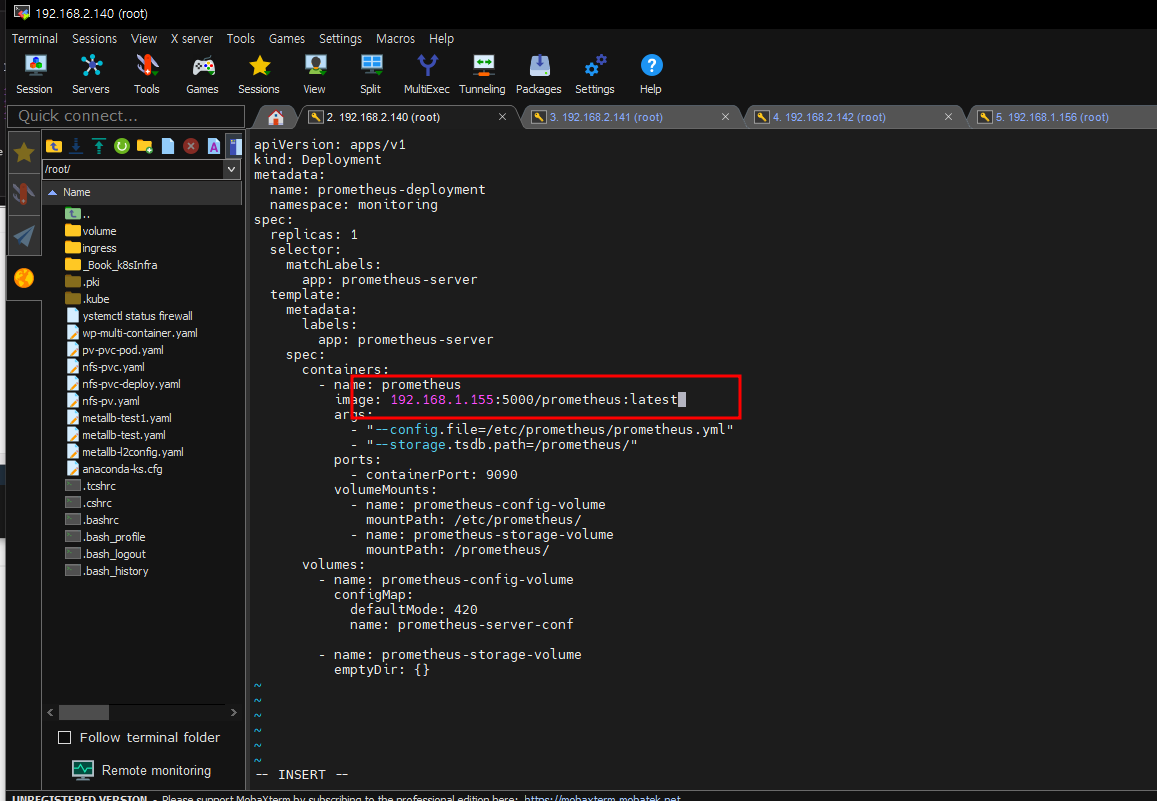

vi prometheus-deployment.yaml


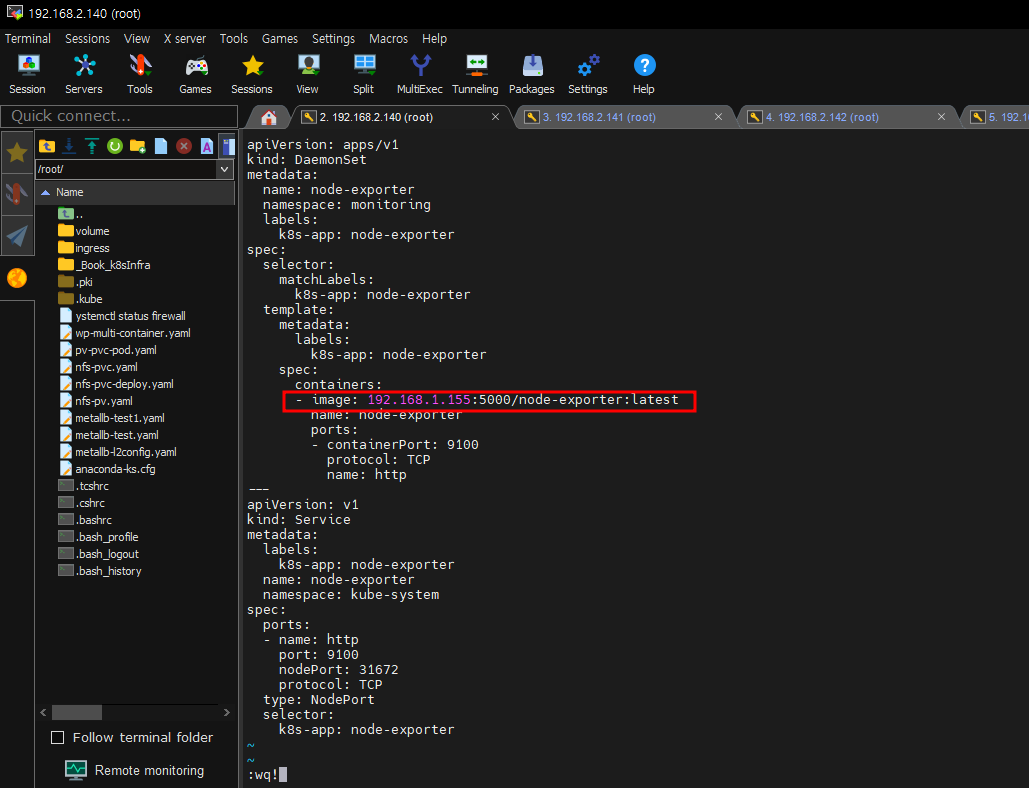

vi prometheus-node-exporter.yaml

The images all have to be pulled, tagged, and pushed to the private registry.

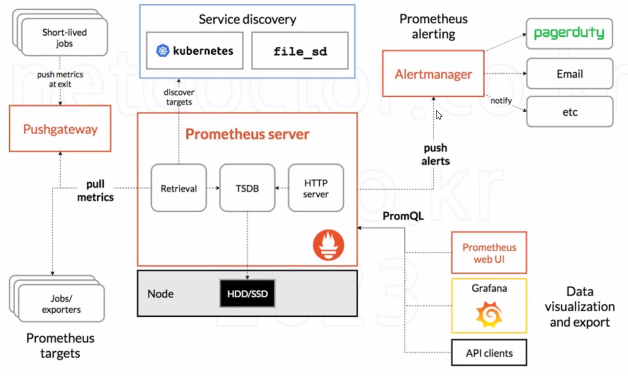

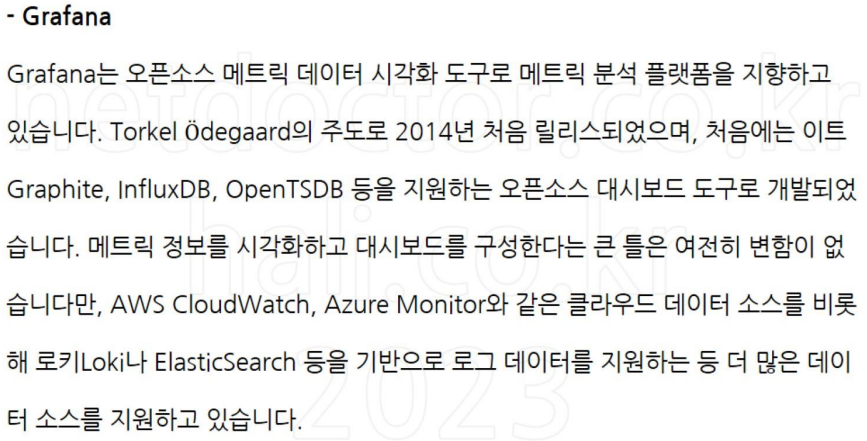

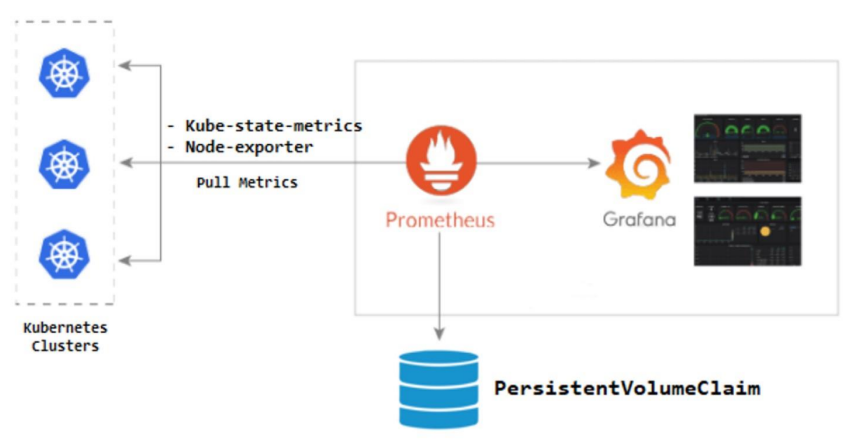

Installing Grafana

kubectl apply -f grafana.yaml

kubectl get pod -n monitoring

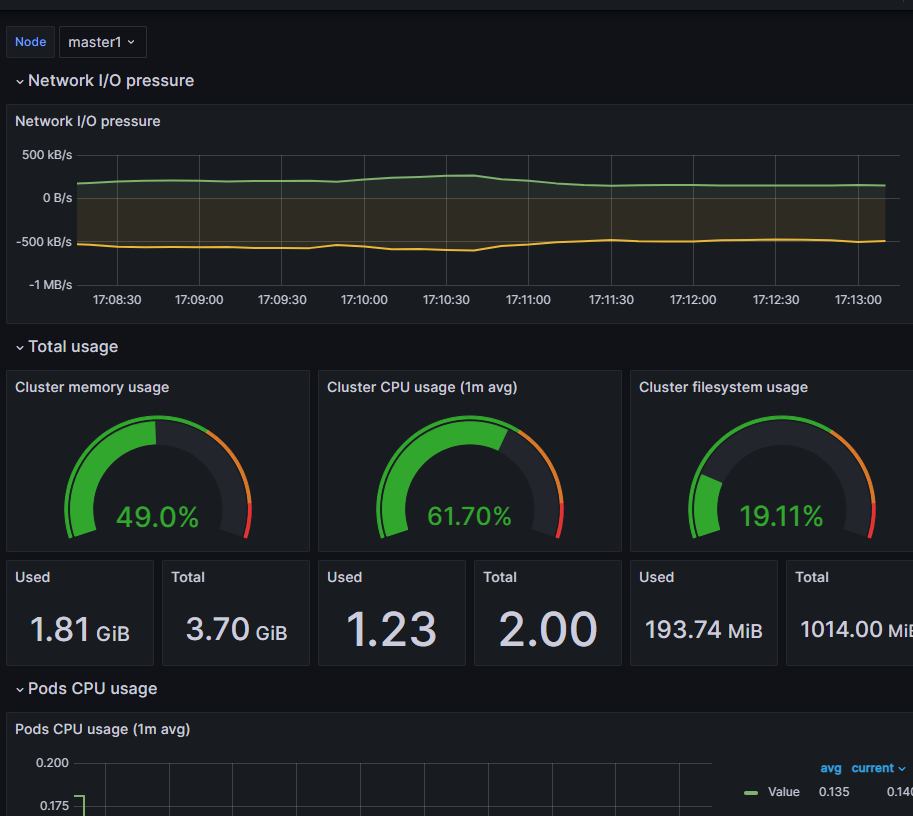

https://grafana.com/grafana/dashboards/

- Prometheus is linked

- Click Dashboards

- Click New -> Import

- Enter 315 and click Load

- The Prometheus data source linked earlier appears -> click Import

- Now the cluster can be monitored

yes > /dev/null &

- CPU usage increases
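
Since the load generator keeps running in the background until it is stopped, the sketch below keeps track of its PID and kills it afterwards. This is an addition to the notes, and the variable name `load_pid` is arbitrary.

```shell
# Start the CPU load in the background and remember its PID
yes > /dev/null &
load_pid=$!

# In practice, leave it running long enough to watch `kubectl top node`
sleep 1

# Stop the load so it does not keep a core busy
kill "$load_pid"
wait "$load_pid" 2>/dev/null || true
echo "load generator $load_pid stopped"
```

In an interactive shell, `kill %1` does the same for the first background job.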