k describe nodes worker1 | grep -i Taints

k describe nodes worker2 | grep -i Taints

k describe nodes master1 | grep -i Taints

k taint node worker1 tier=dev:NoSchedule

k taint node worker2 tier=dev:NoSchedule

k delete all --all

k apply -f pod-schedule.yaml

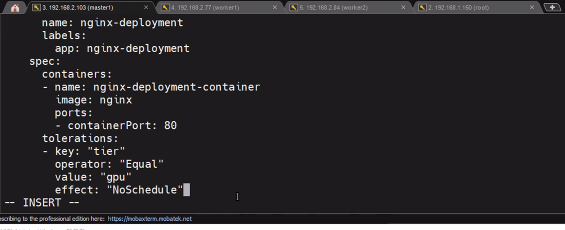


$ vi deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment3
spec:
  replicas: 4
  selector:
    matchLabels:
      app: nginx-deployment3
  template:
    metadata:
      name: nginx-deployment3
      labels:
        app: nginx-deployment3
    spec:
      containers:
      - name: nginx-deployment-container3
        image: 192.168.1.156:5000/nginx:latest
      tolerations:
      - key: "tier"
        operator: "Equal"
        value: "gpu"
        effect: "NoSchedule"

k apply -f deployment.yaml

- Untaint worker1 (appending - to the taint spec removes it)

kubectl taint node worker1 tier=dev:NoSchedule-

- Untaint master1

kubectl taint node master1 node-role.kubernetes.io/master:NoSchedule-
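The toleration in deployment.yaml above uses value "gpu", so it does not match the taint tier=dev:NoSchedule put on worker1 and worker2 at the start. Assuming the cluster has only these three nodes, and master1 carries its own master taint, the pods stay Pending until a taint is removed, which is what the untaint steps do. For comparison, a toleration that would match the worker taint looks like the fragment below (a piece of the pod template's spec, not a full manifest).

```yaml
# Fragment of spec.template.spec in a Deployment (sketch, not a full manifest).
# Tolerates the taint added with: k taint node worker1 tier=dev:NoSchedule
tolerations:
- key: "tier"
  operator: "Equal"
  value: "dev"          # with operator Equal, this must equal the taint's value
  effect: "NoSchedule"
```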

cordon, uncordon

The cordon command stops any new pods from being scheduled onto the specified node; pods already running there are left alone.

kubectl cordon worker2

kubectl get no

kubectl apply -f deployment_service.yaml

kubectl get po -o wide # pods are scheduled only on worker1

kubectl uncordon worker2
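Under the hood, kubectl cordon sets spec.unschedulable to true on the Node object, and kubectl uncordon clears it again; kubectl get no reports a cordoned node as Ready,SchedulingDisabled. In a cluster of this version the node controller also adds a matching NoSchedule taint, so on a cordoned node kubectl get node worker2 -o yaml should show roughly the trimmed fragment below (other fields omitted).

```yaml
# Trimmed output of: kubectl get node worker2 -o yaml (while cordoned)
apiVersion: v1
kind: Node
metadata:
  name: worker2
spec:
  unschedulable: true      # set by kubectl cordon, cleared by kubectl uncordon
  taints:
  - key: node.kubernetes.io/unschedulable
    effect: NoSchedule     # added automatically while the node is unschedulable
```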

Let's test whether a pod can get past the cordon.

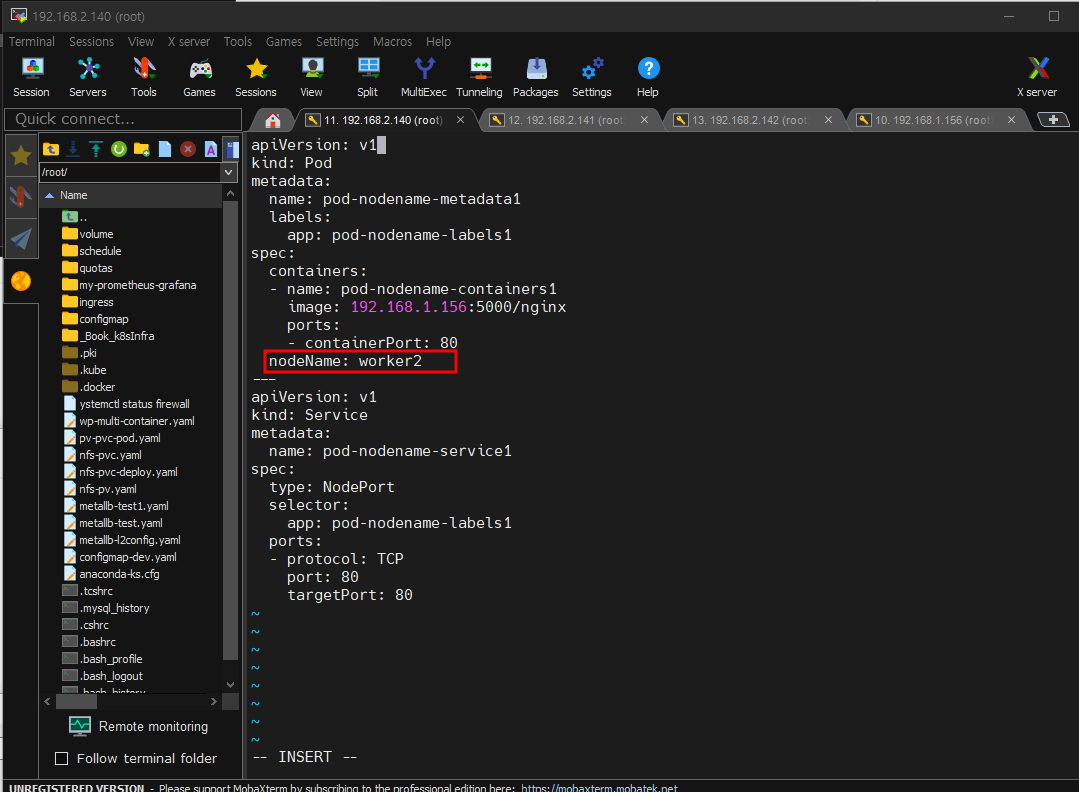

vi pod-nodename.yaml
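The contents of pod-nodename.yaml appear only in the screenshot. A minimal sketch of such a file, assuming it pins the pod to a node with spec.nodeName (the pod name and target node below are hypothetical), is shown here. Because nodeName assigns the pod directly and skips the scheduler, a cordon, which only tells the scheduler to avoid the node, does not stop it.

```yaml
# pod-nodename.yaml: hypothetical sketch; the real file is only in the screenshot
apiVersion: v1
kind: Pod
metadata:
  name: pod-nodename        # hypothetical name
spec:
  nodeName: worker2         # assumption: the cordoned node; the scheduler is bypassed
  containers:
  - name: nginx
    image: nginx
```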

k apply -f pod-nodename.yaml

Confirmed: the pod gets past the cordon without any trouble.

drain, uncordon

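The drain command moves all pods on the specified node to other nodes. In practice it feels more like delete-and-recreate: drain cordons the node and evicts its pods, and their controllers (e.g. a Deployment's ReplicaSet) create replacement pods on other nodes.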

kubectl drain worker1 --ignore-daemonsets --force --delete-local-data

kubectl get po -o wide

kubectl get no

kubectl uncordon worker1

kubectl get no

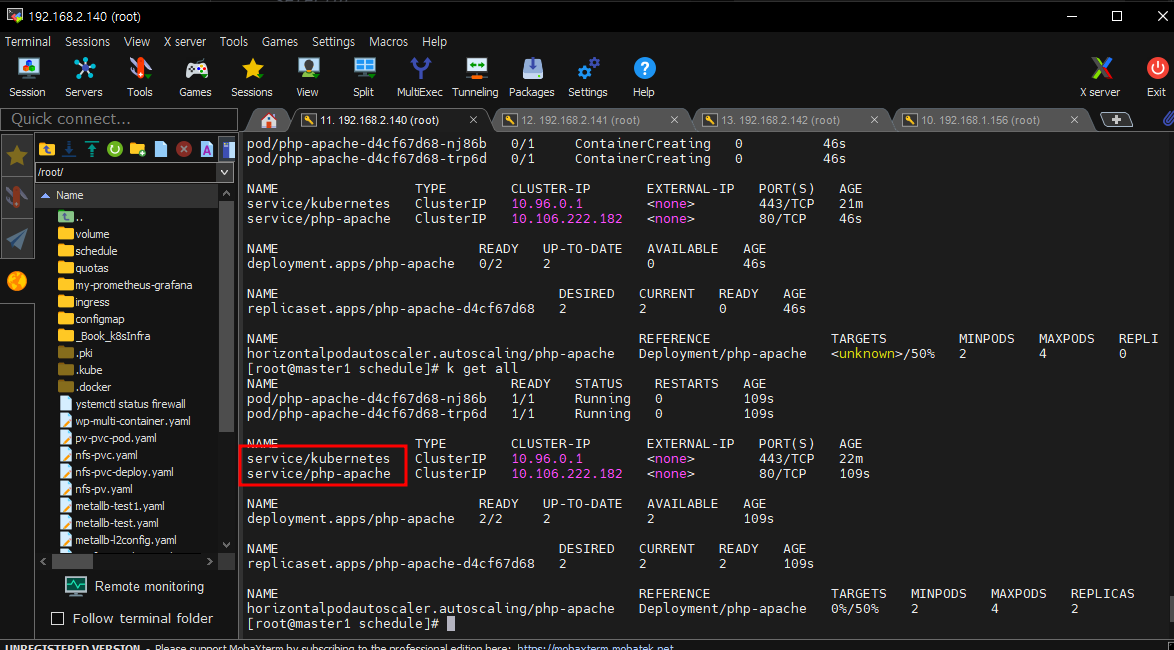

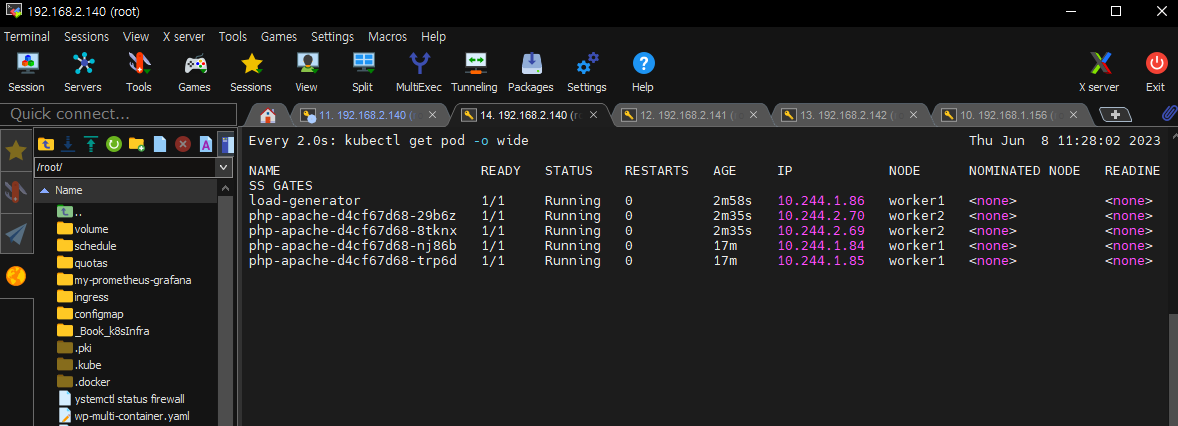

Autoscaling lab (HPA: Horizontal Pod Autoscaler)

vi php-apache.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: php-apache
spec:
  selector:
    matchLabels:
      run: php-apache
  replicas: 2 # desired capacity: number of pods to start with
  template:
    metadata:
      labels:
        run: php-apache
    spec:
      containers:
      - name: php-apache
        image: k8s.gcr.io/hpa-example
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 500m
          requests:
            cpu: 200m
---
apiVersion: v1
kind: Service
metadata:
  name: php-apache
  labels:
    run: php-apache
spec:
  ports:
  - port: 80
  selector:
    run: php-apache

k apply -f php-apache.yaml

vi hpa.yaml

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
spec:
  maxReplicas: 4
  minReplicas: 2
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  targetCPUUtilizationPercentage: 50
status: # filled in by the HPA controller, not something you set yourself
  currentCPUUtilizationPercentage: 0
  currentReplicas: 2
  desiredReplicas: 2

kubectl apply -f hpa.yaml

kubectl get all

- Between pods, the Service name (php-apache here) works as a domain name.

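- Duplicate the master tab. In the new tab, the following shows the changes in real time, refreshed every 2 seconds:

watch kubectl get pod -o wide

Then go back to the original master tab: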

docker pull busybox:1.28

docker tag busybox:1.28 192.168.1.156:5000/busybox:1.28

docker push 192.168.1.156:5000/busybox:1.28

- Generate load

kubectl run -i --tty load-generator --rm --image=192.168.1.156:5000/busybox:1.28 --restart=Never -- /bin/sh -c "while sleep 0.01; do wget -q -O- http://php-apache; done"

- Pods get added.

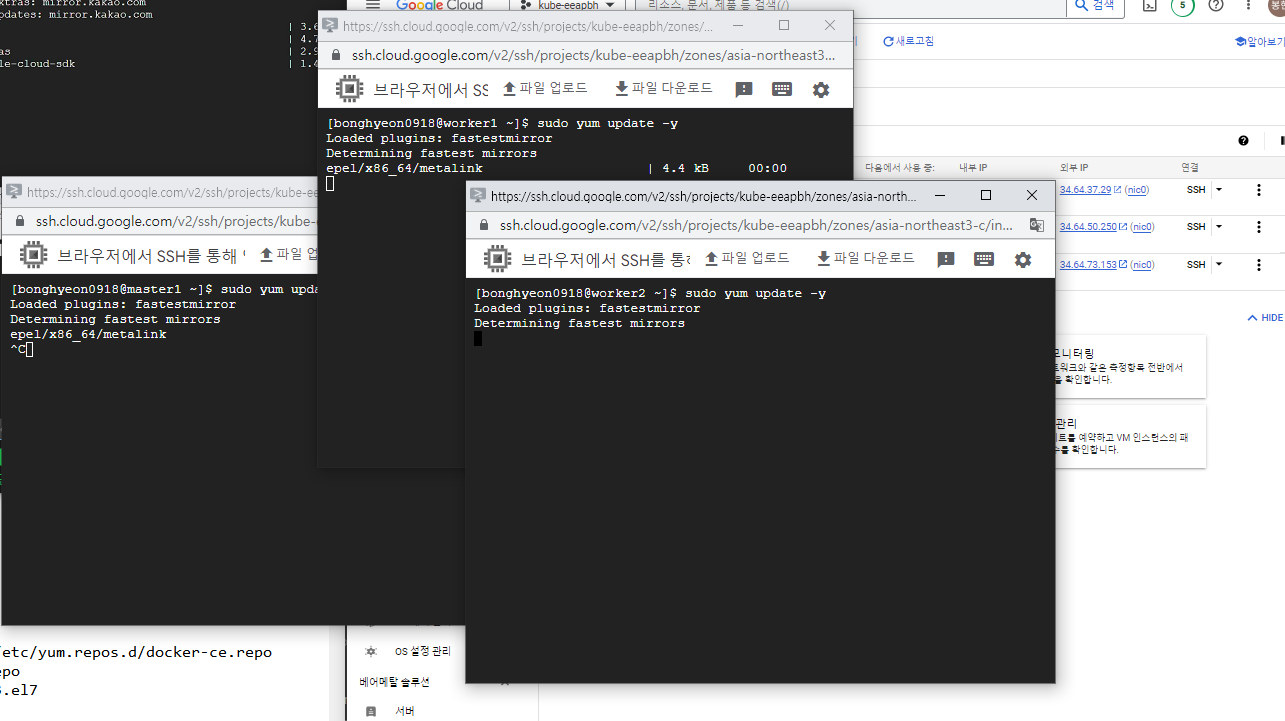
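The pods get added because of the HPA controller's scaling rule, documented by Kubernetes as the ratio of the current metric to the target. Utilization is measured against the CPU request (200m), not the 500m limit. A worked example with the numbers from hpa.yaml (target 50%, minReplicas 2, maxReplicas 4), assuming the load pushes average CPU to a hypothetical 150% of the request:

$$
\text{desiredReplicas} = \left\lceil \text{currentReplicas} \times \frac{\text{currentUtilization}}{\text{targetUtilization}} \right\rceil
$$

$$
\left\lceil 2 \times \frac{150}{50} \right\rceil = 6 \quad\Rightarrow\quad \min(6,\ \text{maxReplicas}) = \min(6,\ 4) = 4
$$

So the Deployment is capped at 4 pods. When the load generator stops, the same rule drives the count back toward minReplicas, after a downscale stabilization window (5 minutes by default).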

GCP

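Create a new project and create the VMs.

- Create master1

Create worker1 and worker2 the same way, in availability zones b and c respectively.

- Connect via SSH and update everything.

The rest of the work is done in MobaXterm.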

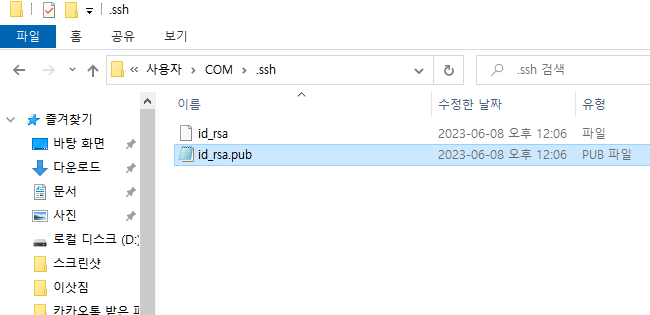

- In a Windows 10 command prompt (cmd):

ssh-keygen

Press Enter three times to accept the defaults.

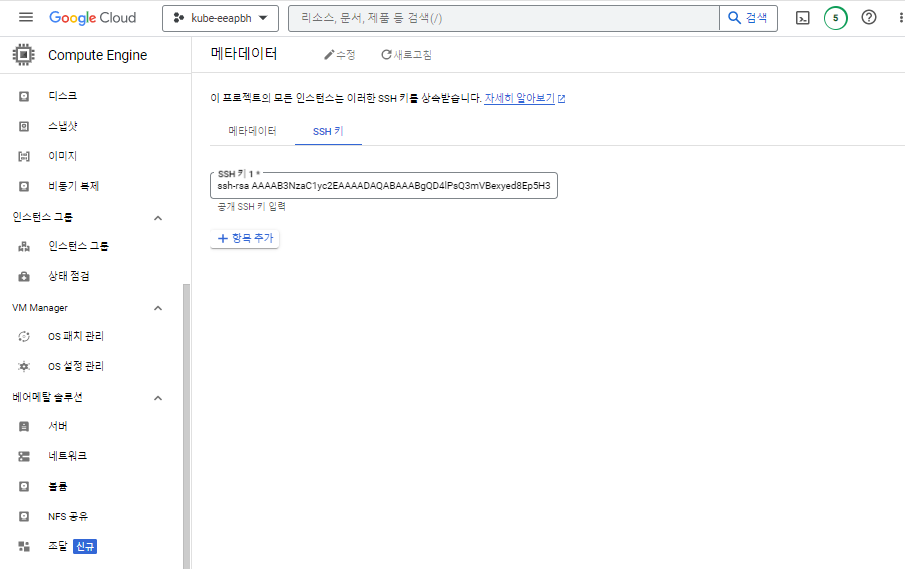

- In GCP, go to Metadata -> SSH Keys and paste in the contents of id_rsa.pub.

Connect with MobaXterm, then:

--- All Nodes ---

sudo su - root

# cat <<EOF >> /etc/hosts
10.178.0.2 master1
10.178.0.3 worker1
10.178.0.4 worker2
EOF

curl https://download.docker.com/linux/centos/docker-ce.repo -o /etc/yum.repos.d/docker-ce.repo

setenforce 0

vi /etc/selinux/config

# -> change SELINUX=enforcing to SELINUX=disabled

systemctl status firewalld

# sed -i -e "s/enabled=1/enabled=0/g" /etc/yum.repos.d/docker-ce.repo

# yum --enablerepo=docker-ce-stable -y install docker-ce-19.03.15-3.el7

# mkdir /etc/docker

# cat <<EOF | sudo tee /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF

# systemctl enable --now docker

# systemctl daemon-reload

# systemctl restart docker

# systemctl disable --now firewalld

# setenforce 0

# sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

# swapoff -a

# sed -i '/ swap / s/^/#/' /etc/fstab # keep swap off after reboot (swap hurts performance, and kubelet will not start with swap on by default)

# cat <<EOF > /etc/sysctl.d/k8s.conf # kubernetes
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

# sysctl --system

# reboot

Reconnect after the reboot.

sudo su - root

--- Kubeadm installation (multi-node: master node, worker nodes)

# cat <<'EOF' > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-$basearch
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF

# yum -y install kubeadm-1.19.16-0 kubelet-1.19.16-0 kubectl-1.19.16-0 --disableexcludes=kubernetes

# systemctl enable --now kubelet

master1

kubeadm init --apiserver-advertise-address=10.178.0.2 --pod-network-cidr=10.244.0.0/16
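The two flags on kubeadm init can also be written as a kubeadm configuration file and passed with kubeadm init --config. A sketch for this v1.19 cluster, assuming the kubeadm.k8s.io/v1beta2 config API that kubeadm 1.19 reads; the file name kubeadm-config.yaml is hypothetical.

```yaml
# kubeadm-config.yaml (hypothetical name); use with: kubeadm init --config kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.178.0.2    # same as --apiserver-advertise-address
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.19.16
networking:
  podSubnet: 10.244.0.0/16        # same as --pod-network-cidr; flannel's default network
```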

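- Copy and paste the commands that kubeadm init prints (kubeconfig setup on master1, kubeadm join on the workers).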

kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

- Everything comes up fine.

mkdir workspace && cd $_

kubectl run nginx-pod --image=nginx

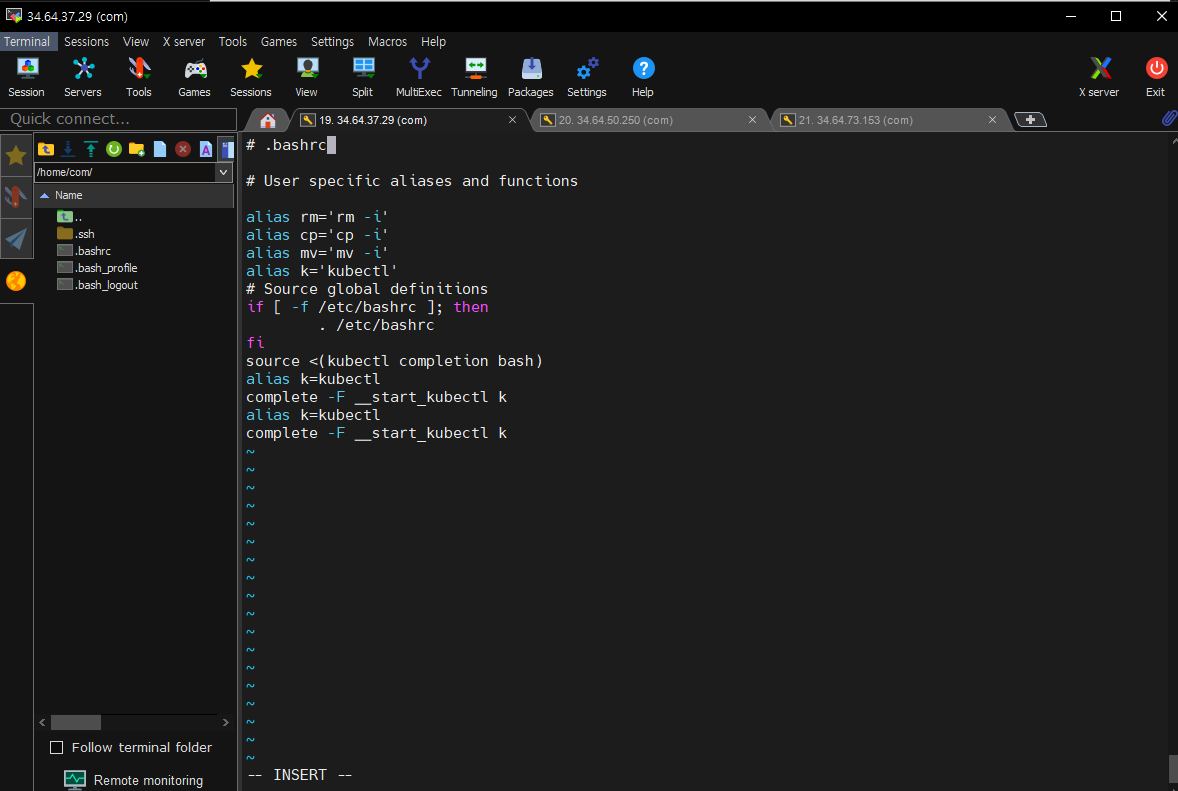

vi ~/.bashrc

alias k=kubectl

complete -o default -F __start_kubectl k

kubectl expose pod nginx-pod --name loadbalancer --type=LoadBalancer --external-ip 10.178.0.3 --port 80
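For reference, the kubectl expose command above corresponds roughly to the Service manifest below. This assumes the pod carries the label run=nginx-pod, which kubectl run adds by default and which expose copies into the selector; with no --target-port, the target port defaults to --port.

```yaml
# Approximate equivalent of:
# kubectl expose pod nginx-pod --name loadbalancer --type=LoadBalancer --external-ip 10.178.0.3 --port 80
apiVersion: v1
kind: Service
metadata:
  name: loadbalancer
spec:
  type: LoadBalancer
  externalIPs:
  - 10.178.0.3          # traffic to this IP on port 80 is forwarded to the pod
  selector:
    run: nginx-pod      # assumption: label added by kubectl run
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```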

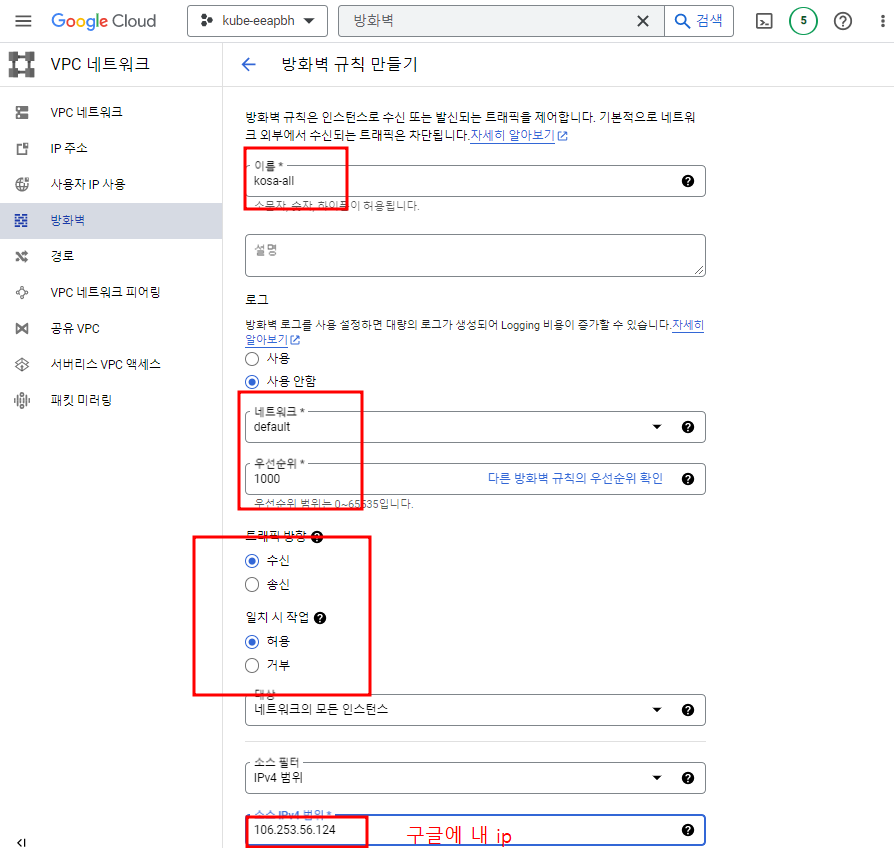

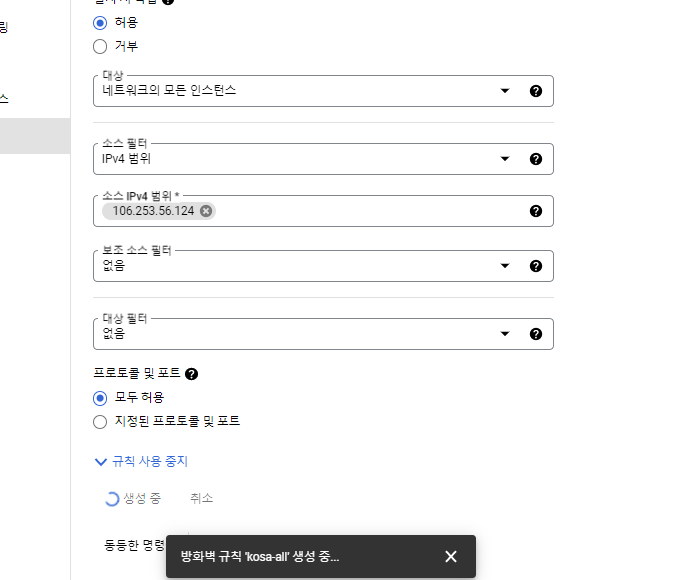

Create a firewall rule

Then click Create.

A ReplicaSet keeps the desired number of pods running just like a Deployment, but it cannot do rolling updates on its own.
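A minimal ReplicaSet has the same selector and template fields as a Deployment; what it lacks is the rollout machinery (update strategy, revision history, kubectl rollout undo). A sketch in the style of this lab; the name nginx-replicaset and its label are hypothetical.

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: nginx-replicaset      # hypothetical name
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-replicaset
  template:
    metadata:
      labels:
        app: nginx-replicaset
    spec:
      containers:
      - name: nginx
        image: nginx
# Changing the image in this template does not replace the running pods;
# only pods created afterwards (e.g. after a pod is deleted) use the new image.
```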

master

git clone https://github.com/hali-linux/_Book_k8sInfra.git

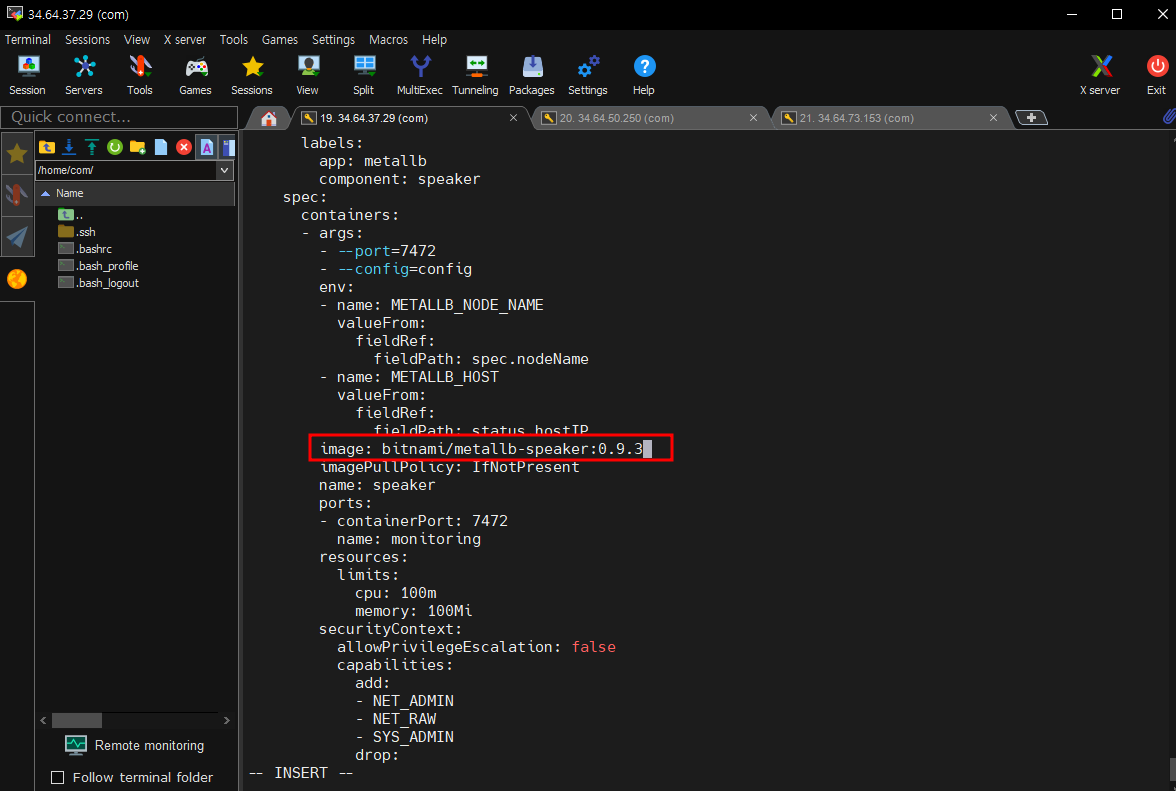

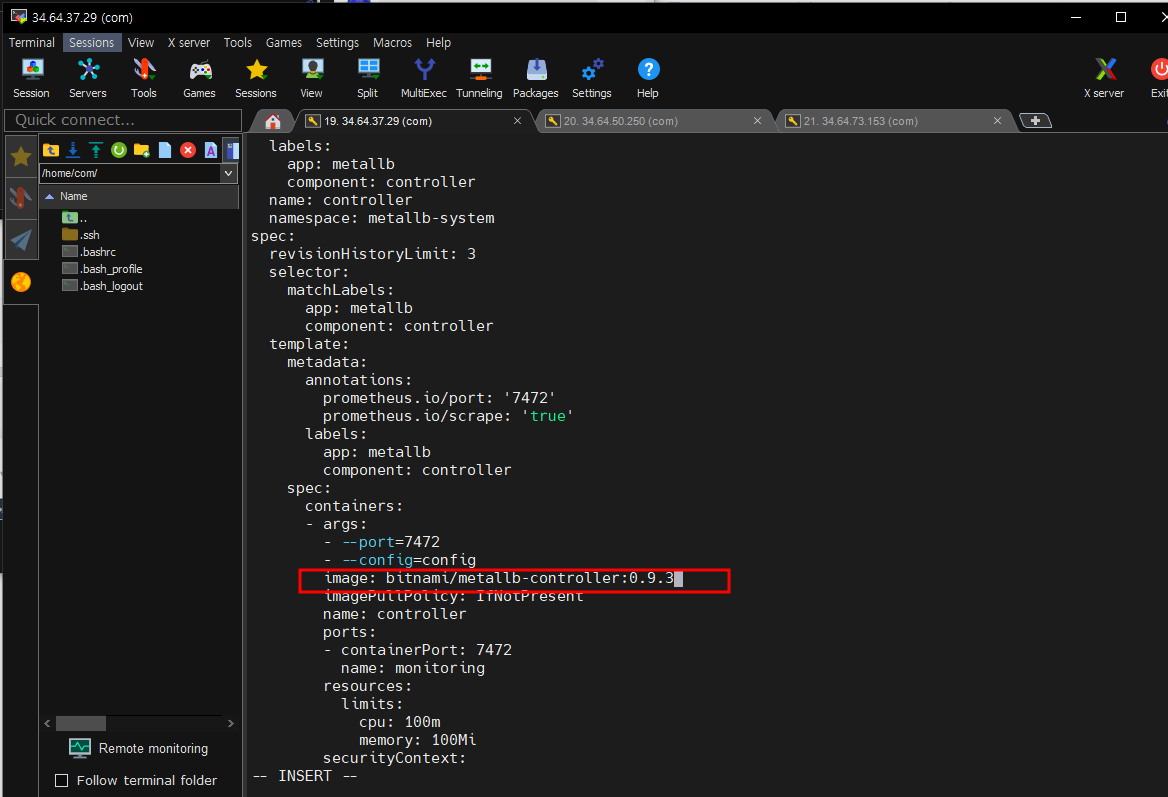

vi /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml

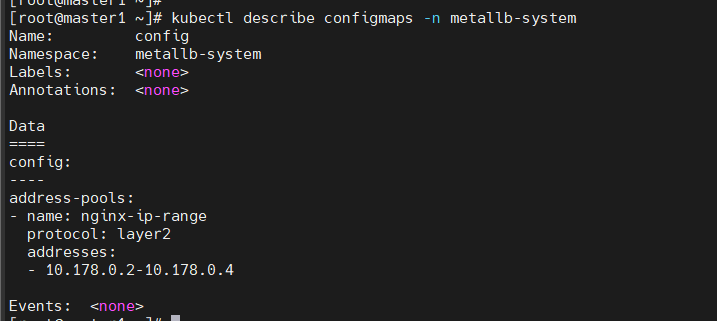

# vi metallb-l2config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: nginx-ip-range
      protocol: layer2
      addresses:
      - 10.178.0.2-10.178.0.4
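The ConfigMap above is the configuration format MetalLB used before version 0.13. Newer releases moved to custom resources; assuming such a release, an equivalent for the same address range would look roughly like this (the L2Advertisement name nginx-l2 is hypothetical).

```yaml
# Sketch for MetalLB 0.13+ (CRD-based config); not used by the older release in this lab
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: nginx-ip-range
  namespace: metallb-system
spec:
  addresses:
  - 10.178.0.2-10.178.0.4
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: nginx-l2            # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
  - nginx-ip-range          # announce addresses from this pool in layer 2 mode
```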

k delete all --all

vi deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 4
  selector:
    matchLabels:
      app: nginx-deployment
  template:
    metadata:
      name: nginx-deployment
      labels:
        app: nginx-deployment
    spec:
      containers:
      - name: nginx-deployment-container
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: loadbalancer-service-deployment
spec:
  type: LoadBalancer
  # externalIPs:
  # - 192.168.0.143
  selector:
    app: nginx-deployment
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

k apply -f deployment.yaml

k get all -o wide

curl ipconfig.io

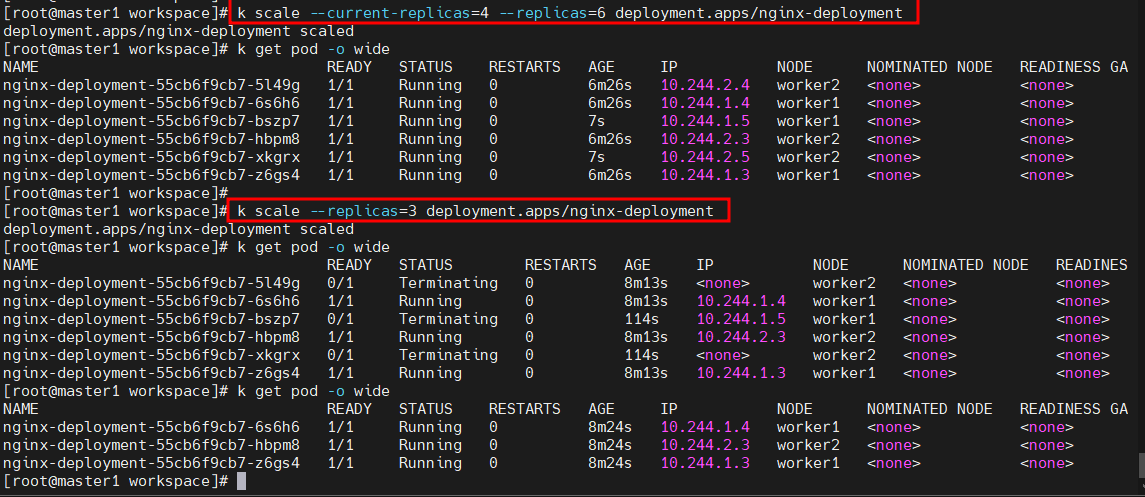

- Try scaling out

k scale --current-replicas=4 --replicas=6 deployment.apps/nginx-deployment

k get pod -o wide

- Try scaling in

k scale --replicas=3 deployment.apps/nginx-deployment

- Try changing the image

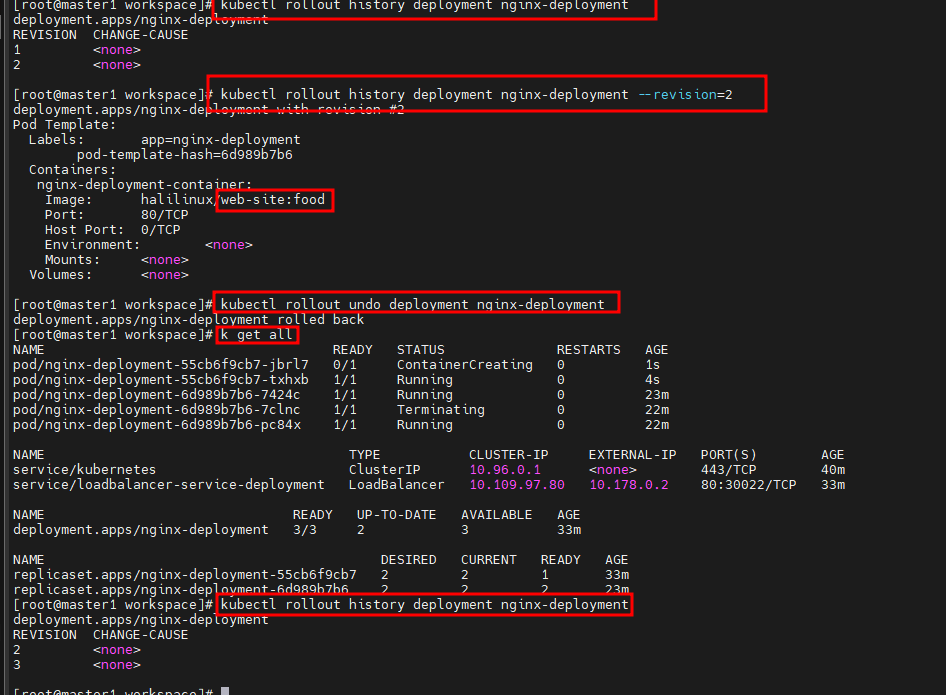

kubectl set image deployment.apps/nginx-deployment nginx-deployment-container=halilinux/web-site:food

- Roll back to the previous version

kubectl rollout undo deployment.apps/nginx-deployment

multi-container

vi multipod.yaml

apiVersion: v1

kind: Pod

metadata:

name: multipod

spec:

containers:

- name: nginx-container #1번째 컨테이너

image: nginx:1.14

ports:

- containerPort: 80

- name: centos-container #2번째 컨테이너

image: centos:7

command:

- sleep

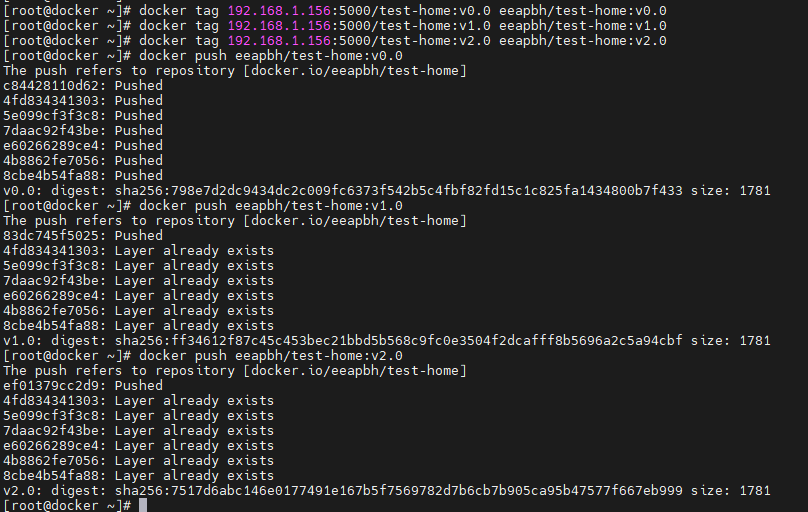

- "10000"docker 에서 push작업하고

Ingress

# kubectl get pods -n ingress-nginx

# mkdir ingress && cd $_

# vi ingress-deploy.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: foods-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: foods-deploy
  template:
    metadata:
      labels:
        app: foods-deploy
    spec:
      containers:
      - name: foods-deploy
        image: eeapbh/test-home:v1.0
---
apiVersion: v1
kind: Service
metadata:
  name: foods-svc
spec:
  type: ClusterIP
  selector:
    app: foods-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sales-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sales-deploy
  template:
    metadata:
      labels:
        app: sales-deploy
    spec:
      containers:
      - name: sales-deploy
        image: eeapbh/test-home:v2.0
---
apiVersion: v1
kind: Service
metadata:
  name: sales-svc
spec:
  type: ClusterIP
  selector:
    app: sales-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: home-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: home-deploy
  template:
    metadata:
      labels:
        app: home-deploy
    spec:
      containers:
      - name: home-deploy
        image: eeapbh/test-home:v0.0
---
apiVersion: v1
kind: Service
metadata:
  name: home-svc
spec:
  type: ClusterIP
  selector:
    app: home-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

# kubectl apply -f ingress-deploy.yaml

# kubectl get all

# vi ingress-config.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-nginx
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /foods
        backend:
          serviceName: foods-svc
          servicePort: 80
      - path: /sales
        backend:
          serviceName: sales-svc
          servicePort: 80
      - path:
        backend:
          serviceName: home-svc
          servicePort: 80
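The networking.k8s.io/v1beta1 Ingress API was removed in Kubernetes 1.22. On a newer cluster the same rules would be written against networking.k8s.io/v1, where every path needs a pathType and the backend moves under service.name and service.port. A sketch, assuming an IngressClass named nginx (check with kubectl get ingressclass) and using path / with pathType Prefix in place of the empty catch-all path:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx        # assumption: name of the installed IngressClass
  rules:
  - http:
      paths:
      - path: /foods
        pathType: Prefix
        backend:
          service:
            name: foods-svc
            port:
              number: 80
      - path: /sales
        pathType: Prefix
        backend:
          service:
            name: sales-svc
            port:
              number: 80
      - path: /                  # catch-all, replaces the empty path
        pathType: Prefix
        backend:
          service:
            name: home-svc
            port:
              number: 80
```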

# kubectl apply -f ingress-config.yaml

# vi ingress-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
spec:
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
  - name: https
    protocol: TCP
    port: 443
    targetPort: 443
  selector:
    app.kubernetes.io/name: ingress-nginx
  type: LoadBalancer
  # externalIPs:
  # - 192.168.2.0

# kubectl apply -f ingress-service.yaml

--- Volume

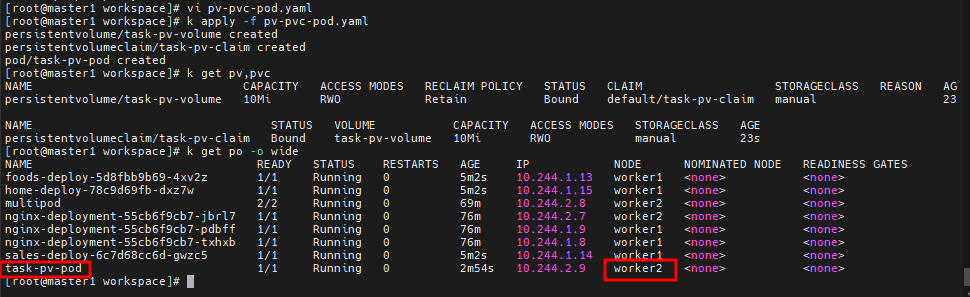

- pv/pvc

# pv-pvc-pod.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 10Mi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: "/mnt/data"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim
spec:
  storageClassName: manual
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Mi
  selector:
    matchLabels:
      type: local
---
apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod
  labels:
    app: task-pv-pod
spec:
  volumes:
  - name: task-pv-storage
    persistentVolumeClaim:
      claimName: task-pv-claim
  containers:
  - name: task-pv-container
    image: nginx
    ports:
    - containerPort: 80
      name: "http-server"
    volumeMounts:
    - mountPath: "/usr/share/nginx/html"
      name: task-pv-storage

k get pv,pvc

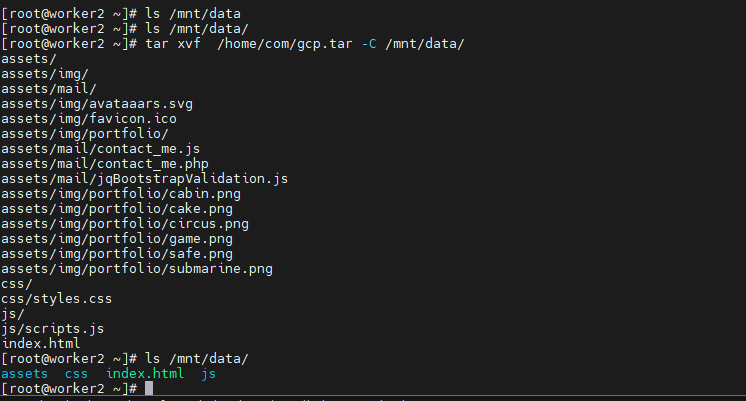

worker2

Upload gcp.tar, then:

tar xvf /home/com/gcp.tar -C /mnt/data/

master1

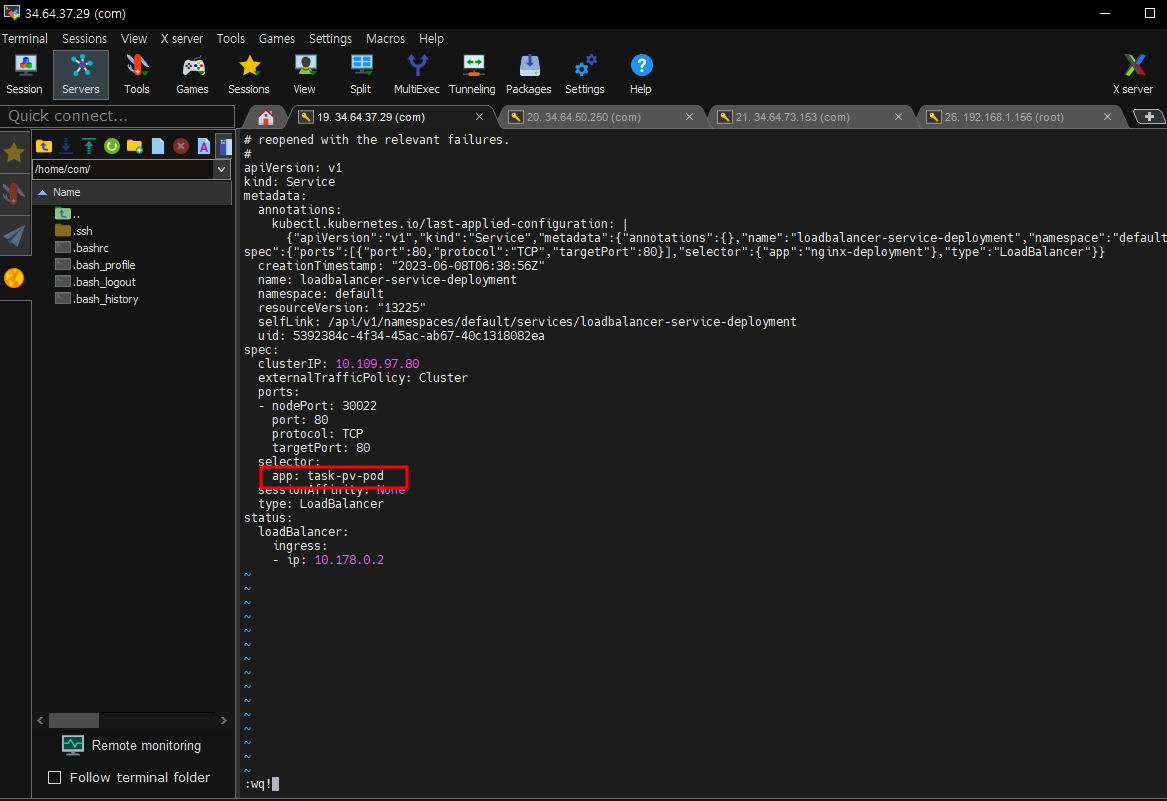

k get svc -o wide

k edit svc loadbalancer-service-deployment

Change the selector label.
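Assuming the label change points the Service's selector at the PV-backed pod (its label is app: task-pv-pod), the edited Service would look roughly like the sketch below. The Service then sends traffic to task-pv-pod, whose nginx serves the files under /mnt/data. Since hostPath is local to a node, this only shows the extracted gcp.tar content if task-pv-pod runs on worker2, where the archive was unpacked.

```yaml
# loadbalancer-service-deployment after the edit (sketch; assumes the selector was switched to the pod's label)
apiVersion: v1
kind: Service
metadata:
  name: loadbalancer-service-deployment
spec:
  type: LoadBalancer
  selector:
    app: task-pv-pod        # was: app: nginx-deployment
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```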

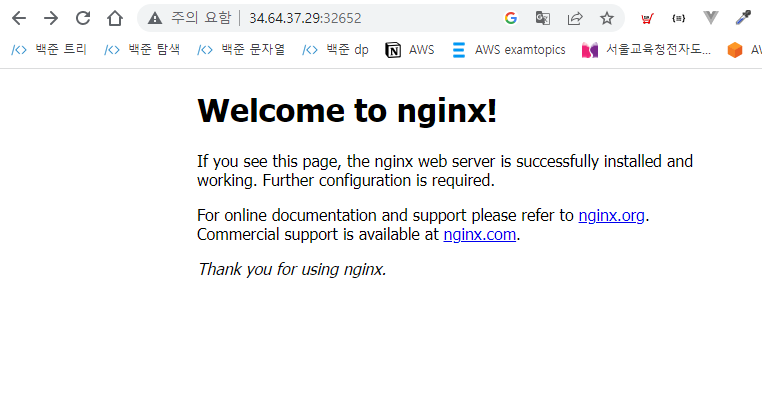

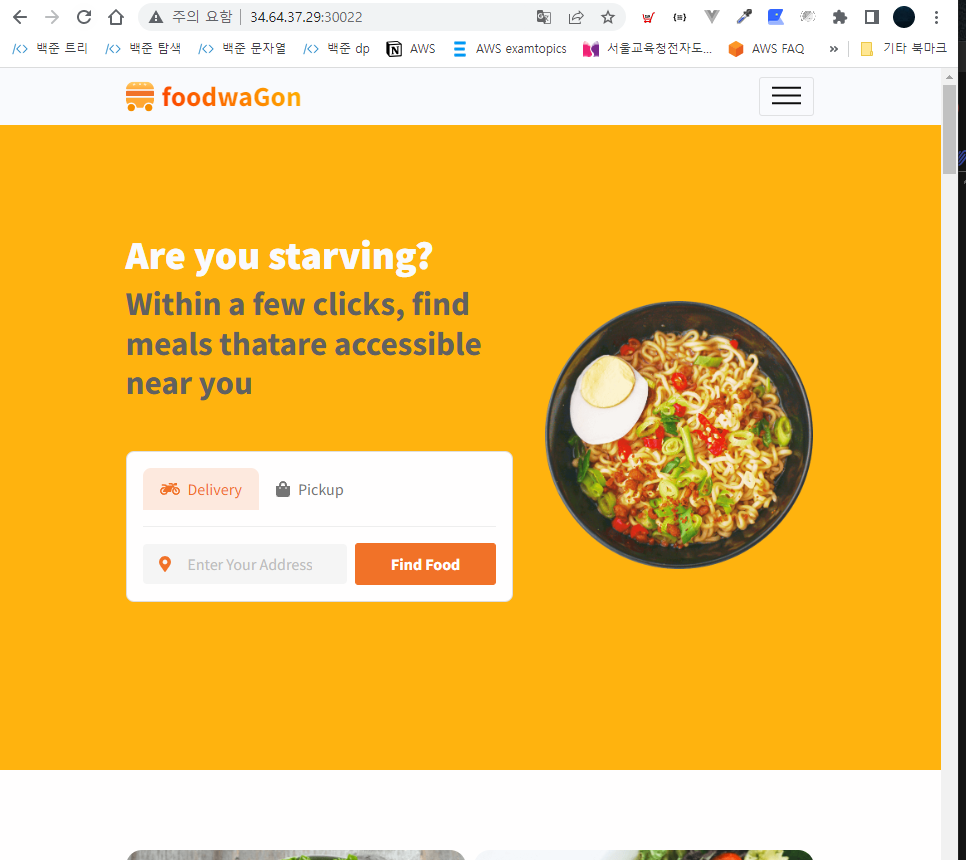

Now the gcp page comes up.