Week 2: Network

This page is part of an AWS EKS Workshop study group. Each week I record my lab results and thoughts here.

Keyword

- The Week 2 lecture content is summarized below as a mind map.

AWS VPC CNI

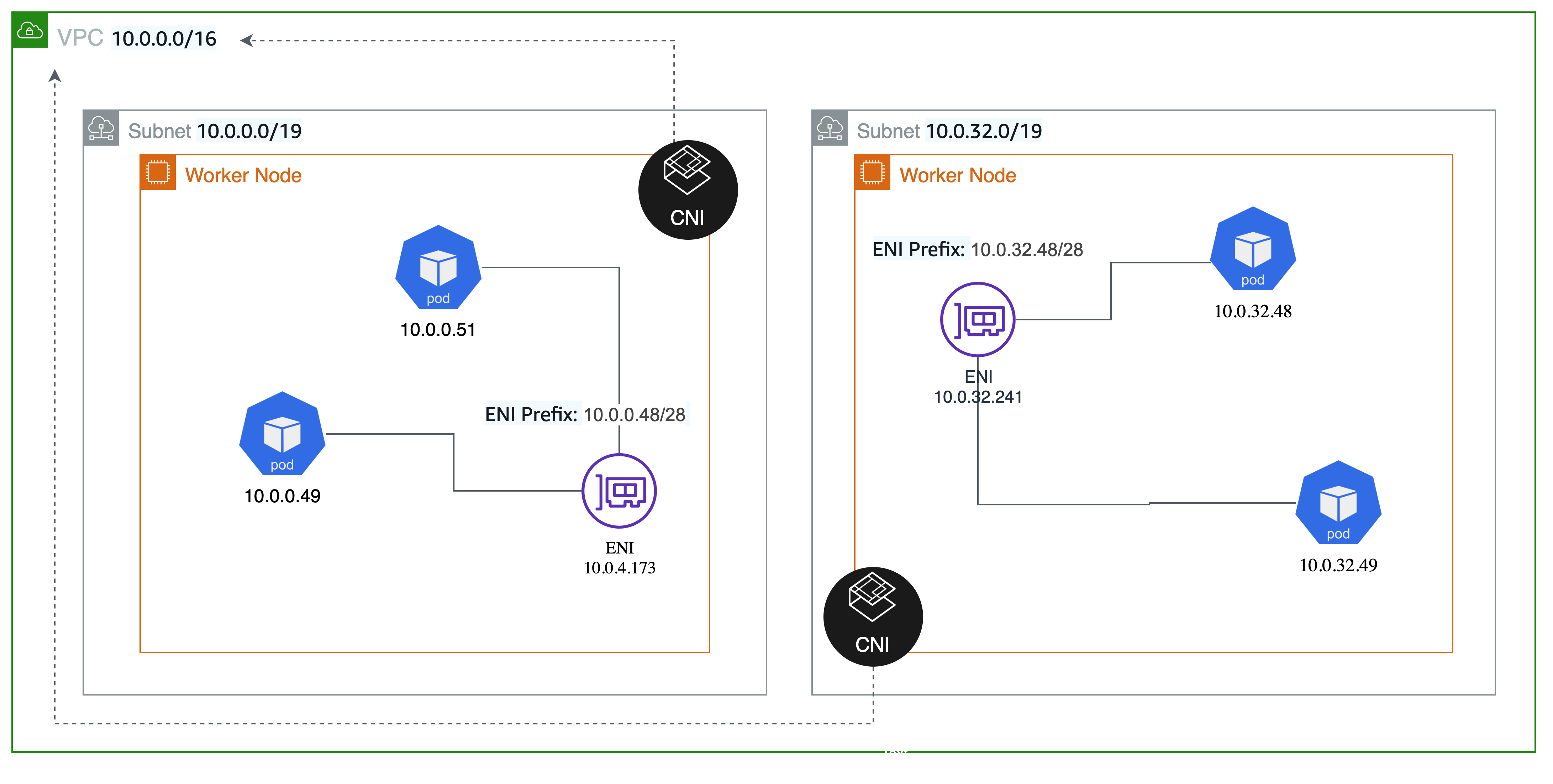

- An EKS cluster runs inside an AWS VPC, so its network is built on that VPC. As a result, each pod receives an IP address from the CIDR range of a subnet in the VPC where the cluster is deployed.

- The network add-on that connects the AWS VPC network to the EKS network is called the AWS VPC CNI (Container Network Interface).

- VPC CNI를 사용함으로써 얻게되는 장점은 다음과 같다.

- VPC 기반 리소스들-VPC Flow Logs, Security Group…을 사용할 수 있다. 예컨데 Flow Logs 사용 시 파드에서 송수신되는 IP 트래픽 흐름을 파악할 수 있다.

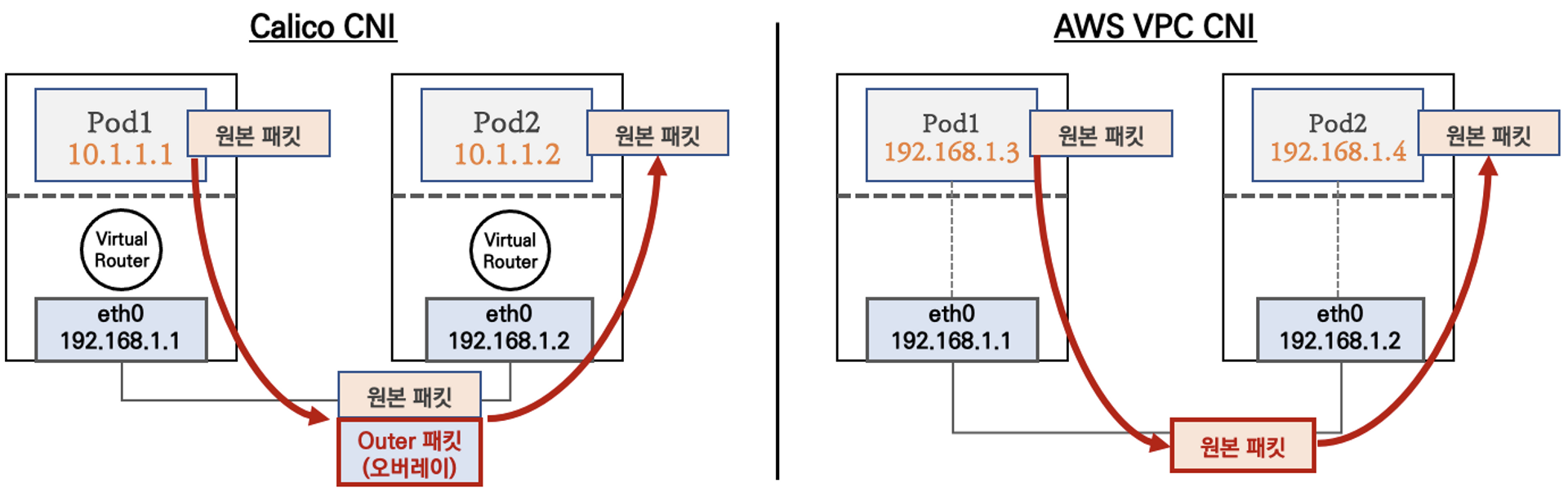

- K8S 기반 CNI와 다르게 Overlay를 통한 패킷의 캡슐화 과정이 필요없다. 즉, 캡슐화에 필요한 자원의 소모를 줄일 수 있고, 지연시간이 짧아지므로 네트워크 성능이 향상된다.

💡Calico CNI와 다르게 VPC와 Pod의 IP 대역이 동일하므로 별도의 캡슐화 과정 필요없이 Pod끼리 직접 통신이 가능하다.

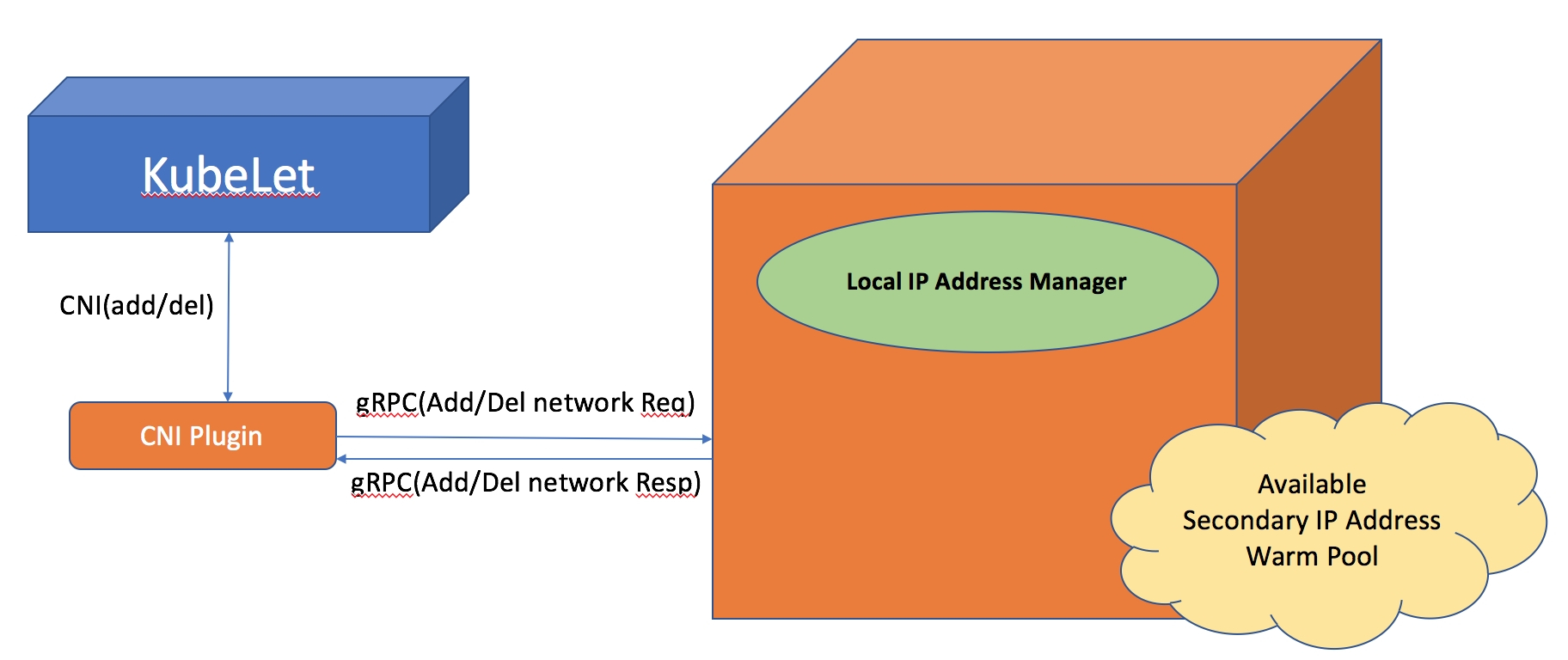
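The "same IP range" point can be checked with a short bash sketch that tests whether an address falls inside a CIDR block. The VPC CIDR 192.168.0.0/16 and the pod IP 192.168.1.11 come from this lab. The address 10.244.0.5 is a hypothetical overlay-network pod IP, included only for contrast.

```bash
#!/usr/bin/env bash
# Sketch: is an IPv4 address inside a CIDR block?
# 192.168.0.0/16 and 192.168.1.11 are from this lab; 10.244.0.5 is hypothetical.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Exit status 0 if IP ($1) falls inside CIDR ($2), 1 otherwise.
ip_in_cidr() {
  local ip=$1 net=${2%/*} len=${2#*/}
  # Build the netmask; the final & keeps it within 32 bits.
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

for ip in 192.168.1.11 10.244.0.5; do
  if ip_in_cidr "$ip" 192.168.0.0/16; then
    echo "$ip is inside the VPC CIDR"
  else
    echo "$ip is outside the VPC CIDR"
  fi
done
```

With the VPC CNI, the coredns pod's address passes this check. This is why VPC routing and Flow Logs treat pod addresses like any other VPC address.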

- Installing the plugin deploys aws-node, a DaemonSet that configures the required network settings on each node. L-IPAM (Local IP Address Manager) is installed as part of aws-node, and pods get their IPs through it.

$kubectl get daemonset --namespace kube-system
NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE
aws-node 3 3 3 3 3 <none> 65m
kube-proxy 3 3 3 3 3 <none> 65m
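Because aws-node is a DaemonSet, a healthy cluster shows DESIRED equal to READY on every row. The sketch below uses a hypothetical helper, `ds_not_ready`, that reads the `kubectl get daemonset` output shown above and prints only the DaemonSets that are not fully ready. The last demo row is invented to show a failure.

```bash
#!/usr/bin/env bash
# Sketch: flag DaemonSets whose READY count is below DESIRED.
# Reads `kubectl get daemonset` output on stdin (column 2 = DESIRED, column 4 = READY).
ds_not_ready() {
  awk 'NR > 1 && $4 + 0 < $2 + 0 { print $1, $4 "/" $2 }'
}

# Offline demo: the first two rows are from this lab, example-ds is hypothetical.
cat <<'EOF' | ds_not_ready
NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE
aws-node 3 3 3 3 3 <none> 65m
kube-proxy 3 3 3 3 3 <none> 65m
example-ds 3 3 2 3 2 <none> 5m
EOF
```

Against a live cluster, `kubectl get daemonset -n kube-system | ds_not_ready` prints nothing when aws-node and kube-proxy are ready on every node.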

What is L-IPAM?

- The VPC CNI GitHub documentation describes L-IPAM as follows:

- L-IPAM runs on each node and maintains a warm pool of available secondary IP addresses. Whenever the kubelet receives a request to add a pod, L-IPAM immediately takes one available secondary IP address from the warm pool and assigns it to the pod.

- L-IPAM learns the available ENIs and secondary IP addresses from the node (instance) metadata. Whenever the DaemonSet restarts, it builds the warm pool by querying the kubelet for the currently running pods, including each pod's name, namespace, and IP address.

# Check the private IPv4 addresses attached to the node's ENI
$curl http://169.254.169.254/latest/meta-data/network/interfaces/macs/02:74:de:b5:a8:34/local-ipv4s
192.168.1.32
192.168.1.11
192.168.1.171
192.168.1.79
192.168.1.211
192.168.1.150
# Check the node's pod information
$kubectl get --raw=/api/v1/pods
...
"f:podIPs":{
...
"k:{\"ip\":\"192.168.1.32\"}":{
...
}
},
...
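The warm-pool idea can be illustrated with a toy bash model. It is not how ipamd is implemented: it ignores ENI attachment and the CNI's warm-pool tuning settings. It simply hands out the secondary IPs of eth0 listed in the metadata output above. The functions `assign_ip` and `release_ip` are hypothetical names used only for illustration.

```bash
#!/usr/bin/env bash
# Toy model of a per-node warm pool; NOT the real ipamd implementation.
# The pool holds eth0's secondary IPs from the metadata output above
# (192.168.1.32 is the ENI's primary IP, so it is not in the pool).
declare -a WARM_POOL=(192.168.1.11 192.168.1.171 192.168.1.79 192.168.1.211 192.168.1.150)
declare -A POD_IP=()

# Take the first free IP from the pool and bind it to the pod named $1.
# Call it directly (not inside $(...)) so the pool update is kept.
assign_ip() {
  local pod=$1
  if (( ${#WARM_POOL[@]} == 0 )); then
    echo "no free IP for $pod" >&2
    return 1
  fi
  POD_IP[$pod]=${WARM_POOL[0]}
  WARM_POOL=("${WARM_POOL[@]:1}")
  echo "$pod -> ${POD_IP[$pod]}"
}

# Return the IP of the deleted pod $1 to the end of the pool.
release_ip() {
  local pod=$1
  [[ -n ${POD_IP[$pod]+set} ]] || return 1
  WARM_POOL+=("${POD_IP[$pod]}")
  unset 'POD_IP[$pod]'
}
```

For example, `assign_ip coredns-6777fcd775-lp7gj` prints `coredns-6777fcd775-lp7gj -> 192.168.1.11`, and the next call receives 192.168.1.171.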

- How L-IPAM works

- The lab below examines L-IPAM's behavior in detail.

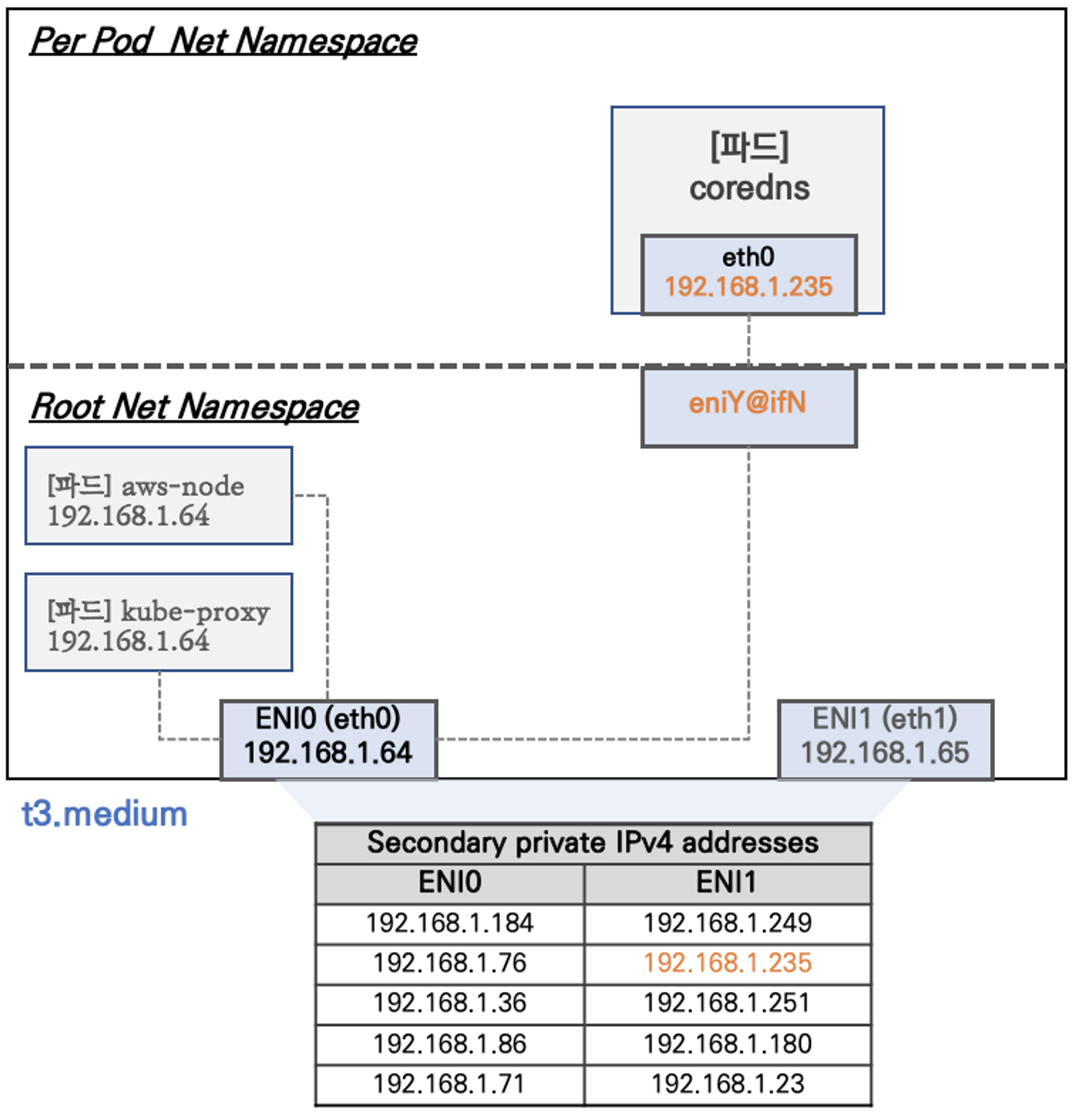

# Only 2 coredns pods are currently deployed.
$kubectl get pod -n kube-system -owide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
aws-node-m84hc 1/1 Running 0 87m 192.168.1.32 ip-192-168-1-32.ap-northeast-2.compute.internal <none> <none>
aws-node-ngkvr 1/1 Running 0 87m 192.168.3.46 ip-192-168-3-46.ap-northeast-2.compute.internal <none> <none>
aws-node-v97xr 1/1 Running 0 87m 192.168.2.124 ip-192-168-2-124.ap-northeast-2.compute.internal <none> <none>
coredns-6777fcd775-jdqh7 1/1 Running 0 85m 192.168.2.246 ip-192-168-2-124.ap-northeast-2.compute.internal <none> <none>
coredns-6777fcd775-lp7gj 1/1 Running 0 85m 192.168.1.11 ip-192-168-1-32.ap-northeast-2.compute.internal <none> <none>
kube-proxy-f58wd 1/1 Running 0 85m 192.168.1.32 ip-192-168-1-32.ap-northeast-2.compute.internal <none> <none>
kube-proxy-kdmxx 1/1 Running 0 85m 192.168.2.124 ip-192-168-2-124.ap-northeast-2.compute.internal <none> <none>
kube-proxy-mn8pv 1/1 Running 0 85m 192.168.3.46 ip-192-168-3-46.ap-northeast-2.compute.internal <none> <none>
# N1: the IPs of the aws-node, kube-proxy, and coredns pods from the list above are present.
$ssh ec2-user@$N1 sudo ip -c route
192.168.1.0/24 dev eth0 proto kernel scope link src 192.168.1.32
192.168.1.11 dev eni4b74e98200f scope link
# N2: the IPs of the aws-node, kube-proxy, and coredns pods from the list above are present.
$ssh ec2-user@$N2 sudo ip -c route
192.168.2.0/24 dev eth0 proto kernel scope link src 192.168.2.124
192.168.2.246 dev enif8080686c7e scope link
# N3: there is no coredns pod IP from the list above.
$ssh ec2-user@$N3 sudo ip -c route
192.168.3.0/24 dev eth0 proto kernel scope link src 192.168.3.46
# N1: eth0 and eth1 are present
$ssh ec2-user@$N1 sudo ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.1.32 netmask 255.255.255.0 broadcast 192.168.1.255
eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.1.106 netmask 255.255.255.0 broadcast 192.168.1.255
# N2: eth0 and eth1 are present
$ssh ec2-user@$N2 sudo ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.2.124 netmask 255.255.255.0 broadcast 192.168.2.255
eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.2.44 netmask 255.255.255.0 broadcast 192.168.2.255
# N3: only eth0 is present
# In other words, eth1 is missing because no coredns pod is deployed on this node.
$ssh ec2-user@$N3 sudo ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.3.46 netmask 255.255.255.0 broadcast 192.168.3.255
# Scale coredns out to 3 pods.
$kubectl scale deployment/coredns -n kube-system --replicas=3
deployment.apps/coredns scaled
$kubectl get pod -n kube-system -l k8s-app=kube-dns -owide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
coredns-6777fcd775-8lrs4 1/1 Running 0 2m36s 192.168.3.8 ip-192-168-3-46.ap-northeast-2.compute.internal <none> <none>
coredns-6777fcd775-jdqh7 1/1 Running 0 122m 192.168.2.246 ip-192-168-2-124.ap-northeast-2.compute.internal <none> <none>
coredns-6777fcd775-lp7gj 1/1 Running 0 122m 192.168.1.11 ip-192-168-1-32.ap-northeast-2.compute.internal <none> <none>
# Confirm that eth1 has now been created on N3.
$ssh ec2-user@$N3 sudo ip -c route
192.168.3.8 dev enif6e3a65e06f scope link
$ssh ec2-user@$N3 sudo ifconfig
eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.3.48 netmask 255.255.255.0 broadcast 192.168.3.255

- Before the coredns pod was deployed, only eth0 was visible on the third node. From this we can infer the following:

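- eth0 is the primary ENI, which is created by default.

- aws-node and kube-proxy run on the host network, so they use the primary ENI's private IPv4 address, the same address as the node.

- On the first and second nodes, the coredns pod has been assigned one of the secondary IPv4 addresses.

- On the third node, eth1 (a secondary ENI) is attached only after a coredns pod is created there. That pod is then assigned one of the secondary private IPv4 addresses.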

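- These observations show how L-IPAM behaves:

- On a freshly created node with no pods yet, L-IPAM attaches a secondary ENI once a pod other than the host-network pods (kube-proxy, aws-node) is deployed, for example coredns.

- L-IPAM takes an immediately available address from the warm pool of secondary private IPv4 addresses it manages and assigns it to the newly deployed pod. That address does not have to belong to the new ENI. The eth0 metadata output above lists coredns's 192.168.1.11 as a secondary IP of the primary ENI, which suggests the extra ENI is attached to replenish the warm pool.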
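You can count the pods a node has wired up this way by filtering `ip route` for routes that point at host-side `eni*` interfaces. The sketch below assumes plain `ip route` output, because the `-c` color flag used above inserts escape codes. The helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: list the pod IPs that a node routes to host-side veth interfaces (eni*),
# i.e. the pods that received a secondary IP from L-IPAM.
# Expects plain `ip route` output on stdin (no -c color codes).
pod_routes() {
  awk '$2 == "dev" && $3 ~ /^eni/ { print $1, $3 }'
}

# Offline demo using N1's routes from the output above.
pod_routes <<'EOF'
192.168.1.0/24 dev eth0 proto kernel scope link src 192.168.1.32
192.168.1.11 dev eni4b74e98200f scope link
EOF
```

On a live node, `ssh ec2-user@$N1 sudo ip route | pod_routes | wc -l` counts the pods holding secondary IPs on N1. The subnet route via eth0 and any `default via ...` route are skipped.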

- Next, deploy 30 pods.

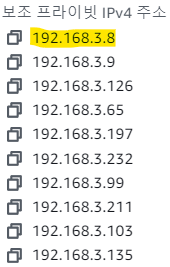

# Create the deployment
$curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/2/nginx-dp.yaml
$kubectl apply -f nginx-dp.yaml
$kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-deployment-6fb79bc456-kxkrg 1/1 Running 0 56s 192.168.2.41 ip-192-168-2-124.ap-northeast-2.compute.internal <none> <none>
nginx-deployment-6fb79bc456-mvkvx 1/1 Running 0 56s 192.168.3.9 ip-192-168-3-46.ap-northeast-2.compute.internal <none> <none>
$kubectl get pod -o=custom-columns=NAME:.metadata.name,IP:.status.podIP
NAME IP
nginx-deployment-6fb79bc456-kxkrg 192.168.2.41
nginx-deployment-6fb79bc456-mvkvx 192.168.3.9
$kubectl scale deployment nginx-deployment --replicas=30
$kubectl get pod -owide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-deployment-6fb79bc456-476ng 1/1 Running 0 3m51s 192.168.1.79 ip-192-168-1-32.ap-northeast-2.compute.internal <none> <none>
nginx-deployment-6fb79bc456-4hn7x 1/1 Running 0 3m51s 192.168.1.211 ip-192-168-1-32.ap-northeast-2.compute.internal <none> <none>
nginx-deployment-6fb79bc456-7cj8r 1/1 Running 0 3m50s 192.168.1.202 ip-192-168-1-32.ap-northeast-2.compute.internal <none> <none>
nginx-deployment-6fb79bc456-8f92z 1/1 Running 0 3m50s 192.168.3.76 ip-192-168-3-46.ap-northeast-2.compute.internal <none> <none>
nginx-deployment-6fb79bc456-8ghtj 1/1 Running 0 3m50s 192.168.2.100 ip-192-168-2-124.ap-northeast-2.compute.internal <none> <none>
nginx-deployment-6fb79bc456-8q5xc 1/1 Running 0 3m51s 192.168.3.21 ip-192-168-3-46.ap-northeast-2.compute.internal <none> <none>
nginx-deployment-6fb79bc456-clrz8 1/1 Running 0 3m51s 192.168.1.150 ip-192-168-1-32.ap-northeast-2.compute.internal <none> <none>
nginx-deployment-6fb79bc456-d5wxh 1/1 Running 0 3m51s 192.168.2.78 ip-192-168-2-124.ap-northeast-2.compute.internal <none> <none>
...
# Check the IPs on N1
$ssh ec2-user@$N1 sudo ip -c route
192.168.1.11 dev eni4b74e98200f scope link
192.168.1.24 dev eni3cc185d3af0 scope link
192.168.1.79 dev eni08b792d0dee scope link
192.168.1.83 dev enic6e9003acaa scope link
192.168.1.129 dev eni4a336668147 scope link
192.168.1.150 dev eni17d2116a1cc scope link
192.168.1.164 dev eni1a47d945267 scope link
192.168.1.171 dev eni5765e51469f scope link
192.168.1.194 dev eniab37df924c0 scope link
192.168.1.202 dev eni28ddc4a3d04 scope link
192.168.1.211 dev enic24bbca3278 scope link
# Count the eth interfaces on N1
$ssh ec2-user@$N1 sudo ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.1.32 netmask 255.255.255.0 broadcast 192.168.1.255
eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.1.106 netmask 255.255.255.0 broadcast 192.168.1.255
eth2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.1.57 netmask 255.255.255.0 broadcast 192.168.1.255
# Check the IPs on N2
$ssh ec2-user@$N2 sudo ip -c route
192.168.2.41 dev eniff11a55cd8b scope link
192.168.2.45 dev eni73ca612c7f6 scope link
192.168.2.78 dev enib872af3c1f2 scope link
192.168.2.85 dev enie2ab3a96887 scope link
192.168.2.100 dev eni7033b469fc1 scope link
192.168.2.138 dev eniccb86b128db scope link
192.168.2.147 dev eni73cd89da3a4 scope link
192.168.2.196 dev eni856b9108742 scope link
192.168.2.202 dev eni3d6120077ac scope link
192.168.2.237 dev eni1d43724dc25 scope link
192.168.2.246 dev enif8080686c7e scope link
# Count the eth interfaces on N2
$ssh ec2-user@$N2 sudo ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.2.124 netmask 255.255.255.0 broadcast 192.168.2.255
eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.2.44 netmask 255.255.255.0 broadcast 192.168.2.255
eth2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.2.72 netmask 255.255.255.0 broadcast 192.168.2.255
# Check the IPs on N3
$ssh ec2-user@$N3 sudo ip -c route
192.168.3.8 dev enif6e3a65e06f scope link
192.168.3.9 dev eni4164e435b01 scope link
192.168.3.21 dev enibccf0266494 scope link
192.168.3.65 dev enia6b4fdc4911 scope link
192.168.3.76 dev eni6219966cf28 scope link
192.168.3.106 dev enid5b2a453b52 scope link
192.168.3.126 dev eni128fb6b733d scope link
192.168.3.135 dev eni14db15d34d4 scope link
192.168.3.155 dev enid29bfa33b37 scope link
192.168.3.197 dev eniab60f8a47f1 scope link
192.168.3.198 dev enicdfb4fd62d8 scope link
# Count the eth interfaces on N3
$ssh ec2-user@$N3 sudo ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.3.46 netmask 255.255.255.0 broadcast 192.168.3.255
eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.3.48 netmask 255.255.255.0 broadcast 192.168.3.255
eth2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001
        inet 192.168.3.147 netmask 255.255.255.0 broadcast 192.168.3.255

- We deployed 30 pods, so why does each node end up with exactly 3 ENIs?

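- The answer depends on two limits for each node (instance) type: the maximum number of ENIs, and the number of private IPv4 addresses per ENI. Each node above now routes 11 pod IPs (about 10 nginx pods plus coredns). A t3.medium ENI can hand out only 5 secondary IPs, so 2 ENIs cover at most 10 pods and all 3 ENIs (eth0, eth1, eth2) are needed.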

# Check the maximum number of ENIs and IPv4 addresses for the t3 instance types.
$aws ec2 describe-instance-types --filters Name=instance-type,Values=t3.* \
  --query "InstanceTypes[].{Type: InstanceType, MaxENI: NetworkInfo.MaximumNetworkInterfaces, IPv4addr: NetworkInfo.Ipv4AddressesPerInterface}" \
  --output table
--------------------------------------
|        DescribeInstanceTypes       |
+----------+----------+--------------+
| IPv4addr |  MaxENI  |     Type     |
+----------+----------+--------------+
|  15      |  4       |  t3.2xlarge  |
|  15      |  4       |  t3.xlarge   |
|  6       |  3       |  t3.medium   |
|  12      |  3       |  t3.large    |
|  2       |  2       |  t3.nano     |
|  2       |  2       |  t3.micro    |
|  4       |  3       |  t3.small    |
+----------+----------+--------------+
# The current node instance type, t3.medium, allows at most 3 ENIs with 6 IPv4 addresses each.
# Excluding each ENI's own primary private IPv4 address gives the following formula:
MaxENI * (IPv4addr - 1)
# So a t3.medium node can run at most 15 pods that take an IP from the VPC CNI.
3 * (6 - 1) = 15
# The node's Allocatable shows 17 pods because it also counts aws-node and kube-proxy, which use the host network.
$kubectl describe node | grep Allocatable: -A7
Allocatable:
  attachable-volumes-aws-ebs: 25
  cpu: 1930m
  ephemeral-storage: 27905944324
  hugepages-1Gi: 0
  hugepages-2Mi: 0
  memory: 3388360Ki
  pods: 17
# If a deployment exceeds the maximum pod count, the excess pods stay Pending.
$kubectl scale deployment nginx-deployment --replicas=50
$kubectl get pods | grep Pending
nginx-deployment-6fb79bc456-5vspd 0/1 Pending 0 83s
nginx-deployment-6fb79bc456-87wth 0/1 Pending 0 83s
...
$kubectl describe pod nginx-deployment-6fb79bc456-5vspd | grep Events: -A5
Events:
  Type Reason Age From Message
  ---- ------ ---- ---- -------
  Warning FailedScheduling 3m14s default-scheduler 0/3 nodes are available: 3 Too many pods. preemption: 0/3 nodes are available: 3 No preemption victims found for incoming pod.
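Here is the same arithmetic as a small bash function. It assumes the default VPC CNI settings (no prefix delegation) and adds the 2 host-network pods, so it reproduces the Allocatable value of 17 for t3.medium. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch of the max-pods arithmetic above, plus the 2 host-network pods
# (aws-node, kube-proxy) that do not consume a secondary IP.
# $1 = maximum ENIs, $2 = IPv4 addresses per ENI (from describe-instance-types).
max_pods() {
  local enis=$1 ips_per_eni=$2
  # Each ENI's own primary IP is never handed to a pod, hence (ips_per_eni - 1).
  echo $(( enis * (ips_per_eni - 1) + 2 ))
}

# Values taken from the t3 table above.
for spec in "t3.small 3 4" "t3.medium 3 6" "t3.large 3 12"; do
  read -r itype enis ips <<< "$spec"
  echo "$itype: $(max_pods "$enis" "$ips") pods"
done
```

The loop prints 11, 17, and 35 pods for t3.small, t3.medium, and t3.large respectively.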

Pod communication

- Next, check whether pods on different nodes can communicate with each other directly.

# Create the test pods (netshoot-pod)
$cat <<EOF | kubectl create -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: netshoot-pod
spec:
  replicas: 3
  selector:
    matchLabels:
      app: netshoot-pod
  template:
    metadata:
      labels:
        app: netshoot-pod
    spec:
      containers:
      - name: netshoot-pod
        image: nicolaka/netshoot
        command: ["tail"]
        args: ["-f", "/dev/null"]
      terminationGracePeriodSeconds: 0
EOF
# Store the pod names and IPs in variables
$PODNAME1=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[0].metadata.name})
$PODNAME2=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[1].metadata.name})
$PODNAME3=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[2].metadata.name})
$PODIP1=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[0].status.podIP})
$PODIP2=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[1].status.podIP})
$PODIP3=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[2].status.podIP})
$echo $PODIP1, $PODIP2, $PODIP3
192.168.3.103, 192.168.1.79, 192.168.2.78
# Ping test: pod1 > pod2
$kubectl exec -it $PODNAME1 -- ping -c 2 $PODIP2
# Ping test: pod2 > pod3
$kubectl exec -it $PODNAME2 -- ping -c 2 $PODIP3
# Ping test: pod3 > pod1
$kubectl exec -it $PODNAME3 -- ping -c 2 $PODIP1
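Writing each ping command by hand becomes tedious beyond a few pods. The hypothetical helper below builds the same ring (each pod pings the next, and the last pings the first) for any number of pods. It only prints the commands, so you can review them before piping them to `bash`. It drops `-it`, because piped commands run non-interactively.

```bash
#!/usr/bin/env bash
# Sketch: generate the ring of ping tests above (pod1 -> pod2 -> pod3 -> pod1).
# $1 = space-separated pod names, $2 = space-separated pod IPs in the same order.
ping_ring() {
  local -a names ips
  read -r -a names <<< "$1"
  read -r -a ips <<< "$2"
  local n=${#names[@]} i
  (( n == ${#ips[@]} )) || { echo "names/IPs count mismatch" >&2; return 1; }
  for (( i = 0; i < n; i++ )); do
    # Each pod targets the next pod's IP; the modulo wraps the last back to the first.
    echo "kubectl exec ${names[i]} -- ping -c 2 ${ips[(i + 1) % n]}"
  done
}
```

Usage with the variables set above: `ping_ring "$PODNAME1 $PODNAME2 $PODNAME3" "$PODIP1 $PODIP2 $PODIP3" | bash`.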

- Next, trace how traffic flows when a pod communicates with the outside world.

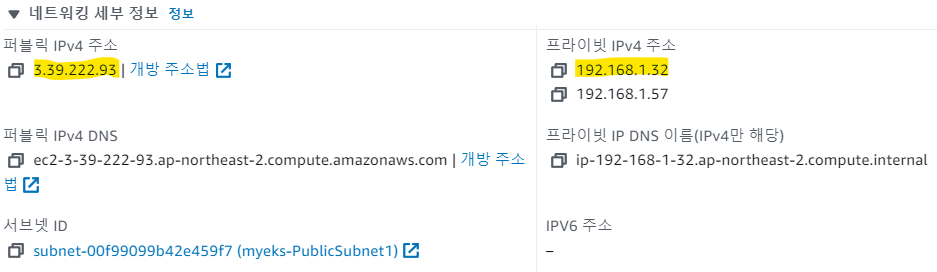

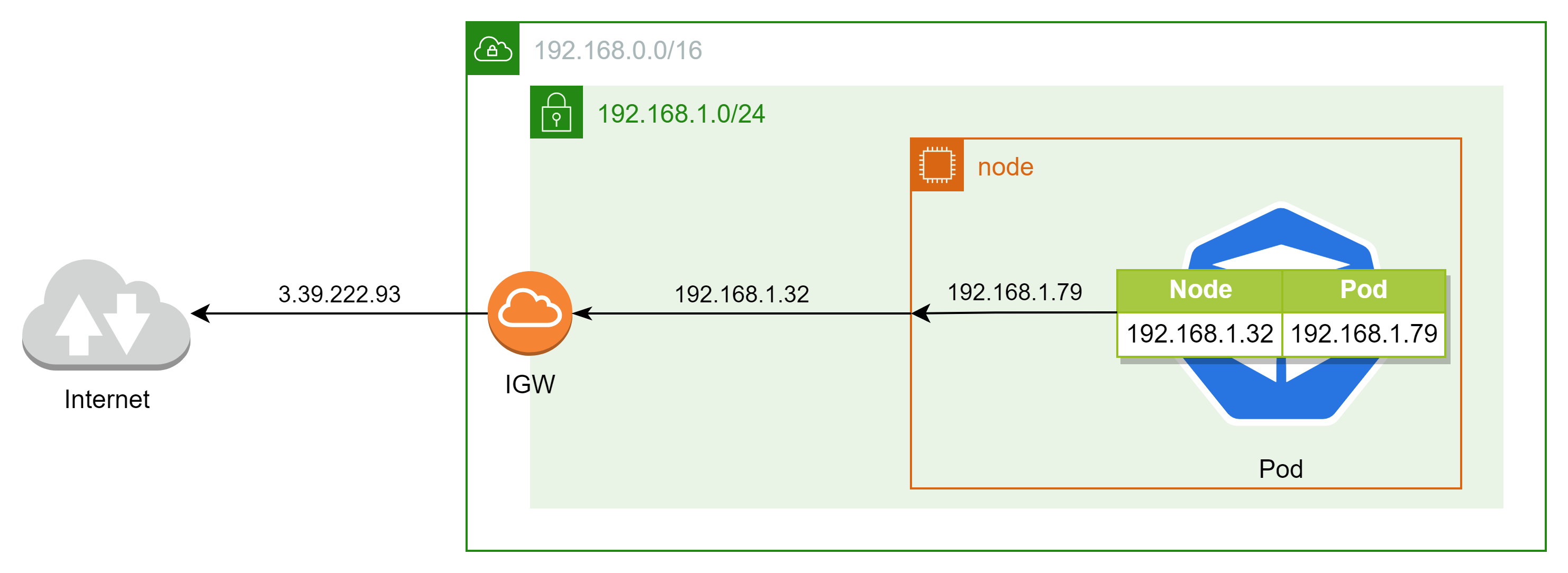

$kubectl exec -it $PODNAME1 -- ping -c 1 www.google.com
# Check the node's public IP
$ssh ec2-user@$N1 curl -s ipinfo.io/ip ; echo
3.39.222.93
# For external traffic, the SNAT rule below rewrites the source to the node's primary private IP (192.168.1.32), which then leaves through the node's public IP.
$ssh ec2-user@$N1 sudo iptables -t nat -S | grep 'A AWS-SNAT-CHAIN'
-A AWS-SNAT-CHAIN-0 ! -d 192.168.0.0/16 -m comment --comment "AWS SNAT CHAIN" -j AWS-SNAT-CHAIN-1
-A AWS-SNAT-CHAIN-1 ! -o vlan+ -m comment --comment "AWS, SNAT" -m addrtype ! --dst-type LOCAL -j SNAT --to-source 192.168.1.32 --random-fully
$ssh ec2-user@$N1 watch -d 'sudo iptables -v --numeric --table nat --list AWS-SNAT-CHAIN-0; echo ; sudo iptables -v --numeric --table nat --list AWS-SNAT-CHAIN-1; echo ; sudo iptables -v --numeric --table nat --list KUBE-POSTROUTING'
Chain AWS-SNAT-CHAIN-1 (1 references)
 pkts bytes target prot opt in out source destination
22566 1371K SNAT all -- * !vlan+ 0.0.0.0/0 0.0.0.0/0 /* AWS, SNAT */ ADDRTYPE match dst-type !LOCAL to:192.168.1.32 random-fully

- When a pod sends traffic to a destination outside the VPC CIDR (192.168.0.0/16), the node's iptables SNAT rule (AWS-SNAT-CHAIN) rewrites the source address to the node's primary private IP. The traffic then reaches the internet with the node's public IP.
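The decision made by AWS-SNAT-CHAIN-0/1 can be modelled in a few lines. This toy version only checks whether the destination is inside the VPC CIDR (192.168.0.0/16 in this lab). It ignores the `! -o vlan+` and `--dst-type LOCAL` conditions of the real rule. The address 8.8.8.8 stands in for any internet destination, and `snat_source` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Toy model of AWS-SNAT-CHAIN-0/1 on N1: a destination outside the VPC CIDR is
# SNATed to the node's primary private IP; VPC-internal traffic keeps the pod IP.
# The `! -o vlan+` and `--dst-type LOCAL` conditions are ignored here.
VPC_CIDR=192.168.0.0/16
NODE_PRIMARY_IP=192.168.1.32

ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

ip_in_cidr() {
  local ip=$1 net=${2%/*} len=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Print the source IP that a packet from pod $1 to destination $2 leaves the node with.
snat_source() {
  local pod_ip=$1 dst=$2
  if ip_in_cidr "$dst" "$VPC_CIDR"; then
    echo "$pod_ip"            # matches "! -d 192.168.0.0/16" negatively: no SNAT
  else
    echo "$NODE_PRIMARY_IP"   # SNAT --to-source <node primary IP>
  fi
}

snat_source 192.168.1.79 192.168.2.78   # pod-to-pod inside the VPC
snat_source 192.168.1.79 8.8.8.8        # internet destination
```

The first call keeps the pod IP (192.168.1.79), and the second prints the node's primary IP (192.168.1.32). The mapping from that private IP to the public IP 3.39.222.93 happens outside the node.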


Service

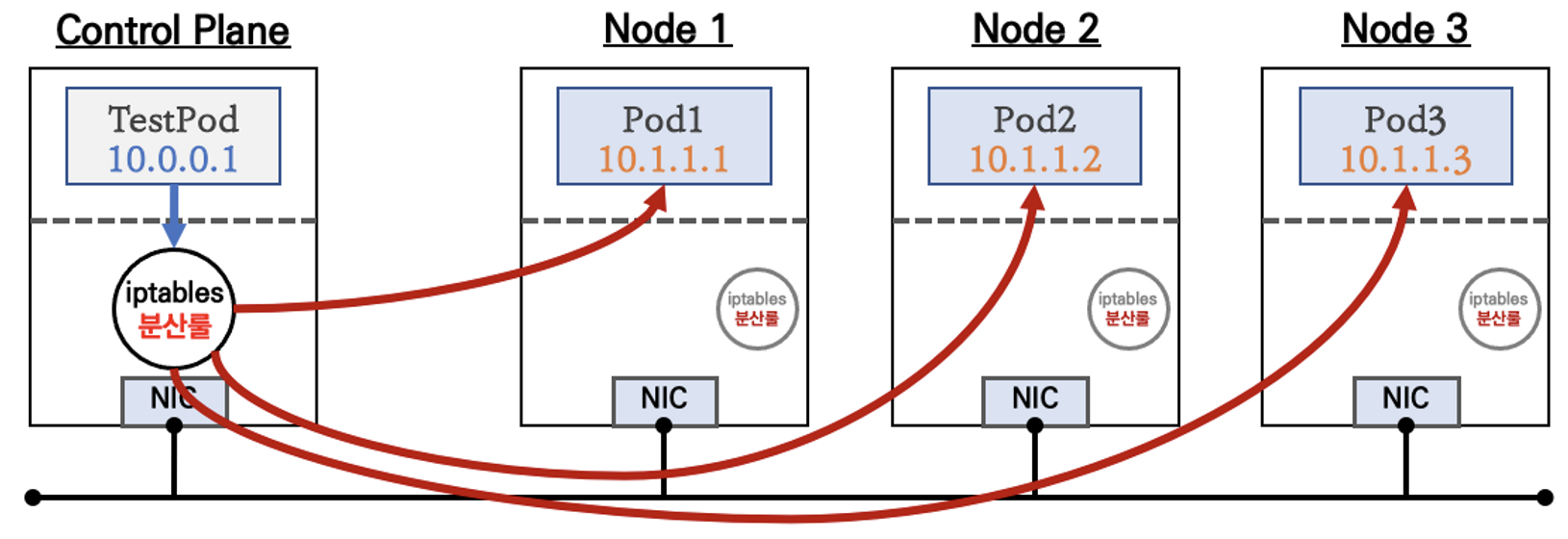

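- Pods are ephemeral: their IPs change whenever they are recreated, which makes it hard to keep communication with clients inside or outside the cluster stable. Kubernetes therefore provides the Service, which gives a set of pods a fixed IP as a single, stable entry point.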

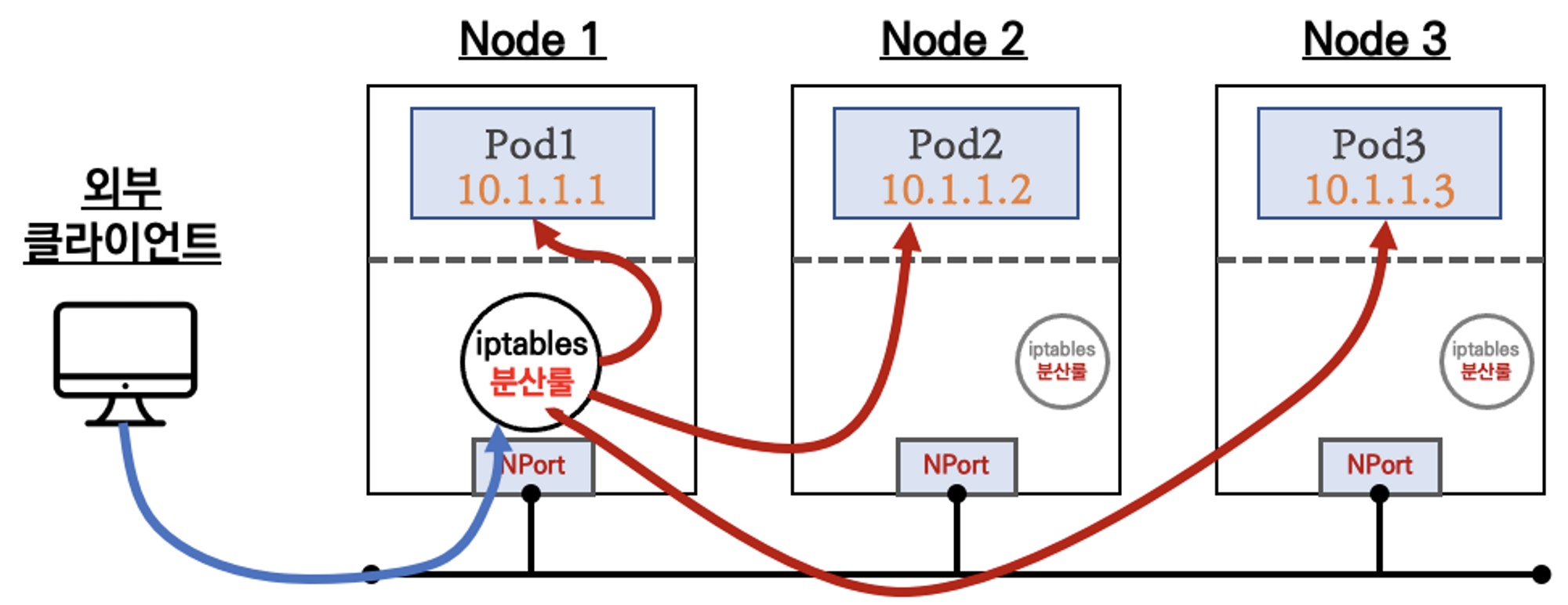

- The main Service types are ClusterIP, NodePort, and LoadBalancer.

- ClusterIP: provides cluster-internal communication through a virtual IP.

- NodePort: accepts external traffic on a node's <IP:Port> and forwards it through the ClusterIP to the matching pods.

- LoadBalancer: provisions a load balancer resource offered by the cloud provider for the Service.

- AWS: supports the Classic Load Balancer and the Network Load Balancer

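A NodePort is the same port opened on every node. The sketch below lists the resulting `<NodeIP>:<NodePort>` entry points. It assumes the default NodePort range of 30000-32767 and reuses this lab's node IPs. It also uses NodePort 31544, which service-2048 receives in the Ingress lab below. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: list the <NodeIP>:<NodePort> addresses a NodePort Service answers on.
# Every node opens the same port, so each node IP is a valid entry point.
# Assumes the default NodePort range 30000-32767.
nodeport_endpoints() {
  local port=$1; shift
  if (( port < 30000 || port > 32767 )); then
    echo "port $port is outside the default NodePort range" >&2
    return 1
  fi
  local ip
  for ip in "$@"; do
    echo "$ip:$port"
  done
}

# Node IPs from this lab; 31544 is service-2048's NodePort.
nodeport_endpoints 31544 192.168.1.32 192.168.2.124 192.168.3.46
```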

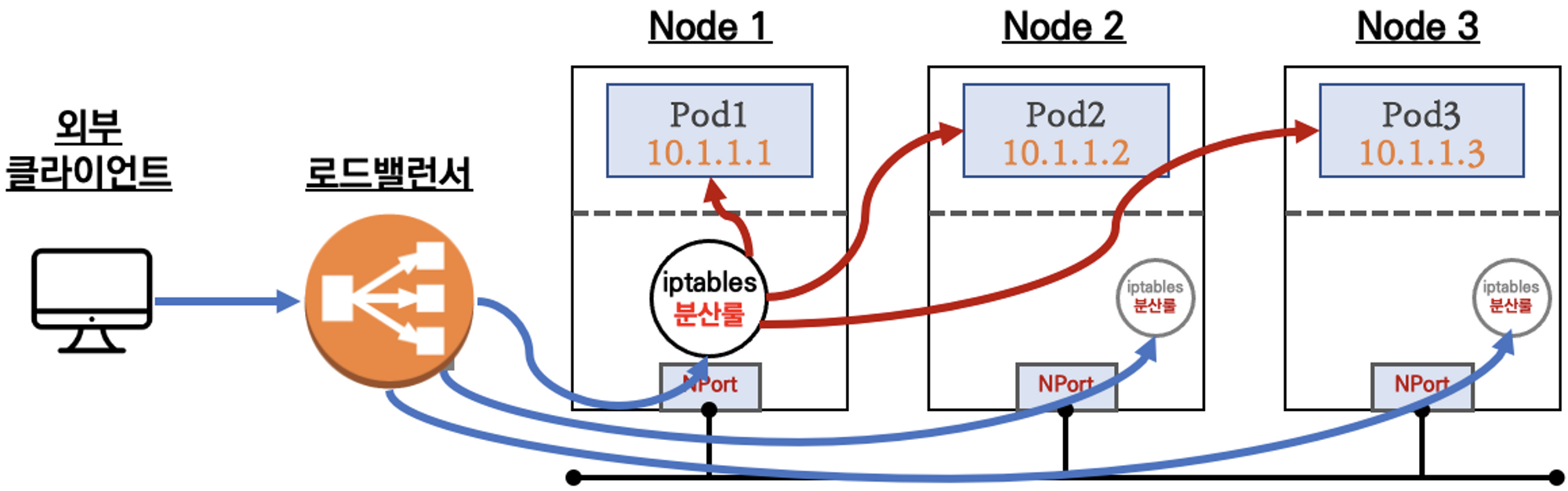

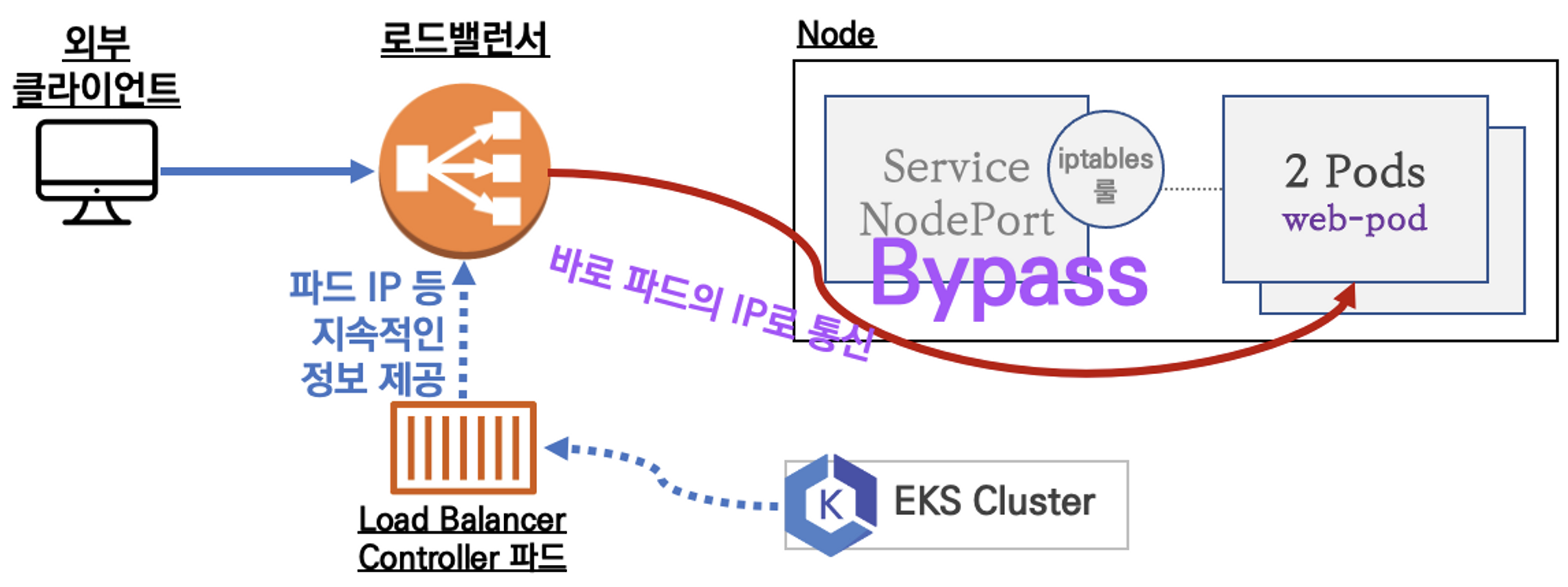

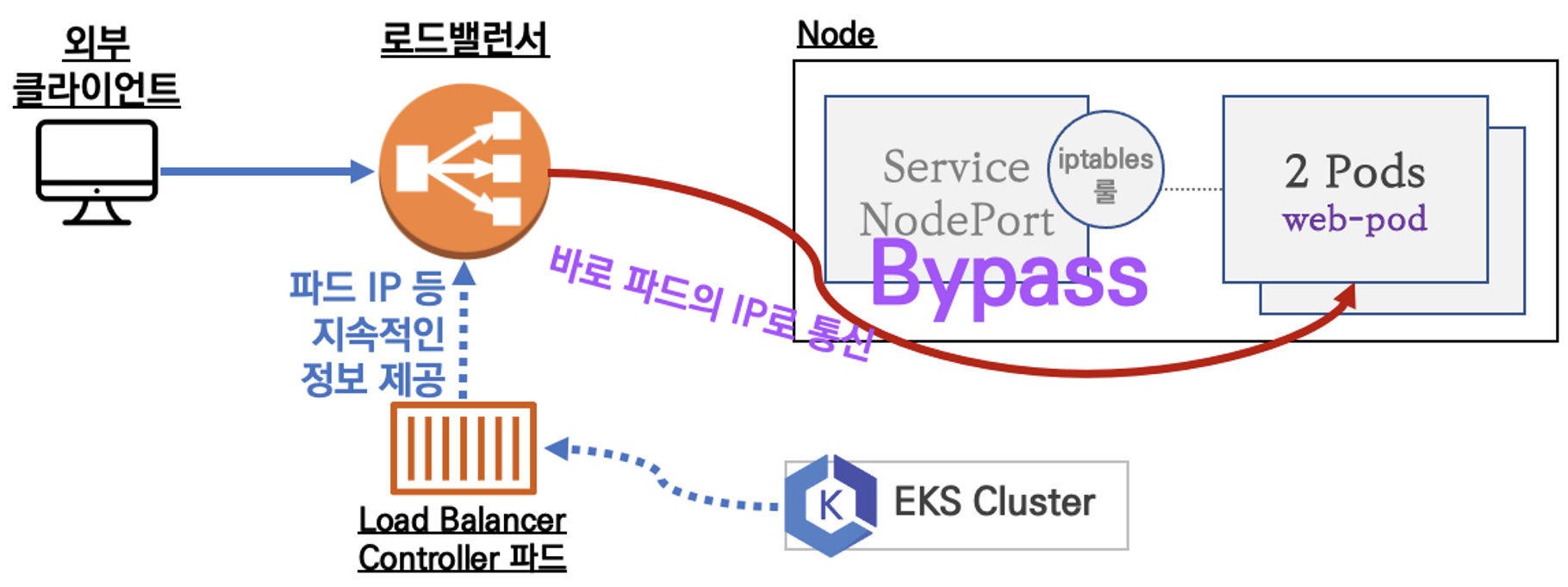

LoadBalancer Controller with AWS VPC CNI

- EKS provides a LoadBalancer Controller. It creates load balancers when needed and continuously keeps the attached load balancer (an NLB in the case of a Service) updated with pod information.

- Combined with the VPC CNI, nodes and pods share the same IP range. The load balancer can therefore send traffic straight to the pods and bypass the NodePort Service.
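The difference between the two NLB target types shows up in the targets each one registers. The values below come from the svc-nlb-ip-type lab further down (pod endpoints on port 8080, NodePort 32225) and the node IPs seen earlier. Instance mode actually registers instance IDs; node IPs stand in for them here. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: the targets an NLB target group would hold for each target type.
# Node IPs stand in for the instance IDs that instance mode really registers.
POD_ENDPOINTS=(192.168.2.202:8080 192.168.3.9:8080)
NODE_IPS=(192.168.1.32 192.168.2.124 192.168.3.46)
NODE_PORT=32225

lb_targets() {
  local n
  case $1 in
    ip)       # straight to the pods; NodePort is bypassed
              printf '%s\n' "${POD_ENDPOINTS[@]}" ;;
    instance) # to every node's NodePort; kube-proxy then forwards to a pod
              for n in "${NODE_IPS[@]}"; do echo "$n:$NODE_PORT"; done ;;
    *)        echo "unknown target type: $1" >&2; return 1 ;;
  esac
}

lb_targets ip
lb_targets instance
```

In ip mode the target list tracks the pods themselves. This is why the controller has to keep updating it as pods come and go.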

AWS LB(NLB-IP) Contoller with IRSA(IAM Role for Service Account)

-

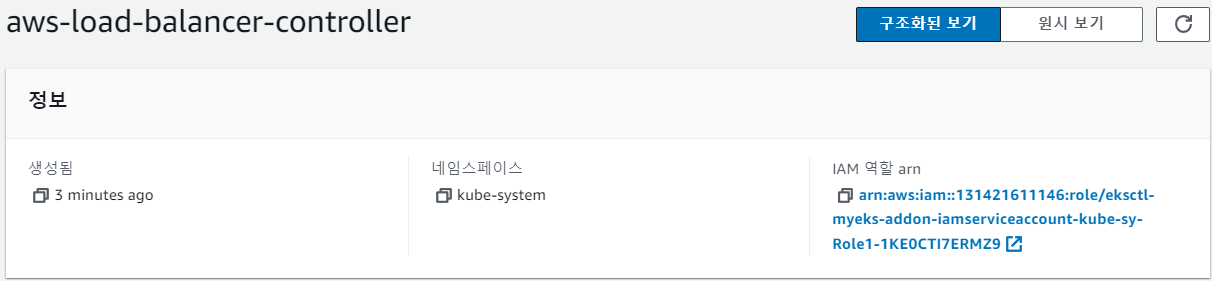

IRSA 생성

# Check the OpenID Connect (OIDC) provider
$aws eks describe-cluster --name $CLUSTER_NAME --query "cluster.identity.oidc.issuer" --output text
$aws iam list-open-id-connect-providers | jq
# Create an IAM policy that allows controlling load balancers
$curl -o iam_policy.json https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.4.7/docs/install/iam_policy.json
$aws iam create-policy --policy-name AWSLoadBalancerControllerIAMPolicy --policy-document file://iam_policy.json
{
    "Policy": {
        "PolicyName": "AWSLoadBalancerControllerIAMPolicy",
        "PolicyId": "ANPAR5GKWUSFGFNV2KAKU",
        "Arn": "arn:aws:iam::131421611146:policy/AWSLoadBalancerControllerIAMPolicy",
        "Path": "/",
        "DefaultVersionId": "v1",
        "AttachmentCount": 0,
        "PermissionsBoundaryUsageCount": 0,
        "IsAttachable": true,
        "CreateDate": "2023-05-03T08:13:04+00:00",
        "UpdateDate": "2023-05-03T08:13:04+00:00"
    }
}
# Check the ARN of the created policy
$aws iam get-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy --query 'Policy.Arn'
"arn:aws:iam::131421611146:policy/AWSLoadBalancerControllerIAMPolicy"
# Deploy the ServiceAccount stack > create the IAM Role and attach the policy
$eksctl create iamserviceaccount --cluster=$CLUSTER_NAME --namespace=kube-system --name=aws-load-balancer-controller \
  --attach-policy-arn=arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy --override-existing-serviceaccounts --approve
# Check the IRSA
$eksctl get iamserviceaccount --cluster $CLUSTER_NAME
NAMESPACE NAME ROLE ARN
kube-system aws-load-balancer-controller arn:aws:iam::131421611146:role/eksctl-myeks-addon-iamserviceaccount-kube-sy-Role1-1KE0CTI7ERMZ9
# Check the service account
$kubectl get serviceaccounts -n kube-system aws-load-balancer-controller -o yaml | yh
apiVersion: v1
kind: ServiceAccount
metadata:
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::131421611146:role/eksctl-myeks-addon-iamserviceaccount-kube-sy-Role1-1KE0CTI7ERMZ9
  creationTimestamp: "2023-05-03T08:16:46Z"
  labels:
    app.kubernetes.io/managed-by: eksctl
  name: aws-load-balancer-controller
  namespace: kube-system
  resourceVersion: "53718"
  uid: 6ee23923-9d4b-46f6-8b9b-0481f8730902

- Deploying the LB Controller

# Helm chart
$helm repo add eks https://aws.github.io/eks-charts
$helm repo update
$helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \
  --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller
NOTES:
AWS Load Balancer controller installed!
# Verify the installation
$kubectl describe deploy -n kube-system aws-load-balancer-controller | grep 'Service Account'
  Service Account: aws-load-balancer-controller
# Check the ClusterRole
$kubectl describe clusterroles.rbac.authorization.k8s.io aws-load-balancer-controller-role
...
PolicyRule:
  Resources Non-Resource URLs Resource Names Verbs
  --------- ----------------- -------------- -----
  targetgroupbindings.elbv2.k8s.aws [] [] [create delete get list patch update watch]
  events [] [] [create patch]
  ingresses [] [] [get list patch update watch]
  services [] [] [get list patch update watch]
  ingresses.extensions [] [] [get list patch update watch]
  services.extensions [] [] [get list patch update watch]
  ingresses.networking.k8s.io [] [] [get list patch update watch]
  services.networking.k8s.io [] [] [get list patch update watch]
  endpoints [] [] [get list watch]
  namespaces [] [] [get list watch]
  nodes [] [] [get list watch]
  pods [] [] [get list watch]
  endpointslices.discovery.k8s.io [] [] [get list watch]
  ingressclassparams.elbv2.k8s.aws [] [] [get list watch]
  ingressclasses.networking.k8s.io [] [] [get list watch]
  ingresses/status [] [] [update patch]
  pods/status [] [] [update patch]
  services/status [] [] [update patch]
  targetgroupbindings/status [] [] [update patch]
  ingresses.elbv2.k8s.aws/status [] [] [update patch]
  pods.elbv2.k8s.aws/status [] [] [update patch]
  services.elbv2.k8s.aws/status [] [] [update patch]
  targetgroupbindings.elbv2.k8s.aws/status [] [] [update patch]
  ingresses.extensions/status [] [] [update patch]
  pods.extensions/status [] [] [update patch]
  services.extensions/status [] [] [update patch]
  targetgroupbindings.extensions/status [] [] [update patch]
  ingresses.networking.k8s.io/status [] [] [update patch]
  pods.networking.k8s.io/status [] [] [update patch]
  services.networking.k8s.io/status [] [] [update patch]
  targetgroupbindings.networking.k8s.io/status [] [] [update patch]

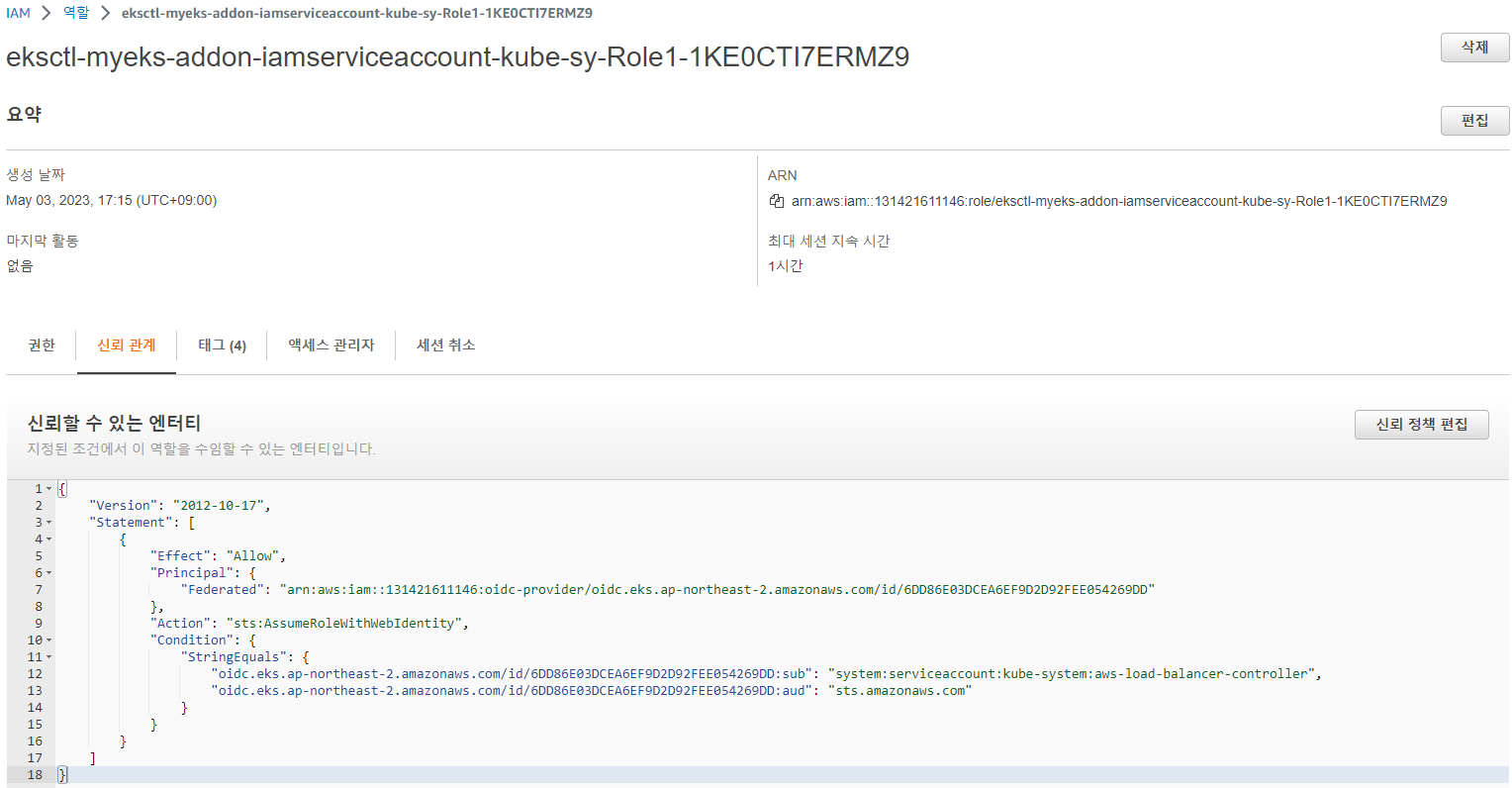

Checking the trust relationship of the created IAM Role
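The trust relationship can also be checked from the CLI. For an IRSA role, it should allow `sts:AssumeRoleWithWebIdentity` from the cluster's OIDC provider, with a condition that limits the subject to `system:serviceaccount:kube-system:aws-load-balancer-controller`. The helper below, a hypothetical name, extracts the role name from the ARN in the service account annotation. The commented lines show how it could be combined with `aws iam get-role`.

```bash
#!/usr/bin/env bash
# Sketch: extract the IAM role name from an IRSA role ARN so the trust policy
# can be fetched with `aws iam get-role`.
role_name_from_arn() {
  local arn=$1
  [[ $arn == arn:aws:iam::*:role/* ]] || { echo "not an IAM role ARN: $arn" >&2; return 1; }
  local name=${arn#*:role/}
  echo "${name##*/}"   # drop an IAM path prefix such as "team/" if present
}

# Usage against a live cluster (needs kubectl and AWS credentials):
#   ROLE_ARN=$(kubectl get sa -n kube-system aws-load-balancer-controller \
#     -o jsonpath='{.metadata.annotations.eks\.amazonaws\.com/role-arn}')
#   aws iam get-role --role-name "$(role_name_from_arn "$ROLE_ARN")" \
#     --query 'Role.AssumeRolePolicyDocument'
role_name_from_arn arn:aws:iam::131421611146:role/eksctl-myeks-addon-iamserviceaccount-kube-sy-Role1-1KE0CTI7ERMZ9
```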

- Deploying a Service/Pods with NLB

# Create the Deployment & Service
$curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/2/echo-service-nlb.yaml
$kubectl apply -f echo-service-nlb.yaml
# Verify the deployment
$kubectl get deploy,pod
NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/deploy-echo 2/2 2 2 2m14s
NAME READY STATUS RESTARTS AGE
pod/deploy-echo-5c4856dfd6-6smgz 1/1 Running 0 2m14s
pod/deploy-echo-5c4856dfd6-q6xff 1/1 Running 0 2m14s
$kubectl get svc,ep,ingressclassparams,targetgroupbindings
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 5h55m
service/svc-nlb-ip-type LoadBalancer 10.100.4.116 k8s-default-svcnlbip-50cc6c7dcc-11362ea8855af0f7.elb.ap-northeast-2.amazonaws.com 80:32225/TCP 29m
NAME ENDPOINTS AGE
endpoints/kubernetes 192.168.1.189:443,192.168.3.249:443 5h55m
endpoints/svc-nlb-ip-type 192.168.2.202:8080,192.168.3.9:8080 29m
NAME GROUP-NAME SCHEME IP-ADDRESS-TYPE AGE
ingressclassparams.elbv2.k8s.aws/alb 8m5s
NAME SERVICE-NAME SERVICE-PORT TARGET-TYPE AGE
targetgroupbinding.elbv2.k8s.aws/k8s-default-svcnlbip-021d9c9ea2 svc-nlb-ip-type 80 ip 7m56s
# Check the state of the deployed load balancer
$aws elbv2 describe-load-balancers --query 'LoadBalancers[*].State.Code' --output text
active
# Check the deployed controller pods
$kubectl get pods -n kube-system
NAME READY STATUS RESTARTS AGE
aws-load-balancer-controller-6fb4f86d9d-fd6xh 1/1 Running 0 12m
aws-load-balancer-controller-6fb4f86d9d-qwt9v 1/1 Running 0 12m
# Look up the DNS name of the deployed load balancer
$kubectl get svc svc-nlb-ip-type -o jsonpath={.status.loadBalancer.ingress[0].hostname} | awk '{ print "Pod Web URL = http://"$1 }'
Pod Web URL = http://k8s-default-svcnlbip-50cc6c7dcc-11362ea8855af0f7.elb.ap-northeast-2.amazonaws.com
# Store the NLB DNS name in a variable
NLB=$(kubectl get svc svc-nlb-ip-type -o jsonpath={.status.loadBalancer.ingress[0].hostname})
# Verify load balancing
$curl -s $NLB
$for i in {1..100}; do curl -s $NLB | grep Hostname ; done | sort | uniq -c | sort -nr
 51 Hostname: deploy-echo-5c4856dfd6-6smgz
 49 Hostname: deploy-echo-5c4856dfd6-q6xff
# Check the pod logs
$kubectl logs -l app=deploy-websrv -f
192.168.1.119 - - [03/May/2023:08:41:25 +0000] "GET / HTTP/1.1" 200 691 "-" "curl/7.88.1"
192.168.2.33 - - [03/May/2023:08:41:25 +0000] "GET / HTTP/1.1" 200 690 "-" "curl/7.88.1"
192.168.3.120 - - [03/May/2023:08:41:25 +0000] "GET / HTTP/1.1" 200 691 "-" "curl/7.88.1"
192.168.1.119 - - [03/May/2023:08:41:25 +0000] "GET / HTTP/1.1" 200 691 "-" "curl/7.88.1"
192.168.2.33 - - [03/May/2023:08:41:25 +0000] "GET / HTTP/1.1" 200 690 "-" "curl/7.88.1"
192.168.3.120 - - [03/May/2023:08:41:25 +0000] "GET / HTTP/1.1" 200 691 "-" "curl/7.88.1"
# Inspect the connection details
$while true; do curl -s --connect-timeout 1 $NLB | egrep 'Hostname|client_address'; echo "----------" ; date "+%Y-%m-%d %H:%M:%S" ; sleep 1; done
Hostname: deploy-echo-5c4856dfd6-q6xff
client_address=192.168.3.120
----------
2023-05-03 17:43:37
Hostname: deploy-echo-5c4856dfd6-q6xff
client_address=192.168.1.119
----------
2023-05-03 17:43:38
Hostname: deploy-echo-5c4856dfd6-6smgz
client_address=192.168.3.120
----------
2023-05-03 17:43:39
Hostname: deploy-echo-5c4856dfd6-6smgz
client_address=192.168.2.33
----------
2023-05-03 17:43:40
Hostname: deploy-echo-5c4856dfd6-q6xff
client_address=192.168.1.119
----------
2023-05-03 17:43:42
Hostname: deploy-echo-5c4856dfd6-q6xff
client_address=192.168.3.120
----------
2023-05-03 17:43:43
Hostname: deploy-echo-5c4856dfd6-q6xff
client_address=192.168.2.33
----------
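The `uniq -c` tally above is easier to judge as percentages. The sketch below reads that tally. The helper name `lb_share` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: turn the `sort | uniq -c` tally from the loop above into percentages.
# Expects lines like "51 Hostname: deploy-echo-5c4856dfd6-6smgz".
lb_share() {
  awk '{ count[$3] += $1; total += $1 }
       END { for (h in count) printf "%s %.0f%%\n", h, 100 * count[h] / total }' | LC_ALL=C sort
}

# Offline demo with the counts from the lab output.
printf '51 Hostname: deploy-echo-5c4856dfd6-6smgz\n49 Hostname: deploy-echo-5c4856dfd6-q6xff\n' | lb_share
```

Live usage: `for i in {1..100}; do curl -s $NLB | grep Hostname; done | sort | uniq -c | lb_share`. For the 51/49 split above, it prints 51% and 49%, so the NLB is spreading requests roughly evenly across the two pods.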

The flow in the lab above can be summarized as follows.

- Create an IAM Policy that allows controlling ELBs.

- In the kube-system namespace, create an IAM Role for Service Account (aws-load-balancer-controller) with that policy attached.

$eksctl create iamserviceaccount --cluster=$CLUSTER_NAME --namespace=kube-system --name=aws-load-balancer-controller \
  --attach-policy-arn=arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy --override-existing-serviceaccounts --approve

- Deploy the aws-load-balancer-controller pods in the same namespace and assign them the created service account. This grants the role to those pods.

# The --set serviceAccount.name option assigns the created SA when the pods are deployed
$helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \
  --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller
$kubectl get pods -n kube-system
NAME READY STATUS RESTARTS AGE
aws-load-balancer-controller-6fb4f86d9d-fd6xh 1/1 Running 0 12m
aws-load-balancer-controller-6fb4f86d9d-qwt9v 1/1 Running 0 12m

- Create a Service that defines the NLB target type (IP or instance) and its attributes. The aws-load-balancer-controller pods then automatically provision an NLB and bind the Service's endpoint IPs to the target group.

apiVersion: v1
kind: Service
metadata:
  name: svc-nlb-ip-type
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
    service.beta.kubernetes.io/aws-load-balancer-healthcheck-port: "8080"
    service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"
spec:
  ports:
    - port: 80
      targetPort: 8080
      protocol: TCP
  type: LoadBalancer
  loadBalancerClass: service.k8s.aws/nlb
  selector:
    app: deploy-websrv

$kubectl get svc,ep,targetgroupbindings
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 5h55m
service/svc-nlb-ip-type LoadBalancer 10.100.4.116 k8s-default-svcnlbip-50cc6c7dcc-11362ea8855af0f7.elb.ap-northeast-2.amazonaws.com 80:32225/TCP 29m
NAME ENDPOINTS AGE
endpoints/kubernetes 192.168.1.189:443,192.168.3.249:443 5h55m
endpoints/svc-nlb-ip-type 192.168.2.202:8080,192.168.3.9:8080 29m
NAME SERVICE-NAME SERVICE-PORT TARGET-TYPE AGE
targetgroupbinding.elbv2.k8s.aws/k8s-default-svcnlbip-021d9c9ea2 svc-nlb-ip-type 80 ip 7m56s

- The NLB's DNS name is exposed externally. The NLB receives the traffic and load-balances it across the pods.

$kubectl get svc svc-nlb-ip-type -o jsonpath={.status.loadBalancer.ingress[0].hostname}
k8s-default-svcnlbip-50cc6c7dcc-11362ea8855af0f7.elb.ap-northeast-2.amazonaws.com
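When comparing the two target types, it can help to render the Service manifest from a template. The function below is a sketch that reproduces the core fields of the svc-nlb-ip-type manifest, with the target type, ports, and selector as parameters. It leaves out the health-check annotation, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: render an NLB Service manifest like svc-nlb-ip-type above.
# Arguments: name, selector app label, target type (ip|instance), port, targetPort.
nlb_service() {
  local name=$1 app=$2 target_type=${3:-ip} port=${4:-80} target_port=${5:-8080}
  case $target_type in
    ip|instance) ;;
    *) echo "target type must be ip or instance" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $name
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: $target_type
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
    service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"
spec:
  type: LoadBalancer
  loadBalancerClass: service.k8s.aws/nlb
  selector:
    app: $app
  ports:
    - port: $port
      targetPort: $target_port
      protocol: TCP
EOF
}

# Preview only; append `| kubectl apply -f -` to create the Service.
nlb_service svc-nlb-ip-type deploy-websrv ip
```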

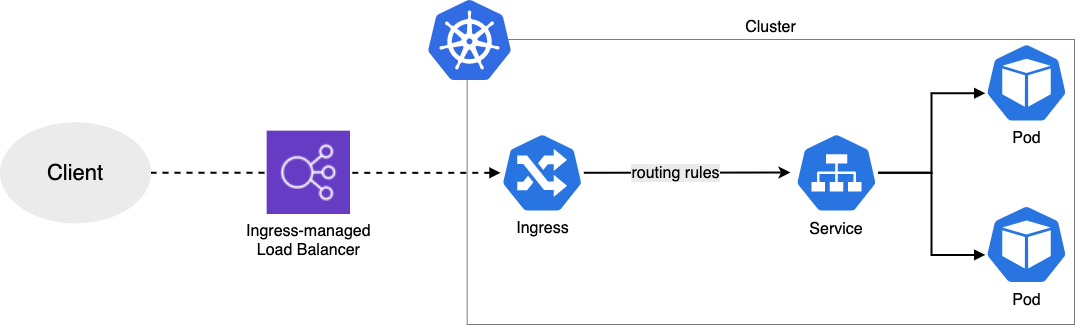

Ingress

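- Acts as a web proxy that exposes Services in the cluster, so that external HTTP/HTTPS traffic can be routed to them.

- Load-balances HTTP/HTTPS traffic.

- Supports SSL/TLS termination: the load balancer manages the SSL certificate and performs the TLS handshake.

- Provides virtual hosting, so one Ingress can serve multiple DNS names.

- As with Services, a LoadBalancer Controller is provided.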
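Virtual hosting comes down to choosing a backend by the request's Host header. This toy model shows only that decision. The hostnames, the `default-backend` name, and the rule table are hypothetical. A real Ingress also matches on paths, and the ALB evaluates the rules, not a script.

```bash
#!/usr/bin/env bash
# Toy model of Ingress name-based virtual hosting: choose a backend Service
# from the request's Host header. All hostnames and backends are hypothetical.
declare -A HOST_RULES=(
  [game.example.com]=service-2048
  [tetris.example.com]=tetris
)
DEFAULT_BACKEND=default-backend

route_host() {
  local host=${1%%:*}   # drop an optional :port
  host=${host,,}        # host matching is case-insensitive
  if [[ -z $host ]]; then
    echo "$DEFAULT_BACKEND"
    return
  fi
  echo "${HOST_RULES[$host]:-$DEFAULT_BACKEND}"
}

route_host Tetris.example.com:443
route_host unknown.example.com
```

The first call prints `tetris`. The second falls through to `default-backend`, because no rule matches that host.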

AWS LB(ALB-IP) Contoller

-

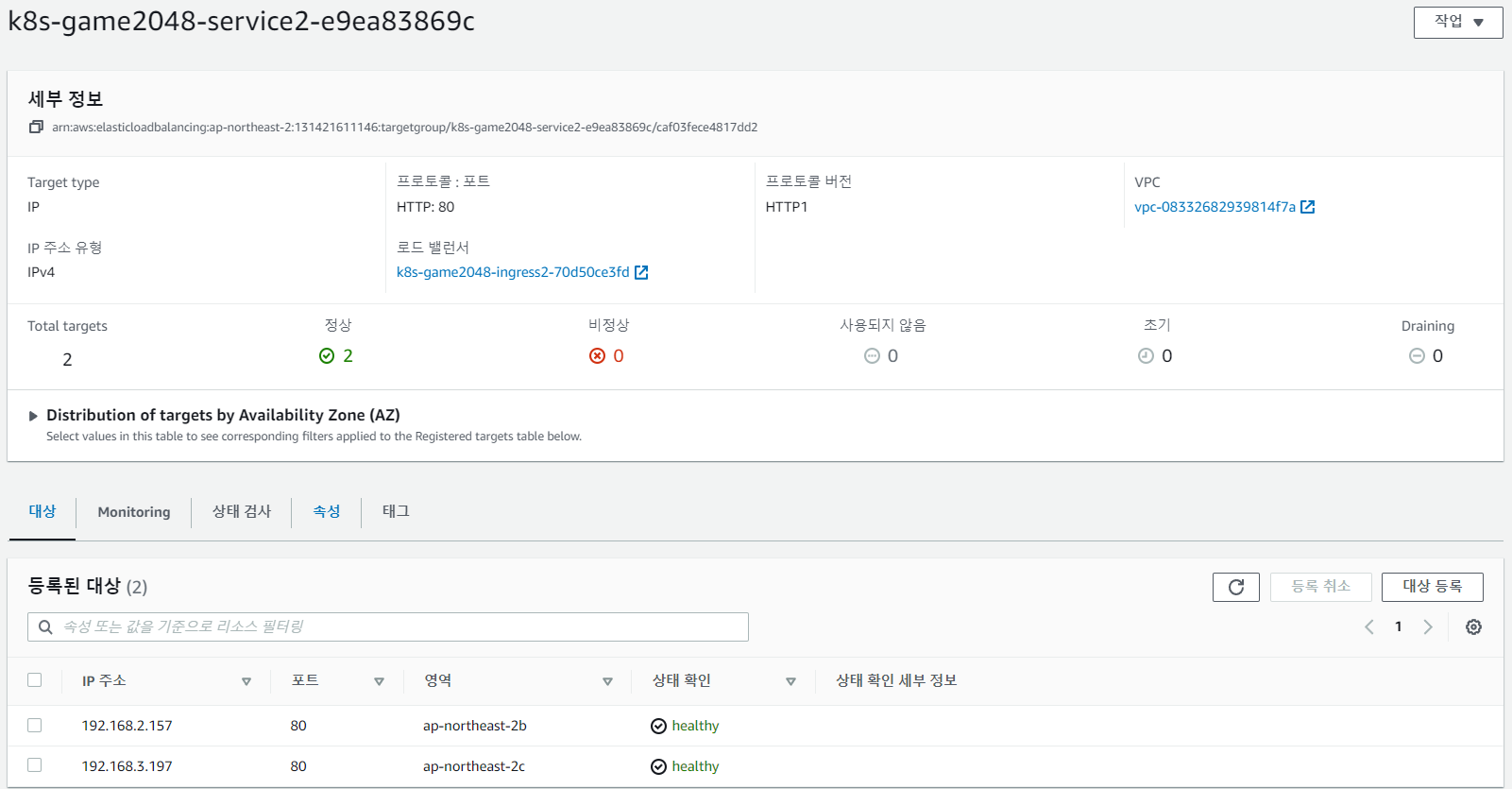

서비스 및 인그레스 배포 진행

# Run this while the aws-load-balancer-controller pods are deployed.
$kubectl get pods -n kube-system
NAME READY STATUS RESTARTS AGE
aws-load-balancer-controller-6fb4f86d9d-m4hvh 1/1 Running 0 29m
aws-load-balancer-controller-6fb4f86d9d-prlf4 1/1 Running 0 29m
# Deploy the test pods, Service, and Ingress
$curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/ingress1.yaml
$kubectl apply -f ingress1.yaml
namespace/game-2048 created
deployment.apps/deployment-2048 created
service/service-2048 created
ingress.networking.k8s.io/ingress-2048 created
# Check the Ingress, Service, and target group binding
$kubectl get ingress,svc,ep,pod,targetgroupbindings -n game-2048
NAME CLASS HOSTS ADDRESS PORTS AGE
ingress.networking.k8s.io/ingress-2048 alb * k8s-game2048-ingress2-70d50ce3fd-1003106901.ap-northeast-2.elb.amazonaws.com 80 75s
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/service-2048 NodePort 10.100.233.198 <none> 80:31544/TCP 75s
NAME ENDPOINTS AGE
endpoints/service-2048 192.168.2.157:80,192.168.3.197:80 75s
NAME READY STATUS RESTARTS AGE
pod/deployment-2048-6bc9fd6bf5-ckb2d 1/1 Running 0 75s
pod/deployment-2048-6bc9fd6bf5-g2vg2 1/1 Running 0 75s
NAME SERVICE-NAME SERVICE-PORT TARGET-TYPE AGE
targetgroupbinding.elbv2.k8s.aws/k8s-game2048-service2-e9ea83869c service-2048 80 ip 72s
# Check the ALB DNS name
$kubectl get ingress -n game-2048 ingress-2048 -o jsonpath={.status.loadBalancer.ingress[0].hostname}
k8s-game2048-ingress2-70d50ce3fd-1003106901.ap-northeast-2.elb.amazonaws.com
# Check the pod IPs
$kubectl get pod -o=custom-columns=NAME:.metadata.name,IP:.status.podIP -n game-2048
NAME IP
deployment-2048-6bc9fd6bf5-ckb2d 192.168.2.157
deployment-2048-6bc9fd6bf5-g2vg2 192.168.3.197

- Once the deployment succeeds, the target group console shows traffic being forwarded directly to the pod IPs.

-

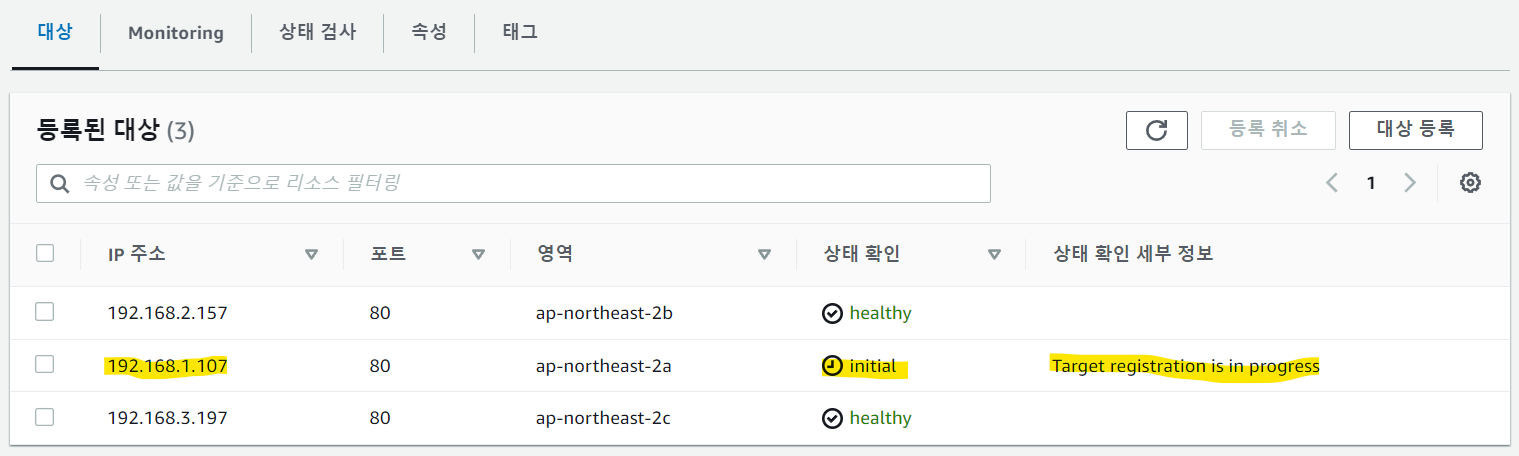

파드를 3개로 증가하면 대상그룹에 자동으로 추가된 파드의 IP를 등록하는 것을 볼 수 있다.

$kubectl scale deployment -n game-2048 deployment-2048 --replicas 3 $kubectl get pod -o=custom-columns=NAME:.metadata.name,IP:.status.podIP -n game-2048 NAME IP deployment-2048-6bc9fd6bf5-ckb2d 192.168.2.157 deployment-2048-6bc9fd6bf5-g2vg2 192.168.3.197 deployment-2048-6bc9fd6bf5-sd77n 192.168.1.107

-

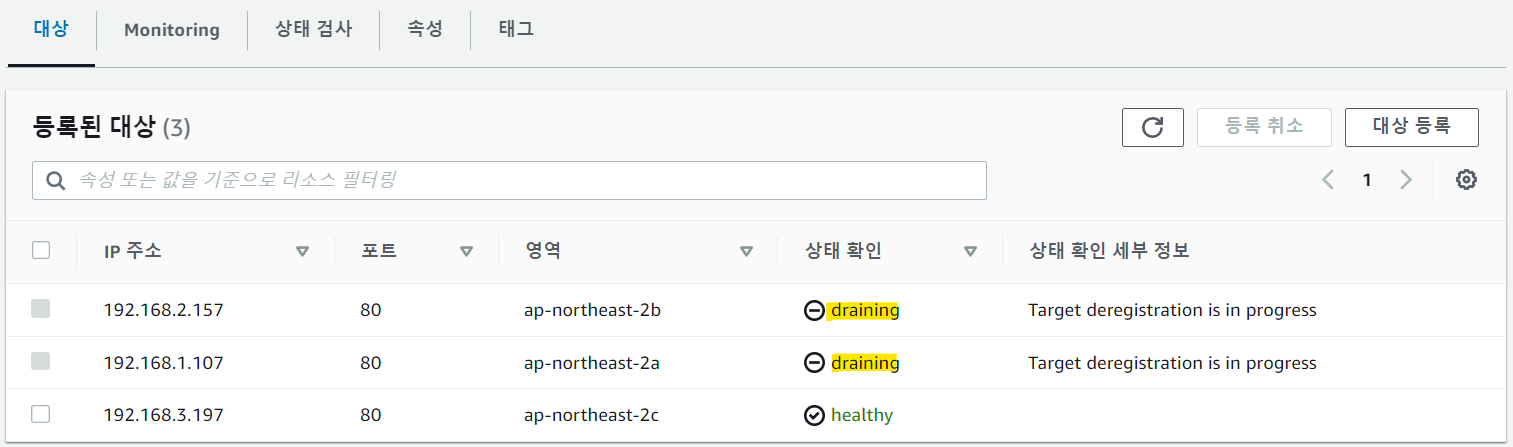

파드를 1개로 감소하면 대상그룹에 자동으로 파드 2개를 draining하는 것을 볼 수 있다.

$kubectl scale deployment -n game-2048 deployment-2048 --replicas 1 $kubectl get pod -o=custom-columns=NAME:.metadata.name,IP:.status.podIP -n game-2048 NAME IP deployment-2048-6bc9fd6bf5-g2vg2 192.168.3.197

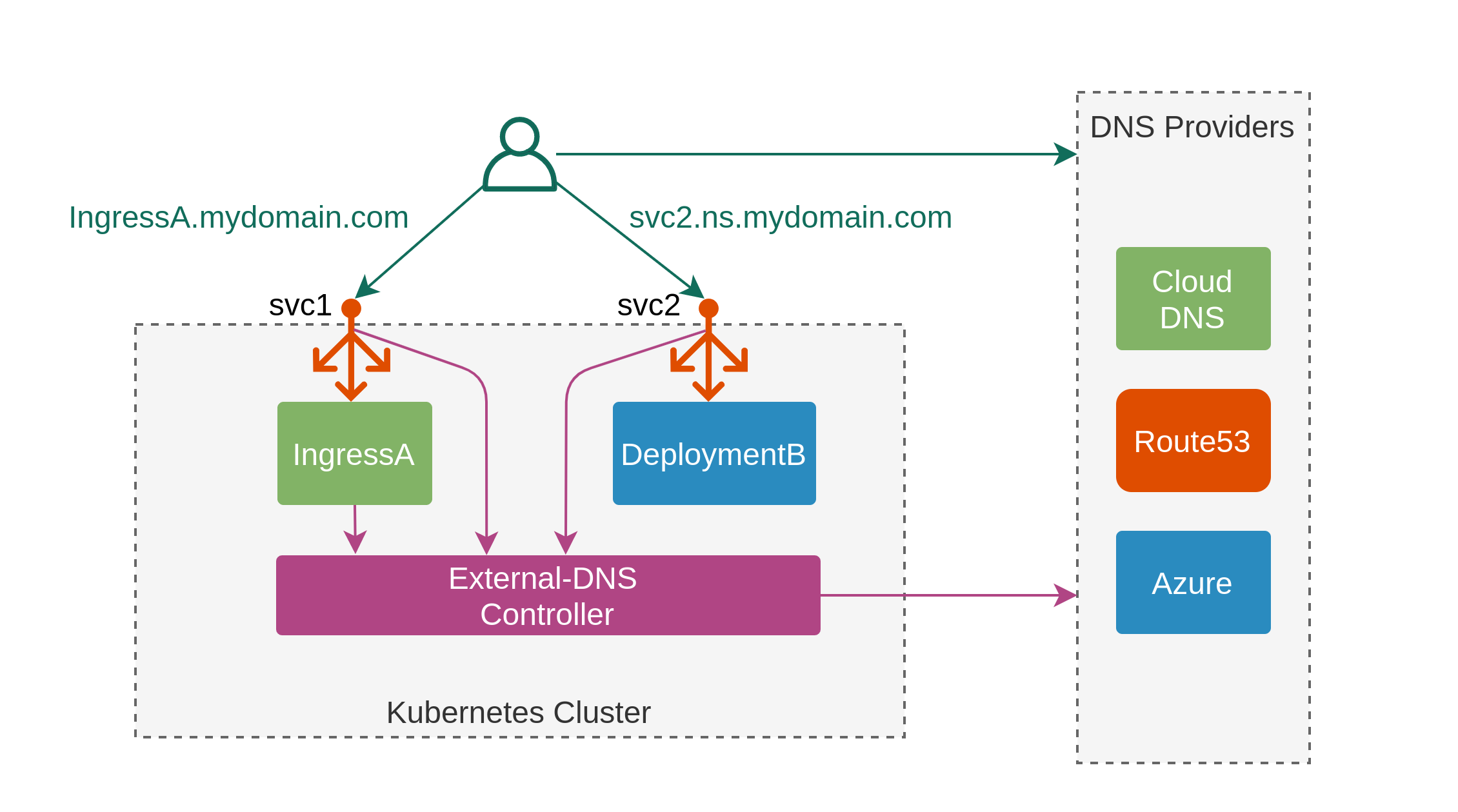
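You can predict what the controller does on scale-out and scale-in by diffing the pod IP sets. The sketch below uses the pod IPs from the two steps above. It only computes the difference and does not call AWS. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: diff the pod IPs before and after scaling to predict which targets
# the controller registers or drains in the target group.
# $1 = IPs before, $2 = IPs after (space-separated).
to_sorted_lines() {
  tr -s ' ' '\n' <<< "$1" | sed '/^$/d' | LC_ALL=C sort -u
}

target_diff() {
  # Only in the "after" set: new targets to register.
  LC_ALL=C comm -13 <(to_sorted_lines "$1") <(to_sorted_lines "$2") | sed 's/^/register /'
  # Only in the "before" set: targets to drain.
  LC_ALL=C comm -23 <(to_sorted_lines "$1") <(to_sorted_lines "$2") | sed 's/^/drain /'
}

# Pod IPs from the scale-out (2 -> 3) and scale-in (3 -> 1) steps above.
target_diff "192.168.2.157 192.168.3.197" "192.168.2.157 192.168.3.197 192.168.1.107"
target_diff "192.168.2.157 192.168.3.197 192.168.1.107" "192.168.3.197"
```

The first call prints `register 192.168.1.107`. The second prints two `drain` lines, for 192.168.1.107 and 192.168.2.157, which matches the two draining targets in the console.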

ExternalDNS

- K8S 서비스 또는 인그레스에 퍼블릭 도메인 서버(AWS-Route53, GCP-CloudDNS, Azure-DNS등)에서 제공하는 도메인을 적용하여 해당 도메인 서버의 레코드를 동적으로 관리할 수 있는 서비스이다.

- 설치 전 준비 작업

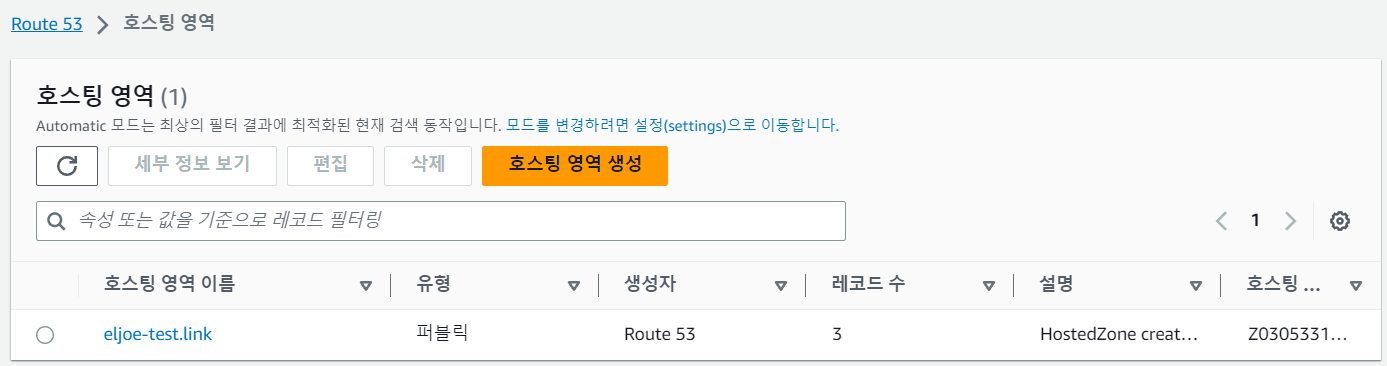

- Route53에서 퍼블릭 도메인을 소유한 상태에서 진행한다.

- LoadBalancer Controller 배포 상태 및 Route53 정보 확인

# 배포한 파드 상태 확인 $kubectl get pods -n kube-system NAME READY STATUS RESTARTS AGE aws-load-balancer-controller-6fb4f86d9d-5qs9g 1/1 Running 0 40s aws-load-balancer-controller-6fb4f86d9d-vcrbl 1/1 Running 0 40s # 도메인 변수 선언 $MyDomain=eljoe-test.link # 도메인 ID 변수 선언 $MyDnzHostedZoneId=`aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text` $echo $MyDomain, $MyDnzHostedZoneId

- Install ExternalDNS

# Deploy ExternalDNS
$curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml
# envsubst: replace 'MyDomain' and 'MyDnzHostedZoneId' in externaldns.yaml with the environment variable values
$MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -
serviceaccount/external-dns created
clusterrole.rbac.authorization.k8s.io/external-dns created
clusterrolebinding.rbac.authorization.k8s.io/external-dns-viewer created
deployment.apps/external-dns created
# Check the deployed pod
$kubectl get pod -l app.kubernetes.io/name=external-dns -n kube-system
NAME READY STATUS RESTARTS AGE
external-dns-7cbbf47bd4-hm4mm 1/1 Running 0 37s

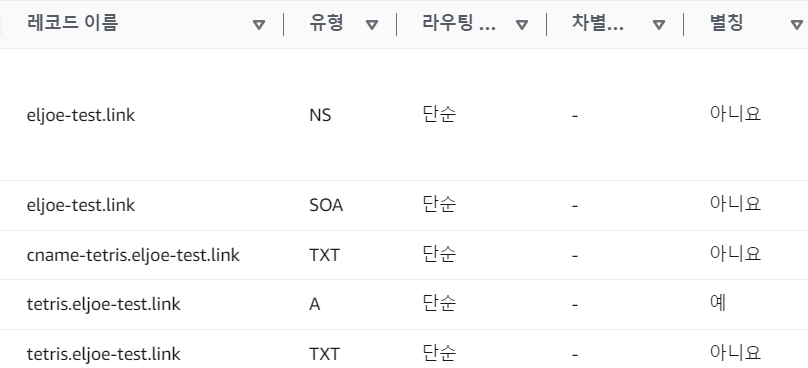

Configuring Service (NLB IP) + ExternalDNS

- Deploy a test Deployment and Service

# Deploy the Deployment and Service
$cat <<EOF | kubectl create -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tetris
  labels:
    app: tetris
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tetris
  template:
    metadata:
      labels:
        app: tetris
    spec:
      containers:
      - name: tetris
        image: bsord/tetris
---
apiVersion: v1
kind: Service
metadata:
  name: tetris
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
    service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"
    service.beta.kubernetes.io/aws-load-balancer-backend-protocol: "http"
    #service.beta.kubernetes.io/aws-load-balancer-healthcheck-port: "80"
spec:
  selector:
    app: tetris
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  type: LoadBalancer
  loadBalancerClass: service.k8s.aws/nlb
EOF
# Check the Deployment, Service, and Endpoint
$kubectl get deploy,svc,ep tetris
NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/tetris 1/1 1 1 39s
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/tetris LoadBalancer 10.100.42.102 k8s-default-tetris-a98a91fe8d-238b1d0f18f9d7d7.elb.ap-northeast-2.amazonaws.com 80:30786/TCP 39s
NAME ENDPOINTS AGE
endpoints/tetris 192.168.3.40:80 39s
# Attach the public domain to the Service (NLB IP) through ExternalDNS
$kubectl annotate service tetris "external-dns.alpha.kubernetes.io/hostname=tetris.$MyDomain"
service/tetris annotated
# Check the A record
$aws route53 list-resource-record-sets --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'A'].Name" | jq .[]
"tetris.eljoe-test.link."
$dig +short tetris.$MyDomain
3.37.58.144
3.36.187.221
3.34.163.58

- Confirm that the Route53 A record was registered automatically

- Access the web page
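This setup appears to restrict ExternalDNS to one hosted zone, as the MyDomain / MyDnzHostedZoneId substitution suggests. Under that assumption, a hostname annotation outside the zone will not produce a record. The hypothetical pre-check below catches that mistake. It also prints the name in the trailing-dot form that Route53 returned above.

```bash
#!/usr/bin/env bash
# Sketch: check that the hostname in an external-dns annotation belongs to the
# hosted zone and print the record name in Route53's fully qualified form.
zone_record_name() {
  local host=${1%.} zone=${2%.}   # accept names with or without a trailing dot
  host=${host,,}; zone=${zone,,}  # DNS names are case-insensitive
  if [[ $host == "$zone" || $host == *".$zone" ]]; then
    echo "$host."
  else
    echo "$host is not inside zone $zone" >&2
    return 1
  fi
}

zone_record_name "tetris.eljoe-test.link" "eljoe-test.link"
```

The demo prints `tetris.eljoe-test.link.`, the same name that `list-resource-record-sets` returned. A name like `tetris.xeljoe-test.link` is rejected, because the suffix match requires a dot before the zone name.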