Week 3

This page is part of an AWS EKS Workshop study group. Each week it records the hands-on results and my thoughts on them.

Keyword

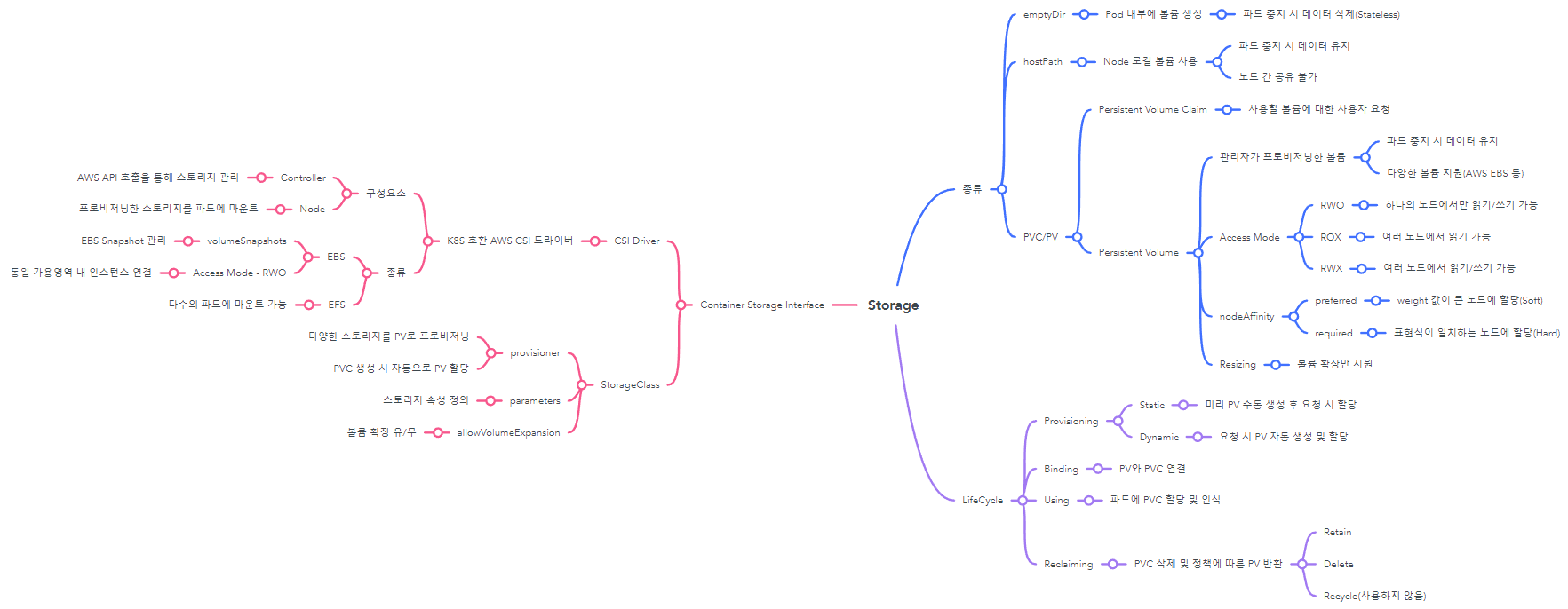

- The mind map above summarizes the Week 3 material.

Volumes

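- emptyDir: a volume that is created when the Pod is placed on a node and deleted when the Pod is removed (stateless). It survives container restarts within the same Pod.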

```bash
# Create the test Pod
$curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/date-busybox-pod.yaml
$kubectl apply -f date-busybox-pod.yaml

# Check the test file
$kubectl exec busybox -- tail -f /home/pod-out.txt
Wed May 10 05:26:47 UTC 2023
Wed May 10 05:26:57 UTC 2023

# Delete and recreate the test Pod
# The contents are different: the earlier timestamps are gone
$kubectl delete pod busybox
$kubectl apply -f date-busybox-pod.yaml
$kubectl exec busybox -- tail -f /home/pod-out.txt
Wed May 10 05:28:50 UTC 2023
Wed May 10 05:29:00 UTC 2023

$kubectl get pod -o yaml | yh
...
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-z4c98
...
  volumes:
  - name: kube-api-access-z4c98
```
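Judging from the `kubectl get pod -o yaml` excerpt, which lists only the projected service-account token volume, the `date-busybox-pod.yaml` test Pod appears to write to the container's own filesystem rather than to an emptyDir; the observed result (data gone after recreation) is the same. To see an actual emptyDir, here is a minimal sketch that writes a two-container Pod manifest. The Pod name `busybox-emptydir` and the file name are hypothetical. Both containers mount the same emptyDir, so the reader sees what the writer appends, and everything is deleted together with the Pod.

```bash
#!/usr/bin/env bash
# Hypothetical emptyDir variant of the busybox test above (names are illustrative).
cat <<EOT > emptydir-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: busybox-emptydir
spec:
  terminationGracePeriodSeconds: 3
  containers:
    - name: writer
      image: busybox
      command: ["/bin/sh", "-c", "while true; do date >> /home/pod-out.txt; sleep 10; done"]
      volumeMounts:
        - name: scratch
          mountPath: /home
    - name: reader              # second container sees the same files
      image: busybox
      command: ["/bin/sh", "-c", "sleep 3600"]
      volumeMounts:
        - name: scratch
          mountPath: /scratch
  volumes:
    - name: scratch
      emptyDir: {}              # created with the Pod, deleted with the Pod
EOT
```

After `kubectl apply -f emptydir-pod.yaml`, running `kubectl exec busybox-emptydir -c reader -- tail /scratch/pod-out.txt` should show the writer's timestamps. Unlike data in a container's writable layer, an emptyDir also survives a container restart inside the same Pod.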

- hostPath: the Pod uses a directory on the node's local disk.

```bash
# local-path-provisioner: a provisioner (StorageClass local-path) that creates hostPath-backed PVs for PVCs
$curl -s -O https://raw.githubusercontent.com/rancher/local-path-provisioner/master/deploy/local-path-storage.yaml
$kubectl apply -f local-path-storage.yaml

# Check the provisioner Pod
$kubectl get pod -n local-path-storage -owide
NAME                                      READY   STATUS    RESTARTS   AGE     IP              NODE                                               NOMINATED NODE   READINESS GATES
local-path-provisioner-759f6bd7c9-6vc6c   1/1     Running   0          3m25s   192.168.3.252   ip-192-168-3-225.ap-northeast-2.compute.internal   <none>           <none>

# Check the volume path on the nodes
$kubectl get configmap/local-path-config -n local-path-storage -o yaml | yh
apiVersion: v1
data:
  config.json: |-
    {
      "nodePathMap":[
        {
          "node":"DEFAULT_PATH_FOR_NON_LISTED_NODES",
          "paths":["/opt/local-path-provisioner"]
        }
      ]
    }
...

# Check the StorageClass
$kubectl get sc local-path -o yaml | yh
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{},"name":"local-path"},"provisioner":"rancher.io/local-path","reclaimPolicy":"Delete","volumeBindingMode":"WaitForFirstConsumer"}
  creationTimestamp: "2023-05-10T05:44:19Z"
  name: local-path
  resourceVersion: "8993"
  uid: c5f65e26-99e2-4210-9050-c9698363e2a1
provisioner: rancher.io/local-path
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer

# Deploy the PVC
$curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/localpath1.yaml
$kubectl apply -f localpath1.yaml

# Create the Pod
$curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/localpath2.yaml
$kubectl apply -f localpath2.yaml

# Check the Pod, PV and PVC
$kubectl get pod,pv,pvc -o yaml | yh
# Pod
- apiVersion: v1
  kind: Pod
  ...
  spec:
    ...
      volumeMounts:
      - mountPath: /data
        name: persistent-storage
      - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
        name: kube-api-access-wwnfz
        readOnly: true
    ...
    volumes:
    - name: persistent-storage
      persistentVolumeClaim:
        claimName: localpath-claim
# PV
- apiVersion: v1
  kind: PersistentVolume
  ...
  spec:
    accessModes:
    - ReadWriteOnce
    capacity:
      storage: 1Gi
    ...
    hostPath:
      path: /opt/local-path-provisioner/pvc-15b075e5-62ae-4c8c-a989-08b6516d18d9_default_localpath-claim
      type: DirectoryOrCreate
    nodeAffinity:
      required:
        nodeSelectorTerms:
        - matchExpressions:
          - key: kubernetes.io/hostname
            operator: In
            values:
            - ip-192-168-1-236.ap-northeast-2.compute.internal
    persistentVolumeReclaimPolicy: Delete
    storageClassName: local-path
    volumeMode: Filesystem
  ...
# PVC
- apiVersion: v1
  kind: PersistentVolumeClaim
  ...
  spec:
    accessModes:
    - ReadWriteOnce
    resources:
      requests:
        storage: 1Gi
    storageClassName: local-path
    volumeMode: Filesystem
    volumeName: pvc-15b075e5-62ae-4c8c-a989-08b6516d18d9
  ...

# Check the test file
$kubectl exec -it app -- tail -f /data/out.txt
Wed May 10 06:10:18 UTC 2023
```

- Checking the PV's nodeAffinity: the PV is pinned to one specific node, so its data cannot be shared between nodes.

```yaml
nodeAffinity:
  required:
    nodeSelectorTerms:
    - matchExpressions:
      - key: kubernetes.io/hostname
        operator: In
        values:
        # The PV is pinned to node 1
        - ip-192-168-1-236.ap-northeast-2.compute.internal
```

- nodeAffinity.required (hard rule): only nodes that match `matchExpressions` are eligible. A PV's `nodeAffinity` supports only this `required` form; it has no `preferred`/`weight` field.

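- nodeAffinity.preferred (soft rule): exists for Pod node affinity (`preferredDuringSchedulingIgnoredDuringExecution`). The scheduler adds the `weight` of each matching term to a node's score and favors the highest-scoring node, but it can still place the Pod on another node.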

- Check that the data is kept after deleting and recreating the Pod

```bash
# Check the mount on node 1
$ssh ec2-user@$N1 tree /opt/local-path-provisioner
/opt/local-path-provisioner
└── pvc-15b075e5-62ae-4c8c-a989-08b6516d18d9_default_localpath-claim
    └── out.txt

# Delete and recreate the Pod, then check that the data is still there
$kubectl delete pod app
$kubectl apply -f localpath2.yaml
$kubectl exec -it app -- tail -f /data/out.txt
Wed May 10 06:15:43 UTC 2023
...
Wed May 10 06:17:13 UTC 2023
```



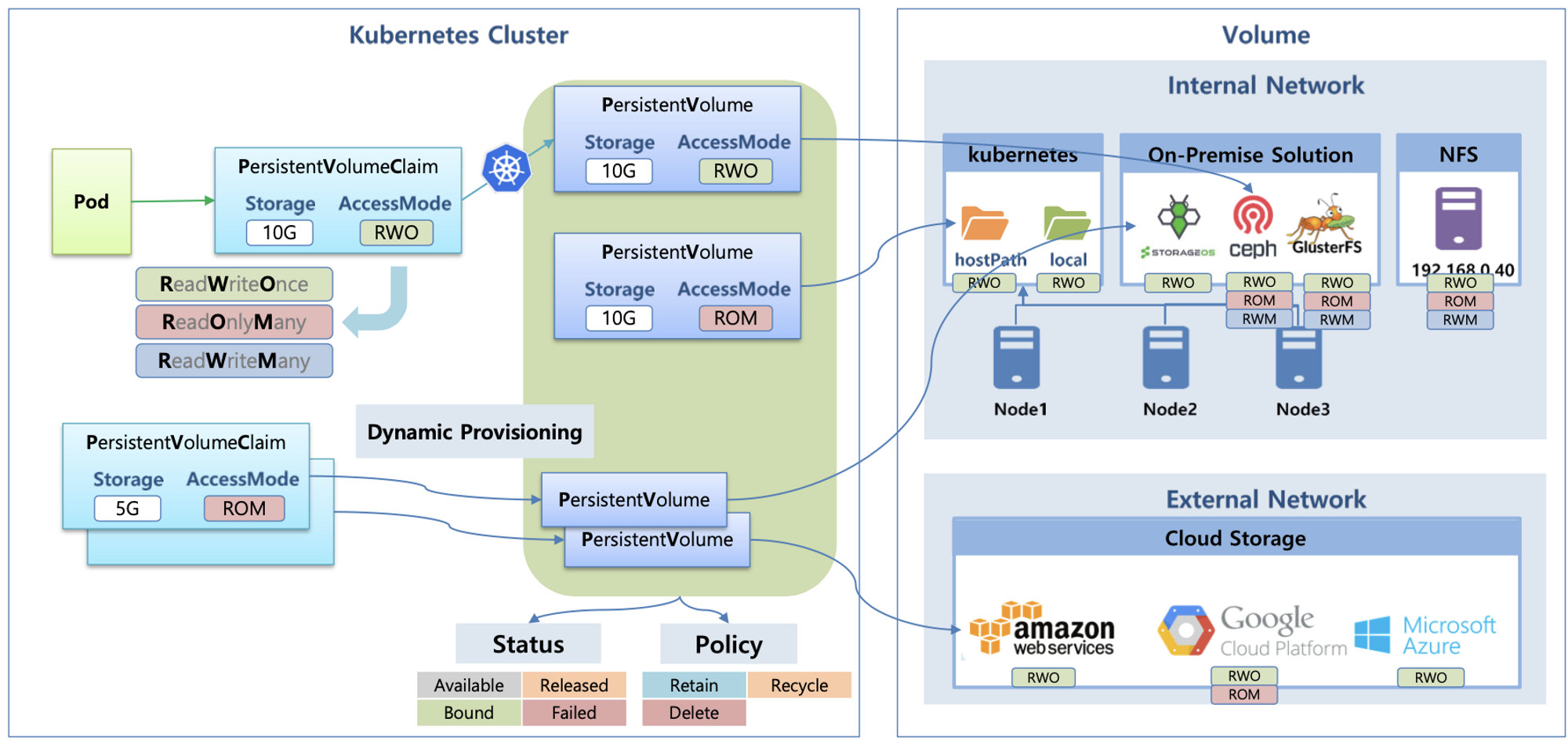
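The local-path provisioner wraps hostPath in a PV/PVC, and the PV's `nodeAffinity` keeps consumers on the node that holds the data. With a raw hostPath volume declared directly in the Pod there is no PV to do that, so a Pod `nodeAffinity` rule has to. A minimal sketch; the Pod name `hostpath-app` and the `/opt/hostpath-demo` directory are hypothetical, and `NODE` defaults to the node seen in the local-path PV above.

```bash
#!/usr/bin/env bash
# Hypothetical raw hostPath Pod (no PV/PVC). Override NODE for your cluster.
NODE="${NODE:-ip-192-168-1-236.ap-northeast-2.compute.internal}"

cat <<EOT > hostpath-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: hostpath-app
spec:
  affinity:
    nodeAffinity:
      # Hard rule: the data only exists on this node, so always schedule here.
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: kubernetes.io/hostname
                operator: In
                values: ["${NODE}"]
  containers:
    - name: app
      image: busybox
      command: ["/bin/sh", "-c", "while true; do date >> /data/out.txt; sleep 5; done"]
      volumeMounts:
        - name: host-data
          mountPath: /data
  volumes:
    - name: host-data
      hostPath:
        path: /opt/hostpath-demo   # directory on the node's own disk
        type: DirectoryOrCreate
EOT
```

Using a `preferredDuringSchedulingIgnoredDuringExecution` term (with a `weight`) instead would turn this into a preference only, so a recreated Pod could land on another node and find an empty directory there.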

PV/PVC

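- PersistentVolumeClaim (PVC): a user's request for storage (size, access mode, StorageClass)

- PersistentVolume (PV): a piece of storage in the cluster, provisioned in advance by an administrator or dynamically through a StorageClass

- Supports many volume types, e.g. AWS EBS

- Lifecycle: provisioning

- Static: the administrator creates PVs in advance and they are bound on request (see "EKS Persistent Volumes for Instance Store" below)

- Dynamic: a PV is created and bound automatically on request (see "EBS CSI Driver" below)

- Access modes

- RWO (ReadWriteOnce): read/write from a single node only

- ROX (ReadOnlyMany): read-only from many nodes

- RWX (ReadWriteMany): read/write from many nodes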

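Static provisioning can be illustrated with a pair of manifests: the administrator writes the PV first, and the user's PVC binds to it when the storage class name, access mode and size are compatible. A minimal sketch; the names `manual-pv`/`manual-claim`, the `manual` class name and the hostPath backing are hypothetical. No StorageClass object is needed for this kind of static binding.

```bash
#!/usr/bin/env bash
# Hypothetical static-provisioning example: admin-created PV + user PVC.
cat <<EOT > static-pv-pvc.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: manual-pv
spec:
  storageClassName: manual      # matched by the PVC below; no provisioner runs
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce             # RWO; other values: ReadOnlyMany, ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  hostPath:
    path: /mnt/manual-pv
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: manual-claim
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce             # the PV must offer this mode to bind
  resources:
    requests:
      storage: 1Gi              # must not exceed the PV's capacity
EOT
```

After `kubectl apply -f static-pv-pvc.yaml`, `kubectl get pv,pvc` should show `manual-claim` Bound to `manual-pv`.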

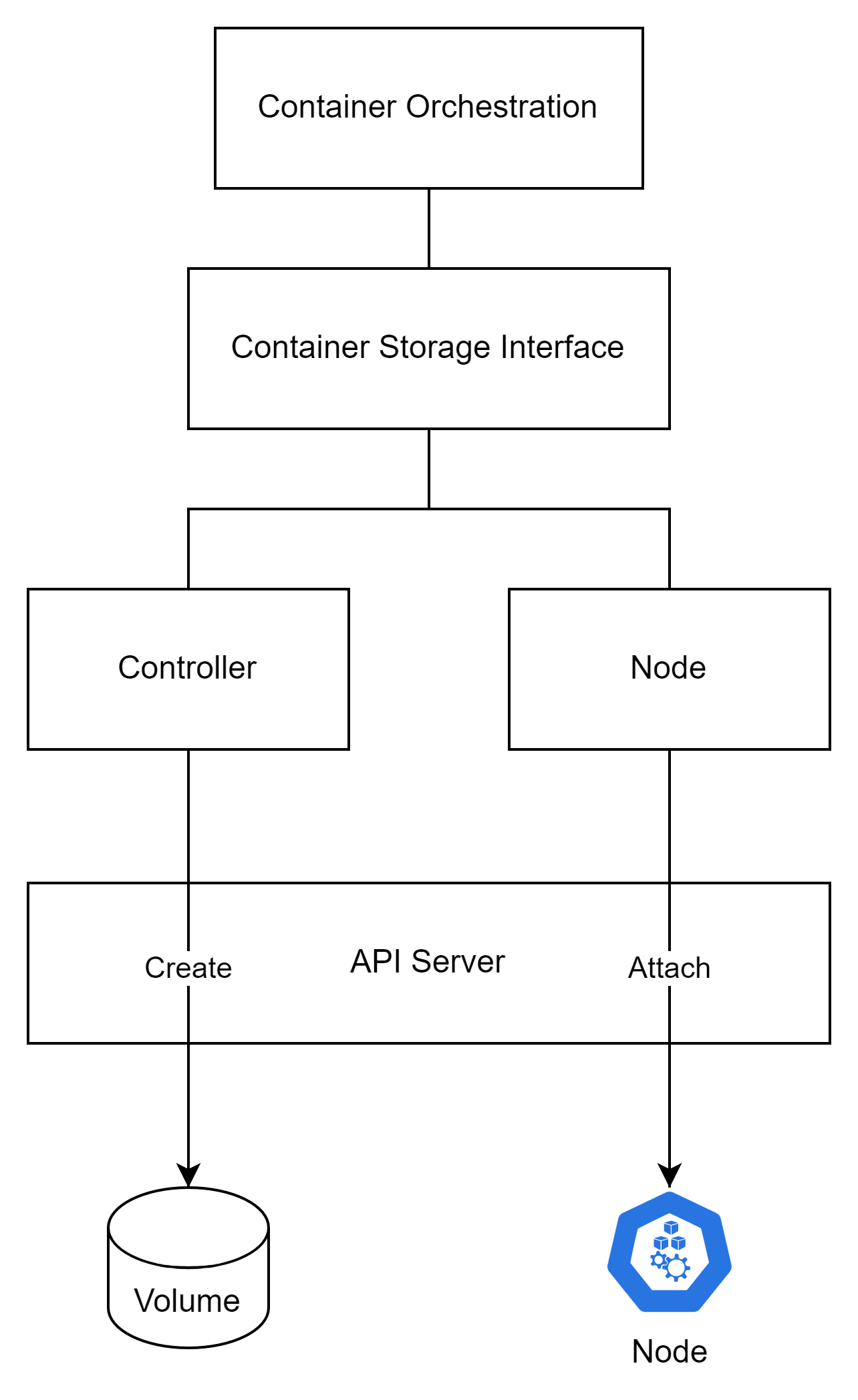

Container Storage Interface

…vendors wanting to add support for their storage system to Kubernetes (or even fix a bug in an existing volume plugin) were forced to align with the Kubernetes release process. In addition, third-party storage code caused reliability and security issues in core Kubernetes binaries and the code was often difficult (and in some cases impossible) for Kubernetes maintainers to test and maintain. - Link

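- Before CSI, storage support in container orchestrators such as Kubernetes lived in vendor-specific plugins inside the core code. This could cause security problems and made testing and maintenance difficult. CSI (Container Storage Interface) was introduced as a standardized interface, so each vendor can provision storage through its own driver without touching Kubernetes internals.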

- AWS also provides CSI drivers, including the following.

EBS CSI Driver

- Install the EBS CSI Driver, then create a StorageClass and a PV/PVC

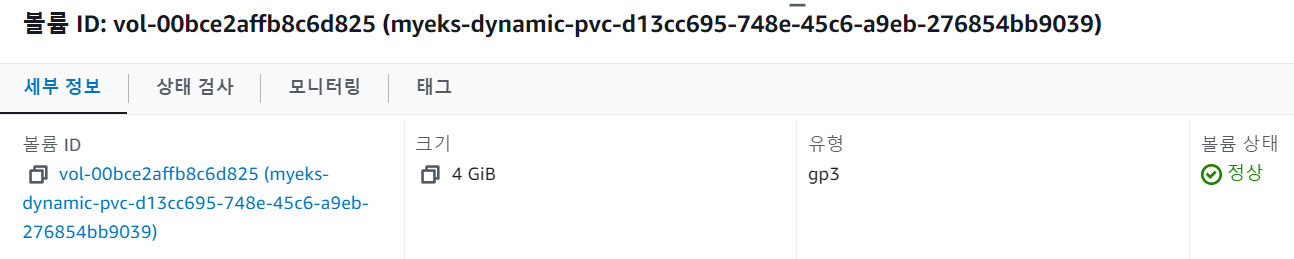

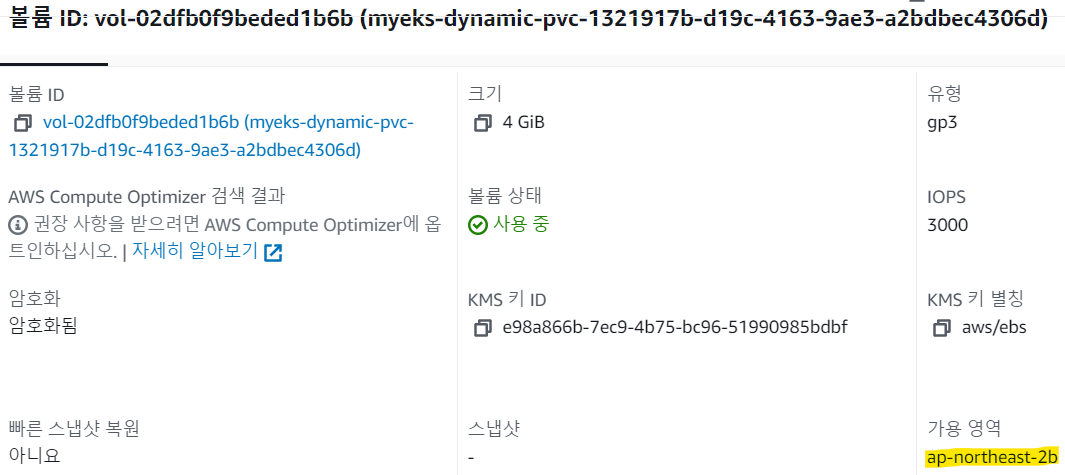

```bash
# Check the permissions in the AWS managed policy (AmazonEBSCSIDriverPolicy)
# - It is an AWS managed policy, so the ARN contains "aws" instead of an account ID.
$aws iam get-policy-version --policy-arn arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy --version-id v2
...
{
    "Effect": "Allow",
    "Action": [
        "ec2:CreateSnapshot",
        "ec2:AttachVolume",
        "ec2:DetachVolume",
        "ec2:ModifyVolume",
        "ec2:DescribeAvailabilityZones",
        "ec2:DescribeInstances",
        "ec2:DescribeSnapshots",
        "ec2:DescribeTags",
        "ec2:DescribeVolumes",
        "ec2:DescribeVolumesModifications"
    ],
    "Resource": "*"
},
...

# Create an IRSA (IAM role for the service account) with the AWS managed policy attached
$eksctl create iamserviceaccount \
  --name ebs-csi-controller-sa \
  --namespace kube-system \
  --cluster ${CLUSTER_NAME} \
  --attach-policy-arn arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy \
  --approve \
  --role-only \
  --role-name AmazonEKS_EBS_CSI_DriverRole

# Check the IRSA
$eksctl get iamserviceaccount --cluster myeks
NAMESPACE     NAME                    ROLE ARN
kube-system   ebs-csi-controller-sa   arn:aws:iam::131421611146:role/AmazonEKS_EBS_CSI_DriverRole

# Add the EBS CSI Driver add-on
$eksctl create addon --name aws-ebs-csi-driver --cluster ${CLUSTER_NAME} --service-account-role-arn arn:aws:iam::${ACCOUNT_ID}:role/AmazonEKS_EBS_CSI_DriverRole --force

# Check the controller and node Pods
$kubectl get pod -n kube-system -l app.kubernetes.io/component=csi-driver
NAME                                  READY   STATUS    RESTARTS   AGE
ebs-csi-controller-67658f895c-2lzq9   6/6     Running   0          95s
ebs-csi-controller-67658f895c-nd2xl   6/6     Running   0          95s
ebs-csi-node-kjqsj                    3/3     Running   0          95s
ebs-csi-node-t4lmn                    3/3     Running   0          95s
ebs-csi-node-zlwbn                    3/3     Running   0          95s

# Check the 6 containers in the ebs-csi-controller Pod
$kubectl get pod -n kube-system -l app=ebs-csi-controller -o jsonpath='{.items[0].spec.containers[*].name}' ; echo
ebs-plugin csi-provisioner csi-attacher csi-snapshotter csi-resizer liveness-probe

# Create a gp3 StorageClass
$cat <<EOT > gp3-sc.yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: gp3
allowVolumeExpansion: true
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer
parameters:
  type: gp3
  allowAutoIOPSPerGBIncrease: 'true'
  encrypted: 'true'
EOT
$kubectl apply -f gp3-sc.yaml

# Check the StorageClass parameters
$kubectl describe sc gp3 | grep Parameters
Parameters:  allowAutoIOPSPerGBIncrease=true,encrypted=true,type=gp3

# Create a PVC that points to the gp3 StorageClass
$cat <<EOT > awsebs-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi
  storageClassName: gp3
EOT
$kubectl apply -f awsebs-pvc.yaml

# Create the test Pod
$cat <<EOT > awsebs-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  terminationGracePeriodSeconds: 3
  containers:
  - name: app
    image: centos
    command: ["/bin/sh"]
    args: ["-c", "while true; do echo \$(date -u) >> /data/out.txt; sleep 5; done"]
    volumeMounts:
    - name: persistent-storage
      mountPath: /data
  volumes:
  - name: persistent-storage
    persistentVolumeClaim:
      claimName: ebs-claim
EOT
$kubectl apply -f awsebs-pod.yaml

# Check the status of each resource
# (the PVC is older than the PV: with WaitForFirstConsumer the PV is only provisioned once the Pod is scheduled)
$kubectl get pvc,pv,VolumeAttachment,pod
NAME                              STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/ebs-claim   Bound    pvc-d13cc695-748e-45c6-a9eb-276854bb9039   4Gi        RWO            gp3            4m55s

NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM               STORAGECLASS   REASON   AGE
persistentvolume/pvc-d13cc695-748e-45c6-a9eb-276854bb9039   4Gi        RWO            Delete           Bound    default/ebs-claim   gp3                     2m6s

NAME                                                                                                   ATTACHER          PV                                         NODE                                               ATTACHED   AGE
volumeattachment.storage.k8s.io/csi-38c5c7fa05838038f41a253f12ab872d0b4709e7ad45e6636891151c90ae7d6a   ebs.csi.aws.com   pvc-d13cc695-748e-45c6-a9eb-276854bb9039   ip-192-168-3-225.ap-northeast-2.compute.internal   true       2m6s

NAME      READY   STATUS    RESTARTS   AGE
pod/app   1/1     Running   0          2m10s

# The default fsType is ext4
$kubectl exec -it app -- sh -c 'df -hT --type=ext4'
Filesystem     Type  Size  Used Avail Use% Mounted on
/dev/nvme1n1   ext4  3.8G   44K  3.8G   1% /data

# Check the nodeAffinity
$kubectl get pv -o yaml | yh
...
    nodeAffinity:
      required:
        nodeSelectorTerms:
        - matchExpressions:
          - key: topology.ebs.csi.aws.com/zone
            operator: In
            values:
            - ap-northeast-2c
...
# Because of the nodeAffinity, the EBS volume is mounted on the third node, which runs in ap-northeast-2c
$kubectl get pod -o yaml | yh | grep nodeName
    nodeName: ip-192-168-3-225.ap-northeast-2.compute.internal
```
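The PVC → PV → VolumeAttachment chain in the output above can be followed with a small helper. This is a hypothetical sketch (`trace_pvc` is not part of any tool) that only uses `kubectl get ... -o jsonpath` queries, and it assumes the EBS CSI driver stores the EBS volume ID in the PV's `spec.csi.volumeHandle`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: PVC -> PV -> EBS volume ID -> node of the VolumeAttachment.
# Assumes kubectl is configured for the cluster; usage: trace_pvc ebs-claim [namespace]
trace_pvc() {
  local pvc="$1" ns="${2:-default}" pv vol node
  pv=$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.volumeName}')
  if [ -z "$pv" ]; then
    # With volumeBindingMode: WaitForFirstConsumer the PVC stays Pending
    # until a Pod that uses it has been scheduled.
    echo "PVC $ns/$pvc is not bound yet" >&2
    return 1
  fi
  # Assumption: for ebs.csi.aws.com the volumeHandle is the EBS volume ID (vol-...).
  vol=$(kubectl get pv "$pv" -o jsonpath='{.spec.csi.volumeHandle}')
  node=$(kubectl get volumeattachment \
    -o jsonpath="{.items[?(@.spec.source.persistentVolumeName==\"$pv\")].spec.nodeName}")
  echo "PVC=$ns/$pvc PV=$pv EBS=$vol NODE=${node:-none}"
}
```

In the run above, `trace_pvc ebs-claim` would be expected to report `pvc-d13cc695-...` and node `ip-192-168-3-225...`; the `vol-...` ID can then be looked up in the EC2 console.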

- The exercise above shows the following:

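- The PV's access mode is ReadWriteOnce because an EBS volume can normally be attached to only one EC2 instance at a time (Multi-Attach on io1/io2 aside). Separately, an EBS volume can only be attached to instances in its own Availability Zone, which is why the PV's nodeAffinity pins it to ap-northeast-2c.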

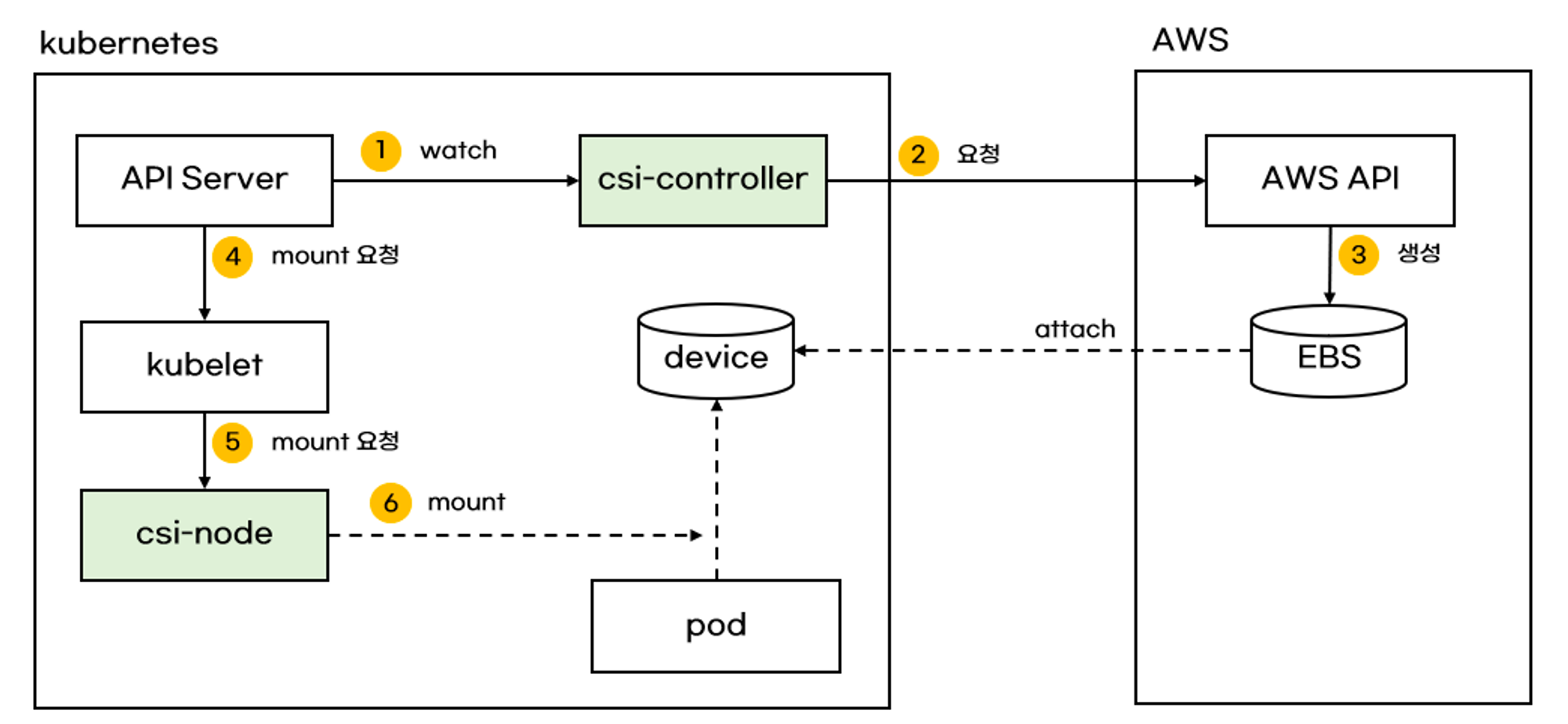

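- Adding the CSI Driver add-on deploys csi-controller and csi-node Pods.

- csi-controller: manages storage through the AWS API (a Deployment; two replicas in the output above).

- csi-node: runs on every node as a DaemonSet and mounts the provisioned storage into Pods.

- The ebs-csi-controller Pod runs one main container and five sidecar containers.

- ebs-plugin: the main container, i.e. the EBS CSI driver itself. It serves CSI calls from the sidecars over a local socket and calls the EC2 API to create, attach, snapshot and resize volumes.

- csi-provisioner: watches PVCs and, based on the referenced StorageClass, performs dynamic provisioning so that an EBS volume is created automatically.

- csi-attacher: watches VolumeAttachment objects and attaches the EBS volume to, or detaches it from, the node's EC2 instance.

- csi-snapshotter: creates and deletes EBS snapshots for VolumeSnapshot objects. Restoring a volume from a snapshot goes through csi-provisioner, via the PVC's dataSource.

- csi-resizer: expands the EBS volume when the PVC's requested size is increased.

- liveness-probe: exposes a health endpoint that checks the CSI driver, so the Pod's liveness probe can detect an unhealthy driver.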

- A StorageClass defines which type of storage to create. When a PVC is created, a PV is provisioned through the following steps.

- The PVC creation request reaches the Kubernetes API server.

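```yaml
# The PVC points to a StorageClass via storageClassName, not to a PV
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi
  storageClassName: gp3
```

- The csi-controller (its csi-provisioner sidecar) detects the request and creates an EBS volume through the AWS API, based on the StorageClass. Because this StorageClass uses `volumeBindingMode: WaitForFirstConsumer`, this only happens after a Pod that uses the PVC has been scheduled, so the volume is created in that node's Availability Zone.

- Params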

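```yaml
# The EBS volume is created from the StorageClass parameters and attributes
allowVolumeExpansion: true
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer
parameters:
  type: gp3
  allowAutoIOPSPerGBIncrease: 'true'
  encrypted: 'true'
```

- Once the volume exists, csi-attacher attaches it to the node's EC2 instance (tracked as a VolumeAttachment). The mount request then flows API server → kubelet → csi-node, and csi-node formats the volume if needed and mounts the EBS volume into the target Pod as its PV.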


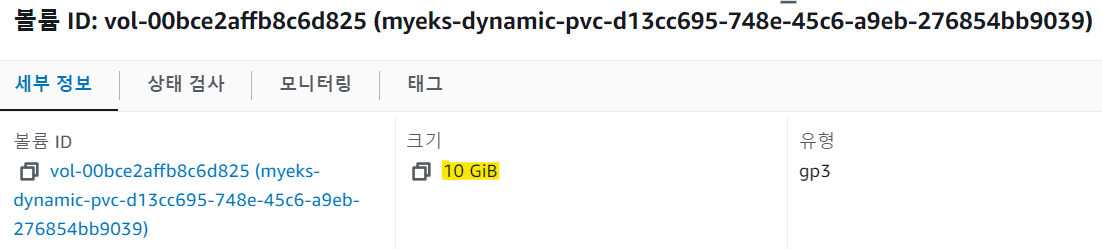

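- A PV can only be expanded, not shrunk. Expansion also requires `allowVolumeExpansion: true` in the StorageClass, which the gp3 class above sets.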

```bash
# Resize from 4Gi to 10Gi
$kubectl patch pvc ebs-claim -p '{"spec":{"resources":{"requests":{"storage":"10Gi"}}}}'
$kubectl exec -it app -- sh -c 'df -hT --type=ext4'
Filesystem     Type  Size  Used Avail Use% Mounted on
/dev/nvme1n1   ext4  9.8G   52K  9.7G   1% /data
```
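Because a PVC can only grow, a guard in front of the `kubectl patch` avoids sending a shrink request that Kubernetes would normally reject. A minimal sketch with a hypothetical `resize_pvc` helper; sizes are whole GiB numbers, and the current size is passed in by the caller rather than read from the cluster.

```bash
#!/usr/bin/env bash
# Hypothetical grow-only wrapper around the kubectl patch above.
# Usage: resize_pvc <pvc> <current GiB> <new GiB>, e.g. resize_pvc ebs-claim 4 10
resize_pvc() {
  local pvc="$1" cur_gi="$2" new_gi="$3" n
  for n in "$cur_gi" "$new_gi"; do
    case "$n" in
      ''|*[!0-9]*) echo "sizes must be whole GiB numbers" >&2; return 2 ;;
    esac
  done
  if [ "$new_gi" -le "$cur_gi" ]; then
    echo "refusing: ${new_gi}Gi is not larger than ${cur_gi}Gi (PVCs can only grow)" >&2
    return 1
  fi
  kubectl patch pvc "$pvc" -p "{\"spec\":{\"resources\":{\"requests\":{\"storage\":\"${new_gi}Gi\"}}}}"
}
```

The filesystem inside the Pod is grown by the driver after the EBS modification completes, which is why `df` above already reports about 9.8G without restarting the Pod.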

Volume Snapshots Controller

- A controller that creates snapshots of EBS volumes bound as PVs and restores volumes from those snapshots

- To use snapshots, the CRDs and the controller must be deployed first.

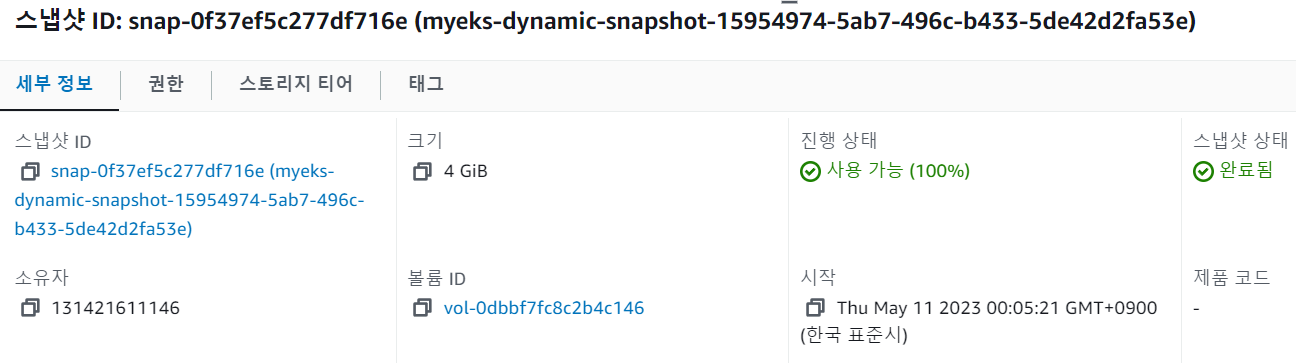

```bash
# Deploy the CRDs
$curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshots.yaml
$curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshotclasses.yaml
$curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshotcontents.yaml
$kubectl apply -f snapshot.storage.k8s.io_volumesnapshots.yaml,snapshot.storage.k8s.io_volumesnapshotclasses.yaml,snapshot.storage.k8s.io_volumesnapshotcontents.yaml
$kubectl api-resources | grep snapshot
volumesnapshotclasses    vsclass,vsclasses   snapshot.storage.k8s.io/v1   false   VolumeSnapshotClass
volumesnapshotcontents   vsc,vscs            snapshot.storage.k8s.io/v1   false   VolumeSnapshotContent
volumesnapshots          vs                  snapshot.storage.k8s.io/v1   true    VolumeSnapshot

# Deploy the controller
$curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/deploy/kubernetes/snapshot-controller/rbac-snapshot-controller.yaml
$curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/deploy/kubernetes/snapshot-controller/setup-snapshot-controller.yaml
$kubectl apply -f rbac-snapshot-controller.yaml,setup-snapshot-controller.yaml
$kubectl get pod -n kube-system -l app=snapshot-controller
NAME                                   READY   STATUS    RESTARTS   AGE
snapshot-controller-76494bf6c9-84zhl   1/1     Running   0          11s
snapshot-controller-76494bf6c9-brnlm   1/1     Running   0          11s

# Deploy the VolumeSnapshotClass
$curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-ebs-csi-driver/master/examples/kubernetes/snapshot/manifests/classes/snapshotclass.yaml
$kubectl apply -f snapshotclass.yaml
$kubectl get vsclass
NAME          DRIVER            DELETIONPOLICY   AGE
csi-aws-vsc   ebs.csi.aws.com   Delete           2m40s

# Create the PVC and the test Pod
$kubectl apply -f awsebs-pvc.yaml,awsebs-pod.yaml

# Create a snapshot
$curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/ebs-volume-snapshot.yaml
$cat ebs-volume-snapshot.yaml | yh
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: ebs-volume-snapshot
spec:
  volumeSnapshotClassName: csi-aws-vsc
  source:
    persistentVolumeClaimName: ebs-claim
$kubectl apply -f ebs-volume-snapshot.yaml
$kubectl get volumesnapshot
NAME                  READYTOUSE   SOURCEPVC   SOURCESNAPSHOTCONTENT   RESTORESIZE   SNAPSHOTCLASS   SNAPSHOTCONTENT                                    CREATIONTIME   AGE
ebs-volume-snapshot   true         ebs-claim                           4Gi           csi-aws-vsc     snapcontent-15954974-5ab7-496c-b433-5de42d2fa53e   35s            36s

# Check the snapshot ID
$kubectl get volumesnapshotcontents -o jsonpath='{.items[*].status.snapshotHandle}' ; echo
snap-0f37ef5c277df716e
```

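The VolumeSnapshot above can also be generated per PVC, for example to take timestamped snapshots. A minimal sketch with a hypothetical `make_snapshot` helper, assuming the `csi-aws-vsc` VolumeSnapshotClass deployed above; when no snapshot name is given it appends a UTC timestamp to the PVC name, and it prints the file it wrote.

```bash
#!/usr/bin/env bash
# Hypothetical generator for the VolumeSnapshot manifest shown above.
# Usage: make_snapshot <pvc> [snapshot-name]
make_snapshot() {
  local pvc="$1" snap="${2:-${1}-snap-$(date -u +%Y%m%d%H%M%S)}"
  cat <<EOT > "${snap}.yaml"
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: ${snap}
spec:
  volumeSnapshotClassName: csi-aws-vsc
  source:
    persistentVolumeClaimName: ${pvc}
EOT
  echo "${snap}.yaml"
}
```

For example, `kubectl apply -f "$(make_snapshot ebs-claim)"` would create a snapshot named like `ebs-claim-snap-<timestamp>`, which can be used once `kubectl get volumesnapshot` reports READYTOUSE `true`.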

- Restoring a volume from a snapshot

```bash
# Delete the Pod and the PVC
$kubectl delete pod app && kubectl delete pvc ebs-claim

# Create a PVC whose dataSource is the snapshot
$cat <<EOT > ebs-snapshot-restored-claim.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-snapshot-restored-claim
spec:
  storageClassName: gp3
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi
  dataSource:
    name: ebs-volume-snapshot
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
EOT
$kubectl apply -f ebs-snapshot-restored-claim.yaml

# Create the test Pod
$curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/ebs-snapshot-restored-pod.yaml
$kubectl apply -f ebs-snapshot-restored-pod.yaml
$cat ebs-snapshot-restored-pod.yaml | yh
...
  volumes:
  - name: persistent-storage
    persistentVolumeClaim:
      claimName: ebs-snapshot-restored-claim
...

# Check the PVC/PV status
$kubectl get pvc,pv
NAME                                                STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/ebs-snapshot-restored-claim   Bound    pvc-175e3403-31cf-46fa-bc04-d42fd74c3944   4Gi        RWO            gp3            39s

NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                                 STORAGECLASS   REASON   AGE
persistentvolume/pvc-175e3403-31cf-46fa-bc04-d42fd74c3944   4Gi        RWO            Delete           Bound    default/ebs-snapshot-restored-claim   gp3                     16s

# Check that the data written before the original Pod was deleted is there
$kubectl exec app -- cat /data/out.txt
Wed May 10 14:49:13 UTC 2023
...
```
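Restoring works by pointing a new PVC's `dataSource` at the VolumeSnapshot, and the requested size must be at least the snapshot's RESTORESIZE (4Gi in the run above). A minimal sketch with a hypothetical `make_restore_pvc` helper that checks this before writing the same manifest as above; the gp3 StorageClass and the whole-GiB size arguments are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical generator for a PVC restored from a VolumeSnapshot.
# Usage: make_restore_pvc <pvc> <snapshot> <size GiB> <snapshot restoreSize GiB>
make_restore_pvc() {
  local pvc="$1" snap="$2" size_gi="$3" restore_gi="$4"
  # The new volume cannot be smaller than the snapshot's restoreSize.
  if [ "$size_gi" -lt "$restore_gi" ]; then
    echo "requested ${size_gi}Gi is smaller than restoreSize ${restore_gi}Gi" >&2
    return 1
  fi
  cat <<EOT > "${pvc}.yaml"
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${pvc}
spec:
  storageClassName: gp3
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: ${size_gi}Gi
  dataSource:
    name: ${snap}
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
EOT
}
```

`make_restore_pvc ebs-snapshot-restored-claim ebs-volume-snapshot 4 4` reproduces the manifest used above; asking for a larger size is also allowed, a smaller one is refused before anything reaches the cluster.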

EFS Controller

- EFS is a managed network file system (NFS), so it can be mounted by many Pods across multiple Availability Zones at the same time to store shared data.

```bash
# Create a policy that allows managing EFS
curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/docs/iam-policy-example.json
aws iam create-policy --policy-name AmazonEKS_EFS_CSI_Driver_Policy --policy-document file://iam-policy-example.json

# Create the ServiceAccount: deploys a stack that creates the IAM role and attaches the policy
eksctl create iamserviceaccount \
  --name efs-csi-controller-sa \
  --namespace kube-system \
  --cluster ${CLUSTER_NAME} \
  --attach-policy-arn arn:aws:iam::${ACCOUNT_ID}:policy/AmazonEKS_EFS_CSI_Driver_Policy \
  --approve

# Check the IRSA
$eksctl get iamserviceaccount --cluster $CLUSTER_NAME
NAMESPACE     NAME                    ROLE ARN
kube-system   efs-csi-controller-sa   arn:aws:iam::131421611146:role/eksctl-myeks-addon-iamserviceaccount-kube-sy-Role1-17Q70ERZ7807I

# Check the service account
# Note: this queries aws-load-balancer-controller (from an earlier exercise), not the EFS SA;
# query efs-csi-controller-sa to see the role annotation created above
$kubectl get serviceaccounts -n kube-system aws-load-balancer-controller -o yaml | yh
apiVersion: v1
kind: ServiceAccount
metadata:
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::131421611146:role/eksctl-myeks-addon-iamserviceaccount-kube-sy-Role1-C7XHVWLZFHOA
  creationTimestamp: "2023-05-10T05:06:45Z"
  labels:
    app.kubernetes.io/managed-by: eksctl
  name: aws-load-balancer-controller
  namespace: kube-system
  resourceVersion: "1224"
  uid: 44c34434-2c54-4aca-9a28-70f6b50238e6

# Install the controller
$helm repo add aws-efs-csi-driver https://kubernetes-sigs.github.io/aws-efs-csi-driver/
$helm repo update
$helm upgrade -i aws-efs-csi-driver aws-efs-csi-driver/aws-efs-csi-driver \
  --namespace kube-system \
  --set image.repository=602401143452.dkr.ecr.${AWS_DEFAULT_REGION}.amazonaws.com/eks/aws-efs-csi-driver \
  --set controller.serviceAccount.create=false \
  --set controller.serviceAccount.name=efs-csi-controller-sa
$kubectl get pod -n kube-system -l "app.kubernetes.io/name=aws-efs-csi-driver,app.kubernetes.io/instance=aws-efs-csi-driver"
NAME                                  READY   STATUS    RESTARTS   AGE
efs-csi-controller-6f64dcc5dc-c92cw   3/3     Running   0          25s
efs-csi-controller-6f64dcc5dc-chg66   3/3     Running   0          25s
efs-csi-node-9d6xm                    3/3     Running   0          25s
efs-csi-node-krvmn                    3/3     Running   0          25s
efs-csi-node-ndr26                    3/3     Running   0          25s
```

- Dynamic Provisioning

```bash
# Store the EFS file system ID in a variable
EfsFsId=$(aws efs describe-file-systems --query "FileSystems[*].FileSystemId" --output text)

# Create the StorageClass
$curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/examples/kubernetes/dynamic_provisioning/specs/storageclass.yaml
$cat storageclass.yaml | yh
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: efs-sc
provisioner: efs.csi.aws.com
parameters:
  provisioningMode: efs-ap
  fileSystemId: fs-92107410
  directoryPerms: "700"
  gidRangeStart: "1000" # optional
  gidRangeEnd: "2000" # optional
  basePath: "/dynamic_provisioning" # optional

# Replace the file system ID in the YAML file
$sed -i "s/fs-92107410/$EfsFsId/g" storageclass.yaml
$kubectl apply -f storageclass.yaml
$kubectl get sc efs-sc
NAME     PROVISIONER       RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
efs-sc   efs.csi.aws.com   Delete          Immediate           false                  8s

# Create the PVC and the test Pod
$curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/examples/kubernetes/dynamic_provisioning/specs/pod.yaml
$kubectl apply -f pod.yaml
$kubectl get pvc,pv,pod
NAME                              STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/efs-claim   Bound    pvc-24e4259e-0bd4-416e-b623-ad2626f931b7   5Gi        RWX            efs-sc         14s

NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM               STORAGECLASS   REASON   AGE
persistentvolume/pvc-24e4259e-0bd4-416e-b623-ad2626f931b7   5Gi        RWX            Delete           Bound    default/efs-claim   efs-sc                  14s

NAME          READY   STATUS    RESTARTS   AGE
pod/efs-app   1/1     Running   0          14s

# Check the PVC/PV provisioning logs
$kubectl logs -n kube-system -l app=efs-csi-controller -c csi-provisioner -f
I0510 16:45:51.522148 1 controller.go:923] successfully created PV pvc-24e4259e-0bd4-416e-b623-ad2626f931b7 for PVC efs-claim and csi volume name fs-06d9c51b37aeb3940::fsap-05e493b21777ed7dc
I0510 16:45:51.522180 1 controller.go:1442] provision "default/efs-claim" class "efs-sc": volume "pvc-24e4259e-0bd4-416e-b623-ad2626f931b7" provisioned
I0510 16:45:51.522193 1 controller.go:1455] provision "default/efs-claim" class "efs-sc": succeeded
I0510 16:45:51.531643 1 event.go:285] Event(v1.ObjectReference{Kind:"PersistentVolumeClaim", Namespace:"default", Name:"efs-claim", UID:"24e4259e-0bd4-416e-b623-ad2626f931b7", APIVersion:"v1", ResourceVersion:"170437", FieldPath:""}): type: 'Normal' reason: 'ProvisioningSucceeded' Successfully provisioned volume pvc-24e4259e-0bd4-416e-b623-ad2626f931b7

# Check with df inside the Pod
# (the 127.0.0.1 source is most likely the local TLS tunnel that efs-utils uses for encryption in transit)
$kubectl exec -it efs-app -- sh -c "df -hT -t nfs4"
Filesystem     Type  Size  Used Avail Use% Mounted on
127.0.0.1:/    nfs4  8.0E     0  8.0E   0% /data
$kubectl exec efs-app -- bash -c "cat /data/out"
Wed May 10 16:46:04 UTC 2023
Wed May 10 16:46:09 UTC 2023
Wed May 10 16:46:14 UTC 2023
Wed May 10 16:46:19 UTC 2023
Wed May 10 16:46:24 UTC 2023

# Check the EFS access point
$aws efs describe-access-points | grep Path
            "Path": "/dynamic_provisioning/pvc-24e4259e-0bd4-416e-b623-ad2626f931b7",
```
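To see the RWX behavior directly, several Pods can mount the same `efs-claim`. A minimal sketch that writes two hypothetical Pods (`efs-writer-1`, `efs-writer-2`) appending to one shared file; it assumes the `efs-claim` PVC created by `pod.yaml` above still exists and that the `busybox` image can be pulled.

```bash
#!/usr/bin/env bash
# Hypothetical RWX demo: N Pods mount the same efs-claim and append to one file.
: > efs-shared-pods.yaml            # start with an empty manifest file
for i in 1 2; do
  cat <<EOT >> efs-shared-pods.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: efs-writer-${i}
spec:
  containers:
    - name: app
      image: busybox
      # \$(...) is escaped so the container shell, not this script, expands it
      command: ["/bin/sh", "-c", "while true; do echo \$(hostname) \$(date -u) >> /data/shared.txt; sleep 5; done"]
      volumeMounts:
        - name: efs
          mountPath: /data
  volumes:
    - name: efs
      persistentVolumeClaim:
        claimName: efs-claim
EOT
done
```

After `kubectl apply -f efs-shared-pods.yaml`, `kubectl exec efs-writer-1 -- tail /data/shared.txt` should show lines from both Pod hostnames, even if the two Pods land on nodes in different Availability Zones. An RWO EBS volume could not be attached to a second node in the same way.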

EKS Persistent Volumes for Instance Store

- The instance store, which offers high IOPS, can be used as a PV.

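- Data in an instance store persists only during the lifetime of its associated instance. If the instance reboots (intentionally or unintentionally), the data in the instance store persists. However, the data in the instance store is lost under any of the following circumstances: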

- The underlying disk drive fails

- The instance stops

- The instance hibernates

- The instance terminates


- Use preBootstrapCommands to format and mount the instance store, so it can be statically provisioned as a local volume

```bash
# Create a node group of c5d.large instances, which come with a 50 GB NVMe instance store
$eksctl create nodegroup -c $CLUSTER_NAME -r $AWS_DEFAULT_REGION --subnet-ids "$PubSubnet1","$PubSubnet2","$PubSubnet3" --ssh-access \
  -n ng2 -t c5d.large -N 1 -m 1 -M 1 --node-volume-size=30 --node-labels disk=nvme --max-pods-per-node 100 --dry-run > myng2.yaml

$cat <<EOT > nvme.yaml
  preBootstrapCommands:
  - |
    # Install Tools
    yum install nvme-cli links tree jq tcpdump sysstat -y

    # Filesystem & Mount
    mkfs -t xfs /dev/nvme1n1
    mkdir /data
    mount /dev/nvme1n1 /data

    # Get disk UUID
    uuid=\$(blkid -o value -s UUID /dev/nvme1n1)

    # Mount the disk by UUID after a reboot (NVMe device names can change)
    echo UUID=\$uuid /data xfs defaults,noatime 0 2 >> /etc/fstab
EOT
$sed -i -n -e '/volumeType/r nvme.yaml' -e '1,$p' myng2.yaml
$cat myng2.yaml | yh
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
managedNodeGroups:
- amiFamily: AmazonLinux2
  desiredCapacity: 1
  disableIMDSv1: false
  disablePodIMDS: false
  iam:
    withAddonPolicies:
      albIngress: false
      appMesh: false
      appMeshPreview: false
      autoScaler: false
      awsLoadBalancerController: false
      certManager: false
      cloudWatch: false
      ebs: false
      efs: false
      externalDNS: false
      fsx: false
      imageBuilder: false
      xRay: false
  instanceSelector: {}
  instanceType: c5d.large
  labels:
    alpha.eksctl.io/cluster-name: myeks
    alpha.eksctl.io/nodegroup-name: ng2
    disk: nvme
  maxPodsPerNode: 100
  maxSize: 1
  minSize: 1
  name: ng2
  privateNetworking: false
  releaseVersion: ""
  securityGroups:
    withLocal: null
    withShared: null
  ssh:
    allow: true
    publicKeyPath: ~/.ssh/id_rsa.pub
  subnets:
  - subnet-095bafdb7a1fb7987
  - subnet-0f55c6363799fb6cf
  - subnet-0a794ee146223c6d9
  tags:
    alpha.eksctl.io/nodegroup-name: ng2
    alpha.eksctl.io/nodegroup-type: managed
  volumeIOPS: 3000
  volumeSize: 30
  volumeThroughput: 125
  volumeType: gp3
  preBootstrapCommands:
  - |
    yum install nvme-cli links tree jq tcpdump sysstat -y
    mkfs -t xfs /dev/nvme1n1
    mkdir /data
    mount /dev/nvme1n1 /data
    uuid=$(blkid -o value -s UUID /dev/nvme1n1)
    echo UUID=$uuid /data xfs defaults,noatime 0 2 >> /etc/fstab
metadata:
  name: myeks
  region: ap-northeast-2
  version: "1.24"
$eksctl create nodegroup -f myng2.yaml

# Store the node security group ID in a variable and allow inbound traffic from the operator instance
NG2SGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values=*ng2* --query "SecurityGroups[*].[GroupId]" --output text)
aws ec2 authorize-security-group-ingress --group-id $NG2SGID --protocol '-1' --cidr 192.168.1.100/32

# Check SSH access to the node
$N4=192.168.1.41
$ssh ec2-user@$N4 hostname

# Check the instance store
$ssh ec2-user@$N4 sudo nvme list
Node             SN                   Model                                    Namespace Usage                      Format           FW Rev
---------------- -------------------- ---------------------------------------- --------- -------------------------- ---------------- --------
/dev/nvme1n1     AWS4FABE51AAB42EDF2A Amazon EC2 NVMe Instance Storage         1          50.00  GB /  50.00  GB    512   B +  0 B   0
$ssh ec2-user@$N4 sudo df -hT -t xfs
Filesystem     Type  Size  Used Avail Use% Mounted on
/dev/nvme0n1p1 xfs    30G  3.2G   27G  11% /
/dev/nvme1n1   xfs    47G  365M   47G   1% /data
```
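The node group above only formats and mounts the instance store at `/data`; the static-provisioning step itself is not shown here. A minimal sketch of what it could look like, assuming a `local` PV on the ng2 node (label `disk=nvme`) together with a `kubernetes.io/no-provisioner` StorageClass. The names `local-nvme`/`local-nvme-pv` and the 40Gi capacity (below the 47G reported by `df`) are hypothetical, and matching on `disk=nvme` is only unambiguous because ng2 has exactly one node.

```bash
#!/usr/bin/env bash
# Hypothetical static provisioning of the instance store mounted at /data on ng2.
cat <<EOT > local-nvme-pv.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-nvme
provisioner: kubernetes.io/no-provisioner   # static only: nothing creates PVs
volumeBindingMode: WaitForFirstConsumer     # bind when a consuming Pod is scheduled
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-nvme-pv
spec:
  capacity:
    storage: 40Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-nvme
  local:
    path: /data                # the mount point created by preBootstrapCommands
  nodeAffinity:                # mandatory for local volumes
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: disk
              operator: In
              values: ["nvme"]
EOT
```

A PVC with `storageClassName: local-nvme` would then bind to this PV once a Pod using it is scheduled, and the PV's `nodeAffinity` steers that Pod onto the NVMe node. The data still disappears when the instance stops or terminates, whatever the reclaim policy says.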