Week 5

This page is part of the AWS EKS Workshop study group. Each week I record my hands-on results and thoughts here.

Deploying the lab environment

```shell
$curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/eks-oneclick4.yaml

$aws cloudformation deploy \
  --template-file eks-oneclick4.yaml \
  --stack-name myeks \
  --parameter-overrides KeyName=atomy-test-key \
  SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 \
  MyIamUserAccessKeyID='accesskey' \
  MyIamUserSecretAccessKey='secretkey' \
  ClusterBaseName=myeks --region ap-northeast-2

$ssh -i ~/.ssh/eljoe-test-key.pem ec2-user@$(aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text)

# Set the public certificate ARN
$CERT_ARN=`aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text`

# Set Namespace
$kubectl ns default

# context
$NICK=eljoe
$kubectl ctx
$kubectl config rename-context admin@myeks.ap-northeast-2.eksctl.io $NICK@myeks

# ExternalDNS
$MyDomain=eljoe-test.link
$echo "export MyDomain=eljoe-test.link" >> /etc/profile
$MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)
$curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml
$MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -

# kube-ops-view
$helm repo add geek-cookbook https://geek-cookbook.github.io/charts/
$helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set env.TZ="Asia/Seoul" --namespace kube-system
$kubectl patch svc -n kube-system kube-ops-view -p '{"spec":{"type":"LoadBalancer"}}'
$kubectl annotate service kube-ops-view -n kube-system "external-dns.alpha.kubernetes.io/hostname=kubeopsview.$MyDomain"
$echo -e "Kube Ops View URL = http://kubeopsview.$MyDomain:8080/#scale=1.5"

# LB Controller
$helm repo add eks https://aws.github.io/eks-charts
$helm repo update
$helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \
  --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

# Node security group: allow all traffic from 192.168.1.100/32
$NGSGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values='*ng1*' --query "SecurityGroups[*].[GroupId]" --output text)
$aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol '-1' --cidr 192.168.1.100/32

# Prometheus & Grafana
$helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

# Create the values file
$cat <<EOT > monitor-values.yaml
prometheus:
  prometheusSpec:
    podMonitorSelectorNilUsesHelmValues: false
    serviceMonitorSelectorNilUsesHelmValues: false
    retention: 5d
    retentionSize: "10GiB"

  verticalPodAutoscaler:
    enabled: true

  ingress:
    enabled: true
    ingressClassName: alb
    hosts:
      - prometheus.$MyDomain
    paths:
      - /*
    annotations:
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/target-type: ip
      alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
      alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
      alb.ingress.kubernetes.io/success-codes: 200-399
      alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
      alb.ingress.kubernetes.io/group.name: study
      alb.ingress.kubernetes.io/ssl-redirect: '443'

grafana:
  defaultDashboardsTimezone: Asia/Seoul
  adminPassword: prom-operator

  ingress:
    enabled: true
    ingressClassName: alb
    hosts:
      - grafana.$MyDomain
    paths:
      - /*
    annotations:
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/target-type: ip
      alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
      alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
      alb.ingress.kubernetes.io/success-codes: 200-399
      alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
      alb.ingress.kubernetes.io/group.name: study
      alb.ingress.kubernetes.io/ssl-redirect: '443'

defaultRules:
  create: false
kubeControllerManager:
  enabled: false
kubeEtcd:
  enabled: false
kubeScheduler:
  enabled: false
alertmanager:
  enabled: false
EOT

# Deploy
$kubectl create ns monitoring
$helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack --version 45.27.2 \
  --set prometheus.prometheusSpec.scrapeInterval='15s' --set prometheus.prometheusSpec.evaluationInterval='15s' \
  -f monitor-values.yaml --namespace monitoring
```

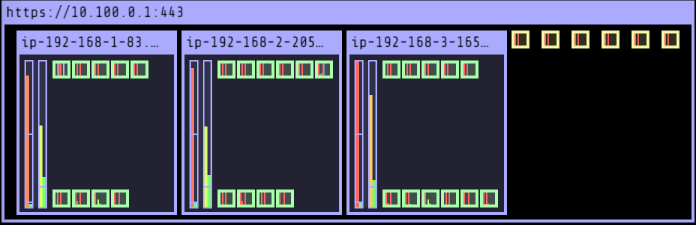

```shell
# EKS Node Viewer: shows node allocatable capacity and resources.requests. Note that this is NOT actual pod resource usage!

# Install golang and eks-node-viewer
$yum install -y go
$go install github.com/awslabs/eks-node-viewer/cmd/eks-node-viewer@latest

# Check
$cd ~/go/bin
$./eks-node-viewer
3 nodes (775m/5790m) 13.4% cpu █████░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░ $0.156/hour | $113.8
19 pods (0 pending 19 running 19 bound)

ip-192-168-2-205.ap-northeast-2.compute.internal cpu ██████░░░░░░░░░░░░░░░░░░░░░░░░░░░░░ 17
ip-192-168-1-83.ap-northeast-2.compute.internal  cpu ████░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░ 12
ip-192-168-3-165.ap-northeast-2.compute.internal cpu ████░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░ 12
Press any key to quit

# Sample commands
# Display both CPU and Memory Usage
$./eks-node-viewer --resources cpu,memory

# Karpenter nodes only
$./eks-node-viewer --node-selector "karpenter.sh/provisioner-name"

# Display extra labels, e.g. AZ
$./eks-node-viewer --extra-labels topology.kubernetes.io/zone
```

Horizontal Pod Autoscaler

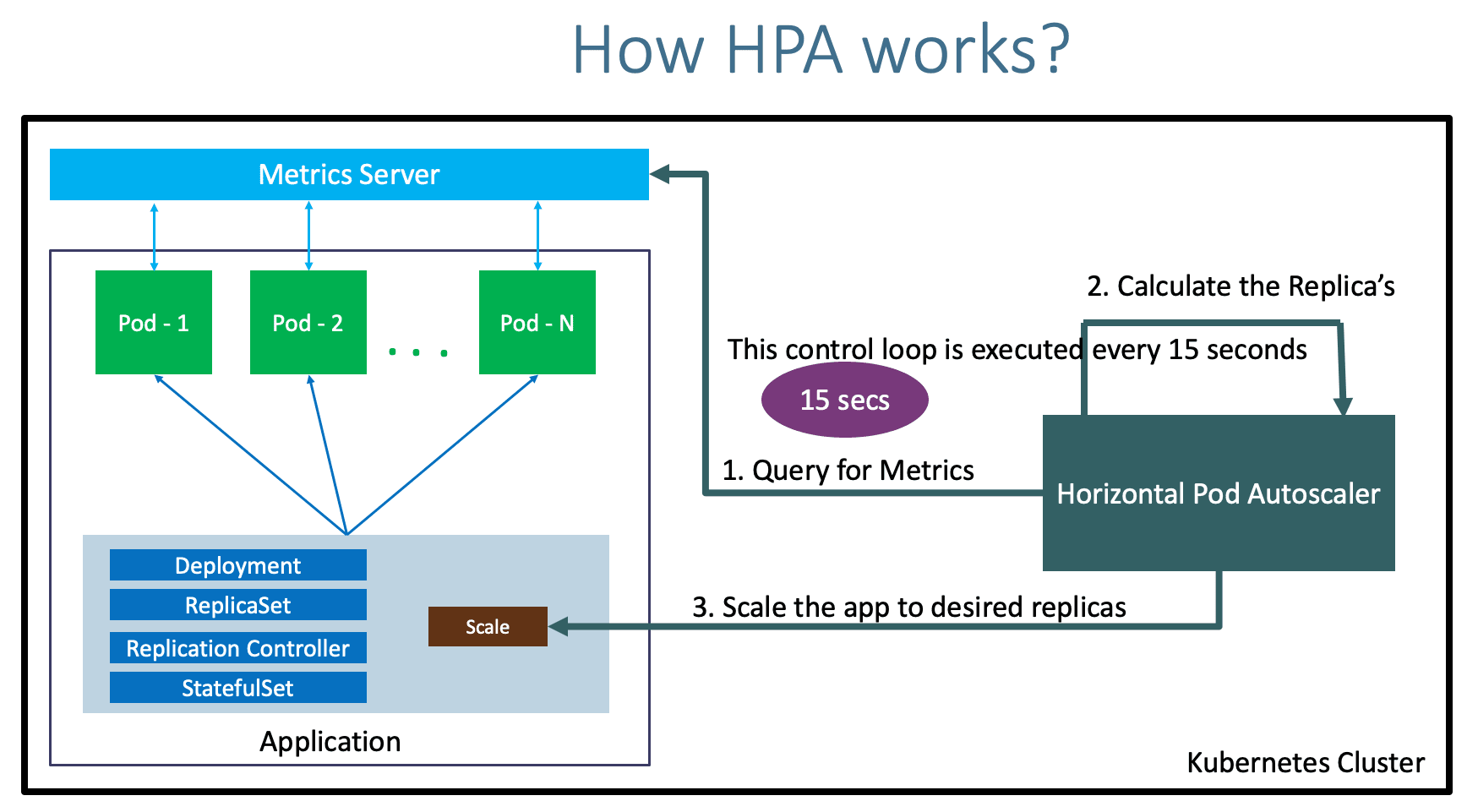

- A feature that automatically scales pods in and out based on metrics collected by metrics-server, an add-on that aggregates metrics within the cluster.

```shell
# HPA does not work if metrics-server is not deployed.
$kubectl top node
error: Metrics API not available

$kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

$kubectl top node
NAME                                               CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
ip-192-168-1-83.ap-northeast-2.compute.internal    51m          2%     724Mi           21%
ip-192-168-2-205.ap-northeast-2.compute.internal   56m          2%     710Mi           21%
ip-192-168-3-165.ap-northeast-2.compute.internal   261m         13%    546Mi           16%
```

- Metrics Server - link

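- For resource metrics such as CPU and memory, cAdvisor (a daemon built into the kubelet) collects the CPU/memory usage of the containers in each pod, and the kubelet exposes it.

- metrics-server collects and aggregates these metrics through the kubelet and registers them with the Metrics API.

- Every 15 seconds (the default), the HPA checks resource usage through the endpoint served by the Metrics API. It then computes the desired pod count with the formula desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue).

- For example, with 1 current pod, a target average CPU utilization of 50%, and a current value of 70%:

ceil(1 × (70/50)) = ceil(1.4) = 2

- The HPA controller then sends a request to change the pod count of the Deployment or StatefulSet to that number.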
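The bash sketch below makes the replica formula concrete. It computes desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue) and clamps the result to the min/max replica bounds.

A few things are assumptions for illustration:
- The helper name `hpa_desired` is hypothetical.
- The default bounds of 1 and 10 are borrowed from the lab below.

The real HPA controller also applies a default 10% tolerance and a scale-down stabilization window. Neither is modeled here.

```bash
#!/usr/bin/env bash
# hpa_desired CURRENT_REPLICAS CURRENT_METRIC TARGET_METRIC [MIN] [MAX]
# Hypothetical helper: integer-only version of the HPA replica formula.
hpa_desired() {
  local current=$1 metric=$2 target=$3 min=${4:-1} max=${5:-10}
  # ceil(a / b) for positive integers is (a + b - 1) / b
  local desired=$(( (current * metric + target - 1) / target ))
  # Clamp to [min, max] like minReplicas/maxReplicas do
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}

# 1 pod at 70% CPU against a 50% target
hpa_desired 1 70 50   # prints 2
```

With 6 pods averaging 88% against a 50% target, the formula asks for ceil(10.56) = 11 pods. The `--max=10` bound caps that at 10.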

- Metric types

```yaml
metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50
  - type: Object
    object:
      metric:
        name: requests-per-second
      describedObject:
        apiVersion: networking.k8s.io/v1
        kind: Ingress
        name: main-route
      target:
        type: Value
        value: 10k
  - type: External
    external:
      metric:
        name: queue_messages_ready
        selector:
          matchLabels:
            queue: "worker_tasks"
      target:
        type: AverageValue
        averageValue: 30
```

- Resource Metric : resource usage inside pods and nodes, such as CPU and memory. cAdvisor collects these metrics.

- Custom Metric : user-defined metrics for when scaling needs the usage of resources other than CPU/memory, such as network traffic. A separate metrics collector is required.

- External Metric : metrics unrelated to Kubernetes, gathered by a separate collector, for when scaling must be driven by them.

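Some targets use `AverageValue`, such as the External metric example above (`averageValue: 30`). For these, the HPA effectively divides the metric's total value by the per-pod target, so the current replica count cancels out of the formula.

The minimal bash sketch below assumes a hypothetical `external_avg_desired` helper, default bounds of 1 and 10, and no HPA tolerance.

```bash
#!/usr/bin/env bash
# external_avg_desired METRIC_TOTAL TARGET_AVERAGE [MIN] [MAX]
# Hypothetical helper: desired = ceil(METRIC_TOTAL / TARGET_AVERAGE), clamped.
external_avg_desired() {
  local total=$1 target=$2 min=${3:-1} max=${4:-10}
  # Integer ceiling division
  local desired=$(( (total + target - 1) / target ))
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}

# 95 ready messages in the queue with averageValue: 30
external_avg_desired 95 30   # prints 4
```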

- Lab

```shell
# Deploy the php-apache pod, which performs CPU-heavy computation
$curl -s -O https://raw.githubusercontent.com/kubernetes/website/main/content/en/examples/application/php-apache.yaml
$kubectl apply -f php-apache.yaml

# Set the pod IP for the load test
$PODIP=$(kubectl get pod -l run=php-apache -o jsonpath={.items[0].status.podIP})

# Create the HPA
$kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10
$kubectl describe hpa
...
Metrics:                                               ( current / target )
  resource cpu on pods  (as a percentage of request):  <unknown> / 50%
Min replicas:                                          1
Max replicas:                                          10
Deployment pods:                                       0 current / 0 desired
Events:                                                <none>

# Check the HPA spec
$kubectl krew install neat
# Scale between 1 and 10 pods when average CPU utilization is below/above 50%
$kubectl get hpa php-apache -o yaml | kubectl neat | yh
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
  namespace: default
spec:
  maxReplicas: 10
  metrics:
  - resource:
      name: cpu
      target:
        averageUtilization: 50
        type: Utilization
    type: Resource
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache

# Run the load test
$kubectl run -i --tty load-generator --rm --image=busybox:1.28 --restart=Never -- /bin/sh -c "while sleep 0.01; do wget -q -O- http://php-apache; done"

# Watch the pod count increase - in another terminal
$watch -d 'kubectl get hpa,pod;echo;kubectl top pod;echo;kubectl top node'
Every 2.0s: kubectl get hpa,pod;echo;kubectl top pod;echo;kubec...   Thu May 25 14:33:17 2023

NAME                                             REFERENCE               TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
horizontalpodautoscaler.autoscaling/php-apache   Deployment/php-apache   88%/50%   1         10        6          10m

NAME                              READY   STATUS    RESTARTS   AGE
pod/load-generator                1/1     Running   0          56s
pod/php-apache-698db99f59-2zwqh   1/1     Running   0          7s
pod/php-apache-698db99f59-4xklb   1/1     Running   0          35m
pod/php-apache-698db99f59-6q9jl   1/1     Running   0          22s
pod/php-apache-698db99f59-89gc7   1/1     Running   0          7s
pod/php-apache-698db99f59-m7jv5   1/1     Running   0          4m8s
pod/php-apache-698db99f59-rzmgn   1/1     Running   0          4m8s

NAME                          CPU(cores)   MEMORY(bytes)
load-generator                6m           0Mi
php-apache-698db99f59-4xklb   249m         12Mi
php-apache-698db99f59-6q9jl   121m         11Mi
php-apache-698db99f59-m7jv5   93m          11Mi
php-apache-698db99f59-rzmgn   137m         11Mi

NAME                                               CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
ip-192-168-1-83.ap-northeast-2.compute.internal    161m         8%     749Mi           22%
ip-192-168-2-205.ap-northeast-2.compute.internal   166m         8%     758Mi           22%
ip-192-168-3-165.ap-northeast-2.compute.internal   449m         23%    593Mi           17%

# Stop the load test; about 5 minutes later, the pod count decreases
Every 2.0s: kubectl get hpa,pod;echo;kubectl top pod;echo;kubec...   Thu May 25 14:39:26 2023

NAME                                             REFERENCE               TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
horizontalpodautoscaler.autoscaling/php-apache   Deployment/php-apache   0%/50%    1         10        3          16m

NAME                              READY   STATUS    RESTARTS   AGE
pod/php-apache-698db99f59-4xklb   1/1     Running   0          41m

NAME                          CPU(cores)   MEMORY(bytes)
php-apache-698db99f59-4xklb   1m           12Mi

NAME                                               CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
ip-192-168-1-83.ap-northeast-2.compute.internal    105m         5%     762Mi           23%
ip-192-168-2-205.ap-northeast-2.compute.internal   77m          3%     766Mi           23%
ip-192-168-3-165.ap-northeast-2.compute.internal   71m          3%     580Mi           17%
```

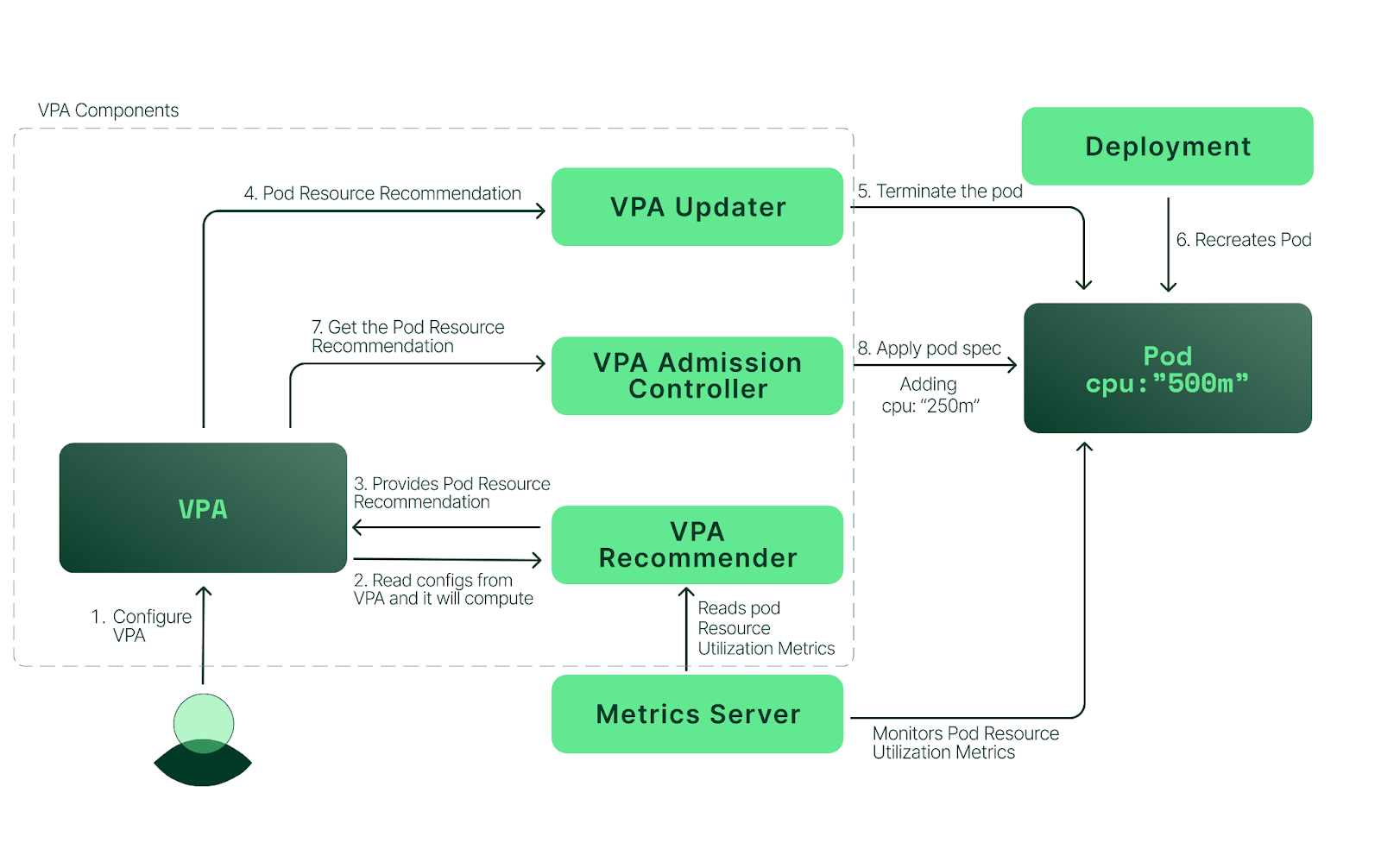

Vertical Pod Autoscaler

- A feature that monitors a pod's resource usage (CPU, memory, etc.) over a period of time. Based on the aggregated metrics, it automatically scales the pod's resources.requests.[cpu/memory] values up or down - reference

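- The user configures a VPA.

- The VPA Recommender collects and aggregates resource utilization metrics from metrics-server, then computes recommended resource values for the pod.

- The VPA Updater reads the recommendations and evicts the pods that need to be resized.

- The Deployment notices that the pod was terminated and recreates it according to its replica count.

- The VPA Admission Controller fetches the recommendations and overwrites the resource requests of the new pod.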

- Update Mode

- VPA provides four update modes (updateMode):

```yaml
apiVersion: "autoscaling.k8s.io/v1"
kind: VerticalPodAutoscaler
metadata:
  name: vpa-recommender
spec:
  targetRef:
    apiVersion: "apps/v1"
    kind: Deployment
    name: frontend
  updatePolicy:
    updateMode: "Auto"
```

- Auto or Recreate : at any point in a pod's lifetime, pods whose resources do not match the recommendation are automatically overwritten and recreated.

- Off : only computes recommendations. Applying them to pods and recreating the pods is done manually.

- Initial : applies the recommendations only when a pod is created. Running pods are never evicted.
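The update mode is a single field of the VPA object. A small bash helper can therefore render the manifest above for any Deployment and mode, and reject anything outside the four modes listed.

`render_vpa` and its argument order are hypothetical. The output is plain YAML that could be piped to `kubectl apply -f -`.

```bash
#!/usr/bin/env bash
# render_vpa VPA_NAME DEPLOYMENT MODE
# Hypothetical helper: prints a VerticalPodAutoscaler manifest for DEPLOYMENT.
render_vpa() {
  local name=$1 deployment=$2 mode=$3
  # Accept only the update modes Auto, Recreate, Initial, Off
  case "$mode" in
    Auto|Recreate|Initial|Off) ;;
    *) echo "unsupported updateMode: $mode" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: "autoscaling.k8s.io/v1"
kind: VerticalPodAutoscaler
metadata:
  name: ${name}
spec:
  targetRef:
    apiVersion: "apps/v1"
    kind: Deployment
    name: ${deployment}
  updatePolicy:
    updateMode: "${mode}"
EOF
}

# Recommendation-only VPA for the "frontend" Deployment from the example above
render_vpa frontend-vpa frontend Off
```

Starting with `Off` is a low-risk way to see recommendations before letting the Updater evict pods.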


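- Limitations

- The pod is recreated every time its resources are updated, so it may be rescheduled onto a different node.

- VPA currently cannot be combined with an HPA that scales on resource metrics (CPU/memory). It can, however, be used together with an HPA that scales on custom or external metrics.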

- Lab

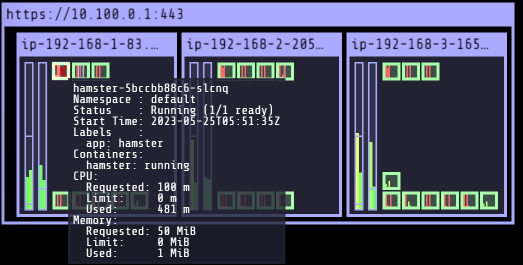

```shell
# Clone the VPA repository
$git clone https://github.com/kubernetes/autoscaler.git
$cd autoscaler/vertical-pod-autoscaler/
$tree hack
hack
├── boilerplate.go.txt
├── convert-alpha-objects.sh
├── deploy-for-e2e.sh
├── generate-crd-yaml.sh
├── run-e2e.sh
├── run-e2e-tests.sh
├── update-codegen.sh
├── update-kubernetes-deps-in-e2e.sh
├── update-kubernetes-deps.sh
├── verify-codegen.sh
├── vpa-apply-upgrade.sh
├── vpa-down.sh
├── vpa-process-yaml.sh
├── vpa-process-yamls.sh
├── vpa-up.sh
└── warn-obsolete-vpa-objects.sh

0 directories, 16 files

# Install openssl11
$yum install openssl11 -y
$openssl11 version
OpenSSL 1.1.1g FIPS  21 Apr 2020

# Make admission-controller/gencerts.sh use openssl11 instead of openssl
$sed -i 's/openssl/openssl11/g' ~/autoscaler/vertical-pod-autoscaler/pkg/admission-controller/gencerts.sh

# Deploy VPA
$./hack/vpa-up.sh

# Check the deployed pods
$kubectl get pod -n kube-system | grep vpa
vpa-admission-controller-f5b78d56f-cdbpq   1/1     Running   0          96s
vpa-recommender-59857557d6-h2sdm           1/1     Running   0          98s
vpa-updater-5c8dbcfc55-k2l5j               1/1     Running   0          102s

# Check the deployed CRDs
$kubectl get crd | grep autoscaling
verticalpodautoscalercheckpoints.autoscaling.k8s.io   2023-05-25T05:46:47Z
verticalpodautoscalers.autoscaling.k8s.io             2023-05-25T05:46:47Z

# Deploy the official example: load-test pods and a VPA
$cd ~/autoscaler/vertical-pod-autoscaler/
$kubectl apply -f examples/hamster.yaml && kubectl get vpa -w
NAME          MODE   CPU    MEM       PROVIDED   AGE
hamster-vpa   Auto                               1s
hamster-vpa   Auto   548m   262144k   True       26s

$kubectl describe pod | grep Requests: -A2
    Requests:
      cpu:        100m
      memory:     50Mi
--
    Requests:
      cpu:        100m
      memory:     50Mi
--
    Requests:
      cpu:        548m
      memory:     262144k

# Monitor pods - in another terminal
$watch -d kubectl top pod
Every 2.0s: kubectl top pod    Thu May 25 14:53:52 2023

NAME                       CPU(cores)   MEMORY(bytes)
hamster-5bccbb88c6-slcnq   497m         0Mi
hamster-5bccbb88c6-x9m24   405m         0Mi

# VPA evicted the existing pods and created pods with the updated CPU value
$kubectl get events --sort-by=".metadata.creationTimestamp" | grep VPA
3m30s   Normal   EvictedByVPA   pod/hamster-5bccbb88c6-jjbl9   Pod was evicted by VPA Updater to apply resource recommendation.
2m30s   Normal   EvictedByVPA   pod/hamster-5bccbb88c6-slcnq   Pod was evicted by VPA Updater to apply resource recommendation.
```

- The existing pods were evicted and replaced by pods with the updated CPU value.


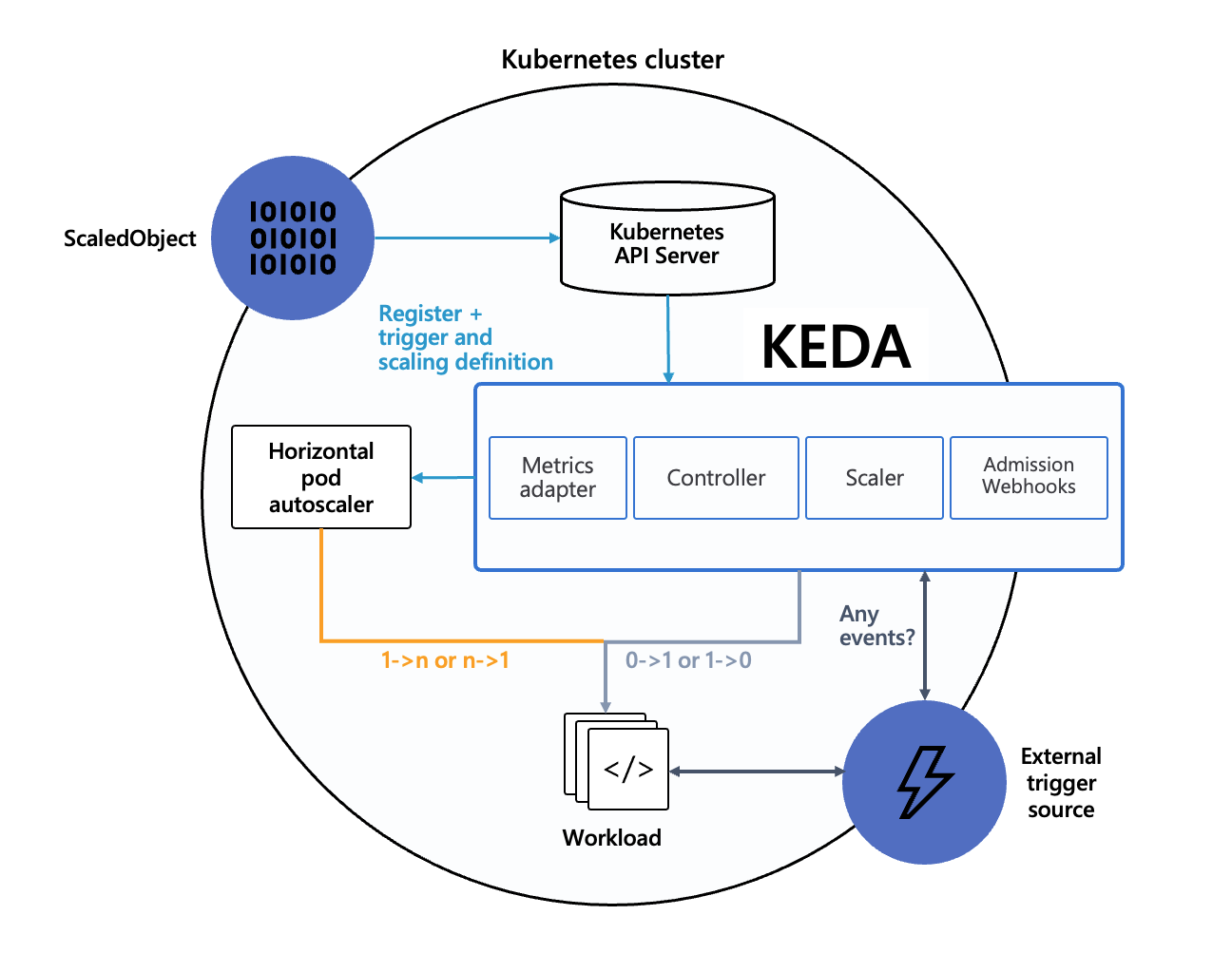

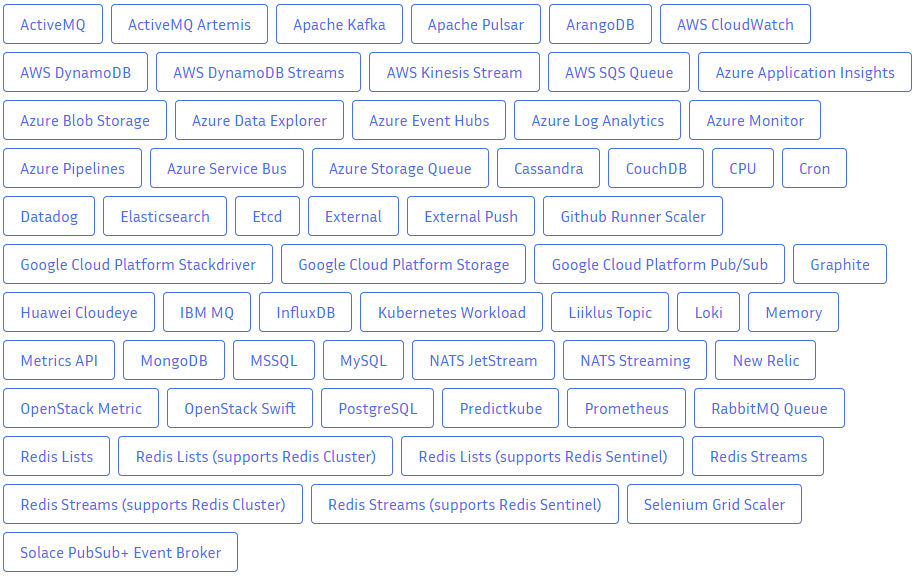

Kubernetes-based Event Driven Autoscaler (KEDA)

- An event-driven autoscaler

- It can autoscale workloads based on many different kinds of event sources.

- Lab

```shell
# Install KEDA
$cat <<EOT > keda-values.yaml
metricsServer:
  useHostNetwork: true

prometheus:
  metricServer:
    enabled: true
    port: 9022
    portName: metrics
    path: /metrics
    serviceMonitor:
      # Enables ServiceMonitor creation for the Prometheus Operator
      enabled: true
    podMonitor:
      # Enables PodMonitor creation for the Prometheus Operator
      enabled: true
  operator:
    enabled: true
    port: 8080
    serviceMonitor:
      # Enables ServiceMonitor creation for the Prometheus Operator
      enabled: true
    podMonitor:
      # Enables PodMonitor creation for the Prometheus Operator
      enabled: true
  webhooks:
    enabled: true
    port: 8080
    serviceMonitor:
      # Enables ServiceMonitor creation for the Prometheus webhooks
      enabled: true
EOT

# Deploy
$kubectl create namespace keda
$helm repo add kedacore https://kedacore.github.io/charts
$helm install keda kedacore/keda --version 2.10.2 --namespace keda -f keda-values.yaml

$kubectl get-all -n keda
NAME                                                                   NAMESPACE  AGE
configmap/kube-root-ca.crt                                             keda       67s
endpoints/keda-admission-webhooks                                      keda       37s
endpoints/keda-operator                                                keda       37s
endpoints/keda-operator-metrics-apiserver                              keda       37s
pod/keda-admission-webhooks-68cf687cbf-w7c7v                           keda       36s
pod/keda-operator-656478d687-7m25c                                     keda       36s
pod/keda-operator-metrics-apiserver-7fd585f657-44g2s                   keda       36s
secret/kedaorg-certs                                                   keda       26s
secret/sh.helm.release.v1.keda.v1                                      keda       37s
serviceaccount/default                                                 keda       67s
serviceaccount/keda-operator                                           keda       37s
service/keda-admission-webhooks                                        keda       37s
service/keda-operator                                                  keda       37s
service/keda-operator-metrics-apiserver                                keda       37s
deployment.apps/keda-admission-webhooks                                keda       37s
deployment.apps/keda-operator                                          keda       37s
deployment.apps/keda-operator-metrics-apiserver                        keda       37s
replicaset.apps/keda-admission-webhooks-68cf687cbf                     keda       36s
replicaset.apps/keda-operator-656478d687                               keda       36s
replicaset.apps/keda-operator-metrics-apiserver-7fd585f657             keda       36s
lease.coordination.k8s.io/operator.keda.sh                             keda       26s
endpointslice.discovery.k8s.io/keda-admission-webhooks-zxvkc           keda       37s
endpointslice.discovery.k8s.io/keda-operator-4w5dn                     keda       37s
endpointslice.discovery.k8s.io/keda-operator-metrics-apiserver-9pftl   keda       37s
podmonitor.monitoring.coreos.com/keda-operator                         keda       36s
podmonitor.monitoring.coreos.com/keda-operator-metrics-apiserver       keda       36s
servicemonitor.monitoring.coreos.com/keda-admission-webhooks           keda       36s
servicemonitor.monitoring.coreos.com/keda-operator                     keda       36s
servicemonitor.monitoring.coreos.com/keda-operator-metrics-apiserver   keda       36s
rolebinding.rbac.authorization.k8s.io/keda-operator                    keda       37s
role.rbac.authorization.k8s.io/keda-operator                           keda       37s

$kubectl get crd | grep keda
clustertriggerauthentications.keda.sh   2023-05-25T06:00:55Z
scaledjobs.keda.sh                      2023-05-25T06:00:55Z
scaledobjects.keda.sh                   2023-05-25T06:00:55Z
triggerauthentications.keda.sh          2023-05-25T06:00:55Z

# Create a test pod in the keda namespace
$kubectl apply -f php-apache.yaml -n keda
$kubectl get pod -n keda
NAME                                               READY   STATUS    RESTARTS      AGE
keda-admission-webhooks-68cf687cbf-w7c7v           1/1     Running   0             86s
keda-operator-656478d687-7m25c                     1/1     Running   1 (75s ago)   86s
keda-operator-metrics-apiserver-7fd585f657-44g2s   1/1     Running   0             86s
php-apache-698db99f59-x65vl                        1/1     Running   0             6s

# Create a ScaledObject policy (cron)
$cat <<EOT > keda-cron.yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: php-apache-cron-scaled
spec:
  minReplicaCount: 0
  maxReplicaCount: 2
  pollingInterval: 30
  cooldownPeriod: 300
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  triggers:
  - type: cron
    metadata:
      timezone: Asia/Seoul
      start: 00,15,30,45 * * * *
      end: 05,20,35,50 * * * *
      desiredReplicas: "1"
EOT
$kubectl apply -f keda-cron.yaml -n keda

# Monitor resources - in another terminal
# Pods are added/removed on each cron schedule.
$watch -d 'kubectl get ScaledObject,hpa,pod -n keda'

# Removed (1 -> 0)
NAME                                          SCALETARGETKIND      SCALETARGETNAME   MIN   MAX   TRIGGERS   AUTHENTICATION   READY   ACTIVE   FALLBACK   AGE
scaledobject.keda.sh/php-apache-cron-scaled   apps/v1.Deployment   php-apache        0     2     cron                        True    True     Unknown    43s

NAME                                                                  REFERENCE               TARGETS             MINPODS   MAXPODS   REPLICAS   AGE
horizontalpodautoscaler.autoscaling/keda-hpa-php-apache-cron-scaled   Deployment/php-apache   <unknown>/1 (avg)   0         2         0          43s

NAME                                                   READY   STATUS    RESTARTS        AGE
pod/keda-admission-webhooks-68cf687cbf-w7c7v           1/1     Running   0               4m30s
pod/keda-operator-656478d687-7m25c                     1/1     Running   1 (4m19s ago)   4m30s
pod/keda-operator-metrics-apiserver-7fd585f657-44g2s   1/1     Running   0               4m30s

# Added (0 -> 1)
NAME                                          SCALETARGETKIND      SCALETARGETNAME   MIN   MAX   TRIGGERS   AUTHENTICATION   READY   ACTIVE   FALLBACK   AGE
scaledobject.keda.sh/php-apache-cron-scaled   apps/v1.Deployment   php-apache        0     2     cron                        True    True     Unknown    10m

NAME                                                                  REFERENCE               TARGETS             MINPODS   MAXPODS   REPLICAS   AGE
horizontalpodautoscaler.autoscaling/keda-hpa-php-apache-cron-scaled   Deployment/php-apache   <unknown>/1 (avg)   1         2         0          10m

NAME                                                   READY   STATUS    RESTARTS      AGE
pod/keda-admission-webhooks-68cf687cbf-w7c7v           1/1     Running   0             14m
pod/keda-operator-656478d687-7m25c                     1/1     Running   1 (14m ago)   14m
pod/keda-operator-metrics-apiserver-7fd585f657-44g2s   1/1     Running   0             14m
pod/php-apache-698db99f59-2gghq                        1/1     Running   0             18s

# Check the HPA spec
$kubectl get hpa -o jsonpath={.items[0].spec} -n keda | jq
{
  "maxReplicas": 2,
  "metrics": [
    {
      "external": {
        "metric": {
          "name": "s0-cron-Asia-Seoul-00,15,30,45xxxx-05,20,35,50xxxx",
          "selector": {
            "matchLabels": {
              "scaledobject.keda.sh/name": "php-apache-cron-scaled"
            }
          }
        },
        "target": {
          "averageValue": "1",
          "type": "AverageValue"
        }
      },
      "type": "External"
    }
  ],
  "minReplicas": 1,
  "scaleTargetRef": {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "name": "php-apache"
  }
}
```
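The cron trigger above keeps `desiredReplicas` pods while the current time is inside a start/end window. Outside the window, the Deployment falls back to `minReplicaCount`.

The simplified bash model below checks that window. It rests on these assumptions:
- The i-th `start` minute pairs with the i-th `end` minute in the same hour, which holds for the schedule above.
- `cron_desired` is a hypothetical helper.

KEDA's real cron scaler evaluates full cron expressions. The delays from `pollingInterval` and `cooldownPeriod` (300 s before dropping to 0) are not modeled.

```bash
#!/usr/bin/env bash
# cron_desired MINUTE START_MINUTES END_MINUTES [DESIRED] [MIN]
# Hypothetical helper: prints DESIRED if MINUTE falls in any [start, end)
# window of the hour, otherwise MIN.
cron_desired() {
  local minute=$((10#$1)) desired=${4:-1} min=${5:-0}
  local -a starts ends
  local i
  IFS=, read -ra starts <<< "$2"
  IFS=, read -ra ends <<< "$3"
  for i in "${!starts[@]}"; do
    # 10# forces base 10 so values like "08" are not parsed as octal
    if (( minute >= 10#${starts[i]} && minute < 10#${ends[i]} )); then
      echo "$desired"
      return 0
    fi
  done
  echo "$min"
}

cron_desired 02 00,15,30,45 05,20,35,50   # inside the 00-05 window: prints 1
cron_desired 07 00,15,30,45 05,20,35,50   # outside every window: prints 0
```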

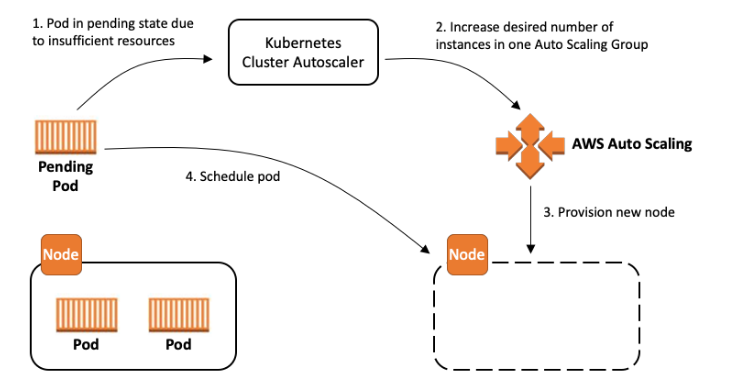

Cluster Autoscaler

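- A feature that automatically scales the number of nodes in and out - reference

- On EKS, CA scales nodes through Auto Scaling Groups.

- When a pod is created but stays Pending because the nodes lack resources, CA detects it, scales out the number of nodes, and places the pod.

- At regular intervals, CA checks utilization. It scales in underutilized nodes and reschedules their pods onto other nodes.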


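- Drawbacks

- Deleting a node directly from EKS does not delete the underlying EC2 instance, because node lifecycle depends on the ASG.

- You can protect specific nodes from scale-down, but it is hard to force a specific node to be scaled down.

- Prevent scale-down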

$kubectl annotate node <nodename> cluster-autoscaler.kubernetes.io/scale-down-disabled=true


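- Scaling is quite slow.

- The defaults of the scaling-related options are measured in minutes.

- scale-down-delay-after-add (10 min) : after a scale-out, CA waits this long before evaluating scale-in.

- scale-down-unneeded-time (10 min) : a node must be unneeded for this long before it is scaled in.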


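- CA scales in and out based on the resource requests of pods. It does not use the average utilization of the cluster or of individual nodes.

- CA first acts when a Pending pod appears. If requests and limits are not set appropriately, nodes may scale out even though average node utilization is low. Conversely, they may fail to scale out even though average utilization is high.

- By default, resource-based scheduling uses Requests (the guaranteed minimum). If Requests are set higher than what containers actually use, the nodes fill up on requested minimums alone, and new nodes must be added. The actual resource usage of the container processes is not considered.

- Now consider the opposite case: Requests are set low, with a large gap up to Limits. Each container can consume resources up to its Limits. Actual resource usage may therefore be high, yet the sum of Requests still leaves room for scheduling, so the cluster does not scale out.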

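CA reasons about requests rather than measured usage. That means the number of extra nodes a burst of pending pods needs can be roughly estimated from requests alone.

The bash sketch below assumes:
- Identical pods and empty nodes.
- CPU as the only constraint.
- A hypothetical allocatable of 1930m per node (about 9650m / 5 from the node viewer output in the lab below).
- A hypothetical helper name, `nodes_for_pods`.

Real CA runs a scheduling simulation that also accounts for memory, DaemonSets, and pods already on each node.

```bash
#!/usr/bin/env bash
# nodes_for_pods POD_COUNT POD_REQUEST_MILLICORES NODE_ALLOCATABLE_MILLICORES
# Hypothetical helper: lower-bound estimate of nodes needed, by CPU requests only.
nodes_for_pods() {
  local pods=$1 request=$2 allocatable=$3
  # Pods that fit on one empty node, judged by requests (actual usage is never consulted)
  local per_node=$(( allocatable / request ))
  if (( per_node == 0 )); then
    echo "a single pod request exceeds node allocatable" >&2
    return 1
  fi
  # ceil(pods / per_node)
  echo $(( (pods + per_node - 1) / per_node ))
}

# 15 pods requesting 500m each on nodes with 1930m allocatable
nodes_for_pods 15 500 1930   # 3 pods per node, prints 5
```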

- Lab

```shell
# The nodes must carry these tags
$aws ec2 describe-instances --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Reservations[*].Instances[*].Tags[*]" --output yaml | yh
- Key: k8s.io/cluster-autoscaler/myeks
  Value: owned
- Key: k8s.io/cluster-autoscaler/enabled
  Value: 'true'

# Change the ASG MaxSize from 3 to 6
$export ASG_NAME=$(aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].AutoScalingGroupName" --output text)
$aws autoscaling update-auto-scaling-group --auto-scaling-group-name ${ASG_NAME} --min-size 3 --desired-capacity 3 --max-size 6
$aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" --output table
-----------------------------------------------------------------
|                   DescribeAutoScalingGroups                   |
+------------------------------------------------+----+----+----+
|  eks-ng1-dac42868-5c98-7c58-3210-d1ef62e694c6  |  3 |  6 |  3 |
+------------------------------------------------+----+----+----+

# Deploy Cluster Autoscaler
$curl -s -O https://raw.githubusercontent.com/kubernetes/autoscaler/master/cluster-autoscaler/cloudprovider/aws/examples/cluster-autoscaler-autodiscover.yaml
$sed -i "s/<YOUR CLUSTER NAME>/$CLUSTER_NAME/g" cluster-autoscaler-autodiscover.yaml
$kubectl apply -f cluster-autoscaler-autodiscover.yaml
$kubectl get pod -n kube-system | grep cluster-autoscaler
cluster-autoscaler-74785c8d45-52pcx   1/1     Running   0          64s

# Deploy the test workload
$cat << EOT > nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-to-scaleout
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        service: nginx
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx-to-scaleout
        resources:
          limits:
            cpu: 500m
            memory: 512Mi
          requests:
            cpu: 500m
            memory: 512Mi
EOT
$kubectl apply -f nginx.yaml

# Scale out to 15 replicas
$kubectl scale --replicas=15 deployment/nginx-to-scaleout && date

# Monitor nodes - in another terminal
$watch -d 'kubectl get nodes'
Every 2.0s: kubectl get nodes    Thu May 25 15:34:37 2023

NAME                                               STATUS   ROLES    AGE     VERSION
ip-192-168-1-169.ap-northeast-2.compute.internal   Ready    <none>   18s     v1.24.13-eks-0a21954
ip-192-168-1-83.ap-northeast-2.compute.internal    Ready    <none>   3h42m   v1.24.13-eks-0a21954
ip-192-168-2-205.ap-northeast-2.compute.internal   Ready    <none>   3h42m   v1.24.13-eks-0a21954
ip-192-168-3-165.ap-northeast-2.compute.internal   Ready    <none>   3h42m   v1.24.13-eks-0a21954
ip-192-168-3-218.ap-northeast-2.compute.internal   Ready    <none>   17s     v1.24.13-eks-0a21954

# Check the logs and the Desired Capacity (3 -> 5)
$kubectl -n kube-system logs -f deployment/cluster-autoscaler
$aws autoscaling describe-auto-scaling-groups \
  --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" \
  --output table
-----------------------------------------------------------------
|                   DescribeAutoScalingGroups                   |
+------------------------------------------------+----+----+----+
|  eks-ng1-dac42868-5c98-7c58-3210-d1ef62e694c6  |  3 |  6 |  5 |
+------------------------------------------------+----+----+----+

# EKS Node Viewer
$./eks-node-viewer
5 nodes (8725m/9650m) 90.4% cpu ████████████████████████████████████░░░░ $0.260/hour | $189.
42 pods (0 pending 42 running 42 bound)

ip-192-168-2-205.ap-northeast-2.compute.internal cpu █████████████████████████████████░░ 95
ip-192-168-1-83.ap-northeast-2.compute.internal  cpu ███████████████████████████████░░░░ 89
ip-192-168-3-165.ap-northeast-2.compute.internal cpu ███████████████████████████████████ 100
ip-192-168-1-169.ap-northeast-2.compute.internal cpu █████████████████████████████░░░░░░ 84
ip-192-168-3-218.ap-northeast-2.compute.internal cpu █████████████████████████████░░░░░░ 84

# Delete the Deployment
$kubectl delete -f nginx.yaml && date
Thu May 25 15:41:52 KST 2023

# Confirm node scale-in (after 10 minutes by default)
$watch -d 'kubectl get nodes'

# Check the Desired Capacity (5 -> 3)
$aws autoscaling describe-auto-scaling-groups \
  --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" \
  --output table
-----------------------------------------------------------------
|                   DescribeAutoScalingGroups                   |
+------------------------------------------------+----+----+----+
|  eks-ng1-dac42868-5c98-7c58-3210-d1ef62e694c6  |  3 |  6 |  3 |
+------------------------------------------------+----+----+----+
```

- Nodes scale out in proportion to the number of pending pods.

- Reducing the number of running pods scales the nodes back in.


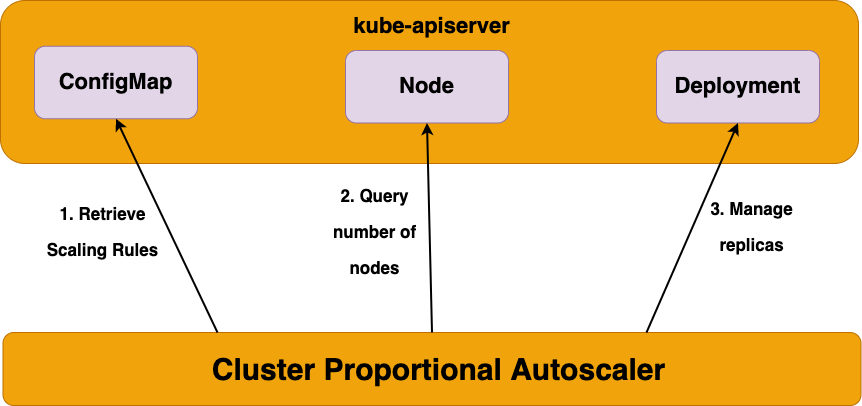

Cluster Proportional Autoscaler

- A horizontal pod autoscaler that adjusts the number of pods in proportion to the number of nodes - reference

- Lab

```shell
# Deploy CPA: add the Helm repository
$helm repo add cluster-proportional-autoscaler https://kubernetes-sigs.github.io/cluster-proportional-autoscaler

# Deploy the test pods
$cat <<EOT > cpa-nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        resources:
          limits:
            cpu: "100m"
            memory: "64Mi"
          requests:
            cpu: "100m"
            memory: "64Mi"
        ports:
        - containerPort: 80
EOT
$kubectl apply -f cpa-nginx.yaml

# Configure the CPA rules
# 4 nodes -> 3 pods
# 5 nodes -> 5 pods
$cat <<EOF > cpa-values.yaml
config:
  ladder:
    nodesToReplicas:
      - [1, 1]
      - [2, 2]
      - [3, 3]
      - [4, 3]
      - [5, 5]
options:
  namespace: default
  target: "deployment/nginx-deployment"
EOF

# Install/upgrade with helm
$helm upgrade --install cluster-proportional-autoscaler -f cpa-values.yaml cluster-proportional-autoscaler/cluster-proportional-autoscaler

# Increase the node count (3 -> 5)
$export ASG_NAME=$(aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].AutoScalingGroupName" --output text)
$aws autoscaling update-auto-scaling-group --auto-scaling-group-name ${ASG_NAME} --min-size 5 --desired-capacity 5 --max-size 5
$aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" --output table
-----------------------------------------------------------------
|                   DescribeAutoScalingGroups                   |
+------------------------------------------------+----+----+----+
|  eks-ng1-dac42868-5c98-7c58-3210-d1ef62e694c6  |  5 |  5 |  5 |
+------------------------------------------------+----+----+----+

# Decrease the node count (5 -> 4)
$aws autoscaling update-auto-scaling-group --auto-scaling-group-name ${ASG_NAME} --min-size 4 --desired-capacity 4 --max-size 4
$aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" --output table
-----------------------------------------------------------------
|                   DescribeAutoScalingGroups                   |
+------------------------------------------------+----+----+----+
|  eks-ng1-dac42868-5c98-7c58-3210-d1ef62e694c6  |  4 |  4 |  4 |
+------------------------------------------------+----+----+----+

# Monitor pods - in another terminal
$watch -d kubectl get pod

# Pods increase when nodes are added
NAME                                               READY   STATUS    RESTARTS   AGE
cluster-proportional-autoscaler-75bddf49cb-z8jw7   1/1     Running   0          6m5s
nginx-deployment-858477475d-dvlv8                  1/1     Running   0          2m11s
nginx-deployment-858477475d-hqrxj                  1/1     Running   0          6m1s
nginx-deployment-858477475d-rdsdg                  1/1     Running   0          6m1s
nginx-deployment-858477475d-wfv92                  1/1     Running   0          7m41s
nginx-deployment-858477475d-wz7hj                  1/1     Running   0          2m11s

# Pods decrease when nodes are removed
NAME                                               READY   STATUS    RESTARTS   AGE
cluster-proportional-autoscaler-75bddf49cb-jqmj2   1/1     Running   0          52s
nginx-deployment-858477475d-dvlv8                  1/1     Running   0          6m4s
nginx-deployment-858477475d-hqrxj                  1/1     Running   0          9m54s
nginx-deployment-858477475d-rdsdg                  1/1     Running   0          9m54s
```

- Adding nodes increases the pod count.

- Removing nodes decreases the pod count.
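The ladder in `cpa-values.yaml` maps the node count to the replicas of the largest step whose node threshold does not exceed the current node count. With `[4, 3]`, 4 nodes still yield 3 pods, while `[5, 5]` gives 5 pods at 5 nodes.

The bash sketch below performs that lookup. It assumes the steps are listed in ascending order, and it uses a hypothetical `ladder_replicas` helper with a `nodes:replicas` string format.

```bash
#!/usr/bin/env bash
# ladder_replicas NODE_COUNT "nodes:replicas nodes:replicas ..."
# Hypothetical helper: returns the replicas of the largest threshold <= NODE_COUNT.
ladder_replicas() {
  local nodes=$1 steps=$2 step threshold count replicas=0
  for step in $steps; do
    threshold=${step%%:*}
    count=${step##*:}
    # Later (larger) thresholds that still fit overwrite earlier ones
    if (( nodes >= threshold )); then
      replicas=$count
    fi
  done
  echo "$replicas"
}

LADDER="1:1 2:2 3:3 4:3 5:5"   # same steps as nodesToReplicas in cpa-values.yaml
for n in 3 4 5; do
  echo "$n nodes -> $(ladder_replicas "$n" "$LADDER") pods"
done
```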

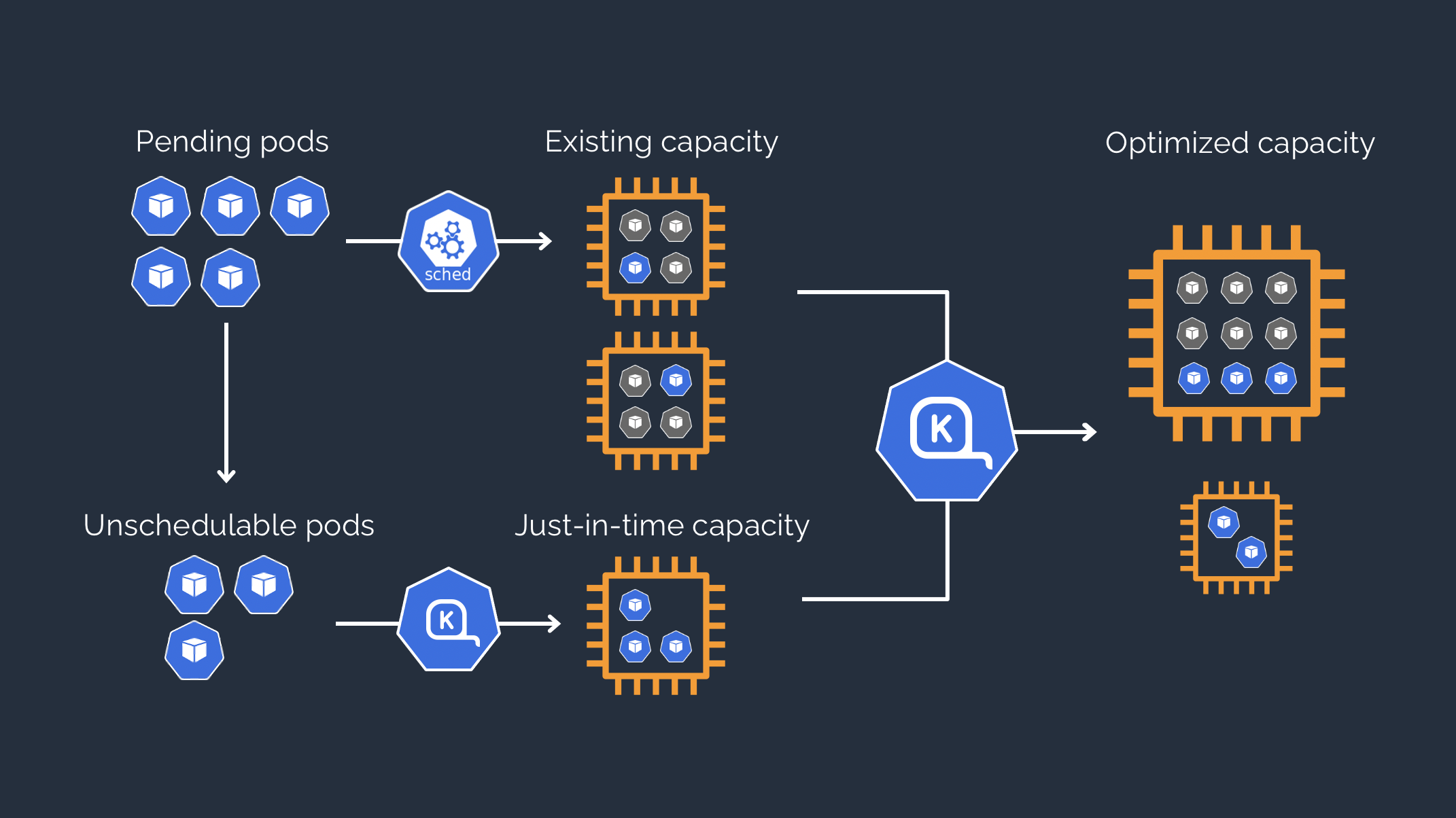

Karpenter

- An open-source, high-performance cluster autoscaler developed by AWS

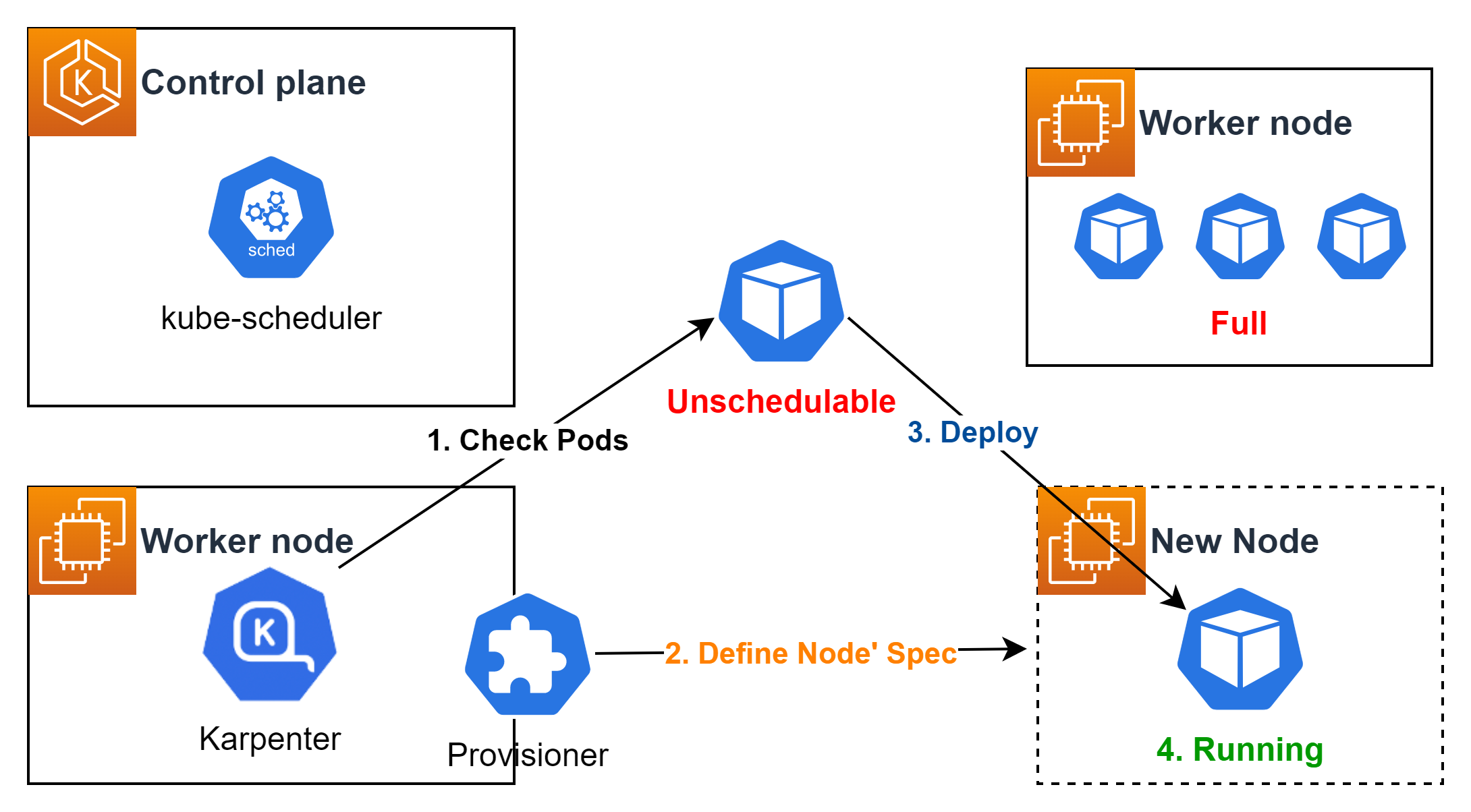

- How it works - link

- Watching : continuously watches for unschedulable pods.

- Evaluating : checks the scheduling constraints of those pods (resources, nodeSelector, nodeAffinity, tolerations, etc.).

- Provisioning : provisions nodes that meet the pods' requirements and places the pods on them.

- Removing : removes nodes that are no longer needed.

vs Cluster Autoscaler?

With CA, nodes are scaled through ASGs, which are separate resources you must manage. Karpenter handles most of the scaling work itself, which makes it much faster and simpler.

- Scheduling constraints

- Resource requests : CPU/Mem

```yaml
containers:
- name: app
  image: myimage
  resources:
    requests:
      memory: "128Mi"
      cpu: "500m"
    limits:
      memory: "256Mi"
      cpu: "1000m"
```

- Node selection : select nodes that carry specific labels

```yaml
nodeSelector:
  topology.kubernetes.io/zone: us-west-2a
  karpenter.sh/capacity-type: spot
```

- Node affinity : node required & preferred

```yaml
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
        - matchExpressions:
            - key: "topology.kubernetes.io/zone"
              operator: "In"
              values: ["us-west-2a", "us-west-2b"]
```

- Pod affinity/anti-affinity : pod required & preferred

```yaml
affinity:
  # The pod must run in the same zone as pods labeled system=backend
  podAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
            - key: system
              operator: In
              values:
                - backend
        topologyKey: topology.kubernetes.io/zone
  # Pods labeled app=inflate must run on different nodes
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchLabels:
            app: inflate
        topologyKey: kubernetes.io/hostname
```

- Topology spread : pod spread constraints

```yaml
topologySpreadConstraints:
  - maxSkew: 1  # max allowed difference in matching pods between any two topology domains
    topologyKey: "topology.kubernetes.io/zone"  # node label key that defines a topology domain
    whenUnsatisfiable: ScheduleAnyway  # if unsatisfiable: DoNotSchedule (default) or ScheduleAnyway (schedule anyway)
    labelSelector:  # matching pods are counted when computing the spread
      matchLabels:
        dev: jjones
```

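The `maxSkew` rule can be checked by hand. Placing a pod in a domain is allowed when (matching pods in that domain + 1) minus the minimum count across domains stays within `maxSkew`.

The simplified bash sketch below assumes:
- Every domain is eligible.
- The incoming pod itself matches the `labelSelector`.
- A hypothetical helper name, `spread_allows`.

With `whenUnsatisfiable: ScheduleAnyway`, as in the example above, a violation only lowers a node's score instead of blocking scheduling.

```bash
#!/usr/bin/env bash
# spread_allows MAX_SKEW TARGET_INDEX COUNT_0 COUNT_1 ...
# Hypothetical helper: exit status 0 if placing one more matching pod in
# domain TARGET_INDEX keeps the skew within MAX_SKEW (DoNotSchedule semantics).
spread_allows() {
  local max_skew=$1 target=$2
  shift 2
  local -a counts=("$@")
  local min=${counts[0]} c
  # Global minimum of matching pods over all domains
  for c in "${counts[@]}"; do
    if (( c < min )); then min=$c; fi
  done
  # Skew if the incoming pod lands in the target domain
  local skew=$(( counts[target] + 1 - min ))
  (( skew <= max_skew ))
}

# Zone 0 has 2 matching pods, zone 1 has 1
spread_allows 1 0 2 1 && echo "zone 0: allowed" || echo "zone 0: violates maxSkew"   # skew 3 - 1 = 2
spread_allows 1 1 2 1 && echo "zone 1: allowed" || echo "zone 1: violates maxSkew"   # skew 2 - 1 = 1
```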

- Provisioner

- Defines constraints on the nodes that can be created and on the pods that can run on them.

```yaml
apiVersion: karpenter.sh/v1alpha5
kind: Provisioner
metadata:
  name: default
spec:
  labels:
    type: karpenter
  requirements:
    - key: karpenter.sh/capacity-type
      operator: In
      values: ["on-demand"]
    - key: "node.kubernetes.io/instance-type"
      operator: In
      values: ["c5.large", "m5.large", "m5.xlarge"]
  limits:
    resources:
      cpu: 1000
      memory: 1000Gi
  providerRef:
    name: default
  ttlSecondsAfterEmpty: 30
---
apiVersion: karpenter.k8s.aws/v1alpha1
kind: AWSNodeTemplate
metadata:
  name: default
spec:
  subnetSelector:
    karpenter.sh/discovery: ${EKS_CLUSTER_NAME}
  securityGroupSelector:
    karpenter.sh/discovery: ${EKS_CLUSTER_NAME}
```

- capacity-type : on-demand, spot

- instance-type : provisions the cheapest of the listed instance types that fits the pods

- limits.resources : once the total provisioned CPU/memory reaches these limits, no more nodes are provisioned

- ttlSecondsAfterEmpty : when a node has no pods other than DaemonSet pods, it is cleaned up automatically after this many seconds

- AWSNodeTemplate : discovers the security groups, subnets, and other settings needed to provision instances

apiVersion: karpenter.k8s.aws/v1alpha1
kind: AWSNodeTemplate
metadata:
  name: al2-example
spec:
  amiFamily: AL2  # the bash userData below requires AL2; Bottlerocket expects TOML userData
  instanceProfile: MyInstanceProfile
  subnetSelector:
    karpenter.sh/discovery: ${EKS_CLUSTER_NAME}
  securityGroupSelector:
    karpenter.sh/discovery: ${EKS_CLUSTER_NAME}
  userData: |
    #!/bin/bash
    mkdir -p ~ec2-user/.ssh/
    touch ~ec2-user/.ssh/authorized_keys
    cat >> ~ec2-user/.ssh/authorized_keys <<EOF
    {{ insertFile "../my-authorized_keys" | indent 4 }}
    EOF
    chmod -R go-w ~ec2-user/.ssh/authorized_keys
    chown -R ec2-user ~ec2-user/.ssh
  amiSelector:
    karpenter.sh/discovery: my-cluster

- subnetSelector(required) : discovers the subnets to attach to the instance

- securityGroupSelector(required) : discovers the security groups to attach to the instance

- instanceProfile : attaches an instance profile

- amiFamily : the AMI family to provision; Karpenter uses this value to find the matching EKS-optimized AMI

- amiSelector : selects a custom AMI

- userData : supplies user data to the instance
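Both selectors work by matching AWS resource tags such as `karpenter.sh/discovery`. Below is a minimal bash sketch of that matching. It assumes a single exact key=value selector and uses a hypothetical inventory of subnets. The subnet IDs, tags, and the `matches_selector` helper are illustrative only.

```bash
#!/usr/bin/env bash
# Simplified model of tag-based discovery via subnetSelector / securityGroupSelector.
# Assumptions: one exact key=value selector; subnet IDs and tags are hypothetical.

# matches_selector KEY=VALUE TAG...   succeeds if any tag equals the selector
matches_selector() {
  local selector=$1 tag
  shift
  for tag in "$@"; do
    [[ $tag == "$selector" ]] && return 0
  done
  return 1
}

CLUSTER_NAME=myeks2
# Hypothetical inventory: "<subnet-id> <tag> <tag> ..."
subnets=(
  "subnet-aaa karpenter.sh/discovery=myeks2 Name=private-a"
  "subnet-bbb Name=public-b"
  "subnet-ccc karpenter.sh/discovery=myeks2 Name=private-c"
)

for entry in "${subnets[@]}"; do
  read -r id tags <<<"$entry"
  # $tags is intentionally unquoted so each tag becomes its own argument
  if matches_selector "karpenter.sh/discovery=${CLUSTER_NAME}" $tags; then
    echo "selected: $id"
  fi
done
```

In a real account, `aws ec2 describe-subnets --filters "Name=tag:karpenter.sh/discovery,Values=${CLUSTER_NAME}"` lists the subnets that such a selector would pick up.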


- Hands-on

# Download the template
$curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/karpenter-preconfig.yaml

# Deploy CFN Stack
$aws cloudformation deploy \
--template-file karpenter-preconfig.yaml \
--stack-name myeks2 \
--parameter-overrides KeyName=atomy-test-key \
SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 \
MyIamUserAccessKeyID='accesskey' \
MyIamUserSecretAccessKey='secretkey' \
ClusterBaseName=myeks2 --region ap-northeast-2

$ssh -i ~/.ssh/eljoe-test-key.pem ec2-user@$(aws cloudformation describe-stacks --stack-name myeks2 --query 'Stacks[*].Outputs[0].OutputValue' --output text)

# Install EKS Node Viewer
$go install github.com/awslabs/eks-node-viewer/cmd/eks-node-viewer@latest

# Check environment variables
$export | egrep 'ACCOUNT|AWS_|CLUSTER' | egrep -v 'SECRET|KEY'

# Declare Karpenter environment variables
$export KARPENTER_VERSION=v0.27.5
$export TEMPOUT=$(mktemp)
$echo $KARPENTER_VERSION, $CLUSTER_NAME, $AWS_DEFAULT_REGION, $AWS_ACCOUNT_ID, $TEMPOUT
v0.27.5, myeks2, ap-northeast-2, 131421611146, /tmp/tmp.IrBBdV7XhN

# Deploy CFN Stack - IAM Policy, Role, EC2 Instance Profile
$curl -fsSL https://karpenter.sh/"${KARPENTER_VERSION}"/getting-started/getting-started-with-karpenter/cloudformation.yaml > $TEMPOUT \
&& aws cloudformation deploy \
--stack-name "Karpenter-${CLUSTER_NAME}" \
--template-file "${TEMPOUT}" \
--capabilities CAPABILITY_NAMED_IAM \
--parameter-overrides "ClusterName=${CLUSTER_NAME}"

# Create the EKS cluster
$eksctl create cluster -f - <<EOF
---
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: ${CLUSTER_NAME}
  region: ${AWS_DEFAULT_REGION}
  version: "1.24"
  tags:
    karpenter.sh/discovery: ${CLUSTER_NAME}

iam:
  withOIDC: true
  serviceAccounts:
  - metadata:
      name: karpenter
      namespace: karpenter
    roleName: ${CLUSTER_NAME}-karpenter
    attachPolicyARNs:
    - arn:aws:iam::${AWS_ACCOUNT_ID}:policy/KarpenterControllerPolicy-${CLUSTER_NAME}
    roleOnly: true

iamIdentityMappings:
- arn: "arn:aws:iam::${AWS_ACCOUNT_ID}:role/KarpenterNodeRole-${CLUSTER_NAME}"
  username: system:node:{{EC2PrivateDNSName}}
  groups:
  - system:bootstrappers
  - system:nodes

managedNodeGroups:
- instanceType: m5.large
  amiFamily: AmazonLinux2
  name: ${CLUSTER_NAME}-ng
  desiredCapacity: 2
  minSize: 1
  maxSize: 10
  iam:
    withAddonPolicies:
      externalDNS: true

## Optionally run on fargate
# fargateProfiles:
# - name: karpenter
#   selectors:
#   - namespace: karpenter
EOF

# Verify the EKS deployment
$eksctl get cluster
NAME    REGION          EKSCTL CREATED
myeks2  ap-northeast-2  True

$eksctl get nodegroup --cluster $CLUSTER_NAME
CLUSTER NODEGROUP STATUS CREATED              MIN SIZE MAX SIZE DESIRED CAPACITY INSTANCE TYPE IMAGE ID   ASG NAME                                           TYPE
myeks2  myeks2-ng ACTIVE 2023-05-25T07:54:10Z 1        10       2                m5.large      AL2_x86_64 eks-myeks2-ng-a0c428f3-4c2c-ae51-73af-7217d913c09d managed

$eksctl get iamidentitymapping --cluster $CLUSTER_NAME
ARN                                                                                             USERNAME                          GROUPS                            ACCOUNT
arn:aws:iam::131421611146:role/KarpenterNodeRole-myeks2                                        system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes
arn:aws:iam::131421611146:role/eksctl-myeks2-nodegroup-myeks2-ng-NodeInstanceRole-L8PM7V1SM1GA system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes

$eksctl get iamserviceaccount --cluster $CLUSTER_NAME
NAMESPACE    NAME       ROLE ARN
karpenter    karpenter  arn:aws:iam::131421611146:role/myeks2-karpenter
kube-system  aws-node   arn:aws:iam::131421611146:role/eksctl-myeks2-addon-iamserviceaccount-kube-s-Role1-AF112N9L0R3V

# Check with EKS Node Viewer
$cd ~/go/bin && ./eks-node-viewer
2 nodes (450m/3860m) 11.7% cpu █████░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░ $0.236/hour | $172.280/month
6 pods (0 pending 6 running 6 bound)

ip-192-168-67-231.ap-northeast-2.compute.internal cpu ██████░░░░░░░░░░░░░░░░░░░░░░░░░░░░░ 17% (4 pods
ip-192-168-6-117.ap-northeast-2.compute.internal  cpu ██░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░  6% (2 pods
Press any key to quit

# Check Kubernetes
$kubectl cluster-info
Kubernetes control plane is running at https://905077803119879E9E696232FDB2A63F.gr7.ap-northeast-2.eks.amazonaws.com
CoreDNS is running at https://905077803119879E9E696232FDB2A63F.gr7.ap-northeast-2.eks.amazonaws.com/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

$kubectl get node --label-columns=node.kubernetes.io/instance-type,eks.amazonaws.com/capacityType,topology.kubernetes.io/zone
NAME                                               STATUS  ROLES   AGE    VERSION               INSTANCE-TYPE  CAPACITYTYPE  ZONE
ip-192-168-6-117.ap-northeast-2.compute.internal   Ready   <none>  4m25s  v1.24.13-eks-0a21954  m5.large       ON_DEMAND     ap-northeast-2b
ip-192-168-67-231.ap-northeast-2.compute.internal  Ready   <none>  4m26s  v1.24.13-eks-0a21954  m5.large       ON_DEMAND     ap-northeast-2a

$kubectl get pod -n kube-system -owide
NAME                     READY  STATUS   RESTARTS  AGE    IP              NODE                                               NOMINATED NODE  READINESS GATES
aws-node-fz5xc           1/1    Running  0         4m41s  192.168.67.231  ip-192-168-67-231.ap-northeast-2.compute.internal  <none>          <none>
aws-node-vjt49           1/1    Running  0         4m40s  192.168.6.117   ip-192-168-6-117.ap-northeast-2.compute.internal   <none>          <none>
coredns-dc4979556-jc69s  1/1    Running  0         11m    192.168.86.45   ip-192-168-67-231.ap-northeast-2.compute.internal  <none>          <none>
coredns-dc4979556-qnv9x  1/1    Running  0         11m    192.168.95.64   ip-192-168-67-231.ap-northeast-2.compute.internal  <none>          <none>
kube-proxy-lfvwn         1/1    Running  0         4m40s  192.168.6.117   ip-192-168-6-117.ap-northeast-2.compute.internal   <none>          <none>
kube-proxy-x79v7         1/1    Running  0         4m41s  192.168.67.231  ip-192-168-67-231.ap-northeast-2.compute.internal  <none>          <none>

$kubectl describe cm -n kube-system aws-auth
...
mapRoles:
----
- groups:
  - system:bootstrappers
  - system:nodes
  rolearn: arn:aws:iam::131421611146:role/KarpenterNodeRole-myeks2
  username: system:node:{{EC2PrivateDNSName}}
- groups:
  - system:bootstrappers
  - system:nodes
  rolearn: arn:aws:iam::131421611146:role/eksctl-myeks2-nodegroup-myeks2-ng-NodeInstanceRole-L8PM7V1SM1GA
  username: system:node:{{EC2PrivateDNSName}}
...
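The eks-node-viewer summary above can be checked by hand. 450m of 3860m allocatable CPU is 11.7%, which is 1930m per m5.large. Dividing $172.280/month by $0.236/hour gives a 730-hour month. The sketch below recomputes these figures. The 730-hour month is inferred from those two numbers rather than taken from eks-node-viewer's source, and `cpu_pct` and `monthly_cost` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Recompute the eks-node-viewer summary line from its own numbers.
# Assumption: a 730-hour month, which is what $172.280 / $0.236 works out to.

# cpu_pct REQUESTED_MILLICORES ALLOCATABLE_MILLICORES -> percentage, 1 decimal
cpu_pct() {
  awk -v r="$1" -v a="$2" 'BEGIN { printf "%.1f\n", r / a * 100 }'
}

# monthly_cost HOURLY_USD [HOURS_PER_MONTH] -> USD per month, 3 decimals
monthly_cost() {
  awk -v h="$1" -v n="${2:-730}" 'BEGIN { printf "%.3f\n", h * n }'
}

echo "cpu: $(cpu_pct 450 3860)%"                                     # 450m requested of 3860m
echo "per node: \$$(awk 'BEGIN { printf "%.3f", 0.236 / 2 }')/hour"  # 2 x m5.large
echo "month: \$$(monthly_cost 0.236)"
```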
# Declare environment variables for the Karpenter install
$export CLUSTER_ENDPOINT="$(aws eks describe-cluster --name ${CLUSTER_NAME} --query "cluster.endpoint" --output text)"
$export KARPENTER_IAM_ROLE_ARN="arn:aws:iam::${AWS_ACCOUNT_ID}:role/${CLUSTER_NAME}-karpenter"
$echo $CLUSTER_ENDPOINT $KARPENTER_IAM_ROLE_ARN
$docker logout public.ecr.aws

# Install Karpenter
$helm upgrade --install karpenter oci://public.ecr.aws/karpenter/karpenter --version ${KARPENTER_VERSION} --namespace karpenter --create-namespace \
--set serviceAccount.annotations."eks\.amazonaws\.com/role-arn"=${KARPENTER_IAM_ROLE_ARN} \
--set settings.aws.clusterName=${CLUSTER_NAME} \
--set settings.aws.defaultInstanceProfile=KarpenterNodeInstanceProfile-${CLUSTER_NAME} \
--set settings.aws.interruptionQueueName=${CLUSTER_NAME} \
--set controller.resources.requests.cpu=1 \
--set controller.resources.requests.memory=1Gi \
--set controller.resources.limits.cpu=1 \
--set controller.resources.limits.memory=1Gi \
--wait

# Verify
$kubectl get-all -n karpenter
NAME                                                 NAMESPACE  AGE
configmap/config-logging                             karpenter  57s
configmap/karpenter-global-settings                  karpenter  57s
configmap/kube-root-ca.crt                           karpenter  57s
endpoints/karpenter                                  karpenter  57s
pod/karpenter-6c6bdb7766-5f6rb                       karpenter  57s
pod/karpenter-6c6bdb7766-mjbdt                       karpenter  57s
secret/karpenter-cert                                karpenter  57s
secret/sh.helm.release.v1.karpenter.v1               karpenter  57s
serviceaccount/default                               karpenter  57s
serviceaccount/karpenter                             karpenter  57s
service/karpenter                                    karpenter  57s
deployment.apps/karpenter                            karpenter  57s
replicaset.apps/karpenter-6c6bdb7766                 karpenter  57s
lease.coordination.k8s.io/karpenter-leader-election  karpenter  49s
endpointslice.discovery.k8s.io/karpenter-bfgdd       karpenter  57s
poddisruptionbudget.policy/karpenter                 karpenter  57s
rolebinding.rbac.authorization.k8s.io/karpenter      karpenter  57s
role.rbac.authorization.k8s.io/karpenter             karpenter  57s

$kubectl get cm -n karpenter karpenter-global-settings -o jsonpath={.data} | jq
{
  "aws.clusterEndpoint": "",
  "aws.clusterName": "myeks2",
  "aws.defaultInstanceProfile": "KarpenterNodeInstanceProfile-myeks2",
  "aws.enableENILimitedPodDensity": "true",
  "aws.enablePodENI": "false",
  "aws.interruptionQueueName": "myeks2",
  "aws.isolatedVPC": "false",
  "aws.nodeNameConvention": "ip-name",
  "aws.vmMemoryOverheadPercent": "0.075",
  "batchIdleDuration": "1s",
  "batchMaxDuration": "10s",
  "featureGates.driftEnabled": "false"
}

$kubectl get crd | grep karpenter
awsnodetemplates.karpenter.k8s.aws  2023-05-25T08:45:16Z
provisioners.karpenter.sh           2023-05-25T08:45:16Z

- ExternalDNS & kube-ops-view

# ExternalDNS
$MyDomain=eljoe-test.link
$echo "export MyDomain=eljoe-test.link" >> /etc/profile
$MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)
$curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml
$MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -

# kube-ops-view
$helm repo add geek-cookbook https://geek-cookbook.github.io/charts/
$helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set env.TZ="Asia/Seoul" --namespace kube-system
$kubectl patch svc -n kube-system kube-ops-view -p '{"spec":{"type":"LoadBalancer"}}'
$kubectl annotate service kube-ops-view -n kube-system "external-dns.alpha.kubernetes.io/hostname=kubeopsview.$MyDomain"
$echo -e "Kube Ops View URL = http://kubeopsview.$MyDomain:8080/#scale=1.5"

- Create the Provisioner

$cat <<EOF | kubectl apply -f -
apiVersion: karpenter.sh/v1alpha5
kind: Provisioner
metadata:
  name: default
spec:
  requirements:
    - key: karpenter.sh/capacity-type
      operator: In
      values: ["spot"]
  limits:
    resources:
      cpu: 1000
  providerRef:
    name: default
  ttlSecondsAfterEmpty: 30
---
apiVersion: karpenter.k8s.aws/v1alpha1
kind: AWSNodeTemplate
metadata:
  name: default
spec:
  subnetSelector:
    karpenter.sh/discovery: ${CLUSTER_NAME}
  securityGroupSelector:
    karpenter.sh/discovery: ${CLUSTER_NAME}
EOF

$kubectl get awsnodetemplates,provisioners
NAME                                        AGE
awsnodetemplate.karpenter.k8s.aws/default   18s

NAME                               AGE
provisioner.karpenter.sh/default   18s

- Test

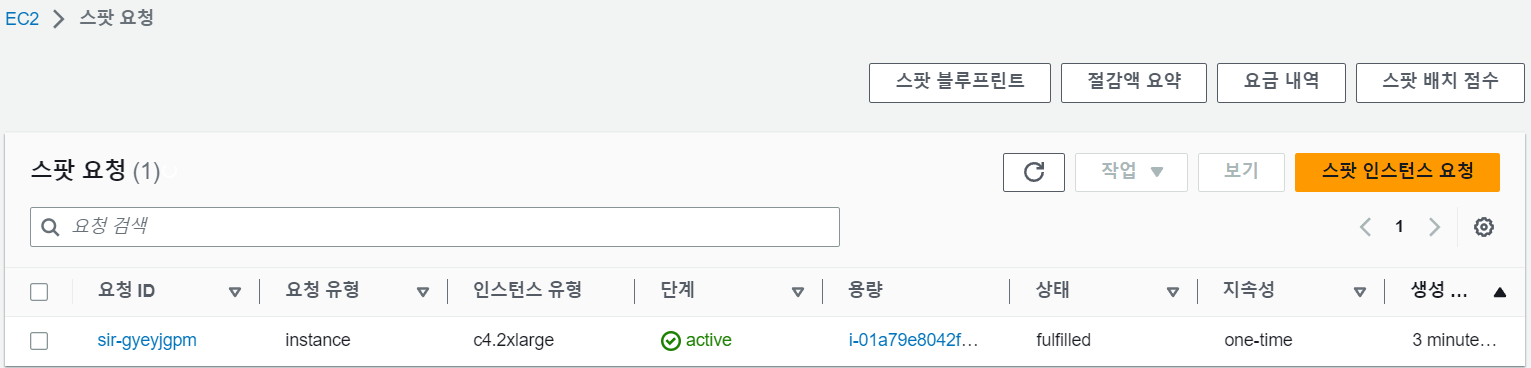

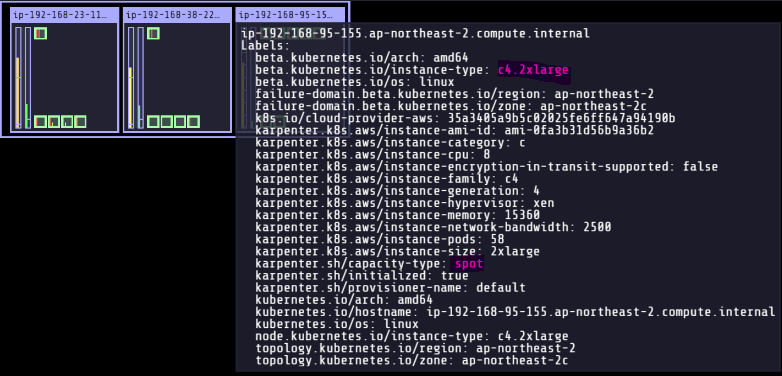

# Deployment whose pods each request 1 CPU
$cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: inflate
spec:
  replicas: 0
  selector:
    matchLabels:
      app: inflate
  template:
    metadata:
      labels:
        app: inflate
    spec:
      terminationGracePeriodSeconds: 0
      containers:
        - name: inflate
          image: public.ecr.aws/eks-distro/kubernetes/pause:3.7
          resources:
            requests:
              cpu: 1
EOF

# Scale out to 5 pods
$kubectl scale deployment inflate --replicas 5
$kubectl logs -f -n karpenter -l app.kubernetes.io/name=karpenter -c controller
...
2023-05-25T09:01:53.689Z INFO controller.provisioner.cloudprovider launched instance {"commit": "698f22f-dirty", "provisioner": "default", "id": "i-01a79e8042f1b6d02", "hostname": "ip-192-168-95-155.ap-northeast-2.compute.internal", "instance-type": "c4.2xlarge", "zone": "ap-northeast-2c", "capacity-type": "spot", "capacity": {"cpu":"8","ephemeral-storage":"20Gi","memory":"14208Mi","pods":"58"}}

# Check the Spot Instance
$aws ec2 describe-spot-instance-requests --filters "Name=state,Values=active" --output table
+----------------------------------------------------------------+
||                     SpotInstanceRequests                     ||
|+--------------------------------+-----------------------------+|
||  CreateTime                    |  2023-05-25T09:01:52+00:00  ||
||  InstanceId                    |  i-01a79e8042f1b6d02        ||
||  InstanceInterruptionBehavior  |  terminate                  ||
||  LaunchedAvailabilityZone      |  ap-northeast-2c            ||
||  ProductDescription            |  Linux/UNIX                 ||
||  SpotInstanceRequestId         |  sir-gyeyjgpm               ||
||  SpotPrice                     |  0.454000                   ||
||  State                         |  active                     ||
||  Type                          |  one-time                   ||
|+--------------------------------+-----------------------------+|

$kubectl get node --label-columns=eks.amazonaws.com/capacityType,karpenter.sh/capacity-type,node.kubernetes.io/instance-type
NAME                                               STATUS  ROLES   AGE    VERSION               CAPACITYTYPE  CAPACITY-TYPE  INSTANCE-TYPE
ip-192-168-23-111.ap-northeast-2.compute.internal  Ready   <none>  25m    v1.24.13-eks-0a21954  ON_DEMAND                    m5.large
ip-192-168-38-225.ap-northeast-2.compute.internal  Ready   <none>  25m    v1.24.13-eks-0a21954  ON_DEMAND                    m5.large
ip-192-168-95-155.ap-northeast-2.compute.internal  Ready   <none>  5m43s  v1.24.13-eks-0a21954                spot           c4.2xlarge

# Scale in - ttlSecondsAfterEmpty (30s): once the node has no pods other than DaemonSet pods, it is removed automatically after 30 seconds
$kubectl delete deployment inflate
$kubectl logs -f -n karpenter -l app.kubernetes.io/name=karpenter -c controller
...
2023-05-25T09:09:09.577Z INFO controller.deprovisioning deprovisioning via emptiness delete, terminating 1 machines ip-192-168-95-155.ap-northeast-2.compute.internal/c4.2xlarge/spot {"commit": "698f22f-dirty"}
2023-05-25T09:09:09.611Z INFO controller.termination cordoned node {"commit": "698f22f-dirty", "node": "ip-192-168-95-155.ap-northeast-2.compute.internal"}
2023-05-25T09:09:09.963Z INFO controller.termination deleted node {"commit": "698f22f-dirty", "node": "ip-192-168-95-155.ap-northeast-2.compute.internal"}

- Verify spot instance creation
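The scale-out log reports the new c4.2xlarge spot node's capacity as `"memory":"14208Mi"` and `"pods":"58"`. Both numbers appear to follow from the karpenter-global-settings values shown earlier. Memory is reduced by `aws.vmMemoryOverheadPercent` (0.075). With `aws.enableENILimitedPodDensity` set to true, the pod count follows the ENI-based max-pods formula. The sketch assumes c4.2xlarge has 15 GiB of memory, 4 ENIs, and 15 IPv4 addresses per ENI. `capacity_memory_mib` and `eni_max_pods` are hypothetical helpers, not Karpenter functions.

```bash
#!/usr/bin/env bash
# Reproduce two capacity numbers from the "launched instance" log line.
# Assumptions: c4.2xlarge = 15 GiB memory, 4 ENIs, 15 IPv4 addresses per ENI.

# capacity_memory_mib INSTANCE_MEMORY_MIB VM_MEMORY_OVERHEAD_PERCENT
# memory reported to the scheduler ~= instance memory * (1 - overhead)
capacity_memory_mib() {
  awk -v m="$1" -v o="$2" 'BEGIN { printf "%.0f\n", m * (1 - o) }'
}

# eni_max_pods MAX_ENIS IPV4_PER_ENI -> ENIs * (IPs per ENI - 1) + 2
eni_max_pods() {
  echo $(( $1 * ($2 - 1) + 2 ))
}

echo "memory: $(capacity_memory_mib $((15 * 1024)) 0.075)Mi"  # log shows 14208Mi
echo "pods:   $(eni_max_pods 4 15)"                            # log shows 58
```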

