Week 4

This page is part of the AWS EKS Workshop study group, where I record each week's hands-on results and thoughts.

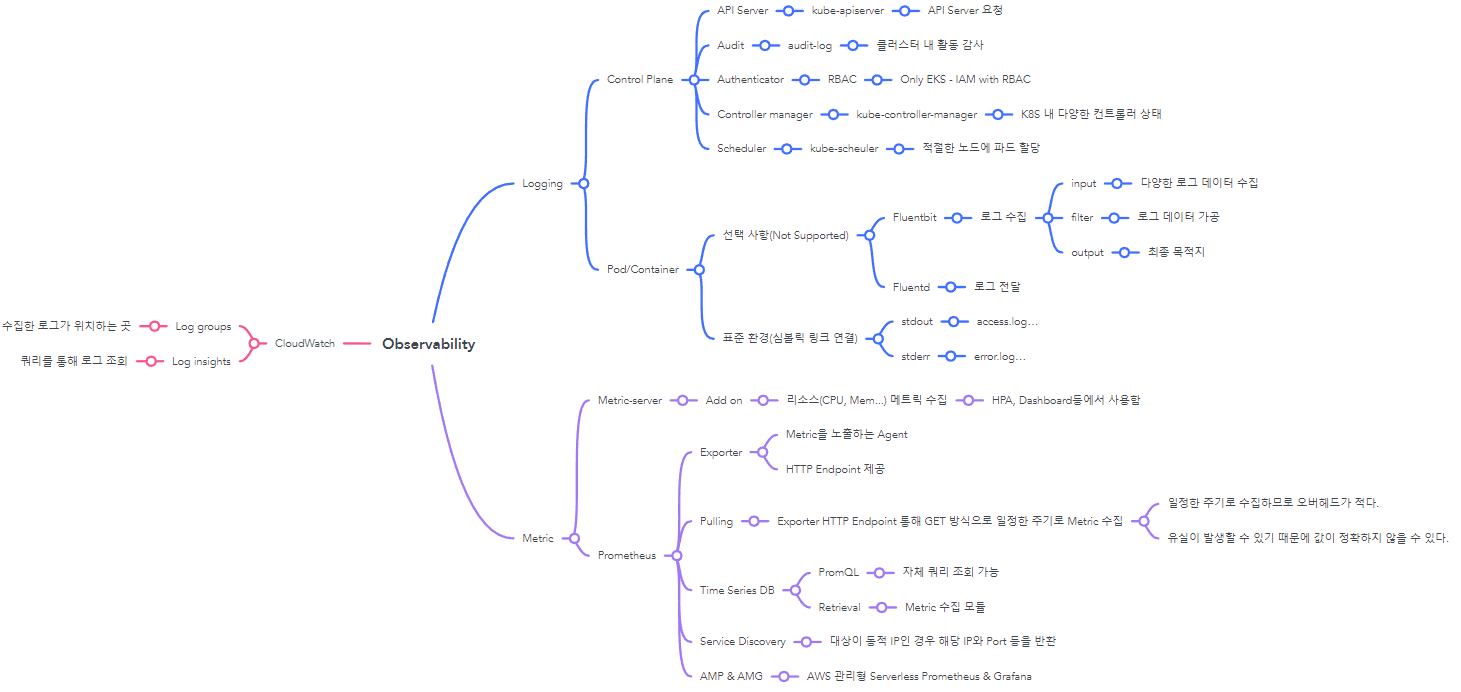

Keyword

- The Week 4 lecture content, summarized as a mind map, is as follows.

Pre-deployment Preparation

- Install the add-ons needed for the labs, such as the AWS Load Balancer Controller, ExternalDNS, and the CSI drivers.

    # ExternalDNS
    $ MyDomain=eljoe-test.link
    $ echo "export MyDomain=eljoe-test.link" >> /etc/profile
    $ MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)
    $ curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml
    $ MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -

    # kube-ops-view
    $ helm repo add geek-cookbook https://geek-cookbook.github.io/charts/
    $ helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set env.TZ="Asia/Seoul" --namespace kube-system
    $ kubectl patch svc -n kube-system kube-ops-view -p '{"spec":{"type":"LoadBalancer"}}'
    $ kubectl annotate service kube-ops-view -n kube-system "external-dns.alpha.kubernetes.io/hostname=kubeopsview.$MyDomain"
    $ echo -e "Kube Ops View URL = http://kubeopsview.$MyDomain:8080/#scale=1.5"
    Kube Ops View URL = http://kubeopsview.eljoe-test.link:8080/#scale=1.5

    # AWS Load Balancer Controller
    $ helm repo add eks https://aws.github.io/eks-charts
    $ helm repo update
    $ helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \
        --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

    # Verify the EBS CSI driver installation
    $ kubectl get pod -n kube-system -l 'app in (ebs-csi-controller,ebs-csi-node)'
    NAME                                  READY   STATUS    RESTARTS   AGE
    ebs-csi-controller-67658f895c-dgzcg   6/6     Running   0          14m
    ebs-csi-controller-67658f895c-v2mzb   6/6     Running   0          14m
    ebs-csi-node-7fvhw                    3/3     Running   0          14m
    ebs-csi-node-8d2th                    3/3     Running   0          14m
    ebs-csi-node-v5mj9                    3/3     Running   0          14m

    $ kubectl get csinodes
    NAME                                               DRIVERS   AGE
    ip-192-168-1-160.ap-northeast-2.compute.internal   1         18m
    ip-192-168-2-136.ap-northeast-2.compute.internal   1         18m
    ip-192-168-3-143.ap-northeast-2.compute.internal   1         18m

    # Create the gp3 StorageClass
    $ cat <<EOT > gp3-sc.yaml
    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: gp3
    allowVolumeExpansion: true
    provisioner: ebs.csi.aws.com
    volumeBindingMode: WaitForFirstConsumer
    parameters:
      type: gp3
      allowAutoIOPSPerGBIncrease: 'true'
      encrypted: 'true'
    EOT
    $ kubectl apply -f gp3-sc.yaml

    # EFS CSI driver
    $ helm repo add aws-efs-csi-driver https://kubernetes-sigs.github.io/aws-efs-csi-driver/
    $ helm repo update
    $ helm upgrade -i aws-efs-csi-driver aws-efs-csi-driver/aws-efs-csi-driver \
        --namespace kube-system \
        --set image.repository=602401143452.dkr.ecr.${AWS_DEFAULT_REGION}.amazonaws.com/eks/aws-efs-csi-driver \
        --set controller.serviceAccount.create=false \
        --set controller.serviceAccount.name=efs-csi-controller-sa

    # Create the EFS StorageClass (replace the example file system ID with $EfsFsId)
    $ curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/examples/kubernetes/dynamic_provisioning/specs/storageclass.yaml
    $ sed -i "s/fs-92107410/$EfsFsId/g" storageclass.yaml
    $ kubectl apply -f storageclass.yaml

    # Verify that the gp3 and EFS StorageClasses were created
    $ kubectl get sc
    NAME            PROVISIONER             RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
    efs-sc          efs.csi.aws.com         Delete          Immediate              false                  60s
    gp2 (default)   kubernetes.io/aws-ebs   Delete          WaitForFirstConsumer   false                  32m
    gp3             ebs.csi.aws.com         Delete          WaitForFirstConsumer   true                   3m45s

    # Check the installed container images
    $ kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c
          3 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/amazon-k8s-cni:v1.12.6-eksbuild.2
          5 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/aws-ebs-csi-driver:v1.18.0
          5 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/aws-efs-csi-driver:v1.5.5
          2 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/coredns:v1.9.3-eksbuild.3
          2 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/csi-attacher:v4.2.0-eks-1-26-7
          3 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/csi-node-driver-registrar:v2.7.0-eks-1-26-7
          2 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/csi-provisioner:v3.4.1-eks-1-26-7
          2 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/csi-resizer:v1.7.0-eks-1-26-7
          2 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/csi-snapshotter:v6.2.1-eks-1-26-7
          3 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/kube-proxy:v1.24.10-minimal-eksbuild.2
          5 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/livenessprobe:v2.9.0-eks-1-26-7
          1 hjacobs/kube-ops-view:20.4.0
          2 public.ecr.aws/eks/aws-load-balancer-controller:v2.5.1
          2 public.ecr.aws/eks-distro/kubernetes-csi/external-provisioner:v3.4.0-eks-1-27-latest
          5 public.ecr.aws/eks-distro/kubernetes-csi/livenessprobe:v2.9.0-eks-1-27-latest
          3 public.ecr.aws/eks-distro/kubernetes-csi/node-driver-registrar:v2.7.0-eks-1-27-latest
          1 registry.k8s.io/external-dns/external-dns:v0.13.4

    # Check the add-ons
    $ eksctl get addon --cluster $CLUSTER_NAME
    2023-05-19 15:39:17 [ℹ]  Kubernetes version "1.24" in use by cluster "myeks"
    2023-05-19 15:39:17 [ℹ]  getting all addons
    2023-05-19 15:39:18 [ℹ]  to see issues for an addon run `eksctl get addon --name <addon-name> --cluster <cluster-name>`
    NAME                 VERSION               STATUS   ISSUES   IAMROLE                                                                              UPDATE AVAILABLE   CONFIGURATION VALUES
    aws-ebs-csi-driver   v1.18.0-eksbuild.1    ACTIVE   0        arn:aws:iam::123456:role/eksctl-myeks-addon-aws-ebs-csi-driver-Role1-X5Z3P8JNOKP5
    coredns              v1.9.3-eksbuild.3     ACTIVE   0
    kube-proxy           v1.24.10-eksbuild.2   ACTIVE   0
    vpc-cni              v1.12.6-eksbuild.2    ACTIVE   0        arn:aws:iam::123456:role/eksctl-myeks-addon-vpc-cni-Role1-P1NABIU3D826

    # Check the deployments
    $ kubectl get deployment -n kube-system
    NAME                           READY   UP-TO-DATE   AVAILABLE   AGE
    aws-load-balancer-controller   2/2     2            2           9m10s
    coredns                        2/2     2            2           35m
    ebs-csi-controller             2/2     2            2           21m
    efs-csi-controller             2/2     2            2           5m
    external-dns                   1/1     1            1           14m
    kube-ops-view                  1/1     1            1           11m

    # Check IRSA (IAM roles for service accounts)
    $ eksctl get iamserviceaccount --cluster $CLUSTER_NAME
    NAMESPACE     NAME                           ROLE ARN
    kube-system   aws-load-balancer-controller   arn:aws:iam::123456:role/eksctl-myeks-addon-iamserviceaccount-kube-sy-Role1-JB8SZB3G42WT
    kube-system   efs-csi-controller-sa          arn:aws:iam::123456:role/eksctl-myeks-addon-iamserviceaccount-kube-sy-Role1-PLL9QBP3T1NE

- Before working on container/pod logging, set up HTTPS so that those pods can be exposed externally.

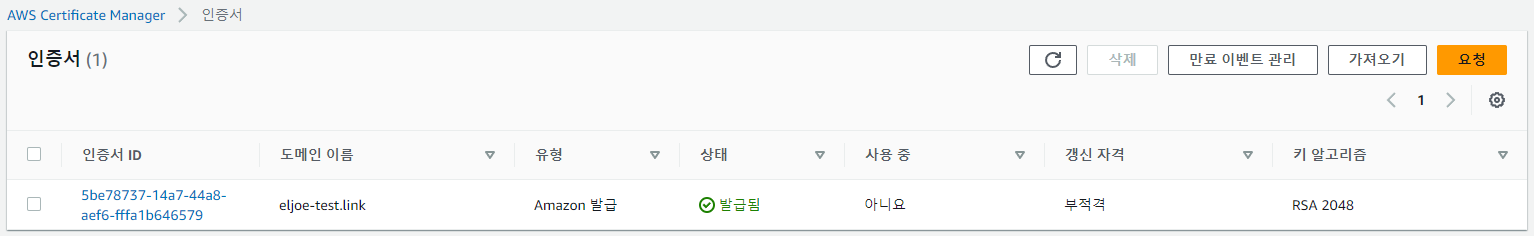

    # Request a public ACM certificate and store its ARN in a variable
    CERT_ARN=$(aws acm request-certificate \
      --domain-name $MyDomain \
      --validation-method 'DNS' \
      --key-algorithm 'RSA_2048' \
      |jq --raw-output '.CertificateArn')

    # Store the certificate's validation CNAME name in a variable
    CNAME_NAME=$(aws acm describe-certificate \
      --certificate-arn $CERT_ARN \
      --query 'Certificate.DomainValidationOptions[*].ResourceRecord.Name' \
      --output text)

    # Store the certificate's validation CNAME value in a variable
    CNAME_VALUE=$(aws acm describe-certificate \
      --certificate-arn $CERT_ARN \
      --query 'Certificate.DomainValidationOptions[*].ResourceRecord.Value' \
      --output text)

    # Check the certificate ARN and CNAME
    $ echo $CERT_ARN, $CNAME_NAME, $CNAME_VALUE

    # Create the CNAME record file to add to Route 53
    $ cat <<EOT > cname.json
    {
      "Comment": "create a acm's CNAME record",
      "Changes": [
        {
          "Action": "CREATE",
          "ResourceRecordSet": {
            "Name": "CnameName",
            "Type": "CNAME",
            "TTL": 300,
            "ResourceRecords": [
              {
                "Value": "CnameValue"
              }
            ]
          }
        }
      ]
    }
    EOT

    # Substitute the CNAME placeholders
    $ sed -i "s/CnameName/$CNAME_NAME/g" cname.json
    $ sed -i "s/CnameValue/$CNAME_VALUE/g" cname.json

    # Add the CNAME record
    $ aws route53 change-resource-record-sets --hosted-zone-id $MyDnzHostedZoneId --change-batch file://cname.json
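Substituting placeholders with `sed` works for these values, but `sed` treats `/` and `&` specially in the replacement text, so a value containing either would corrupt the file. A minimal alternative sketch, assuming a POSIX shell: the hypothetical helper `make_cname_change_batch` renders the change batch directly from the two variables and uses `UPSERT` instead of `CREATE`, so re-running it does not fail when the record already exists. Separately, note that a certificate requested only for `$MyDomain` does not cover hostnames such as `nginx.$MyDomain` used later; adding `--subject-alternative-names "*.$MyDomain"` to the request would. ACM validates an apex name and its wildcard with the same CNAME, so in that case the `[*]` queries above would likely return the record twice, and `DomainValidationOptions[0]` is the safer query.

```shell
# Hypothetical helper: print a Route 53 change batch that upserts one CNAME record.
#   $1 = record name  (e.g. "$CNAME_NAME")
#   $2 = record value (e.g. "$CNAME_VALUE")
make_cname_change_batch() {
  cat <<EOF
{
  "Comment": "ACM DNS validation record",
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "$1",
        "Type": "CNAME",
        "TTL": 300,
        "ResourceRecords": [ { "Value": "$2" } ]
      }
    }
  ]
}
EOF
}

# Usage (not executed here):
#   make_cname_change_batch "$CNAME_NAME" "$CNAME_VALUE" > cname.json
#   aws route53 change-resource-record-sets --hosted-zone-id "$MyDnzHostedZoneId" --change-batch file://cname.json
```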

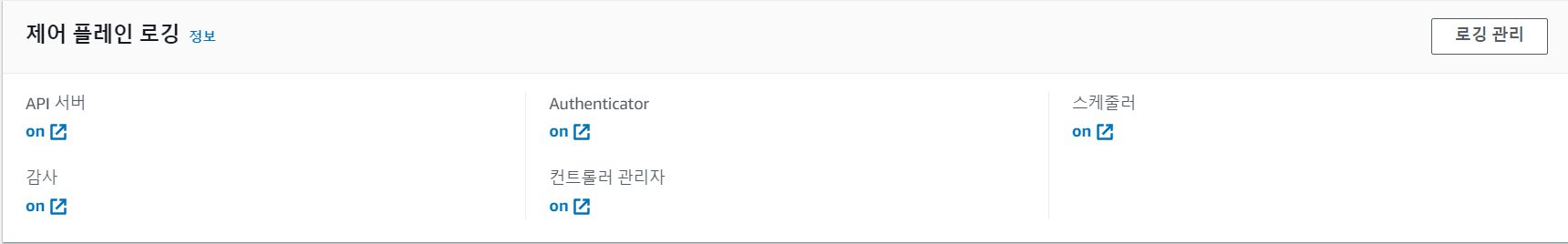

Control Plane Logging

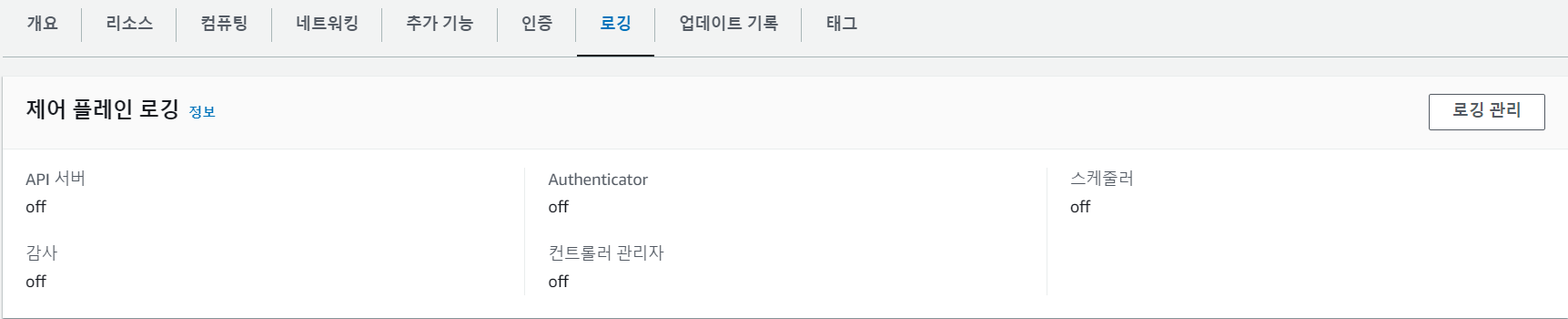

- A feature that delivers event logs from the EKS control plane directly to CloudWatch Logs in your account. It is disabled by default.

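- The control plane log types that can be collected are listed below; each type is emitted by a Kubernetes control plane component. The parentheses show the log type name and its CloudWatch log stream prefix. - link

- API server (api, kube-apiserver-): kube-apiserver request logs

- Audit (audit, kube-apiserver-audit-): activity logs of administrators, users, and components in the cluster

- Authenticator (authenticator, authenticator-): an EKS-specific log covering authentication that maps IAM identities to Kubernetes RBAC

- Controller manager (controllerManager, kube-controller-manager-): state logs of the various Kubernetes controllers

- Scheduler (scheduler, kube-scheduler-): logs of the scheduler, which assigns pods to suitable nodes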

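The second value in each pair above is the log stream name prefix, which is what `aws logs tail --log-stream-name-prefix` expects when you want to follow a single component. A small lookup sketch; `eks_log_stream_prefix` is a hypothetical helper, and the mapping is copied from the list above. Because prefixes match literally, `kube-apiserver-` also matches the `kube-apiserver-audit-` streams.

```shell
# Hypothetical helper: map an EKS control plane log type (as used in
# `aws eks update-cluster-config --logging`) to its CloudWatch log stream prefix.
eks_log_stream_prefix() {
  case "$1" in
    api)               echo "kube-apiserver-" ;;
    audit)             echo "kube-apiserver-audit-" ;;
    authenticator)     echo "authenticator-" ;;
    controllerManager) echo "kube-controller-manager-" ;;
    scheduler)         echo "kube-scheduler-" ;;
    *)                 echo "unknown log type: $1" >&2; return 1 ;;
  esac
}

# Usage (not executed here):
#   aws logs tail /aws/eks/$CLUSTER_NAME/cluster \
#     --log-stream-name-prefix "$(eks_log_stream_prefix scheduler)" --follow
```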

- Enable all of the control plane log types above and inspect the results.

    # Enable every control plane log type
    $ aws eks update-cluster-config --region $AWS_DEFAULT_REGION --name $CLUSTER_NAME \
        --logging '{"clusterLogging":[{"types":["api","audit","authenticator","controllerManager","scheduler"],"enabled":true}]}'
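Control plane logs are charged as ordinary CloudWatch Logs ingestion and storage, so in practice you may want only a subset of the types enabled. A sketch, assuming a POSIX shell; `eks_logging_config` is a hypothetical helper that builds the same `--logging` JSON as above for whichever types you pass.

```shell
# Hypothetical helper: build the JSON value for `aws eks update-cluster-config --logging`.
#   $1     = true | false
#   $2 ... = log types (api, audit, authenticator, controllerManager, scheduler)
eks_logging_config() {
  enabled=$1
  shift
  types=""
  for t in "$@"; do
    # Quote each type and join them with commas.
    types="${types:+$types,}\"$t\""
  done
  printf '{"clusterLogging":[{"types":[%s],"enabled":%s}]}\n' "$types" "$enabled"
}

# Usage (not executed here): enable only the audit and authenticator logs
#   aws eks update-cluster-config --region $AWS_DEFAULT_REGION --name $CLUSTER_NAME \
#     --logging "$(eks_logging_config true audit authenticator)"
```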

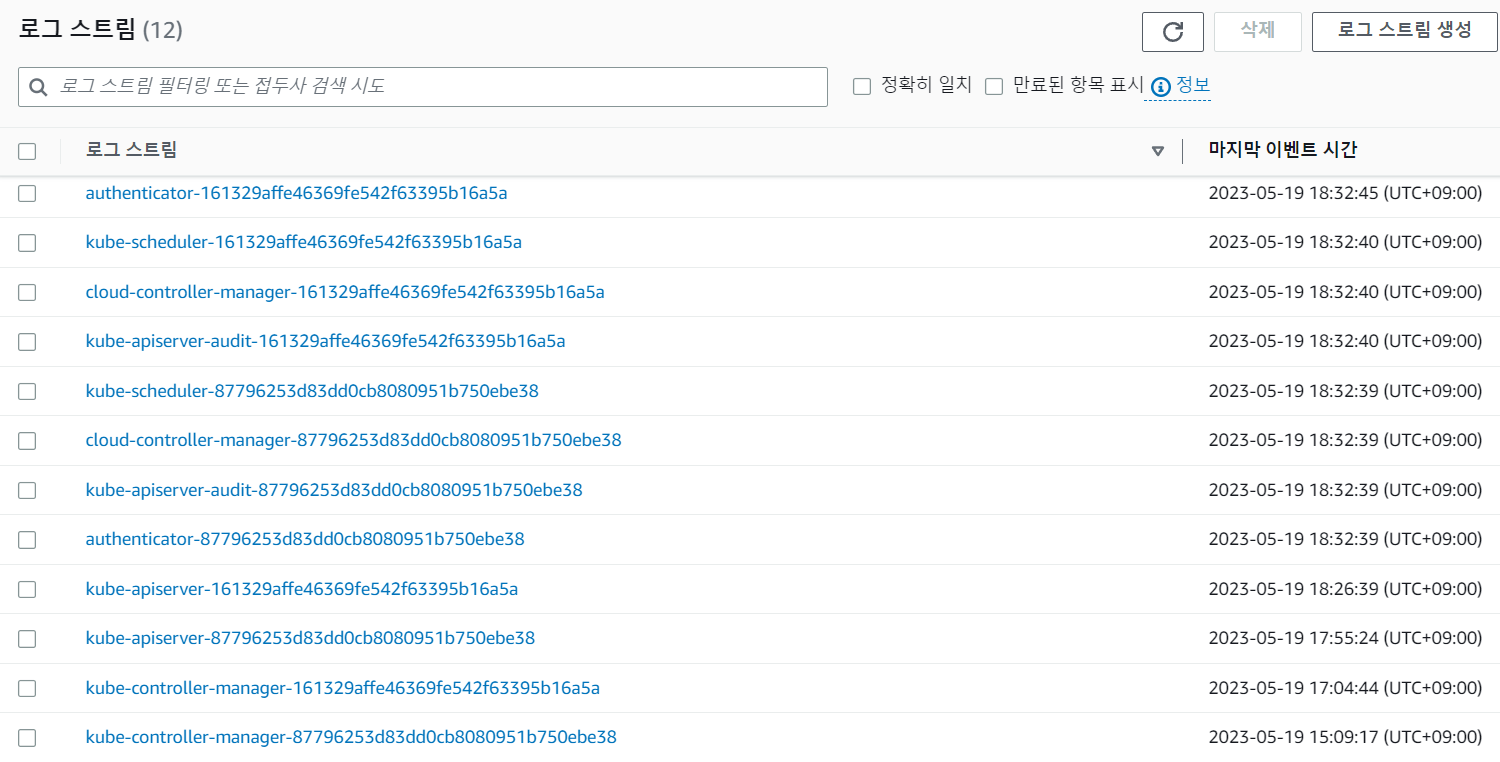

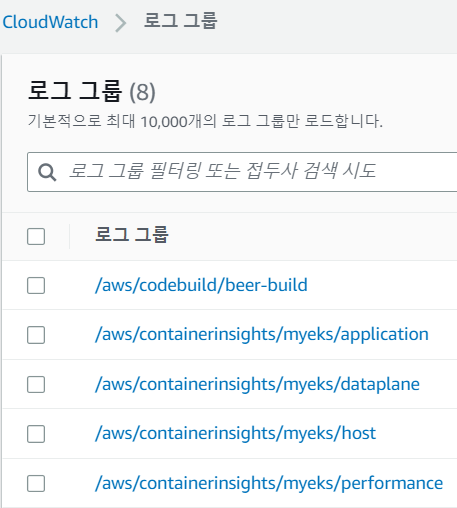

    # Find the EKS cluster log group among the CloudWatch log groups
    $ aws logs describe-log-groups | jq
    {
      "logGroups": [
        {
          "logGroupName": "/aws/eks/myeks/cluster",
          "creationTime": 1684488759837,
          "metricFilterCount": 0,
          "arn": "arn:aws:logs:ap-northeast-2:131421611146:log-group:/aws/eks/myeks/cluster:*",
          "storedBytes": 0
        },
        ...
    }

    # Stream new log events as they arrive
    $ aws logs tail /aws/eks/$CLUSTER_NAME/cluster --follow

    # Stream a specific log stream
    # Deliberately scale CoreDNS down (2 -> 1)
    $ kubectl scale deployment -n kube-system coredns --replicas=1
    $ aws logs tail /aws/eks/$CLUSTER_NAME/cluster --log-stream-name-prefix kube-controller-manager --follow
    "Event occurred" object="kube-system/coredns" fieldPath="" kind="Deployment" apiVersion="apps/v1" type="Normal" reason="ScalingReplicaSet" message="Scaled down replica set coredns-6777fcd775 to 1"
    "Too many replicas" replicaSet="kube-system/coredns-6777fcd775" need=1 deleting=1

    # Restore the CoreDNS replica count
    $ kubectl scale deployment -n kube-system coredns --replicas=2
    $ aws logs tail /aws/eks/$CLUSTER_NAME/cluster --log-stream-name-prefix kube-controller-manager --follow
    "Too few replicas" replicaSet="kube-system/coredns-6777fcd775" need=2 creating=1
    "Event occurred" object="kube-system/coredns" fieldPath="" kind="Deployment" apiVersion="apps/v1" type="Normal" reason="ScalingReplicaSet" message="Scaled up replica set coredns-6777fcd775 to 2"

- Logs collected in CloudWatch can be queried with Logs Insights to look up specific content.
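`aws logs start-query` only schedules a Logs Insights query; `get-query-results` reports a `status` (`Scheduled`, `Running`, `Complete`, `Failed`, ...) and can return empty results if it is called too early, which can happen with the one-liners below. A polling sketch, assuming a POSIX shell; `wait_for_query` is a hypothetical helper that takes the status-fetching command as an argument, so the loop can be exercised with a stub instead of AWS.

```shell
# Hypothetical helper: wait until a Logs Insights query is no longer
# Scheduled/Running, then print its final status.
#   $1 = name of a command/function that prints the query's current status
#   $2 = maximum number of attempts
#   $3 = seconds to sleep between attempts
wait_for_query() {
  fetch=$1
  tries=$2
  delay=$3
  i=0
  while [ "$i" -lt "$tries" ]; do
    status=$($fetch)
    case "$status" in
      Scheduled|Running) ;;            # not finished yet, keep polling
      *) echo "$status"; return 0 ;;   # Complete, Failed, Cancelled, Timeout, ...
    esac
    i=$((i + 1))
    sleep "$delay"
  done
  echo "query still running after $tries attempts" >&2
  return 1
}

# Example wiring with the AWS CLI (not executed here):
#   qid=$(aws logs start-query --log-group-name "/aws/eks/$CLUSTER_NAME/cluster" \
#           --start-time "$(date -d '-1 hours' +%s)" --end-time "$(date +%s)" \
#           --query-string 'fields @timestamp, @message | sort @timestamp desc' \
#         | jq --raw-output '.queryId')
#   query_status() { aws logs get-query-results --query-id "$qid" --query status --output text; }
#   wait_for_query query_status 30 2 && aws logs get-query-results --query-id "$qid"
```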

    # Query kube-scheduler timestamps and messages
    # (the query runs asynchronously; if get-query-results still reports "Running",
    #  the results are not ready yet and get-query-results must be called again with the same query ID)
    $ aws logs get-query-results --query-id $(aws logs start-query \
        --log-group-name '/aws/eks/myeks/cluster' \
        --start-time `date -d "-1 hours" +%s` \
        --end-time `date +%s` \
        --query-string 'fields @timestamp, @message | filter @logStream ~= "kube-scheduler" | sort @timestamp desc' \
        | jq --raw-output '.queryId')

    # Query authenticator timestamps and messages
    $ aws logs get-query-results --query-id $(aws logs start-query \
        --log-group-name '/aws/eks/myeks/cluster' \
        --start-time `date -d "-1 hours" +%s` \
        --end-time `date +%s` \
        --query-string 'fields @timestamp, @message | filter @logStream ~= "authenticator" | sort @timestamp desc' \
        | jq --raw-output '.queryId')

    # Query kube-controller-manager timestamps and messages
    $ aws logs get-query-results --query-id $(aws logs start-query \
        --log-group-name '/aws/eks/myeks/cluster' \
        --start-time `date -d "-1 hours" +%s` \
        --end-time `date +%s` \
        --query-string 'fields @timestamp, @message | filter @logStream ~= "kube-controller-manager" | sort @timestamp desc' \
        | jq --raw-output '.queryId')

- Control plane logging can be disabled as follows.

    # Disable logging
    $ eksctl utils update-cluster-logging --cluster $CLUSTER_NAME --region $AWS_DEFAULT_REGION --disable-types all --approve

    # Delete the EKS cluster log group
    $ aws logs delete-log-group --log-group-name /aws/eks/$CLUSTER_NAME/cluster

Container/Pod Logging

- Containers in a pod are recommended to send their logs to the standard UNIX/Linux streams STDOUT (standard output) and STDERR (standard error).

- Non-interactive processes such as web servers and databases write their own log files instead of using STDOUT/STDERR. In that case, either link those files to the standard streams with symbolic links in the Dockerfile, or change the process's configuration directly.

- nginx: symlinks access.log and error.log to stdout and stderr, respectively. - link

    # forward request and error logs to docker log collector
    RUN ln -sf /dev/stdout /var/log/nginx/access.log \
        && ln -sf /dev/stderr /var/log/nginx/error.log

- httpd: changes its configuration to write directly to stdout or stderr. - link

    RUN ... && sed -ri \
        -e 's!^(\s*CustomLog)\s+\S+!\1 /proc/self/fd/1!g' \
        -e 's!^(\s*ErrorLog)\s+\S+!\1 /proc/self/fd/2!g' \
        "$HTTPD_PREFIX/conf/httpd.conf" \
        ...

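To see what the httpd `sed` expression does, it can be run against a small sample config. A sketch, assuming GNU `sed` (the `\s`/`\S` classes in `-r` mode are a GNU extension); the function name `rewrite_httpd_logs` and the two sample lines are made up for illustration.

```shell
# Apply the same two substitutions as the httpd Dockerfile to the text on stdin:
# CustomLog -> /proc/self/fd/1 (stdout), ErrorLog -> /proc/self/fd/2 (stderr).
rewrite_httpd_logs() {
  sed -r \
    -e 's!^(\s*CustomLog)\s+\S+!\1 /proc/self/fd/1!g' \
    -e 's!^(\s*ErrorLog)\s+\S+!\1 /proc/self/fd/2!g'
}

# Illustrative httpd.conf excerpt
printf '%s\n' \
  'ErrorLog "logs/error_log"' \
  '    CustomLog "logs/access_log" common' \
  | rewrite_httpd_logs
# prints:
# ErrorLog /proc/self/fd/2
#     CustomLog /proc/self/fd/1 common
```

Only the file path token after the directive is replaced, so trailing arguments such as the `common` log format name are kept.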

- Deploy an nginx pod and check its application logs directly.

    # Add the Bitnami repository
    $ helm repo add bitnami https://charts.bitnami.com/bitnami

    # Write the Ingress parameters
    $ cat <<EOT > nginx-values.yaml
    service:
      type: NodePort
    ingress:
      enabled: true
      ingressClassName: alb
      hostname: nginx.$MyDomain
      path: /*
      annotations:
        alb.ingress.kubernetes.io/scheme: internet-facing
        alb.ingress.kubernetes.io/target-type: ip
        alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
        alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
        alb.ingress.kubernetes.io/success-codes: 200-399
        alb.ingress.kubernetes.io/load-balancer-name: $CLUSTER_NAME-ingress-alb
        alb.ingress.kubernetes.io/group.name: study
        alb.ingress.kubernetes.io/ssl-redirect: '443'
    EOT

    # Deploy nginx
    $ helm install nginx bitnami/nginx --version 14.1.0 -f nginx-values.yaml

    # Check the resources
    $ kubectl get ingress,deploy,svc,ep,targetgroupbindings
    NAME                              CLASS   HOSTS                   ADDRESS                                                         PORTS   AGE
    ingress.networking.k8s.io/nginx   alb     nginx.eljoe-test.link   myeks-ingress-alb-1182578444.ap-northeast-2.elb.amazonaws.com   80      5m53s

    NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/nginx   1/1     1            1           5m53s

    NAME                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
    service/kubernetes   ClusterIP   10.100.0.1       <none>        443/TCP        4h49m
    service/nginx        NodePort    10.100.116.111   <none>        80:31888/TCP   5m53s

    NAME                   ENDPOINTS                             AGE
    endpoints/kubernetes   192.168.2.126:443,192.168.3.181:443   4h49m
    endpoints/nginx        192.168.1.81:8080                     5m53s

    NAME                                                            SERVICE-NAME   SERVICE-PORT   TARGET-TYPE   AGE
    targetgroupbinding.elbv2.k8s.aws/k8s-default-nginx-cc8ecb59da   nginx          http           ip            5m49s

- kubectl logs shows the application logs without having to access the pod directly.

    # Follow the logs in real time
    $ kubectl logs deploy/nginx -f
    192.168.2.220 - - [19/May/2023:10:56:38 +0000] "GET / HTTP/1.1" 200 409 "-" "ELB-HealthChecker/2.0" "-"
    192.168.1.85 - - [19/May/2023:10:56:39 +0000] "GET / HTTP/1.1" 200 409 "-" "ELB-HealthChecker/2.0" "-"
    192.168.3.99 - - [19/May/2023:10:56:40 +0000] "GET / HTTP/1.1" 200 409 "-" "ELB-HealthChecker/2.0" "-"

    # The nginx container's application log files are symlinked to stdout and stderr
    $ kubectl exec -it deploy/nginx -- ls -l /opt/bitnami/nginx/logs/
    total 0
    lrwxrwxrwx 1 root root 11 Apr 24 10:13 access.log -> /dev/stdout
    lrwxrwxrwx 1 root root 11 Apr 24 10:13 error.log -> /dev/stderr

- Once a pod is deleted, its logs can no longer be viewed, so a mechanism that collects and stores pod logs is needed.

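The access log lines printed by `kubectl logs` above are dominated by ALB health checks (`ELB-HealthChecker/2.0`). A quick sketch for summarizing them by HTTP status while ignoring health checks, assuming the nginx log format shown above, where the status code is the 9th whitespace-separated field; `summarize_access_log` is a hypothetical helper.

```shell
# Count requests per HTTP status code from nginx access log lines on stdin,
# skipping ALB health-check requests.
summarize_access_log() {
  grep -v 'ELB-HealthChecker' \
    | awk '{ count[$9]++ } END { for (s in count) print s, count[s] }' \
    | sort
}

# Usage (not executed here):
#   kubectl logs deploy/nginx --since=1h | summarize_access_log
```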

CloudWatch Container Insights & Fluent Bit

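- A fully managed observability service for monitoring, troubleshooting, and alarming on containerized applications and microservices. - link

- On EKS, the user chooses and installs the components that store logs from the data plane nodes and from the pods running on them.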

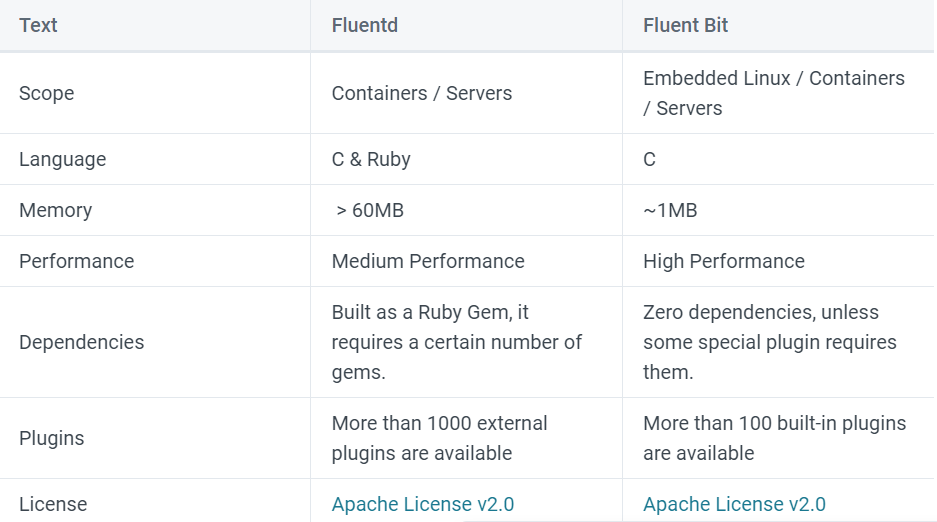

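- AWS provides examples with both Fluentd and Fluent Bit, and recommends Fluent Bit over Fluentd for the following reasons. - link

- Fluent Bit has a smaller resource footprint and is more resource-efficient in memory and CPU usage than Fluentd. For a more detailed comparison, see the Fluent Bit and Fluentd performance comparison section.

- The Fluent Bit image is developed and maintained by AWS. This lets AWS adopt new Fluent Bit image features and respond to issues much more quickly.

- The official website also compares the two as follows.

- In short, Fluent Bit is a lightweight log collector that uses less memory than Fluentd.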

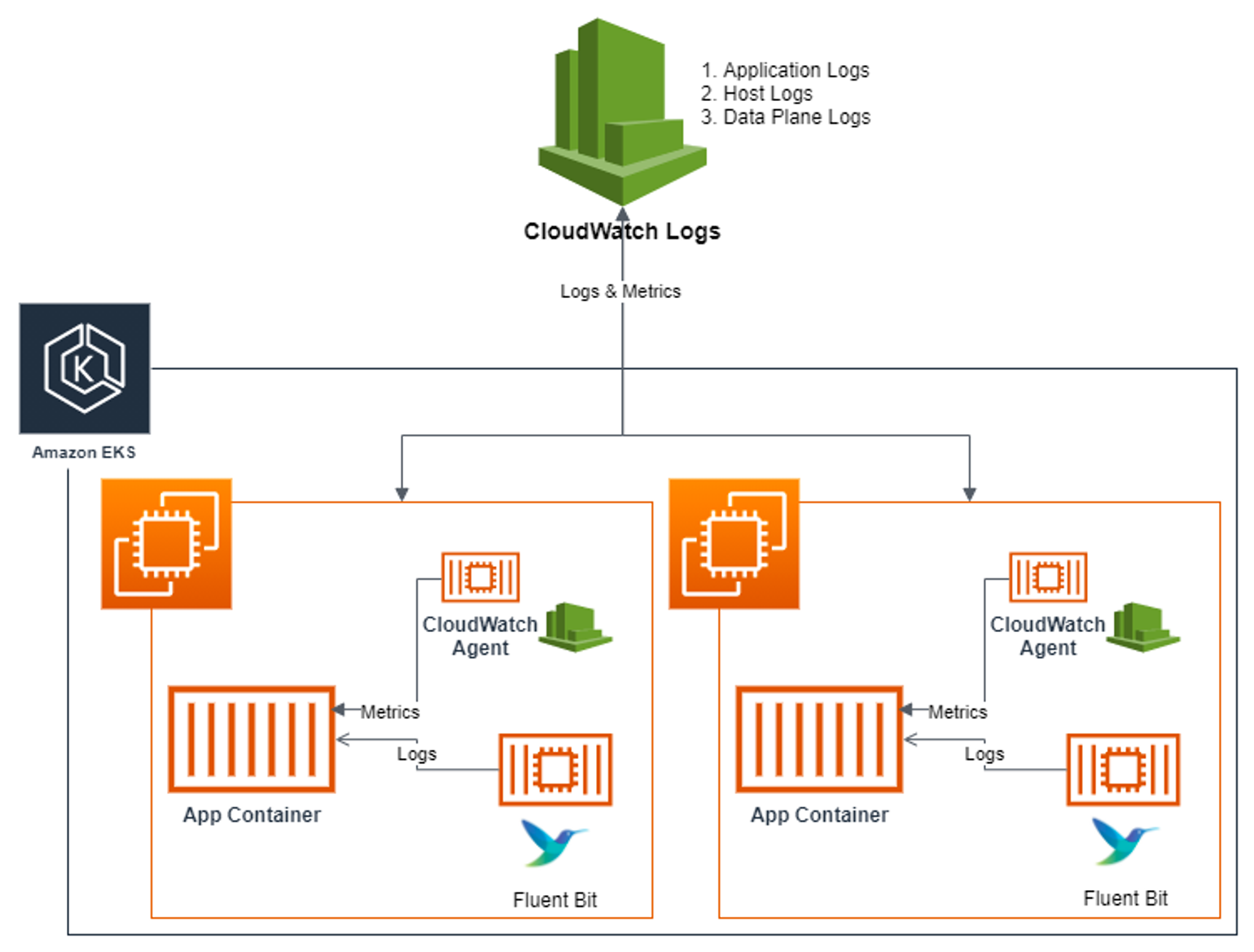

- Deploy Fluent Bit and the CloudWatch agent to collect metrics and logs - link

- Container Insights classifies pod logs into the three categories below, collects them with Fluent Bit, and sends them to CloudWatch Logs.

- Application logs: all application logs from every pod

- Source: /var/log/containers/*.log

- Destination: /aws/containerinsights/<Cluster_Name>/application

- Host logs: system logs of the node (host/instance)

- Source: /var/log/messages, /var/log/dmesg, /var/log/secure

- Destination: /aws/containerinsights/<Cluster_Name>/host

- Data plane logs: logs of the data plane components

- Source: /var/log/journal for kubelet.service, kubeproxy.service, and docker.service

- Destination: /aws/containerinsights/<Cluster_Name>/dataplane

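The classification above can be written as a small lookup, which helps when deciding which log group to open in Logs Insights. A sketch that encodes only the three categories listed (other groups such as `/performance` are out of scope); `containerinsights_log_group` is a hypothetical helper.

```shell
# Map a node-side log source to the Container Insights log group it is shipped to.
#   $1 = cluster name
#   $2 = source: a file path, or a systemd unit read from /var/log/journal
containerinsights_log_group() {
  cluster=$1
  case "$2" in
    /var/log/containers/*.log)
      echo "/aws/containerinsights/$cluster/application" ;;
    /var/log/messages|/var/log/dmesg|/var/log/secure)
      echo "/aws/containerinsights/$cluster/host" ;;
    kubelet.service|kubeproxy.service|docker.service)
      echo "/aws/containerinsights/$cluster/dataplane" ;;
    *)
      echo "not in the list above: $2" >&2; return 1 ;;
  esac
}
```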

- Deploy Fluent Bit and CloudWatch agent pods to the nodes as DaemonSets to collect pod logs and metrics.

- Check the logs on a node beforehand

    # Application
    $ ssh ec2-user@$N1 sudo tree /var/log/containers
    /var/log/containers
    ├── aws-load-balancer-controller_kube-system_aws-load-balancer-controller.log -> /var/log/pods/kube-system_aws-load-balancer-controller
    ...

    # Host
    $ ssh ec2-user@$N1 sudo tree /var/log/ -L 1
    /var/log/
    ...
    ├── dmesg
    ...
    ├── secure
    ...
    ├── messages
    ...

    # Data plane
    $ ssh ec2-user@$N1 sudo tree /var/log/journal -L 1
    /var/log/journal
    ├── ...

- Install CloudWatch Container Insights

    # Fluent Bit variables
    FluentBitHttpServer='On' \
    FluentBitHttpPort='2020' \
    FluentBitReadFromHead='Off' \
    FluentBitReadFromTail='On'

    # Deploy the Fluent Bit and CloudWatch agent DaemonSets
    $ curl -s https://raw.githubusercontent.com/aws-samples/amazon-cloudwatch-container-insights/latest/k8s-deployment-manifest-templates/deployment-mode/daemonset/container-insights-monitoring/quickstart/cwagent-fluent-bit-quickstart.yaml \
        | sed 's/{{cluster_name}}/'${CLUSTER_NAME}'/;s/{{region_name}}/'${AWS_DEFAULT_REGION}'/;s/{{http_server_toggle}}/"'${FluentBitHttpServer}'"/;s/{{http_server_port}}/"'${FluentBitHttpPort}'"/;s/{{read_from_head}}/"'${FluentBitReadFromHead}'"/;s/{{read_from_tail}}/"'${FluentBitReadFromTail}'"/' \
        | kubectl apply -f -

    # Verify the installation
    $ kubectl get-all -n amazon-cloudwatch
    NAME                                                 NAMESPACE           AGE
    configmap/cwagent-clusterleader                      amazon-cloudwatch   93s
    configmap/cwagentconfig                              amazon-cloudwatch   102s
    configmap/fluent-bit-cluster-info                    amazon-cloudwatch   102s
    configmap/fluent-bit-config                          amazon-cloudwatch   101s
    configmap/kube-root-ca.crt                           amazon-cloudwatch   102s
    pod/cloudwatch-agent-h99lr                           amazon-cloudwatch   102s
    pod/cloudwatch-agent-qskmw                           amazon-cloudwatch   101s
    pod/cloudwatch-agent-rvj2b                           amazon-cloudwatch   101s
    pod/fluent-bit-ch2zm                                 amazon-cloudwatch   101s
    pod/fluent-bit-jg7zm                                 amazon-cloudwatch   101s
    pod/fluent-bit-pd2w4                                 amazon-cloudwatch   101s
    serviceaccount/cloudwatch-agent                      amazon-cloudwatch   102s
    serviceaccount/default                               amazon-cloudwatch   102s
    serviceaccount/fluent-bit                            amazon-cloudwatch   102s
    controllerrevision.apps/cloudwatch-agent-bfcddf455   amazon-cloudwatch   102s
    controllerrevision.apps/fluent-bit-98d767484         amazon-cloudwatch   101s
    daemonset.apps/cloudwatch-agent                      amazon-cloudwatch   102s
    daemonset.apps/fluent-bit                            amazon-cloudwatch   101s

    # Check the cluster roles and bindings
    $ kubectl describe clusterrole cloudwatch-agent-role fluent-bit-role
    $ kubectl describe clusterrolebindings cloudwatch-agent-role-binding fluent-bit-role-binding

    # Check the CloudWatch agent ConfigMap
    $ kubectl describe cm cwagentconfig -n amazon-cloudwatch
    Name:         cwagentconfig
    Namespace:    amazon-cloudwatch
    Labels:       <none>
    Annotations:  <none>

    Data
    ====
    cwagentconfig.json:
    ----
    {
      "agent": {
        "region": "ap-northeast-2"
      },
      "logs": {
        "metrics_collected": {
          "kubernetes": {
            "cluster_name": "myeks",
            "metrics_collection_interval": 60
          }
        },
        "force_flush_interval": 5
      }
    }

    BinaryData
    ====

    Events:  <none>

    # The CloudWatch agent collects metrics through the host paths mounted as volumes (Volumes > Path)
    $ kubectl describe -n amazon-cloudwatch ds cloudwatch-agent
    ...
      Volumes:
       cwagentconfig:
        Type:      ConfigMap (a volume populated by a ConfigMap)
        Name:      cwagentconfig
        Optional:  false
       rootfs:
        Type:          HostPath (bare host directory volume)
        Path:          /
        HostPathType:
       dockersock:
        Type:          HostPath (bare host directory volume)
        Path:          /var/run/docker.sock
        HostPathType:
       varlibdocker:
        Type:          HostPath (bare host directory volume)
        Path:          /var/lib/docker
        HostPathType:
       containerdsock:
        Type:          HostPath (bare host directory volume)
        Path:          /run/containerd/containerd.sock
        HostPathType:
       sys:
        Type:          HostPath (bare host directory volume)
        Path:          /sys
        HostPathType:
       devdisk:
        Type:          HostPath (bare host directory volume)
        Path:          /dev/disk/
        HostPathType:
    ...

    # fluent-bit-cluster-info
    $ kubectl get cm -n amazon-cloudwatch fluent-bit-cluster-info -o yaml | yh
    apiVersion: v1
    data:
      cluster.name: myeks
      http.port: "2020"
      http.server: "On"
      logs.region: ap-northeast-2
      read.head: "Off"
      read.tail: "On"
    ...

    # Fluent Bit plugins
    # INPUT  : collects log data from various sources
    # FILTER : processes the log data
    # OUTPUT : the final destination the logs are delivered to
    $ kubectl describe cm fluent-bit-config -n amazon-cloudwatch
    application-log.conf:
    ----
    [INPUT]
        Name                tail
        Tag                 application.*
        Path                /var/log/containers/cloudwatch-agent*
        multiline.parser    docker, cri
        DB                  /var/fluent-bit/state/flb_cwagent.db
        Mem_Buf_Limit       5MB
        Skip_Long_Lines     On
        Refresh_Interval    10
        Read_from_Head      ${READ_FROM_HEAD}

    [FILTER]
        Name                kubernetes
        Match               application.*
        Kube_URL            https://kubernetes.default.svc:443
        Kube_Tag_Prefix     application.var.log.containers.
        Merge_Log           On
        Merge_Log_Key       log_processed
        K8S-Logging.Parser  On
        K8S-Logging.Exclude Off
        Labels              Off
        Annotations         Off
        Use_Kubelet         On
        Kubelet_Port        10250
        Buffer_Size         0

    [OUTPUT]
        Name                cloudwatch_logs
        Match               application.*
        region              ${AWS_REGION}
        log_group_name      /aws/containerinsights/${CLUSTER_NAME}/application
        log_stream_prefix   ${HOST_NAME}-
        auto_create_group   true
        extra_user_agent    container-insights

- CloudWatch > Log groups > check the log groups that Fluent Bit collected and sent
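The quickstart manifest installed above is a template, and the `sed` script fills its `{{...}}` placeholders from shell variables. The four Fluent Bit values are wrapped in double quotes, most likely so that `On`, `Off`, and `2020` stay strings in the ConfigMap data (unquoted, YAML would read them as a boolean and a number), which matches the `fluent-bit-cluster-info` output. The sketch below applies the same substitutions to a made-up three-line template so the effect is visible without `kubectl`; `render_quickstart` and the template text are hypothetical, and the substitutions are split into separate `-e` expressions for readability.

```shell
# Apply the install step's placeholder substitutions to the text on stdin.
render_quickstart() {
  sed -e "s/{{cluster_name}}/${CLUSTER_NAME}/" \
      -e "s/{{region_name}}/${AWS_DEFAULT_REGION}/" \
      -e "s/{{http_server_toggle}}/\"${FluentBitHttpServer}\"/" \
      -e "s/{{http_server_port}}/\"${FluentBitHttpPort}\"/" \
      -e "s/{{read_from_head}}/\"${FluentBitReadFromHead}\"/" \
      -e "s/{{read_from_tail}}/\"${FluentBitReadFromTail}\"/"
}

CLUSTER_NAME=myeks
AWS_DEFAULT_REGION=ap-northeast-2
FluentBitHttpServer='On'
FluentBitHttpPort='2020'
FluentBitReadFromHead='Off'
FluentBitReadFromTail='On'

# Illustrative template excerpt
printf '%s\n' \
  '  cluster.name: {{cluster_name}}' \
  '  http.server: {{http_server_toggle}}' \
  '  http.port: {{http_server_port}}' \
  | render_quickstart
# prints:
#   cluster.name: myeks
#   http.server: "On"
#   http.port: "2020"
```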

- CloudWatch > Logs Insights > run queries

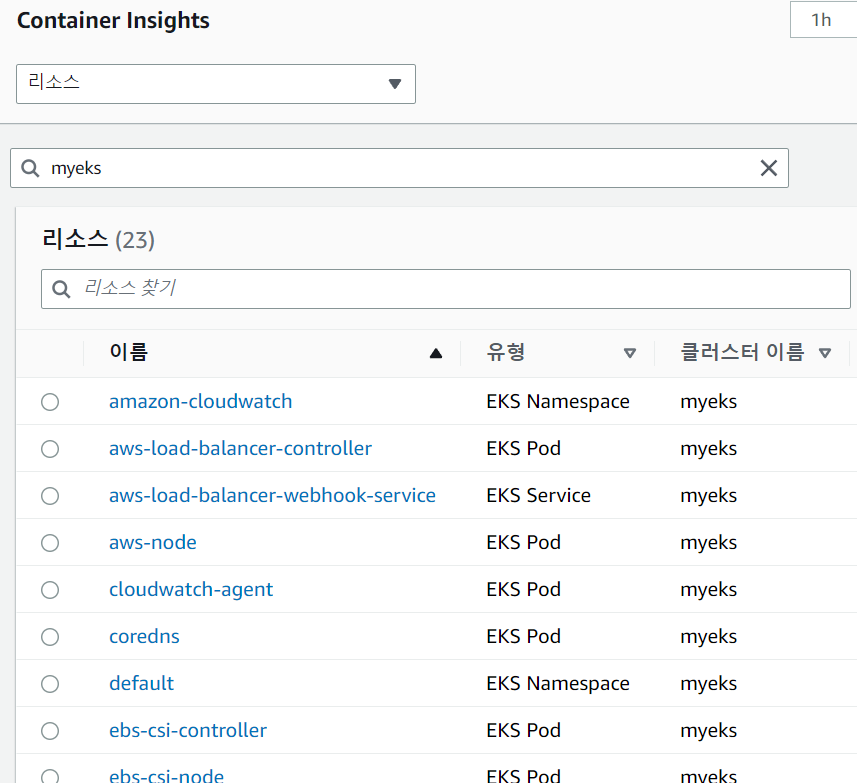

    # Application log errors by container name
    # Log group: /aws/containerinsights/<CLUSTER_NAME>/application
    stats count() as error_count by kubernetes.container_name
    | filter stream="stderr"
    | sort error_count desc

    # All Kubelet errors/warning logs for a given EKS worker node
    # Log group: /aws/containerinsights/<CLUSTER_NAME>/dataplane
    fields @timestamp, @message, ec2_instance_id
    | filter message =~ /.*(E|W)[0-9]{4}.*/ and ec2_instance_id="<YOUR INSTANCE ID>"
    | sort @timestamp desc

    # Kubelet errors/warning count per EKS worker node in the cluster
    # Log group: /aws/containerinsights/<CLUSTER_NAME>/dataplane
    fields @timestamp, @message, ec2_instance_id
    | filter message =~ /.*(E|W)[0-9]{4}.*/
    | stats count(*) as error_count by ec2_instance_id

    # Performance log group
    # /aws/containerinsights/<CLUSTER_NAME>/performance

    # Average CPU utilization per node
    stats avg(node_cpu_utilization) as avg_node_cpu_utilization by NodeName
    | sort avg_node_cpu_utilization DESC

    # Container restart count per pod
    stats avg(number_of_container_restarts) as avg_number_of_container_restarts by PodName
    | sort avg_number_of_container_restarts DESC

    # Requested vs. running containers per pod
    fields @timestamp, @message
    | sort @timestamp desc
    | filter Type="Pod"
    | stats min(pod_number_of_containers) as requested, min(pod_number_of_running_containers) as running, ceil(avg(pod_number_of_containers-pod_number_of_running_containers)) as pods_missing by kubernetes.pod_name
    | sort pods_missing desc

    # Cluster node failure count
    stats avg(cluster_failed_node_count) as CountOfNodeFailures
    | filter Type="Cluster"
    | sort @timestamp desc

    # Median CPU usage per container
    stats pct(container_cpu_usage_total, 50) as CPUPercMedian by kubernetes.container_name
    | filter Type="Container"
    | sort CPUPercMedian desc

- CloudWatch > Container Insights > select the cluster > check the list of metrics

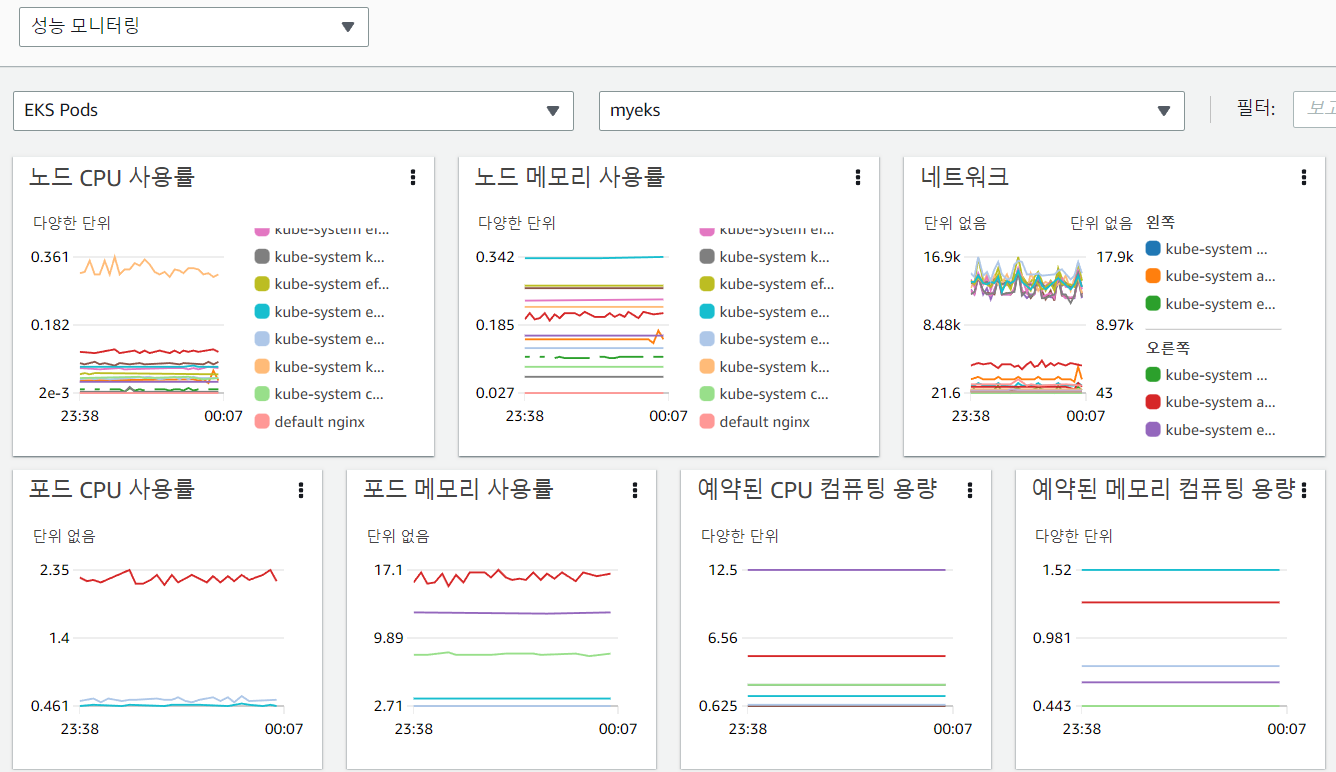

- Check the performance monitoring dashboard


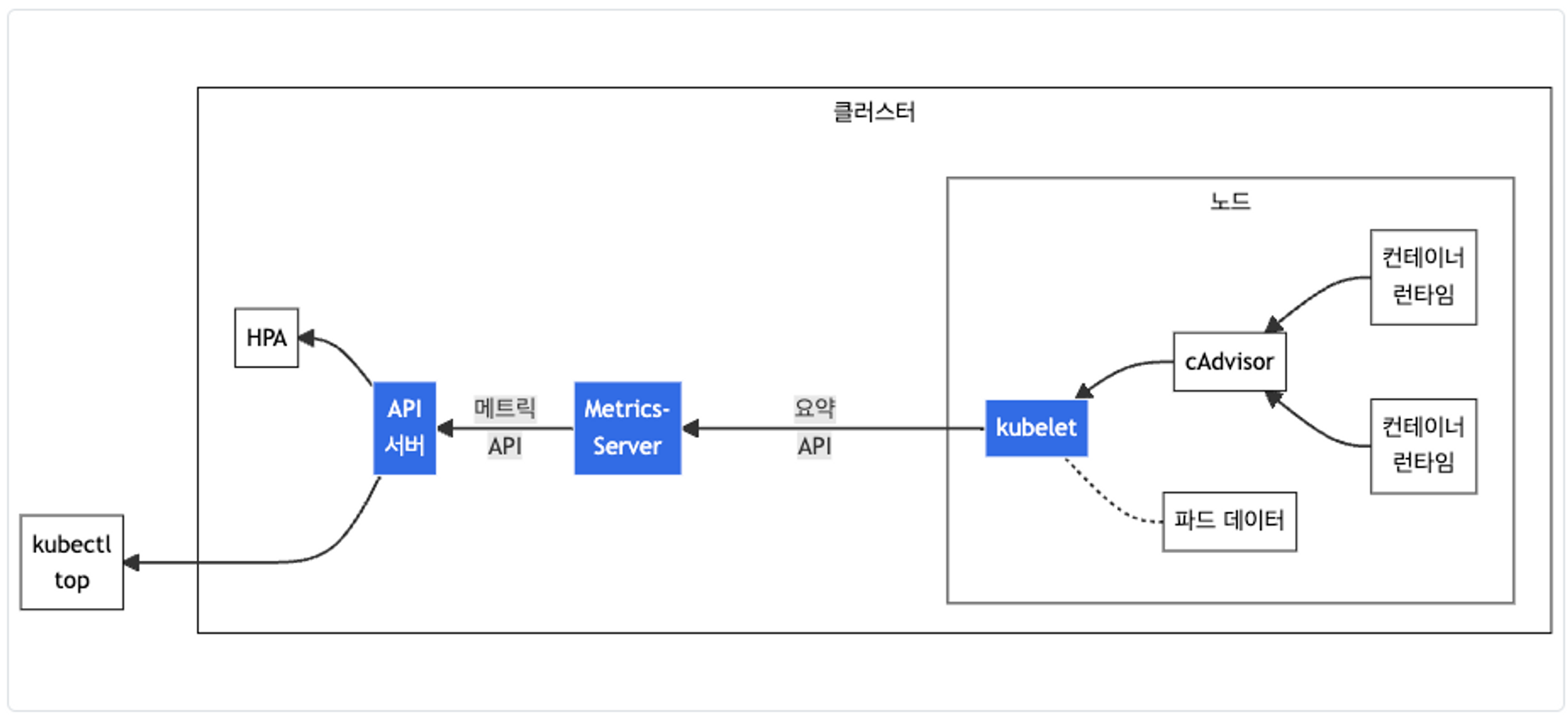
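The two Kubelet queries in the Logs Insights list above rely on the klog header the kubelet writes: each line starts with a severity letter (`I`, `W`, `E`, `F`) followed by the month and day as `MMDD`, so `(E|W)[0-9]{4}` picks out errors and warnings. The same regex can be tried locally with `grep -E`; the function name and the sample lines are illustrative only. Because the pattern is not anchored, any `E` or `W` followed by four digits elsewhere in a line also matches; `^(E|W)[0-9]{4}` would be stricter, assuming the message starts with the klog header.

```shell
# Keep only klog lines with severity E (error) or W (warning),
# using the same pattern as the Logs Insights filter.
kubelet_errors_warnings() {
  grep -E '(E|W)[0-9]{4}'
}

# Illustrative klog-style lines
printf '%s\n' \
  'I0519 10:56:38.123456 2951 kubelet.go:100] info message' \
  'W0519 10:56:40.000001 2951 kubelet.go:200] warning message' \
  'E0519 10:56:41.000002 2951 kubelet.go:300] error message' \
  | kubelet_errors_warnings
# prints the W0519 and E0519 lines
```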

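Metrics Server

- An add-on that aggregates the resource-usage metrics collected from each kubelet in the cluster; it is mainly used by other features such as HPA and the Kubernetes Dashboard.

- Its components are as follows.

- cAdvisor: a daemon built into the kubelet that collects, aggregates, and exposes container resource-usage metrics such as CPU and memory

- metrics-server: collects and aggregates resource metrics from each node's kubelet and makes them queryable through the Metrics API

- Metrics API: exposes the collected metrics as an endpoint used by HPA and the kubectl top command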


- Deploy metrics-server and run the kubectl top command.

    # Deploy metrics-server
    $ kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

    # --metric-resolution=15s: metrics-server scrapes metrics from each kubelet every 15 seconds
    $ kubectl describe pod -n kube-system -l k8s-app=metrics-server
    ...
    Containers:
      metrics-server:
        Container ID:  containerd://687d85dc29e61d1cf9ab7ca6cc90b3d302e9f135839d75ceff62ad3eece0ba03
        Image:         registry.k8s.io/metrics-server/metrics-server:v0.6.3
        Image ID:      registry.k8s.io/metrics-server/metrics-server@sha256:c60778fa1c44d0c5a0c4530ebe83f9243ee6fc02f4c3dc59226c201931350b10
        Port:          4443/TCP
        Host Port:     0/TCP
        Args:
          --cert-dir=/tmp
          --secure-port=4443
          --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
          --kubelet-use-node-status-port
          --metric-resolution=15s
    ...

    # Node metrics
    $ kubectl top node
    NAME                                               CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
    ip-192-168-1-160.ap-northeast-2.compute.internal   74m          1%     718Mi           4%
    ip-192-168-2-136.ap-northeast-2.compute.internal   78m          1%     677Mi           4%
    ip-192-168-3-143.ap-northeast-2.compute.internal   67m          1%     767Mi           5%

    # Pod metrics
    $ kubectl top pod -n kube-system --sort-by='cpu'
    NAME                                  CPU(cores)   MEMORY(bytes)
    kube-ops-view-558d87b798-gsbjl        11m          35Mi
    ebs-csi-controller-67658f895c-v2mzb   5m           57Mi
    aws-node-qrmnp                        5m           38Mi
    aws-node-rf9s5                        4m           38Mi
    ...
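`kubectl top node` reports CPU in millicores (`m`) and as a percentage of allocatable capacity. A sketch that flags nodes above a CPU% threshold from that output, assuming the column layout shown above (NAME, CPU(cores), CPU%, MEMORY(bytes), MEMORY%) with a header line; `nodes_over_cpu` is a hypothetical helper.

```shell
# Print the names of nodes whose CPU% exceeds the threshold ($1, integer percent).
# Reads `kubectl top node` output on stdin and skips the header line.
nodes_over_cpu() {
  awk -v t="$1" 'NR > 1 {
    pct = $3
    sub(/%$/, "", pct)          # "74%" -> "74"
    if (pct + 0 > t + 0) print $1
  }'
}

# Usage (not executed here):
#   kubectl top node | nodes_over_cpu 70
```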

Prometheus

- An open-source monitoring system originally developed at SoundCloud; it is a CNCF project.

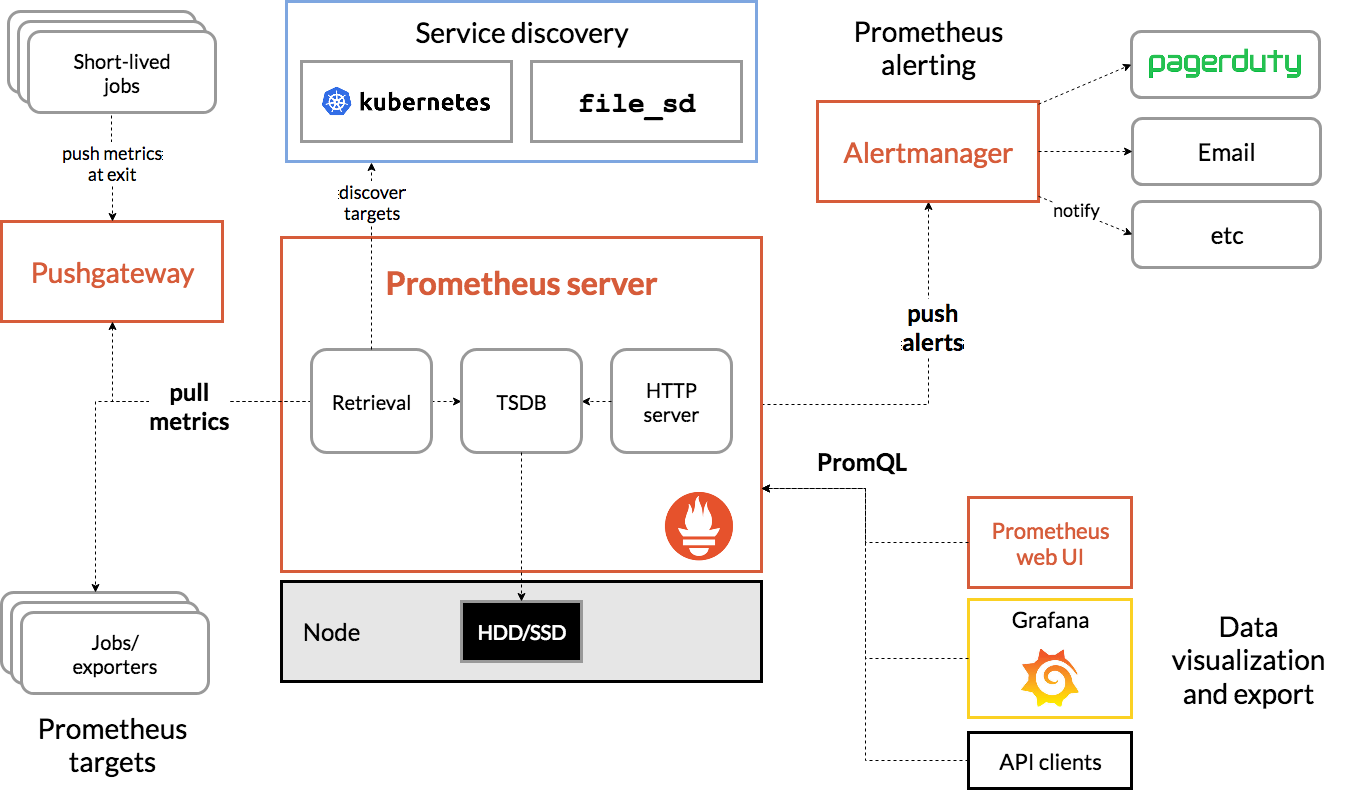

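- Components

- Prometheus Server

- Retrieval

- A module that periodically collects (scrapes) metrics from targets through exporters

- TSDB (Time Series Database)

- Collected metrics are stored in the Prometheus server's memory and on its local disk.

- PromQL

- Data stored in the TSDB can be queried with PromQL.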


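- Exporter

- An agent that exposes metrics so that they can be collected with the pull model.

- It gathers metrics from the target and serves them on an HTTP endpoint; Prometheus simply sends an HTTP GET request to that endpoint and collects the metrics as text.

- Service Discovery

- A system that keeps track of the currently running services and their IP addresses

- Prometheus can obtain the list of services to monitor from a dedicated service discovery solution such as HashiCorp Consul, or from Kubernetes.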

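The text an exporter returns for that HTTP GET is the Prometheus text exposition format: `# HELP` and `# TYPE` comment lines followed by `metric_name{labels} value` samples. A minimal sketch that pulls one sample value out of such text, assuming no spaces inside label values; `prom_sample` and the sample metrics are illustrative.

```shell
# Print the value of the first sample whose name (including any {labels}) equals $1.
# Reads Prometheus text-format exposition on stdin; comment lines are skipped.
prom_sample() {
  awk -v m="$1" '!/^#/ && $1 == m { print $2; exit }'
}

# Usage (not executed here), against node-exporter on a worker node:
#   ssh ec2-user@$N1 curl -s localhost:9100/metrics | prom_sample node_load1
```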

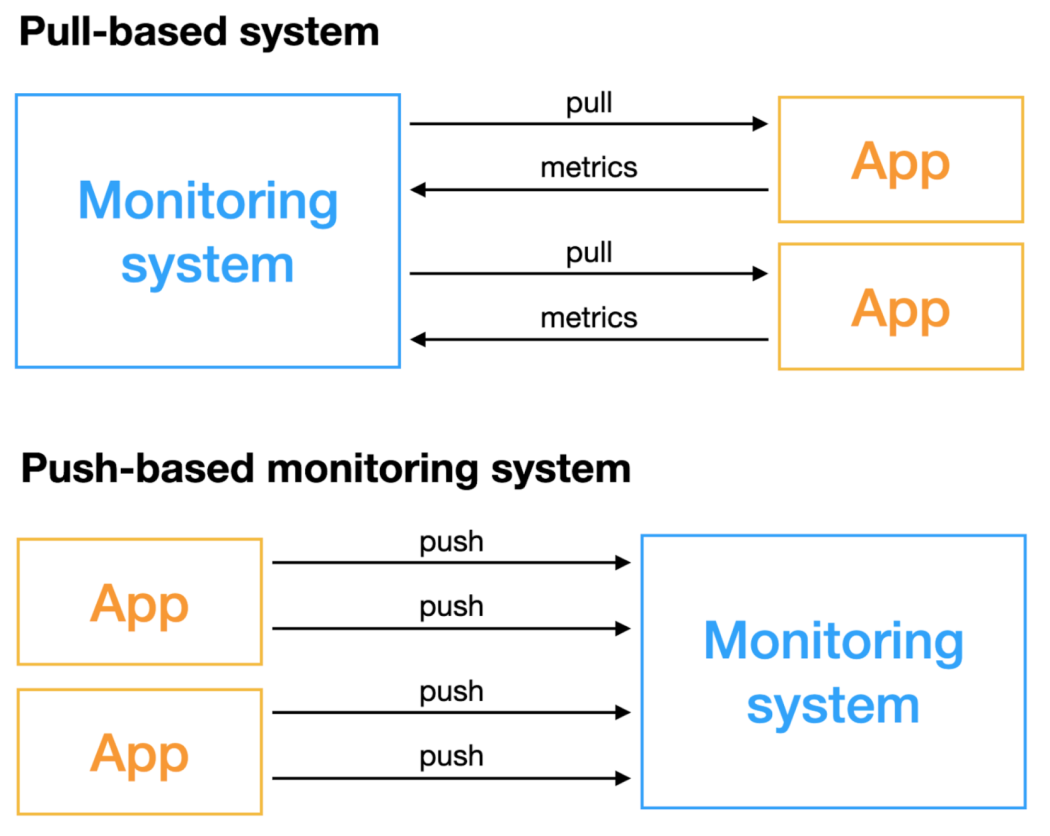

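- Pull vs Push

- Pull-based monitoring system

- The agent collects metrics and exposes them on an endpoint, from which the monitoring server collects them.

- Because metrics are collected at a fixed interval, the overhead is low, but changes that happen between collections can be missed, so the data may be less precise.

- When targets are created or deleted, service discovery is needed to identify the current set of targets.

- Push-based monitoring system

- The agent collects metrics and sends them directly to the monitoring server.

- Because metrics are sent continuously, under heavy load the pushing itself can become a failure point that causes problems for the service.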


- Deploy Prometheus with a Helm chart.

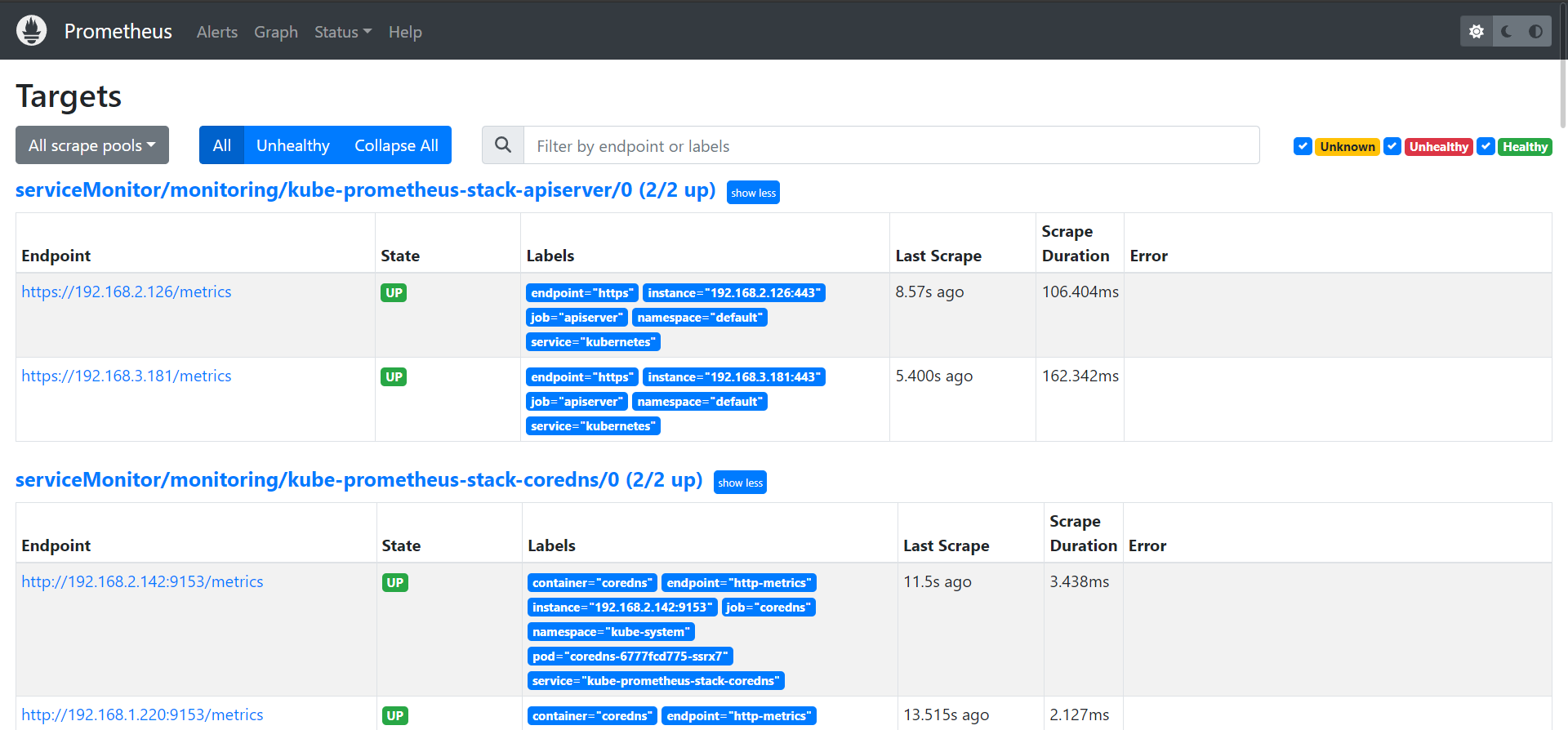

    # Create the namespace
    $ kubectl create ns monitoring

    # Add the Prometheus community repository
    $ helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

    # Create the parameter file
    $ cat <<EOT > monitor-values.yaml
    prometheus:
      prometheusSpec:
        podMonitorSelectorNilUsesHelmValues: false
        serviceMonitorSelectorNilUsesHelmValues: false
        retention: 5d
        retentionSize: "10GiB"
      ingress:
        enabled: true
        ingressClassName: alb
        hosts:
          - prometheus.$MyDomain
        paths:
          - /*
        annotations:
          alb.ingress.kubernetes.io/scheme: internet-facing
          alb.ingress.kubernetes.io/target-type: ip
          alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
          alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
          alb.ingress.kubernetes.io/success-codes: 200-399
          alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
          alb.ingress.kubernetes.io/group.name: study
          alb.ingress.kubernetes.io/ssl-redirect: '443'
    grafana:
      defaultDashboardsTimezone: Asia/Seoul
      adminPassword: prom-operator
      ingress:
        enabled: true
        ingressClassName: alb
        hosts:
          - grafana.$MyDomain
        paths:
          - /*
        annotations:
          alb.ingress.kubernetes.io/scheme: internet-facing
          alb.ingress.kubernetes.io/target-type: ip
          alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
          alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
          alb.ingress.kubernetes.io/success-codes: 200-399
          alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
          alb.ingress.kubernetes.io/group.name: study
          alb.ingress.kubernetes.io/ssl-redirect: '443'
    defaultRules:
      create: false
    kubeControllerManager:
      enabled: false
    kubeEtcd:
      enabled: false
    kubeScheduler:
      enabled: false
    alertmanager:
      enabled: false
    EOT

    # Deploy
    # scrapeInterval     : how often metrics are collected
    # evaluationInterval : how often rules are evaluated, e.g. to decide whether an alert should fire
    $ helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack --version 45.27.2 \
        --set prometheus.prometheusSpec.scrapeInterval='15s' --set prometheus.prometheusSpec.evaluationInterval='15s' \
        -f monitor-values.yaml --namespace monitoring

    # Verify the deployment
    $ helm list -n monitoring
    NAME                    NAMESPACE    REVISION   UPDATED                                   STATUS     CHART                           APP VERSION
    kube-prometheus-stack   monitoring   1          2023-05-20 15:11:26.815671916 +0900 KST   deployed   kube-prometheus-stack-45.27.2   v0.65.1

    $ kubectl get pod,svc,ingress -n monitoring
    NAME                                                            READY   STATUS    RESTARTS   AGE
    pod/kube-prometheus-stack-grafana-774f4f8d49-9h4sd              3/3     Running   0          106s
    pod/kube-prometheus-stack-kube-state-metrics-5d6578867c-w42rn   1/1     Running   0          106s
    pod/kube-prometheus-stack-operator-74d474b47b-p2q4t             1/1     Running   0          106s
    pod/kube-prometheus-stack-prometheus-node-exporter-597z7        1/1     Running   0          106s
    pod/kube-prometheus-stack-prometheus-node-exporter-ck9b8        1/1     Running   0          106s
    pod/kube-prometheus-stack-prometheus-node-exporter-mk4fw        1/1     Running   0          107s
    pod/prometheus-kube-prometheus-stack-prometheus-0               2/2     Running   0          100s

    NAME                                                     TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
    service/kube-prometheus-stack-grafana                    ClusterIP   10.100.13.156    <none>        80/TCP     107s
    service/kube-prometheus-stack-kube-state-metrics         ClusterIP   10.100.202.146   <none>        8080/TCP   107s
    service/kube-prometheus-stack-operator                   ClusterIP   10.100.30.130    <none>        443/TCP    107s
    service/kube-prometheus-stack-prometheus                 ClusterIP   10.100.29.58     <none>        9090/TCP   107s
    service/kube-prometheus-stack-prometheus-node-exporter   ClusterIP   10.100.64.153    <none>        9100/TCP   107s
    service/prometheus-operated                              ClusterIP   None             <none>        9090/TCP   100s

    NAME                                                         CLASS   HOSTS                        ADDRESS                                                         PORTS   AGE
    ingress.networking.k8s.io/kube-prometheus-stack-grafana      alb     grafana.eljoe-test.link      myeks-ingress-alb-1182578444.ap-northeast-2.elb.amazonaws.com   80      107s
    ingress.networking.k8s.io/kube-prometheus-stack-prometheus   alb     prometheus.eljoe-test.link   myeks-ingress-alb-1182578444.ap-northeast-2.elb.amazonaws.com   80      107s

    $ kubectl get prometheus,servicemonitors -n monitoring
    NAME                                                                VERSION   DESIRED   READY   RECONCILED   AVAILABLE   AGE
    prometheus.monitoring.coreos.com/kube-prometheus-stack-prometheus   v2.42.0   1         1       True         True        11m

    NAME                                                                                   AGE
    servicemonitor.monitoring.coreos.com/kube-prometheus-stack-apiserver                   11m
    servicemonitor.monitoring.coreos.com/kube-prometheus-stack-coredns                     11m
    servicemonitor.monitoring.coreos.com/kube-prometheus-stack-grafana                     11m
    servicemonitor.monitoring.coreos.com/kube-prometheus-stack-kube-proxy                  11m
    servicemonitor.monitoring.coreos.com/kube-prometheus-stack-kube-state-metrics          11m
    servicemonitor.monitoring.coreos.com/kube-prometheus-stack-kubelet                     11m
    servicemonitor.monitoring.coreos.com/kube-prometheus-stack-operator                    11m
    servicemonitor.monitoring.coreos.com/kube-prometheus-stack-prometheus                  11m
    servicemonitor.monitoring.coreos.com/kube-prometheus-stack-prometheus-node-exporter    11m

    # node-exporter exposes its metrics on port 9100
    $ kubectl get svc -n monitoring kube-prometheus-stack-prometheus-node-exporter
    NAME                                                     TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    service/kube-prometheus-stack-prometheus-node-exporter   ClusterIP   10.100.64.153   <none>        9100/TCP   3h52m

    # Node_IP:9100/metrics shows a wide range of metrics
    $ ssh ec2-user@$N1 curl -s localhost:9100/metrics

    # Open the Ingress HOSTS address
    $ kubectl get ingress -n monitoring kube-prometheus-stack-prometheus
    NAME                               CLASS   HOSTS                        ADDRESS                                                         PORTS   AGE
    kube-prometheus-stack-prometheus   alb     prometheus.eljoe-test.link   myeks-ingress-alb-1182578444.ap-northeast-2.elb.amazonaws.com   80      26m

- Status > Targets
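In the output above, the Prometheus and Grafana Ingresses (like the nginx one earlier) report the same ADDRESS. That is because they share `alb.ingress.kubernetes.io/group.name: study`, and the AWS Load Balancer Controller merges all Ingresses in one group into a single ALB. A sketch that lists the hosts served by each ALB from `kubectl get ingress -A` output, assuming the default column layout (NAMESPACE, NAME, CLASS, HOSTS, ADDRESS, PORTS, AGE) and that every Ingress already has an address; `hosts_by_alb` is a hypothetical helper.

```shell
# Group Ingress hosts by load balancer address.
# Reads `kubectl get ingress -A` output on stdin (header skipped) and prints
# one line per address: "<address> <host> <host> ...".
hosts_by_alb() {
  awk 'NR > 1 { hosts[$5] = hosts[$5] " " $4 }
       END    { for (a in hosts) print a hosts[a] }' | sort
}

# Usage (not executed here):
#   kubectl get ingress -A | hosts_by_alb
```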

Grafana

- An open-source monitoring tool that visualizes data in various formats (logs, metrics, etc.) as dashboards

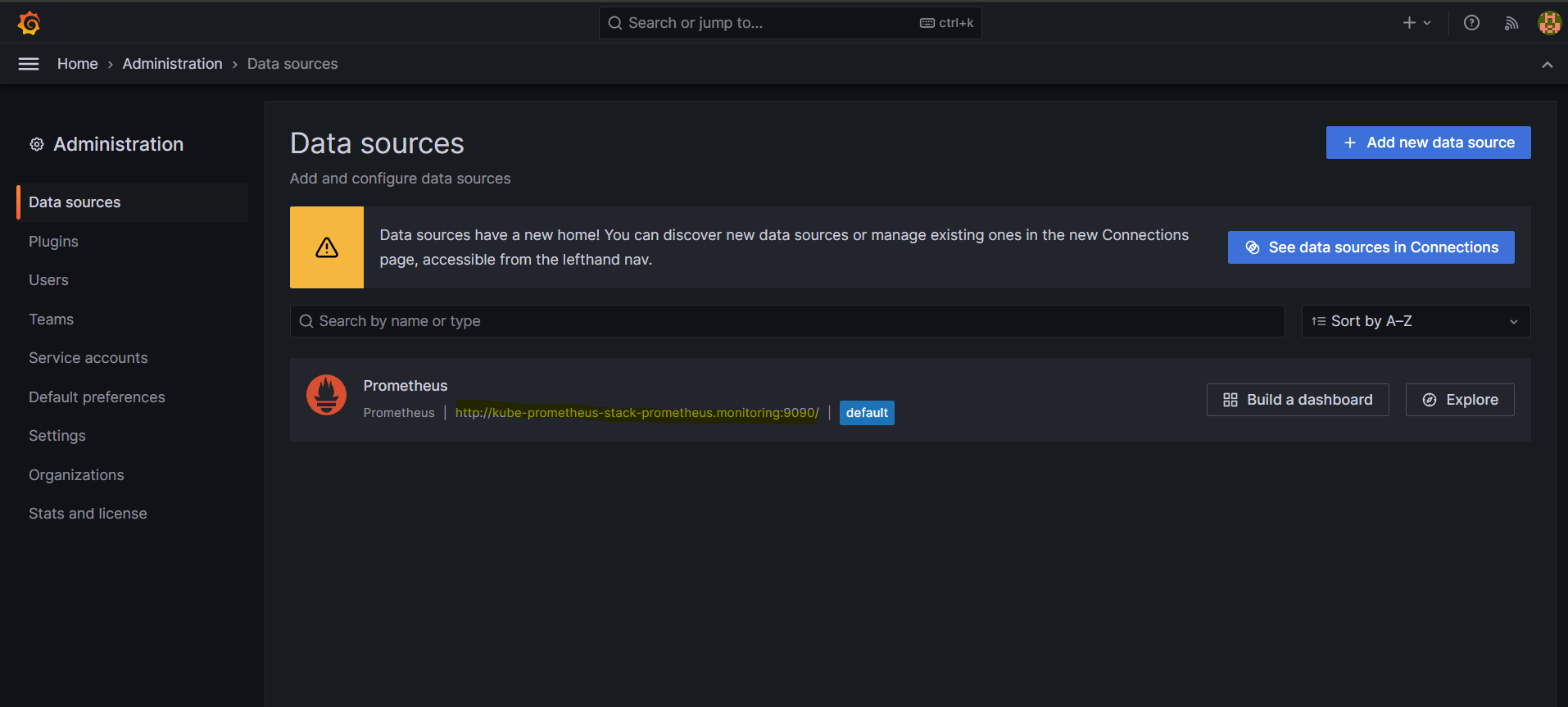

    # Check the Grafana version
    $ kubectl exec -it -n monitoring deploy/kube-prometheus-stack-grafana -- grafana-cli --version
    grafana cli version 9.5.1

    # Open the Ingress HOSTS address (login: admin / prom-operator)
    $ kubectl get ingress -n monitoring kube-prometheus-stack-grafana
    NAME                            CLASS   HOSTS                     ADDRESS                                                         PORTS   AGE
    kube-prometheus-stack-grafana   alb     grafana.eljoe-test.link   myeks-ingress-alb-1182578444.ap-northeast-2.elb.amazonaws.com   80      4h

- Home > Administration > Data sources > Prometheus (the Prometheus service is added as a data source automatically at deployment)

    # Check the service address
    $ kubectl get svc,ingress,ep -n monitoring kube-prometheus-stack-prometheus
    NAME                                       TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
    service/kube-prometheus-stack-prometheus   ClusterIP   10.100.29.58   <none>        9090/TCP   4h16m

    NAME                                                         CLASS   HOSTS                        ADDRESS                                                         PORTS   AGE
    ingress.networking.k8s.io/kube-prometheus-stack-prometheus   alb     prometheus.eljoe-test.link   myeks-ingress-alb-1182578444.ap-northeast-2.elb.amazonaws.com   80      4h16m

    NAME                                         ENDPOINTS            AGE
    endpoints/kube-prometheus-stack-prometheus   192.168.1.144:9090   4h16m

- Import a dashboard - link

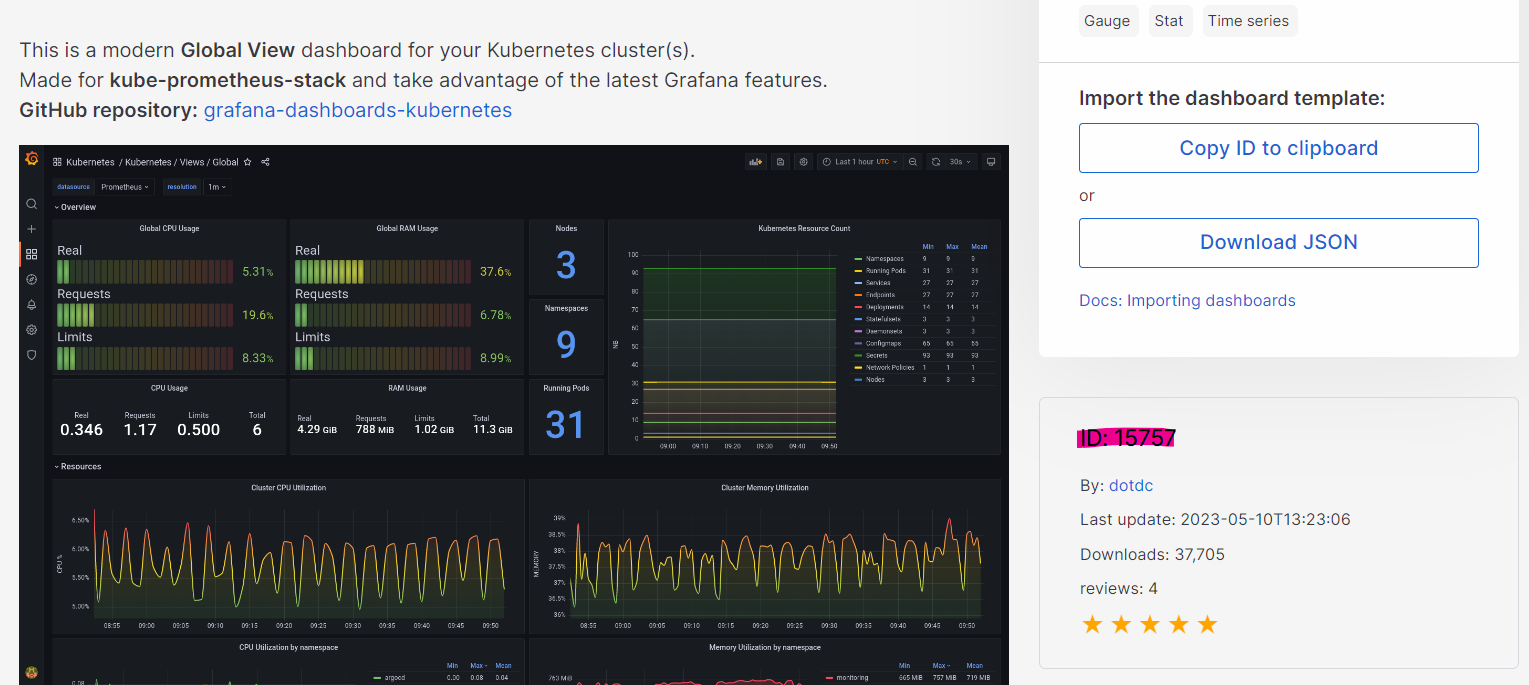

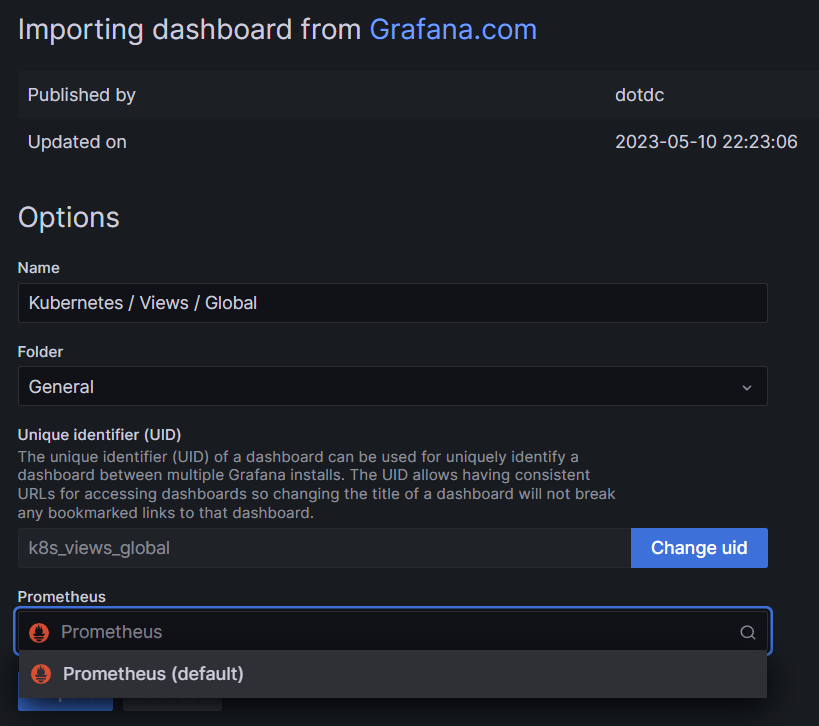

- In the link, open ‘Kubernetes / Views / Global’ > Detail and copy the ID 15757 shown at the bottom right

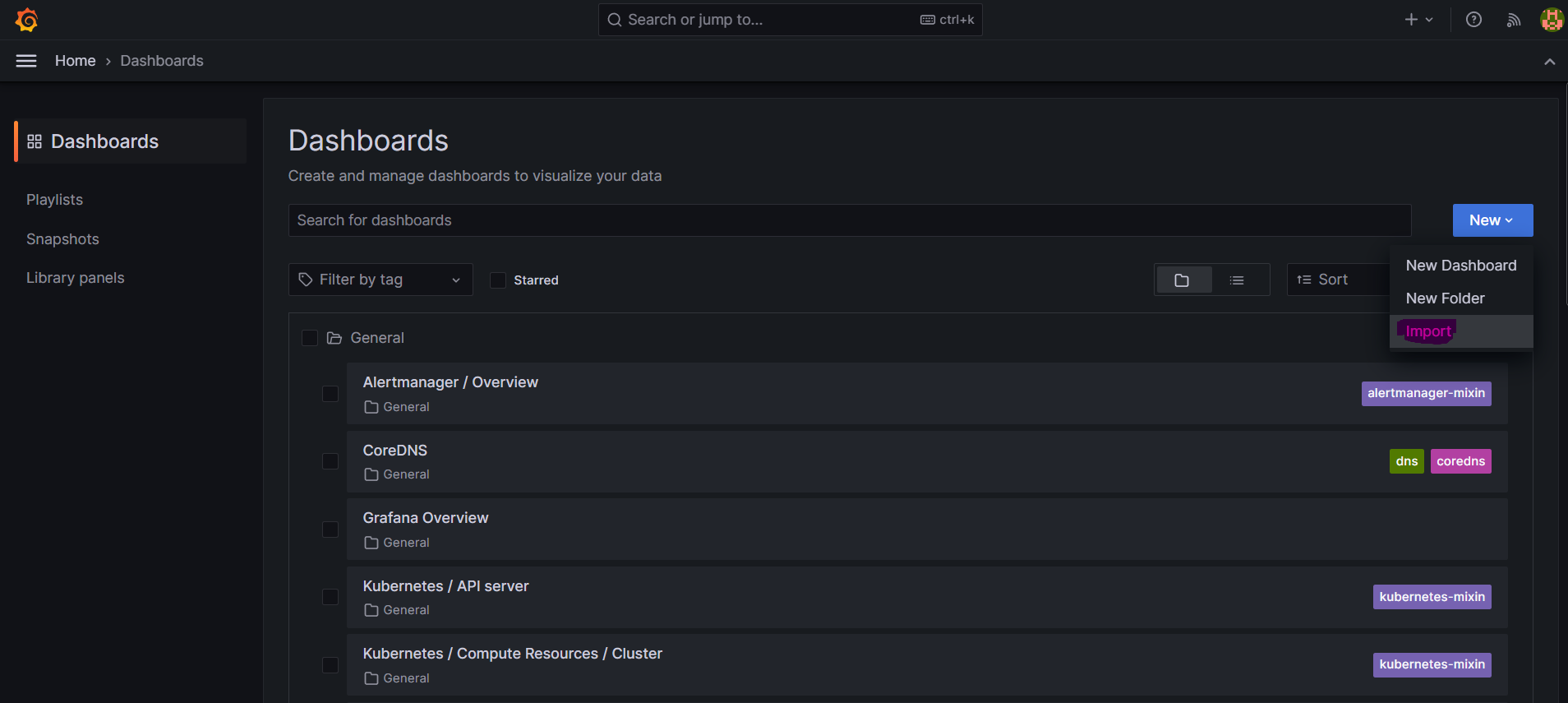

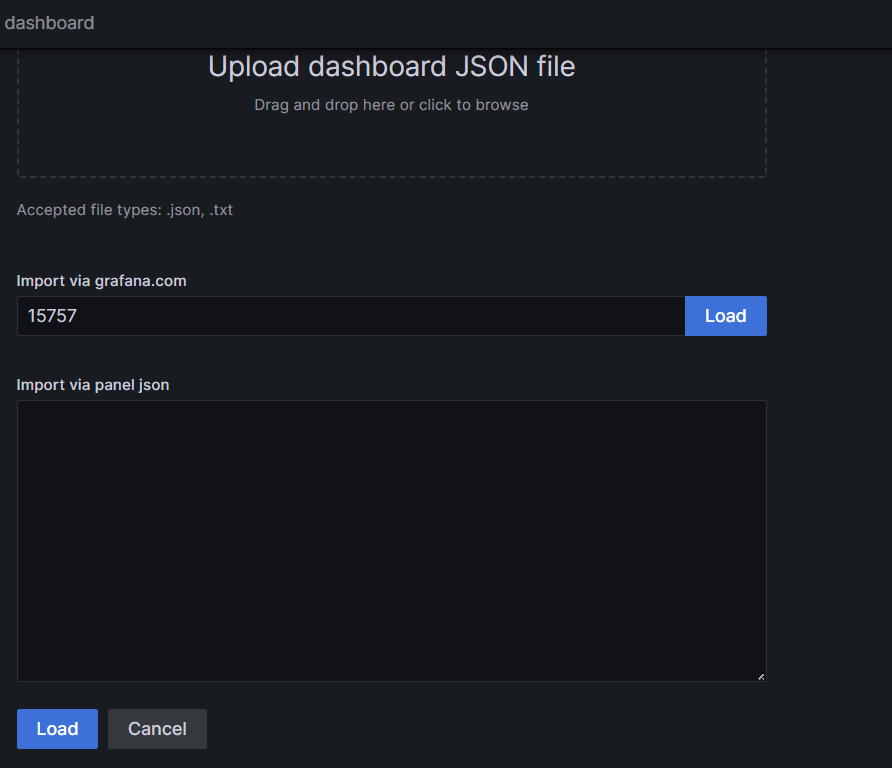

- Dashboard > New > Import

- Import via grafana.com > enter ‘15757’ > Load

- Prometheus > select Default > Import

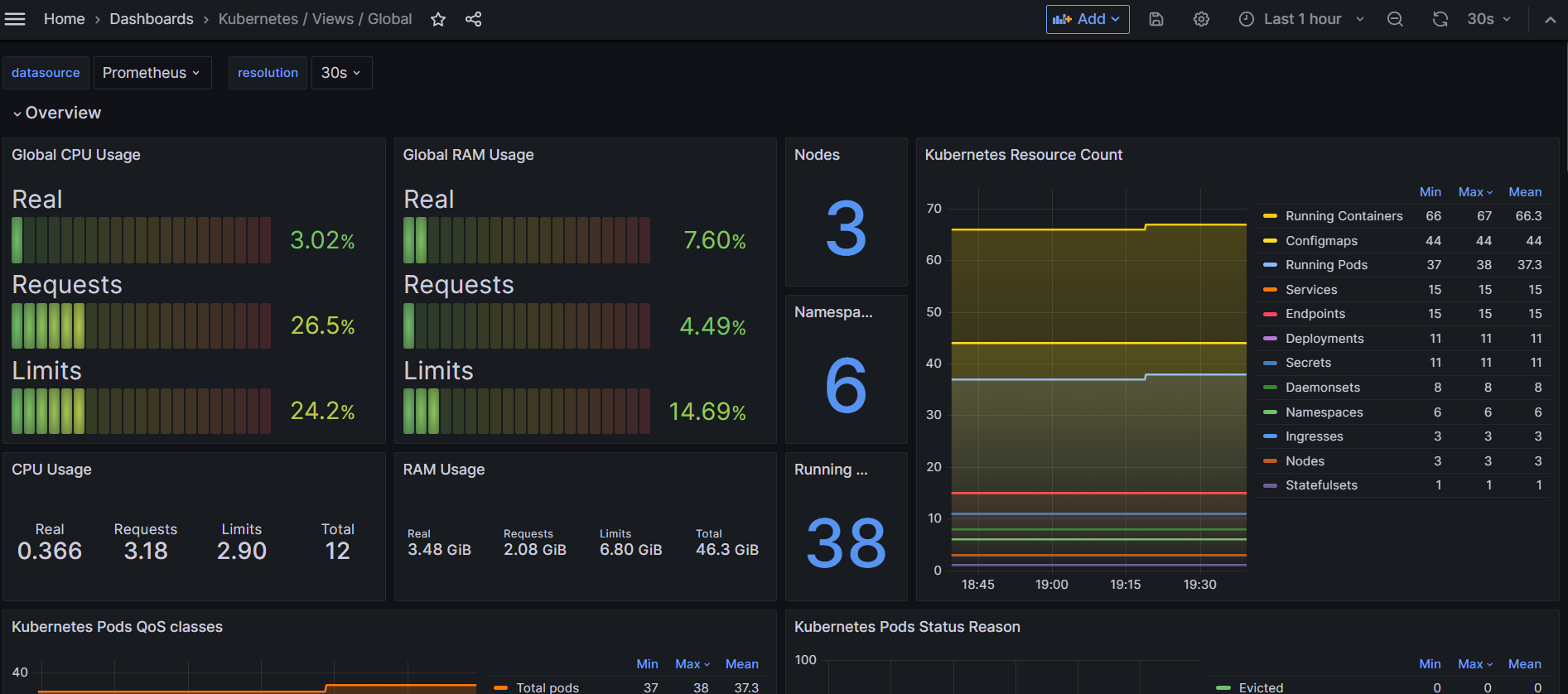

- Check the dashboard


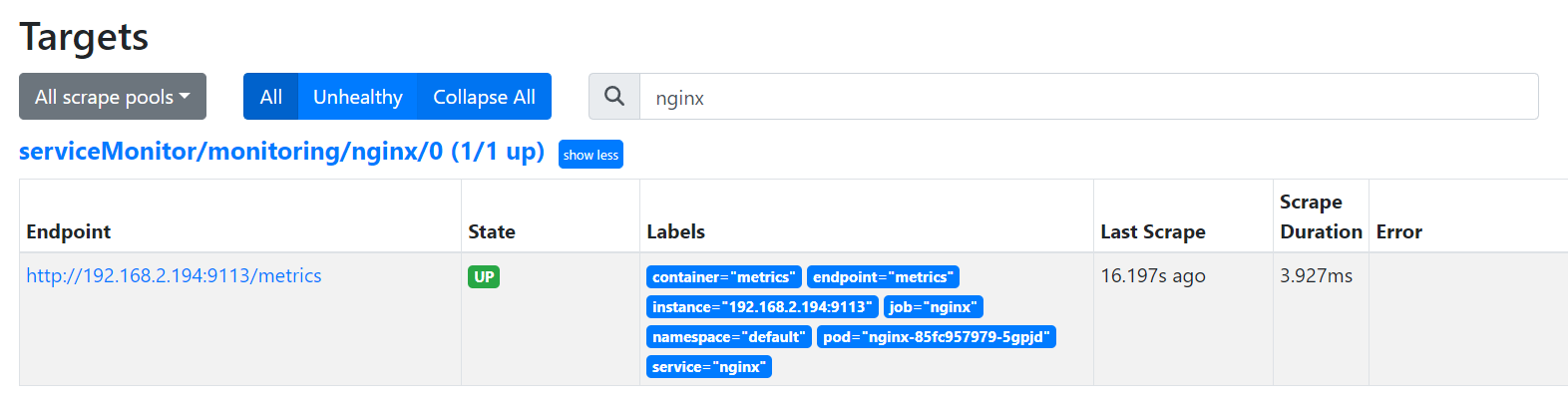

- Deploy an nginx web server, configure monitoring for it, and check the dashboard

    # If the exporter option is enabled in the Helm values, Prometheus collects the metrics automatically.
    # nginx monitoring settings can be added as a ServiceMonitor, a custom resource (CRD).
    $ cat <<EOT > ~/nginx_metric-values.yaml
    metrics:
      enabled: true
      service:
        port: 9113
      serviceMonitor:
        enabled: true
        namespace: monitoring
        interval: 10s
    EOT

    # Add the monitoring settings to the existing nginx web server
    $ helm upgrade nginx bitnami/nginx --reuse-values -f ~/nginx_metric-values.yaml

    # Verify
    $ kubectl get servicemonitor -n monitoring nginx
    NAME    AGE
    nginx   67s

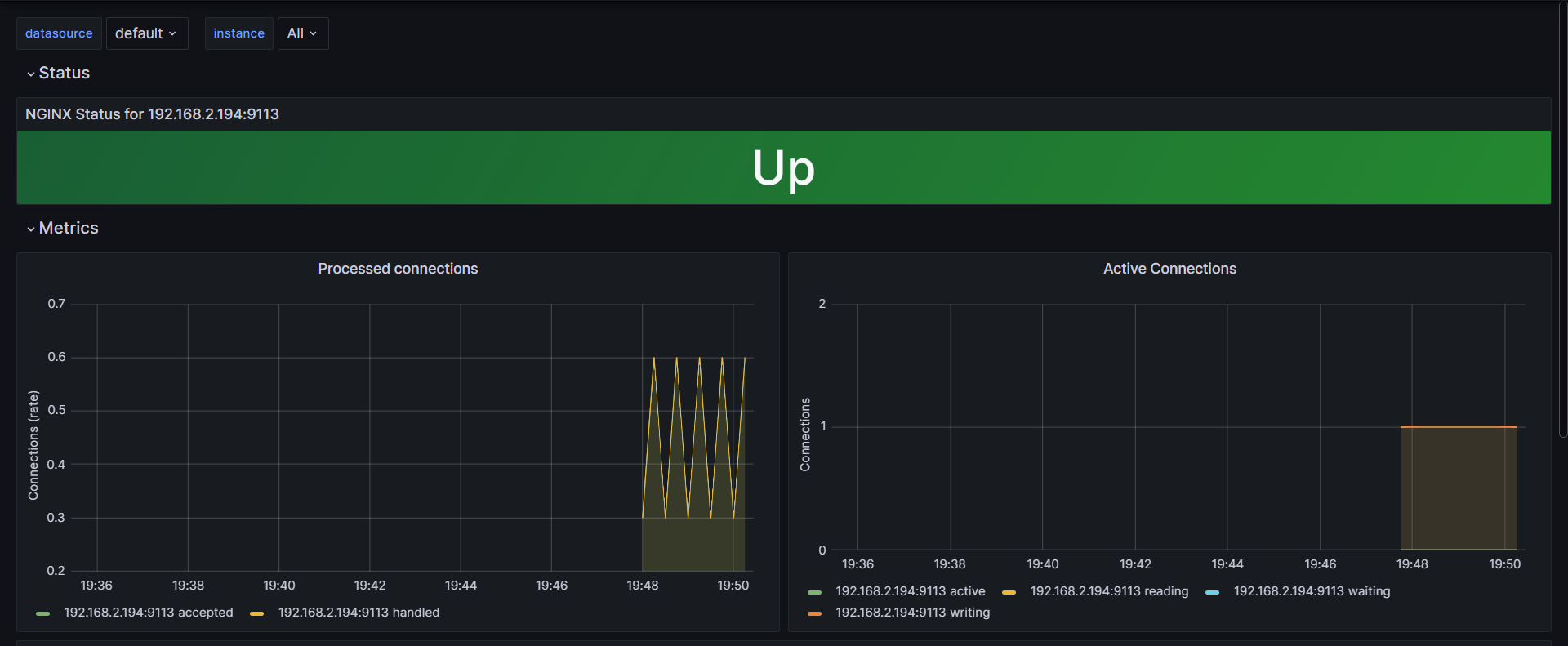

- Grafana > import dashboard 12708


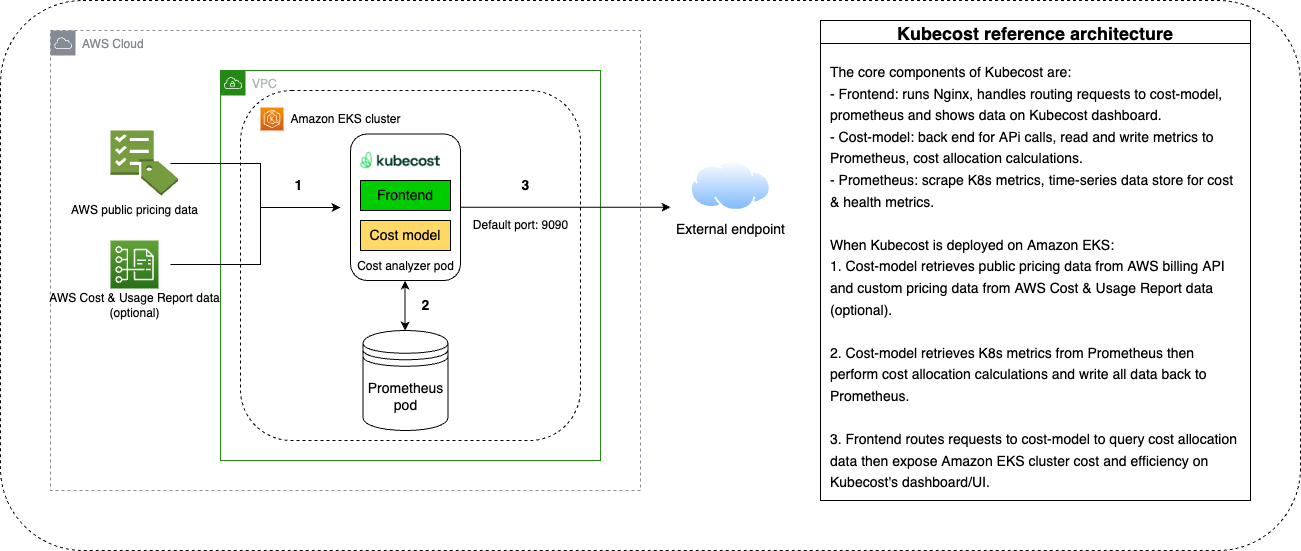

Kubecost

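- A tool, based on OpenCost, that visualizes costs per Kubernetes resource - Architectures

- Frontend: Nginx routes requests to the backend and to Prometheus/Grafana

- Cost model: calculates costs and provides the resulting metrics to Prometheus

- Installation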

    # Create the namespace
    $ kubectl create ns kubecost
    $ cat <<EOT > cost-values.yaml
    global:
      grafana:
        enabled: true
        proxy: false
    priority:
      enabled: false
    networkPolicy:
      enabled: false
    podSecurityPolicy:
      enabled: false
    persistentVolume:
      storageClass: "gp3"
    prometheus:
      kube-state-metrics:
        disabled: false
      nodeExporter:
        enabled: true
    reporting:
      productAnalytics: true
    EOT

    # The kubecost chart bundles its own Prometheus, so remove the existing kube-prometheus-stack
    # first; otherwise the node-exporter ports conflict
    $ helm uninstall -n monitoring kube-prometheus-stack

    # Deploy
    $ helm install kubecost oci://public.ecr.aws/kubecost/cost-analyzer --version 1.103.2 --namespace kubecost -f cost-values.yaml

    # Ingress settings
    $ cat <<EOT > kubecost-ingress.yaml
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: kubecost-ingress
      annotations:
        alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
        alb.ingress.kubernetes.io/group.name: study
        alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
        alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
        alb.ingress.kubernetes.io/scheme: internet-facing
        alb.ingress.kubernetes.io/ssl-redirect: "443"
        alb.ingress.kubernetes.io/success-codes: 200-399
        alb.ingress.kubernetes.io/target-type: ip
    spec:
      ingressClassName: alb
      rules:
      - host: kubecost.$MyDomain
        http:
          paths:
          - backend:
              service:
                name: kubecost-cost-analyzer
                port:
                  number: 9090
            path: /
            pathType: Prefix
    EOT
    $ kubectl apply -f kubecost-ingress.yaml -n kubecost

    # Verify the deployment
    $ kubectl get-all -n kubecost

    # Show the kubecost-cost-analyzer URL
    $ echo -e "Kubecost Web https://kubecost.$MyDomain"