1. Review of yesterday

Explanation of the Service port, target port, and node port, plus a worked example

np-test.yml - manifest file for launching the Pod

apiVersion: v1
kind: Pod
metadata:
  name: np-test
  labels:
    app: np-test
spec:
  containers:
  - name: np-container
    image: 192.168.0.195:5000/hnginx

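np-test-svc.yml - Service manifest file

apiVersion: v1
kind: Service
metadata:
  name: np-test-svc
spec:
  selector:
    app: np-test
  ports:
  - name: http
    protocol: TCP
    port: 80          # port exposed on the Service's cluster IP
    targetPort: 80    # container port on the selected Pods
    nodePort: 30001   # port opened on every node (default range 30000-32767)
  type: NodePort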

root@master:~# kubectl apply -f np-test.yml -f np-test-svc.yml

이후 curl 명령어로 로드밸런싱 확인

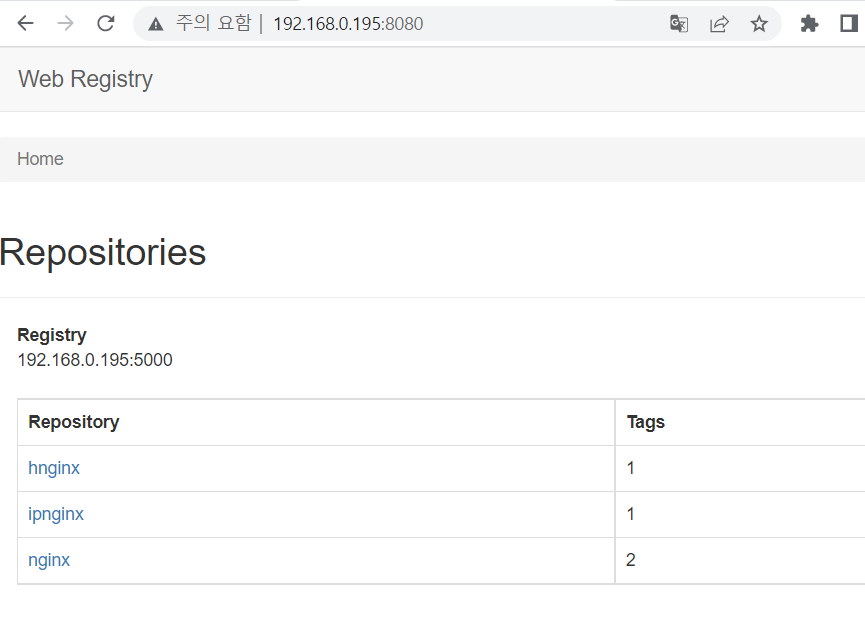

Private Registry 이미지 목록 구경하기

2. ReplicaSet & Service exercise

- Condition: use a single manifest file

192.168.0.195:5000/hnginx is a simple nginx container image that prints its hostname.

Using this image, create a ReplicaSet of 3 Pods labeled app: hnginx, then expose the ReplicaSet on a node port (:32767) through a Service named np-rep-svc.

--- # to keep everything in one manifest file, separate the documents with '---'
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: np-rep
spec:
  selector:            # selects the Pods this ReplicaSet manages
    matchLabels:       # labels the Pods must carry
      app: hnginx      # must match the template label below
  replicas: 3
  template:            # same format as a standalone Pod manifest
    metadata:
      name: hnginx
      labels:
        app: hnginx    # the label selected above
    spec:
      containers:
      - name: hnginx-container
        image: 192.168.0.195:5000/hnginx
---
apiVersion: v1
kind: Service
metadata:
  name: np-rep-svc
spec:
  selector:
    app: hnginx
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 32767
  type: NodePort

root@master:~# kubectl apply -f np-rep.yml

root@master:~# kubectl get svc,rs

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 47h

service/np-rep-svc NodePort 10.106.241.197 <none> 80:32767/TCP 79m

service/np-svc NodePort 10.110.210.139 <none> 80:30000/TCP 17h

NAME DESIRED CURRENT READY AGE

replicaset.apps/np-rep 3 3 3 80m

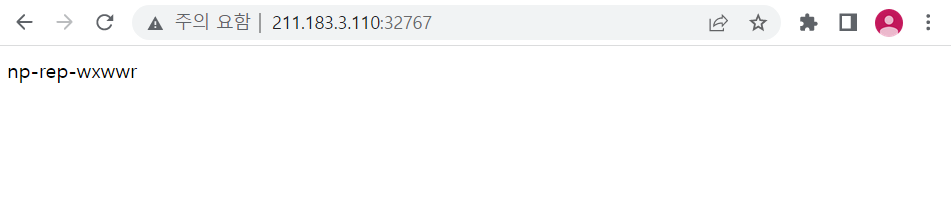

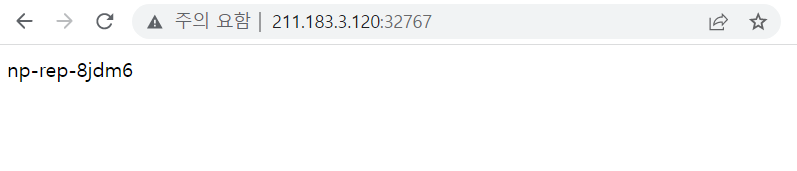

Result

root@master:~# kubectl get pods

NAME READY STATUS RESTARTS AGE

np-rep-7b572 1/1 Running 0 22m

np-rep-8jdm6 1/1 Running 0 22m

np-rep-wxwwr 1/1 Running 0 22m

root@master:~# curl 211.183.3.110:32767

np-rep-wxwwr

root@master:~# curl 211.183.3.110:32767

np-rep-8jdm6

root@master:~# curl 211.183.3.110:32767

np-rep-wxwwr

root@master:~# curl 211.183.3.110:32767

np-rep-7b572

root@master:~# curl 211.183.3.120:32767

np-rep-wxwwr

root@master:~# curl 211.183.3.120:32767

np-rep-7b572

root@master:~# curl 211.183.3.120:32767

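np-rep-8jdm6

3. Namespace

In Kubernetes, namespaces provide a mechanism for isolating groups of resources within a single cluster.

Resource names must be unique within a namespace, but they do not have to be unique across namespaces.

Namespace-based scoping applies only to namespaced objects (e.g. Deployments, Services) and not to cluster-scoped objects (e.g. StorageClasses, Nodes, PersistentVolumes).

- If no namespace is specified, the resource goes into the default namespace.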
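As a small illustration of name scoping, the sketch below declares two Pods that share the name nginx but live in different namespaces. It assumes that namespaces called rapa and kakao already exist (both are created later in these notes) and reuses the hnginx image from the private registry. It is an illustrative sketch, not part of the original exercise.

```yaml
# Two Pods with the same name: allowed because each lives in its own namespace.
# Assumes the namespaces rapa and kakao already exist.
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: rapa
spec:
  containers:
  - name: nginx-container
    image: 192.168.0.195:5000/hnginx
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: kakao
spec:
  containers:
  - name: nginx-container
    image: 192.168.0.195:5000/hnginx
```

Applying both documents should succeed because a name only has to be unique per namespace. Declaring the same two Pods in one namespace would instead leave a single Pod, since the second document would target the same object.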

root@master:~# kubectl delete -f np-rep.yml

replicaset.apps "np-rep" deleted

service "np-rep-svc" deleted

root@master:~# kubectl get namespace

NAME STATUS AGE

default Active 47h

kube-node-lease Active 47h

kube-public Active 47h

kube-system Active 47h

Looking inside the kube-system namespace

root@master:~# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-7bdbfc669-vtkvx 1/1 Running 10 (117m ago) 47h

calico-node-6qwqc 1/1 Running 9 (117m ago) 47h

calico-node-ddk9t 1/1 Running 6 (116m ago) 47h

calico-node-z9vsh 1/1 Running 6 (116m ago) 47h

coredns-787d4945fb-6j6m5 1/1 Running 10 (117m ago) 47h

coredns-787d4945fb-szkxc 1/1 Running 10 (117m ago) 47h

etcd-master 1/1 Running 11 (117m ago) 47h

kube-apiserver-master 1/1 Running 11 (117m ago) 47h

kube-controller-manager-master 1/1 Running 12 (117m ago) 47h

kube-proxy-c8s7q 1/1 Running 6 (116m ago) 47h

kube-proxy-fblbx 1/1 Running 6 (116m ago) 47h

kube-proxy-v6s56 1/1 Running 12 (117m ago) 47h

kube-scheduler-master 1/1 Running 16 (117m ago) 47h

Creating a namespace

root@master:~# kubectl create namespace rapa

namespace/rapa created

root@master:~# kubectl get namespaces

NAME STATUS AGE

default Active 47h

kube-node-lease Active 47h

kube-public Active 47h

kube-system Active 47h

rapa Active 11s

A namespace can also be created from a manifest file.

root@master:~# kubectl create namespace kakao --dry-run=client -o yaml > kakao-ns.yml

root@master:~# cat kakao-ns.yml

apiVersion: v1
kind: Namespace
metadata:
  creationTimestamp: null
  name: kakao
spec: {}
status: {}

root@master:~# vi kakao-ns.yml

<Edited kakao-ns.yml>

******

apiVersion: v1
kind: Namespace
metadata:
  name: kakao

******

root@master:~# kubectl apply -f kakao-ns.yml

namespace/kakao created

Create a single Pod as a quick test

root@master:~# vi nginx.yml

******

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - image: 192.168.0.195:5000/hnginx
    name: nginx-container

******

root@master:~# kubectl apply -f nginx.yml

pod/nginx created

root@master:~# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 27s

Next, place the Pod in the rapa namespace instead.

root@master:~# kubectl delete -f nginx.yml

pod "nginx" deleted

root@master:~# vi nginx.yml

******

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: rapa
spec:
  containers:
  - image: 192.168.0.195:5000/hnginx
    name: nginx-container

******

root@master:~# kubectl apply -f nginx.yml

pod/nginx created

<The Pod is now in the rapa namespace>

root@master:~# kubectl get pod -n rapa

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 16s

config view - shows the Kubernetes client configuration (kubeconfig)

root@master:~# kubectl config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://211.183.3.100:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: DATA+OMITTED
    client-key-data: DATA+OMITTED

The current configuration points at the local cluster.

The cluster is being accessed as the kubernetes-admin user.

If you also wanted to use, say, the EKS service provided by AWS, contexts let you record which cluster is accessed as which user.

- What if we want another context whose default namespace is rapa?

root@master:~# kubectl config set-context rapa-admin@kubernetes

Context "rapa-admin@kubernetes" created.

root@master:~# kubectl config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://211.183.3.100:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
- context:
    cluster: ""
    user: ""
  name: rapa-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: DATA+OMITTED
    client-key-data: DATA+OMITTED

<The new context entry shows that its cluster, user, and default namespace have not been set yet>

root@master:~# kubectl config set-context rapa-admin@kubernetes --cluster=kubernetes --user=rapa-admin --namespace=rapa

Context "rapa-admin@kubernetes" modified.

root@master:~# kubectl config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://211.183.3.100:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
- context:
    cluster: kubernetes
    namespace: rapa
    user: rapa-admin
  name: rapa-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: DATA+OMITTED
    client-key-data: DATA+OMITTED

<To check which context is currently in use>

root@master:~# kubectl config current-context

kubernetes-admin@kubernetes

<Switch the default namespace to rapa>

root@master:~# kubectl config use-context rapa-admin@kubernetes

Switched to context "rapa-admin@kubernetes".

<The rapa-admin user has no entry under users, so point the context at the existing kubernetes-admin user instead>

root@master:~# kubectl config set-context rapa-admin@kubernetes --cluster=kubernetes --user=kubernetes-admin --namespace=rapa

Context "rapa-admin@kubernetes" modified.

root@master:~# kubectl config use-context rapa-admin@kubernetes

Switched to context "rapa-admin@kubernetes".

root@master:~# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 17m

<In the same way, add a context named kakao-admin@kubernetes whose default namespace is the kakao namespace created earlier>

root@master:~# kubectl config set-context kakao-admin@kubernetes --cluster=kubernetes --user=kubernetes-admin --namespace=kakao

Context "kakao-admin@kubernetes" created.

<Deleting the rapa namespace also deletes every object inside it>

root@master:~# kubectl config current-context

rapa-admin@kubernetes

root@master:~# kubectl get pod -n rapa

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 24m

root@master:~# kubectl delete namespace rapa

namespace "rapa" deleted

root@master:~# kubectl get pod -n rapa

No resources found in rapa namespace.

<Restore the original context>

root@master:~# kubectl config use-context kubernetes-admin@kubernetes

Switched to context "kubernetes-admin@kubernetes".

root@master:~# kubectl config current-context

kubernetes-admin@kubernetes

root@master:~# kubectl delete -f kakao-ns.yml

namespace "kakao" deleted

root@master:~# kubectl get namespaces

NAME STATUS AGE

default Active 2d

kube-node-lease Active 2d

kube-public Active 2d

kube-system Active 2d

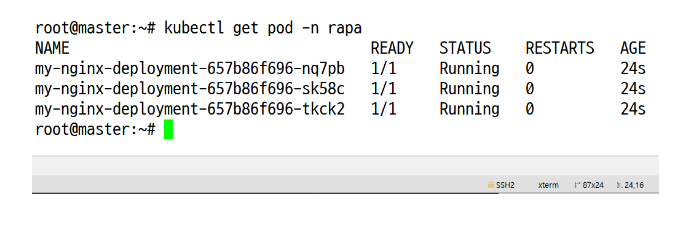

문제

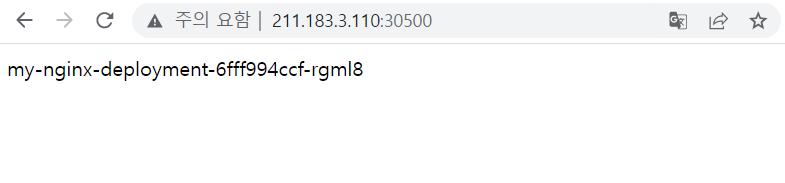

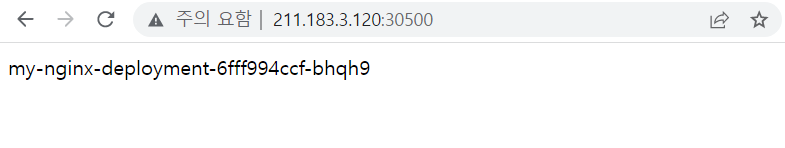

예제) 다음과 같은 결과가 도출되도록 ‘단일’ 매니페스트 파일을 구성후 해당 디플로이먼트가 30500 노드포트로 배포될 수 있도록 하시오.

이미지는 192.168.0.195:5000/hgninx를 사용.

아래와 같은 결과를 유도할 것

root@master:~# vi lunch.yml

****

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx-deployment
  namespace: rapa
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-nginx-deployment
  template:
    metadata:
      labels:
        app: my-nginx-deployment
    spec:
      containers:
      - image: 192.168.0.195:5000/hnginx
        name: my-nginx-deployment-container
---
apiVersion: v1
kind: Service
metadata:
  name: my-nginx-deployment-service
  namespace: rapa
spec:
  selector:
    app: my-nginx-deployment
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30500
  type: NodePort

****

<Create the namespace>

root@master:~# kubectl create namespace rapa

namespace/rapa created

root@master:~# kubectl get namespaces

NAME STATUS AGE

default Active 2d

kube-node-lease Active 2d

kube-public Active 2d

kube-system Active 2d

rapa Active 6s

root@master:~# kubectl apply -f lunch.yml

deployment.apps/my-nginx-deployment created

service/my-nginx-deployment-service created

root@master:~# kubectl get pod -n rapa

NAME READY STATUS RESTARTS AGE

my-nginx-deployment-6fff994ccf-bhqh9 1/1 Running 0 20s

my-nginx-deployment-6fff994ccf-ntp9n 1/1 Running 0 20s

my-nginx-deployment-6fff994ccf-rgml8 1/1 Running 0 20s

root@master:~# kubectl get pod -o wide -n rapa

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-nginx-deployment-6fff994ccf-bhqh9 1/1 Running 0 74s 10.10.235.136 worker1 <none> <none>

my-nginx-deployment-6fff994ccf-ntp9n 1/1 Running 0 74s 10.10.235.135 worker1 <none> <none>

my-nginx-deployment-6fff994ccf-rgml8 1/1 Running 0 74s 10.10.189.120 worker2 <none> <none>

<Load balancing>

root@master:~# curl 211.183.3.100:30500

my-nginx-deployment-6fff994ccf-bhqh9

root@master:~# curl 211.183.3.100:30500

my-nginx-deployment-6fff994ccf-rgml8

root@master:~# curl 211.183.3.100:30500

my-nginx-deployment-6fff994ccf-bhqh9

root@master:~# curl 211.183.3.100:30500

my-nginx-deployment-6fff994ccf-ntp9n

root@master:~# curl 211.183.3.100:30500

my-nginx-deployment-6fff994ccf-ntp9n

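4. Understanding Labels (Key - Value)

- Labels let you attach arbitrary metadata to Kubernetes resources such as Pods (for example a version, an application name, or whether the resource belongs to staging or production), which makes them easier to manage within the cluster.

- Labels set on resources can then be filtered with a label selector.

- A single Pod can carry multiple labels.

<Pod manifest file>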

apiVersion: v1
kind: Pod
metadata:
  name: pod-2
  labels:
    type: web   # label 1: web
    lo: dev     # label 2: dev
spec:
  containers:
  - name: container
    image: 192.168.0.195:5000/hnginx

<Service manifest file>

apiVersion: v1
kind: Service
metadata:
  name: svc-1
spec:
  selector:
    type: web
  ports:
  - port: 8080

The key-value pairs listed under selector in the Service manifest are matched against the labels declared in the Pod manifest; Pods carrying those labels become the Service's backends.
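Because a Pod can carry several labels, a selector can also list several pairs. The sketch below is a hypothetical variant of svc-1 (the name svc-2 is an assumption, not from the notes) that selects only Pods carrying both labels of pod-2.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: svc-2          # hypothetical name, not from the notes
spec:
  selector:            # every listed pair must match (logical AND)
    type: web
    lo: dev
  ports:
  - port: 8080
```

With this selector, a Pod labeled only type: web would not be picked up, while pod-2, which has both type: web and lo: dev, would.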

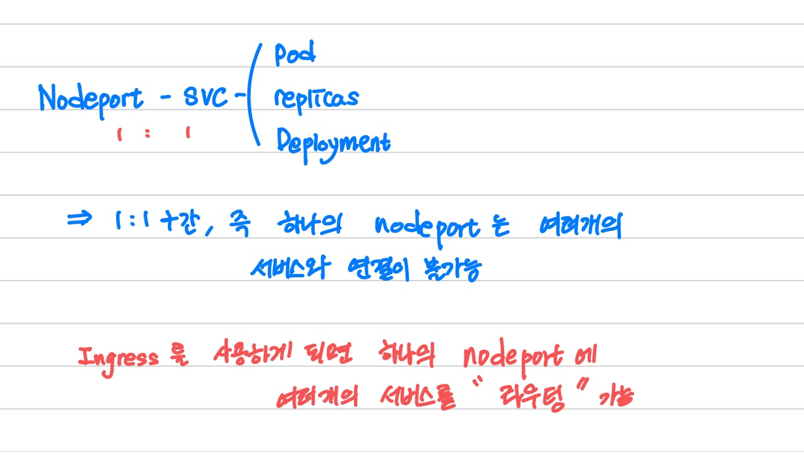

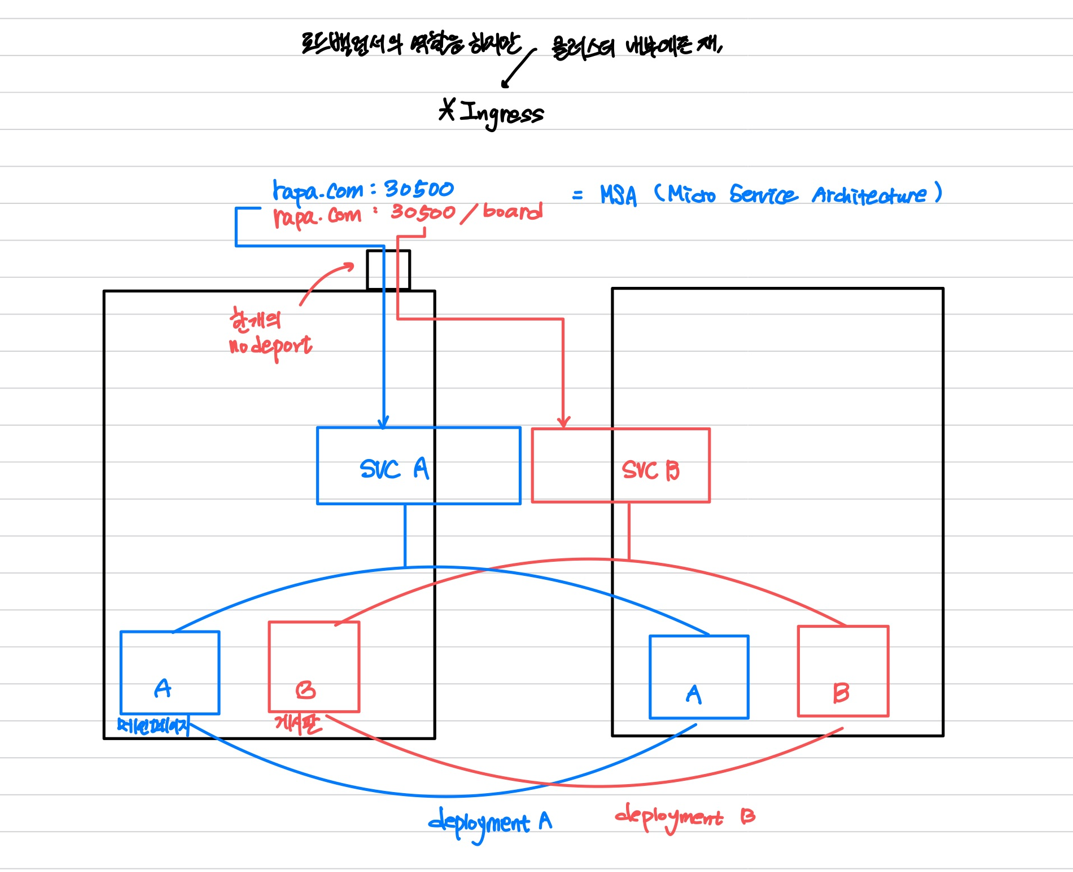

5. Ingress - 하나의 nodePort, 여러개의 서비스"라우팅"

6. Ingress 구성 과정

-

deployment + 2. service => h-deploy.yml(hostname 출력) & ip-deploy.yml (IP 출력)

두개의 디플로이먼트 구성 -

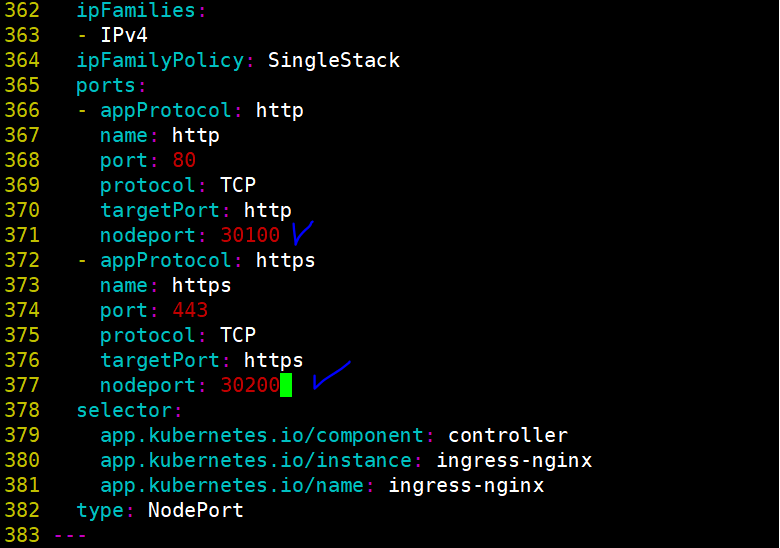

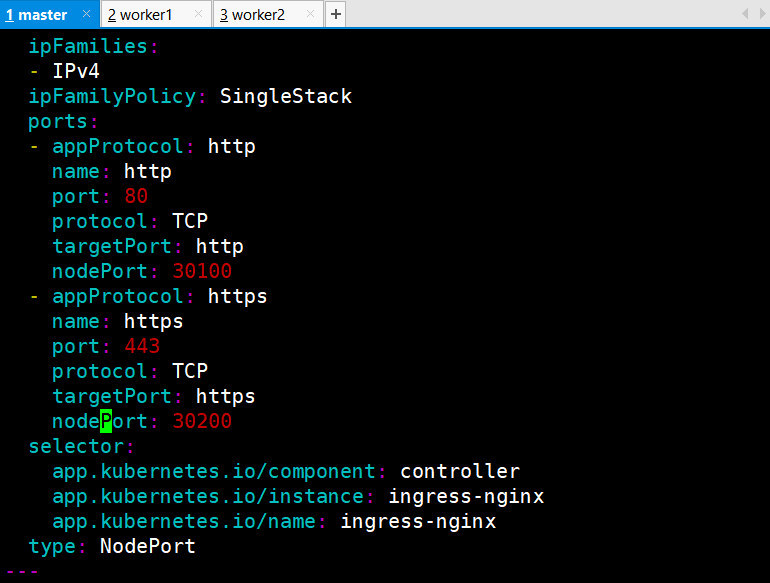

ingress-controller : nginx

http에 대한 nodeport = 30100

https에 대한 nodeport = 30200

위의 설정은 controller 에서 설정 가능하다4.ingress: 실제는 없고, 도메인 주소 및 라우팅.

→ 라우팅 가능, 인증서를 붙일 수 있음. 여러개의 서비스와 매칭 가능.

-> ingress.yml 매니페스트 파일

Ingress controller Download - deploy.yml

https://kubernetes.github.io/ingress-nginx/deploy/

root@master:~# mkdir in

root@master:~# cd in

root@master:~/in# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.5.1/deploy/static/provider/baremetal/deploy.yaml

--2023-01-11 07:08:04-- https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.5.1/deploy/static/provider/baremetal/deploy.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.110.133, 185.199.109.133, 185.199.108.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.110.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 15655 (15K) [text/plain]

Saving to: ‘deploy.yaml’

deploy.yaml 100%[=======>] 15.29K --.-KB/s in 0.003s

2023-01-11 07:08:04 (5.89 MB/s) - ‘deploy.yaml’ saved [15655/15655]

<The deploy file is present>

root@master:~/in# ls

deploy.yaml

root@master:~/in# vi deploy.yaml

Edit the file to pin the node ports - this step is optional.

Note that the field is nodePort, with a capital "P"!
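The notes do not show the edit itself, so the following is only a sketch of what pinning the ports might look like. It assumes the ingress-nginx-controller Service in deploy.yaml lists an http and an https port in roughly this shape; the two nodePort lines are the additions, and all other fields of the Service (labels, selector, and so on) are omitted here and should be left as they are in the downloaded file.

```yaml
# Excerpt only, not a complete manifest: the ports section of the
# ingress-nginx-controller Service, with the two nodePort lines added.
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: http
    nodePort: 30100   # added: fixed node port for HTTP
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
    nodePort: 30200   # added: fixed node port for HTTPS
```

Without the nodePort lines, Kubernetes picks free ports from the node port range on its own, which is why this step is optional.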

root@master:~/in# kubectl apply -f deploy.yaml

namespace/ingress-nginx unchanged

serviceaccount/ingress-nginx unchanged

serviceaccount/ingress-nginx-admission unchanged

role.rbac.authorization.k8s.io/ingress-nginx unchanged

role.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

clusterrole.rbac.authorization.k8s.io/ingress-nginx unchanged

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

rolebinding.rbac.authorization.k8s.io/ingress-nginx unchanged

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx unchanged

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

configmap/ingress-nginx-controller unchanged

service/ingress-nginx-controller created

service/ingress-nginx-controller-admission unchanged

deployment.apps/ingress-nginx-controller configured

job.batch/ingress-nginx-admission-create unchanged

job.batch/ingress-nginx-admission-patch unchanged

ingressclass.networking.k8s.io/nginx unchanged

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission configured

root@master:~/in# vi h-deploy.yml

****

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: h-deploy
  namespace: ingress-nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hnginx
  template:
    metadata:
      labels:
        app: hnginx
    spec:
      containers:
      - name: hnginx
        image: 192.168.0.195:5000/hnginx
---
apiVersion: v1
kind: Service
metadata:
  name: h-svc
  namespace: ingress-nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: hnginx

****

root@master:~/in# kubectl get svc,pod -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/h-svc ClusterIP 10.107.164.226 <none> 80/TCP 36s

service/ingress-nginx-controller NodePort 10.96.227.85 <none> 80:30100/TCP,443:30200/TCP 10m

service/ingress-nginx-controller-admission ClusterIP 10.110.44.63 <none> 443/TCP 12m

NAME READY STATUS RESTARTS AGE

pod/h-deploy-7d9ff6f9bc-b6fld 1/1 Running 0 36s

pod/h-deploy-7d9ff6f9bc-bk922 1/1 Running 0 36s

pod/ingress-nginx-admission-create-bkd5c 0/1 Completed 0 12m

pod/ingress-nginx-admission-patch-hkd4s 0/1 Completed 2 12m

pod/ingress-nginx-controller-64f79ddbcc-qdf2p 1/1 Running 0 12m

<Copy the YAML file and edit it to create the ip service>

root@master:~/in# cp h-deploy.yml ip-deploy.yml

root@master:~/in# vi ip-deploy.yml

*****

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ip-deploy
  namespace: ingress-nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: ipnginx
  template:
    metadata:
      labels:
        app: ipnginx
    spec:
      containers:
      - name: ipnginx
        image: 192.168.0.195:5000/ipnginx
---
apiVersion: v1
kind: Service
metadata:
  name: ip-svc
  namespace: ingress-nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: ipnginx

*****

root@master:~/in# kubectl apply -f ip-deploy.yml

deployment.apps/ip-deploy created

service/ip-svc created

root@master:~/in# kubectl get svc,pod -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/h-svc ClusterIP 10.107.164.226 <none> 80/TCP 4m47s

service/ingress-nginx-controller NodePort 10.96.227.85 <none> 80:30100/TCP,443:30200/TCP 14m

service/ingress-nginx-controller-admission ClusterIP 10.110.44.63 <none> 443/TCP 16m

service/ip-svc ClusterIP 10.111.124.215 <none> 80/TCP 13s

NAME READY STATUS RESTARTS AGE

pod/h-deploy-7d9ff6f9bc-b6fld 1/1 Running 0 4m47s

pod/h-deploy-7d9ff6f9bc-bk922 1/1 Running 0 4m47s

pod/ingress-nginx-admission-create-bkd5c 0/1 Completed 0 16m

pod/ingress-nginx-admission-patch-hkd4s 0/1 Completed 2 16m

pod/ingress-nginx-controller-64f79ddbcc-qdf2p 1/1 Running 0 16m

pod/ip-deploy-bc5f4ccc8-cdzwm 1/1 Running 0 13s

pod/ip-deploy-bc5f4ccc8-f2nfl 1/1 Running 0 13s

root@master:~/in# curl 10.111.124.215

request_method : GET | ip_dest: 10.10.235.140

root@master:~/in# curl 10.111.124.215

request_method : GET | ip_dest: 10.10.189.124

root@master:~/in# curl 10.111.124.215

root@master:~/in# curl 10.107.164.226

h-deploy-7d9ff6f9bc-bk922

root@master:~/in# curl 10.107.164.226

h-deploy-7d9ff6f9bc-b6fld

<Steps 1 and 2 done>

root@master:~/in# vi ingress.yml

*****

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress
  namespace: ingress-nginx
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: rapa.com
    http:
      paths:
      - path: /h
        pathType: Prefix
        backend:
          service:
            name: h-svc
            port:
              number: 80
      - path: /ip
        pathType: Prefix
        backend:
          service:
            name: ip-svc
            port:
              number: 80

*****

root@master:~/in# kubectl apply -f ingress.yml

ingress.networking.k8s.io/ingress created

<What matters is that ADDRESS gets populated. If it stays empty, the likely cause is that the annotation naming the ingress controller class is missing.>

root@master:~/in# kubectl get ingress -n ingress-nginx

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress <none> rapa.com 211.183.3.120 80 3m17s
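The CLASS column above shows <none> because the Ingress selects its controller through the kubernetes.io/ingress.class annotation. A minimal sketch of the alternative, assuming the IngressClass named nginx that deploy.yaml created, is to set spec.ingressClassName, the field that supersedes the annotation in networking.k8s.io/v1. Only one path is shown to keep it short.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress
  namespace: ingress-nginx
spec:
  ingressClassName: nginx   # assumes the IngressClass "nginx" from deploy.yaml
  rules:
  - host: rapa.com
    http:
      paths:
      - path: /h
        pathType: Prefix
        backend:
          service:
            name: h-svc
            port:
              number: 80
```

With this form, kubectl get ingress would be expected to list nginx in the CLASS column instead of <none>.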

root@master:~/in# vi /etc/hosts

<Add the third line>

*****

127.0.0.1 localhost

127.0.1.1 ubun_temp

211.183.3.110 rapa.com

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

*****

root@master:~/in# curl rapa.com:30100/ip

request_method : GET | ip_dest: 10.10.235.140

root@master:~/in# curl rapa.com:30100/ip

request_method : GET | ip_dest: 10.10.189.124

root@master:~/in# curl rapa.com:30100/h

h-deploy-7d9ff6f9bc-b6fld

root@master:~/in# curl rapa.com:30100/h

h-deploy-7d9ff6f9bc-bk922

Exercise

curl kakao.com:30300/ipnginx

should print the Pod's IP!

curl kakao.com:30300/nginx

should return the default nginx page!

<vi ip-kakao.yml>

*****

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kakao-ip-deploy
  namespace: ingress-nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: kakao-ip
  template:
    metadata:
      labels:
        app: kakao-ip
    spec:
      containers:
      - name: kakao-ip
        image: 192.168.0.195:5000/ipnginx
---
apiVersion: v1
kind: Service
metadata:
  name: kakao-ip-svc
  namespace: ingress-nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: kakao-ip

*****

root@master:~/in# kubectl apply -f ip-kakao.yml

deployment.apps/kakao-ip-deploy created

service/kakao-ip-svc created

root@master:~/in# kubectl get svc,pod -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/h-svc ClusterIP 10.107.164.226 <none> 80/TCP 51m

service/ingress-nginx-controller NodePort 10.96.227.85 <none> 80:30100/TCP,443:30200/TCP 60m

service/ingress-nginx-controller-admission ClusterIP 10.110.44.63 <none> 443/TCP 62m

service/ip-svc ClusterIP 10.111.124.215 <none> 80/TCP 46m

service/kakao-ip-svc ClusterIP 10.111.12.42 <none> 80/TCP 7s

NAME READY STATUS RESTARTS AGE

pod/h-deploy-7d9ff6f9bc-b6fld 1/1 Running 0 51m

pod/h-deploy-7d9ff6f9bc-bk922 1/1 Running 0 51m

pod/ingress-nginx-admission-create-bkd5c 0/1 Completed 0 62m

pod/ingress-nginx-admission-patch-hkd4s 0/1 Completed 2 62m

pod/ingress-nginx-controller-64f79ddbcc-qdf2p 1/1 Running 0 62m

pod/ip-deploy-bc5f4ccc8-cdzwm 1/1 Running 0 46m

pod/ip-deploy-bc5f4ccc8-f2nfl 1/1 Running 0 46m

pod/kakao-ip-deploy-9f4bd4c8-bqgf2 1/1 Running 0 7s

pod/kakao-ip-deploy-9f4bd4c8-l5mcd 1/1 Running 0 7s

root@master:~/in# cp ip-kakao.yml nginx-kakao.yml

root@master:~/in# vi nginx-kakao.yml

*****

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kakao-nginx-deploy
  namespace: ingress-nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: kakao-nginx
  template:
    metadata:
      labels:
        app: kakao-nginx
    spec:
      containers:
      - name: kakao-nginx
        image: 192.168.0.195:5000/nginx
---
apiVersion: v1
kind: Service
metadata:
  name: kakao-nginx-svc
  namespace: ingress-nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: kakao-nginx

*****

root@master:~/in# kubectl apply -f nginx-kakao.yml

deployment.apps/kakao-nginx-deploy created

service/kakao-nginx-svc created

root@master:~/in# kubectl get svc,pod -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/h-svc ClusterIP 10.107.164.226 <none> 80/TCP 52m

service/ingress-nginx-controller NodePort 10.96.227.85 <none> 80:30100/TCP,443:30200/TCP 62m

service/ingress-nginx-controller-admission ClusterIP 10.110.44.63 <none> 443/TCP 64m

service/ip-svc ClusterIP 10.111.124.215 <none> 80/TCP 48m

service/kakao-ip-svc ClusterIP 10.111.12.42 <none> 80/TCP 106s

service/kakao-nginx-svc ClusterIP 10.110.127.205 <none> 80/TCP 5s

NAME READY STATUS RESTARTS AGE

pod/h-deploy-7d9ff6f9bc-b6fld 1/1 Running 0 52m

pod/h-deploy-7d9ff6f9bc-bk922 1/1 Running 0 52m

pod/ingress-nginx-admission-create-bkd5c 0/1 Completed 0 64m

pod/ingress-nginx-admission-patch-hkd4s 0/1 Completed 2 64m

pod/ingress-nginx-controller-64f79ddbcc-qdf2p 1/1 Running 0 64m

pod/ip-deploy-bc5f4ccc8-cdzwm 1/1 Running 0 48m

pod/ip-deploy-bc5f4ccc8-f2nfl 1/1 Running 0 48m

pod/kakao-ip-deploy-9f4bd4c8-bqgf2 1/1 Running 0 106s

pod/kakao-ip-deploy-9f4bd4c8-l5mcd 1/1 Running 0 106s

pod/kakao-nginx-deploy-fccf59d4f-prffx 1/1 Running 0 5s

pod/kakao-nginx-deploy-fccf59d4f-pzmg9 1/1 Running 0 5s

root@master:~/in# vi ingress.yml

******

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress
  namespace: ingress-nginx
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: rapa.com
    http:
      paths:
      - path: /h
        pathType: Prefix
        backend:
          service:
            name: h-svc
            port:
              number: 80
      - path: /ip
        pathType: Prefix
        backend:
          service:
            name: ip-svc
            port:
              number: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress   # same name and namespace as the Ingress above, so this document overwrites it on apply; use a distinct name to keep both rule sets
  namespace: ingress-nginx
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - host: kakao.com
    http:
      paths:
      - path: /ipnginx
        pathType: Prefix
        backend:
          service:
            name: kakao-ip-svc
            port:
              number: 80
      - path: /nginx
        pathType: Prefix
        backend:
          service:
            name: kakao-nginx-svc
            port:
              number: 80

******

root@master:~/in# kubectl apply -f ingress.yml

ingress.networking.k8s.io/ingress configured
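With rewrite-target: / every request under /nginx is rewritten to /, so any sub-path is dropped before it reaches the backend. A hedged sketch of the capture-group form described in the ingress-nginx rewrite documentation follows, written as a possible replacement for the kakao.com rule above. The Ingress name kakao-rewrite is hypothetical, and only the /nginx path is shown.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kakao-rewrite              # hypothetical name, not from the notes
  namespace: ingress-nginx
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/use-regex: "true"
    nginx.ingress.kubernetes.io/rewrite-target: /$2   # keep whatever follows /nginx
spec:
  rules:
  - host: kakao.com
    http:
      paths:
      - path: /nginx(/|$)(.*)      # $2 captures the remainder of the path
        pathType: ImplementationSpecific
        backend:
          service:
            name: kakao-nginx-svc
            port:
              number: 80
```

Under this rule a request for kakao.com:30100/nginx/index.html would be expected to reach the backend as /index.html, while /nginx alone still maps to /.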

root@master:~/in# curl kakao.com:30100/ipnginx

request_method : GET | ip_dest: 10.10.235.139

root@master:~/in# curl kakao.com:30100/ipnginx

request_method : GET | ip_dest: 10.10.189.125

root@master:~/in# curl kakao.com:30100/nginx

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

root@master:~/in# curl kakao.com:30100/nginx

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>
