1. Applying a TLS certificate on Ingress

<Issue a TLS key and self-signed certificate>

root@master:~/in# openssl req -x509 -newkey rsa:4096 -sha256 -nodes -keyout tls.key -out tls.crt -subj "/CN=kakao.com" -days 365

Generating a RSA private key

...............................................................................................++++

.............................................................................................................++++

writing new private key to 'tls.key'

-----

<Confirm that tls.crt and tls.key were created>

root@master:~/in# ls

deploy.yaml ingress.yml ip-kakao.yml tls.crt

h-deploy.yml ip-deploy.yml nginx-kakao.yml tls.key

root@master:~/in# kubectl create secret tls kakao.com-tls --cert=tls.crt --key=tls.key -n ingress-nginx

secret/kakao.com-tls created
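kubectl create secret tls wraps the two PEM files in a Secret of type `kubernetes.io/tls`, storing them base64-encoded under the keys `tls.crt` and `tls.key`. An Ingress can only use a TLS Secret from its own namespace, so the Secret has to live in ingress-nginx to be picked up by the Ingress below. A minimal Python sketch of the equivalent manifest, assuming you already have the PEM bytes in hand (the helper names are ours, not part of kubectl or any client library):

```python
import base64
import json
from typing import Dict


def tls_secret_manifest(name: str, namespace: str, cert_pem: bytes, key_pem: bytes) -> Dict:
    """Secret equivalent to `kubectl create secret tls NAME --cert=... --key=... -n NAMESPACE`."""

    def b64(data: bytes) -> str:
        # Values under .data must be base64-encoded strings.
        return base64.b64encode(data).decode("ascii")

    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/tls",
        "data": {"tls.crt": b64(cert_pem), "tls.key": b64(key_pem)},
    }


def to_json(manifest: Dict) -> str:
    """Serialize a manifest; kubectl apply -f accepts JSON as well as YAML."""
    return json.dumps(manifest, indent=2)
```

For example, reading tls.crt and tls.key in binary mode and passing them to `tls_secret_manifest("kakao.com-tls", "ingress-nginx", ...)` yields a document you could pipe to `kubectl apply -f -`.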

root@master:~/in# vi ingress.yml

****

---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress
  namespace: ingress-nginx
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  tls:                # modified
  - hosts:
    - kakao.com
    secretName: kakao.com-tls
  rules:
  - host: kakao.com
    http:
      paths:
      - path: /ipnginx
        pathType: Prefix
        backend:
          service:
            name: kakao-ip-svc
            port:
              number: 80
      - path: /nginx
        pathType: Prefix
        backend:
          service:
            name: kakao-nginx-svc
            port:
              number: 80

****
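With pathType: Prefix, the Kubernetes Ingress spec matches the request path element by element (split on `/`), and when several paths match, the longest one wins; that is why /nginx does not capture /ipnginx. A simplified Python model of that rule (our own sketch of the spec'd semantics, not ingress-nginx's code), using the two paths from the kakao.com rule:

```python
from typing import List, Optional, Tuple


def elements(path: str) -> List[str]:
    """Split a URL path into its '/'-separated elements, ignoring empty ones."""
    return [e for e in path.split("/") if e]


def prefix_match(rule_path: str, request_path: str) -> bool:
    """pathType: Prefix -> the rule's elements must be an element-wise prefix of the request."""
    rule = elements(rule_path)
    return elements(request_path)[:len(rule)] == rule


def route(paths: List[Tuple[str, str]], request_path: str) -> Optional[str]:
    """Return the backend Service of the longest matching Prefix path, or None."""
    matches = [(len(elements(p)), svc) for p, svc in paths if prefix_match(p, request_path)]
    return max(matches)[1] if matches else None


# (path, service) pairs copied from the Ingress above.
KAKAO_PATHS = [("/ipnginx", "kakao-ip-svc"), ("/nginx", "kakao-nginx-svc")]
```

One caveat: ingress-nginx documents that once rewrite-target is set for a host, it switches that host's paths to case-insensitive regex locations, so in practice a request such as /nginxfoo may still reach kakao-nginx-svc even though the spec rule above rejects it.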

<The -k option makes curl accept an unverified (self-signed) certificate>

root@master:~/in# curl -k https://kakao.com:30200/nginx

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>
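The same insecure request can be made from Python's standard library, which is handy when scripting checks against a self-signed certificate. A minimal sketch (function names are ours); like curl -k it disables both hostname checking and certificate verification, so use it only for testing:

```python
import ssl
import urllib.request


def insecure_context() -> ssl.SSLContext:
    """SSL context that skips certificate checks, the equivalent of `curl -k`."""
    ctx = ssl.create_default_context()
    # check_hostname must be turned off before verify_mode can be set to CERT_NONE.
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def fetch_insecure(url: str, timeout: float = 5.0) -> str:
    """GET `url` over HTTPS without verifying the server certificate."""
    with urllib.request.urlopen(url, context=insecure_context(), timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

Calling `fetch_insecure("https://kakao.com:30200/nginx")` assumes, as in the transcript, that kakao.com resolves to a cluster node and that 30200 is the ingress controller's HTTPS NodePort.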

2. Canary-Update

Example) We want to canary-update the existing application (plain nginx, n-deploy) to an application that prints its IP (the ipnginx image, deployed below as n-update-deploy).

n-deploy currently runs 3 replicas; with the canary update we also want to add 2 replicas of the new application and check that they work correctly.

Clients must stay able to reach the web page through the exposed NodePort, although the old page and the updated page may, and in fact should, both be served during the rollout.

Approach

Create a separate Deployment for each version, i.e. two Deployment manifests.

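However, both pod templates must carry the shared label that the Service selects on (app: nginx). Each Deployment's matchLabels additionally includes its own version label (stable or canary), so the two Deployments do not claim each other's pods.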

Use a single Service manifest; its selector (app: nginx) covers both versions.

<Create Deployments that differ only in image>

root@master:~/in# vi canary-deploy.yml

*****

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: n-deploy
  namespace: ingress-nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
      version: stable
  template:
    metadata:
      labels:
        app: nginx
        version: stable
    spec:
      containers:
      - name: nginx
        image: 192.168.0.195:5000/nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: n-update-deploy
  namespace: ingress-nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
      version: canary
  template:
    metadata:
      labels:
        app: nginx
        version: canary
    spec:
      containers:
      - name: nginx
        image: 192.168.0.195:5000/ipnginx
---
apiVersion: v1
kind: Service
metadata:
  name: update-svc
  namespace: ingress-nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx        # matches pods from both n-deploy and n-update-deploy

*****
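The canary works because update-svc selects only app: nginx, which both pod templates carry, while each Deployment's selector also pins version. A small Python model of equality-based label selection (labels copied from the manifest above; the helper names are ours) shows the Service ends up with 5 endpoints, 2 of them canary, so assuming requests are spread evenly across endpoints, roughly 2 in 5 should hit the new image:

```python
from typing import Dict, List

Labels = Dict[str, str]


def matches(selector: Labels, labels: Labels) -> bool:
    """Equality-based selector: every key/value in the selector must be on the pod."""
    return all(labels.get(key) == value for key, value in selector.items())


def make_pods(deployment: str, replicas: int, labels: Labels) -> List[Labels]:
    """Stand-in for the pods a Deployment creates from its template labels."""
    return [dict(labels, name=f"{deployment}-{i}") for i in range(replicas)]


PODS = (make_pods("n-deploy", 3, {"app": "nginx", "version": "stable"})
        + make_pods("n-update-deploy", 2, {"app": "nginx", "version": "canary"}))

SERVICE_SELECTOR = {"app": "nginx"}                      # update-svc
STABLE_SELECTOR = {"app": "nginx", "version": "stable"}  # n-deploy
CANARY_SELECTOR = {"app": "nginx", "version": "canary"}  # n-update-deploy


def canary_share(pods: List[Labels]) -> float:
    """Fraction of the Service's endpoints that run the canary version."""
    endpoints = [p for p in pods if matches(SERVICE_SELECTOR, p)]
    canary = [p for p in endpoints if matches(CANARY_SELECTOR, p)]
    return len(canary) / len(endpoints)
```

Shifting traffic further is then a matter of changing replica counts, for example with kubectl scale deployment n-update-deploy --replicas=N -n ingress-nginx, which changes the ratio accordingly.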

<Ingress manifest that attaches the TLS certificate; the host is kakao.com>

root@master:~/in# vi ingress.yml

*****

---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress
  namespace: ingress-nginx
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  tls:
  - hosts:
    - kakao.com
    secretName: kakao.com-tls
  rules:
  - host: kakao.com
    http:
      paths:
      - path: /updatenginx
        pathType: Prefix
        backend:
          service:
            name: update-svc
            port:
              number: 80

*****

Result (successive requests are answered by either the canary or the stable version)

root@master:~/in# curl -k https://kakao.com:30200/updatenginx

request_method : GET | ip_dest: 10.10.235.149

root@master:~/in# curl -k https://kakao.com:30200/updatenginx

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

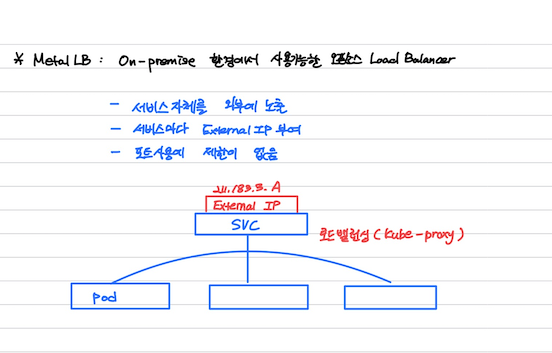

3. MetalLB: an open-source load balancer

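Ultimately, the point is to expose Services to the outside world: MetalLB gives LoadBalancer-type Services an external IP on a bare-metal cluster.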

- Search for "metallb manifest" in Chrome.
- Open the top result, https://metallb.universe.tf/installation/, and download the manifest.

<Download the MetalLB YAML file>

root@master:~/metallb# wget https://raw.githubusercontent.com/metallb/metallb/v0.13.7/config/manifests/metallb-native.yaml

root@master:~/metallb# ls

metallb-native.yaml

****

apiVersion: v1
kind: Namespace
metadata:
  labels:
    pod-security.kubernetes.io/audit: privileged
    pod-security.kubernetes.io/enforce: privileged
    pod-security.kubernetes.io/warn: privileged
  name: metallb-system   # this much is all you need to know for now

****

<Create a test Deployment based on the hnginx image>

root@master:~/metallb# vi h-deploy.yml

****

apiVersion: apps/v1
kind: Deployment
metadata:
  name: h-deploy
  namespace: metallb-system
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hnginx
  template:
    metadata:
      labels:
        app: hnginx
    spec:
      containers:
      - name: hnginx
        image: 192.168.0.195:5000/hnginx
---
apiVersion: v1
kind: Service
metadata:
  name: h-svc
  namespace: metallb-system
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: hnginx

****

<Same structure, so copy the file to create a Deployment based on the ipnginx image>

root@master:~/metallb# cp h-deploy.yml ip-deploy.yml

root@master:~/metallb# vi ip-deploy.yml

****

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ip-deploy
  namespace: metallb-system
spec:
  replicas: 2
  selector:
    matchLabels:
      app: ipnginx
  template:
    metadata:
      labels:
        app: ipnginx
    spec:
      containers:
      - name: ipnginx
        image: 192.168.0.195:5000/ipnginx
---
apiVersion: v1
kind: Service
metadata:
  name: ip-svc
  namespace: metallb-system
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: ipnginx

****

<Apply the Deployments and Services>

root@master:~/metallb# kubectl apply -f h-deploy.yml -f ip-deploy.yml

deployment.apps/h-deploy created

service/h-svc created

deployment.apps/ip-deploy created

service/ip-svc created

4. Configuring MetalLB

Create the ConfigMap

<Create the address-pool ConfigMap (named config) in metal-cm.yml>

root@master:~/metallb# vi metal-cm.yml

****

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: nginx-ip-range
      protocol: layer2
      addresses:
      - 211.183.3.111-211.183.3.119

****
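The addresses entry is an inclusive range, and each LoadBalancer Service normally takes one address from it. A quick Python check of how many addresses this pool provides, using only the standard ipaddress module (the helper name is ours):

```python
import ipaddress
from typing import List


def expand_range(spec: str) -> List[ipaddress.IPv4Address]:
    """Expand an inclusive 'first-last' IPv4 range, as written in the ConfigMap above."""
    first_text, last_text = spec.split("-", 1)
    first = ipaddress.IPv4Address(first_text.strip())
    last = ipaddress.IPv4Address(last_text.strip())
    if last < first:
        raise ValueError(f"range end {last} comes before start {first}")
    return [first + offset for offset in range(int(last) - int(first) + 1)]


POOL = expand_range("211.183.3.111-211.183.3.119")
```

The pool therefore holds nine addresses, enough for nine LoadBalancer Services before new ones stay pending.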

root@master:~/metallb# kubectl apply -f metal-cm.yml

configmap/config created

root@master:~/metallb# kubectl get svc -n metallb-system

NAME              TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
h-svc             ClusterIP   10.110.157.93    <none>        80/TCP    7m49s
ip-svc            ClusterIP   10.108.207.229   <none>        80/TCP    7m49s
webhook-service   ClusterIP   10.104.135.128   <none>        443/TCP   13m

<No EXTERNAL-IP is assigned: the Services are still type ClusterIP, so no load balancer is requested>

<Edit the manifests to set the Service type to LoadBalancer>

root@master:~/metallb# cat h-deploy.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: h-deploy
  namespace: metallb-system
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hnginx
  template:
    metadata:
      labels:
        app: hnginx
    spec:
      containers:
      - name: hnginx
        image: 192.168.0.195:5000/hnginx
---
apiVersion: v1
kind: Service
metadata:
  name: h-svc
  namespace: metallb-system
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: hnginx
  type: LoadBalancer   # added

****************************************

root@master:~/metallb# cat ip-deploy.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ip-deploy
  namespace: metallb-system
spec:
  replicas: 2
  selector:
    matchLabels:
      app: ipnginx
  template:
    metadata:
      labels:
        app: ipnginx
    spec:
      containers:
      - name: ipnginx
        image: 192.168.0.195:5000/ipnginx
---
apiVersion: v1
kind: Service
metadata:
  name: ip-svc
  namespace: metallb-system
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: ipnginx
  type: LoadBalancer   # added

<Apply the Services and Deployments again>

root@master:~/metallb# kubectl apply -f h-deploy.yml -f ip-deploy.yml

deployment.apps/h-deploy unchanged

service/h-svc configured

deployment.apps/ip-deploy unchanged

service/ip-svc configured

root@master:~/metallb# kubectl get svc -n metallb-system

NAME              TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
h-svc             LoadBalancer   10.110.157.93    <pending>     80:32227/TCP   11m
ip-svc            LoadBalancer   10.108.207.229   <pending>     80:32379/TCP   11m
webhook-service   ClusterIP      10.104.135.128   <none>        443/TCP        16m

<The Services are now LoadBalancer type with NodePorts, but EXTERNAL-IP is stuck at pending>

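<The cause is the MetalLB version: metallb-native.yaml is v0.13.7, which no longer reads the config ConfigMap (from v0.13 on, address pools are defined with IPAddressPool and L2Advertisement custom resources), so the pool above was never picked up. Delete the old manifest and recreate metallb.yml from a v0.8.x-based manifest that still uses the ConfigMap>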

root@master:~/metallb# vi metallb.yml

# All of sources From
# - https://raw.githubusercontent.com/metallb/metallb/v0.8.3/manifests/metallb.yaml
# clone from above to sysnet4admin
apiVersion: v1
kind: Namespace
metadata:
  labels:
    app: metallb
  name: metallb-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app: metallb
  name: controller
  namespace: metallb-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app: metallb
  name: speaker
  namespace: metallb-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app: metallb
  name: metallb-system:controller
rules:
- apiGroups:
  - ''
  resources:
  - services
  verbs:
  - get
  - list
  - watch
  - update
- apiGroups:
  - ''
  resources:
  - services/status
  verbs:
  - update
- apiGroups:
  - ''
  resources:
  - events
  verbs:
  - create
  - patch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app: metallb
  name: metallb-system:speaker
rules:
- apiGroups:
  - ''
  resources:
  - services
  - endpoints
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ''
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - extensions
  resourceNames:
  - speaker
  resources:
  - podsecuritypolicies
  verbs:
  - use
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app: metallb
  name: config-watcher
  namespace: metallb-system
rules:
- apiGroups:
  - ''
  resources:
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app: metallb
  name: metallb-system:controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: metallb-system:controller
subjects:
- kind: ServiceAccount
  name: controller
  namespace: metallb-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app: metallb
  name: metallb-system:speaker
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: metallb-system:speaker
subjects:
- kind: ServiceAccount
  name: speaker
  namespace: metallb-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app: metallb
  name: config-watcher
  namespace: metallb-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: config-watcher
subjects:
- kind: ServiceAccount
  name: controller
- kind: ServiceAccount
  name: speaker
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: metallb
    component: speaker
  name: speaker
  namespace: metallb-system
spec:
  selector:
    matchLabels:
      app: metallb
      component: speaker
  template:
    metadata:
      annotations:
        prometheus.io/port: '7472'
        prometheus.io/scrape: 'true'
      labels:
        app: metallb
        component: speaker
    spec:
      containers:
      - args:
        - --port=7472
        - --config=config
        env:
        - name: METALLB_NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: METALLB_HOST
          valueFrom:
            fieldRef:
              fieldPath: status.hostIP
        image: metallb/speaker:v0.8.2
        imagePullPolicy: IfNotPresent
        name: speaker
        ports:
        - containerPort: 7472
          name: monitoring
        resources:
          limits:
            cpu: 100m
            memory: 100Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_ADMIN
            - NET_RAW
            - SYS_ADMIN
            drop:
            - ALL
          readOnlyRootFilesystem: true
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/os: linux
      serviceAccountName: speaker
      terminationGracePeriodSeconds: 0
      tolerations:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: metallb
    component: controller
  name: controller
  namespace: metallb-system
spec:
  revisionHistoryLimit: 3
  selector:
    matchLabels:
      app: metallb
      component: controller
  template:
    metadata:
      annotations:
        prometheus.io/port: '7472'
        prometheus.io/scrape: 'true'
      labels:
        app: metallb
        component: controller
    spec:
      containers:
      - args:
        - --port=7472
        - --config=config
        image: metallb/controller:v0.8.2
        imagePullPolicy: IfNotPresent
        name: controller
        ports:
        - containerPort: 7472
          name: monitoring
        resources:
          limits:
            cpu: 100m
            memory: 100Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            drop:
            - all
          readOnlyRootFilesystem: true
      nodeSelector:
        beta.kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 65534
      serviceAccountName: controller
      terminationGracePeriodSeconds: 0

<Apply the recreated metallb.yml>

root@master:~/metallb# kubectl apply -f metallb.yml

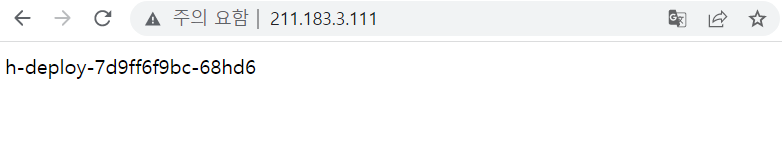

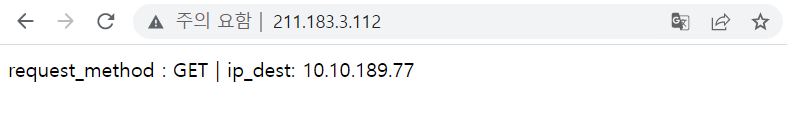

<EXTERNAL-IP addresses are now assigned; to confirm the Services are exposed externally, open them in Chrome>

root@master:~/metallb# kubectl get svc -n metallb-system

NAME              TYPE           CLUSTER-IP       EXTERNAL-IP
h-svc             LoadBalancer   10.103.181.197   211.183.3.111
ip-svc            LoadBalancer   10.96.187.184    211.183.3.112
webhook-service   ClusterIP      10.110.228.234   <none>
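If you would rather keep the v0.13.7 metallb-native.yaml install instead of the v0.8.x manifest used above, the same pool has to be declared with MetalLB's custom resources rather than the config ConfigMap. A minimal Python sketch that emits an IPAddressPool and an L2Advertisement for the same range as JSON, which kubectl apply -f accepts. The name nginx-ip-range is reused from the ConfigMap, and the field names follow the metallb.io/v1beta1 API as we understand it, so check them against the MetalLB documentation for your version:

```python
import json
from typing import Dict, List


def metallb_l2_pool(name: str, addresses: List[str],
                    namespace: str = "metallb-system") -> List[Dict]:
    """IPAddressPool + L2Advertisement pair (MetalLB v0.13+ CRD-style config)."""
    pool = {
        "apiVersion": "metallb.io/v1beta1",
        "kind": "IPAddressPool",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"addresses": list(addresses)},
    }
    advert = {
        "apiVersion": "metallb.io/v1beta1",
        "kind": "L2Advertisement",
        "metadata": {"name": name, "namespace": namespace},
        # Announce only this pool in layer-2 mode (the old "protocol: layer2").
        "spec": {"ipAddressPools": [name]},
    }
    return [pool, advert]


def as_list_json(manifests: List[Dict]) -> str:
    """Wrap manifests in a v1 List so one `kubectl apply -f` can read them."""
    return json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2)
```

Printing `as_list_json(metallb_l2_pool("nginx-ip-range", ["211.183.3.111-211.183.3.119"]))` gives a file you could apply in place of metal-cm.yml on a v0.13 install.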


5. Creating ConfigMaps

Rather than writing environment variables into each object, you can put them in a ConfigMap and inject them into containers.

<Create the test1-cm and test2-cm ConfigMaps>

root@master:~/metallb# kubectl create configmap test1-cm --from-literal INSTANCE=CENTOS7

configmap/test1-cm created

root@master:~/metallb# kubectl create configmap test2-cm --from-literal NAME=JOO --from-literal ZONE=AP-NORTHEAST-2a

configmap/test2-cm created
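Each --from-literal value is split at the first = into a key and a value, so a value may itself contain =, and repeating a key is rejected. A hedged Python sketch of how the flags above turn into the ConfigMap's data (our own helper; the key check uses the documented ConfigMap key alphabet of letters, digits, '-', '_' and '.', while kubectl applies a few more rules such as a length limit):

```python
import re
from typing import Dict, List

CONFIGMAP_KEY = re.compile(r"[-._a-zA-Z0-9]+")


def configmap_data(literals: List[str]) -> Dict[str, str]:
    """Turn key=value literals into a ConfigMap data dict."""
    data: Dict[str, str] = {}
    for literal in literals:
        key, sep, value = literal.partition("=")  # split at the first '=' only
        if not sep or not key:
            raise ValueError(f"invalid literal {literal!r}, expected key=value")
        if not CONFIGMAP_KEY.fullmatch(key):
            raise ValueError(f"invalid ConfigMap key {key!r}")
        if key in data:
            raise ValueError(f"duplicate key {key!r}")
        data[key] = value
    return data
```

So configmap_data(["NAME=JOO", "ZONE=AP-NORTHEAST-2a"]) mirrors the Data section that kubectl describe cm test2-cm prints below.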

<Check the created ConfigMaps>

root@master:~/metallb# kubectl get cm

NAME               DATA   AGE
kube-root-ca.crt   1      3d1h
test1-cm           1      102s
test2-cm           2      7s

root@master:~/metallb# kubectl describe cm test1-cm

Name: test1-cm

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

INSTANCE:

----

CENTOS7

BinaryData

====

Events: <none>

root@master:~/metallb# kubectl describe cm test2-cm

Name: test2-cm

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

NAME:

----

JOO

ZONE:

----

AP-NORTHEAST-2a

BinaryData

====

Events: <none>

<Create a simple Pod to test it>

root@master:~/metallb# vi pod-cm.yml

*****

apiVersion: v1
kind: Pod
metadata:
  name: env-test
spec:
  containers:
  - name: pod-cm
    image: 192.168.0.195:5000/nginx
    envFrom:
    - configMapRef:
        name: test1-cm
    - configMapRef:
        name: test2-cm

*****

<Apply the Pod whose environment variables come from the ConfigMaps>

root@master:~/metallb# kubectl apply -f pod-cm.yml

pod/env-test created

root@master:~/metallb# kubectl exec env-test -- env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

HOSTNAME=env-test

INSTANCE=CENTOS7

ZONE=AP-NORTHEAST-2a

NAME=JOO

KUBERNETES_SERVICE_PORT=443

KUBERNETES_PORT_443_TCP_PROTO=tcp

NP_SVC_SERVICE_HOST=10.110.210.139

NP_SVC_PORT=tcp://10.110.210.139:80

KUBERNETES_PORT=tcp://10.96.0.1:443

KUBERNETES_PORT_443_TCP_PORT=443

NP_SVC_SERVICE_PORT=80

KUBERNETES_SERVICE_HOST=10.96.0.1

NP_SVC_PORT_80_TCP=tcp://10.110.210.139:80

NP_SVC_PORT_80_TCP_PORT=80

NP_SVC_PORT_80_TCP_ADDR=10.110.210.139

NP_SVC_PORT_80_TCP_PROTO=tcp

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

NP_SVC_SERVICE_PORT_HTTP=80

NGINX_VERSION=1.23.3

NJS_VERSION=0.7.9

PKG_RELEASE=1~bullseye

HOME=/root
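envFrom copies every key of each referenced ConfigMap into the container environment, which is why INSTANCE, NAME and ZONE all show up above. The Kubernetes API documentation states that when a key appears in several envFrom sources the last source wins, and that an explicit env entry with the same name overrides them all. A small Python model of that documented merge order (a sketch, not kubelet code):

```python
from typing import Dict, List


def container_env(env_from: List[Dict[str, str]], env: Dict[str, str]) -> Dict[str, str]:
    """Merge envFrom sources in order (later sources win), then apply explicit env entries."""
    merged: Dict[str, str] = {}
    for source in env_from:
        merged.update(source)
    merged.update(env)
    return merged


TEST1_CM = {"INSTANCE": "CENTOS7"}
TEST2_CM = {"NAME": "JOO", "ZONE": "AP-NORTHEAST-2a"}
```

Note that these variables are read when the container starts, so editing test1-cm later does not change the env output of the running Pod until it is recreated.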

Practice problems

You may use the private registry.

Tag your images appropriately so they do not collide with anyone else's.

You may also set up your own private registry.

Use 192.168.0.195:5000/nginx as the nginx base image.

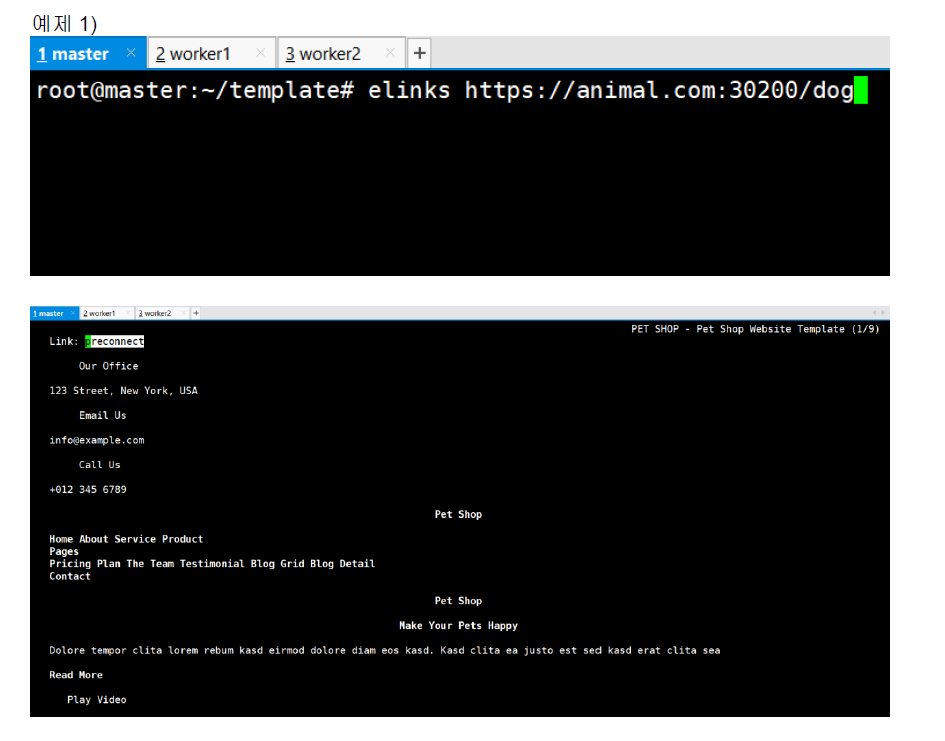
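Since several people push to the same registry, the repository name and tag are what keep images apart. Docker tags may contain letters, digits, '_', '.' and '-', may not start with '.' or '-', and are at most 128 characters long. A small Python helper that builds and checks a reference such as the 192.168.0.195:5000/dogtemplate:euijoo used below (the helper is ours, and its repository-name check is a simplified assumption, not Docker's full reference grammar):

```python
import re

# Tag rule from the Docker docs: [A-Za-z0-9_] first, then up to 127 of [A-Za-z0-9_.-].
TAG = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}")
# Simplified repository rule: lowercase alphanumerics joined by single '.', '_' or '-'.
REPOSITORY = re.compile(r"[a-z0-9]+(?:[._-][a-z0-9]+)*")


def image_ref(registry: str, repository: str, tag: str) -> str:
    """Return 'registry/repository:tag' after validating repository and tag."""
    if not REPOSITORY.fullmatch(repository):
        raise ValueError(f"invalid repository name {repository!r}")
    if not TAG.fullmatch(tag):
        raise ValueError(f"invalid tag {tag!r}")
    return f"{registry}/{repository}:{tag}"
```

Using a personal tag (here euijoo) per student keeps pushes to the shared registry from overwriting each other.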

Exercise 1)

https://www.free-css.com/template-categories/animals-or-pets offers a variety of animal templates.

Build animal.com with at least two categories.

e.g. animal.com/cat, animal.com/dog

For submission, either install the elinks CLI browser (apt-get -y install elinks), open the site and take a screenshot, or check it with curl.

Solution

<Set up the working directory>

root@master:~# mkdir docker

root@master:~# cd docker/

<Download a simple template>

root@master:~/docker# apt-get install -y wget

root@master:~/docker# wget https://www.free-css.com/assets/files/free-css-templates/download/page284/pet-shop.zip

root@master:~/docker# ls

pet-shop.zip

root@master:~/docker# unzip pet-shop.zip

root@master:~/docker# ls

pet-shop-website-template pet-shop.zip

root@master:~/docker# rm -rf pet-shop.zip

root@master:~/docker# mv pet-shop-website-template/ dog

root@master:~/docker# ls

dog dog-Dockerfile index.html

<Create a simple Dockerfile to build the image>

root@master:~/docker# vi dog-Dockerfile

*****

FROM 192.168.0.195:5000/nginx

COPY ./dog /usr/share/nginx/html/

WORKDIR /usr/share/nginx/html

*****

root@master:~/docker# apt-get install -y nginx

root@master:~/docker# mv dog-Dockerfile Dockerfile

root@master:~/docker# ls

Dockerfile dog index.html

root@master:~/docker# docker build -t dogtemplate:euijoo .

Sending build context to Docker daemon 1.859MB

Step 1/3 : FROM 192.168.0.195:5000/nginx

latest: Pulling from nginx

3f4ca61aafcd: Pull complete

50c68654b16f: Pull complete

3ed295c083ec: Pull complete

40b838968eea: Pull complete

88d3ab68332d: Pull complete

5f63362a3fa3: Pull complete

Digest: sha256:9a821cadb1b13cb782ec66445325045b2213459008a41c72d8d87cde94b33c8c

Status: Downloaded newer image for 192.168.0.195:5000/nginx:latest

---> 1403e55ab369

Step 2/3 : COPY ./dog /usr/share/nginx/html/

---> bf931fac40eb

Step 3/3 : WORKDIR /usr/share/nginx/html

---> Running in e16d07ebf174

Removing intermediate container e16d07ebf174

---> 525610ee782b

Successfully built 525610ee782b

Successfully tagged dogtemplate:euijoo

root@master:~/docker# docker image ls

REPOSITORY                 TAG      IMAGE ID       CREATED         SIZE
dogtemplate                euijoo   525610ee782b   8 seconds ago   144MB
192.168.0.195:5000/nginx   latest   1403e55ab369   3 weeks ago     142MB

<Retag the image so it can be pushed to the private registry>

root@master:~/docker# docker tag dogtemplate:euijoo 192.168.0.195:5000/dogtemplate:euijoo

root@master:~/docker# docker push 192.168.0.195:5000/dogtemplate:euijoo

The push refers to repository [192.168.0.195:5000/dogtemplate]

de62ba0ec91d: Pushed

c72d75f45e5b: Mounted from nginx

9a0ef04f57f5: Mounted from nginx

d13aea24d2cb: Mounted from nginx

2b3eec357807: Mounted from nginx

2dadbc36c170: Mounted from nginx

8a70d251b653: Mounted from nginx

euijoo: digest: sha256:e9539543ee771753da634ece69a7b843172cd22b80fd6bf157acd28b01f66e10 size: 1781

root@master:~# mv docker/ dog

<Run a quick container to check that the new image works>

root@master:~/cat# docker run -dp 7878:80 --name dog_template 192.168.0.195:5000/dogtemplate:euijoo

df55e3994fa8ea634cd082235b39c64d8274aff3ffcaf55f21597d219babb1c4

root@master:~/cat# docker ps

CONTAINER ID   IMAGE                                   COMMAND                  CREATED         STATUS         PORTS                                   NAMES
df55e3994fa8   192.168.0.195:5000/dogtemplate:euijoo   "/docker-entrypoint.…"   3 seconds ago   Up 2 seconds   0.0.0.0:7878->80/tcp, :::7878->80/tcp   dog_template

root@master:~/dog# cd ../

root@master:~# cd cat/

root@master:~/cat# ls

Dockerfile index.html

root@master:~/cat# vi Dockerfile

*****

FROM 192.168.0.195:5000/nginx

COPY ./cat /usr/share/nginx/html/

WORKDIR /usr/share/nginx/html

*****

<Set up the cat template the same way>

root@master:~/cat# cd ../

root@master:~# mv cat/ Cat

root@master:~# cd Cat/

root@master:~/Cat# ls

Dockerfile index.html

root@master:~/Cat# vi Dockerfile

root@master:~/Cat# wget https://www.free-css.com/assets/files/free-css-templates/download/page276/petlover.zip

root@master:~/Cat# unzip petlover.zip

root@master:~/Cat# ls

Dockerfile index.html pet-care-website-template petlover.zip

root@master:~/Cat# rm -rf pet-care-website-template/

root@master:~/Cat# unzip petlover.zip

root@master:~/Cat# ls

Dockerfile index.html pet-care-website-template petlover.zip

root@master:~/Cat# rm -rf petlover.zip

root@master:~/Cat# ls

Dockerfile index.html pet-care-website-template

root@master:~/Cat# mv pet-care-website-template/ cat

root@master:~/Cat# ls

cat Dockerfile index.html

root@master:~/Cat# vi Dockerfile

root@master:~/Cat# docker build -t cattemplate:euijoo .

Sending build context to Docker daemon 2.691MB

Step 1/3 : FROM 192.168.0.195:5000/nginx

---> 1403e55ab369

Step 2/3 : COPY ./cat /usr/share/nginx/html/

---> fc6c0e232c37

Step 3/3 : WORKDIR /usr/share/nginx/html

---> Running in 9dd342cfd7b8

Removing intermediate container 9dd342cfd7b8

---> a9608e10f4b3

Successfully built a9608e10f4b3

Successfully tagged cattemplate:euijoo

root@master:~/Cat# docker run -dp 7879:80 --name cat_template cattemplate:euijoo

79c14b483a29337a72562f2be2af8a2e8ce59124da4c6b006041dd0b6c65ef36

root@master:~/Cat# docker tag cattemplate:euijoo 192.168.0.195:5000/cattemplate:euijoo

root@master:~/Cat# docker push 192.168.0.195:5000/cattemplate:euijoo

The push refers to repository [192.168.0.195:5000/cattemplate]

64d3748a3b16: Pushed

c72d75f45e5b: Mounted from dogtemplate

9a0ef04f57f5: Mounted from dogtemplate

d13aea24d2cb: Mounted from dogtemplate

2b3eec357807: Mounted from dogtemplate

2dadbc36c170: Mounted from dogtemplate

8a70d251b653: Mounted from dogtemplate

euijoo: digest: sha256:9ada2e96edd4798a829e05703168cf5e2ab2d0ece1a5a5e5080cb9922863f1d9 size: 1781

root@master:~/Cat# cd ../

root@master:~# mkdir template

root@master:~# cd template/

root@master:~/template# vi dog-deploy.yml

*****

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dog-deploy
  namespace: metallb-system
spec:
  replicas: 2
  selector:
    matchLabels:
      app: dog-deploy
  template:
    metadata:
      labels:
        app: dog-deploy
    spec:
      containers:
      - name: dog-deploy
        image: 192.168.0.195:5000/dogtemplate:euijoo
---
apiVersion: v1
kind: Service
metadata:
  name: dog-svc
  namespace: metallb-system
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: dog-deploy
  type: LoadBalancer

*****

root@master:~/template# cp dog-deploy.yml cat-deploy.yml

root@master:~/template# vi cat-deploy.yml

*****

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cat-deploy
  namespace: metallb-system
spec:
  replicas: 2
  selector:
    matchLabels:
      app: cat-deploy
  template:
    metadata:
      labels:
        app: cat-deploy
    spec:
      containers:
      - name: cat-deploy
        image: 192.168.0.195:5000/cattemplate:euijoo
---
apiVersion: v1
kind: Service
metadata:
  name: cat-svc
  namespace: metallb-system
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: cat-deploy
  type: LoadBalancer

*****

<Map the hostnames in /etc/hosts>

root@master:~/template# vi /etc/hosts

*****

127.0.0.1 localhost

127.0.1.1 ubun_temp

211.183.3.120 rapa.com

211.183.3.120 kakao.com

211.183.3.120 animal.com

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

*****
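All three names point at the same address, 211.183.3.120, so the ingress controller has to tell them apart by the HTTP Host header (and, for HTTPS, the TLS SNI name), matching it against each rule's host. As a quick sanity check, a hedged Python sketch of how a line-based hosts file maps names to addresses; it is a simplified model (first matching line wins, comments stripped) and ignores nsswitch.conf ordering and resolver details:

```python
from typing import Dict


def parse_hosts(text: str) -> Dict[str, str]:
    """Map each hostname to the address on the first line that lists it."""
    table: Dict[str, str] = {}
    for line in text.splitlines():
        fields = line.split("#", 1)[0].split()  # drop comments, split on whitespace
        if len(fields) < 2:
            continue  # blank or comment-only line
        address, *names = fields
        for name in names:
            table.setdefault(name.lower(), address)  # keep the first occurrence
    return table


# An excerpt of the /etc/hosts file above.
HOSTS = """\
127.0.0.1 localhost
127.0.1.1 ubun_temp
211.183.3.120 rapa.com
211.183.3.120 kakao.com
211.183.3.120 animal.com
# The following lines are desirable for IPv6 capable hosts
::1 ip6-localhost ip6-loopback
"""
```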

<Edit the Ingress file>

root@master:~/in# vi ingress.yml

*****

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress
  namespace: ingress-nginx
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: rapa.com
    http:
      paths:
      - path: /h
        pathType: Prefix
        backend:
          service:
            name: h-svc
            port:
              number: 80
      - path: /ip
        pathType: Prefix
        backend:
          service:
            name: ip-svc
            port:
              number: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  # Same name and namespace as the Ingress above, so applying this file replaces
  # the rapa.com rules with the animal.com ones; use a distinct name (for example
  # animal-ingress) to keep both.
  name: ingress
  namespace: ingress-nginx
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  tls:
  - hosts:
    - animal.com
    secretName: animal.com-tls
  rules:
  - host: animal.com
    http:
      paths:
      - path: /dog
        pathType: Prefix
        backend:
          service:
            name: dog-svc
            port:
              number: 80
      - path: /cat
        pathType: Prefix
        backend:
          service:
            name: cat-svc
            port:
              number: 80

*****

root@master:~/in# kubectl apply -f ingress.yml

root@master:~/template# kubectl apply -f cat-deploy.yml

deployment.apps/cat-deploy created

service/cat-svc created

root@master:~/template# kubectl apply -f dog-deploy.yml

deployment.apps/dog-deploy created

service/dog-svc created

root@master:~/template# kubectl get svc,pod,ingress -n ingress-n

NAME                                         TYPE        CLUSTER
service/cat-svc                              ClusterIP   10.100.
service/dog-svc                              ClusterIP   10.102.
service/h-svc                                ClusterIP   10.107.
service/ingress-nginx-controller             NodePort    10.96.2
service/ingress-nginx-controller-admission   ClusterIP   10.110.
service/ip-svc                               ClusterIP   10.111.
service/kakao-ip-svc                         ClusterIP   10.111.
service/kakao-nginx-svc                      ClusterIP   10.110.
service/update-svc                           ClusterIP   10.111.

NAME                                            READY   STATUS
pod/cat-deploy-7f74b7f6bb-54htp                 1/1     Running
pod/cat-deploy-7f74b7f6bb-5fxjz                 1/1     Running
pod/dog-deploy-8cbfb5fcb-5q7vh                  1/1     Running
pod/dog-deploy-8cbfb5fcb-v9ds2                  1/1     Running
pod/h-deploy-7d9ff6f9bc-b6fld                   1/1     Running
pod/h-deploy-7d9ff6f9bc-bk922                   1/1     Running
pod/ingress-nginx-admission-create-bkd5c        0/1     Complete
pod/ingress-nginx-admission-patch-hkd4s         0/1     Complete
pod/ingress-nginx-controller-64f79ddbcc-qdf2p   1/1     Running
pod/ip-deploy-bc5f4ccc8-cdzwm                   1/1     Running
pod/ip-deploy-bc5f4ccc8-f2nfl                   1/1     Running
pod/kakao-ip-deploy-9f4bd4c8-bqgf2              1/1     Running
pod/kakao-ip-deploy-9f4bd4c8-l5mcd              1/1     Running
pod/kakao-nginx-deploy-fccf59d4f-prffx          1/1     Running
pod/kakao-nginx-deploy-fccf59d4f-pzmg9          1/1     Running
pod/n-deploy-7c6665c4c8-dwrn2                   1/1     Running
pod/n-deploy-7c6665c4c8-pbsx8                   1/1     Running
pod/n-deploy-7c6665c4c8-xjjm2                   1/1     Running
pod/n-update-deploy-64dd9fdc9b-prgns            1/1     Running
pod/n-update-deploy-64dd9fdc9b-qr6l4            1/1     Running

NAME                                CLASS    HOSTS        ADDRES
ingress.networking.k8s.io/ingress   <none>   animal.com   211.1

<Generate a private key and certificate to put TLS on animal.com>

root@master:~/in# openssl req -x509 -newkey rsa:4096 -sha256 -nodes -keyout tls.key -out tls.crt -subj "/CN=animal.com" -days 365

Generating a RSA private key

..........................................++++

............................................................................................................++++

writing new private key to 'tls.key'

-----

root@master:~/in# kubectl create secret tls animal.com-tls --cert=tls.crt --key=tls.key -n ingress-nginx

secret/animal.com-tls created

root@master:~/in# kubectl apply -f ingress.yml

ingress.networking.k8s.io/ingress configured

ingress.networking.k8s.io/ingress configured

Result

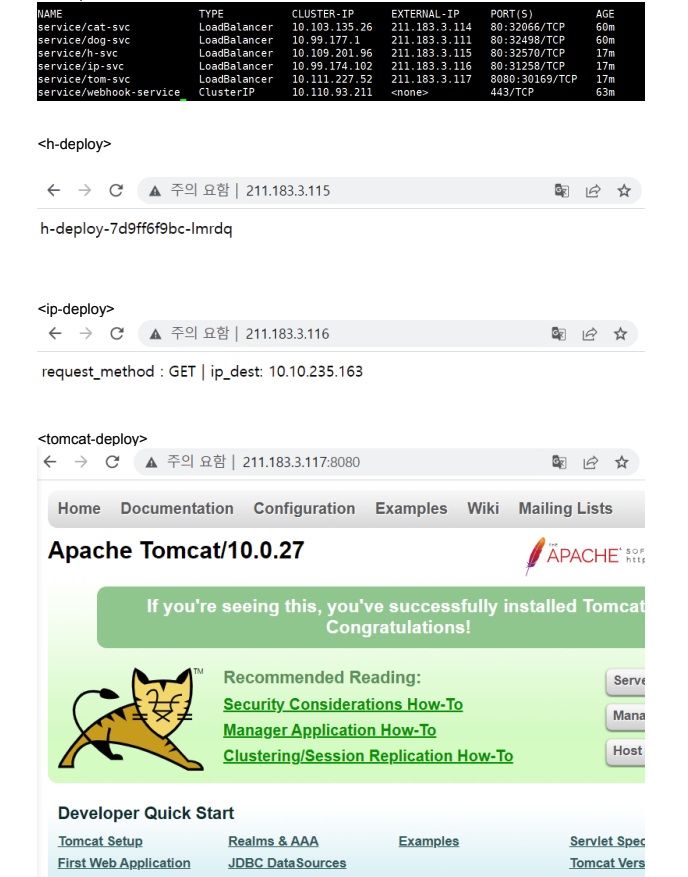

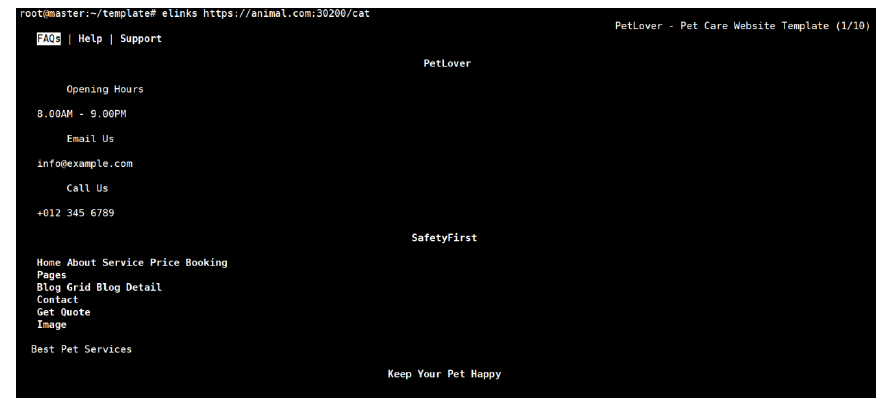

Exercise 2)

Build a setup that produces the screens below.

Planning it out: each of the three images needs its own Deployment, so three Deployment manifests.

Likewise, three Service manifests.

To expose them externally, apply MetalLB.

Most importantly, every Service must get an EXTERNAL-IP.

Tomcat, i.e. the WAS (web application server), must be exposed on port 8080.
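The three Deployment/Service pairs below differ only in name, label, image and port, so one way to keep them consistent is to generate them from a small table. A hedged Python sketch (the app_manifests and render helpers are hypothetical; names, images and ports are copied from the manifests in this exercise) whose JSON output kubectl apply -f can consume:

```python
import json
from typing import Dict, List


def app_manifests(name: str, label: str, image: str, port: int, svc: str,
                  replicas: int = 2, namespace: str = "metallb-system") -> List[Dict]:
    """One Deployment plus a LoadBalancer Service that selects its pods."""
    selector = {"app": label}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": selector},
            "template": {
                "metadata": {"labels": selector},
                "spec": {"containers": [{"name": label, "image": image}]},
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": svc, "namespace": namespace},
        "spec": {
            "type": "LoadBalancer",  # MetalLB assigns the EXTERNAL-IP
            "selector": selector,
            "ports": [{"port": port, "protocol": "TCP", "targetPort": port}],
        },
    }
    return [deployment, service]


APPS = [
    # (deployment, label, image, port, service)
    ("h-deploy", "hnginx", "192.168.0.195:5000/hnginx", 80, "h-svc"),
    ("ip-deploy", "ipnginx", "192.168.0.195:5000/ipnginx", 80, "ip-svc"),
    ("tomcat-deploy", "tomcat-deploy", "192.168.0.195:5000/tom", 8080, "tom-svc"),
]


def render(apps=APPS) -> str:
    """All manifests as one v1 List document."""
    items = [m for app in apps for m in app_manifests(*app)]
    return json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2)
```

Writing render() to a file and running kubectl apply -f on it would create the same six objects as the three YAML files that follow.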

root@master:~# cd metallb/

root@master:~/metallb# ls

h-deploy.yml metal-cm.yml metallb.yml

ip-deploy.yml metallb-native.yaml pod-cm.yml

root@master:~/metallb# vi h-deploy.yml

*****

apiVersion: apps/v1
kind: Deployment
metadata:
  name: h-deploy
  namespace: metallb-system
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hnginx
  template:
    metadata:
      labels:
        app: hnginx
    spec:
      containers:
      - name: hnginx
        image: 192.168.0.195:5000/hnginx
---
apiVersion: v1
kind: Service
metadata:
  name: h-svc
  namespace: metallb-system
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: hnginx
  type: LoadBalancer

*****

root@master:~/metallb# vi ip-deploy.yml

*****

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ip-deploy
  namespace: metallb-system
spec:
  replicas: 2
  selector:
    matchLabels:
      app: ipnginx
  template:
    metadata:
      labels:
        app: ipnginx
    spec:
      containers:
      - name: ipnginx
        image: 192.168.0.195:5000/ipnginx
---
apiVersion: v1
kind: Service
metadata:
  name: ip-svc
  namespace: metallb-system
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: ipnginx
  type: LoadBalancer

*****

root@master:~/metallb# vi tomcat-deploy.yml

*****

apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-deploy
  namespace: metallb-system
spec:
  replicas: 2
  selector:
    matchLabels:
      app: tomcat-deploy
  template:
    metadata:
      labels:
        app: tomcat-deploy
    spec:
      containers:
      - name: tomcat-deploy
        image: 192.168.0.195:5000/tom
---
apiVersion: v1
kind: Service
metadata:
  name: tom-svc
  namespace: metallb-system
spec:
  ports:
  - port: 8080
    protocol: TCP
    targetPort: 8080
  selector:
    app: tomcat-deploy
  type: LoadBalancer

*****

root@master:~/metallb# kubectl apply -f h-deploy.yml -f ip-deploy.yml -f tomcat-deploy.yml

deployment.apps/h-deploy created

service/h-svc created

deployment.apps/ip-deploy created

service/ip-svc created

deployment.apps/tomcat-deploy created

service/tom-svc created

root@master:~/metallb# kubectl get svc -n metallb-system

NAME              TYPE           CLUSTER-IP      EXTERNAL-IP     PORT(S)          AGE
cat-svc           LoadBalancer   10.103.135.26   211.183.3.114   80:32066/TCP     42m
dog-svc           LoadBalancer   10.99.177.1     211.183.3.111   80:32498/TCP     42m
h-svc             LoadBalancer   10.109.201.96   211.183.3.115   80:32570/TCP     10s
ip-svc            LoadBalancer   10.99.174.102   211.183.3.116   80:31258/TCP     10s
tom-svc           LoadBalancer   10.111.227.52   211.183.3.117   8080:30169/TCP   9s
webhook-service   ClusterIP      10.110.93.211   <none>          443/TCP          46m

root@master:~/metallb# kubectl apply -f metal-cm.yml

root@master:~/metallb# kubectl apply -f metallb-native.yaml

root@master:~/metallb# kubectl apply -f metallb.yml

Result