Bidirectional Encoder Representations from Transformers (BERT)

Pre-trained language representation for language understanding

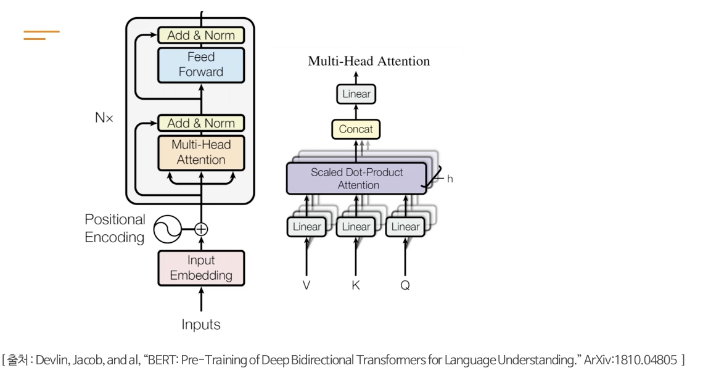

Large-scale architecture

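BASE: L=12 layers, H=768 hidden size, A=12 attention heads, ~110M parameters

LARGE: L=24 layers, H=1024 hidden size, A=16 attention heads, ~340M parameters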

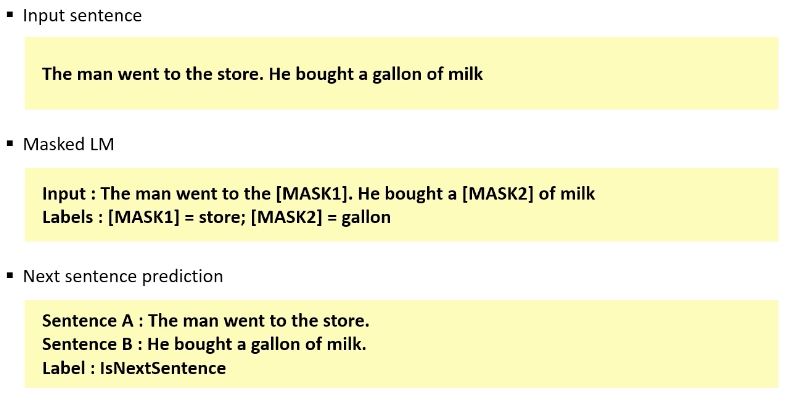

Pre-Training: Masked LM & Next Sentence Prediction

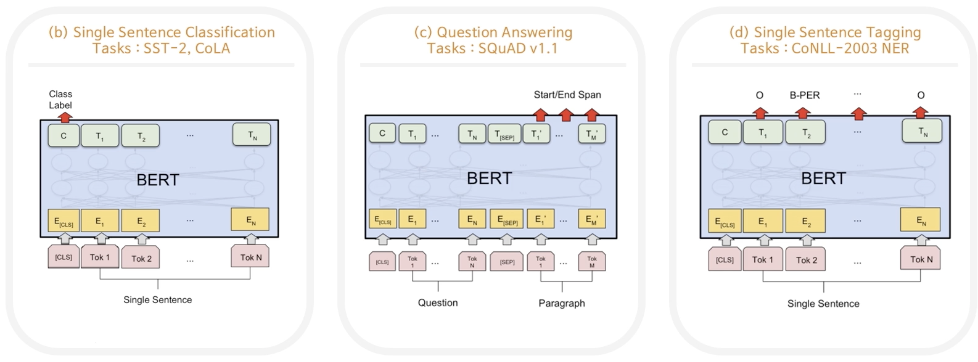
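The masked LM objective can be illustrated with a small masking routine. This is a simplified sketch, not the original preprocessing code. It follows the recipe described in the BERT paper: about 15% of tokens are selected, and of those 80% become `[MASK]`, 10% become a random token, and 10% stay unchanged. The function name `mask_tokens`, the toy vocabulary, and the independent per-token selection are assumptions made for illustration.

```python
import random

MASK = "[MASK]"
SPECIAL = {"[CLS]", "[SEP]"}


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Return (inputs, labels) for masked LM training.

    labels[i] holds the original token at selected positions and None
    elsewhere, so the loss is computed only on the selected positions.
    """
    rng = rng or random.Random()
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if tok in SPECIAL:
            continue  # never mask the special tokens
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = tok
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK               # 80%: replace with [MASK]
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token
    return inputs, labels


# Toy example; the vocabulary and sentence are made up for illustration.
vocab = ["the", "dog", "cat", "sat", "ran", "mat"]
tokens = ["[CLS]", "the", "cat", "sat", "[SEP]", "the", "dog", "ran", "[SEP]"]
inputs, labels = mask_tokens(tokens, vocab, rng=random.Random(0))
print(inputs)
print(labels)
```

Keeping some selected tokens unchanged or randomly replaced means the model cannot rely on `[MASK]` being present, which matters because `[MASK]` never appears at fine-tuning time.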

Task Fine-Tuning

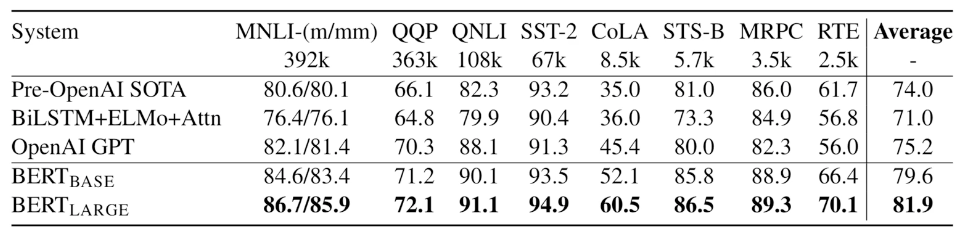
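For sentence-level tasks, fine-tuning typically adds one small classification layer on top of the final `[CLS]` representation and trains it together with the encoder. The sketch below shows only that output layer in plain Python. The encoder is not included, and the function names, toy weights, and two-dimensional `[CLS]` vector are hypothetical values chosen for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(cls_vector, weights, bias):
    """Task head: logits = W . h_cls + b, followed by softmax.

    weights has one row per class; each row has the same length as cls_vector.
    """
    logits = [
        sum(w * h for w, h in zip(row, cls_vector)) + b
        for row, b in zip(weights, bias)
    ]
    return softmax(logits)


# Toy values: a 2-dimensional [CLS] vector and a 2-class head.
cls_vector = [1.0, 0.0]
weights = [[1.0, 0.0], [0.0, 1.0]]
bias = [0.0, 0.0]
print(classify(cls_vector, weights, bias))
```

In a real setup the weight matrix would have shape (number of classes) x H, with H the encoder hidden size, and it would be the only part of the model initialized from scratch.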

GLUE Results