This lecture consists of theory, hands-on practice, and an assignment.

In this lecture, we cover the definition of neural networks and then deep neural networks.

Neural Networks

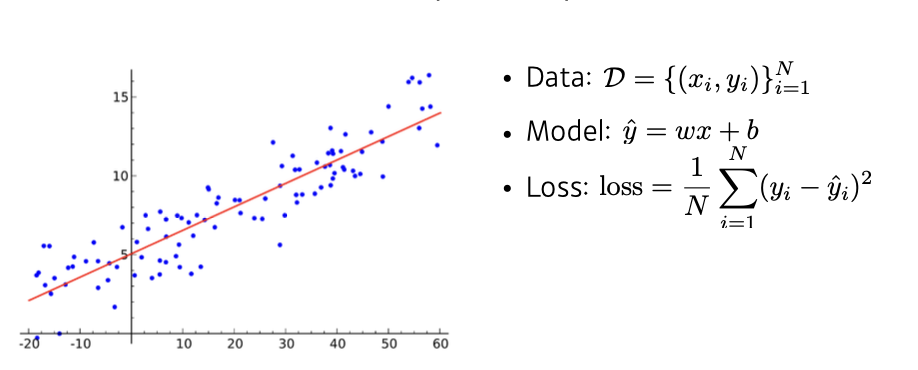

Using a simple linear neural network as an example, we define the data, the model, the loss, and the optimization algorithm.

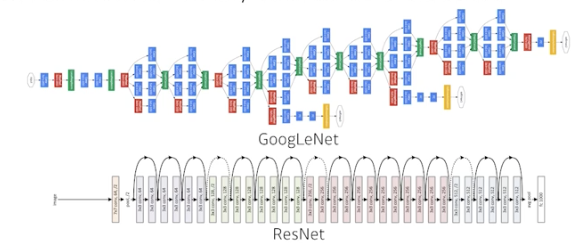

Deep Neural Networks

We learn what deep neural networks are and how deeper networks, such as the multi-layer perceptron, are built.

Neural Networks

Neural networks are computing systems vaguely inspired by the biological neural networks that constitute animal brains

Neural networks are function approximators that stack affine transformations followed by nonlinear transformations.

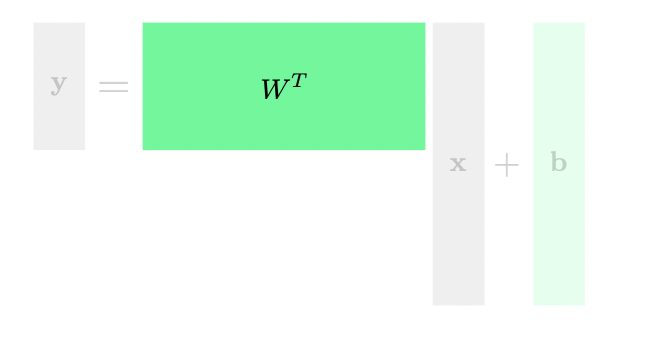

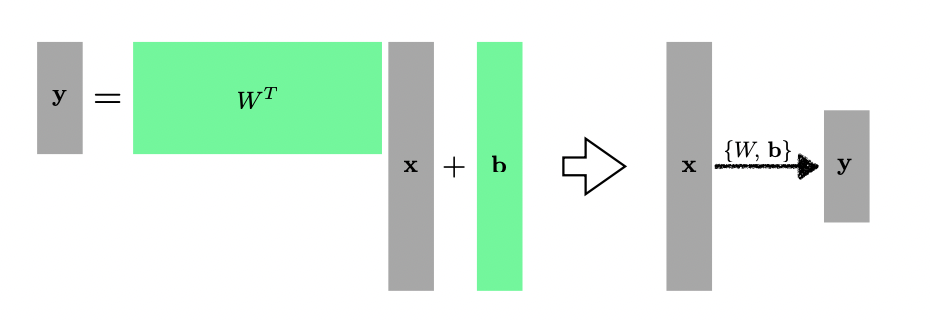
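One way to write this "affine, then nonlinear, repeated" structure compactly is shown below. The notation is assumed here rather than taken from the slides: $\mathbf{W}_l$ and $\mathbf{b}_l$ are the weights and bias of layer $l$, $\rho$ is an elementwise nonlinearity, and there are $L$ layers, with no nonlinearity after the last one.

$$
f(\mathbf{x}) = \mathbf{W}_L\,\rho\Big(\mathbf{W}_{L-1}\,\rho\big(\cdots\rho(\mathbf{W}_1\mathbf{x} + \mathbf{b}_1)\cdots\big) + \mathbf{b}_{L-1}\Big) + \mathbf{b}_L
$$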

Linear Neural Networks

Let's start with the simplest example.

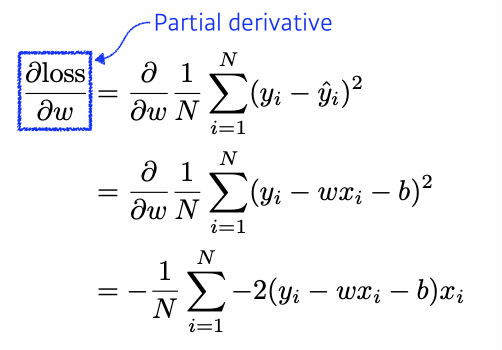

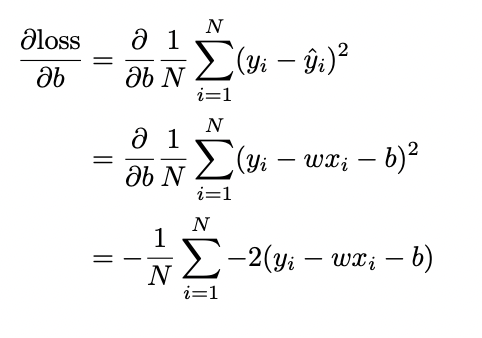
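The setup in the images above is not reproduced in text. The standard form of this one-dimensional example is given below and is assumed here: $N$ data pairs, a linear model with weight $w$ and bias $b$, and the mean squared error as the loss.

$$
\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N}, \qquad \hat{y} = w x + b, \qquad \text{loss} = \frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2
$$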

We compute the partial derivatives with respect to the optimization variables.

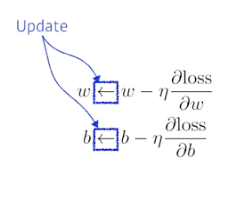

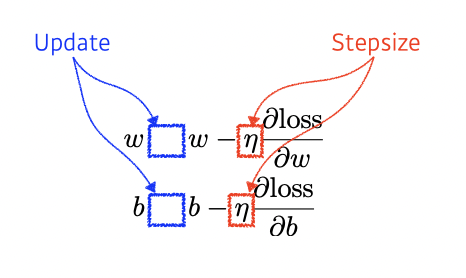
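Assuming the model $\hat{y} = wx + b$ and the mean squared error loss, the chain rule gives both partial derivatives explicitly:

$$
\frac{\partial\,\text{loss}}{\partial w} = \frac{\partial}{\partial w}\,\frac{1}{N}\sum_{i=1}^{N}\left(y_i - w x_i - b\right)^2 = -\frac{2}{N}\sum_{i=1}^{N}\left(y_i - w x_i - b\right)x_i
$$

$$
\frac{\partial\,\text{loss}}{\partial b} = \frac{\partial}{\partial b}\,\frac{1}{N}\sum_{i=1}^{N}\left(y_i - w x_i - b\right)^2 = -\frac{2}{N}\sum_{i=1}^{N}\left(y_i - w x_i - b\right)
$$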

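Then, we iteratively update the optimization variables, moving each one a small step against its partial derivative:

w ← w − η · ∂loss/∂w,   b ← b − η · ∂loss/∂b

-> This is gradient descent, where η is the step size (learning rate).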

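If the step size is too large, the updates overshoot and can diverge. If it is too close to 0, the variables barely change. In either case, training does not proceed properly.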

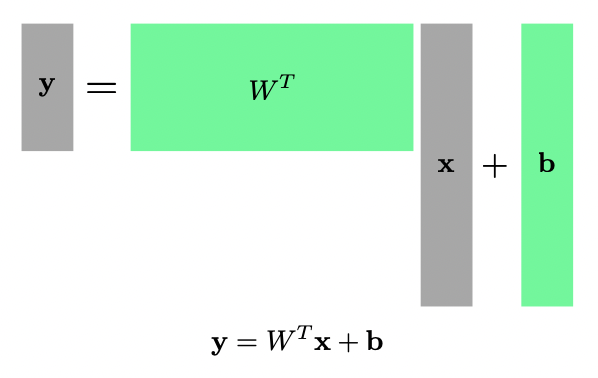
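A minimal sketch of full-batch gradient descent for the linear model ŷ = wx + b with the mean squared error loss, written in plain Python. The noise-free data drawn from y = 2x + 1 and the three step sizes are assumptions chosen only to illustrate the step-size remark. The helper name `fit_linear` is hypothetical.

```python
def fit_linear(xs, ys, lr, steps):
    """Fit y_hat = w*x + b by full-batch gradient descent on the MSE loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # residuals r_i = y_i - y_hat_i
        residuals = [y - (w * x + b) for x, y in zip(xs, ys)]
        # partial derivatives of (1/N) * sum(r_i^2) with respect to w and b
        grad_w = -2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = -2.0 / n * sum(residuals)
        # gradient descent update with step size lr
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Synthetic, noise-free data from y = 2x + 1 (an assumption for illustration)
xs = [i / 10 for i in range(-10, 11)]
ys = [2.0 * x + 1.0 for x in xs]

for lr in (0.1, 1.5, 1e-4):
    w, b = fit_linear(xs, ys, lr=lr, steps=300)
    print(f"lr={lr:g}: w={w:.4g}, b={b:.4g}")
```

On this data, η = 0.1 recovers w ≈ 2 and b ≈ 1. With η = 1.5, b blows up because each update overshoots, and with η = 1e-4, w and b are still far from the target after 300 steps.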

Of course, we can handle multi-dimensional input and output.

One way of interpreting a matrix is to regard it as a mapping between two vector spaces

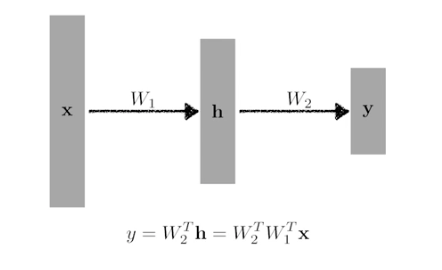

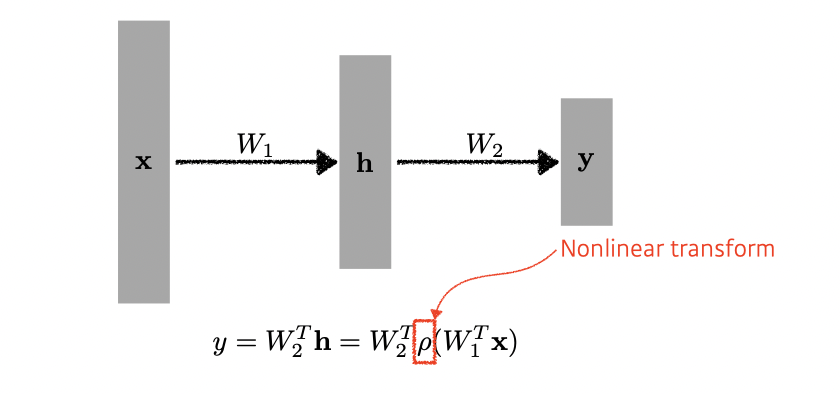

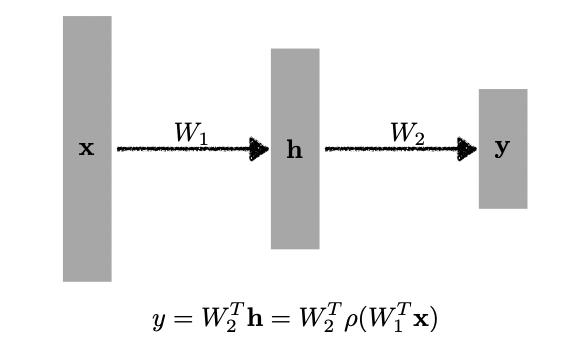
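A small sketch of a matrix acting as a map between vector spaces: an affine map y = Wx + b that sends a 3-dimensional input to a 2-dimensional output. Here W is stored as a list of rows with shape (output dim, input dim). This is an assumed convention, and the slides may write the same model as Wᵀx + b with W stored transposed. The helper `affine` is hypothetical.

```python
def affine(W, x, b):
    """Compute y = W x + b, mapping an n-dim vector x to an m-dim vector y.

    W is a list of m rows, each of length n.
    """
    assert all(len(row) == len(x) for row in W), "W must have shape (m, n)"
    assert len(W) == len(b), "b must have length m"
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]

W = [[1.0, 0.0, 2.0],
     [0.0, 1.0, -1.0]]   # shape (2, 3): maps R^3 -> R^2
b = [0.5, -0.5]
x = [1.0, 2.0, 3.0]
print(affine(W, x, b))
```

Each output coordinate is the dot product of one row of W with x, plus one bias entry. The number of rows sets the output dimension, and the row length must match the input dimension.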

Beyond Linear Neural Networks

What if we stack more?

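Stacking linear layers alone gains nothing, because W₂(W₁x) = (W₂W₁)x is still a single linear map. We need nonlinearity.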

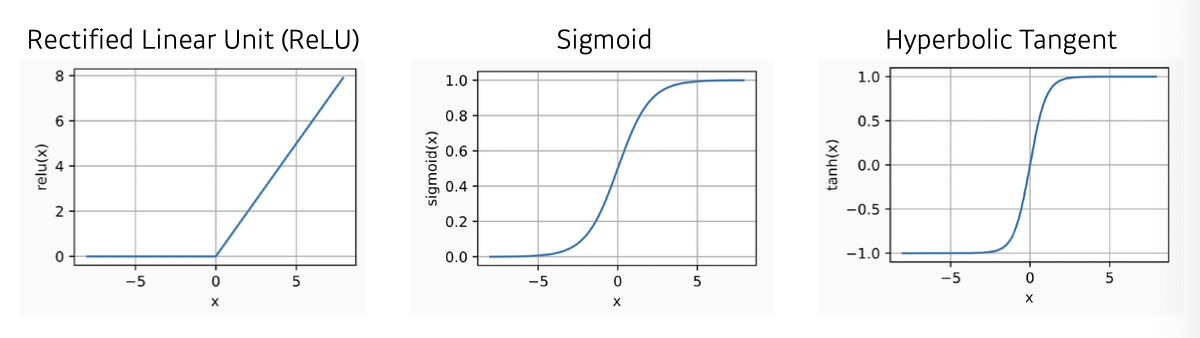
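A quick numerical check of why stacking needs a nonlinearity, using small integer matrices chosen arbitrarily for illustration. Applying W1 and then W2 gives the same result as the single matrix W2·W1. Inserting a ReLU between the two layers breaks that equivalence. The helpers `matmul` and `matvec` are hypothetical.

```python
def matmul(A, B):
    """Multiply matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def matvec(A, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

W1 = [[1, 2], [3, 4], [5, 6]]   # maps R^2 -> R^3
W2 = [[1, 0, -1], [2, 1, 0]]    # maps R^3 -> R^2
x = [1, -1]

two_layers = matvec(W2, matvec(W1, x))   # W2 (W1 x)
one_layer = matvec(matmul(W2, W1), x)    # (W2 W1) x: the same linear map
# With ReLU between the layers, the composition is no longer W2 W1
with_relu = matvec(W2, [max(0, h) for h in matvec(W1, x)])

print(two_layers, one_layer, with_relu)
```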

Activation functions

ReLU - Rectified Linear Unit

Sigmoid

Hyperbolic Tangent
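The three activation functions above can be sketched as scalar functions in plain Python. The sigmoid is split by the sign of x so that `math.exp` never receives a large positive argument and overflows. This is a common numerical-stability choice, not something the slides specify.

```python
import math

def relu(x):
    """ReLU: max(0, x)."""
    return max(0.0, x)

def sigmoid(x):
    """Logistic sigmoid 1 / (1 + e^{-x}), with output in (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)          # safe: x < 0, so z is in (0, 1)
    return z / (1.0 + z)     # algebraically equal to 1 / (1 + e^{-x})

def tanh(x):
    """Hyperbolic tangent (e^x - e^{-x}) / (e^x + e^{-x}), with output in (-1, 1)."""
    return math.tanh(x)

for v in (-2.0, 0.0, 2.0):
    print(v, relu(v), sigmoid(v), tanh(v))
```

The tanh is a rescaled sigmoid, tanh(x) = 2·sigmoid(2x) − 1, which is why both have the same S shape with different output ranges.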

Multi-Layer Perceptron

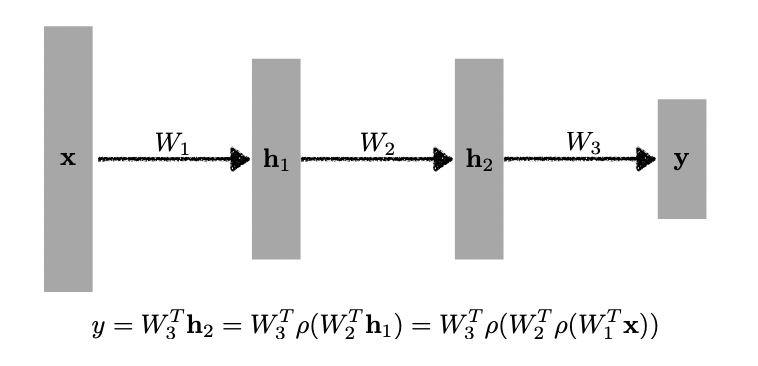

This class of architectures is often called multi-layer perceptrons (MLPs).

Of course, it can go deeper.

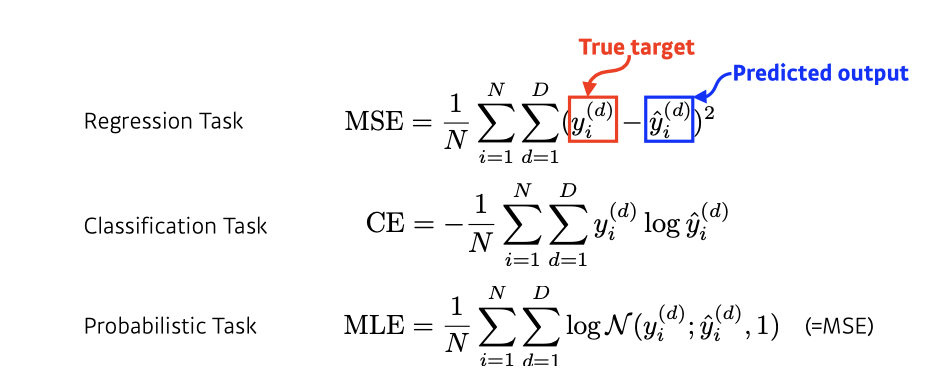
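A minimal forward-pass sketch of an MLP of arbitrary depth in plain Python. It assumes ReLU as the nonlinearity on every hidden layer, no activation on the output layer, zero biases at initialization, and weights drawn uniformly from [-1, 1]. That initialization is a hypothetical choice made only for illustration, and so are the helper names `init_mlp` and `mlp_forward`. Training is not shown.

```python
import random

def relu(v):
    """Elementwise ReLU on a vector."""
    return [max(0.0, a) for a in v]

def affine(W, x, b):
    """y = W x + b with W stored as a list of rows (shape: out x in)."""
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]

def init_mlp(sizes, seed=0):
    """Create (W, b) pairs for layer widths sizes = [d_in, h_1, ..., d_out]."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        W = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        b = [0.0] * n_out
        layers.append((W, b))
    return layers

def mlp_forward(layers, x):
    """Apply affine -> ReLU for each hidden layer; the last layer stays affine."""
    h = x
    for W, b in layers[:-1]:
        h = relu(affine(W, h, b))
    W, b = layers[-1]
    return affine(W, h, b)

layers = init_mlp([3, 8, 8, 2])   # 3 inputs, two hidden layers of width 8, 2 outputs
print(mlp_forward(layers, [0.1, -0.2, 0.3]))
```

Going deeper only means a longer `sizes` list, e.g. `init_mlp([3, 16, 16, 16, 2])`. The forward pass itself does not change.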

What about the loss functions?