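0. Before the Lab

To build an EKS upgrade environment, I ran the hands-on lab from the Amazon EKS Workshop.

The Amazon EKS Workshop is a hands-on learning environment designed to make Amazon EKS (Elastic Kubernetes Service) easier to understand and use.

For this lab, I used a temporary AWS Upgrade Workshop account that I received with the help of 최영락 from the AEWS study group.

Many thanks to 최영락 and the AEWS organizers for providing the lab environment.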

1. K8S Upgrade

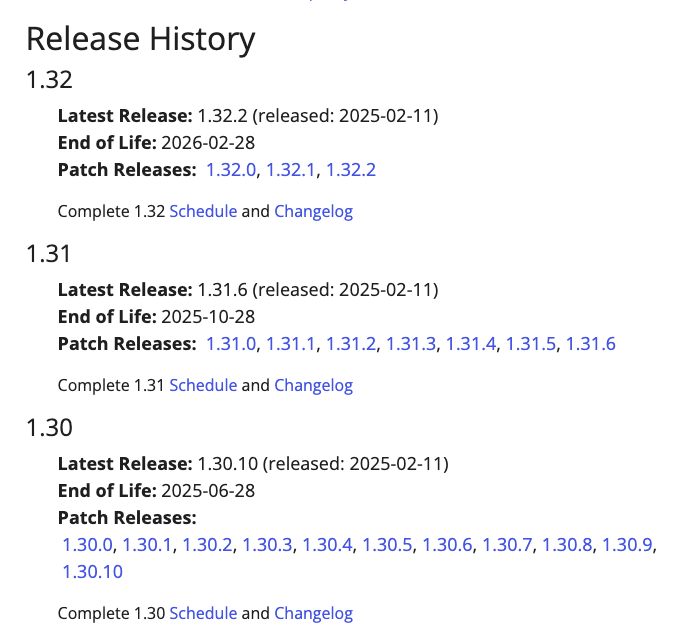

K8S Release

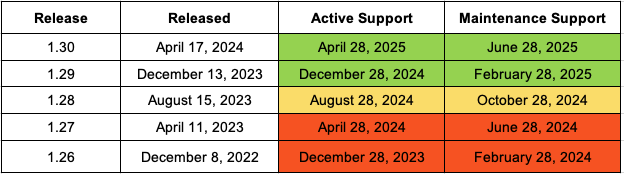

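1) Release cadence

- Regular minor releases: Kubernetes releases a new minor version (e.g., 1.32, 1.31) about three times a year, that is, every 3 to 4 months. This lets new features and improvements land quickly while keeping the overall system stable. At the time of writing, the latest version is 1.32.

- Patch releases: Each minor version receives regular patch releases, usually monthly, with bug fixes and security patches. When an urgent security issue or critical bug is found, additional emergency patches are released.

2) Versioning and support policy

- Version numbering: Kubernetes versions use the x.y.z format, where x is the major version, y the minor version, and z the patch version, following Semantic Versioning.

- Support policy: The three most recent minor versions receive security and bug-fix patches. For example, Kubernetes 1.30 and later releases each get about one year of patch support, after which support ends under the End-of-Life (EOL) policy.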
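The x.y.z numbering and the "three most recent minors" rule can be checked with a few lines of POSIX shell. This is a minimal sketch, not an official tool:

- `split_version` and `in_support_window` are hypothetical helper names.
- The newest release is passed in by hand rather than looked up.
- Both versions are assumed to share the same major number.

```sh
# Split a version such as v1.26.15 or 1.26.15-eks-59bf375 into
# "major minor patch". A missing patch component is reported as 0.
split_version() {
  v=${1#v}                             # drop an optional leading "v"
  major=${v%%.*}                       # text before the first dot
  rest=${v#*.}                         # text after the first dot
  minor=${rest%%.*}
  patch=${rest#*.}
  [ "$patch" = "$rest" ] && patch=0    # "1.32" has no second dot
  patch=${patch%%-*}                   # strip build suffixes like -eks-...
  echo "$major $minor $patch"
}

# Succeed if version $1 is one of the three newest minors, given that $2 is
# the newest release. Both are assumed to share the same major version.
in_support_window() {
  # After this, $1 $2 $3 = candidate and $4 $5 $6 = newest (major minor patch).
  set -- $(split_version "$1") $(split_version "$2")
  [ "$1" = "$4" ] && [ "$2" -le "$5" ] && [ $(($5 - $2)) -lt 3 ]
}
```

For example, `in_support_window 1.30 1.32` succeeds. `in_support_window 1.29 1.32` fails because 1.29 falls outside the three newest minors.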

2. Amazon EKS Upgrades (1.25 → 1.26)

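Checking the lab environment

Checking the IDE

- Check the current user and working directory

whoami
pwd

- Check the exported variables

export

- Check the S3 buckets

aws s3 ls

- Check the environment variables

cat ~/.bashrc

- Check the EKS platform version

aws eks describe-cluster --name $EKS_CLUSTER_NAME | jq

- Check the cluster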

eksctl get cluster

- node group 확인

eksctl get nodegroup --cluster $CLUSTER_NAME

- fargate 확인

eksctl get fargateprofile --cluster $CLUSTER_NAME - add-on 확인

eksctl get addon --cluster $CLUSTER_NAME

- capacity type 확인

kubectl get node --label-columns=eks.amazonaws.com/capacityType,node.kubernetes.io/lifecycle,karpenter.sh/capacity-type,eks.amazonaws.com/compute-type

- karpenter 확인

kubectl get node -L eks.amazonaws.com/nodegroup,karpenter.sh/nodepool

- node pools , node claims 확인

kubectl get nodepools kubectl get nodeclaims

- 노드의 AZ 확인

kubectl get node --label-columns=node.kubernetes.io/instance-type,kubernetes.io/arch,kubernetes.io/os,topology.kubernetes.io/zone - crd 확인

kubectl get crd

- helm install list 확인

helm list -A

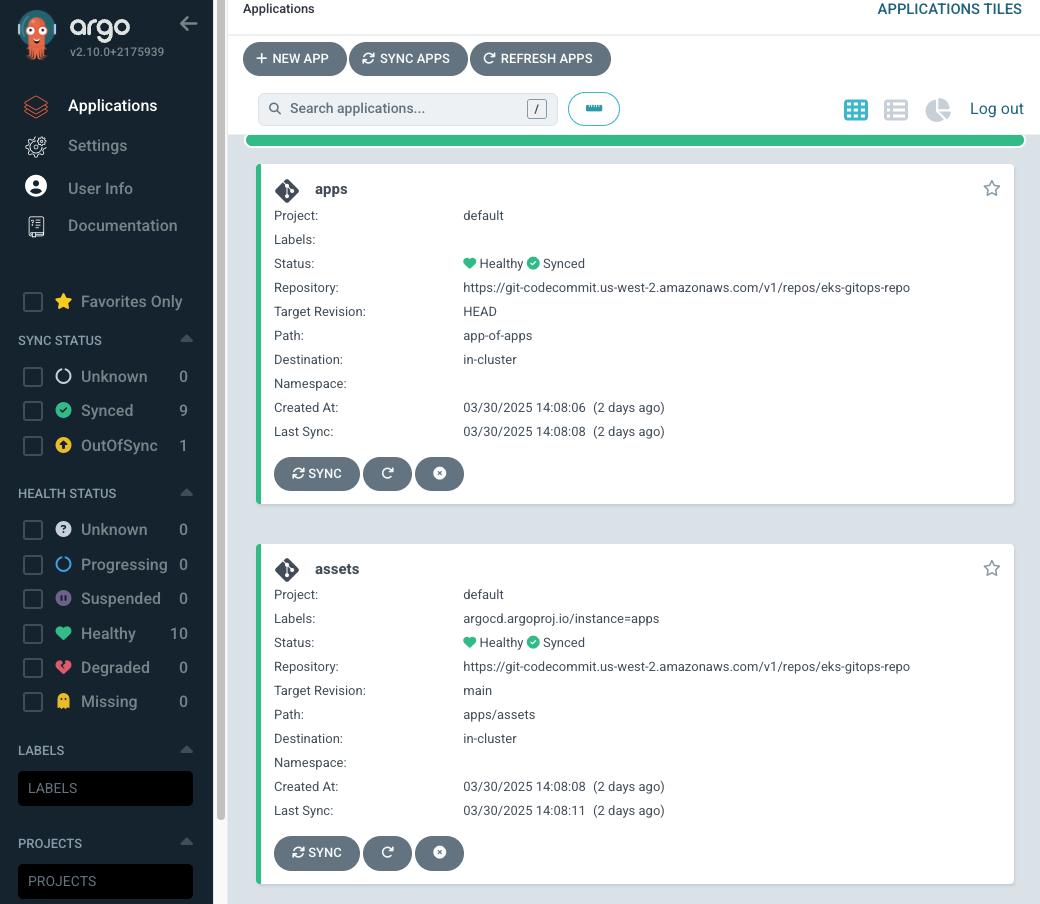

- ArgoCD application 확인

kubectl get applications -n argocd

- 전체 Pod 확인

kubectl get pod -A

- Pod Disruption Budget 조회

kubectl get pdb -A

- argocd svc 확인

kubectl get svc -n argocd argo-cd-argocd-server

- targetgroupbinding 확인

kubectl get targetgroupbindings -n argocd

- node taint 확인

kubectl get nodes -o custom-columns='NODE:.metadata.name,TAINTS:.spec.taints[*].key,VALUES:.spec.taints[*].value,EFFECTS:.spec.taints[*].effect'

- 노드 별 label 확인

kubectl get nodes -o json | jq '.items[] | {name: .metadata.name, labels: .metadata.labels}'

- 모든 StatefulSet 조회

kubectl get sts -A

- StorageClass 조회

kubectl get sc

- 전체 PV, PVC 조회

kubectl get pv,pvc -A

- EKS 클러스터의 액세스 항목 조회

aws eks list-access-entries --cluster-name $CLUSTER_NAME

- IAM, RBAC 조회

eksctl get iamidentitymapping --cluster $CLUSTER_NAME - aws-auth ConfigMap 상세 조회

kubectl describe cm -n kube-system aws-auth

- IRSA 조회

eksctl get iamserviceaccount --cluster $CLUSTER_NAME kubectl describe sa -A | grep role-arn

- EKS API Endpoint 조회

aws eks describe-cluster --name $CLUSTER_NAME | jq -r .cluster.endpoint | cut -d '/' -f 3

실습 목적 추가 설치

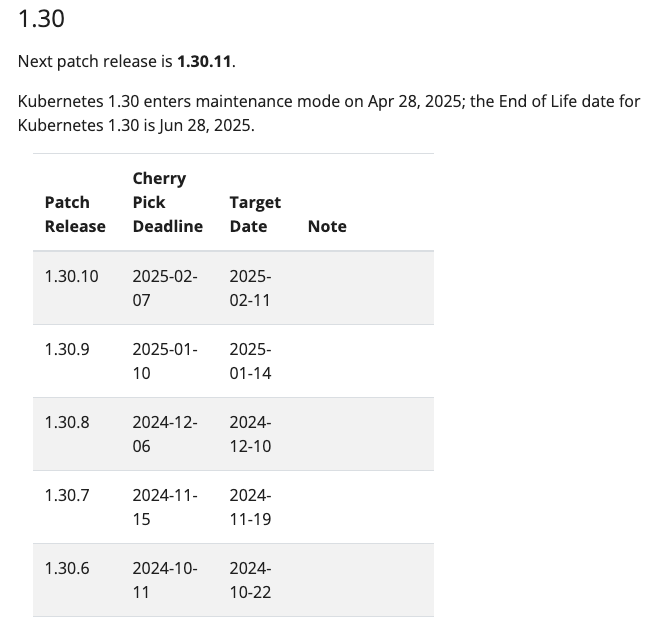

kube-ops-view 설치

helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

helm repo update

helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --namespace kube-system

# Expose kube-ops-view through an internet-facing NLB
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Service
metadata:
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
    service.beta.kubernetes.io/aws-load-balancer-type: external
  labels:
    app.kubernetes.io/instance: kube-ops-view
    app.kubernetes.io/name: kube-ops-view
  name: kube-ops-view-nlb
  namespace: kube-system
spec:
  type: LoadBalancer
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app.kubernetes.io/instance: kube-ops-view
    app.kubernetes.io/name: kube-ops-view
EOF

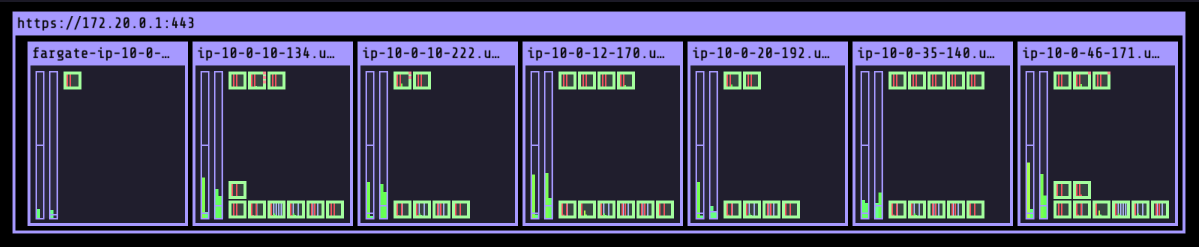

Installing Krew

# Install

(

set -x; cd "$(mktemp -d)" &&

OS="$(uname | tr '[:upper:]' '[:lower:]')" &&

ARCH="$(uname -m | sed -e 's/x86_64/amd64/' -e 's/\(arm\)\(64\)\?.*/\1\2/' -e 's/aarch64$/arm64/')" &&

KREW="krew-${OS}_${ARCH}" &&

curl -fsSLO "https://github.com/kubernetes-sigs/krew/releases/latest/download/${KREW}.tar.gz" &&

tar zxvf "${KREW}.tar.gz" &&

./"${KREW}" install krew

)

# PATH

export PATH="${KREW_ROOT:-$HOME/.krew}/bin:$PATH"

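# Persist the PATH change by adding the same line to ~/.bashrc
echo 'export PATH="${KREW_ROOT:-$HOME/.krew}/bin:$PATH"' >> ~/.bashrc

# Install plugins
kubectl krew install ctx ns df-pv get-all neat stern oomd whoami rbac-tool rolesum
kubectl krew list

# Show PersistentVolume disk usage
kubectl df-pv

# Show the identity kubectl is currently using
kubectl whoami --all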
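The `ARCH=` line in the install snippet maps `uname -m` output to the architecture suffix used in krew's release file names. The sketch below wraps the same `sed` expressions in functions, so the mapping can be checked without downloading anything. `krew_arch` and `krew_asset` are illustrative names and are not part of krew.

```sh
# Same sed expressions as the install snippet above
# (x86_64 -> amd64, aarch64 -> arm64, armv7l -> arm).
krew_arch() {
  echo "$1" | sed -e 's/x86_64/amd64/' \
                  -e 's/\(arm\)\(64\)\?.*/\1\2/' \
                  -e 's/aarch64$/arm64/'
}

# Release asset base name for an OS name and uname -m value,
# e.g. "krew-linux_amd64" for Linux on x86_64.
krew_asset() {
  os=$(echo "$1" | tr '[:upper:]' '[:lower:]')
  echo "krew-${os}_$(krew_arch "$2")"
}
```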

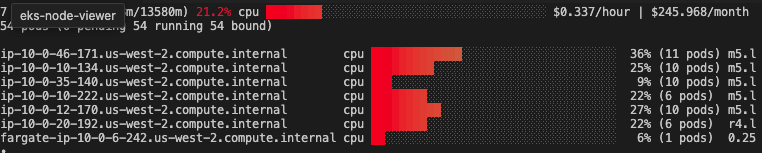

Installing eks-node-viewer

wget -O eks-node-viewer https://github.com/awslabs/eks-node-viewer/releases/download/v0.7.1/eks-node-viewer_Linux_x86_64

chmod +x eks-node-viewer

sudo mv -v eks-node-viewer /usr/local/bin

# Verify the installation

eks-node-viewer

Installing k9s

curl -sS https://webinstall.dev/k9s | bash

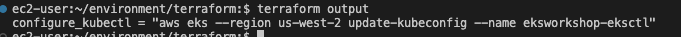

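Checking the Terraform files (used to deploy the lab environment)

- Check the files

ls -lrt terraform/

- Check the S3 bucket

aws s3 ls

- Edit backend_override.tf (replace ${s3-url} with the bucket name shown by aws s3 ls)

terraform {
  backend "s3" {
    bucket = "${s3-url}"
    region = "us-west-2"
    key    = "terraform.tfstate"
  }
}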
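Instead of editing `backend_override.tf` by hand, the file can be generated from the bucket name. This is a minimal sketch under these assumptions:

- The bucket name is copied from the `aws s3 ls` output.
- The region and key stay as shown above.
- `write_backend_override` and `my-tfstate-bucket` are hypothetical names used only for illustration.

```sh
# Write backend_override.tf so Terraform keeps its state in the given S3
# bucket. $1 = bucket name, $2 = target directory (defaults to ./terraform).
write_backend_override() {
  bucket=$1
  dir=${2:-terraform}
  cat > "$dir/backend_override.tf" <<EOF
terraform {
  backend "s3" {
    bucket = "$bucket"
    region = "us-west-2"
    key    = "terraform.tfstate"
  }
}
EOF
}
```

For example, run `write_backend_override my-tfstate-bucket ~/environment/terraform`. After a backend change, Terraform normally requires `terraform init` before `terraform state list` can read the remote state.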

- Verify

terraform state list
terraform output

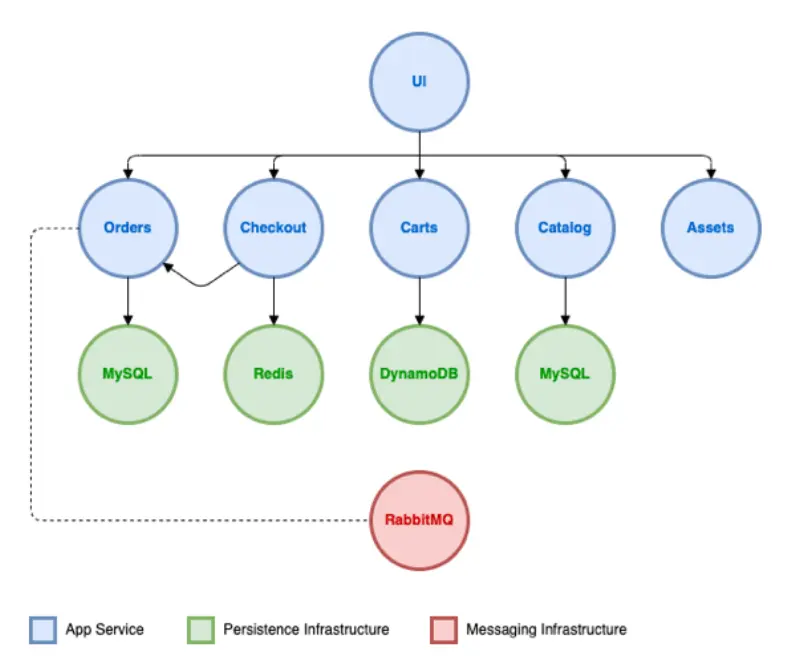

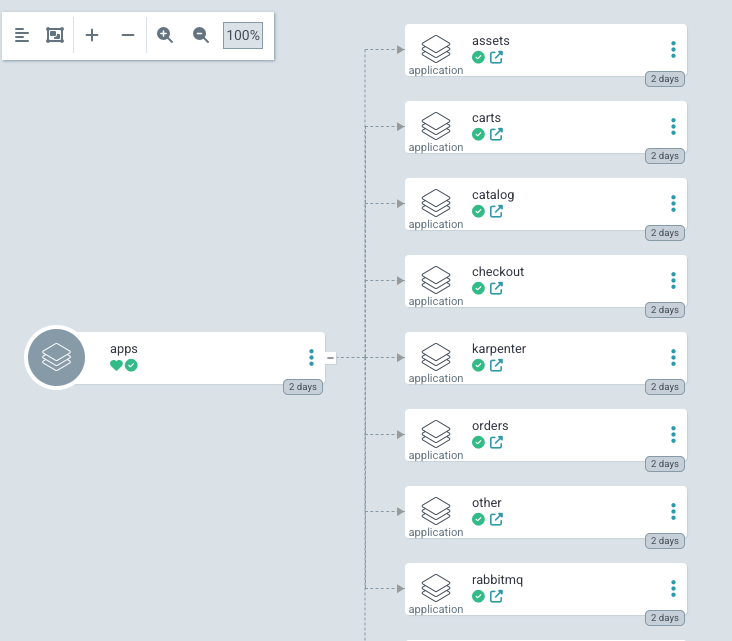

Sample Application 확인

-

샘플 애플리케이션은 고객이 카탈로그를 탐색하고 장바구니에 항목을 추가하며 결제 프로세스를 통해 주문을 완료할 수 있는 간단한 웹 스토어 애플리케이션

-

애플리케이션은 다음과 같이 구성되어 있다.

Component Description UI 프런트엔드 사용자 인터페이스를 제공하며, 다양한 다른 서비스에 대한 API 호출을 집계 Catalog 제품 목록 및 상세 정보를 제공하는 API Cart 고객의 쇼핑 카트 기능을 제공하는 API Checkout 결제 프로세스를 조정하는 API Orders 고객 주문을 수신하고 처리하는 API Static assets 제품 카탈로그와 관련된 이미지 등의 정적 자산을 제공하는 서비스 -

이미지 도커 파일, ECR 공개 저장소 정보 - Link

-

모든 구성요소는 ArgoCD를 통하여 EKS 클러스터에 배포 된다.

-

AWS CodeCommit 저장소를 GitOps repo로 사용하여 IDE에 복제할 수 있다.

cd ~/environment git clone codecommit::${REGION}://eks-gitops-repo export ARGOCD_SERVER=$(kubectl get svc argo-cd-argocd-server -n argocd -o json | jq --raw-output '.status.loadBalancer.ingress[0].hostname') echo "ArgoCD URL: http://${ARGOCD_SERVER}" export ARGOCD_USER="admin" export ARGOCD_PWD=$(kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d) echo "Username: ${ARGOCD_USER}" echo "Password: ${ARGOCD_PWD}"

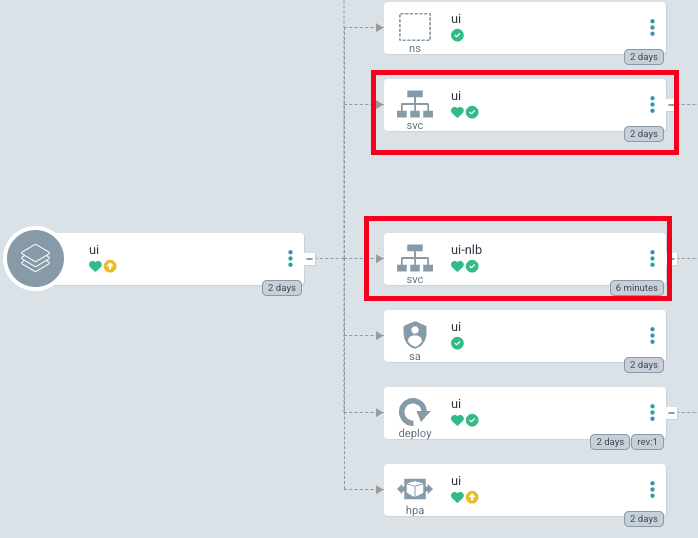

- ArgoCD 웹 확인

- UI 접속을 위한 NLB 설정

cat << EOF > ~/environment/eks-gitops-repo/apps/ui/service-nlb.yaml apiVersion: v1 kind: Service metadata: annotations: service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing service.beta.kubernetes.io/aws-load-balancer-type: external labels: app.kubernetes.io/instance: ui app.kubernetes.io/name: ui name: ui-nlb namespace: ui spec: type: LoadBalancer ports: - name: http port: 80 protocol: TCP targetPort: 8080 selector: app.kubernetes.io/instance: ui app.kubernetes.io/name: ui EOF cat << EOF > ~/environment/eks-gitops-repo/apps/ui/kustomization.yaml apiVersion: kustomize.config.k8s.io/v1beta1 kind: Kustomization namespace: ui resources: - namespace.yaml - configMap.yaml - serviceAccount.yaml - service.yaml - deployment.yaml - hpa.yaml - service-nlb.yaml EOF # cd ~/environment/eks-gitops-repo/ git add apps/ui/service-nlb.yaml apps/ui/kustomization.yaml git commit -m "Add to ui nlb" git push argocd app sync ui ... # # UI 접속 URL 확인 (1.5, 1.3 배율) kubectl get svc -n ui ui-nlb -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' | awk '{ print "UI URL = http://"$1""}'

Amazon EKS Upgrades 소개

K8S(+AWS EKS) Release Cycles

- Kubernetes 버전 체계 및 릴리스 주기

- Kubernetes는 Semantic Versioning을 따르며, 형식은 x.y.z (x: 주요, y: 마이너, z: 패치)입니다.

- 새로운 마이너 버전은 약 4개월마다 출시되며, v1.19 이상부터는 12개월 동안 표준 지원을 받고, 동시에 최소 3개의 마이너 버전을 지원합니다.

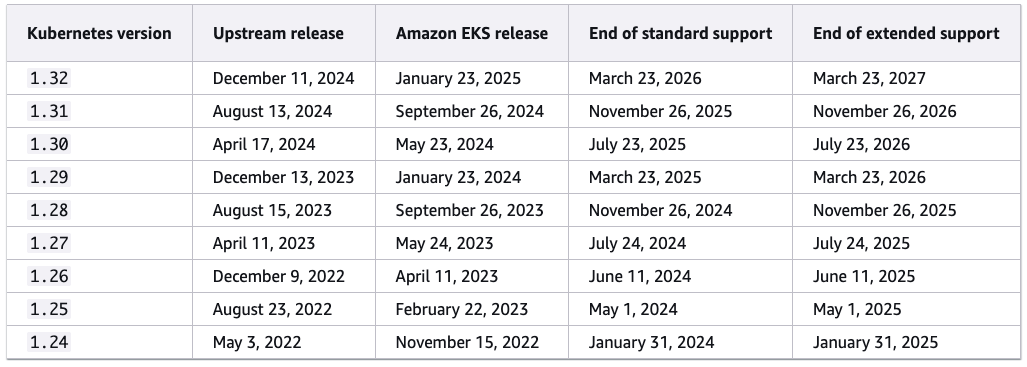

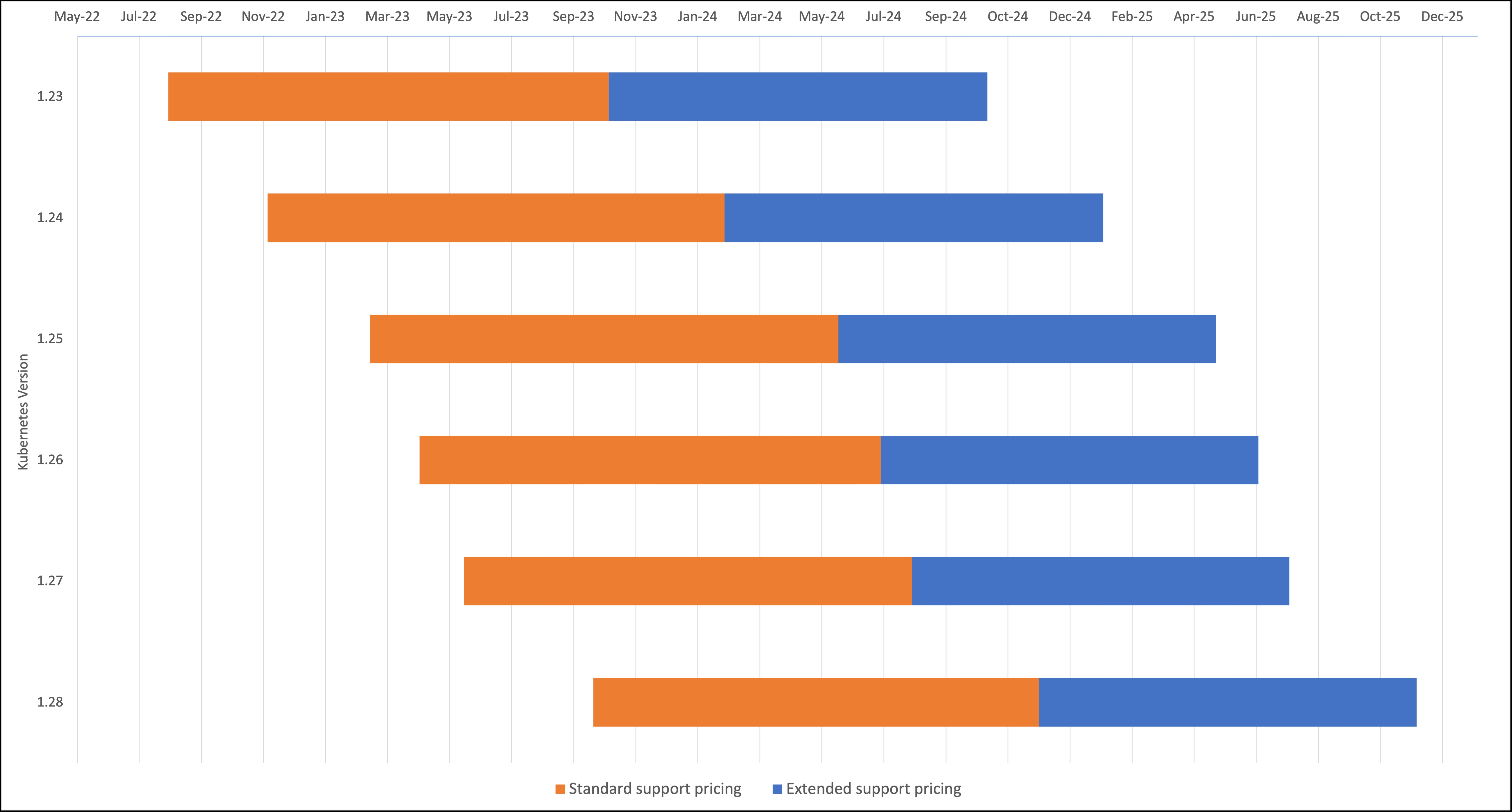

- Amazon EKS의 Kubernetes 지원 정책

- Amazon EKS는 업스트림 릴리스 주기를 따르면서, 해당 버전이 처음 제공된 후 14개월 동안 4개의 마이너 버전을 표준 지원합니다.

- 지원 기간 중 업스트림에서 지원이 종료된 버전에 대해서도 보안 패치를 백포트합니다.

- 릴리스 캘린더 및 안정성 검증

- Amazon EKS Kubernetes 릴리스 캘린더에서 각 버전의 주요 날짜를 확인할 수 있습니다.

- 새로운 버전이 EKS에 적용되기 전, AWS의 다른 서비스 및 도구와의 안정성 및 호환성을 철저히 테스트합니다. 출처 : https://docs.aws.amazon.com/eks/latest/userguide/kubernetes-versions.html#kubernetes-release-calendar

출처 : https://docs.aws.amazon.com/eks/latest/userguide/kubernetes-versions.html#kubernetes-release-calendar

- 확장 지원(Extended Support) 기능

- Kubernetes 1.21 이상 버전부터는 표준 지원 종료 후 즉시 시작되는 12개월의 확장 지원이 제공되어, 총 지원 기간이 26개월로 연장됩니다.

- 확장 지원 기간이 종료되면 클러스터는 자동으로 최신 지원 버전으로 업그레이드됩니다.
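The 14-month and 26-month windows above can be turned into concrete dates. This is a sketch in plain POSIX shell arithmetic, with no GNU `date` extensions. It makes these assumptions:

- Support is counted in whole months from the day a version became available on EKS.
- The same day of the month exists in the target month.
- The start date in the test is a made-up example, so check real dates against the EKS release calendar.
- `add_months` and `eks_support_dates` are hypothetical helpers.

```sh
# Add $2 whole months to a YYYY-MM-DD date, keeping the day of month.
add_months() {
  y=${1%%-*}
  rest=${1#*-}
  m=${rest%%-*}
  d=${rest#*-}
  m=${m#0}                             # "04" -> "4" so it is not read as octal
  total=$((y * 12 + m - 1 + $2))       # months counted from January of year 0
  printf '%04d-%02d-%s\n' $((total / 12)) $((total % 12 + 1)) "$d"
}

# Standard support: 14 months; extended support: 12 more (26 in total).
eks_support_dates() {
  echo "standard_end=$(add_months "$1" 14)"
  echo "extended_end=$(add_months "$1" 26)"
}
```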

Source: https://aws.amazon.com/ko/blogs/containers/amazon-eks-extended-support-for-kubernetes-versions-pricing/

- Cost and version policy control

- Cost: standard support costs $0.10 per cluster per hour, and extended support costs $0.60 per cluster per hour (effective April 1, 2024).

- Version policy control: cluster administrators can choose standard or extended support through the EKS console or CLI, and set a version-upgrade strategy that fits their business requirements.
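To get a feel for the price difference, multiply the hourly rates quoted above by the number of hours a cluster runs. This is a rough sketch:

- The rates are hard-coded from the text rather than fetched from AWS.
- It covers only the per-cluster control-plane fee, not nodes or other resources.
- `eks_control_plane_cost` is a hypothetical helper.

```sh
# Control-plane fee for one cluster over $2 hours, for support type $1
# (standard or extended). Rates are the ones quoted in the text above.
eks_control_plane_cost() {
  case $1 in
    standard) rate=0.10 ;;
    extended) rate=0.60 ;;
    *) echo "unknown support type: $1" >&2; return 1 ;;
  esac
  awk -v r="$rate" -v h="$2" 'BEGIN { printf "%.2f\n", r * h }'
}
```

For 730 hours (roughly one month), a single cluster costs about $73 under standard support and about $438 under extended support.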

왜 Update를 해야 하는가?

- 업데이트의 중요성

- Amazon EKS 클러스터 업데이트는 보안, 안정성, 성능, 호환성 유지에 필수적이며 최신 기능 활용을 보장합니다.

출처 : AEWS 스터디 3기

출처 : AEWS 스터디 3기

- Amazon EKS 클러스터 업데이트는 보안, 안정성, 성능, 호환성 유지에 필수적이며 최신 기능 활용을 보장합니다.

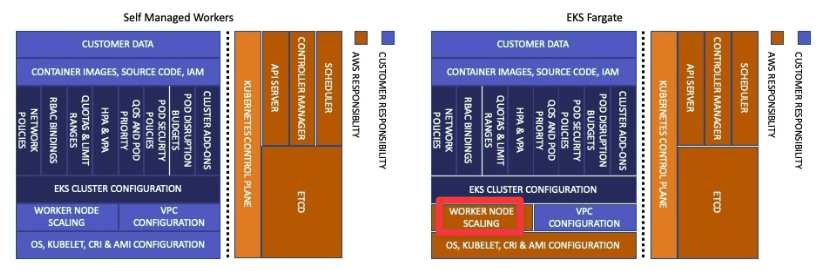

- 업그레이드 프로세스

- Kubernetes는 제어 평면과 데이터 평면 모두를 포함하며, AWS는 제어 평면을 관리하고 업그레이드를 시작합니다.

- 클러스터 소유자는 제어 평면과 데이터 평면 업그레이드를 시작할 책임이 있으며, Fargate, 자가/관리형 노드 그룹, 그리고 Karpenter Controller를 통한 작업자 노드 등이 포함됩니다.

- 작업 부하 가용성을 위해 PodDisruptionBudgets와 topologySpreadConstraint 설정이 필요합니다.
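A PodDisruptionBudget limits how many pods of an application can be evicted at once while nodes are drained during an upgrade. The sketch below only prints a `policy/v1` PDB manifest. The function name, the `orders` names and the `app.kubernetes.io/name` label selector are assumptions; adapt them to your workloads.

```sh
# Print a PodDisruptionBudget that keeps at least $4 pods available.
# $1 = PDB name, $2 = namespace, $3 = value of the app.kubernetes.io/name label.
make_pdb() {
  name=$1 ns=$2 app=$3 min=$4
  cat <<EOF
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: $name
  namespace: $ns
spec:
  minAvailable: $min
  selector:
    matchLabels:
      app.kubernetes.io/name: $app
EOF
}
```

Piping the output to kubectl would create it: `make_pdb orders-pdb orders orders 1 | kubectl apply -f -`. With a single replica and `minAvailable: 1`, node drains block until another replica is running. That is why a PDB usually goes hand in hand with running more than one replica.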

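- Platform versions

- Amazon EKS ships an initial platform version with each new Kubernetes minor version. Platform versions start at eks.1 and are incremented as new ones are released.

- All existing clusters are automatically upgraded to the latest Amazon EKS platform version for their Kubernetes minor version, with no user action required.

- Shared responsibility model

- Staying on a recent minor version matters for security patches, bug fixes, and performance improvements, which in turn lets you serve your applications and customers better.

Preparing for an upgrade

Requirements

- Check version compatibility

- Control plane and node versions match: confirm that the nodes run the same Kubernetes minor version as the current control plane. For example, if the control plane is on 1.29, the nodes should also be on 1.29.

- Review EKS-supported versions: Amazon EKS provides 14 months of standard support for a version (typically covering the four most recent minor versions), after which the version moves to extended support. Check the support periods with aws eks describe-cluster-versions.

- Review deprecated APIs and configuration

- Check the Kubernetes changelog and the Deprecated API Migration Guide, and update workloads that still use APIs that are no longer served.

Scan the cluster for deprecated API usage with kubent (Kube No Trouble).

- Prepare networking and security

- The cluster subnets must have at least 5 available IP addresses.

- Verify that the security group rules allow communication between all subnets.

- Add-on and third-party tool compatibility

- Update EKS add-ons such as the VPC CNI, CoreDNS, and kube-proxy to the latest versions compatible with the target Kubernetes version.

- Verify that third-party tools such as the AWS Load Balancer Controller or Cluster Autoscaler are also compatible with the target Kubernetes version.

- Set up backups and logging

- Back up the cluster state with a tool such as Velero.

- Enable control plane logging to monitor errors that may occur during the upgrade.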
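Before starting the control plane upgrade, every node should report the same minor version as the control plane. The sketch below reads kubelet versions from stdin, one per line, and reports any mismatches. `minor_of` and `nodes_match_control_plane` are hypothetical helpers. The kubectl command in the comment is one way to produce their input.

```sh
# Reduce a version like v1.25.16-eks-59bf375 (or 1.25) to "1.25".
minor_of() {
  v=${1#v}
  rest=${v#*.}
  echo "${v%%.*}.${rest%%.*}"
}

# Read kubelet versions from stdin (one per line), e.g. from:
#   kubectl get nodes -o jsonpath='{range .items[*]}{.status.nodeInfo.kubeletVersion}{"\n"}{end}'
# $1 = control plane version. Prints each mismatch; fails if any was found.
nodes_match_control_plane() {
  want=$(minor_of "$1")
  bad=0
  while read -r kv; do
    [ -z "$kv" ] && continue
    if [ "$(minor_of "$kv")" != "$want" ]; then
      echo "mismatch: $kv (control plane $want)"
      bad=1
    fi
  done
  return "$bad"
}
```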

업그레이드 워크플로우

- Amazon EKS 및 Kubernetes 버전에 대한 주요 업데이트 식별

- 사용 중단 정책 이해 및 적절한 매니페스트 리팩토링

- 올바른 업그레이드 전략을 사용하여 EKS 제어 평면 및 데이터 평면 업그리으

- 마지막으로 다운스트림 애드온 종속성 업그레이드

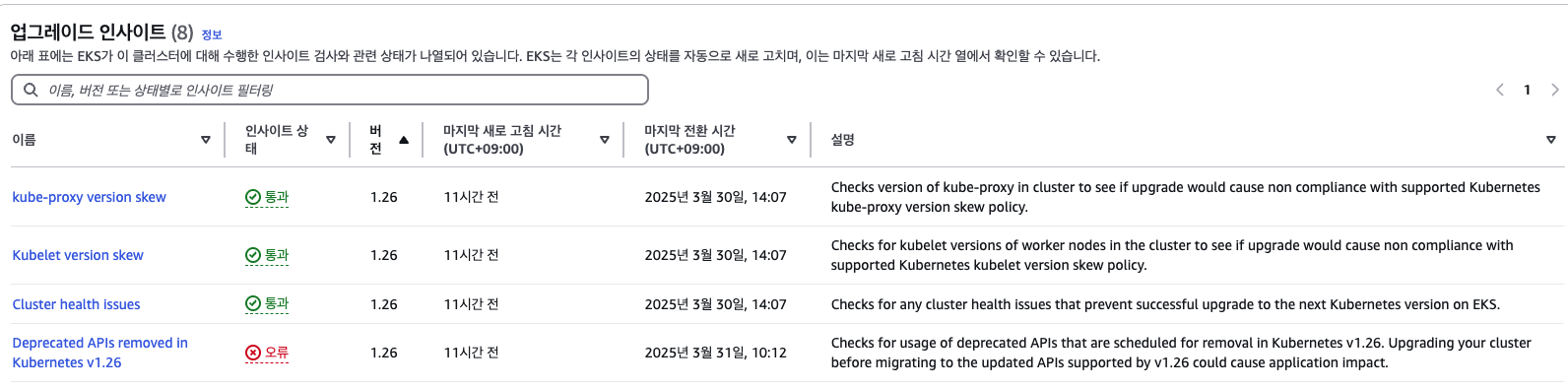

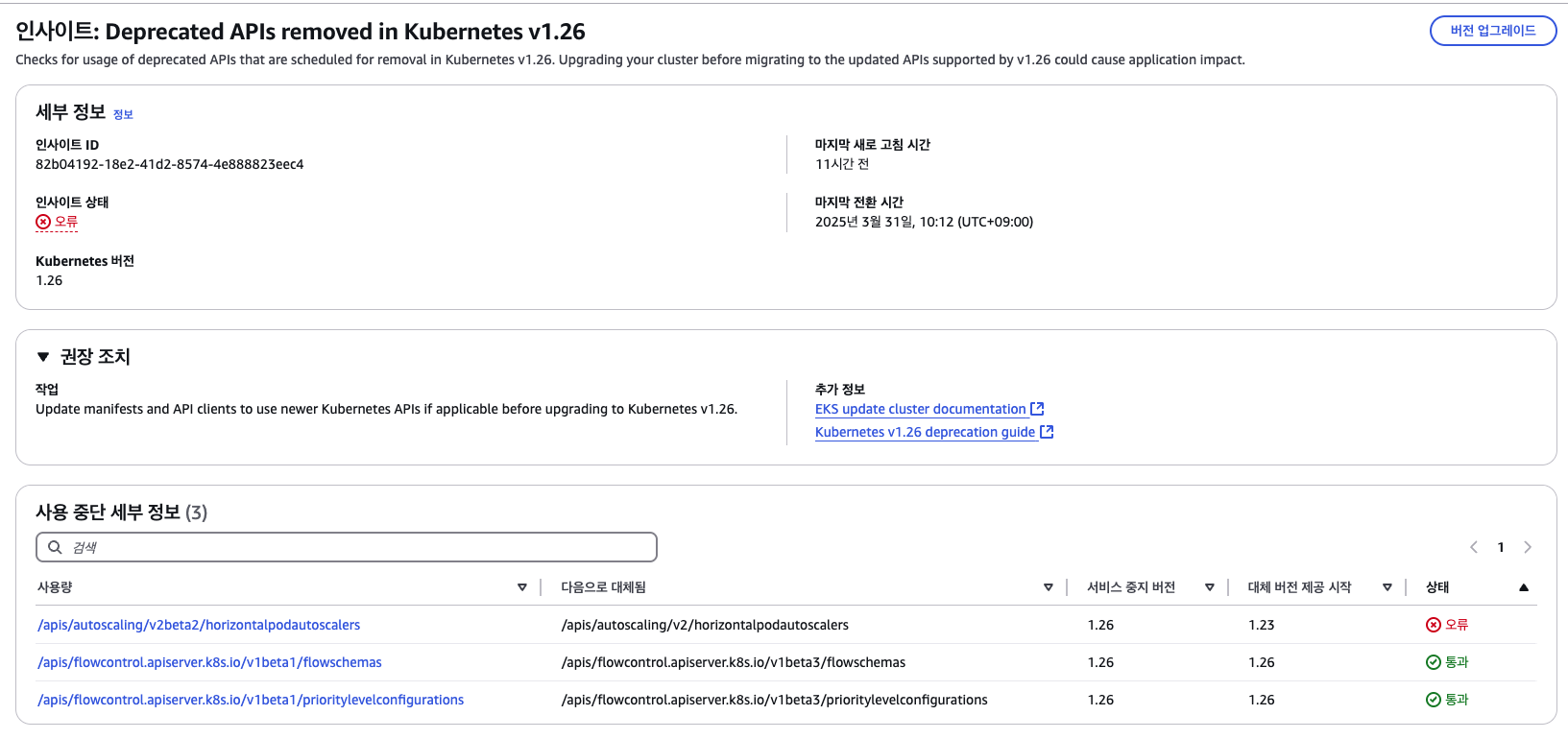

EKS Upgrade Insights

Amazon EKS는 제어 평면 업그레이드는 자동화하지만, 업그레이드 시 영향을 받을 리소스나 애플리케이션 식별은 기존에 수동으로 수행되었습니다. 이를 위해 릴리스 노트를 검토해 더 이상 사용되지 않거나 제거된 Kubernetes API를 찾아내고, 이를 사용하는 애플리케이션을 수정해야 했습니다.

이 문제를 해결하기 위해 EKS Upgrade Insights 기능이 도입되었습니다. 이 기능은 다음과 같은 특징을 가집니다:

- 자동 인사이트 검사: 모든 Amazon EKS 클러스터는 정기적으로 자동 검사되며, Kubernetes 모범 사례를 따르는 데 필요한 권장 사항을 제공합니다.

- 문제 해결 가이드: 각 인사이트는 문제를 해결하기 위한 구체적인 단계와, 릴리스 노트나 블로그 게시물 등 추가 정보를 제공하는 링크를 포함합니다.

- 리소스 상태 표시: 인사이트에는 영향을 받는 Kubernetes 리소스 목록이 포함되며, 각 리소스는 다음과 같은 심각도 상태로 표시됩니다.

- 오류: 다음 마이너 버전에서 API 호출이 제거되어 업그레이드 실패를 유발할 수 있음.

- 경고: 즉각적인 조치는 필요 없으나, 향후 문제 발생 가능성이 있음.

- 알 수 없음: 백엔드 처리 오류.

- 전체 상태 판단: 인사이트 내 모든 리소스 중 가장 높은 심각도 상태를 전체 상태로 표시하여, 업그레이드 전 클러스터 수정 필요 여부를 쉽게 확인할 수 있습니다.

- 일일 스캔: 클러스터 감사 로그를 매일 스캔하여 사용되지 않는 리소스를 찾아내고, 그 결과를 EKS 콘솔이나 API/CLI를 통해 제공하며, Kubernetes 버전 업그레이드 준비와 관련된 인사이트만 출력합니다.

- 자동 업데이트: 클러스터 인사이트는 주기적으로 업데이트되며, 수동으로 새로 고칠 수 없습니다.

이렇게 Upgrade Insights를 활용하면 최신 Kubernetes 버전으로의 업그레이드 시 발생할 수 있는 문제를 사전에 파악하고 최소한의 노력으로 대응할 수 있습니다.
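The rule "the overall status is the most severe resource status" can be expressed as a small reduction. This sketch assumes one status per line using the values PASSING, WARNING, ERROR and UNKNOWN.

Ranking UNKNOWN between PASSING and WARNING is an assumption made for this example, not documented EKS behavior. The function names are hypothetical. Real insights can be listed with `aws eks list-insights --cluster-name <name>`.

```sh
# Numeric severity for a status value (higher = more severe).
# The position of UNKNOWN is an assumption for this sketch.
severity_rank() {
  case $1 in
    ERROR)   echo 3 ;;
    WARNING) echo 2 ;;
    UNKNOWN) echo 1 ;;
    PASSING) echo 0 ;;
    *)       echo -1 ;;
  esac
}

# Read statuses from stdin and print the most severe one (PASSING if none).
overall_status() {
  best=PASSING
  best_rank=0
  while read -r s; do
    [ -z "$s" ] && continue
    r=$(severity_rank "$s")
    if [ "$r" -gt "$best_rank" ]; then
      best=$s
      best_rank=$r
    fi
  done
  echo "$best"
}
```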

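Choosing an upgrade strategy

Factors to consider when choosing a strategy

- Downtime tolerance: consider how much downtime is acceptable during the upgrade.

- Complexity: assess the complexity of the application architecture, dependencies, and stateful components.

- Kubernetes version gap: review the gap and compatibility between the current and target versions.

- Resource constraints: consider the infrastructure and budget the upgrade requires, and minimize unnecessary cluster expansion.

- Team expertise: assess the team's ability to manage multiple clusters and implement traffic-shifting strategies.

In-place strategy

- Approach: upgrade the existing cluster directly

- Steps

- Upgrade the EKS control plane → update the worker node AMIs/configuration → replace nodes gradually → verify manifest/add-on compatibility → test

- Pros

- Existing resources (VPC, security groups, etc.) are kept.

- Minimal changes to external integrations.

- Low infrastructure overhead.

- No need to migrate stateful applications.

- Cons

- No rollback once the control plane has been upgraded.

- Skipping several versions requires consecutive upgrades, one minor version at a time.

- Downtime risk must be managed, and thorough testing is essential.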
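An in-place upgrade moves the EKS control plane one minor version at a time, so skipping versions turns into a chain of upgrades. The sketch below prints that chain for "x.y" inputs that share the same major version. `upgrade_path` is a hypothetical helper.

```sh
# Print the minor versions an in-place upgrade has to pass through, one per
# line, from $1 (current) to $2 (target). Inputs are "x.y" with equal majors.
upgrade_path() {
  major=${1%%.*}
  from=${1#*.}
  to=${2#*.}
  m=$((from + 1))
  while [ "$m" -le "$to" ]; do
    echo "$major.$m"
    m=$((m + 1))
  done
}
```

For example, `upgrade_path 1.25 1.28` prints 1.26, 1.27 and 1.28. That means three separate control plane upgrades, each followed by add-on and node updates.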

Blue-Green 전략

- 방식: 신규 클러스터 생성 후 트래픽 전환

- 단계

- 새 클러스터 생성 → 애플리케이션/애드온 배포 → 테스트 → 트래픽 전환 → 구 클러스터 삭제

- 장점

- 안전한 테스트 환경 및 즉시 롤백 가능.

- 다중 버전 한 번에 업그레이드 가능.

- 가동 중단 시간 최소화.

- 단점

- 병렬 클러스터 유지로 인한 비용 증가.

- 트래픽 전환 및 외부 시스템 조정 복잡.

- 상태 저장 애플리케이션 데이터 동기화 어려움.

핵심 차이점

| 항목 | In-Place | Blue-Green |

|---|---|---|

| 롤백 | 불가능 | 즉시 가능 |

| 비용 | 낮음 | 높음 (병렬 클러스터) |

| 복잡성 | 낮음 (단일 클러스터) | 높음 (트래픽 전환/동기화) |

| 적합 케이스 | 단순 환경, 상태 저장 앱 | 대규모/중요 시스템, 다중 버전 건너뛰기 |

선택 기준:

- In-Place: 빠르고 저렴한 업그레이드, 상태 저장 앱 유지.

- Blue-Green: 안정성 우선, 복잡한 환경/롤백 필요 시.

EKS Upgrade 실습 1 - In-place

- In-place 방식 : 1.25 → 1.26 버전

1) Control Plane 업그레이드

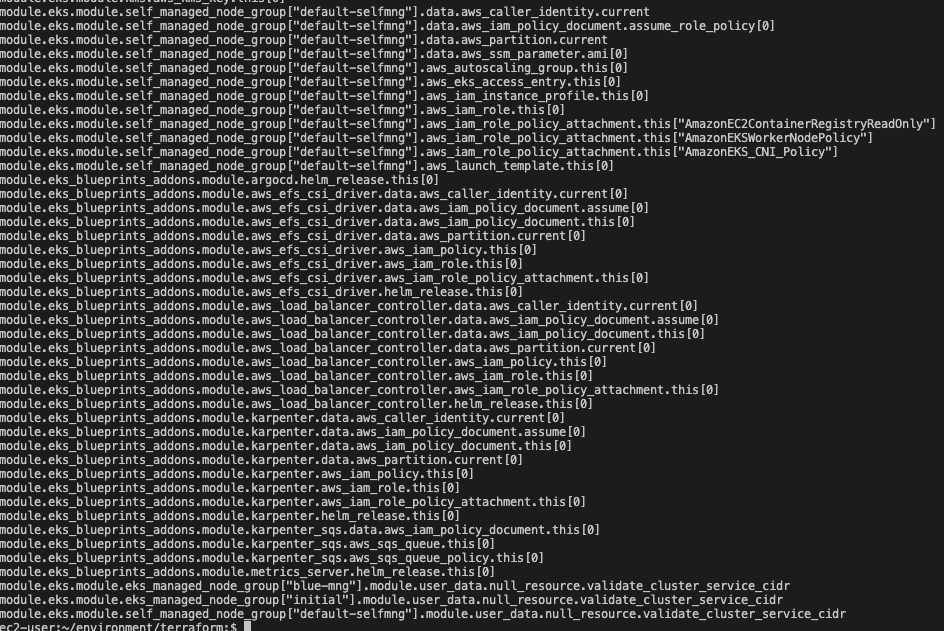

여러가지 방법 (eksctl, AWS 관리 콘솔, AWS CLI 등)이 있으나 금일 실습에서는 Terraform를 이용하여 배포를 진행하고자 합니다.

- terraform 모든 리소스 나열

cd ~/environment/terraform terraform state list

- 현재 버전 확인 및 파일 기록

kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c > 1.25.txt
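The command above collapses the image list into `count image` lines. Wrapping it in functions makes it easy to compare the 1.25 and 1.26 snapshots beyond a raw `diff`.

This sketch uses hypothetical helper names. It also uses `tr -s '[:space:]'` (the POSIX class syntax) instead of `'[[:space:]]'`, because the bracketed form also matches literal `[` and `]` characters.

```sh
# Turn a whitespace-separated image list (kubectl jsonpath output) into
# sorted "count image" lines, like 1.25.txt / 1.26.txt.
image_inventory() {
  tr -s '[:space:]' '\n' | sed '/^$/d' | sort | uniq -c
}

# Print images present in the new inventory ($2) but not in the old one ($1).
new_images() {
  # First file: remember image names; second file: print the unseen ones.
  awk 'NR == FNR { seen[$2] = 1; next } !($2 in seen) { print $2 }' "$1" "$2"
}
```

For example, `new_images 1.25.txt 1.26.txt` lists only the images that changed, which makes add-on bumps such as CoreDNS easy to spot.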

- EKS 현재 상태 확인

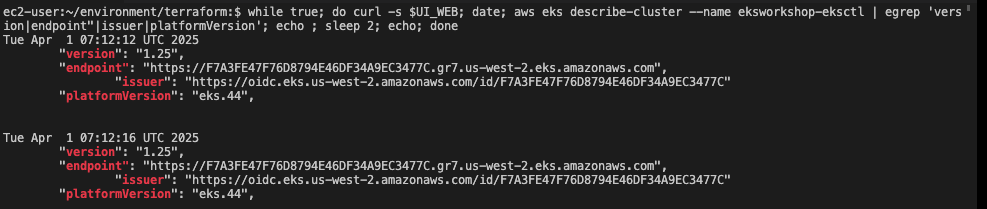

while true; do curl -s $UI_WEB; date; aws eks describe-cluster --name eksworkshop-eksctl | egrep 'version|endpoint"|issuer|platformVersion'; echo ; sleep 2; echo; done

- EKS Version 변경

# 1.25 -> 1.26 버전으로 변경 variable "cluster_version" { description = "EKS cluster version." type = string default = "1.26" } variable "mng_cluster_version" { description = "EKS cluster mng version." type = string default = "1.26" } variable "ami_id" { description = "EKS AMI ID for node groups" type = string default = "" }

- terraform plan 확인

terraform plan -no-color > plan-output.txt # module.eks.module.eks_managed_node_group["initial"].aws_eks_node_group.this[0] will be updated in-place ~ resource "aws_eks_node_group" "this" { id = "" tags = { "Blueprint" = "eksworkshop-eksctl" "GithubRepo" = "github.com/aws-ia/terraform-aws-eks-blueprints" "Name" = "initial" "karpenter.sh/discovery" = "eksworkshop-eksctl" } ~ version = "1.25" -> "1.26" # (15 unchanged attributes hidden) # (4 unchanged blocks hidden) }
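A saved plan is easier to review when it is reduced to the resources Terraform will touch. The sketch below does two things:

- It lists the resources marked `will be updated in-place` in `plan-output.txt`.
- It flags any resource that Terraform says `must be replaced` or `will be destroyed`, which would be unexpected for an in-place version bump.

The function names are hypothetical. The patterns assume the default `terraform plan -no-color` wording.

```sh
# Print the address of every resource Terraform will update in place.
inplace_updates() {
  sed -n 's/^ *# \(.*\) will be updated in-place$/\1/p' "$1"
}

# Print plan header lines for resources that would be replaced or destroyed;
# exits non-zero (grep) when there are none.
risky_changes() {
  grep -E '^ *# .* (must be replaced|will be destroyed)$' "$1"
}
```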

- EKS Upgrade 실행

terraform apply -auto-approve

- EKS Control Plane 버전 확인

aws eks describe-cluster --name $EKS_CLUSTER_NAME | jq

- 파드 컨테이너 이미지 버전 확인 : 동일함

kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c > 1.26.txt diff 1.26.txt 1.25.txt - cluster Insights

- Insights - EKS add-on version compatibility

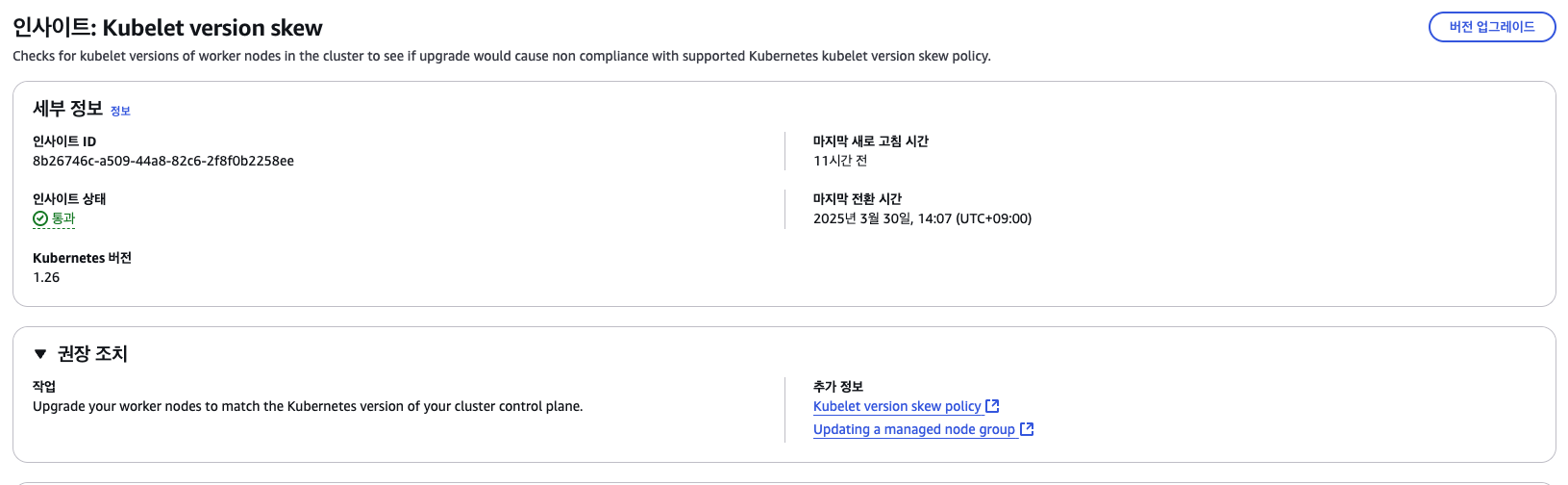

- Insights - Kubelet version skew

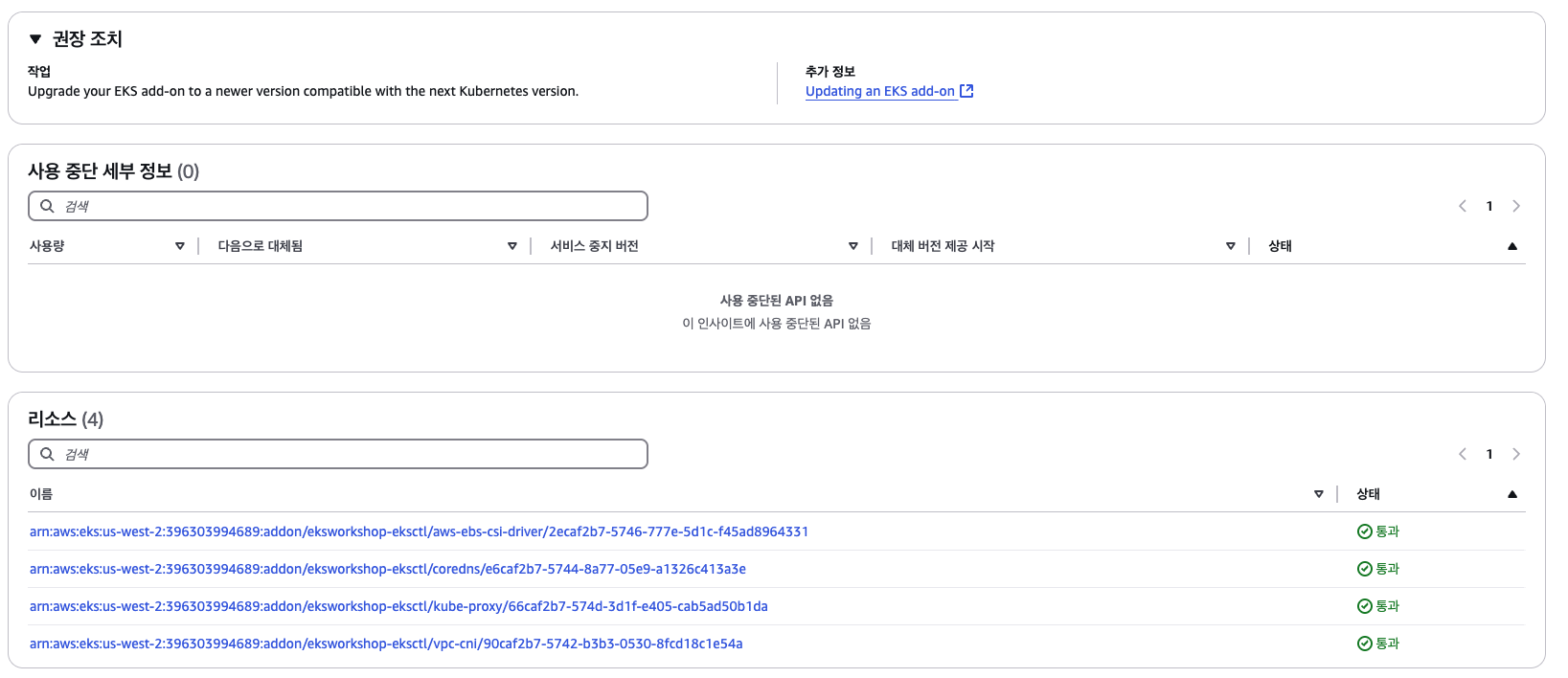

- insight - Deprecated APIs removed in Kubernetes v1.26

- ArgoCD UI APP 리소스 확인

- UI HPA 확인

- code

kubectl get hpa -n ui ui -o yaml - 확인 결과

## 확인 결과 apiVersion: autoscaling/v2 kind: HorizontalPodAutoscaler metadata: annotations: kubectl.kubernetes.io/last-applied-configuration: | {"apiVersion":"autoscaling/v2beta2","kind":"HorizontalPodAutoscaler","metadata":{"annotations":{},"labels":{"argocd.argoproj.io/instance":"ui"},"name":"ui","namespace":"ui"},"spec":{"maxReplicas":4,"minReplicas":1,"scaleTargetRef":{"apiVersion":"apps/v1","kind":"Deployment","name":"ui"},"targetCPUUtilizationPercentage":80}} creationTimestamp: "2025-03-30T05:08:11Z" labels: argocd.argoproj.io/instance: ui name: ui namespace: ui resourceVersion: "1341799" uid: 43c15519-3f51-40cc-b2b6-e66caeeaa39
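Kubernetes 1.26 removed two API versions:

- autoscaling/v2beta2 for HorizontalPodAutoscaler.
- flowcontrol.apiserver.k8s.io/v1beta1 for FlowSchema and PriorityLevelConfiguration.

A plain text search over the GitOps repo is a quick first pass for manifests that still use them. This sketch uses a hypothetical function name. It only matches `apiVersion:` lines in YAML and is not a substitute for kubent or Upgrade Insights.

```sh
# List apiVersion lines that use API versions removed in Kubernetes 1.26.
# Arguments: files or directories to scan recursively.
removed_in_126() {
  grep -rnE \
    '^[[:space:]]*apiVersion:[[:space:]]*"?(autoscaling/v2beta2|flowcontrol\.apiserver\.k8s\.io/v1beta1)"?[[:space:]]*$' \
    "$@"
}
```

Running `removed_in_126 ~/environment/eks-gitops-repo/apps` would likely flag the UI HPA manifest (`apps/ui/hpa.yaml`), given the annotation shown above.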

2) Upgrading EKS add-ons

Each Kubernetes version has its own compatible add-on versions. For 1.26:

- CoreDNS - Link (v1.9.3-eksbuild.22)

- kube-proxy - Link (v1.26.15-eksbuild.24)

- VPC CNI - Link (v1.19.2-eksbuild.5)

You can look up these versions with the commands below.

Note that the VPC CNI and the EBS CSI Driver are already on the latest versions, so they do not need to change.

- Look up the CoreDNS and kube-proxy versions

aws eks describe-addon-versions --addon-name coredns --kubernetes-version 1.26 --output table \
  --query "addons[].addonVersions[:10].{Version:addonVersion,DefaultVersion:compatibilities[0].defaultVersion}"
aws eks describe-addon-versions --addon-name kube-proxy --kubernetes-version 1.26 --output table \
  --query "addons[].addonVersions[:10].{Version:addonVersion,DefaultVersion:compatibilities[0].defaultVersion}"

- Edit addons.tf

eks_addons = {
  coredns = {
    version = "v1.9.3-eksbuild.22" # Recommended version for EKS 1.26
  }
  kube-proxy = {
    version = "v1.26.15-eksbuild.24" # Recommended version for EKS 1.26
  }
}
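When scanning the versions returned by `describe-addon-versions`, ordering has to be version-aware: a plain string sort places `v1.9.3-eksbuild.3` after `v1.9.3-eksbuild.22`. The sketch below picks the highest version from a list by sorting on its numeric components. `newest_addon_version` is a hypothetical helper. The newest build is not necessarily the recommended one; the recommended one is what the `DefaultVersion` column in the query above shows.

```sh
# Read add-on versions (one per line) and print the highest one.
# Each line gets a numeric sort key built from its digits,
# e.g. v1.9.3-eksbuild.22 -> "1 9 3 22", followed by a tab and the original.
newest_addon_version() {
  awk 'NF { key = $0; gsub(/[^0-9]+/, " ", key); sub(/^ +/, "", key); print key "\t" $0 }' |
    sort -t ' ' -k1,1n -k2,2n -k3,3n -k4,4n |
    tail -n 1 |
    cut -f 2
}
```

A possible pipeline is `aws eks describe-addon-versions --addon-name coredns --kubernetes-version 1.26 --query "addons[].addonVersions[].addonVersion" --output text | tr '\t' '\n' | newest_addon_version`. The `tr` is there because text output may print the values on a single tab-separated line.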

- terraform plan

terraform plan -no-color | tee addon.txt

- Result

# module.eks_blueprints_addons.aws_eks_addon.this["coredns"] will be updated in-place
~ resource "aws_eks_addon" "this" {
    ~ addon_version = "v1.8.7-eksbuild.10" -> "v1.9.3-eksbuild.22"
      id            = "eksworkshop-eksctl:coredns"
      tags          = {
          "Blueprint"  = "eksworkshop-eksctl"
          "GithubRepo" = "github.com/aws-ia/terraform-aws-eks-blueprints"
      }
      # (11 unchanged attributes hidden)
      # (1 unchanged block hidden)
  }

# module.eks_blueprints_addons.aws_eks_addon.this["kube-proxy"] will be updated in-place
~ resource "aws_eks_addon" "this" {
    ~ addon_version = "v1.25.16-eksbuild.8" -> "v1.26.15-eksbuild.24"
      id            = "eksworkshop-eksctl:kube-proxy"
      tags          = {
          "Blueprint"  = "eksworkshop-eksctl"
          "GithubRepo" = "github.com/aws-ia/terraform-aws-eks-blueprints"
      }
      # (11 unchanged attributes hidden)
      # (1 unchanged block hidden)
  }

- terraform apply

terraform apply -auto-approve

- Check the pods

kubectl get pod -n kube-system -l 'k8s-app in (kube-dns, kube-proxy)'
kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c

- Result

6 602401143452.dkr.ecr.us-west-2.amazonaws.com/amazon-k8s-cni:v1.19.3-eksbuild.1
6 602401143452.dkr.ecr.us-west-2.amazonaws.com/amazon/aws-network-policy-agent:v1.2.0-eksbuild.1
8 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/aws-ebs-csi-driver:v1.41.0
2 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/coredns:v1.9.3-eksbuild.22
2 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/csi-attacher:v4.8.1-eks-1-32-7
6 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/csi-node-driver-registrar:v2.13.0-eks-1-32-7
2 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/csi-provisioner:v5.2.0-eks-1-32-7
2 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/csi-resizer:v1.13.2-eks-1-32-7
2 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/csi-snapshotter:v8.2.1-eks-1-32-7
6 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/kube-proxy:v1.26.15-minimal-eksbuild.24
8 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/livenessprobe:v2.14.0-eks-1-32-7
8 amazon/aws-efs-csi-driver:v1.7.6
1 amazon/dynamodb-local:1.13.1
1 ghcr.io/dexidp/dex:v2.38.0
1 hjacobs/kube-ops-view:20.4.0
1 public.ecr.aws/aws-containers/retail-store-sample-assets:0.4.0
1 public.ecr.aws/aws-containers/retail-store-sample-cart:0.7.0
1 public.ecr.aws/aws-containers/retail-store-sample-catalog:0.4.0
1 public.ecr.aws/aws-containers/retail-store-sample-checkout:0.4.0
1 public.ecr.aws/aws-containers/retail-store-sample-orders:0.4.0
1 public.ecr.aws/aws-containers/retail-store-sample-ui:0.4.0
1 public.ecr.aws/bitnami/rabbitmq:3.11.1-debian-11-r0
2 public.ecr.aws/docker/library/mysql:8.0
1 public.ecr.aws/docker/library/redis:6.0-alpine
1 public.ecr.aws/docker/library/redis:7.0.15-alpine
2 public.ecr.aws/eks-distro/kubernetes-csi/external-provisioner:v3.6.3-eks-1-29-2
8 public.ecr.aws/eks-distro/kubernetes-csi/livenessprobe:v2.11.0-eks-1-29-2
6 public.ecr.aws/eks-distro/kubernetes-csi/node-driver-registrar:v2.9.3-eks-1-29-2
2 public.ecr.aws/eks/aws-load-balancer-controller:v2.7.1
2 public.ecr.aws/karpenter/controller:0.37.0@sha256:157f478f5db1fe999f5e2d27badcc742bf51cc470508b3cebe78224d0947674f
5 quay.io/argoproj/argocd:v2.10.0
1 registry.k8s.io/metrics-server/metrics-server:v0.7.0

3.1) Upgrading managed node groups

In-place upgrade

Check the two managed node group definitions in base.tf:

eks_managed_node_group_defaults = {
  cluster_version = var.mng_cluster_version
}

eks_managed_node_groups = {
  initial = {
    instance_types = ["m5.large", "m6a.large", "m6i.large"]
    min_size       = 2
    max_size       = 10
    desired_size   = 2
    update_config = {
      max_unavailable_percentage = 35
    }
  }

  blue-mng = {
    instance_types  = ["m5.large", "m6a.large", "m6i.large"]
    cluster_version = "1.25"
    min_size        = 1
    max_size        = 2
    desired_size    = 1
    update_config = {
      max_unavailable_percentage = 35
    }
    labels = {
      type = "OrdersMNG"
    }
    subnet_ids = [module.vpc.private_subnets[0]]
    taints = [
      {
        key    = "dedicated"
        value  = "OrdersApp"
        effect = "NO_SCHEDULE"
      }
    ]
  }
}

- Look up the AMI for a custom node group (Kubernetes 1.25)

aws ssm get-parameter --name /aws/service/eks/optimized-ami/1.25/amazon-linux-2/recommended/image_id \
  --region $AWS_REGION --query "Parameter.Value" --output text
## Output
ami-xxxx

- Edit variable.tf

variable "ami_id" {
  description = "EKS AMI ID for node groups"
  type        = string
  default     = "ami-xxx" # the AMI ID retrieved above
}

- Add a custom node group to base.tf

custom = {
  instance_types = ["t3.medium"]
  min_size       = 1
  max_size       = 2
  desired_size   = 1
  update_config = {
    max_unavailable_percentage = 35
  }
  ami_id                     = try(var.ami_id)
  enable_bootstrap_user_data = true
}

- Start monitoring before deploying

while true; do aws autoscaling describe-auto-scaling-groups --query 'AutoScalingGroups[*].AutoScalingGroupName' --output json | jq; echo ; kubectl get node -L eks.amazonaws.com/nodegroup; echo; date ; echo ; kubectl get node -L eks.amazonaws.com/nodegroup-image | grep ami; echo; sleep 1; echo; done

- Run terraform

terraform apply -auto-approve
# Monitor
while true; do aws autoscaling describe-auto-scaling-groups --query 'AutoScalingGroups[*].AutoScalingGroupName' --output json | jq; echo ; kubectl get node -L eks.amazonaws.com/nodegroup; echo; date ; echo ; kubectl get node -L eks.amazonaws.com/nodegroup-image | grep ami; echo; sleep 1; echo; done

- Result

ip-10-0-13-56.us-west-2.compute.internal Ready <none> 80m v1.25.16-eks-59bf375 initial-2025033004585862020000002a
ip-10-0-26-122.us-west-2.compute.internal Ready <none> 81m v1.26.15-eks-59bf375 initial-2025033004585862020000002a

- Why 1.25 was applied

The initial node group does not set its own version, so it inherits mng_cluster_version from variables.tf through eks_managed_node_group_defaults. mng_cluster_version is currently set to 1.25.

# base.tf
eks_managed_node_group_defaults = {
  cluster_version = var.mng_cluster_version
}
eks_managed_node_groups = {
  initial = {
    instance_types = ["m5.large", "m6a.large", "m6i.large"]
    min_size       = 2
    max_size       = 10
    desired_size   = 2
    update_config = {
      max_unavailable_percentage = 35
    }
  }
  # ...

# variable.tf
variable "mng_cluster_version" {
  description = "EKS cluster mng version."
  type        = string
  default     = "1.25"
}

- Look up the AMI ID for Kubernetes 1.26

aws ssm get-parameter --name /aws/service/eks/optimized-ami/1.26/amazon-linux-2/recommended/image_id \
  --region $AWS_REGION --query "Parameter.Value" --output text
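The two `aws ssm get-parameter` calls differ only in the Kubernetes version, so the parameter name can be built from the version. This sketch uses a hypothetical helper that only assembles the name (it does not call AWS) and rejects arguments that do not look like a version. The path follows the Amazon Linux 2 pattern used above.

```sh
# Build the public SSM parameter name for the recommended EKS-optimized
# Amazon Linux 2 AMI of Kubernetes version $1 (e.g. 1.26).
eks_al2_ami_param() {
  case $1 in
    [0-9]*.[0-9]*) ;;
    *) echo "expected a version like 1.26, got: $1" >&2; return 1 ;;
  esac
  echo "/aws/service/eks/optimized-ami/$1/amazon-linux-2/recommended/image_id"
}
# Usage (needs AWS credentials):
#   aws ssm get-parameter --name "$(eks_al2_ami_param 1.26)" \
#     --region "$AWS_REGION" --query "Parameter.Value" --output text
```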

- Edit variable.tf

variable "mng_cluster_version" {
  description = "EKS cluster mng version."
  type        = string
  default     = "1.26" # changed from 1.25 to 1.26
}

# Switch to the new AMI
variable "ami_id" {
  description = "EKS AMI ID for node groups"
  type        = string
  default     = "ami-xxx" # the 1.26 AMI ID retrieved above
}

- Run terraform, and confirm that the custom node group's version has changed as well

terraform apply -auto-approve
# Monitor
while true; do aws autoscaling describe-auto-scaling-groups --query 'AutoScalingGroups[*].AutoScalingGroupName' --output json | jq; echo ; kubectl get node -L eks.amazonaws.com/nodegroup; echo; date ; echo ; kubectl get node -L eks.amazonaws.com/nodegroup-image | grep ami; echo; sleep 1; echo; done

- Result

ip-10-0-13-56.us-west-2.compute.internal Ready <none> 100m v1.26.15-eks-59bf375 initial-2025033004585862020000002a
ip-10-0-26-122.us-west-2.compute.internal Ready <none> 101m v1.26.15-eks-59bf375 initial-2025033004585862020000002a
NAME STATUS ROLES AGE VERSION NODEGROUP
ip-10-0-44-132.us-west-2.compute.internal Ready <none> 78s v1.26.15-eks-59bf375 custom-20250325154855579500000007

Remove the custom node group created for this exercise by deleting this block from base.tf:

custom = {
  instance_types = ["t3.medium"]
  min_size       = 1
  max_size       = 2
  desired_size   = 1
  update_config = {
    max_unavailable_percentage = 35
  }
  ami_id                     = try(var.ami_id)
  enable_bootstrap_user_data = true
}

Blue-Green upgrade

- Edit base.tf (blue-mng)

blue-mng = {
  instance_types  = ["m5.large", "m6a.large", "m6i.large"]
  cluster_version = "1.25"
  min_size        = 1
  max_size        = 2
  desired_size    = 1
  update_config = {
    max_unavailable_percentage = 35
  }
  labels = {
    type = "OrdersMNG"
  }
  subnet_ids = [module.vpc.private_subnets[0]] # this MNG runs in the first private subnet (an EBS PV is in use)
  taints = [
    {
      key    = "dedicated"
      value  = "OrdersApp"
      effect = "NO_SCHEDULE"
    }
  ]
}

- Check the Terraform state

terraform state show 'module.vpc.aws_subnet.private[0]'
terraform state show 'module.vpc.aws_subnet.private[1]'
terraform state show 'module.vpc.aws_subnet.private[2]'

- Result

## private[0]
availability_zone = "us-west-2a"
## private[1]
availability_zone = "us-west-2b"
## private[2]
availability_zone = "us-west-2c"

An EBS volume can only be attached to a node in its own Availability Zone. The replacement node group for the orders workload therefore also has to use private subnet 0 (us-west-2a).

- In base.tf, add green-mng below blue-mng. It does not set cluster_version, so it uses the default mng_cluster_version (1.26 at this point):

green-mng = {
  instance_types = ["m5.large", "m6a.large", "m6i.large"]
  subnet_ids     = [module.vpc.private_subnets[0]]
  min_size       = 1
  max_size       = 2
  desired_size   = 1
  update_config = {
    max_unavailable_percentage = 35
  }
  labels = {
    type = "OrdersMNG"
  }
  taints = [
    {
      key    = "dedicated"
      value  = "OrdersApp"
      effect = "NO_SCHEDULE"
    }
  ]
}

- Deploy while monitoring

terraform apply -auto-approve

- Check green-mng

# List the nodes
kubectl get node -l type=OrdersMNG -o wide
## Output
ip-10-0-10-222.us-west-2.compute.internal Ready <none> 2d4h v1.25.16-eks-59bf375 10.0.10.222 <none> Amazon Linux 2 5.10.234-225.910.amzn2.x86_64 containerd://1.7.25
ip-10-0-8-175.us-west-2.compute.internal Ready <none> 104s v1.26.15-eks-59bf375 10.0.8.175 <none> Amazon Linux 2 5.10.234-225.910.amzn2.x86_64 containerd://1.7.25

# Check the AZs
kubectl get node -l type=OrdersMNG -L topology.kubernetes.io/zone
## Output
ip-10-0-10-222.us-west-2.compute.internal Ready <none> 2d4h v1.25.16-eks-59bf375 us-west-2a
ip-10-0-8-175.us-west-2.compute.internal Ready <none> 2m3s v1.26.15-eks-59bf375 us-west-2a

# Check the NoSchedule taints
kubectl get nodes -l type=OrdersMNG -o jsonpath="{range .items[*]}{.metadata.name} {.spec.taints[?(@.effect=='NoSchedule')]}{\"\n\"}{end}"
## Output
ip-10-0-10-222.us-west-2.compute.internal {"effect":"NoSchedule","key":"dedicated","value":"OrdersApp"}
ip-10-0-8-175.us-west-2.compute.internal {"effect":"NoSchedule","key":"dedicated","value":"OrdersApp"}
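While the blue and green node groups coexist, it helps to see how many nodes run each kubelet minor version. This sketch reads `kubectl get node` output from stdin. VERSION is the fifth column in both the default and the `-o wide` layouts. `nodes_per_minor` is a hypothetical name.

```sh
# Count nodes per kubelet minor version from `kubectl get node` output.
nodes_per_minor() {
  awk '$1 != "NAME" && NF >= 5 {
         v = $5
         sub(/^v/, "", v)              # v1.26.15-eks-... -> 1.26.15-eks-...
         split(v, p, ".")              # p[1] = major, p[2] = minor
         count[p[1] "." p[2]]++
       }
       END { for (k in count) print k, count[k] }' | sort
}
```

For example, piping `kubectl get node -l type=OrdersMNG` into it during the migration should show one node on 1.25 and one on 1.26, matching the output above.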

- Set variables for green-mng and blue-mng

export BLUE_MNG=$(aws eks list-nodegroups --cluster-name eksworkshop-eksctl | jq -c .[] | jq -r 'to_entries[] | select( .value| test("blue-mng*")) | .value')
echo $BLUE_MNG
# Output: blue-mng-2025033004585862560000002c

export GREEN_MNG=$(aws eks list-nodegroups --cluster-name eksworkshop-eksctl | jq -c .[] | jq -r 'to_entries[] | select( .value| test("green-mng*")) | .value')
echo $GREEN_MNG
# Output: green-mng-20250401094211402300000007

- Update the orders Deployment (replicas 1 → 2)

cd ~/environment/eks-gitops-repo/
sed -i 's/replicas: 1/replicas: 2/' apps/orders/deployment.yaml
git add apps/orders/deployment.yaml
git commit -m "Increase orders replicas 2"
git push
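The `sed` expression above replaces the first `replicas: 1` it finds on each line, so a line with `replicas: 10` would also become `replicas: 20`. The sketch below is slightly stricter and sets the `replicas:` line to an exact value. `set_replicas` is a hypothetical helper. Like the original command, it rewrites every `replicas:` line in the file.

```sh
# Set every "replicas: <number>" line in manifest $1 to exactly $2,
# keeping the original indentation.
set_replicas() {
  tmp_file="$1.tmp.$$"
  sed -E "s/^([[:space:]]*replicas:)[[:space:]]*[0-9]+[[:space:]]*\$/\1 $2/" "$1" > "$tmp_file" &&
    mv "$tmp_file" "$1"
}
```

In this lab's steps, `set_replicas apps/orders/deployment.yaml 2` would stand in for the `sed -i` line before the commit.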

- argoCD orders sync 작업 진행

argocd app sync orders

base.tf수정 → 기존 blue-mng 제거~~blue-mng={ instance_types = ["m5.large", "m6a.large", "m6i.large"] cluster_version = "1.25" min_size = 1 max_size = 2 desired_size = 1 update_config = { max_unavailable_percentage = 35 } labels = { type = "OrdersMNG" } subnet_ids = [module.vpc.private_subnets[0]] taints = [ { key = "dedicated" value = "OrdersApp" effect = "NO_SCHEDULE" } ] }

- terraform 적용

cd ~/environment/terraform/ terraform plan && terraform apply -auto-approve

- 기존 노드 스케줄 중지 확인

NAME STATUS ROLES AGE VERSION ip-10-0-10-222.us-west-2.compute.internal Ready,SchedulingDisabled <none> 2d5h v1.25.16-eks-59bf375 ip-10-0-8-175.us-west-2.compute.internal Ready <none> 35m v1.26.15-eks-59bf375 NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES orders-5b97745747-85pzw 0/1 Running 0 4s 10.0.7.212 ip-10-0-8-175.us-west-2.compute.internal <none> <none> orders-5b97745747-czxl6 1/1 Running 0 6m 10.0.6.149 ip-10-0-8-175.us-west-2.compute.internal <none> <none> NAME READY UP-TO-DATE AVAILABLE AGE orders 1/2 2 1 2d5h

- orders 파드가 다른 노드로 옮겨짐

Tue Apr 1 10:19:35 UTC 2025 NAME STATUS ROLES AGE VERSION ip-10-0-10-222.us-west-2.compute.internal Ready,SchedulingDisabled <none> 2d5h v1.25.16-eks-59bf375 ip-10-0-8-175.us-west-2.compute.internal Ready <none> 36m v1.26.15-eks-59bf375 NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES orders-5b97745747-85pzw 0/1 Running 2 (38s ago) 59s 10.0.7.212 ip-10-0-8-175.us-west-2.compute.internal <none> <none> orders-5b97745747-czxl6 1/1 Running 0 6m55s 10.0.6.149 ip-10-0-8-175.us-west-2.compute.internal <none> <none> NAME READY UP-TO-DATE AVAILABLE AGE orders 1/2 2 1 2d5h

- 기존 파드 이동이 완료되면서 기존 노드가 삭제

ip-10-0-8-175.us-west-2.compute.internal Ready <none> 37m v1.26.15-eks-59bf375 NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES orders-5b97745747-85pzw 1/1 Running 2 (101s ago) 2m2s 10.0.7.212 ip-10-0-8-175.us-west-2.compute.internal <none> <none> orders-5b97745747-czxl6 1/1 Running 0 7m58s 10.0.6.149 ip-10-0-8-175.us-west-2.compute.internal <none> <none> NAME READY UP-TO-DATE AVAILABLE AGE orders 2/2 2 2 2d5h

- 이벤트 로그 확인

38m Normal Starting node/ip-10-0-8-175.us-west-2.compute.internal
2m46s Normal Starting node/ip-10-0-28-42.us-west-2.compute.internal
26m Normal Starting node/fargate-ip-10-0-18-197.us-west-2.compute.internal
38m Normal NodeAllocatableEnforced node/ip-10-0-8-175.us-west-2.compute.internal Updated Node Allocatable limit across pods
38m Normal Synced node/ip-10-0-8-175.us-west-2.compute.internal Node synced successfully
38m Normal Starting node/ip-10-0-8-175.us-west-2.compute.internal Starting kubelet.
38m Warning InvalidDiskCapacity node/ip-10-0-8-175.us-west-2.compute.internal invalid capacity 0 on image filesystem
38m Normal NodeHasSufficientMemory node/ip-10-0-8-175.us-west-2.compute.internal Node ip-10-0-8-175.us-west-2.compute.internal status is now: NodeHasSufficientMemory
38m Normal NodeHasNoDiskPressure node/ip-10-0-8-175.us-west-2.compute.internal Node ip-10-0-8-175.us-west-2.compute.internal status is now: NodeHasNoDiskPressure
38m Normal NodeHasSufficientPID node/ip-10-0-8-175.us-west-2.compute.internal Node ip-10-0-8-175.us-west-2.compute.internal status is now: NodeHasSufficientPID
38m Normal RegisteredNode node/ip-10-0-8-175.us-west-2.compute.internal Node ip-10-0-8-175.us-west-2.compute.internal event: Registered Node ip-10-0-8-175.us-west-2.compute.internal in Controller
38m Normal NodeReady node/ip-10-0-8-175.us-west-2.compute.internal Node ip-10-0-8-175.us-west-2.compute.internal status is now: NodeReady
26m Normal Starting node/fargate-ip-10-0-18-197.us-west-2.compute.internal Starting kubelet.
26m Warning InvalidDiskCapacity node/fargate-ip-10-0-18-197.us-west-2.compute.internal invalid capacity 0 on image filesystem
26m Normal NodeHasSufficientMemory node/fargate-ip-10-0-18-197.us-west-2.compute.internal Node fargate-ip-10-0-18-197.us-west-2.compute.internal status is now: NodeHasSufficientMemory
26m Normal NodeHasNoDiskPressure node/fargate-ip-10-0-18-197.us-west-2.compute.internal Node fargate-ip-10-0-18-197.us-west-2.compute.internal status is now: NodeHasNoDiskPressure
26m Normal NodeAllocatableEnforced node/fargate-ip-10-0-18-197.us-west-2.compute.internal Updated Node Allocatable limit across pods
26m Normal NodeHasSufficientPID node/fargate-ip-10-0-18-197.us-west-2.compute.internal Node fargate-ip-10-0-18-197.us-west-2.compute.internal status is now: NodeHasSufficientPID
26m Normal RegisteredNode node/fargate-ip-10-0-18-197.us-west-2.compute.internal Node fargate-ip-10-0-18-197.us-west-2.compute.internal event: Registered Node fargate-ip-10-0-18-197.us-west-2.compute.internal in Controller
26m Normal Synced node/fargate-ip-10-0-18-197.us-west-2.compute.internal Node synced successfully
26m Normal NodeReady node/fargate-ip-10-0-18-197.us-west-2.compute.internal Node fargate-ip-10-0-18-197.us-west-2.compute.internal status is now: NodeReady
26m Normal RemovingNode node/fargate-ip-10-0-6-242.us-west-2.compute.internal Node fargate-ip-10-0-6-242.us-west-2.compute.internal event: Removing Node fargate-ip-10-0-6-242.us-west-2.compute.internal from Controller
3m5s Normal NodeNotSchedulable node/ip-10-0-10-222.us-west-2.compute.internal Node ip-10-0-10-222.us-west-2.compute.internal status is now: NodeNotSchedulable
2m52s Normal NodeHasNoDiskPressure node/ip-10-0-28-42.us-west-2.compute.internal Node ip-10-0-28-42.us-west-2.compute.internal status is now: NodeHasNoDiskPressure
2m52s Normal NodeHasSufficientPID node/ip-10-0-28-42.us-west-2.compute.internal Node ip-10-0-28-42.us-west-2.compute.internal status is now: NodeHasSufficientPID
2m52s Normal NodeAllocatableEnforced node/ip-10-0-28-42.us-west-2.compute.internal Updated Node Allocatable limit across pods
2m52s Normal NodeHasSufficientMemory node/ip-10-0-28-42.us-west-2.compute.internal Node ip-10-0-28-42.us-west-2.compute.internal status is now: NodeHasSufficientMemory
2m52s Warning InvalidDiskCapacity node/ip-10-0-28-42.us-west-2.compute.internal invalid capacity 0 on image filesystem
2m52s Normal Starting node/ip-10-0-28-42.us-west-2.compute.internal Starting kubelet.
2m51s Normal Synced node/ip-10-0-28-42.us-west-2.compute.internal Node synced successfully
2m47s Normal RegisteredNode node/ip-10-0-28-42.us-west-2.compute.internal Node ip-10-0-28-42.us-west-2.compute.internal event: Registered Node ip-10-0-28-42.us-west-2.compute.internal in Controller
2m35s Normal NodeReady node/ip-10-0-28-42.us-west-2.compute.internal Node ip-10-0-28-42.us-west-2.compute.internal status is now: NodeReady
76s Normal NodeNotReady node/ip-10-0-10-222.us-west-2.compute.internal Node ip-10-0-10-222.us-west-2.compute.internal status is now: NodeNotReady
73s Normal DeletingNode node/ip-10-0-10-222.us-west-2.compute.internal Deleting node ip-10-0-10-222.us-west-2.compute.internal because it does not exist in the cloud provider
71s Normal RemovingNode node/ip-10-0-10-222.us-west-2.compute.internal Node ip-10-0-10-222.us-west-2.compute.internal event: Removing Node ip-10-0-10-222.us-west-2.compute.internal from Controller
70s Normal Unconsolidatable node/ip-10-0-20-192.us-west-2.compute.internal SpotToSpotConsolidation is disabled, can't replace a spot node with a spot node
70s Normal Unconsolidatable nodeclaim/default-6v8kl SpotToSpotConsolidation is disabled, can't replace a spot node with a spot node
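An event list this long is easier to read once it is filtered. Here is a minimal sketch. It assumes the output of `kubectl get events` was saved with one event per line in the default column order (LAST SEEN, TYPE, REASON, OBJECT, MESSAGE). The hypothetical `node_removals` function prints every node that has a RemovingNode or DeletingNode event, which gives you the nodes retired during the upgrade.

```sh
# Hypothetical helper: list nodes retired during the upgrade.
# Reads `kubectl get events` output (one event per line) on stdin and assumes
# the default column order LAST SEEN, TYPE, REASON, OBJECT, MESSAGE.
node_removals() {
  awk '$3 == "RemovingNode" || $3 == "DeletingNode" {
         obj = $4
         sub(/^node\//, "", obj)   # "node/ip-..." -> "ip-..."
         print obj
       }' | sort -u
}

# Usage:
#   kubectl get events > events.txt
#   node_removals < events.txt
```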

3.2) Karpenter node upgrade

Overview

Karpenter is a Kubernetes cluster autoscaler that simplifies infrastructure management. It detects pods that cannot be scheduled and dynamically provisions right-sized nodes for them.

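Key features

- Handles unschedulable pods
  - Scales out automatically, taking into account CPU, memory, and volume requests as well as scheduling constraints (affinity, topology spread, etc.)
- Operates independently of EC2 Auto Scaling Groups
  - Works separately from node groups and ASGs, which simplifies operations
  - Keeps nodes on current security patches and features, reducing the management burden
- AMI selection
  - Use an EKS-optimized AMI (AL2, AL2023, Bottlerocket, Ubuntu, Windows, etc.) or a custom AMI
  - A custom AMI requires amiSelectorTerms to be configured
- Automatic patching
  - Nodes provisioned by Karpenter are patched automatically through drift detection or a timer (expireAfter)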
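Here is how the timer idea works. When expireAfter is set, Karpenter replaces a node once it has been alive longer than that duration. The walkthrough below sets it to Never, which turns the timer off. Karpenter tracks node age itself from the node's creation time. The sketch below only mimics the comparison on the AGE strings that kubectl prints. Both function names are hypothetical, and only the d/h/m/s units are handled.

```sh
# Hypothetical helper: convert a kubectl AGE string ("2d5h", "8m31s", "56s")
# to seconds. Only d/h/m/s units are supported; anything else is rejected.
age_to_seconds() {
  printf '%s\n' "$1" | awk '
    $0 !~ /^([0-9]+[dhms])+$/ { exit 1 }   # unsupported format
    {
      total = 0
      rest = $0
      while (match(rest, /^[0-9]+[dhms]/)) {
        num  = substr(rest, 1, RLENGTH - 1) + 0
        unit = substr(rest, RLENGTH, 1)
        if (unit == "d")      total += num * 86400
        else if (unit == "h") total += num * 3600
        else if (unit == "m") total += num * 60
        else                  total += num
        rest = substr(rest, RLENGTH + 1)
      }
      print total
    }'
}

# Hypothetical helper: succeed when a node of age $1 has outlived an
# expireAfter-style limit $2 (both given as kubectl-style durations).
past_expiry() {
  age=$(age_to_seconds "$1") || return 2
  limit=$(age_to_seconds "$2") || return 2
  [ "$age" -ge "$limit" ]
}

# Example: past_expiry 2d5h 24h && echo "would be replaced"
```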

- Check the current nodes

kubectl get nodes -l team=checkout
## Output
NAME STATUS ROLES AGE VERSION
ip-10-0-20-192.us-west-2.compute.internal Ready <none> 2d5h v1.25.16-eks-59bf375

- Check the applied taints

kubectl get nodes -l team=checkout -o jsonpath="{range .items[*]}{.metadata.name} {.spec.taints[?(@.effect=='NoSchedule')]}{\"\n\"}{end}"
## Output

- List the pods in the checkout namespace

kubectl get pods -n checkout -o wide
## Output
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
checkout-558f7777c-5tpt9 1/1 Running 0 2d5h 10.0.25.210 ip-10-0-20-192.us-west-2.compute.internal <none> <none>
checkout-redis-f54bf7cb5-khx6c 1/1 Running 0 2d5h 10.0.26.220 ip-10-0-20-192.us-west-2.compute.internal <none> <none>

- Monitoring

while true; do kubectl get nodeclaim; echo ; kubectl get nodes -l team=checkout; echo ; kubectl get nodes -l team=checkout -o jsonpath="{range .items[*]}{.metadata.name} {.spec.taints}{\"\n\"}{end}"; echo ; kubectl get pods -n checkout -o wide; echo ; date; sleep 1; echo; done

- Scale out the checkout application (`deployment.yaml` in the `eks-gitops-repo/apps/checkout` folder)

replicas: 15 # changed from 1 to 15
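You can also script this edit instead of opening the file. The sketch below rewrites the first `replicas:` value in place and keeps indentation and trailing comments. `set_replicas` is a hypothetical helper. It assumes one Deployment per file, as in `apps/checkout/deployment.yaml`, and uses only POSIX sh and awk.

```sh
# Hypothetical helper: set the first "replicas:" value in a manifest file.
set_replicas() {
  file="$1"
  count="$2"
  case $count in
    ''|*[!0-9]*) echo "replica count must be a non-negative integer" >&2; return 1 ;;
  esac
  tmp=$(mktemp) || return 1
  # Only the first "replicas: <n>" line is rewritten; keys such as
  # minReplicas do not match because the key must follow leading whitespace.
  if awk -v n="$count" '
       !done && /^[ \t]*replicas:[ \t]*[0-9]+/ {
         sub(/replicas:[ \t]*[0-9]+/, "replicas: " n)
         done = 1
       }
       { print }
       END { exit (done ? 0 : 1) }' "$file" > "$tmp"
  then
    cat "$tmp" > "$file"   # overwrite in place, keeping the file's permissions
    rm -f "$tmp"
  else
    rm -f "$tmp"
    echo "no replicas: field found in $file" >&2
    return 1
  fi
}

# Usage (from ~/environment/eks-gitops-repo):
#   set_replicas apps/checkout/deployment.yaml 15
```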

- Commit and push the change

cd ~/environment/eks-gitops-repo
git add apps/checkout/deployment.yaml
git commit -m "scale checkout app"
git push --set-upstream origin main

- Sync with ArgoCD

argocd app sync checkout

- Check the Karpenter nodes again (a second node, 29s old, has been provisioned for the extra replicas)

kubectl get nodes -l team=checkout
NAME STATUS ROLES AGE VERSION
ip-10-0-20-192.us-west-2.compute.internal Ready <none> 2d5h v1.25.16-eks-59bf375
ip-10-0-6-135.us-west-2.compute.internal Ready <none> 29s v1.25.16-eks-59bf375

- Look up the AMI ID for the 1.26 upgrade

aws ssm get-parameter --name /aws/service/eks/optimized-ami/1.26/amazon-linux-2/recommended/image_id \
  --region ${AWS_REGION} --query "Parameter.Value" --output text
# Output
ami-086414611b43bb691
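You repeat the same lookup at every minor-version hop (1.26, 1.27, ...), so you can build the parameter name from the version. This sketch only covers the `amazon-linux-2` path layout used in the command above. Other AMI families use different parameter paths. `eks_ami_param` is a hypothetical name.

```sh
# Hypothetical helper: build the SSM public parameter name for the recommended
# EKS-optimized AMI of a Kubernetes minor version. Only the amazon-linux-2
# path layout used above is assumed; other AMI families use other paths.
eks_ami_param() {
  version="$1"
  case $version in
    1.[0-9]|1.[0-9][0-9]) ;;
    *) echo "expected a Kubernetes minor version such as 1.26" >&2; return 1 ;;
  esac
  printf '/aws/service/eks/optimized-ami/%s/amazon-linux-2/recommended/image_id\n' "$version"
}

# Usage for the next hop of the upgrade, e.g. 1.27:
#   aws ssm get-parameter --name "$(eks_ami_param 1.27)" \
#     --region "${AWS_REGION}" --query "Parameter.Value" --output text
```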

- Edit the AMI ID in `default-ec2nc.yaml`

  - id: "ami-086414611b43bb691" # 1.26 AMI
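You can also script the AMI change. `set_ami_id` is a hypothetical helper that replaces every `- id: "ami-..."` entry in a file and keeps indentation and trailing comments. It assumes the IDs are written on `- id:` lines, as in `default-ec2nc.yaml`.

```sh
# Hypothetical helper: replace every `- id: "ami-..."` entry in a file
# (e.g. apps/karpenter/default-ec2nc.yaml) with a new AMI ID.
set_ami_id() {
  file="$1"
  ami="$2"
  case $ami in
    ami-|ami-*[!0-9a-f]*) echo "not an AMI ID: $ami" >&2; return 1 ;;
    ami-*) ;;
    *) echo "not an AMI ID: $ami" >&2; return 1 ;;
  esac
  tmp=$(mktemp) || return 1
  if awk -v ami="$ami" '
       /^[ \t]*-[ \t]*id:[ \t]*"?ami-[0-9a-f]+"?/ {
         sub(/id:[ \t]*"?ami-[0-9a-f]+"?/, "id: \"" ami "\"")
         n++
       }
       { print }
       END { exit (n ? 0 : 1) }' "$file" > "$tmp"
  then
    cat "$tmp" > "$file"
    rm -f "$tmp"
  else
    rm -f "$tmp"
    echo "no '- id: ami-...' entry found in $file" >&2
    return 1
  fi
}

# Usage (from ~/environment/eks-gitops-repo):
#   set_ami_id apps/karpenter/default-ec2nc.yaml "$(aws ssm get-parameter \
#     --name /aws/service/eks/optimized-ami/1.26/amazon-linux-2/recommended/image_id \
#     --region "${AWS_REGION}" --query "Parameter.Value" --output text)"
```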

- Edit `default-np.yaml` so that nodes are replaced one at a time

spec:
  disruption:
    consolidationPolicy: WhenUnderutilized
    expireAfter: Never
    budgets: # add this block
      - nodes: "1"

- Start monitoring before applying the change

while true; do kubectl get nodeclaim; echo ; kubectl get nodes -l team=checkout; echo ; kubectl get nodes -l team=checkout -o jsonpath="{range .items[*]}{.metadata.name} {.spec.taints}{\"\n\"}{end}"; echo ; kubectl get pods -n checkout -o wide; echo ; date; sleep 1; echo; done

- Apply the Karpenter changes

cd ~/environment/eks-gitops-repo
git add apps/karpenter/default-ec2nc.yaml apps/karpenter/default-np.yaml
git commit -m "disruption changes"
git push --set-upstream origin main
argocd app sync karpenter

- Follow the Karpenter controller logs

kubectl stern -n karpenter deployment/karpenter -c controller

- Monitoring output during the rollout

NAME TYPE ZONE NODE READY AGE
default-5bhtl c5.large us-west-2a ip-10-0-6-135.us-west-2.compute.internal True 8m31s
default-6v8kl r4.large us-west-2b ip-10-0-20-192.us-west-2.compute.internal True 2d5h
default-w59p5 c4.large us-west-2b ip-10-0-22-247.us-west-2.compute.internal True 56s

NAME STATUS ROLES AGE VERSION
ip-10-0-20-192.us-west-2.compute.internal Ready <none> 2d5h v1.25.16-eks-59bf375
ip-10-0-22-247.us-west-2.compute.internal Ready <none> 24s v1.26.15-eks-59bf375
ip-10-0-6-135.us-west-2.compute.internal Ready <none> 8m7s v1.25.16-eks-59bf375

ip-10-0-20-192.us-west-2.compute.internal [{"effect":"NoSchedule","key":"dedicated","value":"CheckoutApp"},{"effect":"NoSchedule","key":"karpenter.sh/disruption","value":"disrupting"}]
ip-10-0-22-247.us-west-2.compute.internal [{"effect":"NoSchedule","key":"dedicated","value":"CheckoutApp"}]
ip-10-0-6-135.us-west-2.compute.internal [{"effect":"NoSchedule","key":"dedicated","value":"CheckoutApp"}]

- Monitoring output after the rollout completes

NAME TYPE ZONE NODE READY AGE
default-w59p5 c4.large us-west-2b ip-10-0-22-247.us-west-2.compute.internal True 3m54s
default-wtkwt c5.large us-west-2a ip-10-0-0-170.us-west-2.compute.internal True 2m40s

NAME STATUS ROLES AGE VERSION
ip-10-0-0-170.us-west-2.compute.internal Ready <none> 2m5s v1.26.15-eks-59bf375
ip-10-0-22-247.us-west-2.compute.internal Ready <none> 3m21s v1.26.15-eks-59bf375

ip-10-0-0-170.us-west-2.compute.internal [{"effect":"NoSchedule","key":"dedicated","value":"CheckoutApp"}]
ip-10-0-22-247.us-west-2.compute.internal [{"effect":"NoSchedule","key":"dedicated","value":"CheckoutApp"}]
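In the rollout output, the node being replaced is the one that carries the `karpenter.sh/disruption` taint. The sketch below picks those nodes out of the `<node-name> <taints-json>` lines printed by the monitoring loop. It assumes the compact JSON format shown in that output. `disrupting_nodes` is a hypothetical name. With the `nodes: "1"` budget, you would expect at most one name at a time.

```sh
# Hypothetical helper: print nodes tainted with karpenter.sh/disruption.
# Input: "<node-name> <taints-json>" lines, as printed by
#   kubectl get nodes -l team=checkout -o jsonpath="{range .items[*]}{.metadata.name} {.spec.taints}{\"\n\"}{end}"
disrupting_nodes() {
  awk 'index($0, "\"key\":\"karpenter.sh/disruption\"") > 0 { print $1 }'
}
```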

3.3) EKS self-managed node upgrade

- Check the current state

kubectl get nodes --show-labels | grep self-managed
## Output
ip-10-0-10-134.us-west-2.compute.internal Ready <none> 2d4h v1.25.16-eks-59bf375 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/instance-type=m5.large,beta.kubernetes.io/os=linux,failure-domain.beta.kubernetes.io/region=us-west-2,failure-domain.beta.kubernetes.io/zone=us-west-2a,k8s.io/cloud-provider-aws=a94967527effcefb5f5829f529c0a1b9,kubernetes.io/arch=amd64,kubernetes.io/hostname=ip-10-0-10-134.us-west-2.compute.internal,kubernetes.io/os=linux,node.kubernetes.io/instance-type=m5.large,node.kubernetes.io/lifecycle=self-managed,team=carts,topology.ebs.csi.aws.com/zone=us-west-2a,topology.kubernetes.io/region=us-west-2,topology.kubernetes.io/zone=us-west-2a
ip-10-0-46-171.us-west-2.compute.internal Ready <none> 2d4h v1.25.16-eks-59bf375 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/instance-type=m5.large,beta.kubernetes.io/os=linux,failure-domain.beta.kubernetes.io/region=us-west-2,failure-domain.beta.kubernetes.io/zone=us-west-2c,k8s.io/cloud-provider-aws=a94967527effcefb5f5829f529c0a1b9,kubernetes.io/arch=amd64,kubernetes.io/hostname=ip-10-0-46-171.us-west-2.compute.internal,kubernetes.io/os=linux,node.kubernetes.io/instance-type=m5.large,node.kubernetes.io/lifecycle=self-managed,team=carts,topology.ebs.csi.aws.com/zone=us-west-2c,topology.kubernetes.io/region=us-west-2,topology.kubernetes.io/zone=us-west-2c

kubectl get pods -n carts -o wide
## Output
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
carts-7ddbc698d8-vs95q 1/1 Running 1 (2d4h ago) 2d4h 10.0.47.8 ip-10-0-46-171.us-west-2.compute.internal <none> <none>
carts-dynamodb-6594f86bb9-8h4k9 1/1 Running 0 2d4h 10.0.13.242 ip-10-0-10-134.us-west-2.compute.internal <none> <none>

# Look up the AMI
aws ssm get-parameter --name /aws/service/eks/optimized-ami/1.26/amazon-linux-2/recommended/image_id --region $AWS_REGION --query "Parameter.Value" --output text
ami-xxxx

- Edit `base.tf`

self_managed_node_groups = {
  default-selfmng = {
    instance_type = "m5.large"
    min_size      = 1
    max_size      = 2
    desired_size  = 2

    # Additional configurations
    ami_id    = "ami-086414611b43bb691" # updated AMI
    disk_size = 100                     # Optional

    bootstrap_extra_args = "--kubelet-extra-args '--node-labels=node.kubernetes.io/lifecycle=self-managed,team=carts'"

    # Required for self-managed node groups
    create_launch_template          = true
    launch_template_use_name_prefix = true
  }
}

- Run terraform apply

# Monitoring
while true; do kubectl get nodes -l node.kubernetes.io/lifecycle=self-managed; echo ; aws ec2 describe-instances --query "Reservations[*].Instances[*].[Tags[?Key=='Name'].Value | [0], ImageId]" --filters "Name=tag:Name,Values=default-selfmng" --output table; echo ; date; sleep 1; echo; done

cd ~/environment/terraform/
terraform apply -auto-approve

- Verify

kubectl get nodes -l node.kubernetes.io/lifecycle=self-managed
## Output

aws ec2 describe-instances --query "Reservations[*].Instances[*].[Tags[?Key=='Name'].Value | [0], ImageId]" --filters "Name=tag:Name,Values=default-selfmng" --output table
## Output
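You can check the self-managed instances without reading the table by eye. Switch the same query to `--output text`, which prints one tab-separated `Name ImageId` row per instance. This is an assumption, since the walkthrough uses `--output table`. Then compare each row with the expected AMI. `stale_instances` is a hypothetical helper. It exits with status 1 if any instance still uses another AMI.

```sh
# Hypothetical helper: report instances that are not running the expected AMI.
# Input: tab-separated "<Name> <ImageId>" rows from
#   aws ec2 describe-instances ... --output text
stale_instances() {
  awk -F '\t' -v want="$1" 'NF >= 2 && $2 != want { print; bad = 1 }
                            END { exit (bad ? 1 : 0) }'
}

# Usage: restrict to running instances, because instances terminated by the
# rolling replacement can still be listed for a while.
#   aws ec2 describe-instances \
#     --query "Reservations[*].Instances[*].[Tags[?Key=='Name'].Value | [0], ImageId]" \
#     --filters "Name=tag:Name,Values=default-selfmng" "Name=instance-state-name,Values=running" \
#     --output text | stale_instances ami-086414611b43bb691
```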

3.4) Fargate node upgrade

- Check the current state

kubectl get pods -n assets -o wide
## Output
assets-7ccc84cb4d-qzfsj 1/1 Running 0 2d4h 10.0.6.242 fargate-ip-10-0-6-242.us-west-2.compute.internal <none> <none>

kubectl get node $(kubectl get pods -n assets -o jsonpath='{.items[0].spec.nodeName}') -o wide
## Output
NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
fargate-ip-10-0-6-242.us-west-2.compute.internal Ready <none> 2d4h v1.25.16-eks-2d5f260 10.0.6.242 <none> Amazon Linux 2 5.10.234-225.910.amzn2.x86_64 containerd://1.7.25

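- Restart the Deployment to move its pods to the new version. Each Fargate pod gets its own node, so recreating the pods places them on Fargate nodes running the upgraded version.

# Restart the Deployment
kubectl rollout restart deployment assets -n assets
# Wait until the new assets pods are Ready
kubectl wait --for=condition=Ready pods --all -n assets --timeout=180s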

- Once the new pod is Ready, check the version of its Fargate node

kubectl get pods -n assets -o wide
## Output
assets-dd595ff54-7z54b 1/1 Running 0 94s 10.0.18.197 fargate-ip-10-0-18-197.us-west-2.compute.internal <none> <none>

kubectl get node $(kubectl get pods -n assets -o jsonpath='{.items[0].spec.nodeName}') -o wide
## Output
NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
fargate-ip-10-0-18-197.us-west-2.compute.internal Ready <none> 70s v1.26.15-eks-2d5f260 10.0.18.197 <none> Amazon Linux 2 5.10.234-225.910.amzn2.x86_64 containerd://1.7.25

4) Verify the data plane

kubectl get node

## Output

fargate-ip-10-0-18-197.us-west-2.compute.internal Ready <none> 49m v1.26.15-eks-2d5f260

ip-10-0-0-170.us-west-2.compute.internal Ready <none> 3m31s v1.26.15-eks-59bf375

ip-10-0-13-56.us-west-2.compute.internal Ready <none> 3h10m v1.26.15-eks-59bf375

ip-10-0-22-247.us-west-2.compute.internal Ready <none> 4m47s v1.26.15-eks-59bf375

ip-10-0-26-122.us-west-2.compute.internal Ready <none> 3h11m v1.26.15-eks-59bf375

ip-10-0-28-42.us-west-2.compute.internal Ready <none> 25m v1.26.15-eks-59bf375

ip-10-0-37-112.us-west-2.compute.internal Ready <none> 20m v1.26.15-eks-59bf375

ip-10-0-8-175.us-west-2.compute.internal Ready <none> 61m v1.26.15-eks-59bf375
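With eight nodes of four kinds, it is easy to miss a wrong value when you check the VERSION column by eye. Here is a minimal sketch. The hypothetical `nodes_not_on` function reads `kubectl get node` output and prints any node whose kubelet is not on the target minor version. It exits non-zero if it finds one. It assumes VERSION is the fifth column, which still holds when `--label-columns` appends extra columns on the right.

```sh
# Hypothetical helper: list nodes whose kubelet is not on the target minor version.
# Input: `kubectl get node` output (header optional); VERSION is assumed to be column 5.
nodes_not_on() {
  awk -v want="v$1." '
    $1 == "NAME" { next }   # skip the header row
    NF >= 5 && index($5, want) != 1 { print $1 ": " $5; bad = 1 }
    END { exit (bad ? 1 : 0) }'
}

# Usage:
#   kubectl get node | nodes_not_on 1.26 && echo "all nodes are on 1.26"
```

The trailing dot in `v1.26.` keeps a target such as `1.2` from matching `v1.26.x` by prefix.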

kubectl get node --label-columns=eks.amazonaws.com/capacityType,node.kubernetes.io/lifecycle,karpenter.sh/capacity-type,eks.amazonaws.com/compute-type

## Output

NAME STATUS ROLES AGE VERSION CAPACITYTYPE LIFECYCLE CAPACITY-TYPE COMPUTE-TYPE

fargate-ip-10-0-18-197.us-west-2.compute.internal Ready <none> 50m v1.26.15-eks-2d5f260 fargate

ip-10-0-0-170.us-west-2.compute.internal Ready <none> 4m42s v1.26.15-eks-59bf375 spot

ip-10-0-13-56.us-west-2.compute.internal Ready <none> 3h11m v1.26.15-eks-59bf375 ON_DEMAND

ip-10-0-22-247.us-west-2.compute.internal Ready <none> 5m58s v1.26.15-eks-59bf375 spot

ip-10-0-26-122.us-west-2.compute.internal Ready <none> 3h13m v1.26.15-eks-59bf375 ON_DEMAND

ip-10-0-28-42.us-west-2.compute.internal Ready <none> 26m v1.26.15-eks-59bf375 self-managed

ip-10-0-37-112.us-west-2.compute.internal Ready <none> 21m v1.26.15-eks-59bf375 self-managed

ip-10-0-8-175.us-west-2.compute.internal Ready <none> 62m v1.26.15-eks-59bf375 ON_DEMAND

kubectl get node -L eks.amazonaws.com/nodegroup,karpenter.sh/nodepool

## Output

fargate-ip-10-0-18-197.us-west-2.compute.internal Ready <none> 51m v1.26.15-eks-2d5f260

ip-10-0-0-170.us-west-2.compute.internal Ready <none> 5m9s v1.26.15-eks-59bf375 default

ip-10-0-13-56.us-west-2.compute.internal Ready <none> 3h12m v1.26.15-eks-59bf375 initial-2025033004585862020000002a

ip-10-0-22-247.us-west-2.compute.internal Ready <none> 6m25s v1.26.15-eks-59bf375 default

ip-10-0-26-122.us-west-2.compute.internal Ready <none> 3h13m v1.26.15-eks-59bf375 initial-2025033004585862020000002a

ip-10-0-28-42.us-west-2.compute.internal Ready <none> 27m v1.26.15-eks-59bf375

ip-10-0-37-112.us-west-2.compute.internal Ready <none> 21m v1.26.15-eks-59bf375

ip-10-0-8-175.us-west-2.compute.internal Ready <none> 62m v1.26.15-eks-59bf375 green-mng-20250401094211402300000007

kubectl get node --label-columns=node.kubernetes.io/instance-type,kubernetes.io/arch,kubernetes.io/os,topology.kubernetes.io/zone

## Output

fargate-ip-10-0-18-197.us-west-2.compute.internal Ready <none> 51m v1.26.15-eks-2d5f260 amd64 linux us-west-2b

ip-10-0-0-170.us-west-2.compute.internal Ready <none> 5m30s v1.26.15-eks-59bf375 c5.large amd64 linux us-west-2a

ip-10-0-13-56.us-west-2.compute.internal Ready <none> 3h12m v1.26.15-eks-59bf375 m5.large amd64 linux us-west-2a

ip-10-0-22-247.us-west-2.compute.internal Ready <none> 6m46s v1.26.15-eks-59bf375 c4.large amd64 linux us-west-2b

ip-10-0-26-122.us-west-2.compute.internal Ready <none> 3h13m v1.26.15-eks-59bf375 m5.large amd64 linux us-west-2b

ip-10-0-28-42.us-west-2.compute.internal Ready <none> 27m v1.26.15-eks-59bf375 m5.large amd64 linux us-west-2b

ip-10-0-37-112.us-west-2.compute.internal Ready <none> 22m v1.26.15-eks-59bf375 m5.large amd64 linux us-west-2c

ip-10-0-8-175.us-west-2.compute.internal Ready <none> 63m v1.26.15-eks-59bf375 m5.large amd64 linux us-west-2a