This article is about conditioned generation with diffusion model.

Diffusion Models Beat GANs on Image Synthesis is the first work of guiding image synthesis with classifier guidance, while recent advancement includes another approaches such as classifier-free guidance.

1. Recap: Diffusion model

Generative modeling in diffusion model can be understood by learning reversed gradual noising process, intuitively corresponds to the denoising action. Ho et al. formulated the variational lower bound loss into a simple training objecive which is a mean-squared error loss between the true noise and the predicted noise.

Remark:

Estimating a noise also has a linkage with Song et al., which aims at estimating gradients of the data distribution via score matching.

Sampling from a noise predictor can be done by learning a gaussian transition kernel of reverse diffusion process, where the mean can be calculated as a function of while variance can be fixed into a known constant or learned with separate neural network.

There were some improvements after Ho et al., for instance Nichol and Dhariwal found that fixing the variance is suboptimal for sampling with fewer diffusion steps. They proposed a parametrization of by interpolating between with learned scalar , i.e.

2. Classifier guidance

GANs for conditional image synthesis heavily exploit class labels to add class-conditoinal normalization statistics as well as "discriminators" which explicitly behave like classifiers . Conditional diffusion models resemble this philosophy, especially incorporate class information into normalization layers and exploit classifier to improve a diffusion generator.

Simply, adaptive group normalization(AdaGN) layer is proposed to incorporate class information into normalization layers by explicitly performing a transformation of

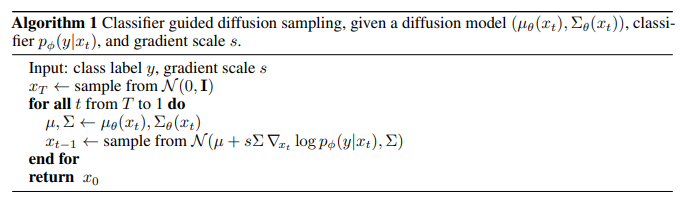

In this paper, authors introduce two methods of conditional sampling using classifiers. Each of the method is applied at DDPM and DDIM, respectively.

Conditional Reverse Noising Process

Classifier guided diffusion sampling follows the equation above. In this section, we will discuss about mathematical justification of this sampling process.

Unconditional reverse noising process at conventional diffusion model, should be conditioned on a label . We can think of a classifier to guide each transition into , where is a normalization constant. It is mathematically intractable for the most cases, but it can be approximated as a perturbed guassian distribution with shifted mean.

We can approximate the log of class probability with taylor expansion under assumption that has low curvature compared to .

Here, we substitute to obtain .

This gives

In detail, authors introduced an additional parameter to rescale classifier gradients. So, the clasifier guided diffusion sampling can be done through the following process.

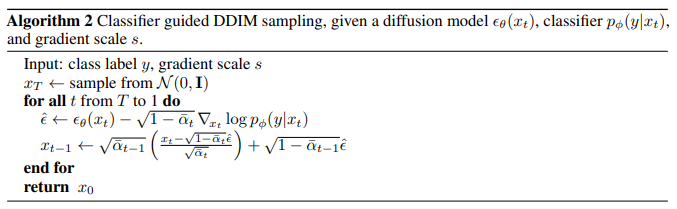

Conditional Sampling for DDIM

Previosly described conditional sampling is invalid for deterministic sampling methods like DDIM. We can adopt a score-based conditioning trick to derive a new formulation of estimated score.

At score-based generative modeling, we have a noise predictor which is used to derive a score function

We can derive a score function for

We obtain a new predictor which corresponds to the score of joint distribuiton: . Thus, conditional sapmling for DDIM can be done with the following process.

3. Next article

In this article, we discussed about conditional generation with diffusion model. DDPM have been already discussed in the previous article, but DDIM and the concept of score-matching is weakly introduced.

So in the next article, we'll be able to reveal the relationship between DDPM and energy-based models, including noise-conditoned score networks(NCSN) proposed at Song et al. and DDIM.