from sklearn.model_selection import GridSearchCV

from sklearn.model_selection import RandomizedSearchCV
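As a rough illustration of what the two search strategies imported above do, the following is a minimal standard-library sketch, not scikit-learn's implementation. The `param_grid` values, the `toy_score` function (a stand-in for a cross-validated score), and the helper names are all hypothetical. Note that in both loops the next candidate is chosen without looking at any earlier score.

```python
import itertools
import random

# Hypothetical search space and scoring function, for illustration only.
param_grid = {"n_estimators": [100, 500, 1000], "max_depth": [2, 8, 32]}


def toy_score(params):
    """Stand-in for a cross-validated score; best at n_estimators=500, max_depth=8."""
    return -(abs(params["n_estimators"] - 500) / 1000 + abs(params["max_depth"] - 8) / 32)


def grid_search(grid, score_fn):
    """Evaluate every combination in the grid (exhaustive search)."""
    names = list(grid)
    trials = []
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        trials.append((params, score_fn(params)))
    return trials


def random_search(score_fn, n_iter, seed=0):
    """Evaluate n_iter combinations drawn independently at random."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_iter):
        params = {
            "n_estimators": rng.randrange(100, 2001, 100),
            "max_depth": rng.randint(2, 64),
        }
        trials.append((params, score_fn(params)))
    return trials


grid_trials = grid_search(param_grid, toy_score)
random_trials = random_search(toy_score, n_iter=5)
grid_best = max(grid_trials, key=lambda t: t[1])
random_best = max(random_trials, key=lambda t: t[1])
print("grid best:", grid_best)
print("random best:", random_best)
```

Grid search costs one evaluation per combination (9 here), while random search costs exactly `n_iter` evaluations regardless of how many parameters are tuned.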

Bayesian Optimization

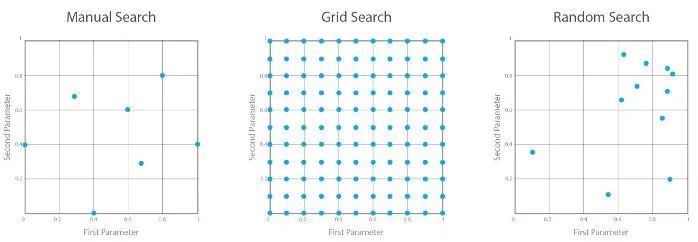

- Grid Search and Random Search are inefficient in one respect: they make no use of 'prior knowledge', that is, the performance results already obtained for the hyperparameter values tried so far.

- Bayesian Optimization is a method that takes this 'prior knowledge' fully into account each time it picks a new hyperparameter value to evaluate, while still carrying out the overall search in a systematic way.

- https://github.com/fmfn/BayesianOptimization

- Bayes Optimization, from the basics to XGB
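To make the idea of reusing 'prior knowledge' concrete, here is a minimal standard-library sketch of a Bayesian optimization loop over a single hyperparameter. It is an illustration under stated assumptions, not the algorithm used by the libraries linked above: it fits a zero-mean Gaussian process with an RBF kernel to all scores seen so far and picks the next candidate by an upper confidence bound (mean + `kappa` × standard deviation). The `toy_score` function, the candidate grid, and every helper name are hypothetical.

```python
import math


def toy_score(x):
    """Stand-in for a cross-validated score; peaks at x = 2.0 (hypothetical)."""
    return math.exp(-((x - 2.0) ** 2))


def rbf(a, b, length=1.0):
    """RBF (squared-exponential) kernel between two scalars."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [A[i][:] + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def posterior(xs, ys, x_new, noise=1e-4):
    """Posterior mean and standard deviation of the GP at x_new."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k_star = [rbf(a, x_new) for a in xs]
    mean = sum(k * w for k, w in zip(k_star, solve(K, ys)))
    var = 1.0 - sum(k * w for k, w in zip(k_star, solve(K, k_star)))
    return mean, math.sqrt(max(var, 0.0))


def bayes_opt(score_fn, candidates, n_iter=10, kappa=2.0):
    """Sequentially pick the candidate with the highest upper confidence bound."""
    xs = [candidates[0], candidates[-1]]  # two initial evaluations at the ends
    ys = [score_fn(x) for x in xs]
    for _ in range(n_iter):
        untried = [c for c in candidates if c not in xs]

        def ucb(c):
            mean, std = posterior(xs, ys, c)  # uses every past result
            return mean + kappa * std

        x_next = max(untried, key=ucb)
        xs.append(x_next)
        ys.append(score_fn(x_next))
    best = max(range(len(xs)), key=lambda i: ys[i])
    return xs[best], ys[best], list(zip(xs, ys))


candidates = [i * 0.05 for i in range(101)]  # search range [0, 5]
best_x, best_y, history = bayes_opt(toy_score, candidates)
print(f"best x = {best_x:.2f}, score = {best_y:.3f}")
```

The `kappa` term trades off exploration (high uncertainty) against exploitation (high predicted score); unlike grid or random search, each new point depends on the full evaluation history.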

- Bayesian Optimization with Optuna

You can optimize Scikit-Learn hyperparameters in three steps:

1. Wrap model training with an objective function and return accuracy

2. Suggest hyperparameters using a trial object

3. Create a study object and execute the optimization

import optuna
from sklearn import datasets, ensemble, model_selection

# Assumption: the original does not define X and y; the iris dataset is
# used here only as a placeholder. Substitute your own features and labels.
X, y = datasets.load_iris(return_X_y=True)


def objective(trial):
    # optuna.trial.Trial.suggest_categorical() for categorical parameters
    # optuna.trial.Trial.suggest_int() for integer parameters
    # optuna.trial.Trial.suggest_float() for floating point parameters
    rf_n_estimators = trial.suggest_int("n_estimators", 100, 2000, step=100)
    rf_max_depth = trial.suggest_int("max_depth", 2, 64, log=True)
    rf_criterion = trial.suggest_categorical("criterion", ["gini", "entropy"])

    classifier_obj = ensemble.RandomForestClassifier(
        n_estimators=rf_n_estimators,
        max_depth=rf_max_depth,
        criterion=rf_criterion,
        n_jobs=-1,
        random_state=0,
    )

    # Mean 5-fold cross-validated accuracy is the value Optuna maximizes.
    score = model_selection.cross_val_score(classifier_obj, X, y, cv=5, n_jobs=-1)
    accuracy = score.mean()
    return accuracy


study = optuna.create_study(direction="maximize")
study.optimize(objective, n_trials=72)

# Best trial found, including its parameters and accuracy.
print(study.best_trial)
