[Tensorflow] 2. Convolutional Neural Networks in Tensorflow (4 week Multiclass Classifications) - Programming


Multi-class classifier

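- Here we look at how to build a model that distinguishes between more than two classes. The code is similar to the code used previously, except for a few key changes to the model and the training parameters.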
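To make one of those changes concrete before the walkthrough: with more than two classes, each label becomes a one-hot vector, and the loss compares it against one predicted probability per class. The following is a minimal plain-Python sketch of that idea, not the Keras implementation. The helper names `one_hot` and `categorical_crossentropy` and the sample probabilities are made up for illustration.

```python
import math

def one_hot(index, num_classes):
    """Return a one-hot list with 1.0 at position `index`."""
    return [1.0 if i == index else 0.0 for i in range(num_classes)]

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Cross-entropy between a one-hot label and predicted probabilities."""
    # Clip predictions away from 0 so log() stays finite.
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))

label = one_hot(1, 3)  # hypothetical sample whose true class is index 1
confident = categorical_crossentropy(label, [0.1, 0.8, 0.1])
unsure = categorical_crossentropy(label, [0.4, 0.3, 0.3])
print(label, round(confident, 4), round(unsure, 4))
```

The loss is smaller when more probability is placed on the true class, which is what training pushes the network towards.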

[1] Download & prepare the dataset

import requests

# Training and test archives for the rock-paper-scissors dataset
url_1 = 'https://storage.googleapis.com/tensorflow-1-public/course2/week4/rps.zip'
fileName_1 = 'rps.zip'
url_2 = 'https://storage.googleapis.com/tensorflow-1-public/course2/week4/rps-test-set.zip'
fileName_2 = 'rps-test-set.zip'

# Download each archive and write it to disk
response = requests.get(url_1)
with open(fileName_1, 'wb') as f:
    f.write(response.content)

response = requests.get(url_2)
with open(fileName_2, 'wb') as f:
    f.write(response.content)

import zipfile

# Extract the training set
local_zip = './rps.zip'
zip_ref = zipfile.ZipFile(local_zip, 'r')
zip_ref.extractall('./rps-train')
zip_ref.close()

# Extract the test set
local_zip = './rps-test-set.zip'
zip_ref = zipfile.ZipFile(local_zip, 'r')
zip_ref.extractall('./rps-test')
zip_ref.close()

import os

# One subdirectory per class
base_dir = './rps-train/rps/'
rock_dir = os.path.join(base_dir, 'rock')
paper_dir = os.path.join(base_dir, 'paper')
scissors_dir = os.path.join(base_dir, 'scissors')

print(rock_dir)
print(paper_dir)
print(scissors_dir)

# Number of training images per class
print('total training rock images:', len(os.listdir(rock_dir)))
print('total training paper images:', len(os.listdir(paper_dir)))
print('total training scissors images:', len(os.listdir(scissors_dir)))

# First ten file names of each class
rock_files = os.listdir(rock_dir)
print(rock_files[:10])
paper_files = os.listdir(paper_dir)
print(paper_files[:10])
scissors_files = os.listdir(scissors_dir)
print(scissors_files[:10])

# output

./rps-train/rps/rock

./rps-train/rps/paper

./rps-train/rps/scissors

total training rock images: 840

total training paper images: 840

total training scissors images: 840

['rock04-059.png', 'rock01-108.png', 'rock04-065.png', 'rock05ck01-067.png', 'rock05ck01-073.png', 'rock04-071.png', 'rock05ck01-098.png', 'rock02-008.png', 'rock07-k03-013.png', 'rock02-034.png']

['paper03-088.png', 'paper05-026.png', 'paper05-032.png', 'paper03-077.png', 'paper03-063.png', 'paper02-099.png', 'paper04-037.png', 'paper04-023.png', 'paper02-066.png', 'paper02-072.png']

['testscissors03-040.png', 'testscissors03-054.png', 'testscissors03-068.png', 'testscissors03-083.png', 'testscissors03-097.png', 'scissors03-113.png', 'scissors03-107.png', 'testscissors02-051.png', 'testscissors02-045.png', 'scissors01-002.png']

[2] Model build & compile

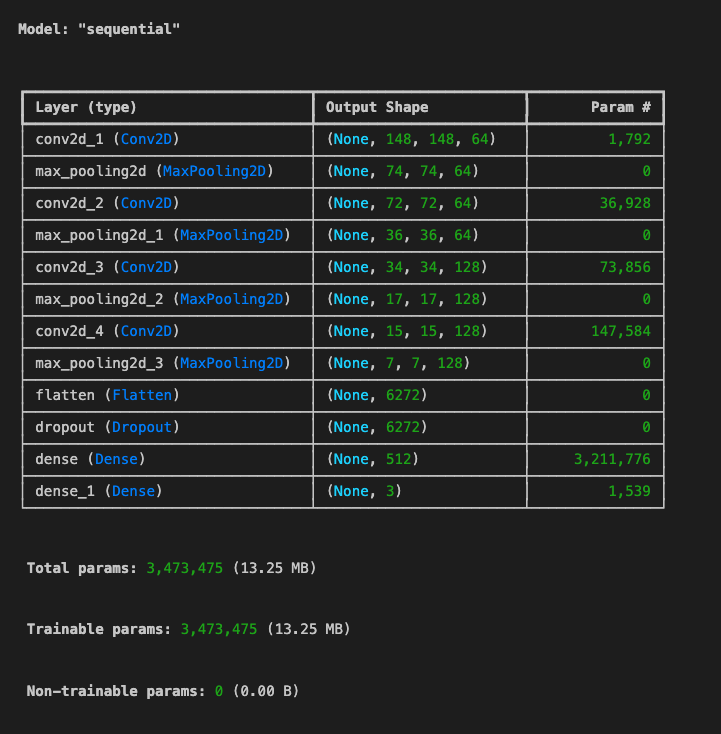

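- We build a CNN with four convolutional layers using 64-64-128-128 filters, then add a Dropout layer to reduce overfitting and some Dense layers for classification.

- The output layer is a Dense layer of 3 neurons activated by Softmax, which scales the outputs into a set of probabilities that sum to 1.

- The order of the 3 outputs is paper, rock, scissors, following the alphabetical order of the class directory names. For example, an output of [0.8 0.2 0.0] means the model predicts an 80% probability of paper and a 20% probability of rock.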
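As a rough illustration of what the Softmax activation does to the 3 output neurons, here is a plain-Python sketch (not the Keras code path). The raw scores are hypothetical values chosen for the example.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    # Subtracting the max keeps exp() numerically stable; the result is unchanged.
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for the 3 output neurons.
probs = softmax([2.0, 0.5, -1.0])
print([round(p, 3) for p in probs])
print('sum:', round(sum(probs), 6))
```

The largest raw score receives the largest probability, and every output stays strictly between 0 and 1.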

You can inspect the architecture with model.summary() below.

import tensorflow as tf

model = tf.keras.models.Sequential([
    # Four convolution + pooling stages on 150x150 RGB input
    tf.keras.layers.Conv2D(64, (3,3), input_shape=(150,150,3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2,2),
    tf.keras.layers.Conv2D(64, (3,3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2,2),
    tf.keras.layers.Conv2D(128, (3,3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2,2),
    tf.keras.layers.Conv2D(128, (3,3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2,2),
    # Flatten, apply dropout, then classify
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(512, activation='relu'),
    # One output per class: paper, rock, scissors
    tf.keras.layers.Dense(3, activation='softmax')
])

model.summary()
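The shapes and parameter counts that model.summary() reports can be checked by hand. The sketch below assumes the Keras defaults for these layers (3x3 convolutions with 'valid' padding and stride 1, 2x2 pooling with stride 2). The helper names `conv_out` and `pool_out` are illustrative only.

```python
def conv_out(size, kernel=3):
    """Output size of a 'valid' convolution with stride 1."""
    return size - kernel + 1

def pool_out(size, pool=2):
    """Output size of max pooling whose stride equals the pool size."""
    return size // pool

size, channels = 150, 3
total_params = 0
for filters in [64, 64, 128, 128]:
    # Each filter has 3*3*channels weights plus one bias.
    total_params += (3 * 3 * channels + 1) * filters
    size = pool_out(conv_out(size))
    channels = filters
    print(f'after conv+pool with {filters} filters: {size}x{size}x{channels}')

flat = size * size * channels
total_params += (flat + 1) * 512  # Dense(512): weights plus biases
total_params += (512 + 1) * 3     # Dense(3): weights plus biases
print('flattened features:', flat)
print('total parameters:', total_params)
```

Dropout and Flatten add no parameters, so the totals printed here should agree with the summary if the defaults above hold.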

Compile the model.

model.compile(
    loss='categorical_crossentropy',  # labels are one-hot encoded
    optimizer='rmsprop',
    metrics=['accuracy']
)

[3] Create the ImageDataGenerator instances

from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Training images are rescaled and augmented
train_datagen = ImageDataGenerator(
    rescale=1./255.,
    rotation_range=40,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    horizontal_flip=True,
    fill_mode='nearest',
    zoom_range=0.2
)

# Validation images are only rescaled
test_datagen = ImageDataGenerator(
    rescale=1./255.
)

train_generator = train_datagen.flow_from_directory(
    'rps-train/rps',
    target_size=(150,150),
    batch_size=126,
    class_mode='categorical'
)

validation_generator = test_datagen.flow_from_directory(
    'rps-test/rps-test-set/',
    target_size=(150,150),
    batch_size=126,
    class_mode='categorical',
)

# output

Found 2520 images belonging to 3 classes.

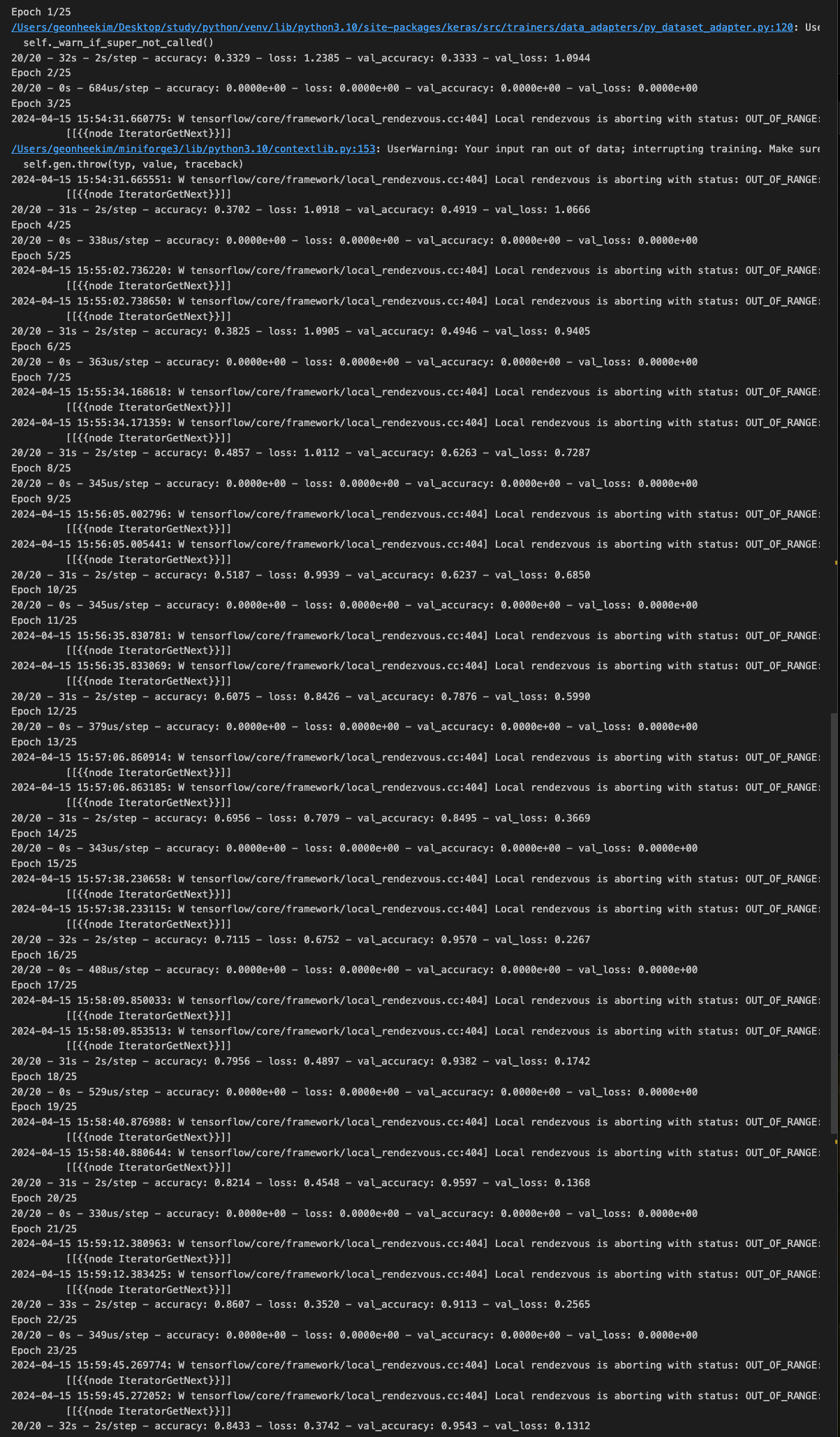

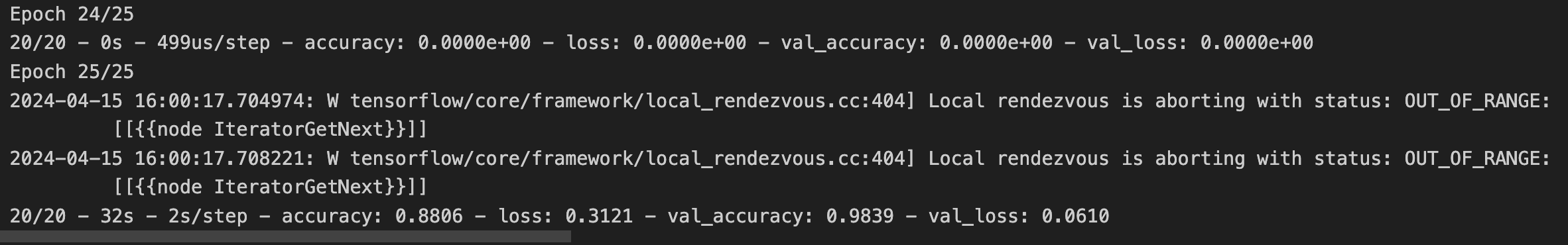

Found 372 images belonging to 3 classes.

[4] Model training

history = model.fit(train_generator,
                    steps_per_epoch=20,
                    validation_data=validation_generator,
                    epochs=25,
                    validation_steps=3,
                    verbose=2)

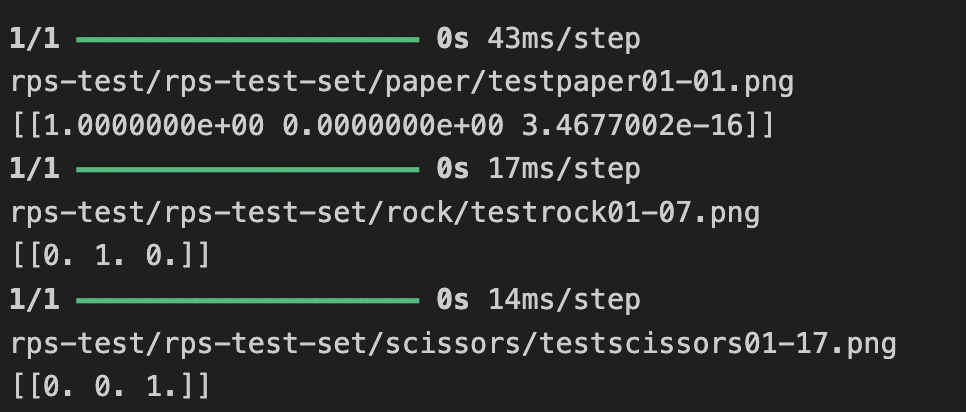
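The values steps_per_epoch=20 and validation_steps=3 are consistent with the image counts reported by the generators and the batch size of 126. A small sketch of that arithmetic, with `steps_for` as an illustrative helper name:

```python
import math

def steps_for(num_images, batch_size):
    """Number of batches needed to see every image once."""
    return math.ceil(num_images / batch_size)

train_steps = steps_for(2520, 126)  # 2520 training images found by the generator
val_steps = steps_for(372, 126)     # 372 validation images found by the generator
print(train_steps, val_steps)
```

The validation set does not divide evenly by 126, so the last validation batch is smaller than the others.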

[5] Model Prediction

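import numpy as np
from tensorflow.keras.utils import load_img, img_to_array

file1 = 'rps-test/rps-test-set/paper/testpaper01-01.png'
file2 = 'rps-test/rps-test-set/rock/testrock01-07.png'
file3 = 'rps-test/rps-test-set/scissors/testscissors01-17.png'

for file in [file1, file2, file3]:
    # Load the image at the size the model expects
    img = load_img(file, target_size=(150,150))
    x = img_to_array(img)
    # Match the rescale=1./255 applied by the generators during training
    x = x / 255.0
    # Add a batch dimension: (150, 150, 3) -> (1, 150, 150, 3)
    x = np.expand_dims(x, axis=0)
    images = np.vstack([x])

    # One row of probabilities in the order paper, rock, scissors
    classes = model.predict(images, batch_size=10)
    print(file)
    print(classes)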
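The printed probabilities can be turned into class names by taking the index of the largest value in each row. The sketch below uses plain Python lists and hypothetical probabilities in place of a real model.predict() result. The `decode` helper is an illustrative name, and the class order is assumed to be the alphabetical directory order that flow_from_directory assigns.

```python
# Alphabetical directory order, which flow_from_directory uses for class indices.
class_names = ['paper', 'rock', 'scissors']

def decode(prob_rows, names=class_names):
    """Map each row of class probabilities to (label, probability)."""
    results = []
    for row in prob_rows:
        best = max(range(len(row)), key=lambda i: row[i])
        results.append((names[best], row[best]))
    return results

# Hypothetical probabilities standing in for model.predict(images).tolist().
fake_predictions = [[0.8, 0.2, 0.0], [0.1, 0.7, 0.2]]
print(decode(fake_predictions))
```

With the trained model, the actual name-to-index mapping can be read from train_generator.class_indices rather than hard-coded.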