Lab 2

--- Service

vi nginx-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx-pod
spec:
  containers:
  - name: nginx-pod-container
    image: nginx
    ports:
    - containerPort: 80 # the stock nginx image listens on 80

- Apply

$ kubectl apply -f nginx-pod.yaml

$ kubectl get pod -o wide

$ kubectl describe pod nginx-pod

vi clusterip-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: clusterip-service-pod
spec:
  type: ClusterIP
  externalIPs:
  - 192.168.56.101
  selector:
    app: nginx-pod # must match the label on nginx-pod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

- Apply

$ kubectl apply -f clusterip-svc.yaml

$ kubectl get svc -o wide

$ kubectl describe svc clusterip-service-pod

$ kubectl edit svc clusterip-service-pod

vi nodeport-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: nodeport-service-pod
spec:
  type: NodePort
  selector:
    app: nginx-pod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80 # must match the container port
    nodePort: 30080

- Apply

$ kubectl apply -f nodeport-svc.yaml

$ kubectl get svc -o wide

$ kubectl describe svc nodeport-service-pod

$ kubectl edit svc nodeport-service-pod
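
The three port fields of a NodePort Service are easy to mix up. The fragment below annotates the ports entry of nodeport-svc.yaml as a sketch, assuming the nginx container listens on its default port 80; `<cluster-ip>` and `<node-ip>` are placeholders, not values from these notes.

```yaml
# Annotated ports entry of a NodePort Service (sketch, not a full manifest).
spec:
  type: NodePort
  ports:
  - protocol: TCP
    port: 80          # Service port: reached inside the cluster at <cluster-ip>:80
    targetPort: 80    # port on the selected pods that receives the traffic
    nodePort: 30080   # port opened on every node: <node-ip>:30080
```

If nodePort is omitted, Kubernetes picks a free port from the node port range (30000-32767 by default).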

# Show labels as well

$ kubectl get pod -o wide --show-labels

vi loadbalancer-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: loadbalancer-service-pod
spec:
  type: LoadBalancer
  externalIPs:
  - 192.168.1.188
  - 192.168.1.211
  - 192.168.1.216
  selector:
    app: nginx-pod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

- Apply

$ kubectl apply -f loadbalancer-svc.yaml

$ kubectl get svc -o wide

$ kubectl describe svc loadbalancer-service-pod

--- ReplicaSet

vi replicaset.yaml

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: nginx-replicaset
spec:
  replicas: 3 # desired state (reconciled by kube-controller-manager)
  selector:
    matchLabels:
      app: nginx-replicaset
  template:
    metadata:
      name: nginx-replicaset
      labels:
        app: nginx-replicaset
    spec:
      containers:
      - name: nginx-replicaset-container
        image: nginx
        ports:
        - containerPort: 80

- Apply

$ kubectl apply -f replicaset.yaml

$ kubectl get replicasets.apps -o wide

$ kubectl describe replicasets.apps nginx-replicaset

vi clusterip-replicaset.yaml

apiVersion: v1
kind: Service
metadata:
  name: clusterip-service-replicaset
spec:
  type: ClusterIP
  selector:
    app: nginx-replicaset
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

- Apply

$ kubectl apply -f clusterip-replicaset.yaml

$ kubectl get svc -o wide

$ kubectl describe svc clusterip-service-replicaset

vi nodeport-replicaset.yaml

apiVersion: v1
kind: Service
metadata:
  name: nodeport-service-replicaset
spec:
  type: NodePort
  selector:
    app: nginx-replicaset
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30080

- Apply

$ kubectl apply -f nodeport-replicaset.yaml

$ kubectl get svc -o wide

$ kubectl describe svc nodeport-service-replicaset

vi loadbalancer-replicaset.yaml

apiVersion: v1
kind: Service
metadata:
  name: loadbalancer-service-replicaset
spec:
  type: LoadBalancer
  externalIPs:
  - 192.168.1.125
  #- 192.168.1.211
  #- 192.168.1.216
  selector:
    app: nginx-replicaset
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

- Apply

$ kubectl apply -f loadbalancer-replicaset.yaml

$ kubectl get svc -o wide

$ kubectl describe svc loadbalancer-service-replicaset

--- Deployment

vi deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-deployment
  template:
    metadata:
      name: nginx-deployment
      labels:
        app: nginx-deployment
    spec:
      containers:
      - name: nginx-deployment-container
        image: nginx
        ports:
        - containerPort: 80

- Apply

$ kubectl apply -f deployment.yaml

$ kubectl get deployments.apps -o wide

$ kubectl describe deployments.apps nginx-deployment

vi clusterip-deployment.yaml

apiVersion: v1
kind: Service
metadata:
  name: clusterip-service-deployment
spec:
  type: ClusterIP
  selector:
    app: nginx-deployment
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

- Apply

$ kubectl apply -f clusterip-deployment.yaml

$ kubectl get svc -o wide

$ kubectl describe svc clusterip-service-deployment

vi nodeport-deployment.yaml

apiVersion: v1
kind: Service
metadata:
  name: nodeport-service-deployment
spec:
  type: NodePort
  selector:
    app: nginx-deployment
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30080

- Apply

$ kubectl apply -f nodeport-deployment.yaml

$ kubectl get svc -o wide

$ kubectl describe svc nodeport-service-deployment

vi loadbalancer-deployment.yaml

apiVersion: v1
kind: Service
metadata:
  name: loadbalancer-service-deployment
spec:
  type: LoadBalancer
  externalIPs:
  - 192.168.2.125
  selector:
    app: nginx-deployment
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

- Apply

$ kubectl apply -f loadbalancer-deployment.yaml

$ kubectl get svc -o wide

$ kubectl describe svc loadbalancer-service-deployment

Resource cleanup

$ kubectl get all

$ kubectl delete pod,svc --all

$ kubectl delete replicaset,svc --all

$ kubectl delete deployment,svc --all

Controlling Deployment rolling updates

$ kubectl set image deployment.apps/nginx-deployment nginx-deployment-container=halilinux/web-site:v1.0

$ kubectl get all

$ kubectl rollout history deployment nginx-deployment

# Show the details of revision 2

$ kubectl rollout history deployment nginx-deployment --revision=2
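
How quickly pods are replaced during the rolling update triggered by `kubectl set image` is governed by the Deployment's strategy field. The fragment below is a sketch of what could be added to deployment.yaml; the maxSurge and maxUnavailable values are illustrative assumptions, not settings used in these notes.

```yaml
# Hypothetical addition to the spec of deployment.yaml; values are illustrative.
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1         # at most 1 pod above the desired replica count
      maxUnavailable: 0   # never drop below the desired replica count
```

When the strategy is left out, as in deployment.yaml here, Kubernetes uses RollingUpdate with 25% for both values.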

# Roll back (restore the previous revision)

$ kubectl rollout undo deployment nginx-deployment

$ kubectl get all

$ kubectl rollout history deployment nginx-deployment

# Show the details of revision 3

$ kubectl rollout history deployment nginx-deployment --revision=3

# Restore revision 2

$ kubectl rollout undo deployment nginx-deployment --to-revision=2

Force-delete a pod

$ kubectl delete pods <pod> --grace-period=0 --force

Pod

multi-container

vi multipod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: multipod
spec:
  containers:
  - name: nginx-container # first container
    image: nginx
    ports:
    - containerPort: 80
  # A bare base image exits as soon as it starts, so a long sleep
  # command is run to keep the container alive.
  - name: centos-container # second container
    image: centos:7
    command:
    - sleep
    - "10000"

- Apply

# When a Pod has two containers, name the container you want to enter.

$ kubectl exec -it multipod --container nginx-container -- bash

# If no container is named, the first container is selected.

$ kubectl exec -it multipod -- bash

wp-multi-container

- Inside its containers, the pod name can be used like a domain name.

$ vi wp-multi-container.yaml

----------------------------------------

apiVersion: v1
kind: Pod
metadata:
  name: wordpress-pod
  labels:
    app: wordpress-pod
spec:
  containers:
  - name: mysql-container
    #image: mysql:5.7
    image: 192.168.1.143:5000/mysql:5.7
    env:
    - name: MYSQL_ROOT_HOST
      value: '%' # wpuser@%
    - name: MYSQL_ROOT_PASSWORD
      value: mode1752
    - name: MYSQL_DATABASE
      value: wordpress
    - name: MYSQL_USER
      value: wpuser
    - name: MYSQL_PASSWORD
      value: wppass
    ports:
    - containerPort: 3306
  - name: wordpress-container
    #image: wordpress
    image: 192.168.1.143:5000/wordpress:latest
    env:
    - name: WORDPRESS_DB_HOST
      value: wordpress-pod:3306
    - name: WORDPRESS_DB_USER
      value: wpuser
    - name: WORDPRESS_DB_PASSWORD
      value: wppass
    - name: WORDPRESS_DB_NAME
      value: wordpress
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: loadbalancer-service-deployment-wordpress
spec:
  type: LoadBalancer
  externalIPs:
  - 192.168.2.125
  selector:
    app: wordpress-pod
  ports:
  - protocol: TCP
  #- name: web-port
  #  protocol: TCP
    port: 80
    targetPort: 80
  # Adding an entry like this would also allow DB access from outside
  #- name: db-port
  #  protocol: TCP
  #  port: 3306
# Try a ClusterIP Service as well: setting externalIPs to the IP of one of the nodes makes it reachable
---
apiVersion: v1
kind: Service
metadata:
  name: clusterip-service-deployment-wordpress
spec:
  type: ClusterIP
  externalIPs:
  - 192.168.2.126
  selector:
    app: wordpress-pod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    name: web-port
  - protocol: TCP
    port: 3306
    targetPort: 3306
    name: db-port

----------------------------------------

Building a Docker registry

- Change the Worker1,2 specs
- RAM 4GB, CPU 2 => RAM 2GB, CPU 1
- Additional VM : RAM 2GB, CPU 1

$ docker run -d -p 5000:5000 --restart=always --name private-docker-registry registry # registry server

# Run on every Kubernetes node

# Client setup

$ vi /etc/docker/daemon.json # client

{ "insecure-registries": ["<registry host IP>:5000"] }

$ systemctl restart docker

- Push an image

$ docker tag nginx:latest 192.168.1.143:5000/nginx

$ docker push 192.168.1.143:5000/nginx

- Query the repositories

$ curl -X GET http://192.168.1.143:5000/v2/_catalog

$ curl -X GET http://192.168.1.143:5000/v2/nginx/tags/list

$ curl -X GET http://192.168.1.143:5000/v2/web-site/tags/list

Volume

- pv/pvc

vi pv-pvc-pod.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 10Mi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: "/mnt/data"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim
spec:
  storageClassName: manual
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Mi
  selector:
    matchLabels:
      type: local
---
apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod
  labels:
    app: task-pv-pod
spec:
  volumes:
  - name: task-pv-storage
    persistentVolumeClaim:
      claimName: task-pv-claim
  containers:
  - name: task-pv-container
    #image: nginx
    image: 192.168.1.143:5000/nginx:latest
    ports:
    - containerPort: 80
      name: "http-server"
    volumeMounts:
    - mountPath: "/usr/share/nginx/html"
      name: task-pv-storage

- Verify

# Run on the master node

* kubectl get pod -o wide # find out which worker node holds the "task-pv-volume" volume

* rm -rf /mnt/data

* kubectl apply -f .

* echo "HELLO" > /mnt/data/index.html # run on the node where the volume was created

# Run on the master node

* kubectl expose pod task-pv-pod --name nodeport --type=NodePort --port 80

* kubectl get svc

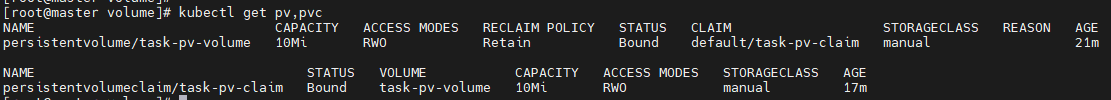

* kubectl get pv,pvc

# Deleting the "task-pv-pod" pod from the master node leaves the volume in place.

* kubectl delete pod task-pv-pod

# Remove the "pvc"

* kubectl delete pvc task-pv-claim

* Retain: to reuse the same storage asset, create a new PersistentVolume with the same storage asset definition.

RECLAIM POLICY - Retain (the data is kept even after the PVC is deleted)

ACCESS MODES - RWO (ReadWriteOnce)

CLAIM - shows which PVC the volume is bound to

RWO: the volume can be mounted read-write by a single node. ReadWriteOnce still allows several pods to access the volume when they run on the same node.
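
For reference, the columns described above map to two fields of the PersistentVolume spec. The fragment below is a sketch rather than a complete manifest; the commented-out modes are listed only for comparison and are not used by task-pv-volume.

```yaml
# Fragment of a PersistentVolume spec (sketch, not a full manifest).
spec:
  persistentVolumeReclaimPolicy: Retain   # keep the data after the PVC is deleted
  accessModes:
  - ReadWriteOnce      # RWO: read-write by a single node
  # - ReadOnlyMany     # ROX: read-only by many nodes
  # - ReadWriteMany    # RWX: read-write by many nodes (used by the NFS volume in the NFS section)
```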

NFS

- NFS server (on the Docker registry host)

$ yum install -y nfs-utils.x86_64

$ mkdir /nfs_shared

$ chmod 777 /nfs_shared/

$ echo '/nfs_shared 192.168.0.0/21(rw,sync,no_root_squash)' >> /etc/exports

$ systemctl enable --now nfs

$ systemctl stop firewalld

- On the master node and the worker nodes

$ yum install -y nfs-utils.x86_64

vi nfs-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv
spec:
  capacity:
    # Mi - mebibytes (binary notation, 2^20 bytes)
    storage: 100Mi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    # IP of the NFS server (the Docker registry host)
    server: 192.168.1.143
    path: /nfs_shared
  # hostPath - would use a path on the host instead.

- Apply

$ kubectl apply -f nfs-pv.yaml

$ kubectl get pv

vi nfs-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
spec:
  # Access mode
  accessModes:
  - ReadWriteMany
  resources:
    # Size of the storage being requested (10 MiB out of the 100 MiB volume)
    requests:
      storage: 10Mi

- Apply

$ kubectl apply -f nfs-pvc.yaml

$ kubectl get pvc

$ kubectl get pv

$ kubectl get pv,pvc

vi nfs-pvc-deploy.yaml

- Bind the 'pv' and 'pvc' and use them from a 'deployment'

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-pvc-deploy
spec:
  replicas: 4
  selector:
    matchLabels:
      # Must be identical to the label in the template section.
      app: nfs-pvc-deploy
  template:
    metadata:
      # These "labels" must match the selector's "matchLabels";
      # matching pods are built from the "containers" defined in the template "spec" below.
      labels:
        app: nfs-pvc-deploy
    spec:
      containers:
      - name: nginx
        image: 192.168.1.143:5000/nginx:latest
        volumeMounts:
        - name: nfs-vol
          mountPath: /usr/share/nginx/html
      volumes:
      - name: nfs-vol
        persistentVolumeClaim:
          claimName: nfs-pvc

- Lab

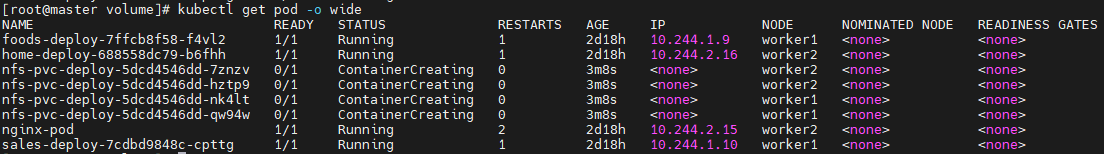

$ kubectl apply -f nfs-pvc-deploy.yaml

$ kubectl get pod

- ❗ Workers 1 and 2 cannot mount NFS on their own, so nfs-utils must be installed on both. ❗

ConfigMap

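- A ConfigMap supplies the configuration a container needs separately from the container itself.
- It is useful when development and production services need different settings,
- that is, when the same container must run with different configurations.
- Separating environment-specific configuration from the container image makes the application easy to port.
- A ConfigMap is an API object that stores non-confidential data as key-value pairs.
- Pods can consume a ConfigMap as environment variables, command-line arguments, or configuration files in a volume.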
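
The labs in this section only use the environment-variable route. As a sketch of the volume route mentioned in the last bullet, the manifest below mounts a ConfigMap as files. The pod name, container name, volume name, and mount path are hypothetical; config-dev is the ConfigMap name used in Lab 1 of this section.

```yaml
# Sketch: expose a ConfigMap as files inside a container.
# configmap-volume-pod, app, config-vol and /etc/config are made-up names.
apiVersion: v1
kind: Pod
metadata:
  name: configmap-volume-pod
spec:
  containers:
  - name: app
    image: nginx
    volumeMounts:
    - name: config-vol
      mountPath: /etc/config   # each key becomes a file, e.g. /etc/config/DB_URL
      readOnly: true
  volumes:
  - name: config-vol
    configMap:
      name: config-dev         # ConfigMap to project into the volume
```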

Workspace setup

# master node

$ cd ~

$ mkdir configmap && cd $_

ConfigMap Lab 1

vi configmap-dev.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: config-dev
  namespace: default
data:
  DB_URL: localhost
  DB_USER: myuser
  DB_PASS: mypass
  DEBUG_INFO: debug

- Apply

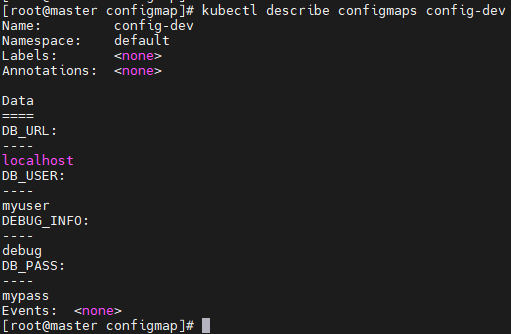

$ kubectl apply -f configmap-dev.yaml

$ kubectl describe configmaps config-dev

- Result

Under "Data", each variable name ("DB_URL") is listed with its value ("localhost").

vi deployment-config01.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: configapp
  labels: # label of the Deployment itself
    app: configapp
spec:
  replicas: 1
  selector:
    matchLabels:
      app: configapp # the label defined in the template
  template:
    metadata:
      labels: # the label referenced by 'matchLabels'
        app: configapp
    spec:
      containers:
      - name: testapp
        image: nginx
        ports:
        - containerPort: 80 # nginx listens on 80; matches targetPort below
        env:
        # Create the "DEBUG_LEVEL" variable from the "DEBUG_INFO" key.
        - name: DEBUG_LEVEL
          valueFrom:
            # The value becomes an environment variable inside the container.
            configMapKeyRef: # configMapKeyRef - read the value from a ConfigMap
              name: config-dev # use the ConfigMap named 'config-dev'
              key: DEBUG_INFO # take the value stored under 'DEBUG_INFO'
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: configapp
  name: configapp-svc
  namespace: default
spec:
  type: NodePort
  ports:
  - nodePort: 30800
    port: 8080
    protocol: TCP
    # When "port" differs from the port the container listens on, "targetPort" must be set.
    targetPort: 80
  selector:
    app: configapp

- Apply

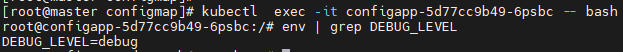

$ kubectl apply -f deployment-config01.yaml

$ kubectl exec -it configapp-5d77cc9b49-6psbc -- bash

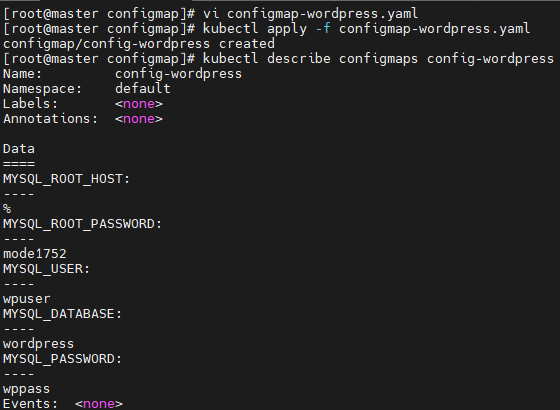

ConfigMap Lab 2 - WP

vi configmap-wordpress.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: config-wordpress
  namespace: default
data:
  MYSQL_ROOT_HOST: '%'
  MYSQL_ROOT_PASSWORD: mode1752
  MYSQL_DATABASE: wordpress
  MYSQL_USER: wpuser
  MYSQL_PASSWORD: wppass

- Apply

$ kubectl apply -f configmap-wordpress.yaml

$ kubectl describe configmaps config-wordpress

- Result

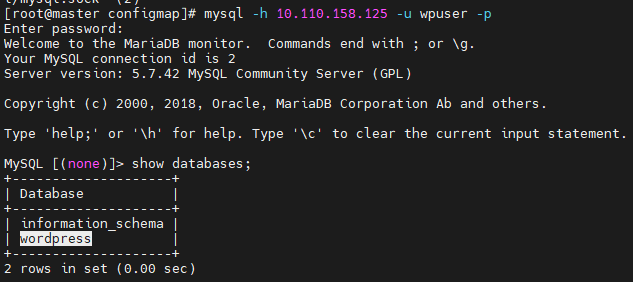

vi mysql-pod-svc.yaml

apiVersion: v1
kind: Pod
metadata:
  name: mysql-pod
  labels:
    app: mysql-pod
spec:
  containers:
  - name: mysql-container
    image: mysql:5.7
    # "configMapRef" - import every key of 'config-wordpress'
    # as environment variables in one go.
    envFrom:
    - configMapRef:
        name: config-wordpress
    ports:
    - containerPort: 3306
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-svc
spec:
  type: ClusterIP
  selector:
    app: mysql-pod
  ports:
  - protocol: TCP
    port: 3306
    targetPort: 3306

- Apply

$ kubectl apply -f mysql-pod-svc.yaml

$ kubectl get pod

- Result

vi wordpress-pod-svc.yaml

apiVersion: v1
kind: Pod
metadata:
  name: wordpress-pod
  labels:
    app: wordpress-pod
spec:
  containers:
  - name: wordpress-container
    image: wordpress
    # Environment variable definitions
    env:
    # "name" - the environment variable name
    - name: WORDPRESS_DB_HOST
      value: mysql-svc:3306
    - name: WORDPRESS_DB_USER
      # Take 'MYSQL_USER' from the "config-wordpress" ConfigMap
      valueFrom:
        configMapKeyRef:
          name: config-wordpress
          key: MYSQL_USER
    - name: WORDPRESS_DB_PASSWORD
      valueFrom:
        configMapKeyRef:
          name: config-wordpress
          key: MYSQL_PASSWORD
    - name: WORDPRESS_DB_NAME
      valueFrom:
        configMapKeyRef:
          name: config-wordpress
          key: MYSQL_DATABASE
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: wordpress-svc
spec:
  type: LoadBalancer
  externalIPs:
  - 192.168.56.103
  selector:
    app: wordpress-pod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

vi mysql-deploy-svc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-deploy
  labels:
    app: mysql-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql-deploy
  template:
    metadata:
      labels:
        app: mysql-deploy
    spec:
      containers:
      - name: mysql-container
        image: mysql:5.7
        envFrom:
        - configMapRef:
            name: config-wordpress
        ports:
        - containerPort: 3306
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-svc
spec:
  # type: ClusterIP # default - ClusterIP
  selector:
    app: mysql-deploy
  ports:
  - protocol: TCP
    port: 3306
    targetPort: 3306

vi wordpress-deploy-svc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress-deploy
  labels:
    app: wordpress-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wordpress-deploy
  template:
    metadata:
      labels:
        app: wordpress-deploy
    spec:
      containers:
      - name: wordpress-container
        image: wordpress
        env:
        - name: WORDPRESS_DB_HOST
          value: mysql-svc:3306
        - name: WORDPRESS_DB_USER
          valueFrom:
            configMapKeyRef:
              name: config-wordpress
              key: MYSQL_USER
        - name: WORDPRESS_DB_PASSWORD
          valueFrom:
            configMapKeyRef:
              name: config-wordpress
              key: MYSQL_PASSWORD
        - name: WORDPRESS_DB_NAME
          valueFrom:
            configMapKeyRef:
              name: config-wordpress
              key: MYSQL_DATABASE
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: wordpress-svc
spec:
  type: LoadBalancer
  # externalIPs:
  # - 192.168.1.102
  selector:
    app: wordpress-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

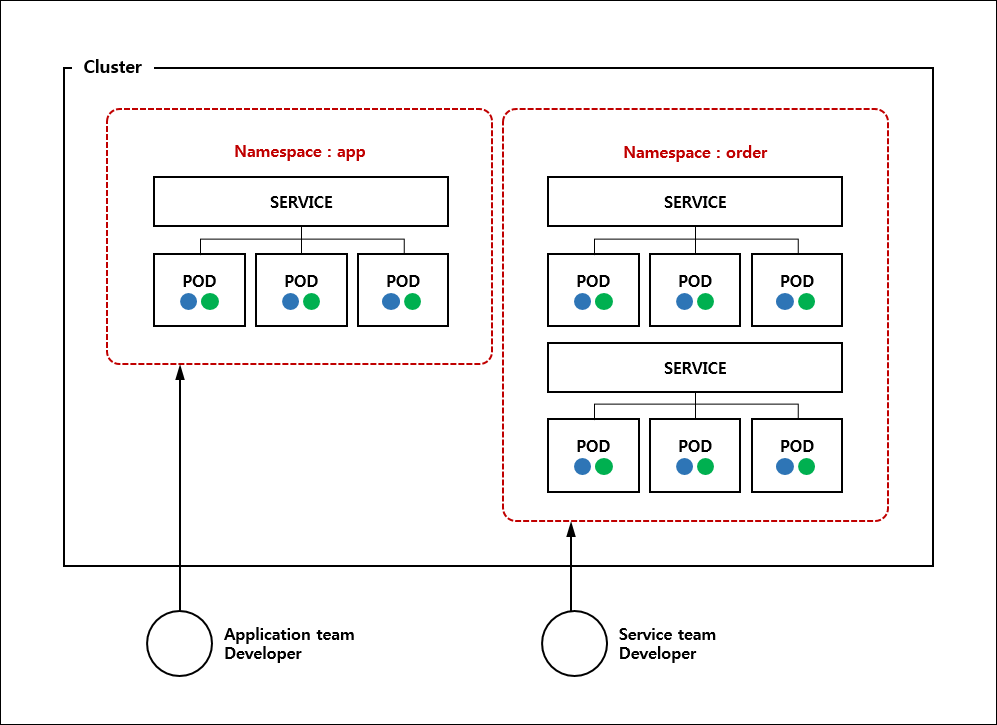

Namespace management

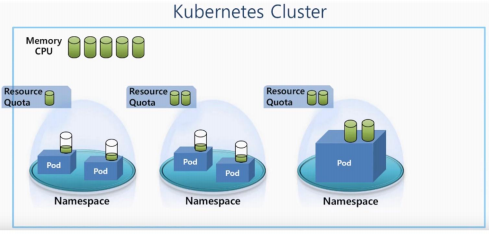

- Splits one Kubernetes cluster into several logical units
- Used to separate the apps that run in a cluster by purpose
- A separate quota can be set per namespace to cap the usage of a specific namespace
- WEBNORI - Namespace

Lab

# List the namespaces

$ kubectl get namespaces

# Check the default namespace of the context

$ kubectl config get-contexts kubernetes-admin@kubernetes

# Set the default namespace

$ kubectl config set-context kubernetes-admin@kubernetes --namespace=kube-system

# The pods in kube-system are now listed.

$ kubectl get pods

$ kubectl config get-contexts kubernetes-admin@kubernetes

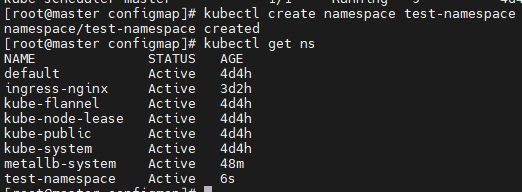

$ kubectl config set-context kubernetes-admin@kubernetes --namespace=default

- "test-namespace"

# Create test-namespace

$ kubectl create namespace test-namespace

$ kubectl get namespace

$ kubectl config set-context kubernetes-admin@kubernetes --namespace=test-namespace

$ kubectl config set-context kubernetes-admin@kubernetes --namespace=default

ResourceQuota management

- Limits the resources available to each namespace (virtual Kubernetes cluster)
- Note: creating or changing a quota does not affect resources that already exist, even if they now exceed the limit.
- Two kinds
- Limit on the number of resources that can be created
- Limit on resource usage

Lab

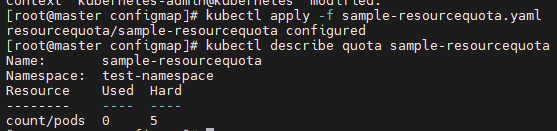

vi sample-resourcequota.yaml

apiVersion: v1

kind: ResourceQuota

metadata:

name: sample-resourcequota

namespace: test-namespace

spec:

# 엄격하게(hard), soft(조금 유연하게)

hard:

# 최대 "pod" 를 5개 까지만 만들도록 제한

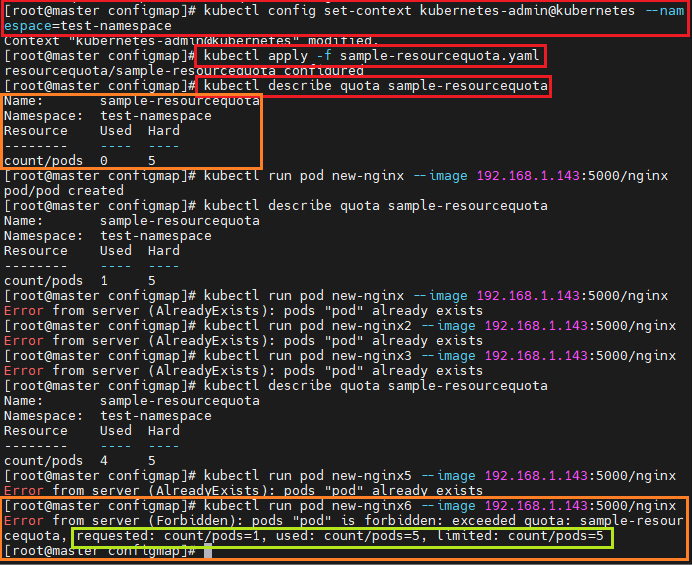

count/pods: 5- 적용

# 네임스페이스 설정을 "test-namespace" 로 지정해야한다.

$ kubectl describe resourcequotas sample-resourcequota

# "run" 명령은 'pod'를 만드는 명령어

$ kubectl run pod new-nginx --image=nginx

vi sample-resourcequota-usable.yaml

$ kubectl create ns jsb

$ kubectl get ns- 본 파일

apiVersion: v1
kind: ResourceQuota
metadata:
  name: sample-resourcequota-usable
  namespace: jsb # created with: kubectl create ns jsb
spec:
  hard:
    requests.cpu: 1 # 1000m / 4 = 250m (millicores)
    requests.memory: 1Gi # 1Gi / 4 = 256Mi
    # "requests.storage": total storage that may be requested through PersistentVolumeClaims
    #requests.storage: 5Gi
    #sample-storageclass.storageclass.storage.k8s.io/requests.storage: 5Gi
    # Ephemeral storage (caps the local storage given to pods)
    requests.ephemeral-storage: 5Gi
    requests.nvidia.com/gpu: 2
    limits.cpu: 4
    limits.memory: 2Gi
    limits.ephemeral-storage: 10Gi
    limits.nvidia.com/gpu: 4

- Apply

$ kubectl apply -f sample-resourcequota-usable.yaml

$ kubectl get -n jsb quota

$ kubectl -n jsb describe resourcequotas sample-resourcequota-usablevi sample-pod.yaml

- vi sample-resourcequota-usable.yaml 을 이용하하는 것

- ❗ 자원의 제한을 정의 했기 때문에

must specify limits.cpu,requests.memory- cpu, memory의 용량 정의 필수 ❗

apiVersion: v1

kind: Pod

metadata:

name: sample-pod

namespace: jsb

spec:

containers:

- name: nginx-container

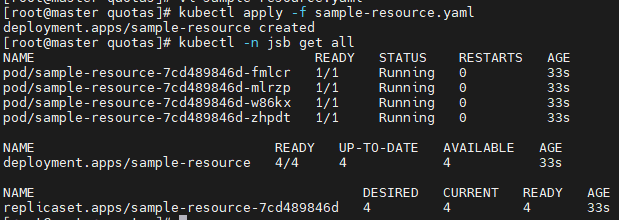

image: nginx:1.16vi sample-resource.yaml

- Runs under the quota from sample-resourcequota-usable.yaml
- Pod and container resource management
- If the node where a pod runs has enough free resources, a container may use more of a resource than its request. A container can never use more than its limit, however.
- CPU resource units
- Kubernetes does not allow CPU resources to be specified more precisely than 1m. For this reason it is useful to write CPU amounts smaller than 1.0 (1000m) in milliCPU form.
- For example, 5m is preferable to 0.005.
- Memory resource units

apiVersion: apps/v1

kind: Deployment

metadata:

name: sample-resource

namespace: jsb

spec:

replicas: 4

selector:

matchLabels:

app: sample-app

template:

metadata:

labels:

app: sample-app

spec:

containers:

- name: nginx-container

image: nginx:1.16

resources:

# 메모리: 최소 250, 최대 250

# cpu: 최소 0.25코어, 최대 1 코어

requests:

memory: "250Mi"

cpu: "250m"

limits:

memory: "500Mi"

cpu: "1000m"

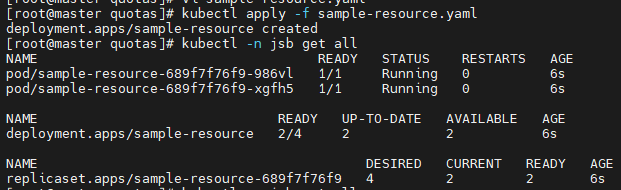

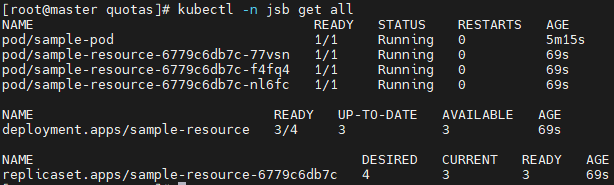

#cpu: "1500m"- 적용 및 결과

$ kubectl apply -f sample-resource.yaml

$ kubectl -n jsb get all

$ kubectl -n jsb describe quota sample-resourcequota-usable

# How to delete

$ kubectl delete -f sample-resource.yaml

- When the requests fit within the quota
- When the quota is exceeded: no further resources are created.

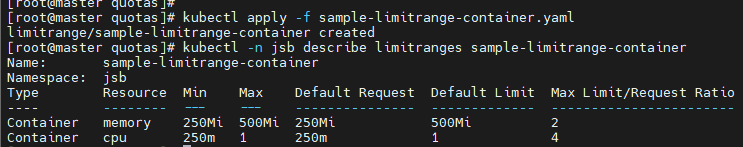

LimitRange

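- Limit Ranges
- When a container in a pod does not declare a CPU limit, a LimitRange lets the control plane assign a default CPU limit to that container.
- If the namespace's total limit is smaller than the sum of the pod/container limits, there can be contention for resources.
- In that case the container or pod is not created.

To look into: default, defaultRequest, max, min, maxLimitRequestRatio

Lab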

vi sample-limitrange-container.yaml

apiVersion: v1
kind: LimitRange
metadata:
  name: sample-limitrange-container
  namespace: jsb
spec:
  limits:
  - type: Container
    default: # limit
      memory: 500Mi
      cpu: 1000m
    defaultRequest: # request
      memory: 250Mi
      cpu: 250m
    max:
      memory: 500Mi
      cpu: 1000m
    min:
      memory: 250Mi
      cpu: 250m
    maxLimitRequestRatio: # largest allowed limit/request ratio
      memory: 2
      cpu: 4

- Apply

$ kubectl apply -f sample-limitrange-container.yaml

$ kubectl -n jsb describe limitranges sample-limitrange-container

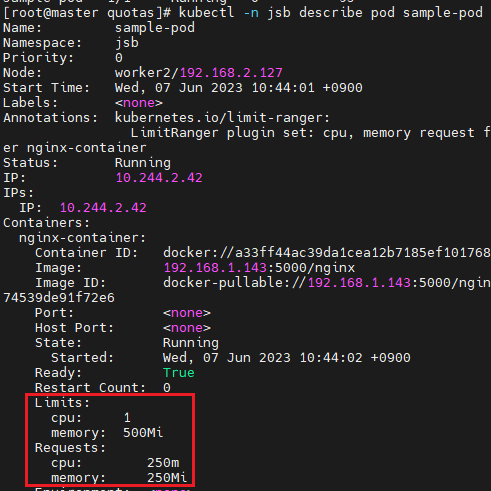

vi sample-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: sample-pod
spec:
  containers:
  - name: nginx-container
    image: nginx:1.16

- Apply

$ kubectl -n jsb apply -f sample-pod.yaml

$ kubectl -n jsb get pod

$ kubectl -n jsb describe pod sample-pod

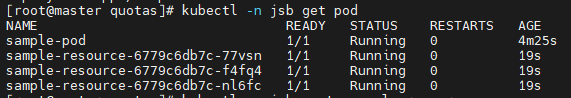
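
Since sample-pod.yaml declares no resources, the LimitRange fills them in from default and defaultRequest when the pod is admitted. Assuming sample-limitrange-container is in effect in the jsb namespace, the container spec reported by describe should be equivalent to the sketch below (derived from the LimitRange values, not copied from actual output):

```yaml
# Expected effective container spec of sample-pod (sketch).
spec:
  containers:
  - name: nginx-container
    image: nginx:1.16
    resources:
      requests:          # injected from defaultRequest
        memory: 250Mi
        cpu: 250m
      limits:            # injected from default
        memory: 500Mi
        cpu: 1000m
```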

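vi test-resource.yaml

- The quota cannot be exceeded.
- Because the pod created from sample-pod.yaml above already exists, not all 4 replicas are created: the namespace's ResourceQuota combined with the LimitRange defaults allows only 3 more. Each container receives the default request of 250m CPU, and requests.cpu: 1 leaves room for four pods in total.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-resource
  namespace: jsb
spec:
  replicas: 4
  selector:
    matchLabels:
      app: sample-app
  template:
    metadata:
      labels:
        app: sample-app
    spec:
      containers:
      - name: nginx-container
        image: nginx:1.16

- Apply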

$ kubectl apply -f test-resource.yaml

$ kubectl -n jsb get pod

$ kubectl -n jsb get all

Schedule

Create the workspace

$ mkdir schedule && cd $_

# Switch back if the namespace is not "default"

$ kubectl config get-contexts

$ kubectl config set-context kubernetes-admin@kubernetes --namespace default

Pod scheduling (automatic placement)

pod-schedule.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-schedule-metadata
  labels:
    app: pod-schedule-labels
spec:
  containers:
  - name: pod-schedule-containers
    image: nginx
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: pod-schedule-service
spec:
  type: NodePort
  selector:
    app: pod-schedule-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

- Apply

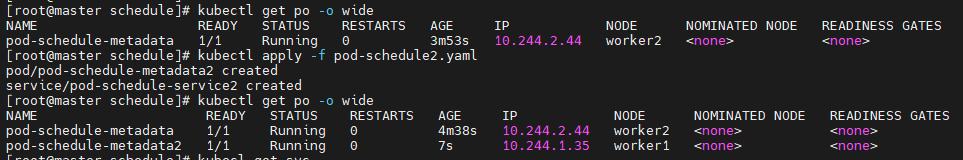

$ kubectl apply -f pod-schedule.yaml

$ kubectl get po -o wide

pod-schedule2.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-schedule-metadata2
  labels:
    app: pod-schedule-labels2
spec:
  containers:
  - name: pod-schedule-containers2
    image: 192.168.1.143:5000/nginx
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: pod-schedule-service2
spec:
  type: NodePort
  selector:
    app: pod-schedule-labels2
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

- Apply

$ kubectl apply -f pod-schedule2.yaml

$ kubectl get po -o wide

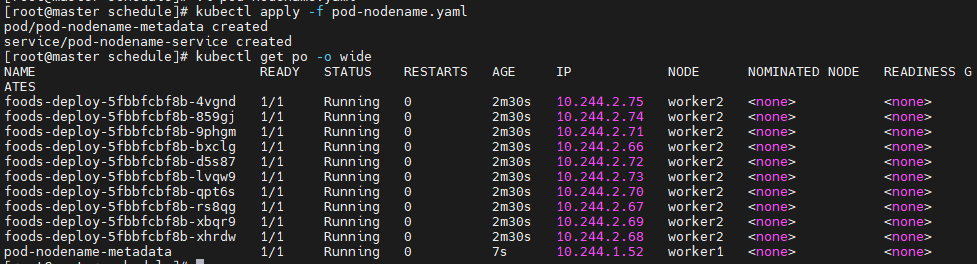

Pod nodeName (manual placement)

- Place a pod manually by setting nodeName

vi pod-nodename.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-nodename-metadata
  labels:
    app: pod-nodename-labels
spec:
  containers:
  - name: pod-nodename-containers
    image: nginx
    ports:
    - containerPort: 80
  # Pin the pod to "worker2"
  nodeName: worker2
---
apiVersion: v1
kind: Service
metadata:
  name: pod-nodename-service
spec:
  type: NodePort
  selector:
    app: pod-nodename-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

- Apply

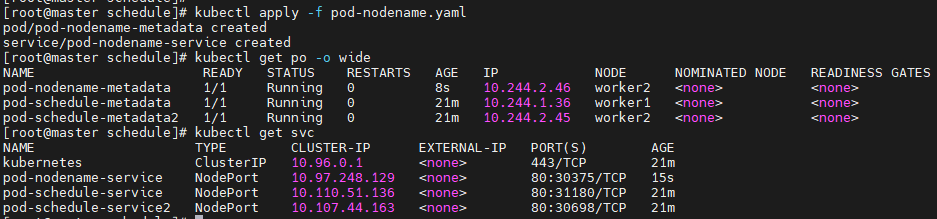

$ kubectl apply -f pod-nodename.yaml

$ kubectl get po -o wide

$ kubectl get svc

$ kubectl get po --show-labels

# List only the pods that carry a specific label

$ kubectl get po --selector=app=pod-nodename-labels -o wide --show-labels

Node selector (manual placement)

- Select a node by its label

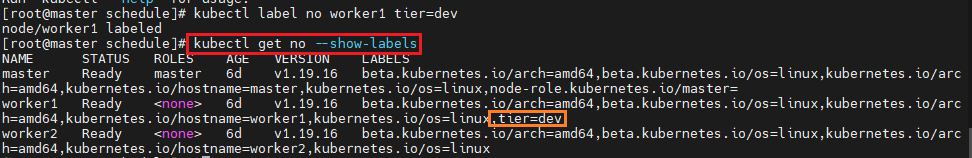

$ kubectl label nodes worker1 tier=dev

$ kubectl get nodes --show-labels

- Remove the label

$ kubectl label nodes worker1 tier-

vi pod-nodeselector.yaml

With nodeSelector.tier set, the pod is placed on "worker1".

apiVersion: v1
kind: Pod
metadata:
  name: pod-nodeselector-metadata
  labels:
    app: pod-nodeselector-labels
spec:
  containers:
  - name: pod-nodeselector-containers
    image: nginx
    ports:
    - containerPort: 80
  # The pod is placed on "worker1", the node labelled tier=dev.
  nodeSelector:
    tier: dev
---
apiVersion: v1
kind: Service
metadata:
  name: pod-nodeselector-service
spec:
  type: NodePort
  selector:
    app: pod-nodeselector-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

- Apply

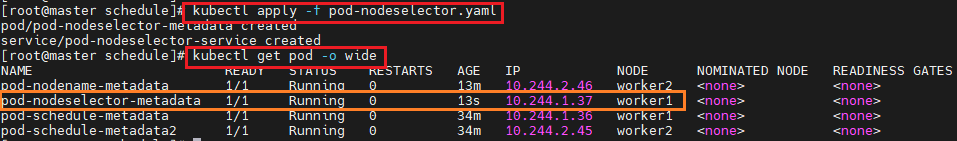

$ kubectl apply -f pod-nodeselector.yaml

$ kubectl get pod -o wide

# Remove the label

$ kubectl label nodes worker1 tier-

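Taints and tolerations

- Taints and Tolerations
- 4.7. Taints and Tolerations
- [k8s] Node scheduling - Taints and Tolerations

taint
- NoSchedule -> no pods will be scheduled onto "worker1".
- A taint can be set on any node.

Why set a taint: to reserve nodes for workloads such as AI/ML (GPU or other special nodes), that is, to set specific nodes apart within the same cluster.

tolerations
- By default, pods are not automatically scheduled onto a tainted node.
- To schedule pods onto a tainted node, set a toleration on the pod.

NoSchedule taints apply to automatic scheduling only: manual placement (nodeName) takes priority over automatic scheduling.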

실습

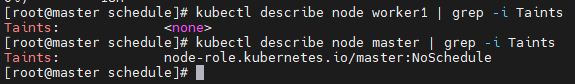

# "Taint" 여부 확인

# 마스터 노드는 기본적으로 "Taint" 가 있다.

$ kubectl describe node <NODE_NAME> | grep -i Taints

# Key 가 tier 이고 값이 dev와 gpu

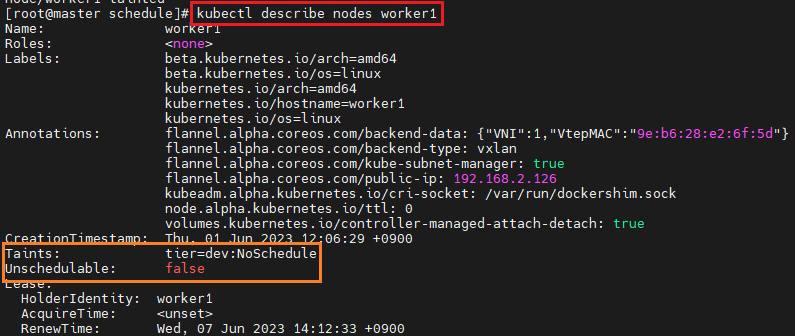

$ kubectl taint node worker1 tier=dev:NoSchedule

$ kubectl taint node worker2 tier=gpu:NoSchedule

# Show the taints

$ kubectl describe nodes worker1

# Every node is tainted, so the pod is not scheduled onto any node.

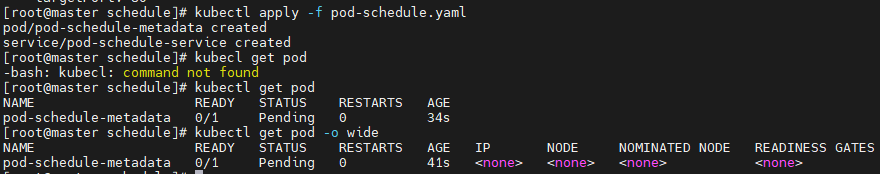

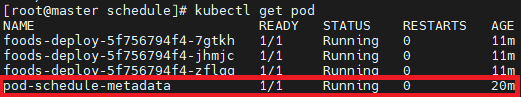

$ kubectl apply -f pod-schedule.yaml

$ kubectl get pod -o wide

# See the figure below (deployment.yaml tolerates the dev taint)

$ kubectl apply -f deployment.yaml

$ kubectl get po -o wide

# Remove the taints

$ kubectl taint node worker1 tier=dev:NoSchedule-

$ kubectl taint node worker2 tier=gpu:NoSchedule-

- Result of querying the taints
- Every node is tainted, so nothing is scheduled automatically.

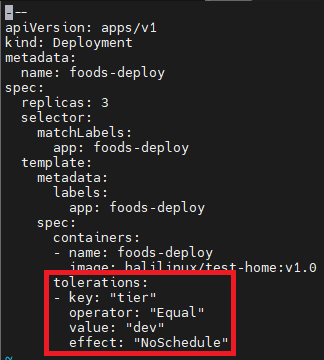

- Set tolerations in deployment.yaml
- Once the taints are removed, the pods that were Pending because no node could take them are created as well.
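
The notes mention adding tolerations to deployment.yaml but do not show the modified file. The manifest below is a sketch of how it might look, assuming the deployment.yaml from the Deployment section and the tier=dev:NoSchedule taint set on worker1 above.

```yaml
# Sketch: deployment.yaml with a toleration added (assumed form, not the original file).
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-deployment
  template:
    metadata:
      labels:
        app: nginx-deployment
    spec:
      containers:
      - name: nginx-deployment-container
        image: nginx
        ports:
        - containerPort: 80
      tolerations:            # allow scheduling onto nodes tainted tier=dev:NoSchedule
      - key: "tier"
        operator: "Equal"
        value: "dev"
        effect: "NoSchedule"
```

With both workers tainted as in the lab, these pods can only land on worker1, because the tier=gpu taint on worker2 is not tolerated.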

vi pod-taint.yaml

apiVersion: v1

kind: Pod

metadata:

name: pod-taint-metadata

labels:

app: pod-taint-labels

spec:

containers:

- name: pod-taint-containers

image: nginx

ports:

- containerPort: 80

# `tier=dev` 이면 파드를 생성하지 않는다.

tolerations:

- key: "tier"

operator: "Equal"

value: "dev"

effect: "NoSchedule"

---

apiVersion: v1

kind: Service

metadata:

name: pod-taint-service

spec:

type: NodePort

selector:

app: pod-taint-labels

ports:

- protocol: TCP

port: 80

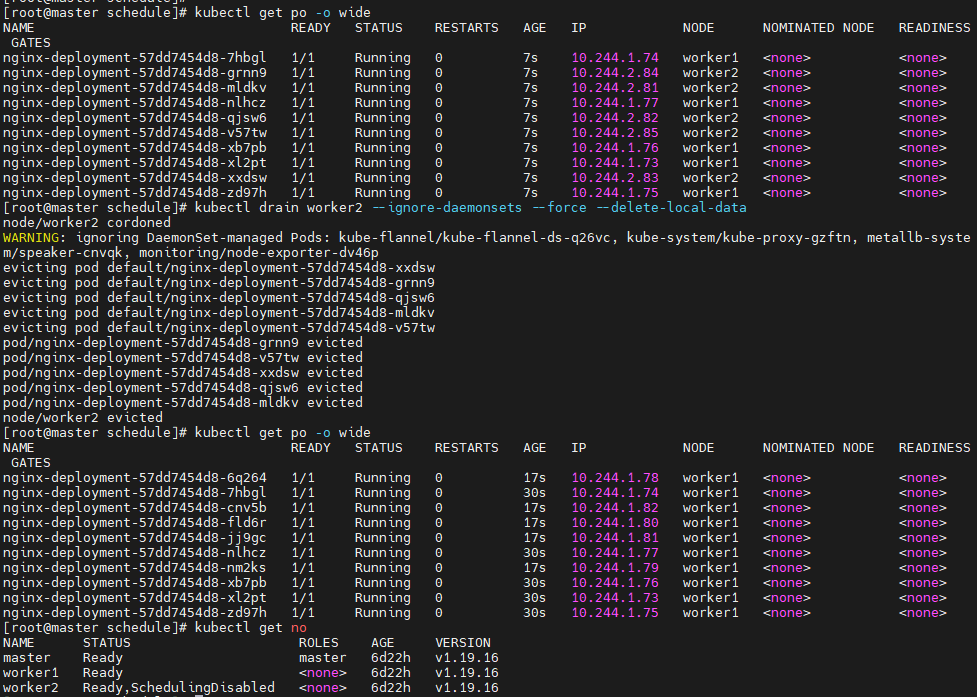

targetPort: 80커든과 드레인

- Manual Node administration

kubectl cordon $NODENAME

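- Cordon: stops additional pods from being automatically scheduled onto the node.
- Drain: moves the pods to other nodes so the node can be maintained.
- Useful when a k8s node is short on hardware resources and no more pods should be placed on it.

Applies to automatic scheduling only: manual placement takes priority over automatic scheduling.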

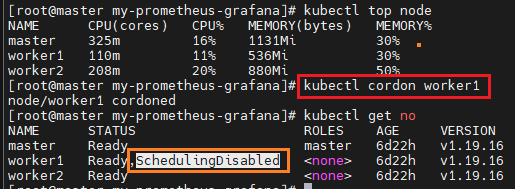

cordon, uncordon

- The cordon command stops additional pods from being scheduled onto the specified node.

# Show resource usage (requires a metrics agent such as metrics-server)

$ kubectl top node

$ kubectl cordon worker1

$ kubectl get no

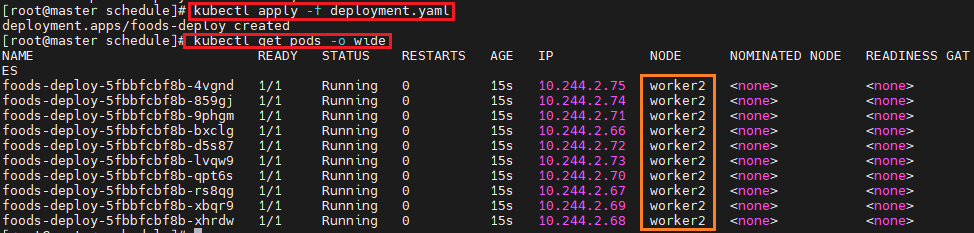

$ kubectl apply -f deployment_service.yaml

$ kubectl get po -o wide # pods are scheduled only on worker2

$ kubectl uncordon worker1

- cordon does not apply to pods that are placed manually (nodeName).
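
Under the hood, cordon marks the Node object as unschedulable. The excerpt below is a sketch of what `kubectl get node worker1 -o yaml` would contain after `kubectl cordon worker1`; all other fields are omitted.

```yaml
# Excerpt of the Node object after cordon (sketch, other fields omitted).
apiVersion: v1
kind: Node
metadata:
  name: worker1
spec:
  unschedulable: true   # set by cordon, cleared again by uncordon
```

This is why `kubectl get no` shows SchedulingDisabled for the node until it is uncordoned.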

drain, uncordon

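- The drain command moves every pod on the specified node to other nodes.
- If the node runs pods managed by a DaemonSet, --ignore-daemonsets must be passed to kubectl drain for the node to be emptied successfully.

# "--ignore-daemonsets" "--force"
# --delete-emptydir-data replaces the deprecated --delete-local-data (use the old flag on kubectl older than v1.20)

$ kubectl drain worker2 --ignore-daemonsets --force --delete-emptydir-data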

$ kubectl get po -o wide

$ kubectl get no

$ kubectl uncordon worker2

$ kubectl get no

- evicting: the pods are evicted (removed) from the node

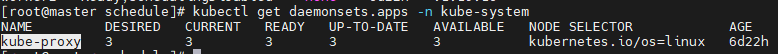

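What is a DaemonSet?

- What is a DaemonSet?

daemon: a program that runs in the background and performs its tasks without being directly controlled by the user
- A DaemonSet resembles a Deployment, but its pods are not removed by drain (hence --ignore-daemonsets).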
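
For comparison with the Deployment manifests above, a minimal DaemonSet could look like the sketch below. Every name in it is hypothetical and nginx only stands in for a real node agent. Note that there is no replicas field: one pod runs on each eligible node.

```yaml
# Minimal DaemonSet sketch; sample-daemonset and agent are made-up names.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: sample-daemonset
spec:
  selector:
    matchLabels:
      app: sample-daemonset
  template:
    metadata:
      labels:
        app: sample-daemonset
    spec:
      containers:
      - name: agent
        image: nginx   # placeholder image for a per-node agent
```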

Miscellaneous

- Relationship between port and targetPort

$ kubectl scale --current-replicas=4 --replicas=6 deployment/nginx-deployment

$ kubectl scale --replicas=6 deployment/nginx-deployment

- Look into Ingress
- Look into ConfigMap
- Look into Namespace
- Taints and Tolerations
- [k8s] Node scheduling - Taints and Tolerations