Artificial Neural Networks (ANNs)


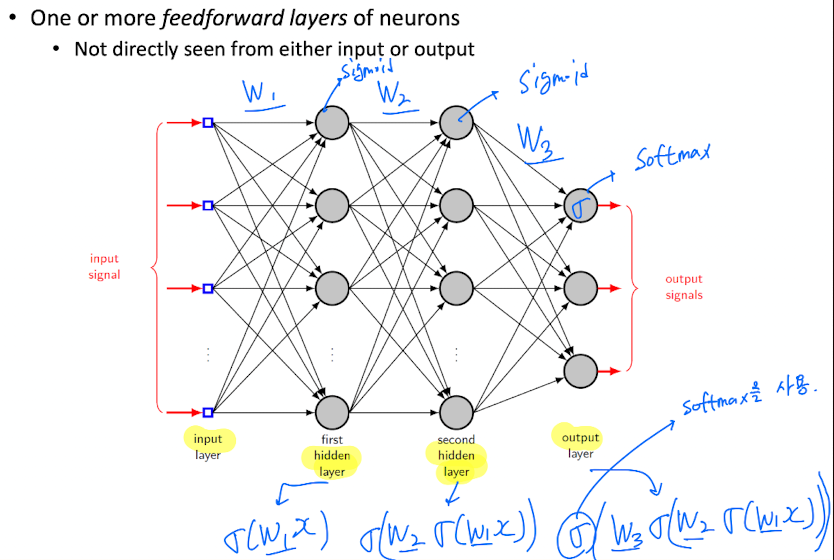

- Traditionally the most studied model: the feedforward neural network

- Comprises multiple layers of logistic regression models

- Central idea: the goal is to extract good features from the given input.

Representations

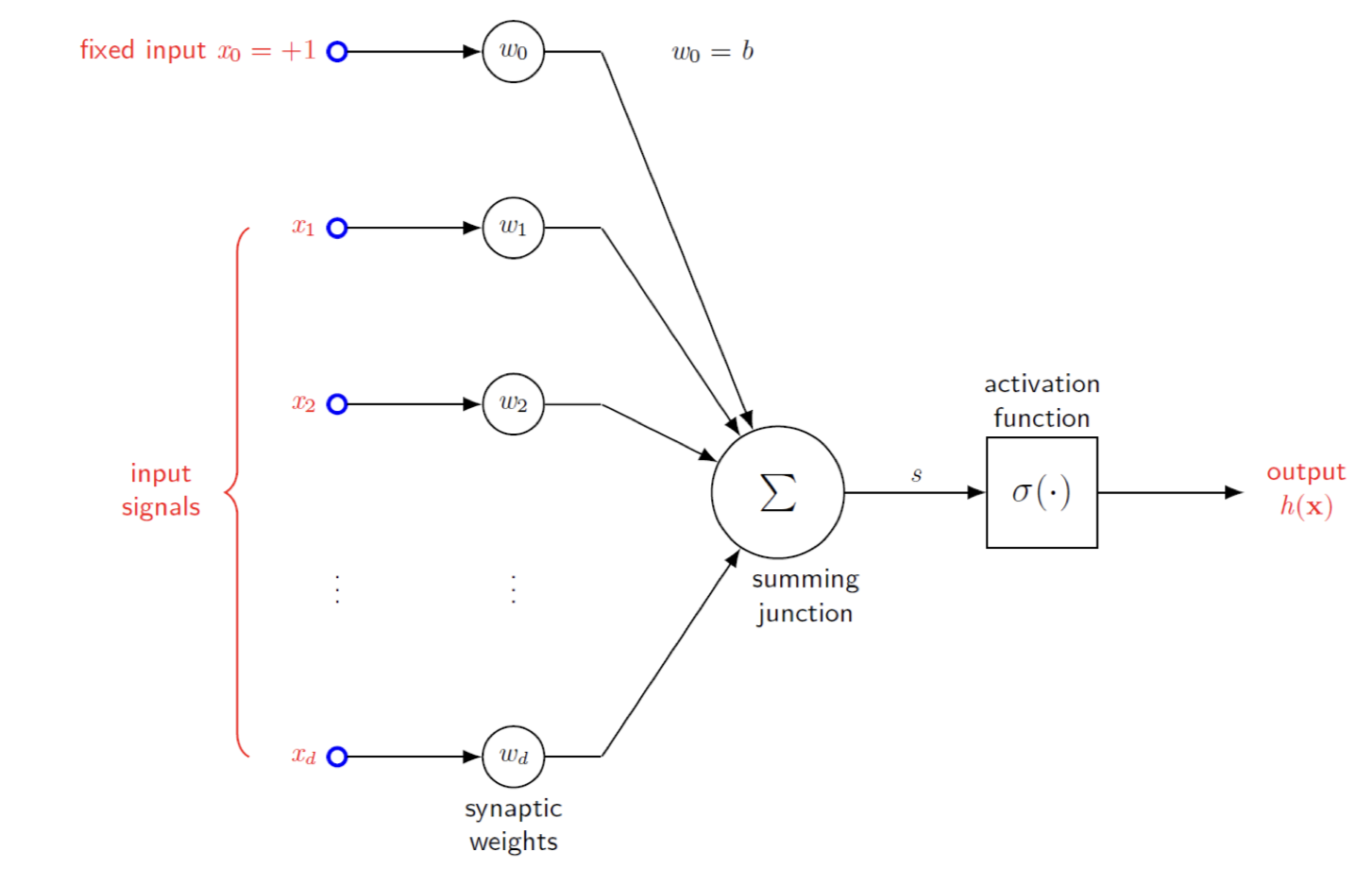

Models of a neuron

- Three basic elements

- Synapses (with weights)

- Adder (input vector ➡️ scalar)

- Activation function (possibly nonlinear)

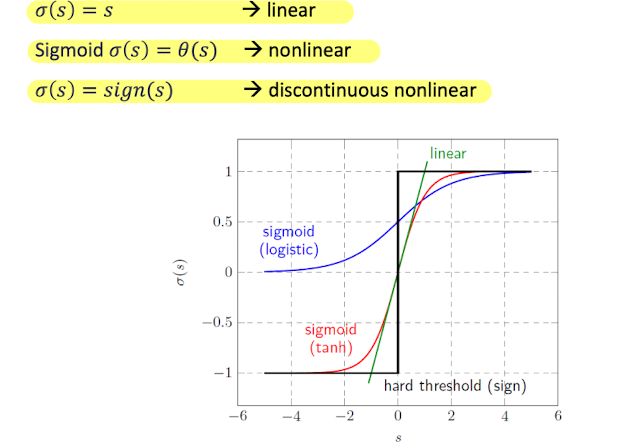
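The three elements above can be sketched in a few lines of Python. This is a minimal illustration rather than the exact model in the figure: the `bias` term, the function names, and the numbers in the usage line are assumptions added for the example.

```python
import math

def sigmoid(v):
    """Logistic sigmoid: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))

def neuron(inputs, weights, bias=0.0, activation=sigmoid):
    """One artificial neuron: synaptic weights -> adder -> activation."""
    # Adder: the weighted sum collapses the input vector into one scalar.
    # The bias term is an assumption; the notes list only weights.
    v = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Activation function: (possibly nonlinear) mapping of that scalar.
    return activation(v)

# Hypothetical inputs and weights, chosen so the weighted sum is 0.
y = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25])
print(y)  # weighted sum is 0.5 - 0.5 = 0.0, and sigmoid(0.0) = 0.5
```

Passing a different `activation` (for example the identity `lambda v: v`) turns the same unit into a purely linear one, which is why the activation function is listed as "possibly nonlinear".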

Activation Function

- The sigmoid (also called the logistic sigmoid or logistic function) is the one mainly used.

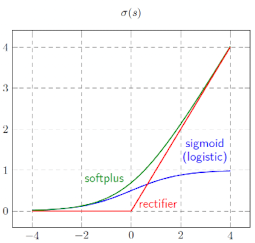
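For reference, the logistic sigmoid and its derivative can be written as follows. The symbols $v$ (the adder output) and $\sigma$ are notation assumed here; the figure may use different letters.

```latex
\sigma(v) = \frac{1}{1 + e^{-v}}, \qquad
\sigma'(v) = \sigma(v)\,\bigl(1 - \sigma(v)\bigr), \qquad
0 < \sigma'(v) \le \tfrac{1}{4}
```

The derivative peaks at $v = 0$ and shrinks toward zero as $|v|$ grows, which is the saturation behavior that matters when gradients are propagated through many layers.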

ReLU

- ReLU: Rectified Linear Unit

- Mimics the biological behavior in which a signal is passed on to the next neuron only once a threshold is reached.

- ReLU advantages:

- Allows back-propagation of activations and gradients: only a small number of units have non-zero values

- ReLU preserves information about relative intensities as information travels through multiple layers of feature detectors.

- Better for handling the vanishing gradient problem

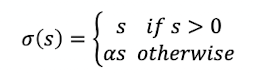

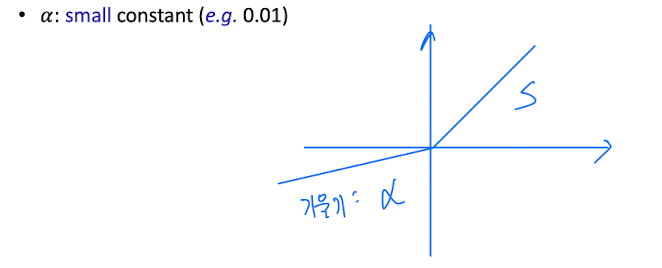
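A rough way to see the vanishing-gradient point is to compare the local derivatives of ReLU and the sigmoid. The sketch below multiplies the activation derivative across a hypothetical chain of 10 layers, ignoring the weight factors that a real network would also contribute; the depth and the pre-activation value are made up for illustration.

```python
import math

def relu(v):
    """Rectified Linear Unit: passes positive values, zeroes the rest."""
    return max(0.0, v)

def relu_grad(v):
    # Derivative is 1 for v > 0 and 0 for v < 0.
    # Using 0 at exactly v == 0 is a convention assumed here.
    return 1.0 if v > 0 else 0.0

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def sigmoid_grad(v):
    s = sigmoid(v)
    return s * (1.0 - s)  # never larger than 0.25

# Hypothetical setting: 10 layers, each with pre-activation v = 2.0.
depth, v = 10, 2.0
relu_chain = relu_grad(v) ** depth        # stays exactly 1.0
sigmoid_chain = sigmoid_grad(v) ** depth  # shrinks below 1e-9

print(relu_chain, sigmoid_chain)
```

For active units the ReLU factor is exactly 1, so it does not shrink the gradient, whereas each sigmoid factor is at most 0.25 and the product decays quickly with depth.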

Leaky ReLU

- ReLU units can be fragile during training and can "die"

- Leaky ReLU: an attempt to fix the dying ReLU problem

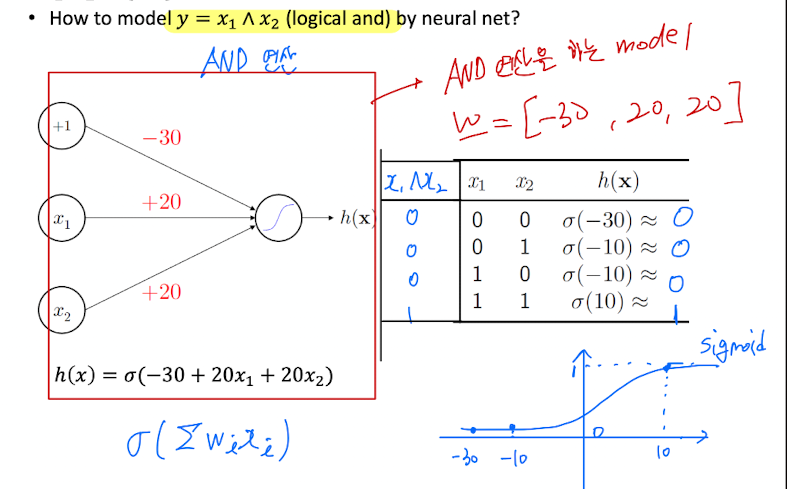
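A minimal sketch of the idea: instead of outputting exactly zero for negative inputs, a leaky unit keeps a small slope there, so its gradient never vanishes completely. The slope `alpha=0.01` is a commonly used value assumed here, not one taken from the figure.

```python
def leaky_relu(v, alpha=0.01):
    """Leaky ReLU: identity for positive v, small slope alpha otherwise."""
    return v if v > 0 else alpha * v

def leaky_relu_grad(v, alpha=0.01):
    # Unlike plain ReLU, the gradient on the negative side is alpha,
    # not 0, so a unit stuck at negative inputs can still be updated.
    return 1.0 if v > 0 else alpha

for v in (-2.0, 0.5):
    print(v, leaky_relu(v), leaky_relu_grad(v))
```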

Example 1 : Logical AND

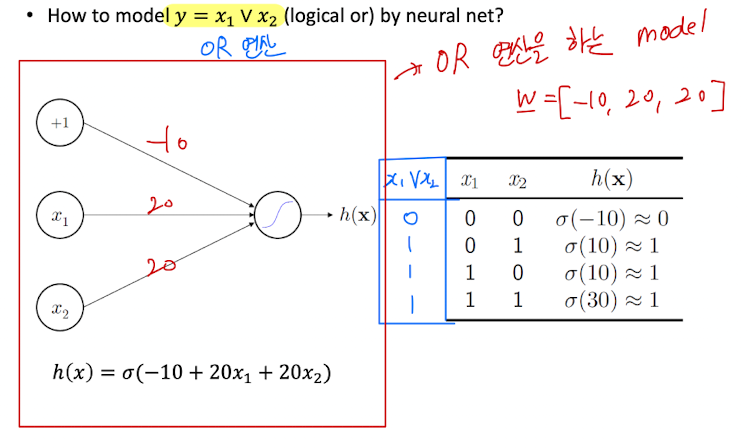

Example 2 : Logical OR

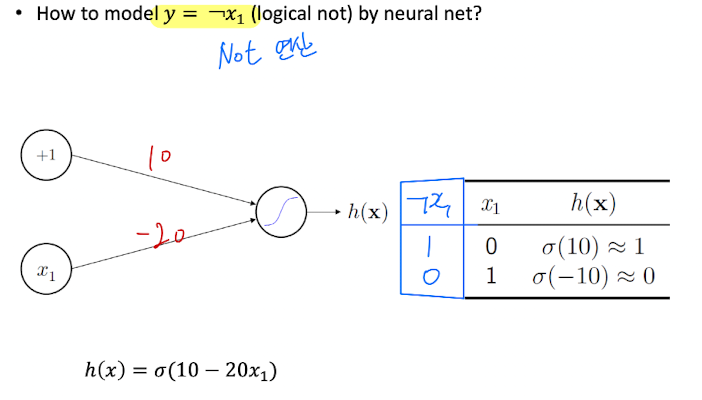

Example 3 : Logical NOT
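Examples 1 to 3 can each be realized by a single sigmoid neuron. The weights below are a common textbook choice and are an assumption; they may differ from the values in the figures. The helper name `unit` is also hypothetical.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def unit(bias, weights, inputs):
    """Single sigmoid neuron: bias plus weighted sum, then activation."""
    return sigmoid(bias + sum(w * x for w, x in zip(weights, inputs)))

# Assumed weights: large magnitudes push the sigmoid close to 0 or 1.
def logical_and(x1, x2):
    return unit(-30.0, [20.0, 20.0], [x1, x2])  # fires only if both are 1

def logical_or(x1, x2):
    return unit(-10.0, [20.0, 20.0], [x1, x2])  # fires if at least one is 1

def logical_not(x1):
    return unit(10.0, [-20.0], [x1])            # fires only if the input is 0

for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, round(logical_and(x1, x2)), round(logical_or(x1, x2)))
print(round(logical_not(0)), round(logical_not(1)))
```

All three gates are linearly separable, which is why one neuron is enough for each of them.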

Network Architectures

Single-layer feedforward network

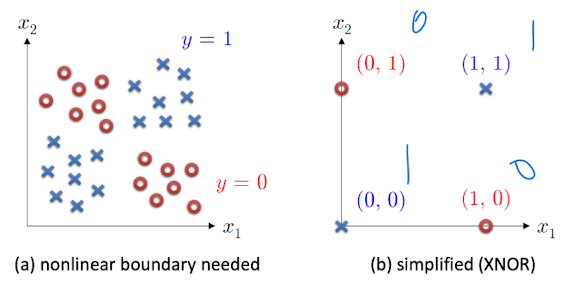

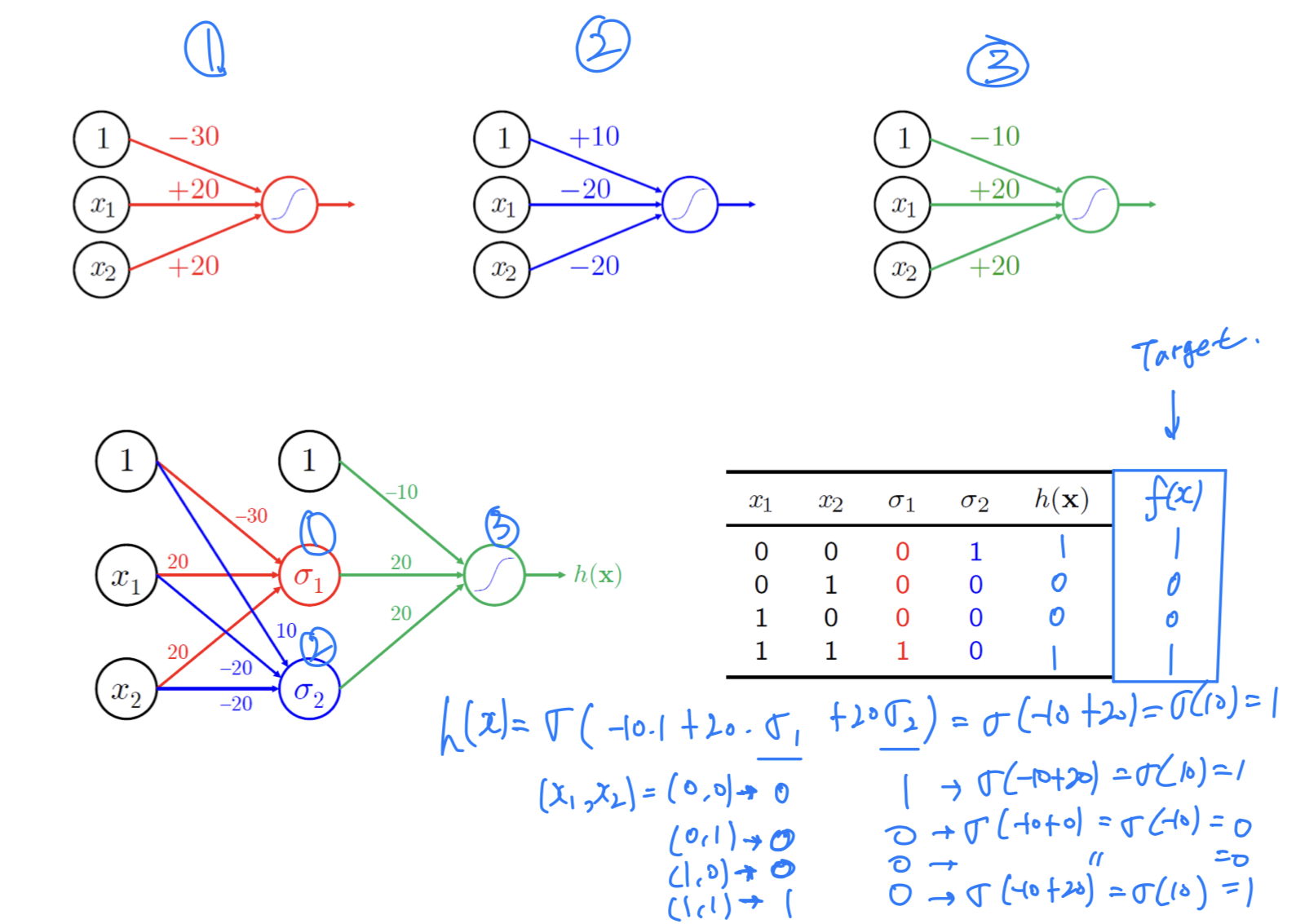

Example 4 : Logical XNOR

- XNOR is a nonlinear classification problem: a single neuron cannot separate its two classes.

- However, nonlinear classification can be modeled by combining multiple neurons.

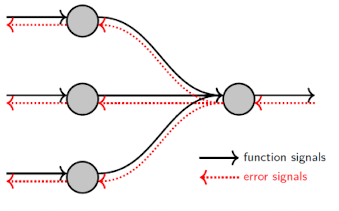
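One way to build XNOR from several neurons is to use two hidden units and one output unit. The decomposition and weights below are a common textbook construction assumed for illustration; the figure may use a different one.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def unit(bias, weights, inputs):
    """Single sigmoid neuron (hypothetical helper)."""
    return sigmoid(bias + sum(w * x for w, x in zip(weights, inputs)))

def xnor(x1, x2):
    # Hidden layer: two feature detectors on the raw inputs.
    a1 = unit(-30.0, [20.0, 20.0], [x1, x2])   # x1 AND x2
    a2 = unit(10.0, [-20.0, -20.0], [x1, x2])  # (NOT x1) AND (NOT x2)
    # Output layer: OR of the two hidden features.
    return unit(-10.0, [20.0, 20.0], [a1, a2])

for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, round(xnor(x1, x2)))
```

The hidden units map the four input points into a new space where the two classes become linearly separable, so the output neuron can finish the job with a simple OR.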

Types of signals in neural networks

- Each hidden/output neuron performs two types of computations

- Function signal

- Propagates forward through the network

- The weights are held fixed; only inference is performed.

- Error signal (back-propagation)

- Propagates backward through the network

- <-

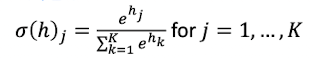
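The two kinds of signals can be sketched on a tiny network with one input, one hidden sigmoid neuron, and one sigmoid output. The squared-error loss, the absence of biases, and all names and numbers below are assumptions made for the example, not details from the notes.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def forward(x, w1, w2):
    """Function signals: flow input -> hidden -> output; weights are only read."""
    h = sigmoid(w1 * x)  # hidden neuron output
    y = sigmoid(w2 * h)  # network output
    return h, y

def loss(x, target, w1, w2):
    """Assumed loss: E = 0.5 * (y - target)^2."""
    _, y = forward(x, w1, w2)
    return 0.5 * (y - target) ** 2

def backward(x, target, w1, w2):
    """Error signals: flow output -> hidden, giving dE/dw1 and dE/dw2."""
    h, y = forward(x, w1, w2)
    delta_out = (y - target) * y * (1.0 - y)    # error at the output neuron
    delta_hid = delta_out * w2 * h * (1.0 - h)  # error passed back to hidden
    return delta_hid * x, delta_out * h         # (grad_w1, grad_w2)

# Hypothetical values for one training example and one update step.
x, target, w1, w2, lr = 1.0, 1.0, 0.5, -0.3, 0.5
g1, g2 = backward(x, target, w1, w2)
new_w1, new_w2 = w1 - lr * g1, w2 - lr * g2
print(loss(x, target, w1, w2), loss(x, target, new_w1, new_w2))
```

In the usage lines, the forward pass leaves the weights untouched, while the backward pass produces the gradients used in a single gradient-descent step, after which the loss on this example is lower.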

Softmax Function

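- Generalization of the logistic function: used as the final layer of a network for multi-class classification

- Definition: given a K-dimensional vector $\mathbf{z} = (z_1, \dots, z_K)$, $\mathrm{softmax}(\mathbf{z})_i = \dfrac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}$ for $i = 1, \dots, K$

Numerical expression example

- An example that classifies input images of the handwritten digits 0 to 4.

- The output-layer values are normalized while their relative order is preserved.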

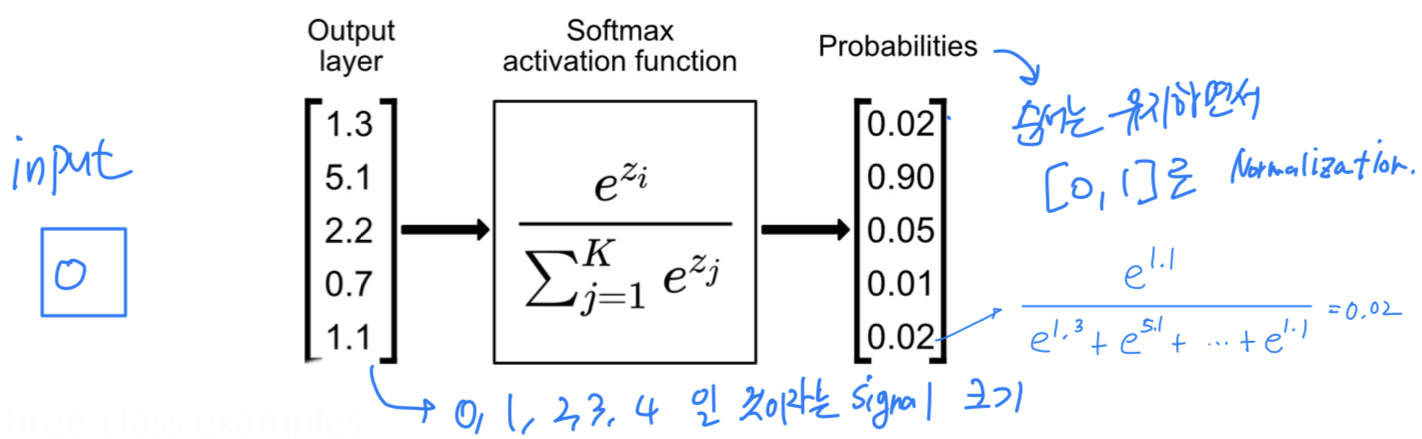

Three-class example

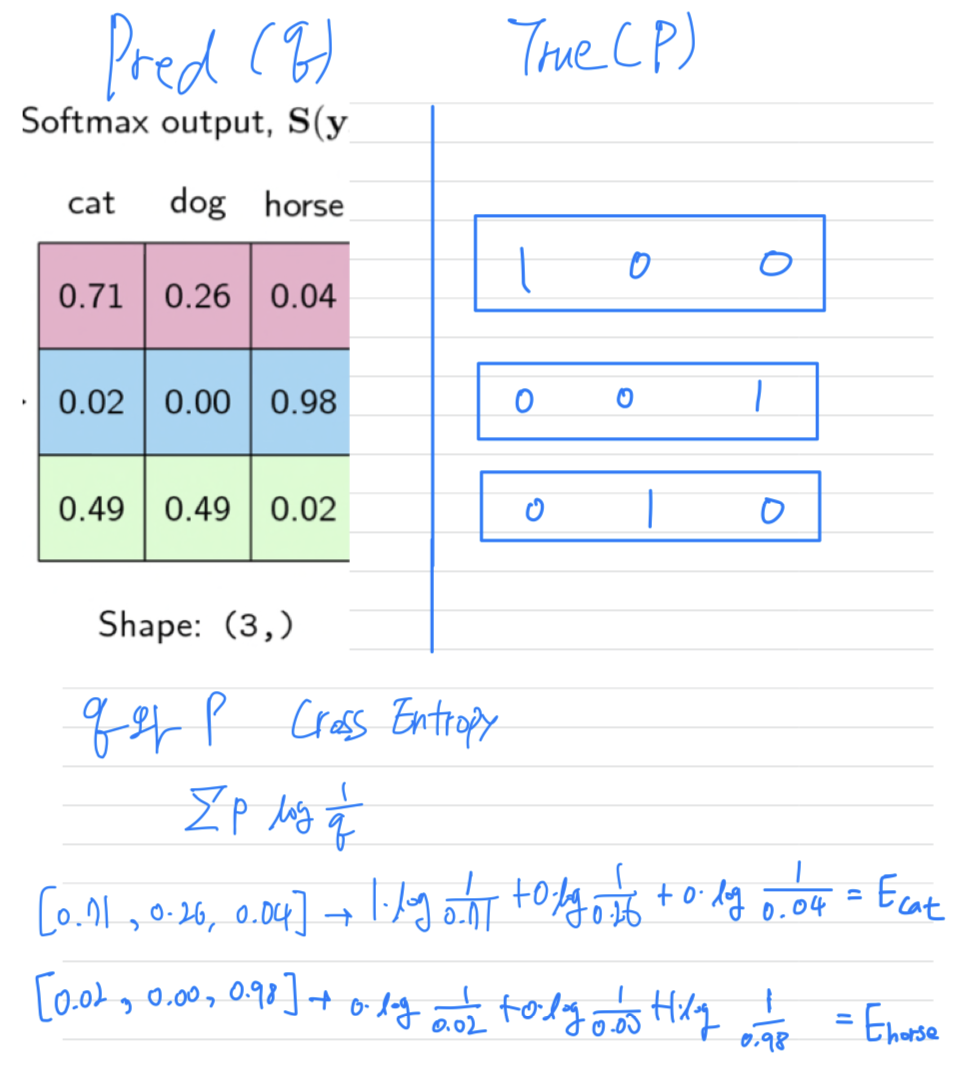
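A small sketch of softmax on three class scores. The scores are made-up values, not the ones in the figure, and subtracting the maximum before exponentiating is a standard numerical-stability trick added here that leaves the result unchanged.

```python
import math

def softmax(z):
    """Map a list of K real scores to K probabilities that sum to 1."""
    m = max(z)  # shift by the max so exp() cannot overflow
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

scores = [2.0, 1.0, 0.1]  # hypothetical outputs for three classes
probs = softmax(scores)
print(probs)
print(sum(probs))
```

Larger scores always receive larger probabilities, so the ranking of the classes is preserved while the outputs are normalized to sum to 1.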

Function of Hidden Neurons

- Play a critical role in the operation of an MLP

- Each layer corresponds to a distributed representation

➡️ The meaning of the vector values coming out of a hidden layer cannot be read off at a glance.

The meaning is distributed across the outputs of the nodes.

- Hidden neurons act as feature detectors

- They do so by performing a nonlinear transformation of the input data into a new space called the feature space

Summary

- Artificial Neural Network

- Universal approximator of functions (an ANN can approximate virtually any function reasonably well)

- Neuron model

- weight

- adder

- activation function

- Two types of signals for training a feed-forward MLP

- function signals : forward propagation

- error signals : backward propagation

- Hidden layers act as feature detectors

- More hidden layers: intelligence ⬆️, but training difficulty ⬆️