Attention Mechanism

What is Attention

- Attention emphasizes the important information inside a deep neural network.

Channel Attention

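- Considers the relationships between the channels of a feature map and emphasizes the important channels.

- Adjusts the scale of each channel to improve network performance.

- Squeezes the feature map along the channel direction (one descriptor per channel).

- Conventional deep neural networks such as CNNs represent the correlations between channels only implicitly, inside their weights, and rely on that to capture inter-channel relationships and emphasize particular channels.

- Channel Attention models these relationships explicitly in order to emphasize and rescale channels.

- This has the effect of emphasizing important, highly correlated channels.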

- Channel Attention steps (input feature of shape (n, h, w, c))

  - Global average pooling

  - Fully Connected Layer 1

  - ReLU activation

  - Fully Connected Layer 2

  - Sigmoid activation

  - Element-wise multiplication
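The steps listed above can be sketched in plain NumPy to make the tensor shapes explicit. This is a minimal sketch, not the notes' Keras implementation: the function name `channel_attention_np`, the random weights, and the reduction ratio `r` (how much Fully Connected Layer 1 shrinks the channel dimension) are assumptions introduced for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_attention_np(x, w1, b1, w2, b2):
    """x has shape (n, h, w, c). Returns the rescaled feature and the channel weights."""
    # Global average pooling over height and width: (n, h, w, c) -> (n, c)
    s = x.mean(axis=(1, 2))
    # Fully connected layer 1 followed by ReLU: (n, c) -> (n, c // r)
    z = np.maximum(s @ w1 + b1, 0.0)
    # Fully connected layer 2 followed by sigmoid: (n, c // r) -> (n, c), values in (0, 1)
    a = sigmoid(z @ w2 + b2)
    # Element-wise multiplication, broadcast over height and width
    return x * a[:, None, None, :], a

# Hypothetical sizes and random weights, only to exercise the function
rng = np.random.default_rng(0)
n, h, w, c, r = 2, 4, 4, 8, 4
x = rng.normal(size=(n, h, w, c))
w1, b1 = rng.normal(size=(c, c // r)), np.zeros(c // r)
w2, b2 = rng.normal(size=(c // r, c)), np.zeros(c)

y, a = channel_attention_np(x, w1, b1, w2, b2)
print(y.shape, a.shape)
```

Each channel of `x` is multiplied by a single scalar from `a`, so the output keeps the input shape while the relative scale of the channels changes.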

Spatial Attention

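- Captures the spatial information in a feature map to emphasize objects.

- Emphasizes regions of the feature map.

- Squeezes the feature map along the height and width directions (one value per spatial position).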

- Spatial Attention steps

  - Convolution layer 1

  - Sigmoid activation

  - Element-wise multiplication
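The three steps above can be sketched in NumPy as follows. The notes do not state the kernel size or filter count of the convolution, so this sketch assumes a 1x1 convolution with a single output filter; the name `spatial_attention_np` and the random kernel are likewise hypothetical.

```python
import numpy as np

def spatial_attention_np(x, kernel, bias=0.0):
    """x has shape (n, h, w, c); kernel has shape (c,), the weights of an
    assumed 1x1 convolution with one output filter."""
    # 1x1 convolution with one filter: collapse channels -> (n, h, w, 1)
    m = np.tensordot(x, kernel, axes=([3], [0]))[..., None] + bias
    # Sigmoid activation: one weight in (0, 1) per spatial position
    a = 1.0 / (1.0 + np.exp(-m))
    # Element-wise multiplication, broadcast over the channel axis
    return x * a, a

# Hypothetical sizes and random weights, only to exercise the function
rng = np.random.default_rng(0)
x = rng.normal(size=(2, 4, 4, 8))
kernel = rng.normal(size=8)

y, a = spatial_attention_np(x, kernel)
print(y.shape, a.shape)
```

Here every channel at a given (height, width) position is scaled by the same weight, which is the mirror image of channel attention, where every position within a channel shares one weight.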

Basic Network

Auto Encoder

- Auto Encoder: reconstructs the input image using a neural network

- Encoder + Decoder

- Encoder: encodes the input image into a latent value

- Decoder: decodes the latent value back into the original input image

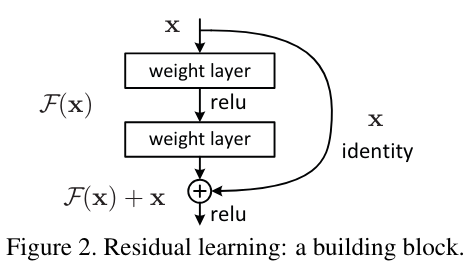

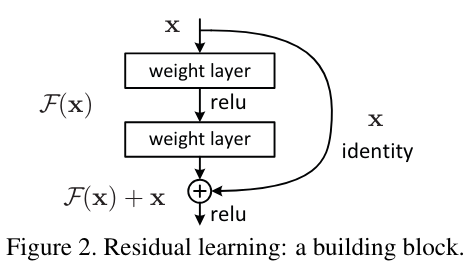
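The encode-then-decode flow can be illustrated with a deliberately simplified NumPy sketch. It assumes a linear encoder and decoder that share one fixed orthonormal basis, which stands in for the learned convolutional layers of a real autoencoder; `input_dim`, `latent_dim`, `encode`, and `decode` are hypothetical names chosen for this example.

```python
import numpy as np

rng = np.random.default_rng(0)
input_dim, latent_dim = 16, 4  # hypothetical sizes; latent is smaller than input

# Assumed stand-in for learned weights: an orthonormal basis of shape (16, 4)
basis, _ = np.linalg.qr(rng.normal(size=(input_dim, latent_dim)))

def encode(x):
    # Input -> latent value: (n, input_dim) -> (n, latent_dim)
    return x @ basis

def decode(z):
    # Latent value -> reconstruction: (n, latent_dim) -> (n, input_dim)
    return z @ basis.T

x = rng.normal(size=(3, input_dim))
z = encode(x)
x_hat = decode(z)

# Reconstruction error, the quantity an autoencoder is trained to minimize
mse = np.mean((x - x_hat) ** 2)
print(z.shape, x_hat.shape, mse)
```

Because the latent value has fewer dimensions than the input, the reconstruction is generally lossy; training a real autoencoder amounts to choosing the encoder and decoder weights that make this error small on the data.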

ResNet

- Task: Classification

- Built from residual blocks that use a residual connection

- Addresses the problem that a network becomes harder to train as it gets deeper.
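A residual connection adds the block's input back to its output, so the block only has to learn a correction F(x) on top of the identity. The following is a minimal NumPy sketch under simplifying assumptions: dense layers replace the convolutions, and the ReLU that ResNet applies after the addition is omitted. The name `residual_block_np` and the weights are hypothetical.

```python
import numpy as np

def residual_block_np(x, w1, w2):
    """Returns x + F(x), where F(x) = ReLU(x @ w1) @ w2."""
    f = np.maximum(x @ w1, 0.0) @ w2  # residual branch F(x)
    return x + f                      # skip connection adds the input back

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 8))

# With all-zero weights the residual branch vanishes and the block is the identity
zeros = np.zeros((8, 8))
y_identity = residual_block_np(x, zeros, zeros)

# With non-zero weights the output is the input plus a learned correction
w1 = rng.normal(size=(8, 8))
w2 = rng.normal(size=(8, 8))
y = residual_block_np(x, w1, w2)
print(np.allclose(y_identity, x), y.shape)
```

Since the skip path passes the input through unchanged, stacking many such blocks does not force the signal through every layer's weights, which is one common explanation for why deep residual networks remain trainable.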

Implementation

AutoEncoder

- Import TensorFlow and additional libraries

# Import tensorflow and additional libraries

import tensorflow as tf

import matplotlib.pyplot as plt

import numpy as np

tf.keras.backend.clear_session()

# Check version

print('tensorflow version: ', tf.__version__)

- Load the Fashion MNIST dataset

# Load the Fashion MNIST dataset

(x_train, _), (x_test, _) = tf.keras.datasets.fashion_mnist.load_data()

size_of_train = len(x_train)

size_of_test = len(x_test)

num_of_class = 10

print('The Shape of dataset:', x_train[0].shape)

print('The number of train image:', size_of_train)

print('The number of test image:', size_of_test)

# Train Data

plt.figure(figsize=(16, 10))

for i in range(num_of_class):

    ax = plt.subplot(1, num_of_class, i+1)

    plt.imshow(x_train[i], cmap='gray')

    ax.get_xaxis().set_visible(False)

    ax.get_yaxis().set_visible(False)

plt.show()

- Pre-processing

  - Reshape each image from (28, 28) to (28, 28, 1)

  - Normalize the pixel values from the range 0~255 to 0~1

print('== Before normalization ==')

print('Image Shape:', x_train[0].shape)

print('Value MIN: %d, MEAN:%.2f, MAX:%d'%(np.min(x_train[0]), np.mean(x_train[0]), np.max(x_train[0])))

## Dataset Pre-processing ##

# Normalization

x_train = x_train/255.

x_test = x_test/255.

# Reshape(Adding channel)

x_train = x_train.reshape((size_of_train, 28, 28, 1))

x_test = x_test.reshape((size_of_test, 28, 28, 1))

print('\n== After normalization ==')

print('Image Shape:', x_train[0].shape)

# Values are floats in [0, 1] after normalization, so print them with %.2f

print('Value MIN: %.2f, MEAN:%.2f, MAX:%.2f'

      %(np.min(x_train[0]), np.mean(x_train[0]), np.max(x_train[0])))

def channel_attention(x, name):

    #### Fill your code ####

    return x

def spatial_attention(x, name):

    #### Fill your code ####

    return x

# Conv2D (3x3, stride 1, same padding) -> BatchNormalization -> ReLU

def autoencoder_block(x, filter, name):

    x = tf.keras.layers.Conv2D(filters=filter, kernel_size=3, strides=(1, 1), padding='same', name=name+'_conv')(x)

    x = tf.keras.layers.BatchNormalization(name=name+'_bn')(x)

    x = tf.keras.layers.ReLU(name=name+'_relu')(x)

    return x