🎈 This review was written with reference to the DETR paper and other reviews of it.

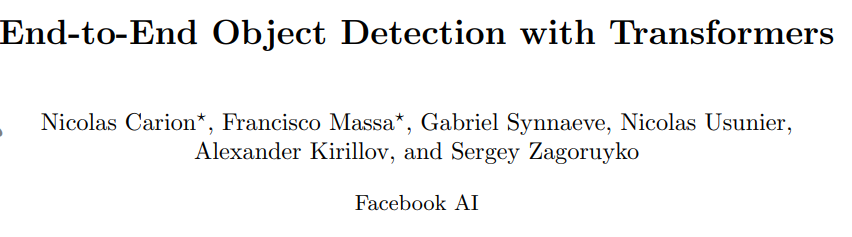

👩‍💻 Today I'll review DETR. DETR is an end-to-end object detector built on the transformer. Since the transformer appeared, many fields have been replacing their existing methods with transformers. If you have basic knowledge of the original Transformer architecture and of object detection, I think DETR is a paper you can read fairly easily.

Keywords

🎈 Removing NMS and anchor generation

🎈 Transformer encoder-decoder architecture

🎈 Bipartite matching

Introduction

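✔ The goal of object detection is to predict a set of bounding boxes and a category label for each one. Modern detectors make this prediction indirectly, through surrogate tasks defined on large sets of proposals, anchors, and the like. Their performance is also strongly affected by postprocessing steps that remove near-duplicate predictions and by heuristically designed anchors.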

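✔ DETR simplifies the training pipeline and proposes a way to predict detections directly. In other words, it does away with the hand-designed postprocessing steps mentioned above. To achieve this it adopts the self-attention-based transformer, which the authors argue is particularly well suited to removing duplicate predictions (the job NMS does in other detectors).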

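✔ DETR predicts all objects at once and is trained end-to-end with a set loss function that performs bipartite matching between predicted and ground-truth objects. Unlike most existing detectors, DETR does not require any customized layers, so it can be reproduced easily in any framework that provides standard CNN and transformer building blocks.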

✔ In short, DETR combines bipartite matching with a transformer decoder that decodes in parallel rather than autoregressively. The bipartite matching loss assigns each prediction uniquely to a GT object and is invariant to permutations of the predicted objects, so the predictions can be emitted in parallel.
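To make the permutation-invariance argument concrete, here is a minimal brute-force sketch. The set loss is taken as the minimum total matching cost over all one-to-one assignments, so reordering the predictions cannot change it. The cost values are made up. This tries every permutation rather than using the Hungarian algorithm, and it leaves out the ∅ padding and the per-term structure of the real DETR loss.

```python
from itertools import permutations

def set_loss(cost):
    """Minimum total cost over all one-to-one assignments of GT objects to predictions.

    cost[i][j] is a hypothetical matching cost between GT object i and prediction j,
    with len(cost) <= len(cost[0]). Brute force: only viable for tiny sets.
    """
    n_gt, n_pred = len(cost), len(cost[0])
    return min(
        sum(cost[i][perm[i]] for i in range(n_gt))
        for perm in permutations(range(n_pred), n_gt)
    )

# Toy costs: 2 GT objects vs 3 predictions (made-up values).
cost = [
    [0.9, 0.1, 0.5],
    [0.2, 0.8, 0.7],
]
# Reorder the predictions, i.e. permute the columns.
order = [2, 0, 1]
shuffled = [[row[j] for j in order] for row in cost]

print(round(set_loss(cost), 6))      # 0.3
print(round(set_loss(shuffled), 6))  # 0.3
```

Both calls pick the same pairs (GT 0 with the 0.1 prediction, GT 1 with the 0.2 prediction), only at different column indices. That is why the order in which the decoder emits its predictions does not matter to the loss.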

✔ DETR performs well on large objects but comparatively poorly on small objects. One can guess that this is because it works on a single, coarse CNN feature map, which makes small objects harder to resolve.

✔ DETR requires a very long training schedule and benefits from auxiliary decoding losses in the transformer.

Related work

Set Prediction

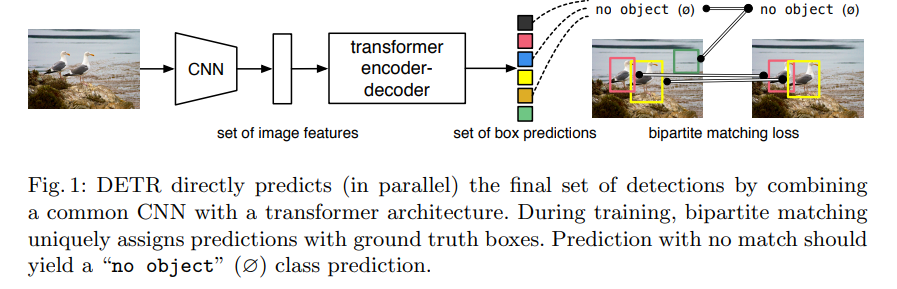

✔ First, consider what a set means. A set is an unordered collection with no duplicates. One of the difficulties for existing detectors was avoiding duplicate predictions, which they handled with postprocessing such as NMS. DETR's direct set prediction, by contrast, needs no postprocessing like NMS. This is guaranteed by a bipartite matching loss function based on the Hungarian algorithm.

✅ Bipartite matching

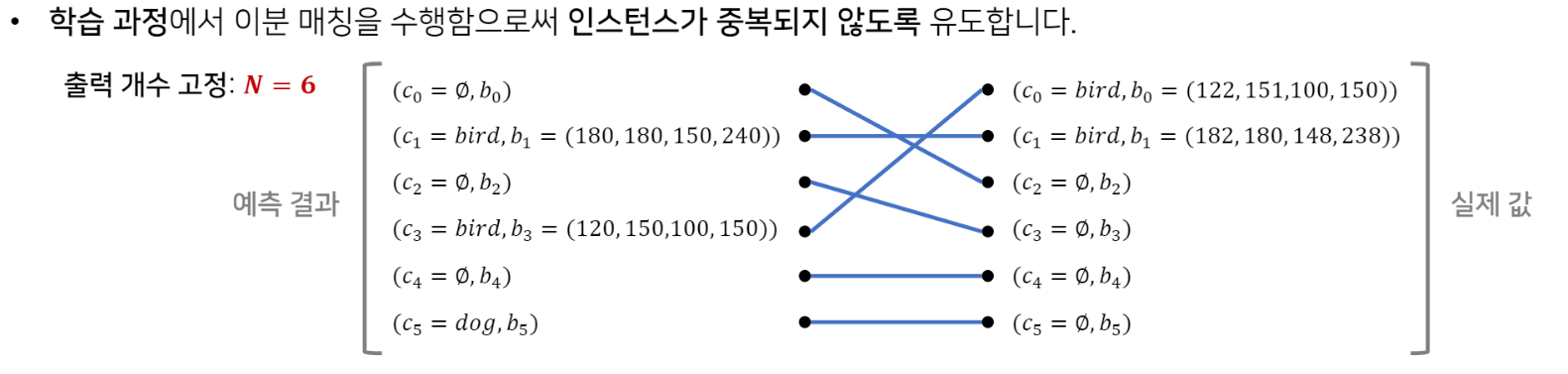

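- I borrowed a good example from the DETR review by the YouTube channel 동빈나 (Dongbinna). As the figure above shows, bipartite matching can be described simply as pairing predictions with GT values one-to-one, with no duplicates.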
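Trying every permutation is only feasible for tiny sets. The Hungarian algorithm finds the same optimal one-to-one matching in polynomial time. Below is a minimal pure-Python sketch of the Kuhn-Munkres algorithm with potentials, O(n²m), for a rectangular cost matrix with rows ≤ columns. For example, the rows could be GT objects and the columns the N predictions. The function name `hungarian` is illustrative only. In practice a library routine such as SciPy's `scipy.optimize.linear_sum_assignment` would normally be used instead.

```python
def hungarian(cost):
    """Return assignment[i] = column matched to row i, minimizing the total cost.

    cost is an n x m list of lists with n <= m (e.g. rows = GT objects,
    columns = N >= n predictions). Kuhn-Munkres with potentials, O(n^2 m).
    """
    n, m = len(cost), len(cost[0])
    INF = float("inf")
    u = [0.0] * (n + 1)   # row potentials (1-indexed)
    v = [0.0] * (m + 1)   # column potentials (1-indexed, v[0] is a dummy)
    p = [0] * (m + 1)     # p[j] = row matched to column j (0 = free)
    way = [0] * (m + 1)   # back-pointers along the augmenting path
    for i in range(1, n + 1):
        p[0] = i
        j0 = 0
        minv = [INF] * (m + 1)
        used = [False] * (m + 1)
        # Grow a shortest augmenting path from row i until it reaches a free column.
        while True:
            used[j0] = True
            i0, delta, j1 = p[j0], INF, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            # Shift the potentials so that at least one new edge becomes tight.
            for j in range(m + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        # Flip the matching along the augmenting path back to the root.
        while j0:
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    assignment = [-1] * n
    for j in range(1, m + 1):
        if p[j]:
            assignment[p[j] - 1] = j - 1
    return assignment

print(hungarian([[4, 1, 3], [2, 0, 5], [3, 2, 2]]))  # [1, 0, 2]
```

Every row gets a distinct column, which is exactly the "no duplicates" property described above. Rows left over in a DETR-style setup (predictions with no GT partner) would simply be treated as "no object".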

The DETR model

✅ Direct set predictions in detection

- a set prediction loss that forces unique matching between predicted and GT box

- an architecture that predicts a set of objects and models their relation

Object detection set prediction loss

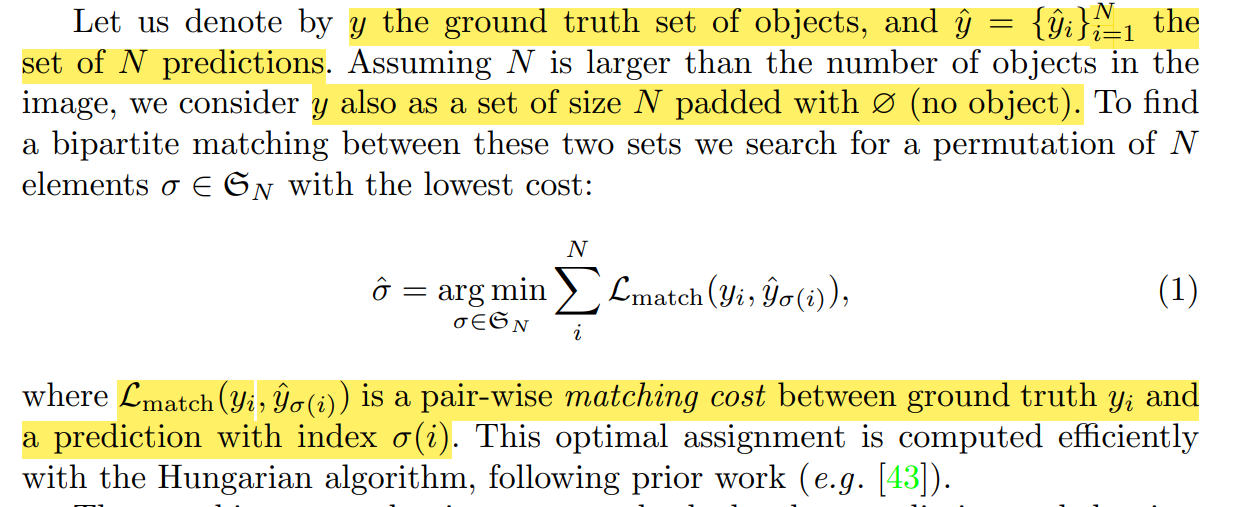

✔ DETR infers a fixed-size set of N predictions in a single pass through the decoder. N must be set larger than the number of objects that can appear in one image. The fixed N predictions will be explained in more detail when we cover the decoder.

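✔ Because N is larger than the number of objects, the GT set is padded with ∅ (no object) up to size N. The goal is then to find the one-to-one assignment between GT objects and predictions whose total pairwise matching cost is the smallest.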

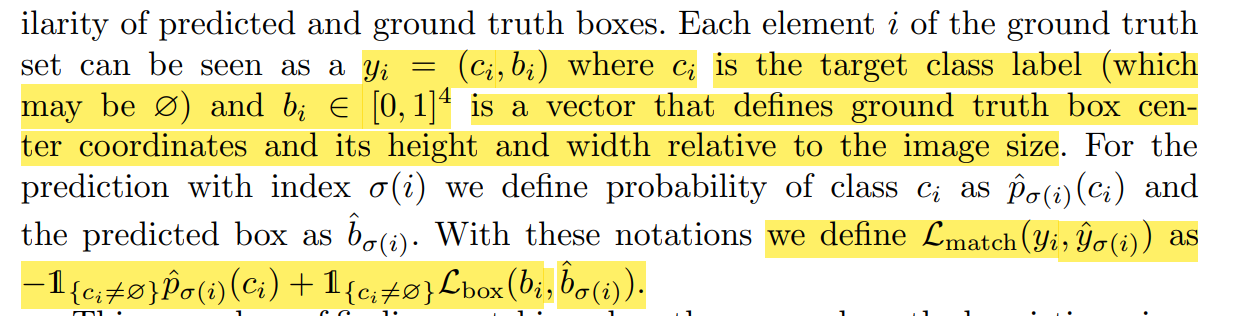

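✔ As shown above, each ground-truth element is $y_i = (c_i, b_i)$, where $c_i$ is the target class label (which may be ∅) and $b_i \in [0, 1]^4$ is a vector giving the GT box's center coordinates and its height and width relative to the image size. Finding this matching plays the same role as the heuristic assignment rules used in existing detectors, such as matching proposals or anchors to ground truth.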
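For readers who cannot see the equation images, the matching step as defined in the DETR paper can be written out as follows. Here $\mathfrak{S}_N$ is the set of permutations of N elements, $\hat{p}_{\sigma(i)}(c_i)$ is the predicted probability of class $c_i$ for prediction $\sigma(i)$, and $\hat{b}_{\sigma(i)}$ is the predicted box.

$$
\hat{\sigma} = \underset{\sigma \in \mathfrak{S}_N}{\arg\min} \sum_{i}^{N} \mathcal{L}_{\text{match}}\left(y_i, \hat{y}_{\sigma(i)}\right),
\qquad
\mathcal{L}_{\text{match}}\left(y_i, \hat{y}_{\sigma(i)}\right) = -\mathbb{1}_{\{c_i \neq \varnothing\}}\, \hat{p}_{\sigma(i)}(c_i) + \mathbb{1}_{\{c_i \neq \varnothing\}}\, \mathcal{L}_{\text{box}}\left(b_i, \hat{b}_{\sigma(i)}\right)
$$

Pairs whose target is ∅ contribute zero matching cost. In effect, only the real GT objects need to be assigned, and the remaining predictions are left as "no object".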

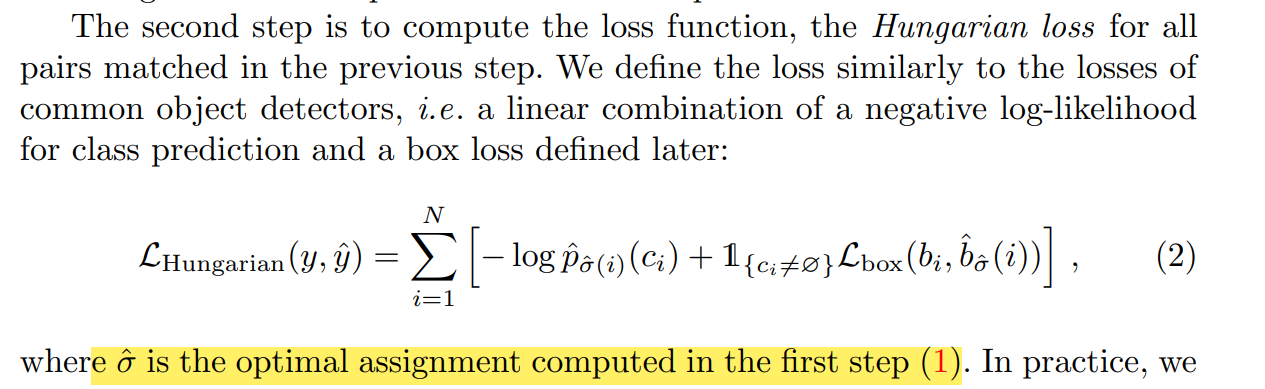

✔ The next step computes the Hungarian loss over all pairs matched in the previous step.

✔ The Hungarian loss is defined by the equation above. The important detail is that, to account for class imbalance, the log-probability term is down-weighted by a factor of 10 when the target class is ∅ (no object).
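As a minimal sketch of that loss for one image, assume the optimal matching is already known and each matched pair's box loss has been precomputed. The names `hungarian_loss`, `NO_OBJECT`, and the dict-of-probabilities layout are all hypothetical, chosen for readability. Real implementations work on batched logits.

```python
import math

NO_OBJECT = -1          # hypothetical label standing in for the ∅ class
NO_OBJECT_WEIGHT = 0.1  # ∅ log-probability terms are down-weighted by a factor of 10

def hungarian_loss(probs, gt_labels, match, box_losses):
    """Hungarian loss for one image, given an already computed optimal matching.

    probs[j]      : dict {class label: predicted probability} for slot j (N slots)
    gt_labels[i]  : class of GT object i
    match[i]      : prediction slot matched to GT object i
    box_losses[i] : L_box between GT box i and its matched predicted box
    """
    # Pad the targets with ∅: every slot not matched to a GT object must predict "no object".
    targets = [NO_OBJECT] * len(probs)
    for i, j in enumerate(match):
        targets[j] = gt_labels[i]
    loss = 0.0
    for j, c in enumerate(targets):
        weight = NO_OBJECT_WEIGHT if c == NO_OBJECT else 1.0
        loss -= weight * math.log(probs[j][c])
    # The box term only applies to slots matched to real objects (c_i != ∅).
    return loss + sum(box_losses)

probs = [
    {0: 0.7, 1: 0.2, NO_OBJECT: 0.1},
    {0: 0.1, 1: 0.1, NO_OBJECT: 0.8},
    {0: 0.1, 1: 0.6, NO_OBJECT: 0.3},
]
loss = hungarian_loss(probs, gt_labels=[0, 1], match=[0, 2], box_losses=[0.5, 0.25])
print(round(loss, 4))  # 1.6398
```

Slot 1 is unmatched, so it is trained toward ∅. Its term, -0.1·log 0.8, is ten times smaller than it would be without the down-weighting, which keeps the many "no object" slots from dominating the loss.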

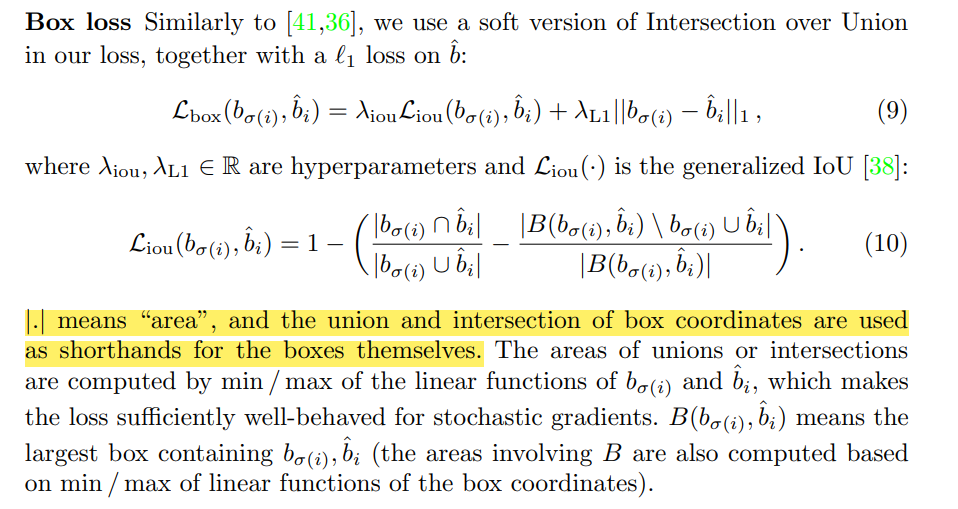

Bounding box loss

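✔ In the Hungarian loss above, the box loss $\mathcal{L}_{box}(\cdot)$ does not regress offsets from initial guesses as existing detectors do. Instead, boxes are predicted directly, and the loss combines an L1 loss with the GIoU (Generalized IoU) loss. The weights $\lambda_{iou}$ and $\lambda_{L1}$ are hyperparameters. Both losses are normalized by the number of objects inside the batch.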
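A minimal sketch of $\mathcal{L}_{box} = \lambda_{iou}\mathcal{L}_{iou} + \lambda_{L1}\lVert b - \hat{b}\rVert_1$ follows, with $\mathcal{L}_{iou} = 1 - \text{GIoU}$. Boxes are normalized (cx, cy, w, h) tuples. The defaults λ_L1 = 5 and λ_iou = 2 are the values used in the paper's experiments. The function names are hypothetical, and the code assumes boxes with positive width and height so the divisions are safe.

```python
def cxcywh_to_xyxy(b):
    """(cx, cy, w, h) -> (x0, y0, x1, y1)."""
    cx, cy, w, h = b
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)

def giou(a, b):
    """Generalized IoU of two boxes given as (cx, cy, w, h)."""
    ax0, ay0, ax1, ay1 = cxcywh_to_xyxy(a)
    bx0, by0, bx1, by1 = cxcywh_to_xyxy(b)
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    # Area of the smallest box enclosing both boxes.
    hull = (max(ax1, bx1) - min(ax0, bx0)) * (max(ay1, by1) - min(ay0, by0))
    return inter / union - (hull - union) / hull

def box_loss(b, b_hat, lambda_l1=5.0, lambda_iou=2.0):
    """lambda_iou * (1 - GIoU) + lambda_l1 * L1 distance between the box vectors."""
    l1 = sum(abs(x - y) for x, y in zip(b, b_hat))
    return lambda_iou * (1.0 - giou(b, b_hat)) + lambda_l1 * l1

def normalized_box_loss(pairs):
    """Sum over all matched (gt, pred) pairs in the batch, divided by the number of objects."""
    return sum(box_loss(b, b_hat) for b, b_hat in pairs) / max(len(pairs), 1)

gt = (0.25, 0.25, 0.5, 0.5)
pred = (0.75, 0.75, 0.5, 0.5)
print(giou(gt, gt), giou(gt, pred))                  # 1.0 -0.5
print(box_loss(gt, pred))                            # 8.0
print(normalized_box_loss([(gt, gt), (gt, pred)]))   # 4.0
```

The GIoU term still gives a useful signal when boxes do not overlap: plain IoU would be 0 for `gt` and `pred`, while GIoU is -0.5. The L1 term alone would depend on absolute box size, which is why the two are combined.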

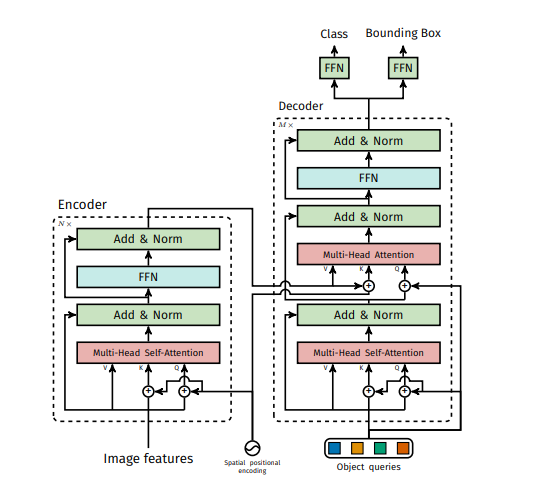

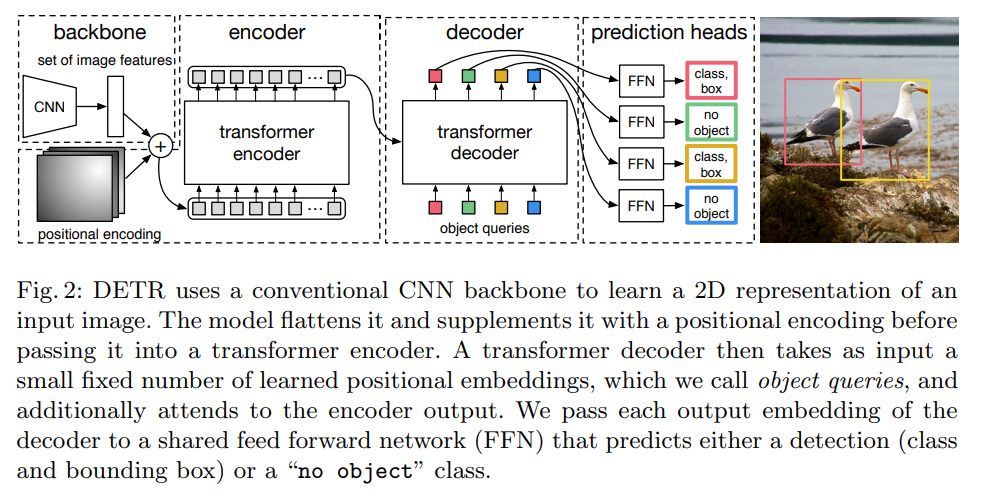

DETR architecture

✔ The DETR architecture is very simple. It has three main components: a CNN backbone, an encoder-decoder transformer, and a simple feed-forward network (FFN). The figure above shows DETR's detailed architecture, and you can see that it is nearly identical to the original transformer.

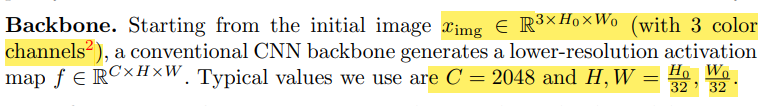

Backbone

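✔ The final feature map produced by the backbone has C = 2048 channels and spatial size $H, W = H_0/32, W_0/32$, where $H_0 \times W_0$ is the input image size. These values are for the default ResNet-50 backbone.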
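As a quick sketch of this arithmetic, assume torchvision-style ResNet padding. Under that assumption each of the five stride-2 stages maps a side length n to ceil(n / 2), which compounds to ceil(n / 32). The function name is hypothetical and the 800 × 1066 input is an arbitrary example size.

```python
import math

def backbone_feature_shape(h0, w0, channels=2048, stride=32):
    """(C, H, W) of the final ResNet-50 feature map for an h0 x w0 input image.

    Assumes each of the five stride-2 stages maps n -> ceil(n / 2) (usual padding),
    which compounds to ceil(n / 32).
    """
    return channels, math.ceil(h0 / stride), math.ceil(w0 / stride)

print(backbone_feature_shape(800, 1066))  # (2048, 25, 34)
```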

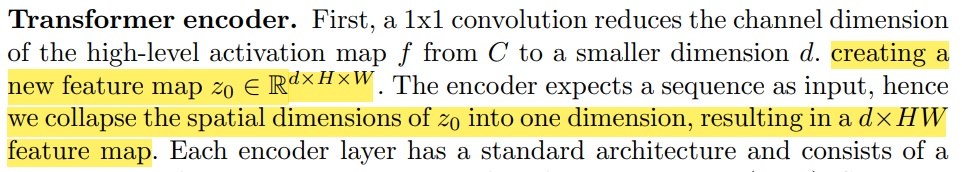

Transformer encoder

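✔ The transformer encoder first applies a 1×1 convolution that reduces the channel dimension of the feature map from C to a smaller dimension d. Because the encoder expects a sequence as input, it then collapses the spatial dimensions into one, producing a d×HW feature map. Also, since the transformer architecture is permutation-invariant, a fixed positional encoding is added to the input of each attention layer to compensate.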
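Here is a minimal pure-Python sketch of preparing the encoder input: a 1×1 convolution (a per-pixel linear map from C to d channels), flattening to H·W tokens, and a fixed 2D sine positional encoding. The encoding follows the spirit of DETR's, with half the dimensions encoding the row and half the column, but it is a simplified version, not the official implementation. DETR adds the encoding at every attention layer, whereas this sketch adds it once only to show the shapes. All sizes and weights are toy values.

```python
import math

def conv1x1(feature, weight):
    """1x1 convolution: feature is C x H x W, weight is d x C -> d x H x W."""
    C, H, W = len(feature), len(feature[0]), len(feature[0][0])
    return [[[sum(weight[k][c] * feature[c][y][x] for c in range(C))
              for x in range(W)] for y in range(H)] for k in range(len(weight))]

def flatten(feature):
    """d x H x W -> list of H*W tokens, each a d-dim vector (row-major order)."""
    d, H, W = len(feature), len(feature[0]), len(feature[0][0])
    return [[feature[k][y][x] for k in range(d)] for y in range(H) for x in range(W)]

def sine_pos_encoding(H, W, d, temperature=10000.0):
    """Fixed 2D sine encoding: first d/2 dims encode row y, last d/2 encode column x."""
    half = d // 2
    def encode(pos):
        return [math.sin(pos / temperature ** (2 * (i // 2) / half)) if i % 2 == 0
                else math.cos(pos / temperature ** (2 * (i // 2) / half))
                for i in range(half)]
    return [encode(y) + encode(x) for y in range(H) for x in range(W)]

feature = [  # C=3 channels, H=2, W=3 (toy values)
    [[1, 2, 3], [4, 5, 6]],
    [[0, 1, 0], [1, 0, 1]],
    [[2, 2, 2], [3, 3, 3]],
]
weight = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]]  # d=4 x C=3 (toy 1x1 conv)
tokens = flatten(conv1x1(feature, weight))
pos = sine_pos_encoding(H=2, W=3, d=4)
encoder_input = [[t + p for t, p in zip(tok, pe)] for tok, pe in zip(tokens, pos)]
print(len(encoder_input), len(encoder_input[0]))  # 6 4
print(tokens[0])  # [1, 0, 2, 3]
```

Without the positional term, shuffling the 6 tokens would just shuffle the encoder's outputs. The encoding is what lets attention know where each token came from in the image.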

Transformer decoder

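✔ The decoder keeps the standard transformer structure and uses multi-headed self-attention and encoder-decoder attention to transform N embeddings of size d. The difference from the original transformer is that DETR decodes the N objects in parallel rather than autoregressively. As mentioned earlier, N must be at least the number of objects that can appear in one image. For example, if a COCO image contains up to 63 objects, N must be at least 63.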

✔ Because the decoder is also permutation-invariant, the N input embeddings must differ from each other to produce different results. These input embeddings are learned positional encodings called object queries, and, as in the encoder, they are added to the input of each attention layer.

✔ The decoder transforms the N object queries into N output embeddings. Each output embedding is then decoded independently by an FFN into box coordinates and a class label.
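To illustrate "parallel" decoding, here is a minimal sketch of single-head scaled dot-product cross-attention in which N object queries attend to the encoder output. It assumes identity query/key/value projections and leaves out the self-attention among queries and the FFN that the real decoder layer also has. The point is that no query waits for another query's output, unlike autoregressive decoding. Function names and values are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def cross_attention(queries, memory):
    """Single-head scaled dot-product attention with identity projections.

    queries: N object queries (each a d-dim vector)
    memory : HW encoder output tokens (each a d-dim vector)
    The loop is for readability only: no query depends on another query's output.
    """
    d = len(queries[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in memory]
        weights = softmax(scores)
        outputs.append([sum(w * v[c] for w, v in zip(weights, memory)) for c in range(d)])
    return outputs

memory = [[10.0, 0.0], [0.0, 10.0]]                # two encoder tokens, d = 2
queries = [[10.0, 0.0], [0.0, 10.0], [0.0, 0.0]]   # N = 3 object queries
out = cross_attention(queries, memory)
print(len(out))  # 3: one output embedding per query
print(out[2])    # [5.0, 5.0]: a query that matches nothing averages the memory
```

Each query ends up focusing on a different part of the memory, which is why the queries must be distinct. Identical queries would produce identical outputs.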

Prediction feed-forward networks(FFNs)

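✔ The final prediction is computed by a 3-layer perceptron with ReLU activations and hidden dimension d, plus a linear projection layer. The FFN predicts the normalized center coordinates, height, and width of the box with respect to the input image, and the linear layer predicts the class label using a softmax. Together they predict a fixed-size set of N bounding boxes. Because N is usually much larger than the actual number of objects, an extra class ∅ marks slots where no object is detected.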
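A minimal sketch of turning one slot's raw head outputs into a detection follows. It applies a softmax over K+1 class logits, where the last index is assumed to be ∅, and a sigmoid that maps the 4 box outputs to normalized (cx, cy, w, h) in [0, 1]. Those are then scaled to pixel corners. Dropping slots whose top class is ∅ is one simple decoding rule chosen for illustration, and `decode_slot` is a hypothetical name.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def decode_slot(class_logits, box_logits, img_w, img_h):
    """Turn one slot's head outputs into (label, score, (x0, y0, x1, y1) in pixels).

    class_logits: K + 1 values; the last one is assumed to be the ∅ ("no object") class
    box_logits  : 4 raw values; sigmoid maps them to normalized (cx, cy, w, h)
    Returns None when the most likely class is ∅.
    """
    probs = softmax(class_logits)
    label = max(range(len(probs)), key=probs.__getitem__)
    if label == len(probs) - 1:
        return None
    cx, cy, w, h = (sigmoid(z) for z in box_logits)
    box = ((cx - w / 2) * img_w, (cy - h / 2) * img_h,
           (cx + w / 2) * img_w, (cy + h / 2) * img_h)
    return label, probs[label], box

print(decode_slot([2.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0], img_w=100, img_h=200))
# label 0, box (25.0, 50.0, 75.0, 150.0)
print(decode_slot([0.0, 0.0, 5.0], [0.0, 0.0, 0.0, 0.0], img_w=100, img_h=200))  # None
```

Predicting normalized coordinates keeps the box outputs on the same scale regardless of image size, which also keeps the L1 term of the box loss comparable across images.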

Experiments

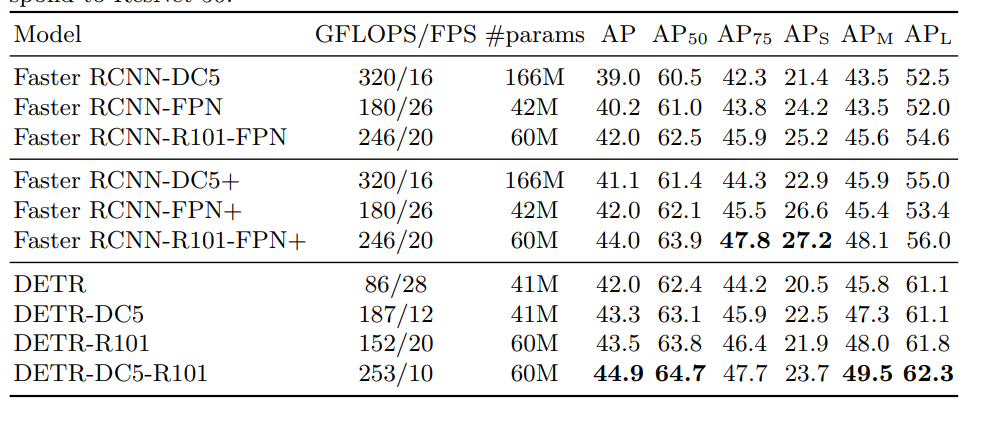

📌 Baseline for comparison: Faster R-CNN

📌 Dataset: COCO minival

📌 Optimizer: AdamW

📌 Backbones: ResNet-50 and ResNet-101, both pretrained on ImageNet (the ResNet-101 model is called DETR-R101)

📌 Additional: removing the stride of the conv5 stage and adding dilation to increase the feature resolution (DETR-DC5)

📌 Scale augmentation, random crop augmentation, dropout of 0.1
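The DETR paper notes that DC5 doubles the feature resolution at the cost of roughly 16× more computation in the encoder's self-attention. Here is a quick sketch of why, counting encoder tokens (H·W) and token pairs for an 800 × 1216 input. The input size is an arbitrary example, and ceil-rounding of the feature size is assumed.

```python
import math

def encoder_tokens(h0, w0, stride):
    """Number of encoder tokens (H * W) for an h0 x w0 input at a given backbone stride."""
    return math.ceil(h0 / stride) * math.ceil(w0 / stride)

for name, stride in [("DETR (C5)", 32), ("DETR-DC5", 16)]:
    n = encoder_tokens(800, 1216, stride)
    # Self-attention compares every token with every other token: n^2 pairs.
    print(f"{name}: stride {stride}, {n} tokens, {n * n} attention pairs")
# DETR (C5): stride 32, 950 tokens, 902500 attention pairs
# DETR-DC5: stride 16, 3800 tokens, 14440000 attention pairs
```

Halving the stride quadruples the token count, and since self-attention is quadratic in the number of tokens, its cost grows 16×.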

✔ This table compares Faster R-CNN and DETR. DETR-DC5-R101 achieves the highest AP. It does not give the best result in every category, but it performs well in most of them.

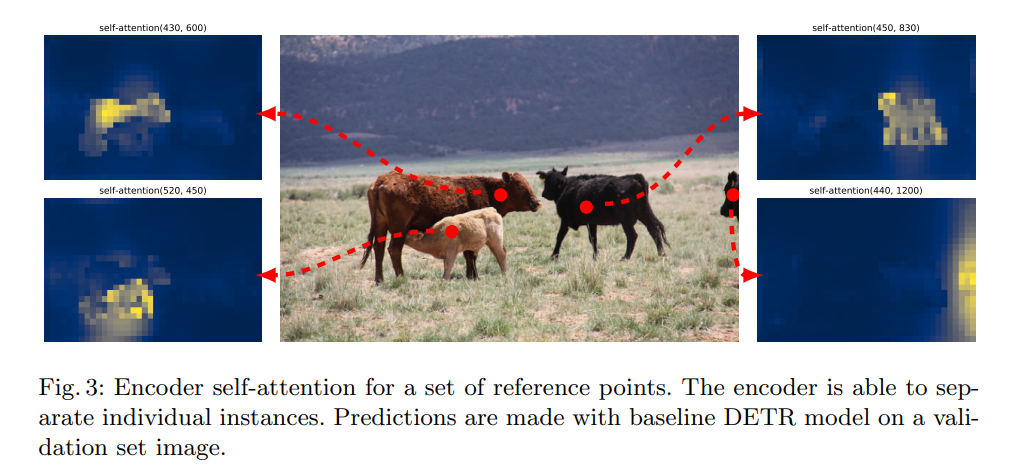

✔ The figure above shows attention maps from the encoder's self-attention. You can see that it already separates the individual object instances well.

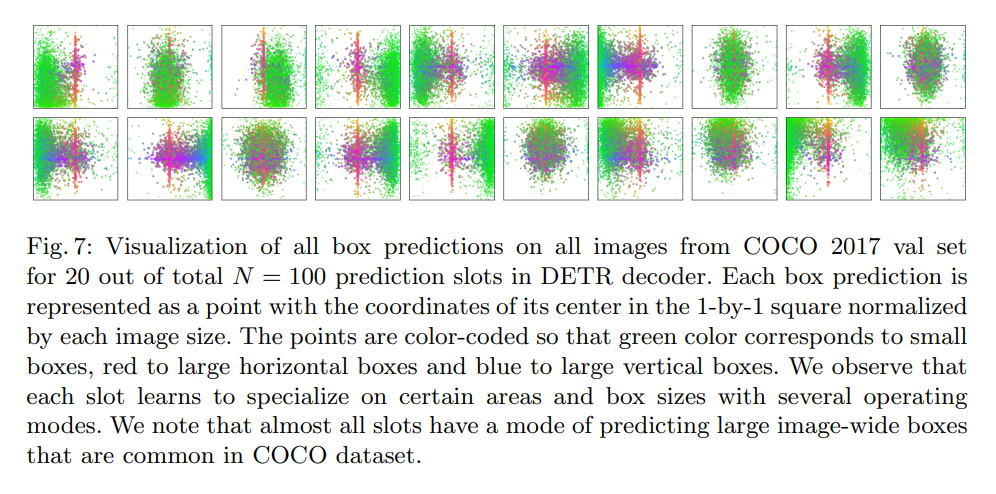

✔ This figure visualizes the box predictions made by each decoder prediction slot. You can see that each slot specializes in certain areas and box sizes.

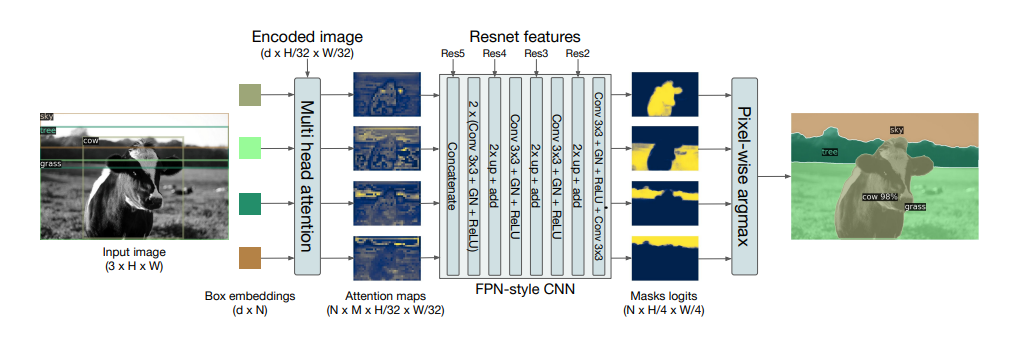

✅ The paper presents many other experimental results, including ablations of the proposed components and results for different numbers of encoder and decoder layers. If you are curious, I recommend reading it. DETR is also applied to panoptic segmentation, which reportedly follows the architecture below.