Joint Decoding

CTC/attention-based end-to-end speech recognition is performed by label-synchronous decoding with a beam search, similar to conventional attention-based ASR. However, we take the CTC probabilities into account to find a hypothesis that is better aligned to the input speech.

For the beam search, the decoder has to compute a score for each candidate character, namely the probability that it appears next. Combining the CTC and attention-based scores is not trivial, however, because CTC scores are computed frame-synchronously while attention-based scores are computed label-synchronously. The paper proposes two ways to use the two scores together.

1) Rescoring

Two-pass approach

First, a beam search is run using only the attention-based sequence probabilities to obtain a set of complete hypotheses.

Second, those complete hypotheses are rescored using both the CTC probabilities and the attention probabilities.
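The second pass can be sketched as follows. This is a minimal illustration, not the paper's implementation: it assumes each complete hypothesis already carries an attention log-probability and a CTC log-probability, and that the two are combined by linear interpolation with a weight (called `ctc_weight` here, a hypothetical name with an arbitrary default). The `Hypothesis` class and the scores are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    text: str
    att_logp: float  # log p_att(C | X) from the first-pass beam search
    ctc_logp: float  # log p_ctc(C | X) computed for the complete hypothesis


def rescore(hyps, ctc_weight=0.3):
    """Return hypotheses sorted by the interpolated score, best first.

    ctc_weight is an assumed interpolation weight in [0, 1];
    0.0 means the ranking uses the attention score only.
    """
    def joint(h):
        return ctc_weight * h.ctc_logp + (1.0 - ctc_weight) * h.att_logp

    return sorted(hyps, key=joint, reverse=True)


# Made-up scores for illustration only.
nbest = [
    Hypothesis("bunni", att_logp=-1.0, ctc_logp=-9.0),
    Hypothesis("bunny", att_logp=-1.5, ctc_logp=-2.0),
]
best = rescore(nbest)[0]
print(best.text)
```

With these toy numbers the attention score alone prefers the first hypothesis, while the interpolated score prefers the second, because the CTC term penalizes the poorly aligned candidate.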

2) One-Pass Decoding

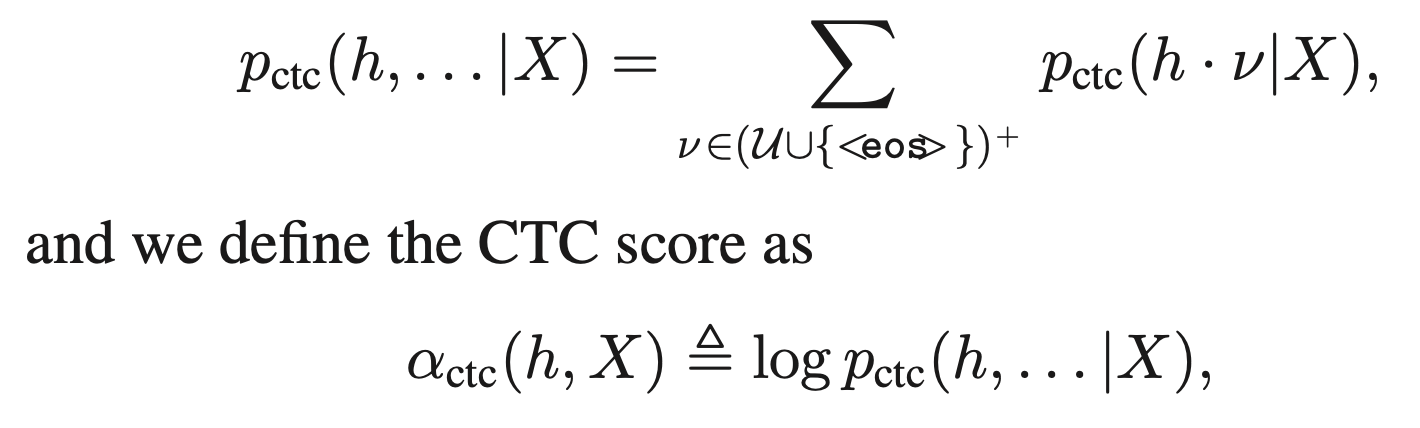

CTC prefix probability

The CTC prefix probability is defined as the cumulative probability of all label sequences that have the partial hypothesis h as their prefix.
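To make the definition concrete, here is a brute-force sketch that enumerates every frame-level alignment of a tiny example and sums the probability of those whose collapsed label sequence starts with h. It is only an illustration under simplifying assumptions: frames are treated as independent per-frame distributions, `-` stands for the blank symbol, h itself counts as having h as a prefix, and the function names are hypothetical. Practical decoders compute this quantity with a forward-style recursion rather than by enumeration.

```python
import itertools
import math

BLANK = "-"  # assumed symbol for the CTC blank


def collapse(path):
    """Apply the CTC mapping: merge repeated symbols, then drop blanks."""
    out = []
    prev = None
    for s in path:
        if s != prev and s != BLANK:
            out.append(s)
        prev = s
    return "".join(out)


def prefix_probability(frame_probs, prefix):
    """Sum the probability of every alignment whose collapsed labels start with prefix."""
    symbols = list(frame_probs[0])
    total = 0.0
    for path in itertools.product(symbols, repeat=len(frame_probs)):
        if collapse(path).startswith(prefix):
            total += math.prod(p[s] for p, s in zip(frame_probs, path))
    return total


# Two frames with the same made-up distribution over {a, b, blank}.
frames = [{"a": 0.4, "b": 0.1, BLANK: 0.5}] * 2
p_a = prefix_probability(frames, "a")  # about 0.6
p_ab = prefix_probability(frames, "ab")  # about 0.04
print(p_a, p_ab)
```

The empty prefix always has probability 1, and extending a prefix can only lower its probability, which is what makes the quantity usable as a running score during beam search.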

Why beam search?

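To find the optimal label sequence, we first need to calculate the probability of every possible path in CTC, sum the probabilities of the paths that yield the same label sequence, and then select the label sequence with the highest probability. Doing this exhaustively is intractable because the number of paths grows exponentially with the number of frames. Max decoding, which simply takes the most likely symbol at each frame, avoids that cost but is not guaranteed to find the most probable label sequence.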

Furthermore, max decoding gives us no way to incorporate a language model. A language model would penalize a non-word like BUNNI, and once BUNNI is corrected to BUNNY, the word BUGS should be a more likely preceding word than BOX. Using prefix beam search, we can alleviate both of these issues.
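The first of these issues, that taking the most likely symbol at each frame can miss the most probable label sequence, shows up even in a two-frame toy example. The sketch below compares that per-frame max decoding with exhaustive decoding that sums over all paths. The frame probabilities are made up, `-` is assumed to be the blank symbol, and the function names are hypothetical. The exhaustive search is exponential in the number of frames, so it is only meant to illustrate what a beam search approximates.

```python
import itertools
import math
from collections import defaultdict

BLANK = "-"  # assumed symbol for the CTC blank


def collapse(path):
    """Apply the CTC mapping: merge repeated symbols, then drop blanks."""
    out = []
    prev = None
    for s in path:
        if s != prev and s != BLANK:
            out.append(s)
        prev = s
    return "".join(out)


def best_path_decode(frame_probs):
    """Max decoding: take the most likely symbol per frame, then collapse."""
    return collapse(max(p, key=p.get) for p in frame_probs)


def exact_decode(frame_probs):
    """Sum path probabilities per label sequence and return the most probable one."""
    symbols = list(frame_probs[0])
    label_prob = defaultdict(float)
    for path in itertools.product(symbols, repeat=len(frame_probs)):
        label_prob[collapse(path)] += math.prod(
            p[s] for p, s in zip(frame_probs, path)
        )
    best = max(label_prob, key=label_prob.get)
    return best, dict(label_prob)


# Blank is the single most likely symbol in both frames.
frames = [{"a": 0.4, BLANK: 0.6}, {"a": 0.4, BLANK: 0.6}]
greedy = best_path_decode(frames)
exact, probs = exact_decode(frames)
print(repr(greedy), repr(exact), probs)
```

Here max decoding returns the empty sequence (its single path has probability 0.36), while the label "a" collects three paths totalling 0.64, so the exhaustive search picks "a".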

[Watanabe et al., 2017] Hybrid CTC/Attention Architecture for End-to-End Speech Recognition, Shinji Watanabe, Takaaki Hori, Suyoun Kim, John R. Hershey, Tomoki Hayashi, IEEE Journal of Selected Topics in Signal Processing, Vol. 11, 2017

https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=8068205