KoBERT Sentiment Analysis Modeling

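Goals

- The on-device machine learning options for an iOS app are CoreML and CreateML, but CreateML supports a narrower set of NLP techniques than expected, so I decided to build a custom model for CoreML.

- I was more comfortable designing NLP models in Python than working with CoreML directly, so I decided to train the model in Python and port it to CoreML using the model conversion tooling that CoreML provides.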

Implementation

Korean Sentiment Classification Dataset

- Used a public dataset from AIHub that provides Korean sentiment data with detailed emotion labels.

- Trained on roughly 40,000 examples.

Prepare Model

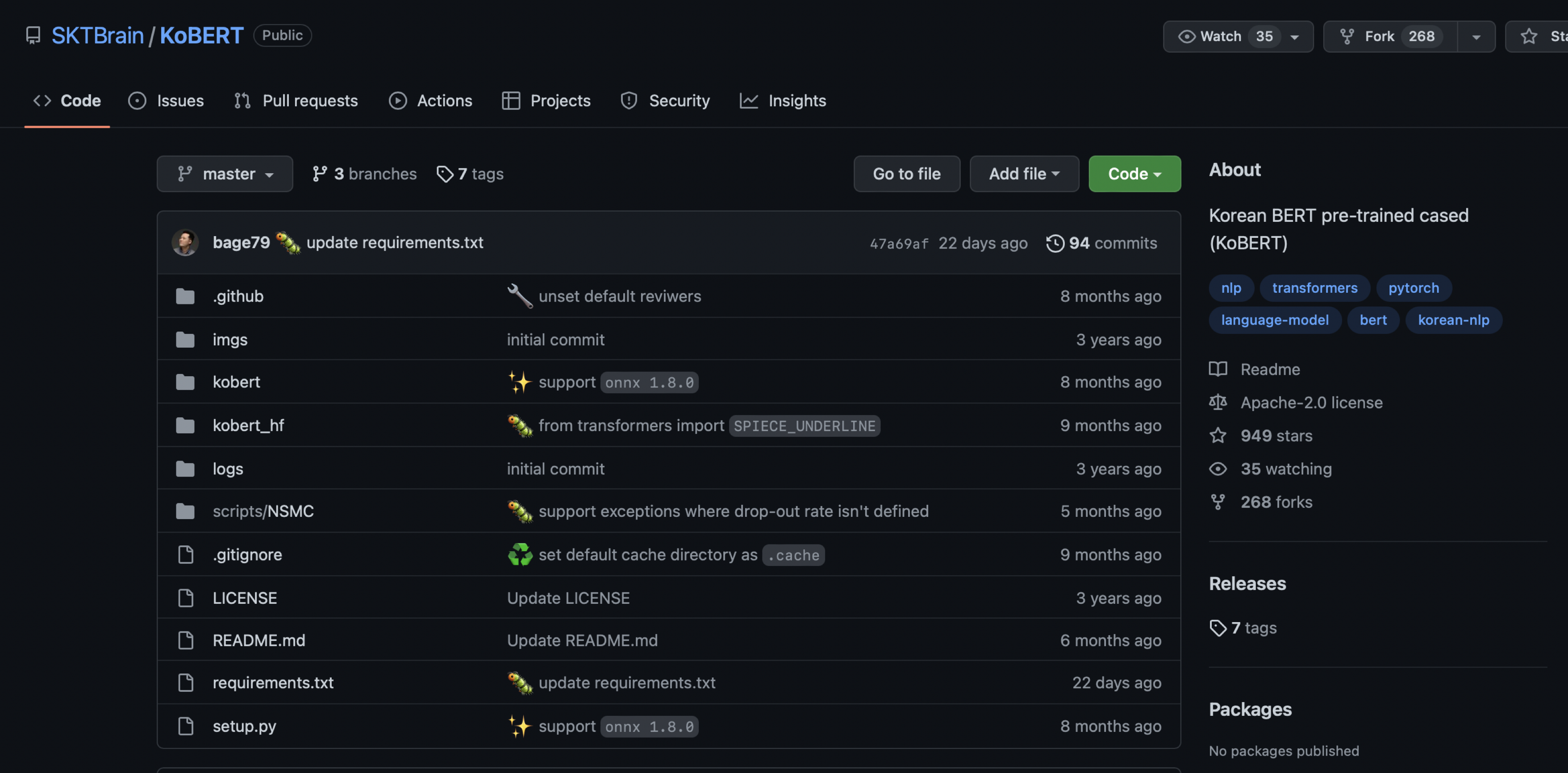

- Used the tokenizer from the Hugging Face port of KoBERT (using the model directly from the Git repository no longer seems to be possible).

```python
!pip install gluonnlp pandas tqdm
!pip install mxnet
!pip install sentencepiece
!pip install transformers
!pip install torch
!pip install 'git+https://github.com/SKTBrain/KoBERT.git#egg=kobert_tokenizer&subdirectory=kobert_hf'

import torch
from torch import nn
import torch.nn.functional as F
import torch.optim as optim
from torch.utils.data import Dataset, DataLoader
import gluonnlp as nlp
import numpy as np
from tqdm import tqdm, tqdm_notebook
import pandas as pd
from sklearn.model_selection import train_test_split

from kobert_tokenizer import KoBERTTokenizer
from transformers import BertModel
# transformers' own AdamW is deprecated in favor of the PyTorch implementation
from torch.optim import AdamW
from transformers.optimization import get_cosine_schedule_with_warmup

from google.colab import drive
drive.mount('/content/drive')

# Use the GPU when Colab provides one, otherwise fall back to the CPU
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
```

- Install and import everything needed to use PyTorch, the ML tooling, and the KoBERT tokenizer in Google Colab.

- Mount Google Drive, where the AIHub dataset (CSV) was uploaded.

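```python
tokenizer = KoBERTTokenizer.from_pretrained('skt/kobert-base-v1')
bertmodel = BertModel.from_pretrained('skt/kobert-base-v1', return_dict=False)
vocab = nlp.vocab.BERTVocab.from_sentencepiece(tokenizer.vocab_file, padding_token='[PAD]')
tok = tokenizer.tokenize

# Setting parameters
max_len = 64          # sequence length fed to BERT (longer inputs are truncated)
batch_size = 64
warmup_ratio = 0.1    # fraction of training steps used for learning-rate warmup
num_epochs = 5
max_grad_norm = 1     # gradient clipping threshold
log_interval = 200    # print training stats every 200 batches
learning_rate = 5e-5
```

- Load the tokenizer, the pretrained model, and the vocabulary that KoBERT uses.

- The hyperparameters are the same ones the KoBERT sentiment analysis example uses.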

Model Class & Funcs

```python
class BERTDataset(Dataset):
    """Tokenizes every sentence once up front and pairs it with its integer label."""

    def __init__(self, dataset, sent_idx, label_idx, bert_tokenizer, vocab, max_len,
                 pad, pair):
        transform = nlp.data.BERTSentenceTransform(
            bert_tokenizer, max_seq_length=max_len, vocab=vocab, pad=pad, pair=pair)

        self.sentences = [transform([i[sent_idx]]) for i in dataset]
        self.labels = [np.int32(i[label_idx]) for i in dataset]

    def __getitem__(self, i):
        # (token_ids, valid_length, segment_ids) + (label,)
        return self.sentences[i] + (self.labels[i],)

    def __len__(self):
        return len(self.labels)
```

- A dataset class has to be defined to feed data to the BERT model.
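To make the shape of each dataset item concrete, here is a rough pure-Python sketch of what the sentence transform produces for a single sentence: token ids wrapped in `[CLS]` and `[SEP]`, padded to `max_len`, plus the count of real tokens and all-zero segment ids. The toy vocabulary, the whitespace tokenizer, and the `encode_sentence` helper are illustrative assumptions, not gluonnlp's actual implementation.

```python
# Toy stand-ins (assumptions): KoBERT uses a SentencePiece vocabulary instead.
TOY_VOCAB = {"[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3, "i": 4, "feel": 5, "great": 6}


def encode_sentence(sentence, max_len, vocab=TOY_VOCAB):
    """Return (token_ids, valid_length, segment_ids) for one sentence."""
    tokens = sentence.lower().split()        # placeholder for the real tokenizer
    tokens = tokens[: max_len - 2]           # leave room for [CLS] and [SEP]
    tokens = ["[CLS]"] + tokens + ["[SEP]"]

    token_ids = [vocab.get(t, vocab["[UNK]"]) for t in tokens]
    valid_length = len(token_ids)            # number of non-padding positions

    padding = max_len - valid_length
    token_ids += [vocab["[PAD]"]] * padding
    segment_ids = [0] * max_len              # single sentence, so one segment

    return token_ids, valid_length, segment_ids


token_ids, valid_length, segment_ids = encode_sentence("I feel great today", max_len=8)
print(token_ids)      # [2, 4, 5, 6, 1, 3, 0, 0]
print(valid_length)   # 6
```

The `valid_length` value is what the classifier later uses to tell real tokens apart from padding.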

```python
class BERTClassifier(nn.Module):
    def __init__(self,
                 bert,
                 hidden_size=768,
                 num_classes=6,
                 dr_rate=None,
                 params=None):
        super(BERTClassifier, self).__init__()
        self.bert = bert
        self.dr_rate = dr_rate

        self.classifier = nn.Linear(hidden_size, num_classes)
        if dr_rate:
            self.dropout = nn.Dropout(p=dr_rate)

    def gen_attention_mask(self, token_ids, valid_length):
        # 1 for real tokens, 0 for padding positions
        attention_mask = torch.zeros_like(token_ids)
        for i, v in enumerate(valid_length):
            attention_mask[i][:v] = 1
        return attention_mask.float()

    def forward(self, token_ids, valid_length, segment_ids):
        attention_mask = self.gen_attention_mask(token_ids, valid_length)

        # return_dict=False makes BertModel return a (sequence_output, pooled_output) tuple
        _, pooler = self.bert(input_ids=token_ids,
                              token_type_ids=segment_ids.long(),
                              attention_mask=attention_mask.float().to(token_ids.device))
        # Without this fallback, `out` would be undefined when dr_rate is None
        out = self.dropout(pooler) if self.dr_rate else pooler
        return self.classifier(out)
```

- Pass the number of labels in the dataset as `num_classes`; alternatively, it could probably be inferred directly through the model.
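The Python loop in `gen_attention_mask` can also be written as a single broadcast comparison. The sketch below is one possible vectorized equivalent, not part of the original KoBERT code; `vectorized_attention_mask` is a hypothetical helper name, and it assumes `valid_length` is a 1-D integer tensor with one entry per row.

```python
import torch


def loop_attention_mask(token_ids, valid_length):
    """Same logic as gen_attention_mask: fill one row at a time."""
    attention_mask = torch.zeros_like(token_ids)
    for i, v in enumerate(valid_length):
        attention_mask[i][:v] = 1
    return attention_mask.float()


def vectorized_attention_mask(token_ids, valid_length):
    """Position j of row i is 1.0 exactly when j < valid_length[i]."""
    positions = torch.arange(token_ids.size(1), device=token_ids.device)
    valid_length = valid_length.to(token_ids.device)
    return (positions.unsqueeze(0) < valid_length.unsqueeze(1)).float()


token_ids = torch.zeros(3, 5, dtype=torch.long)
valid_length = torch.tensor([5, 2, 0])

mask = vectorized_attention_mask(token_ids, valid_length)
print(mask)
```

Both functions return the same mask here; the vectorized form simply avoids iterating over the batch in Python.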

```python
def calc_accuracy(X, Y):
    # Fraction of rows whose highest-scoring class matches the label
    max_vals, max_indices = torch.max(X, 1)
    train_acc = (max_indices == Y).sum().item() / max_indices.size(0)
    return train_acc
```

```python
def predict(sentence):
    # The label '0' is a dummy; BERTDataset requires one, but it is not used here
    dataset = [[sentence, '0']]
    test = BERTDataset(dataset, 0, 1, tok, vocab, max_len, True, False)
    test_dataloader = torch.utils.data.DataLoader(test, batch_size=batch_size, num_workers=2)

    model.eval()
    answer = 0

    with torch.no_grad():  # inference only, so no gradients are needed
        for batch_id, (token_ids, valid_length, segment_ids, label) in enumerate(test_dataloader):
            token_ids = token_ids.long().to(device)
            segment_ids = segment_ids.long().to(device)

            out = model(token_ids, valid_length, segment_ids)

            for logits in out:
                logits = logits.detach().cpu().numpy()
                answer = np.argmax(logits)

    return answer
```

- `predict` runs a sentence through the trained model, takes the argmax over the output logits, and returns the predicted label index.
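As a sanity check on what `calc_accuracy` measures, the same computation can be spelled out without tensors: take the argmax of each row of logits and count how often it equals the label. The `batch_accuracy` name and the numbers below are made up for illustration.

```python
def argmax(values):
    """Index of the largest value (the first one wins on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def batch_accuracy(logits, labels):
    """Fraction of rows whose highest-scoring class equals the label."""
    correct = sum(1 for row, y in zip(logits, labels) if argmax(row) == y)
    return correct / len(labels)


logits = [
    [2.1, 0.3, -1.0],   # argmax 0
    [0.2, 0.1, 1.7],    # argmax 2
    [-0.5, 0.9, 0.4],   # argmax 1
    [1.2, 1.1, 0.0],    # argmax 0
]
labels = [0, 2, 2, 0]

print(batch_accuracy(logits, labels))  # 0.75
```

The training loop later in this post averages these per-batch values, so a smaller final batch counts as much as a full one and the printed epoch accuracy is a close approximation rather than an exact figure.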

Reference: KoBERT Naver movie review sentiment analysis with Hugging Face

I borrowed heavily from it for the Hugging Face-related code!

Train Model

```python
train_set = pd.read_csv('/content/drive/MyDrive/Colab Notebooks/감성대화말뭉치_최종데이터__Training.csv')
validation_set = pd.read_csv('/content/drive/MyDrive/Colab Notebooks/감성대화말뭉치_최종데이터__Validation.csv')

# Keep only the emotion category and the first human utterance, and drop empty rows
train_set = train_set.loc[:, ['감정_대분류', '사람문장1']].dropna()
validation_set = validation_set.loc[:, ['감정_대분류', '사람문장1']].dropna()

train_set.columns = ['label', 'data']
validation_set.columns = ['label', 'data']

# Joy, anxiety, embarrassment, sadness, anger, hurt
label_map = {'기쁨': 0, '불안': 1, '당황': 2, '슬픔': 3, '분노': 4, '상처': 5}

# Some raw labels carry a trailing space ('기쁨 ', '불안 '), so strip before mapping;
# astype(int) raises if any label is left unmapped
train_set['label'] = train_set['label'].str.strip().map(label_map).astype(int)
validation_set['label'] = validation_set['label'].str.strip().map(label_map).astype(int)
```

- Load the CSV files and keep only the columns that are needed.

- Only two columns are used: `감정_대분류` (the top-level emotion category) and `사람문장1` (the first human utterance). The remaining columns are dropped, and after extracting the unique emotion categories, the Korean label values are replaced with integers.
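Some raw labels in the code above differ only by a trailing space ('기쁨 ' versus '기쁨'). A quick way to spot such variants is to count the raw values before and after normalizing them. The sample list below is made up for illustration and is not taken from the AIHub data.

```python
from collections import Counter

# Hypothetical raw label values, including two with a trailing space.
raw_labels = ["기쁨", "기쁨 ", "불안", "불안 ", "당황", "슬픔", "분노", "상처", "기쁨"]

label_map = {"기쁨": 0, "불안": 1, "당황": 2, "슬픔": 3, "분노": 4, "상처": 5}

raw_counts = Counter(raw_labels)
print(len(raw_counts))          # 8 distinct strings, although there are only 6 emotions

normalized = [label.strip() for label in raw_labels]
unknown = sorted(set(normalized) - set(label_map))
print(unknown)                  # [] means every label has an integer id

encoded = [label_map[label] for label in normalized]
print(Counter(encoded))
```

Checking `unknown` before encoding makes a missed variant show up immediately instead of surfacing later as a failed integer conversion.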

```python
train_set_data = [[i, str(j)] for i, j in zip(train_set['data'], train_set['label'])]
# Not used below: evaluation runs on the split taken from train_set_data
validation_set_data = [[i, str(j)] for i, j in zip(validation_set['data'], validation_set['label'])]

train_set_data, test_set_data = train_test_split(train_set_data, test_size=0.2, random_state=4)

train_set_data = BERTDataset(train_set_data, 0, 1, tok, vocab, max_len, True, False)
test_set_data = BERTDataset(test_set_data, 0, 1, tok, vocab, max_len, True, False)

train_dataloader = torch.utils.data.DataLoader(train_set_data, batch_size=batch_size, num_workers=2)
test_dataloader = torch.utils.data.DataLoader(test_set_data, batch_size=batch_size, num_workers=2)
```

- The dataset used for training is shaped into a list of `[data, label]` pairs.

- The given `train_set_data` is split 1:4 into a test portion and a training portion (`test_size=0.2`).
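For a sense of scale, `test_size=0.2` holds out one fifth of the rows. The sketch below mimics that with the standard library only; it does not reproduce scikit-learn's exact shuffling, `split_rows` is a hypothetical helper, and the 40,000-row figure is the approximate dataset size mentioned earlier, not an exact count.

```python
import math
import random


def split_rows(rows, test_size, seed):
    """Shuffle a copy of rows and hold out ceil(test_size * n) of them."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = math.ceil(len(shuffled) * test_size)
    return shuffled[n_test:], shuffled[:n_test]


# Placeholder rows in the same [data, label] shape used above.
rows = [[f"sentence {i}", str(i % 6)] for i in range(40_000)]
train_rows, test_rows = split_rows(rows, test_size=0.2, seed=4)

print(len(train_rows), len(test_rows))   # 32000 8000
```

Fixing the seed, as `random_state=4` does in the real call, keeps the split identical across runs so accuracy numbers stay comparable.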

```python
model = BERTClassifier(bertmodel, dr_rate=0.5).to(device)

# Prepare optimizer and schedule (warmup followed by cosine decay)
# Biases and LayerNorm weights are excluded from weight decay
no_decay = ['bias', 'LayerNorm.weight']
optimizer_grouped_parameters = [
    {'params': [p for n, p in model.named_parameters() if not any(nd in n for nd in no_decay)], 'weight_decay': 0.01},
    {'params': [p for n, p in model.named_parameters() if any(nd in n for nd in no_decay)], 'weight_decay': 0.0}
]

optimizer = AdamW(optimizer_grouped_parameters, lr=learning_rate)
loss_fn = nn.CrossEntropyLoss()

t_total = len(train_dataloader) * num_epochs   # total number of optimizer steps
warmup_step = int(t_total * warmup_ratio)

scheduler = get_cosine_schedule_with_warmup(optimizer, num_warmup_steps=warmup_step, num_training_steps=t_total)
```
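The schedule ramps the learning rate linearly from 0 up to `learning_rate` over the warmup steps and then lets it decay along half a cosine wave. The pure-Python sketch below follows that shape for the default half-cycle setting; `lr_at_step` is a hypothetical helper, and 500 batches per epoch is an assumed figure (roughly 32,000 training rows at batch size 64), not a number reported in this post.

```python
import math

learning_rate = 5e-5
warmup_ratio = 0.1
num_epochs = 5
batches_per_epoch = 500  # assumption for illustration

t_total = batches_per_epoch * num_epochs
warmup_step = int(t_total * warmup_ratio)


def lr_at_step(step):
    """Learning rate after `step` optimizer updates."""
    if step < warmup_step:
        # Linear warmup from 0 to the peak learning rate
        return learning_rate * step / max(1, warmup_step)
    # Cosine decay from the peak down to 0 over the remaining steps
    progress = (step - warmup_step) / max(1, t_total - warmup_step)
    return learning_rate * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


for step in (0, warmup_step // 2, warmup_step, (t_total + warmup_step) // 2, t_total):
    print(step, f"{lr_at_step(step):.2e}")
```

Under these assumptions the peak of 5e-5 is reached at step 250, and the rate is back to half of that midway through the decay phase.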

```python
for e in range(num_epochs):
    train_acc = 0.0
    test_acc = 0.0

    model.train()
    for batch_id, (token_ids, valid_length, segment_ids, label) in enumerate(tqdm_notebook(train_dataloader)):
        optimizer.zero_grad()

        token_ids = token_ids.long().to(device)
        segment_ids = segment_ids.long().to(device)
        label = label.long().to(device)

        out = model(token_ids, valid_length, segment_ids)
        loss = loss_fn(out, label)
        loss.backward()

        torch.nn.utils.clip_grad_norm_(model.parameters(), max_grad_norm)
        optimizer.step()
        scheduler.step()  # Update learning rate schedule

        train_acc += calc_accuracy(out, label)
        if batch_id % log_interval == 0:
            print("epoch {} batch id {} loss {} train acc {}".format(e+1, batch_id+1, loss.item(), train_acc / (batch_id+1)))
    print("epoch {} train acc {}".format(e+1, train_acc / (batch_id+1)))

    model.eval()
    with torch.no_grad():  # no gradients are needed for evaluation
        for batch_id, (token_ids, valid_length, segment_ids, label) in enumerate(tqdm_notebook(test_dataloader)):
            token_ids = token_ids.long().to(device)
            segment_ids = segment_ids.long().to(device)
            label = label.long().to(device)

            out = model(token_ids, valid_length, segment_ids)
            test_acc += calc_accuracy(out, label)
    print("epoch {} test acc {}".format(e+1, test_acc / (batch_id+1)))
```

- Train for 5 epochs.
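`clip_grad_norm_` with `max_grad_norm = 1` rescales all gradients together whenever their combined L2 norm exceeds 1, which keeps a single bad batch from producing an oversized update. Below is a simplified pure-Python sketch of that rule on a flat list of gradient values; PyTorch's version works on parameter tensors in place, and `clip_by_global_norm` is a hypothetical name.

```python
import math


def clip_by_global_norm(grads, max_norm, eps=1e-6):
    """Scale grads so their L2 norm is at most max_norm; leave small ones alone."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    scale = min(1.0, max_norm / (total_norm + eps))
    return [g * scale for g in grads], total_norm


clipped, norm_before = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
print(norm_before)   # 5.0
print(clipped)       # roughly [0.6, 0.8]

unchanged, _ = clip_by_global_norm([0.3, 0.4], max_norm=1.0)
print(unchanged)     # [0.3, 0.4], already within the limit
```

The direction of the gradient is preserved; only its length is capped.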

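```python
# Option 1: save the entire model object
torch.save(model, '/content/drive/MyDrive/Colab Notebooks/SentimentAnalysisKOBert.pt')

# Option 2: save only the parameter values (state dict)
torch.save(model.state_dict(), '/content/drive/MyDrive/Colab Notebooks/SentimentAnalysisKOBert_StateDict.pt')
```

- PyTorch code for saving the trained model so it can be reused later.

- There are two approaches: the first saves the entire model, and the second saves only the state dict (the parameter values).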
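A state dict stores only parameter tensors, so restoring it means constructing the model again and calling `load_state_dict`. A minimal round-trip sketch follows; `TinyClassifier` is a stand-in for `BERTClassifier` so the example runs without downloading KoBERT, and the temporary file path is arbitrary.

```python
import os
import tempfile

import torch
from torch import nn


class TinyClassifier(nn.Module):
    """Stand-in model (assumption): a single linear layer with 6 output classes."""

    def __init__(self, hidden_size=8, num_classes=6):
        super().__init__()
        self.classifier = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        return self.classifier(x)


model = TinyClassifier()

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "state_dict.pt")
    torch.save(model.state_dict(), path)

    # Rebuild the architecture first, then load the saved parameters into it
    restored = TinyClassifier()
    restored.load_state_dict(torch.load(path, map_location="cpu"))

restored.eval()  # switch off dropout and similar layers before inference

x = torch.ones(2, 8)
with torch.no_grad():
    same = torch.allclose(model(x), restored(x))
print(same)
```

For the real model the same pattern would apply with `BERTClassifier(bertmodel, dr_rate=0.5)` in place of `TinyClassifier()`.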

Implement Model

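```python
sentence = '임시 데이터 문장입니다!'  # "This is a placeholder sentence!"
predict(sentence)
```

- The `predict` function implemented earlier preprocesses the given sentence with the tokenizer, feeds it to the model, and returns the argmax label. That completes a simple input-output supervised learning model.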
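`predict` returns only the integer index, so turning it back into an emotion name takes the inverse of the label mapping used during preprocessing. The sketch below also applies a softmax to show how logits could be read as rough confidence scores; `describe_prediction` and the example logits are hypothetical, and the English glosses are approximate translations of the AIHub categories.

```python
import math

# Inverse of the label mapping used when preparing the training data.
ID_TO_LABEL = {
    0: "기쁨 (joy)",
    1: "불안 (anxiety)",
    2: "당황 (embarrassment)",
    3: "슬픔 (sadness)",
    4: "분노 (anger)",
    5: "상처 (hurt)",
}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def describe_prediction(logits):
    """Return (label index, label name, softmax probability of that label)."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return best, ID_TO_LABEL[best], probs[best]


index, name, confidence = describe_prediction([0.2, -1.3, 0.1, 3.4, 0.8, 1.0])
print(index, name, round(confidence, 2))
```

Returning the probability alongside the label makes it possible for the app to treat low-confidence predictions differently, if that turns out to be useful.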

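There turned out to be more usable data and models (AIHub, KoBERT, and so on) than I expected, and plenty of examples as well, so modeling in Colab went without much trouble. But then an issue I never saw coming showed up...!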
