1. 와인데이터 분석

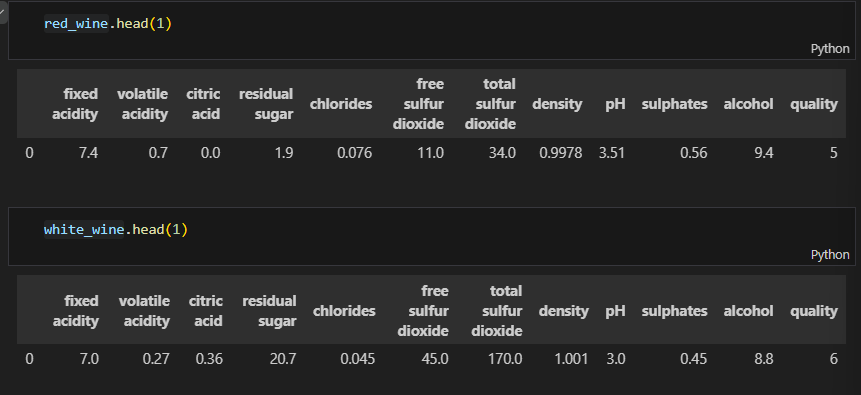

1_데이터 읽어오기

import pandas as pd

red_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-red.csv'

white_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-white.csv'red_wine = pd.read_csv(red_url, sep=';')

white_wine = pd.read_csv(white_url, sep=';')

# (주의) ; 로 해야 아래 처럼 뜸. :로 하면 이상하게 뜬다...2_컬럼조사

white_wine.columnsIndex(['fixed acidity', 'volatile acidity', 'citric acid', 'residual sugar',

'chlorides', 'free sulfur dioxide', 'total sulfur dioxide', 'density',

'pH', 'sulphates', 'alcohol', 'quality'],

dtype='object')

3_데이터합치기

데이터를 합치기 전에 레드/화이트 구분을 지어줘야 함

red_wine['color'] = 1.

white_wine['color'] = 0.

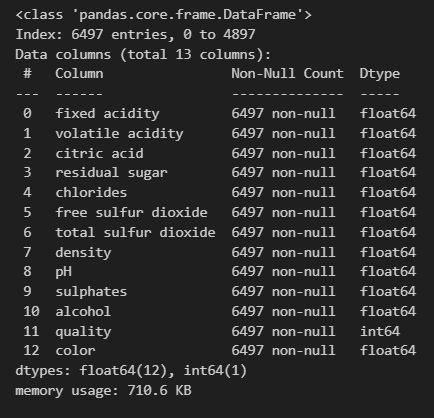

wine = pd.concat([red_wine, white_wine])

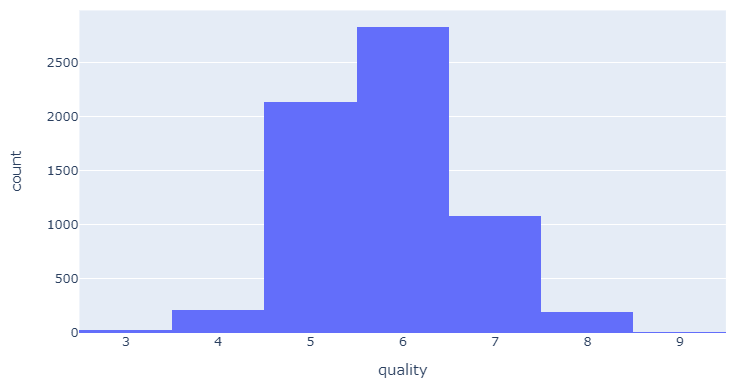

wine.info()4_['quality'] 컬럼 histogram

wine['quality'].unique()array([5, 6, 7, 4, 8, 3, 9], dtype=int64)

import plotly.express as px

# 데이터는 wine, x축은 quality

fig = px.histogram(wine, x='quality')

fig.show()

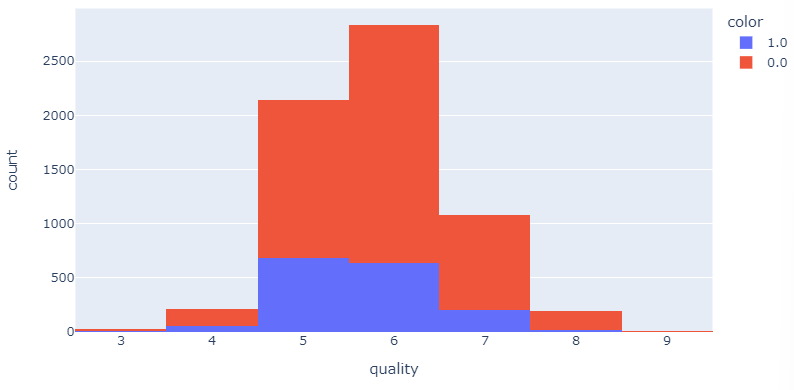

5_등급별 histogram (레드/화이트)

# y자리에 color 로 함으로써 데이터별 색상을 넣어 줌

fig = px.histogram(wine, x='quality', color='color')

fig.show()6_분류기 (레드/화이트)

# 1) feature data = 레드/화이트 맞추기

X = wine.drop(['color'], axis=1)

# 2) label data = 맞추고 싶은 대상

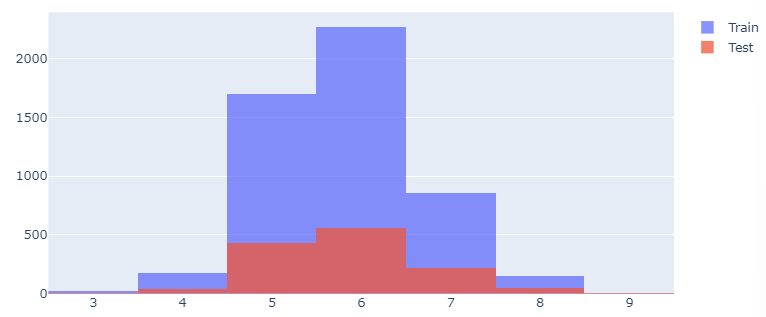

y = wine['color']# 3) 훈련/테스트용 설정 (train/test split)

# 모듈

from sklearn.model_selection import train_test_split

import numpy as np

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=13)

# y_train에 어떤게 있는지 확인, return_counts(갯수) 확인

np.unique(y_train, return_counts=True)(array([0., 1.]), array([3913, 1284], dtype=int64))

# 4) 어느 정도 구분되었는지 Histogram으로 확인

# graph_objects 모듈

import plotly.graph_objects as go

# Figure 호출

fig = go.Figure()

# go에서 Histogram을 가지고 옴

fig.add_trace(go.Histogram(x=X_train['quality'], name='Train'))

fig.add_trace(go.Histogram(x=X_test['quality'], name='Test'))

# 설정

# update_layout은 겹쳐지게(overlay)

# 투명도(update_traces)는 0.75

fig.update_layout(barmode='overlay')

fig.update_traces(opacity=0.75)

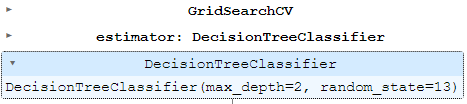

fig.show()7_Decision tree

# 1) fit(학습)

# 모듈

from sklearn.tree import DecisionTreeClassifier

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, y_train)# 2) train accuracy(학습 결과 확인)

# 모듈

from sklearn.metrics import accuracy_score

# predict(훈련된 값)

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

# 결과 확인 (참값, 예측값)

print('Train Acc :', accuracy_score(y_train, y_pred_tr))

print('Test Acc :', accuracy_score(y_test, y_pred_test))Train Acc : 0.9553588608812776

Test Acc : 0.9569230769230769

8_데이터 전처리

X.columns

# feature data = 레드/화이트 맞추기

# X = wine.drop(['color'], axis=1)Index(['fixed acidity', 'volatile acidity', 'citric acid', 'residual sugar',

'chlorides', 'free sulfur dioxide', 'total sulfur dioxide', 'density',

'pH', 'sulphates', 'alcohol', 'quality'],

dtype='object')

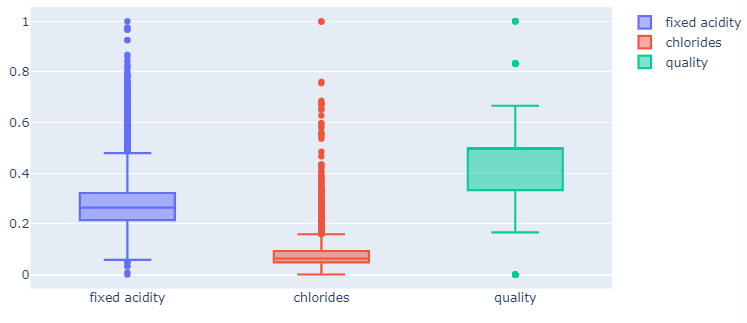

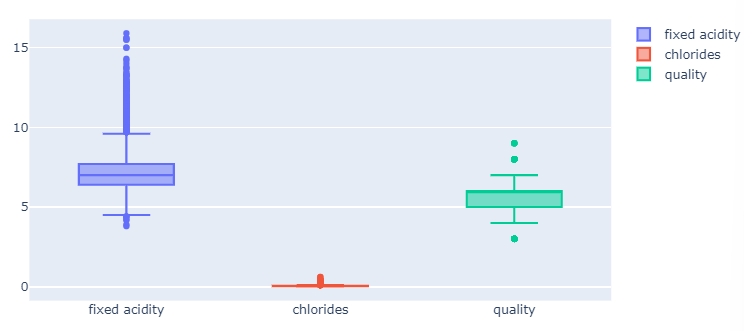

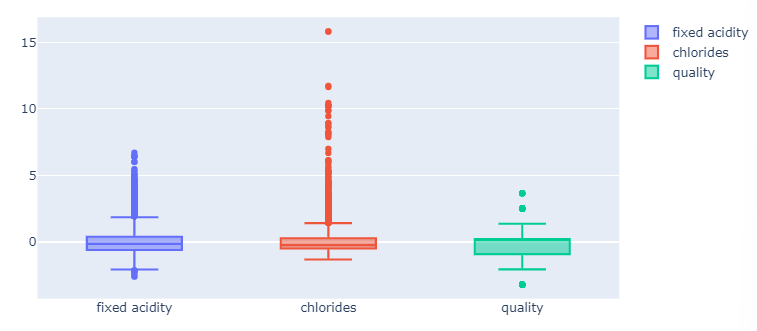

- 1) Boxplot

# graph_objects 모듈

import plotly.graph_objects as go

# Figure 호출

fig = go.Figure()

# go에서 Boxplot 가지고 옴

fig.add_trace(go.Box(y=X['fixed acidity'], name='fixed acidity'))

fig.add_trace(go.Box(y=X['chlorides'], name='chlorides'))

fig.add_trace(go.Box(y=X['quality'], name='quality'))

fig.show()-

-

2) MinMaxScaler & StandardScaler 중 어떤게 좋을지 확인

# 모듈

from sklearn.preprocessing import MinMaxScaler, StandardScaler

# 인스턴시에이션 (instantiation) : 이름을 가진 독립된 객체를 다룰 수 있게 함

# 둘 다 해봐야 어떤 것이 좋은지 알 수 있음

MMS = MinMaxScaler()

SS = StandardScaler()

# fit()

MMS.fit(X)

SS.fit(X)

# transform()

X_mms = MMS.transform(X)

X_ss = SS.transform(X)

# 그래프를 그리고 싶어서 DataFrame을 만듬

X_mms_pd = pd.DataFrame(X_mms, columns=X.columns)

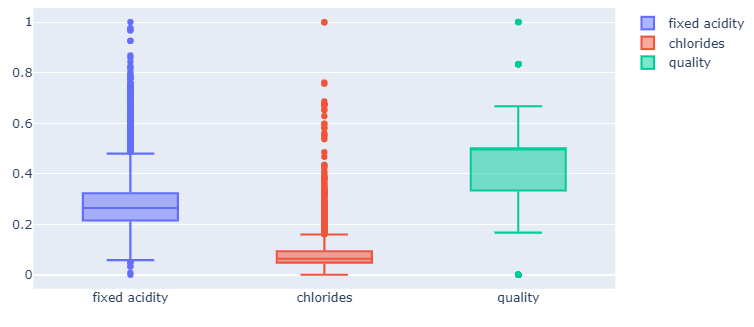

X_ss_pd = pd.DataFrame(X_ss, columns=X.columns)- 3) MinMaxScaler : 최대/최소값을 1,0으로 강제로 맞춤

# graph_objects 모듈

import plotly.graph_objects as go

# Figure 호출

fig = go.Figure()

# go에서 Boxplot 가지고 옴

fig.add_trace(go.Box(y=X_mms_pd['fixed acidity'], name='fixed acidity'))

fig.add_trace(go.Box(y=X_mms_pd['chlorides'], name='chlorides'))

fig.add_trace(go.Box(y=X_mms_pd['quality'], name='quality'))

fig.show()-

-

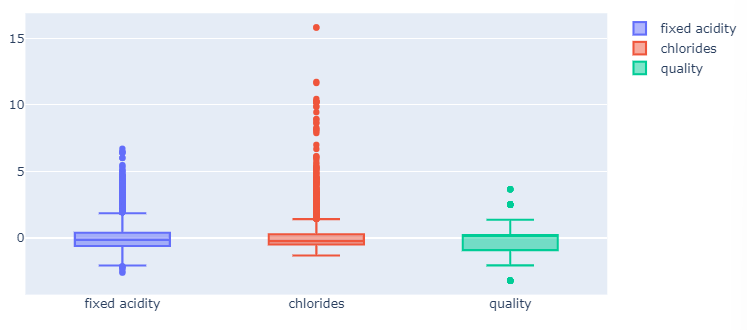

4) StandardScaler : 평균을 0, 표준편차를 1로 맞춤

# graph_objects 모듈

import plotly.graph_objects as go

# Figure 호출

fig = go.Figure()

# go에서 Boxplot 가지고 옴

fig.add_trace(go.Box(y=X_ss_pd['fixed acidity'], name='fixed acidity'))

fig.add_trace(go.Box(y=X_ss_pd['chlorides'], name='chlorides'))

fig.add_trace(go.Box(y=X_ss_pd['quality'], name='quality'))

fig.show()

-

-

5) [함수]로 만들어보기 : MinMaxScaler, StandardScaler

# graph_objects 모듈

import plotly.graph_objects as go

# target_df 만들고

def px_box(target_df):

# Figure 호출

fig = go.Figure()

# y값에 target_df 반영

fig.add_trace(go.Box(y=target_df['fixed acidity'], name='fixed acidity'))

fig.add_trace(go.Box(y=target_df['chlorides'], name='chlorides'))

fig.add_trace(go.Box(y=target_df['quality'], name='quality'))

fig.show()px_box(X_mms_pd)px_box(X_ss_pd)-

-

6) MinMaxScaler 적용/학습

#split

X_train, X_test, y_train, y_test = train_test_split(X_mms_pd, y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

# fit

wine_tree.fit(X_train, y_train)

# predict

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Mms Train Acc :', accuracy_score(y_train, y_pred_tr))

print('Mms Test Acc :', accuracy_score(y_test, y_pred_test))Mms Train Acc : 0.9553588608812776

Mms Test Acc : 0.9569230769230769

- 7) StandardScaler 적용/학습

#split

X_train, X_test, y_train, y_test = train_test_split(X_ss_pd, y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

# fit

wine_tree.fit(X_train, y_train)

# predict

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Ss Train Acc :', accuracy_score(y_train, y_pred_tr))

print('Ss Test Acc :', accuracy_score(y_test, y_pred_test))Ss Train Acc : 0.9553588608812776

Ss Test Acc : 0.9569230769230769

# 8) zip - 레드/화이트 와인 구분 특성

# X_train의 컬럼 이름을 그대로 사용

# 분류기 : fit 했던 wine_tree

# feature_importances_

# 트리 기반 모델 중, 밀접한 관련이 있는 피처를 중요도 순으로 나열함

# 가중치가 적은 변수를 제거, 모델의 성능을 최적화 & 정확도를 높임

# zip-> dict 로 바꿈

dict(zip(X_train.columns, wine_tree.feature_importances_))

# ▼ 결과

# max_depth=2 로 잡았기 때문에, 2개 결과만 값이 0이 아님을 확인할 수 있다

{'fixed acidity': 0.0,

'volatile acidity': 0.0,

'citric acid': 0.0,

'residual sugar': 0.0,

'chlorides': 0.24230360549660776,

'free sulfur dioxide': 0.0,

'total sulfur dioxide': 0.7576963945033922,

'density': 0.0,

'pH': 0.0,

'sulphates': 0.0,

'alcohol': 0.0,

'quality': 0.0}

9_이진분류

# 1) quality 컬럼 이진화

# wine 데이터의 ['taste'] 컬럼 생성

# quality column울 grade로 잡고, 5등급 보다 크면 1, 그게 아니라면 0으로 잡음

wine['taste'] = [1. if grade>5 else 0. for grade in wine['quality']]

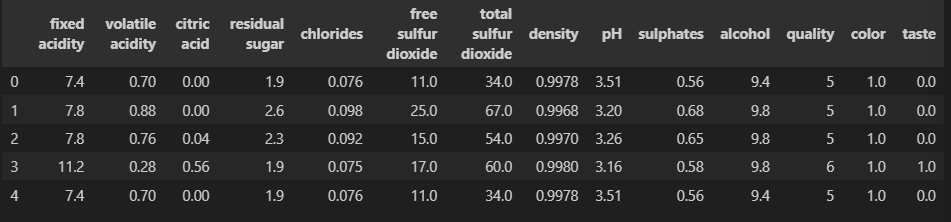

wine.head()--

# 2) 모델링(fit)

# label인 taste를 drop, 나머지를 X의 특성으로 봄

X = wine.drop(['taste'], axis=1)

# 새로만들 y데이터

y = wine['taste']

#split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=13)

# fit

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, y_train)# 3) 평가(accuracy)

# 모듈

from sklearn.metrics import accuracy_score

# predict(훈련된 값)

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

# 결과 확인 (참값, 예측값)

print('Train Acc :', accuracy_score(y_train, y_pred_tr))

print('Test Acc :', accuracy_score(y_test, y_pred_test))Train Acc : 1.0

Test Acc : 1.0

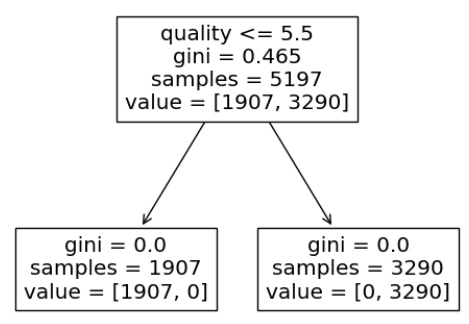

- 결정트리 모델의 정확도가 1.0이 나온다면, 이는 모델이 학습 데이터에 완벽하게 적합되어 과적합(overfitting)된 상태일 수 있는데 결정트리를 시각화하여 무엇이 잘못되었는지 확인해봐야 함

# 4) 결정트리

import matplotlib.pyplot as plt

import sklearn.tree as tree

plt.figure(figsize=(6,5))

tree.plot_tree(wine_tree, feature_names=X.columns.tolist());- taste를 만들었던 quality 컬럼 값이 아직 살아 있음

- quality 컬럼을 가지고 학습하여 1.0이 나온 것임

- 따라서 quality 컬럼을 drop해서 다시 모델을 제작

# 5) drop(['taste','quality'] 후 모델링 & 평가

x = wine.drop(['taste','quality'], axis=1)

y = wine['taste']

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(x_train, y_train)

y_pred_tr = wine_tree.predict(x_train)

y_pred_test = wine_tree.predict(x_test)

accuracy_score(y_train, y_pred_tr)

accuracy_score(y_test, y_pred_test)

print('Train Acc: ', accuracy_score(y_train, y_pred_tr))

print('Test Acc: ', accuracy_score(y_test, y_pred_test))[0.6007692307692307,

0.6884615384615385,

0.7090069284064665,

0.7628945342571208,

0.7867590454195535]

0.709578255462782

[0.5523076923076923,

0.6884615384615385,

0.7143956889915319,

0.7321016166281755,

0.7567359507313318]

fixed acidity volatile acidity citric acid residual sugar chlorides free sulfur dioxide total sulfur dioxide density pH sulphates alcohol quality

0 7.4 0.7 0.0 1.9 0.076 11.0 34.0 0.9978 3.51 0.56 9.4 5

fixed acidity volatile acidity citric acid residual sugar chlorides free sulfur dioxide total sulfur dioxide density pH sulphates alcohol quality

0 7.0 0.27 0.36 20.7 0.045 45.0 170.0 1.001 3.0 0.45 8.8 6

Index(['fixed acidity', 'volatile acidity', 'citric acid', 'residual sugar',

'chlorides', 'free sulfur dioxide', 'total sulfur dioxide', 'density',

'pH', 'sulphates', 'alcohol', 'quality'],

dtype='object')

<class 'pandas.core.frame.DataFrame'>

Index: 6497 entries, 0 to 4897

Data columns (total 13 columns):

Column Non-Null Count Dtype

0 fixed acidity 6497 non-null float64

1 volatile acidity 6497 non-null float64

2 citric acid 6497 non-null float64

3 residual sugar 6497 non-null float64

4 chlorides 6497 non-null float64

5 free sulfur dioxide 6497 non-null float64

6 total sulfur dioxide 6497 non-null float64

7 density 6497 non-null float64

8 pH 6497 non-null float64

9 sulphates 6497 non-null float64

10 alcohol 6497 non-null float64

11 quality 6497 non-null int64

12 color 6497 non-null float64

dtypes: float64(12), int64(1)

memory usage: 710.6 KB

array([5, 6, 7, 4, 8, 3, 9], dtype=int64)

(array([0., 1.]), array([3913, 1284], dtype=int64))

DecisionTreeClassifier

DecisionTreeClassifier(max_depth=2, random_state=13)

Train Acc : 0.9553588608812776

Test Acc : 0.9569230769230769

Index(['fixed acidity', 'volatile acidity', 'citric acid', 'residual sugar',

'chlorides', 'free sulfur dioxide', 'total sulfur dioxide', 'density',

'pH', 'sulphates', 'alcohol', 'quality'],

dtype='object')

Mms Train Acc : 0.9553588608812776

Mms Test Acc : 0.9569230769230769

Ss Train Acc : 0.9553588608812776

Ss Test Acc : 0.9569230769230769

{'fixed acidity': 0.0,

'volatile acidity': 0.0,

'citric acid': 0.0,

'residual sugar': 0.0,

'chlorides': 0.24230360549660776,

'free sulfur dioxide': 0.0,

'total sulfur dioxide': 0.7576963945033922,

'density': 0.0,

'pH': 0.0,

'sulphates': 0.0,

'alcohol': 0.0,

'quality': 0.0}

fixed acidity volatile acidity citric acid residual sugar chlorides free sulfur dioxide total sulfur dioxide density pH sulphates alcohol quality color taste

0 7.4 0.70 0.00 1.9 0.076 11.0 34.0 0.9978 3.51 0.56 9.4 5 1.0 0.0

1 7.8 0.88 0.00 2.6 0.098 25.0 67.0 0.9968 3.20 0.68 9.8 5 1.0 0.0

2 7.8 0.76 0.04 2.3 0.092 15.0 54.0 0.9970 3.26 0.65 9.8 5 1.0 0.0

3 11.2 0.28 0.56 1.9 0.075 17.0 60.0 0.9980 3.16 0.58 9.8 6 1.0 1.0

4 7.4 0.70 0.00 1.9 0.076 11.0 34.0 0.9978 3.51 0.56 9.4 5 1.0 0.0

DecisionTreeClassifier

DecisionTreeClassifier(max_depth=2, random_state=13)

Train Acc : 1.0

Test Acc : 1.0

Train Acc: 0.7294593034442948

Test Acc: 0.7161538461538461

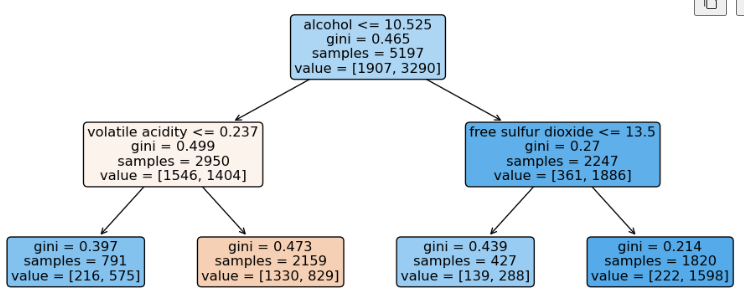

- 6)결정트리 값을 통해 와인의 맛 평가의 기준이 [alchol] 인 것을 확인함

# 6) 결정트리

import matplotlib.pyplot as plt

import sklearn.tree as tree

plt.figure(figsize=(12,5))

tree.plot_tree(wine_tree, rounded=True, filled=True, feature_names=X.columns.tolist());

plt.show()2. Pipeline

- 단순히 Iris, Wine 데이터를 받아서 사용했을 뿐인데, 직접 공부하면서 코드를 하나씩 실행해보면 혼돈이 크다는 것을 알 수 있다.

- Jupyter Notebook 상황에서 데이터의 전처리와 여러 알고리즘의 반복 실행, 하이퍼 파라미터의 튜닝 과정을 번갈아 하다 보면 코드의 실행 순서에 혼돈이 있을 수 있다.

- 이런 경우 클래스(class)로 만들어서 진행해도 되지만, sklearn 유저에게는 꼭 그럴 필요없이 준비된 기능인 Pipeline이 있다.

# 1) 데이터 불러오기 & concat

import pandas as pd

red_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-red.csv'

white_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-white.csv'

red_wine = pd.read_csv(red_url, sep=';')

white_wine = pd.read_csv(white_url, sep=';')

red_wine['color'] = 1.

white_wine['color'] = 0.

wine = pd.concat([red_wine, white_wine])

x = wine.drop(['color'], axis=1)

y = wine['color']- 파이프라인을 한번 짜 놓으면, 호출 시 알아서 진행

# 2) 파이프라인 생성

# 3가지 모듈

from sklearn.pipeline import Pipeline

from sklearn.tree import DecisionTreeClassifier

from sklearn.preprocessing import StandardScaler

# 변수에 리스트 형, 튜플로 지정

estimators = [

('scaler', StandardScaler()),

('clf', DecisionTreeClassifier())

]

# 변수에 파이프라인 설정

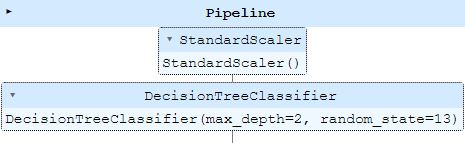

pipe = Pipeline(estimators)- 어떤 스텝으로 움직이는지 확인

- 첫번쨰 단계는 scaler라고 부르고, StandardScaler() 가 지정되어 있음

- 두번쨰 단계는 clf라고 부르고, DecisionTreeClassifier() 가 지정되어 있음

pipepipe.steps[('scaler', StandardScaler()), ('clf', DecisionTreeClassifier())]

- 객체 호출 방법

pipe.steps[0]('scaler', StandardScaler())

pipe['scaler']- DecisionTreeClassifier() 에는 지정해야 하는 [파라미터]가 있음

- set_params (스탭이름 “clf” + 언더바 두 개 “- -” + 속성 이름)

# 3) Params 접근

# DecisionTreeClassifier() 메서드를 'clf'로 위에서 정의했고, 언더바를 붙여서 max_dept 파라미터를 2로 설정 한 것

# 즉, 언더바 2개를 추가해서 접근했다고 보면 됨 (https://guru.tistory.com/50)

# clf에 max_depth=2를 설정 : clf + _ _ + max_depth=2

pipe.set_params(clf__max_depth=2)

pipe.set_params(clf__random_state=13)# 4) split + fit

from sklearn.model_selection import train_test_split

# stratify=y : y데이터의 분로픞 맞춰라

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=13, stratify=y)

# (1) Parameter

# arrays : 분할시킬 데이터를 입력 (Python list, Numpy array, Pandas dataframe 등..)

# test_size : 테스트 데이터셋의 비율(float)이나 갯수(int) (default = 0.25)

# train_size : 학습 데이터셋의 비율(float)이나 갯수(int) (default = test_size의 나머지)

# random_state : 데이터 분할시 셔플이 이루어지는데 이를 위한 시드값 (int나 RandomState로 입력)

# shuffle : 셔플여부설정 (default = True)

# stratify : 지정한 Data의 비율을 유지한다.

# 예를 들어, Label Set인 Y가 25%의 0과 75%의 1로 이루어진 Binary Set일 때, stratify=Y로 설정하면 나누어진 데이터셋들도 0과 1을 각각 25%, 75%로 유지한 채 분할된다.- (예전) Scaler 통과 + 분류기 학습

- (지금) 이미 선언해둔 pipe 이용

# 5) pipe

pipe.fit(X_train, y_train)# 6) 결과 확인

from sklearn.metrics import accuracy_score

y_pred_tr = pipe.predict(X_train)

y_pred_test = pipe.predict(X_test)

print('Train Acc :', accuracy_score(y_train, y_pred_tr))

print('Test Acc :', accuracy_score(y_test, y_pred_test))Train Acc : 1.0

Test Acc : 1.0

3. 교차검증

# 1) 데이터 불러오기 & concat

import pandas as pd

red_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-red.csv'

white_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-white.csv'

red_wine = pd.read_csv(red_url, sep=';')

white_wine = pd.read_csv(white_url, sep=';')

red_wine['color'] = 1.

white_wine['color'] = 0.

wine = pd.concat([red_wine, white_wine])# 2) 맛 분류를 위한 데이터 정리

wine['taste'] = [1. if grade > 5 else 0 for grade in wine['quality']]

X = wine.drop(['taste','quality'], axis= 1)

y = wine['taste']

# 3) 의사 결정 나무 모델 확인

from sklearn.model_selection import train_test_split

from sklearn.tree import DecisionTreeClassifier

from sklearn.metrics import accuracy_score

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(x_train, y_train)

y_pred_tr = wine_tree.predict(x_train)

y_pred_test = wine_tree.predict(x_test)

print('Train Acc: ', accuracy_score(y_train, y_pred_tr))

print('Test Acc: ', accuracy_score(y_test, y_pred_test))Train Acc: 0.7294593034442948

Test Acc: 0.7161538461538461

1_KFold()

Train Acc: 0.7294593034442948

Test Acc: 0.7161538461538461- 위 값이 최선인지, acc를 신뢰할 수 있는지 확인하기 위해 KFold(교차검증)이 필요

# 4) KFold

# 모듈

from sklearn.model_selection import KFold

# n_splits는 몇 개의 폴드(fold)로 나눌 것인지를 의미하는 매개변수

# 5겹 교차 검증이 가장 일반적

kfold = KFold(n_splits=5)

wine_tree_cv = DecisionTreeClassifier(max_depth=2, random_state=13)# 5) 모델링 (학습 & 결과 확인)

# 기록 보관을 위해 '빈리스트'생성

cv_accuracy =[]

for train_idx, test_idx in kfold.split(X):

# 데이터 구성

X_train, X_test = X.iloc[train_idx], X.iloc[test_idx]

y_train, y_test = y.iloc[train_idx], y.iloc[test_idx]

# 학습

wine_tree_cv.fit(X_train, y_train)

# 모델링

pred = wine_tree_cv.predict(X_test)

# 리스트 저장 (결과값)

cv_accuracy.append(accuracy_score(y_test, pred))

cv_accuracy

[0.6007692307692307,

0.6884615384615385,

0.7090069284064665,

0.7628945342571208,

0.7867590454195535]

# 6) KFold 평균값 확인

import numpy as np

np.mean(cv_accuracy)0.709578255462782

2_StratifiedKFold()

# 7) StratifiedKFold

from sklearn.model_selection import StratifiedKFold

skfold = StratifiedKFold(n_splits=5)

wine_tree_cv = DecisionTreeClassifier(max_depth=2, random_state=13)

cv_accuracy = []

for train_idx, test_idx in skfold.split(X, y):

# 데이터 구성

X_train, X_test = X.iloc[train_idx], X.iloc[test_idx]

y_train, y_test = y.iloc[train_idx], y.iloc[test_idx]

# 학습

wine_tree_cv.fit(X_train, y_train)

# 모델링

pred = wine_tree_cv.predict(X_test)

# 리스트 저장 (결과값)

cv_accuracy.append(accuracy_score(y_test, pred))

cv_accuracy[0.5523076923076923,

0.6884615384615385,

0.7143956889915319,

0.7321016166281755,

0.7567359507313318]

# 8) StratifiedKFold 평균값 확인

import numpy as np

np.mean(cv_accuracy)0.6888004974240539

3_cross validation

from sklearn.model_selection import cross_val_score

skfold = StratifiedKFold(n_splits=5)

wine_tree_cv = DecisionTreeClassifier(max_depth=2, random_state=13)

cross_val_score(wine_tree_cv, X, y, scoring=None, cv=skfold)array([0.55230769, 0.68846154, 0.71439569, 0.73210162, 0.75673595])

4_함수로 풀어보기

def skfold_dt(depth):

from sklearn.model_selection import cross_val_score

skfold = StratifiedKFold(n_splits=5)

wine_tree_cv = DecisionTreeClassifier(max_depth=depth, random_state=13)

print(cross_val_score(wine_tree_cv, X, y, scoring=None, cv=skfold))

skfold_dt(3)[0.56846154 0.68846154 0.71439569 0.73210162 0.75673595]

5_train score와 함께 보고 싶다면

from sklearn.model_selection import cross_validate

cross_validate(wine_tree_cv, X, y, scoring=None, cv=skfold, return_train_score=True){'fit_time': array([0.01700068, 0.01591516, 0.01597691, 0.01599717, 0.01500058]),

'score_time': array([0.00108767, 0.01203322, 0.00293016, 0.00198984, 0.00199056]),

'test_score': array([0.50076923, 0.62615385, 0.69745958, 0.7582756 , 0.74903772]),

'train_score': array([0.78795459, 0.78045026, 0.77568295, 0.76356291, 0.76279338])}

4. 하이퍼파라미터 튜닝

- 튜닝 대상

- 결정나무에서 아직 우리가 튜닝해 볼만한 것은 max_depth이다.

- 간단하게 반복문으로 max_depth를 바꿔가며 테스트해볼 수 있을 것이다.

- 그런데 앞으로를 생각해서 보다 간편하고 유용한 방법을 생각해보자.

# 1) 데이터 불러오기 & concat

import pandas as pd

red_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-red.csv'

white_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-white.csv'

red_wine = pd.read_csv(red_url, sep=';')

white_wine = pd.read_csv(white_url, sep=';')

red_wine['color'] = 1.

white_wine['color'] = 0.

wine = pd.concat([red_wine, white_wine])

# 2) 맛 분류를 위한 데이터 정리

wine['taste'] = [1. if grade > 5 else 0 for grade in wine['quality']]

X = wine.drop(['taste','quality'], axis= 1)

y = wine['taste']

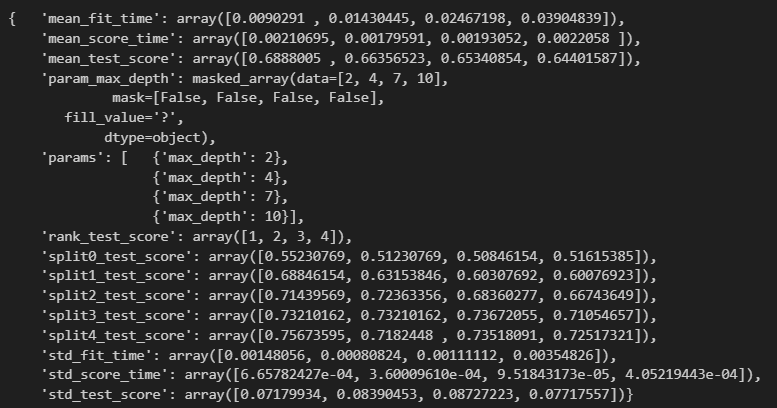

1) GridSearchCV

- 매번 하이퍼파라미터를 수정할 순 없음

- 예를 들어 pipeline을 5개 만든 경우, 하이퍼파라미터를 수정해야 하는 경우의 수는 엄청남

- 그래서, 수정할 파라미터를 지정 -> GridSearchCV(분류기)에 알아서 cv=5겹으로 fit해라는 명령인 "GridSearchCV"를 이용

- (참고)https://blog.naver.com/dalgoon02121/222103377185

# 모듈

from sklearn.model_selection import GridSearchCV

from sklearn.tree import DecisionTreeClassifier

# 파라미터 지정

params = {'max_depth':[2,4,7,10]}

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

# 변수에 = GridSearchCV(분류기로 wine_tree를 지정, param_grid는 params로, 5겹으로)

gridsearch = GridSearchCV(estimator=wine_tree, param_grid=params, cv=5)

# 학습 (split을 쓰지 않아도 됨)

gridsearch.fit(X,y)# 결과 확인

# pprint는 데이터를 보기 좋게 출력(pretty print)할 때 사용하는 모듈

import pprint

pp = pprint.PrettyPrinter(indent=4)

pp.pprint(gridsearch.cv_results_)2) 데이터 관찰

# 최고의 성능을 가진 모델

gridsearch.best_estimator_

# 결과 : max_depth=2 일때# 최고 점수

gridsearch.best_score_

# 결과 : 69%# 최고 파라미터

gridsearch.best_params_

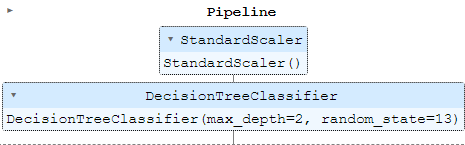

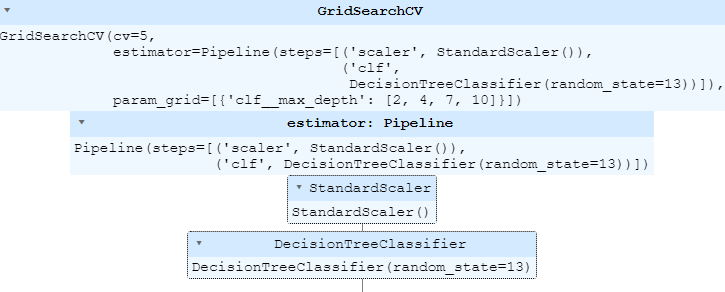

# 결과 : {'max_depth': 2}3) pipeline + GridSearchCV

# pipeline 생성 모델

from sklearn.pipeline import Pipeline

from sklearn.tree import DecisionTreeClassifier

from sklearn.preprocessing import StandardScaler

estimators = [

('scaler', StandardScaler()),

('clf', DecisionTreeClassifier(random_state=13))

]

pipe = Pipeline(estimators)# param 지정

param_grid = [{'clf__max_depth':[2,4,7,10]}]

# GridSearchCV

GridSearch = GridSearchCV(estimator=pipe, param_grid=param_grid, cv=5)

# fit

GridSearch.fit(X,y)4) DataFrame으로 예쁘게 정리

import pandas as pd

# GridSearch 변수에 cv_results_를 호출

# cv_results_ : 파라미터 조합별 결과 조회

score_df = pd.DataFrame(GridSearch.cv_results_)

score_df# 보고 싶은 컬럼들만 확인

score_df[['params', 'rank_test_score', 'mean_test_score', 'std_test_score']]`