0. Abstract

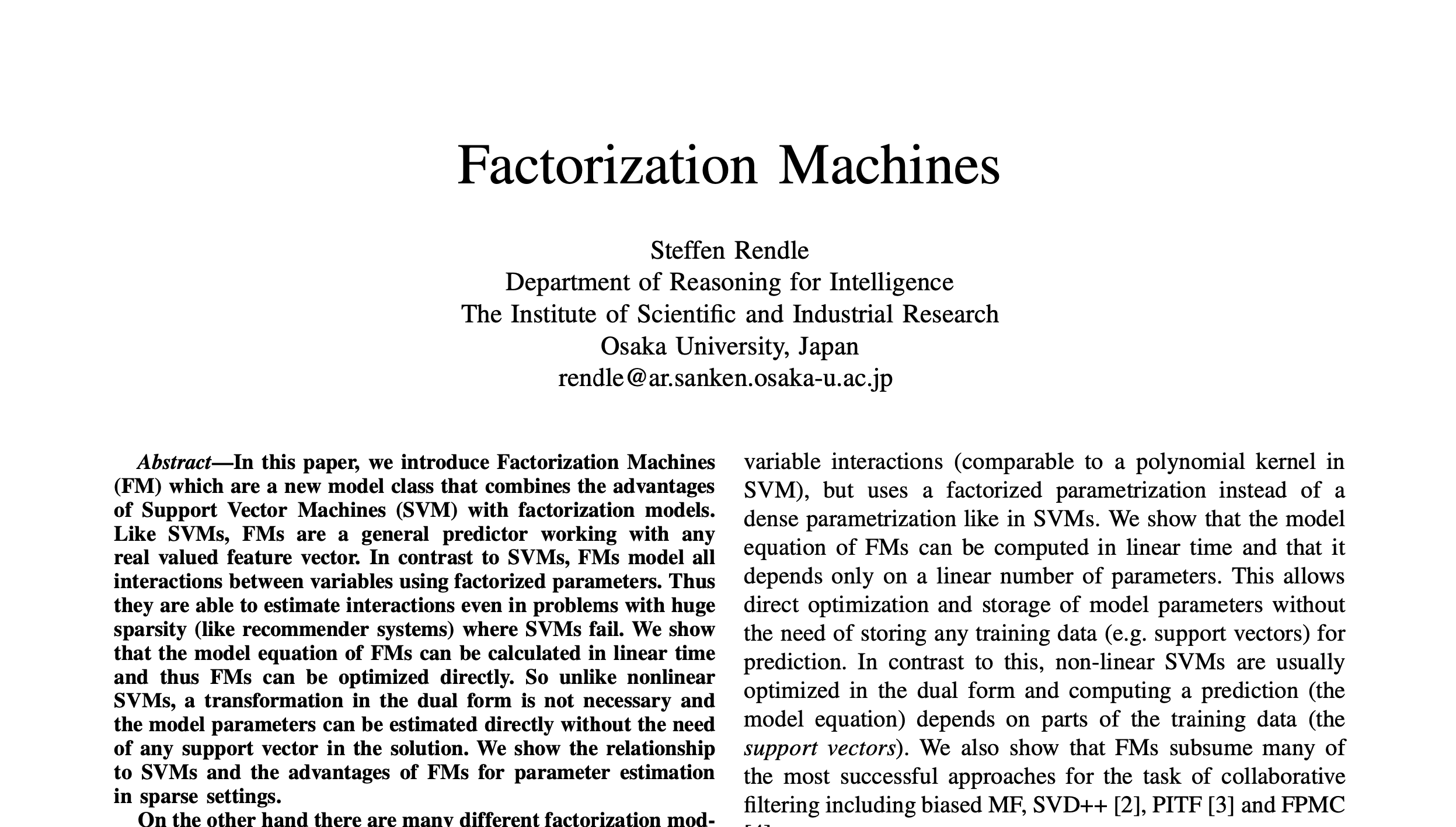

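This paper introduces Factorization Machines (FMs), a model class that combines the advantages of Support Vector Machines (SVMs) with those of factorization models. Like SVMs, FMs are general predictors that work with any real-valued feature vector as input. In contrast to SVMs, FMs model the interactions between variables using factorized parameters. As a result, they can estimate interactions even in problems with huge sparsity, where SVMs fail.

general predictor

A predictor that is not tied to a particular form of input data and can handle a variety of tasks such as classification and regression.

The paper shows that the FM model equation can be computed in linear time, so FMs can be optimized directly. Unlike nonlinear SVMs, no transformation into the dual form is needed, and the model parameters can be estimated directly without relying on support vectors.

Many other factorization models exist, such as matrix factorization, parallel factor analysis, and specialized models like SVD++, PITF, and FPMC. These models are not applicable to general prediction tasks and work only with specific input data. Their model equations and optimization algorithms are also derived individually for each task. The paper shows that FMs can mimic these models just by specifying the input data (the feature vectors). FMs can therefore be used even without expert knowledge of factorization models.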

1. Introduction

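SVMs are among the most popular predictors in machine learning and data mining. In settings such as collaborative filtering, however, SVMs play no important role. The paper shows that standard SVM predictors fail at these tasks because they cannot learn reliable parameters (hyperplanes) in complex (nonlinear) kernel spaces when the data is very sparse. Tensor factorization models and specialized factorization models, on the other hand, are not applicable to general prediction problems.

The paper introduces the FM, which is a general predictor like the SVM but can also estimate reliable parameters under very high sparsity.

FMs model all nested variable interactions, but use a factorized parametrization instead of a dense parametrization as in SVMs. The paper shows that the FM model equation can be computed in linear time and that it depends only on a linear number of parameters. This allows direct optimization, and only the model parameters need to be stored: no training data has to be kept for prediction. In contrast, nonlinear SVMs are usually optimized in the dual form, and computing a prediction depends on parts of the training data (the support vectors).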

2. Prediction Under Sparsity

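▶︎ The most common prediction task is to estimate a function y : R^n → T that maps a real-valued feature vector x ∈ R^n to a target domain T (e.g. T = R for regression or T = {+, −} for classification). In the supervised setting, a training dataset D = {(x(1), y(1)), (x(2), y(2)), . . .} of examples for the target function y is assumed to be given. In ranking tasks, the function y scores feature vectors x so that they can be sorted by score. Such a scoring function can be learned from pairwise training data, where a feature tuple (x(A), x(B)) means that x(A) should be ranked higher than x(B). Because the pairwise ranking relation is antisymmetric, it is sufficient to use only positive training instances.

The paper deals with problems in which the feature vector x is highly sparse, i.e. almost all of the elements x_i of x are zero.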

- m(x) : the number of non-zero elements in the feature vector x

- m_D : the average of m(x) over all feature vectors x in D
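The two sparsity measures can be sketched in a few lines of Python. The helper names `m` and `mean_nonzeros` and the toy dataset `D` are illustrative assumptions, not part of the paper.

```python
def m(x):
    """Number of non-zero elements in the feature vector x."""
    return sum(1 for value in x if value != 0)


def mean_nonzeros(dataset):
    """Average of m(x) over all feature vectors in the dataset (m_D)."""
    return sum(m(x) for x in dataset) / len(dataset)


# Hypothetical toy data: three sparse feature vectors of length 8.
D = [
    [1, 0, 0, 0, 1, 0, 0, 0],
    [0, 1, 0, 0, 0, 0, 0.5, 0.5],
    [0, 0, 1, 0, 0, 0, 0, 0],
]

print([m(x) for x in D])  # [2, 3, 1]
print(mean_nonzeros(D))   # 2.0
```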

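Huge sparsity appears in many real-world data sets, such as the feature vectors of event transactions (e.g. purchases in recommender systems) or text analysis. One reason for huge sparsity is that the underlying problem deals with large categorical variable domains.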

Example

- user u ∈ U, where U = {Alice (A), Bob (B), Charlie (C), . . .}

- movie (item) i ∈ I, where I = {Titanic (TI), Notting Hill (NH), Star Wars (SW), Star Trek (ST), . . .}

- certain time t ∈ R

- rating r ∈ {1,2,3,4,5}

👉🏻 Observed data S

- S = {(A, TI, 2010-1, 5), (A, NH, 2010-2, 3), (A, SW, 2010-4, 1), (B, SW, 2009-5, 4), (B, ST, 2009-8, 5), (C, TI, 2009-9, 1), (C, SW, 2009-12, 5)}

▶︎ (A, TI, 2010-1, 5) : user A (Alice) gave the movie TI (Titanic) a rating of 5 in January 2010

🎬 Transaction data of a movie review system

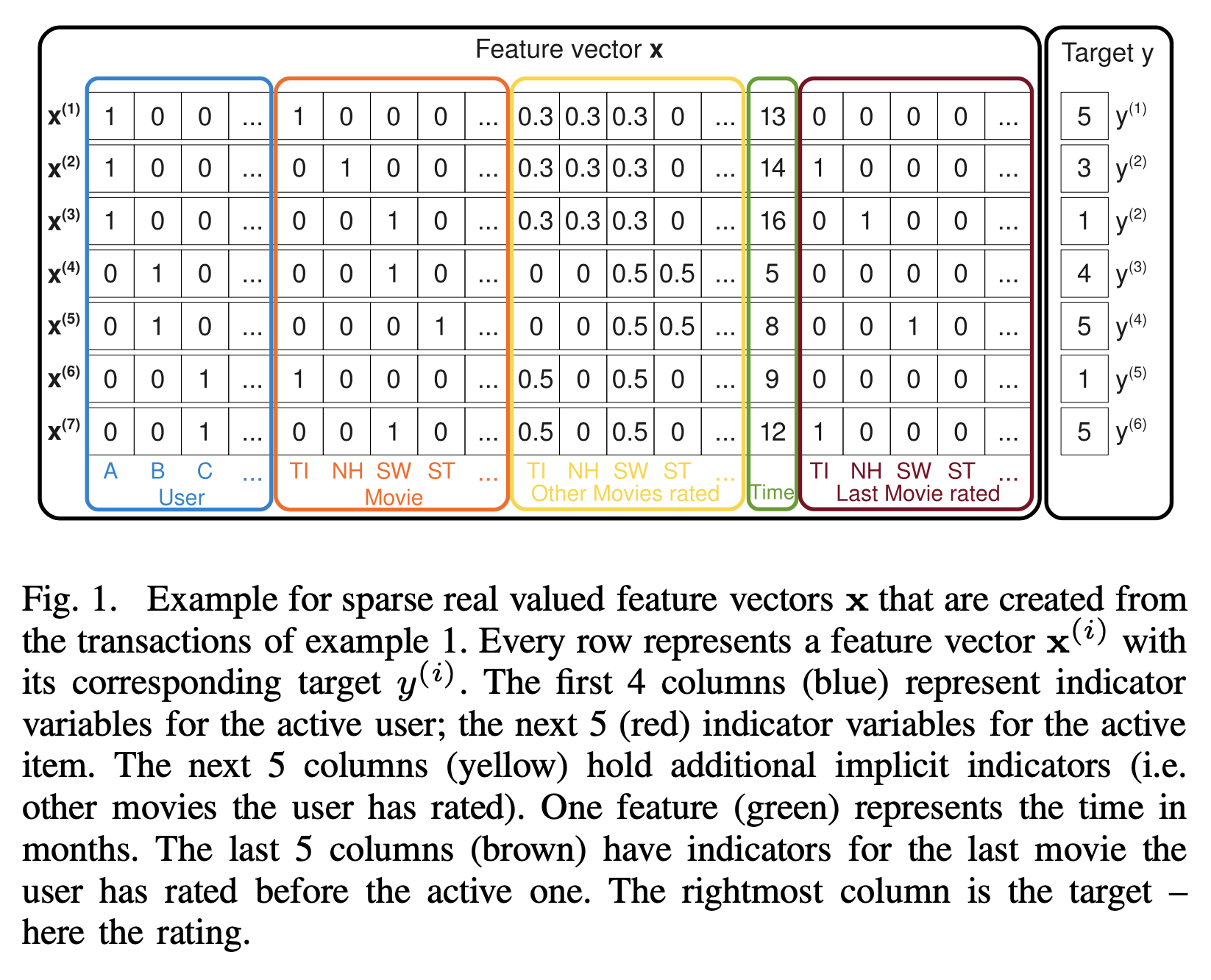

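Figure 1 shows an example of how feature vectors can be built from S.

- The first |U| columns are binary indicator variables for the active user (blue box).

- The next |I| columns are binary indicator variables for the active item (orange box).

- Indicator variables for all the movies the user has ever rated, normalized so that they sum to 1 for each user (yellow box).

- The time in months, counted from January 2009 as 1 (green box).

- Indicator variables for the last movie the user rated before the active one (brown box).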
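The construction described above can be sketched as follows. This is a minimal illustration restricted to the three users and four movies named in the example; the function names `month_index` and `build_features` are hypothetical, and the code assumes that S lists each user's ratings in chronological order.

```python
users = ["A", "B", "C"]
items = ["TI", "NH", "SW", "ST"]

# Observed data S: (user, movie, (year, month), rating)
S = [
    ("A", "TI", (2010, 1), 5), ("A", "NH", (2010, 2), 3), ("A", "SW", (2010, 4), 1),
    ("B", "SW", (2009, 5), 4), ("B", "ST", (2009, 8), 5),
    ("C", "TI", (2009, 9), 1), ("C", "SW", (2009, 12), 5),
]


def month_index(year, month):
    """Months counted from January 2009, which maps to 1."""
    return (year - 2009) * 12 + month


def build_features(S):
    # Collect all movies each user has ever rated (yellow block).
    rated = {}
    for u, i, _, _ in S:
        rated.setdefault(u, []).append(i)

    X, y = [], []
    last = {}  # last movie rated by each user so far (brown block)
    for u, i, (year, month), r in S:
        user_block = [1.0 if u == name else 0.0 for name in users]
        item_block = [1.0 if i == name else 0.0 for name in items]
        other_block = [1.0 / len(rated[u]) if name in rated[u] else 0.0
                       for name in items]
        time_block = [float(month_index(year, month))]
        last_block = [1.0 if last.get(u) == name else 0.0 for name in items]
        X.append(user_block + item_block + other_block + time_block + last_block)
        y.append(r)
        last[u] = i
    return X, y


X, y = build_features(S)
print(len(X), len(X[0]))  # 7 16
print(X[1])               # Alice rating Notting Hill in month 14, last movie Titanic
```

Even in this tiny example most entries of each row are zero, and the user and item blocks grow with |U| and |I| in a real system.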

3. Factorization Machines(FM)

A. Factorization Machine Model

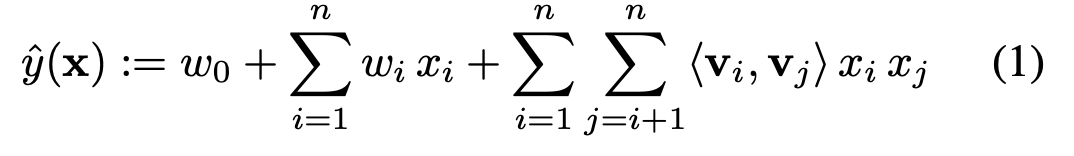

1) Model equation (for degree d = 2)

- w_0 : the global bias

- w_i : models the strength of the i-th variable

- ŵ_i,j := ⟨v_i,v_j⟩ : models the interaction between the i-th and the j-th variable

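👉🏻 Instead of using a separate model parameter w_i,j for each interaction, the FM models the interaction by factorizing it. This is the key point that allows high-quality parameter estimates of higher-order interactions even when the data is sparse.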
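As a rough sketch, the degree-2 model equation ŷ(x) = w_0 + Σ_i w_i x_i + Σ_{i<j} ⟨v_i,v_j⟩ x_i x_j can be evaluated directly with a double loop over all pairs. The function name `fm_predict_naive` and the toy parameter values are assumptions chosen for illustration.

```python
def dot(a, b):
    """Dot product of two factor vectors of the same length k."""
    return sum(p * q for p, q in zip(a, b))


def fm_predict_naive(x, w0, w, V):
    """Evaluate the 2-way FM by looping over every pair (i, j) with i < j."""
    n = len(x)
    y_hat = w0 + sum(w[i] * x[i] for i in range(n))
    for i in range(n):
        for j in range(i + 1, n):
            # The interaction weight is the dot product of the factor vectors.
            y_hat += dot(V[i], V[j]) * x[i] * x[j]
    return y_hat


# Hypothetical toy parameters: n = 3 features, k = 2 factors.
x = [1.0, 0.0, 1.0]
w0 = 0.5
w = [0.1, 0.2, 0.3]
V = [[1.0, 2.0], [0.0, 1.0], [3.0, 1.0]]

print(fm_predict_naive(x, w0, w, V))  # approximately 5.9
```

Only the pair (0, 2) contributes here, because x_1 = 0 removes every interaction that involves feature 1.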

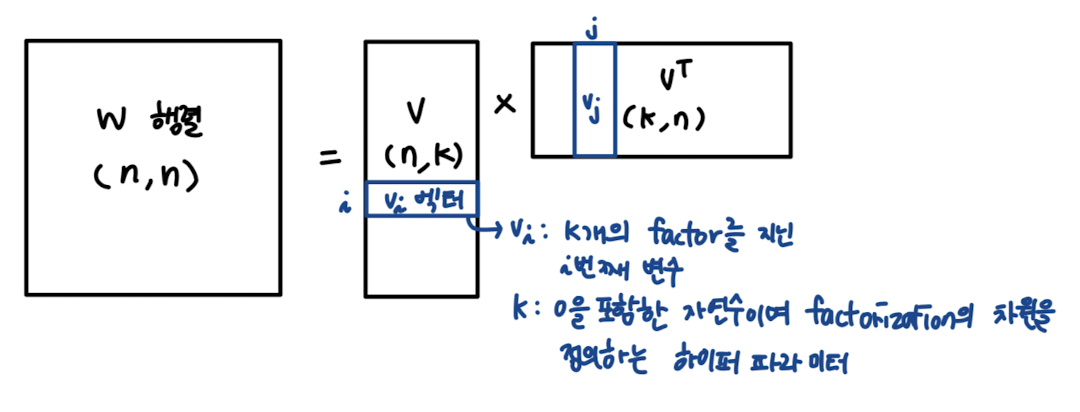

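2) Expressiveness

For any positive definite matrix W there exists a matrix V such that W = V∙V^t, provided that k is sufficiently large. Hence an FM can express any interaction matrix W if k is chosen large enough. In sparse settings, however, a small k should typically be chosen because there is not enough data to estimate complex interactions. Restricting k leads to better generalization and thus to improved interaction matrices under sparsity.

3) Parameter Estimation Under Sparsity

In sparse settings there is usually not enough data to estimate the interactions between variables directly and independently. FMs can estimate interactions well even in these settings because factorizing breaks the independence of the interaction parameters: the data for one interaction also helps to estimate the parameters of related interactions.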

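Example

Let us make this concrete with the data from Figure 1.

Q : We want to estimate the interaction between Alice (A) and Star Trek (ST) in order to predict the target y (the rating).

👉🏻 The training data contains no case in which both x_A and x_ST are non-zero, so a direct estimate would find no interaction (w_A,ST = 0).

👉🏻 With the factorized interaction parameters ⟨v_A,v_ST⟩, however, the interaction can be estimated even in this case.

- Bob (B) and Charlie (C) have similar factor vectors v_B and v_C. (Both have similar interactions with Star Wars for predicting ratings, so ⟨v_B,v_SW⟩ and ⟨v_C,v_SW⟩ have to be similar.)

- Alice (A) has a different factor vector v_A from Charlie (C). (She has different interactions with the factors of Titanic and Star Wars for predicting ratings.)

- The factor vector of Star Trek is likely to be similar to that of Star Wars. (Bob (B) has similar interactions with both movies.)

💡 Answer

The dot product (the interaction) of the factor vectors of Alice (A) and Star Trek will be similar to that of Alice (A) and Star Wars.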

4) Computation

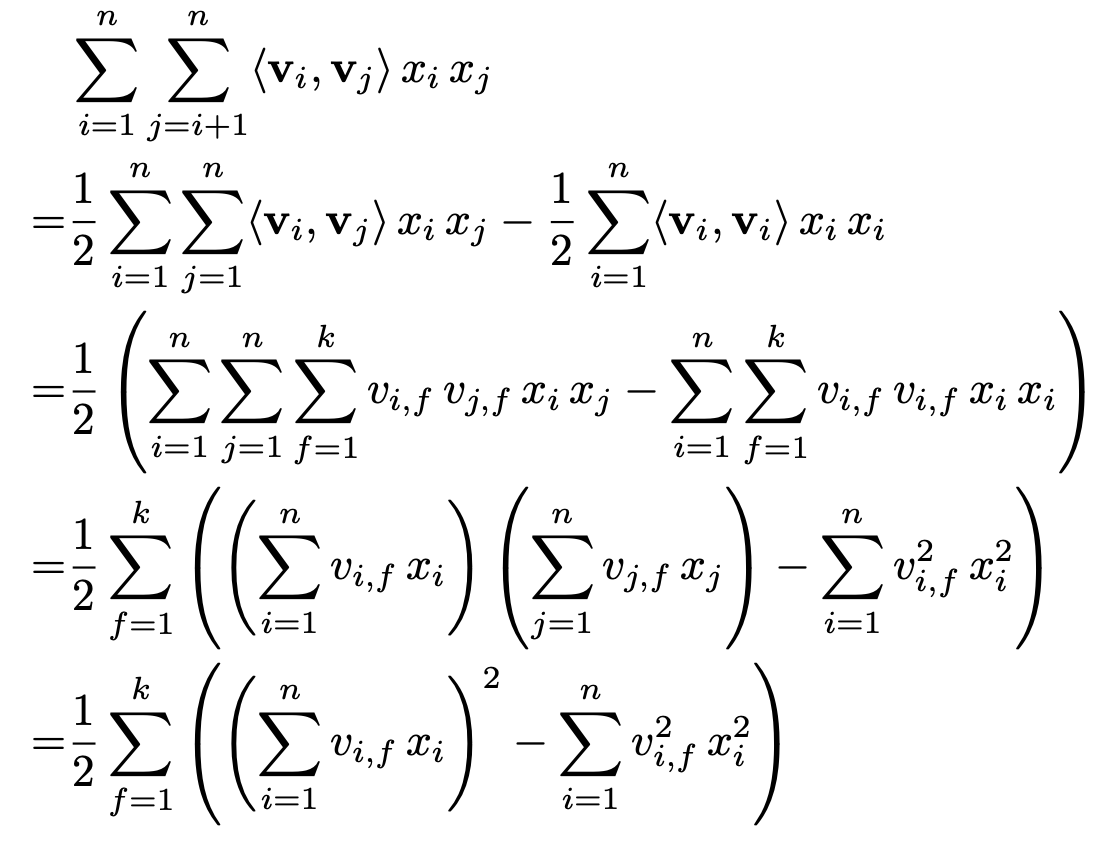

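Because all pairwise interactions have to be computed, a straightforward computation of the model equation (1) has complexity O(kn^2). By reformulating the equation, however, this drops to linear runtime.

Lemma 3.1) The model equation of an FM can be computed in linear time O(kn).

Proof : Because the pairwise interactions are factorized, no model parameter depends directly on two variables. The pairwise interaction term can therefore be rewritten as a sum over the k factor dimensions, where each summand is half the difference between the square of Σ_i v_i,f x_i and the sum of the squares v_i,f^2 x_i^2.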
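Written out, the reformulation of the pairwise term goes through the following steps. This is a reconstruction in the notation used above (v_{i,f} is the f-th entry of the factor vector v_i), since the equation itself is not reproduced in the text.

```latex
\begin{aligned}
\sum_{i=1}^{n}\sum_{j=i+1}^{n} \langle v_i, v_j\rangle\, x_i x_j
&= \frac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n} \langle v_i, v_j\rangle\, x_i x_j
   - \frac{1}{2}\sum_{i=1}^{n} \langle v_i, v_i\rangle\, x_i x_i \\
&= \frac{1}{2}\left(\sum_{i=1}^{n}\sum_{j=1}^{n}\sum_{f=1}^{k} v_{i,f}\, v_{j,f}\, x_i x_j
   - \sum_{i=1}^{n}\sum_{f=1}^{k} v_{i,f}\, v_{i,f}\, x_i x_i\right) \\
&= \frac{1}{2}\sum_{f=1}^{k}\left(\left(\sum_{i=1}^{n} v_{i,f}\, x_i\right)\left(\sum_{j=1}^{n} v_{j,f}\, x_j\right)
   - \sum_{i=1}^{n} v_{i,f}^{2}\, x_i^{2}\right) \\
&= \frac{1}{2}\sum_{f=1}^{k}\left(\left(\sum_{i=1}^{n} v_{i,f}\, x_i\right)^{2}
   - \sum_{i=1}^{n} v_{i,f}^{2}\, x_i^{2}\right)
\end{aligned}
```

The first step uses the symmetry of the dot product: summing over all ordered pairs counts each unordered pair twice and adds the diagonal terms, which are then subtracted.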

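The reformulated equation is linear in both k and n, so it can be computed in O(kn).

Under sparsity most elements of x are zero, so the sums only need to run over the non-zero elements. The computation then takes O(k m_D), where m_D is the average number of non-zero elements per feature vector.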
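A linear-time evaluation that touches only non-zero entries might look like the sketch below. Representing x as a dict from index to value is an assumption made for illustration, as are the function name `fm_predict_sparse` and the toy parameters.

```python
def fm_predict_sparse(x, w0, w, V):
    """Evaluate the 2-way FM in O(k * m(x)).

    x is a dict {index: value} holding only the non-zero entries.
    """
    k = len(V[0])
    y_hat = w0 + sum(w[i] * xi for i, xi in x.items())
    for f in range(k):
        s = 0.0     # sum_i v_{i,f} x_i
        s_sq = 0.0  # sum_i v_{i,f}^2 x_i^2
        for i, xi in x.items():
            t = V[i][f] * xi
            s += t
            s_sq += t * t
        y_hat += 0.5 * (s * s - s_sq)
    return y_hat


# Hypothetical toy parameters: n = 3 features, k = 2 factors.
w0 = 0.5
w = [0.1, 0.2, 0.3]
V = [[1.0, 2.0], [0.0, 1.0], [3.0, 1.0]]
x = {0: 1.0, 2: 1.0}  # sparse form of the dense vector [1, 0, 1]

print(fm_predict_sparse(x, w0, w, V))  # approximately 5.9
```

For this input the pairwise part is 0.5 * (16 - 10) + 0.5 * (9 - 5) = 5, which equals ⟨v_0,v_2⟩ = 1*3 + 2*1 computed pair by pair.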

B. Factorization Machines as Predictors

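FMs can be applied to a variety of prediction tasks, including regression, binary classification, and ranking.

In all of these cases a regularization term such as L2 is usually added to the optimization objective to prevent overfitting.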
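The three tasks differ mainly in the loss applied to the FM output ŷ(x). The sketch below assumes a squared loss for regression, a logistic loss on labels in {-1, +1} for binary classification, and a logistic loss on the score difference for pairwise ranking. These are common choices rather than losses named in this summary, and all function names are illustrative.

```python
import math


def squared_loss(y_hat, y):
    """Regression: least-squares error on the raw FM output."""
    return (y_hat - y) ** 2


def logit_loss(y_hat, y):
    """Binary classification with y in {-1, +1}; the sign of y_hat is the predicted class."""
    return math.log(1.0 + math.exp(-y * y_hat))


def pairwise_ranking_loss(y_hat_a, y_hat_b):
    """Ranking: the pair (a, b) means a should score higher than b."""
    return logit_loss(y_hat_a - y_hat_b, 1)


def l2_penalty(w0, w, V, lam):
    """L2 regularization term added to the objective (lam is an assumed hyperparameter)."""
    squares = w0 ** 2 + sum(wi ** 2 for wi in w)
    squares += sum(v ** 2 for row in V for v in row)
    return lam * squares


print(squared_loss(3.0, 5.0))  # 4.0
print(pairwise_ranking_loss(2.0, 1.0) < pairwise_ranking_loss(1.0, 2.0))  # True
```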

C. Learning Factorization Machines

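The model parameters of an FM (w_0, w, V) can be learned efficiently with gradient descent methods such as stochastic gradient descent (SGD), because the gradient of the model equation with respect to each parameter has a simple closed form.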
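For reference, the gradient of the 2-way model equation with respect to a single parameter θ can be written as below. This is a reconstruction in the notation of this write-up, since the equation itself is not reproduced in the text.

```latex
\frac{\partial}{\partial \theta}\,\hat{y}(x) =
\begin{cases}
1, & \text{if } \theta = w_0 \\
x_i, & \text{if } \theta = w_i \\
x_i \sum_{j=1}^{n} v_{j,f}\, x_j \;-\; v_{i,f}\, x_i^{2}, & \text{if } \theta = v_{i,f}
\end{cases}
```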

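The sum Σ_j v_j,f x_j that appears in the gradient with respect to v_i,f is independent of i, so it can be precomputed (for example while computing ŷ(x)). Each gradient can then be computed in constant time.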
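A single SGD update that reuses the precomputed sums could be sketched as follows. The squared loss, the learning rate `lr`, the L2 strength `lam` (assuming a penalty of (lam/2)·θ² per parameter), and the function names are all assumptions for illustration, not the paper's exact training procedure.

```python
def predict(x, w0, w, V):
    """Return (y_hat, s) with s[f] = sum_j v_{j,f} x_j over the non-zero x_j.

    x is a dict {index: value} holding only the non-zero entries.
    """
    k = len(V[0])
    s = [sum(V[j][f] * xj for j, xj in x.items()) for f in range(k)]
    sq = [sum((V[j][f] * xj) ** 2 for j, xj in x.items()) for f in range(k)]
    y_hat = w0 + sum(w[i] * xi for i, xi in x.items())
    y_hat += 0.5 * sum(s[f] ** 2 - sq[f] for f in range(k))
    return y_hat, s


def sgd_step(x, y, w0, w, V, lr=0.05, lam=0.0):
    """One SGD step on the squared loss; updates w and V in place, returns the new w0."""
    y_hat, s = predict(x, w0, w, V)
    err = 2.0 * (y_hat - y)  # derivative of (y_hat - y)^2 with respect to y_hat
    new_w0 = w0 - lr * (err + lam * w0)
    for i, xi in x.items():  # only non-zero features have non-zero gradients
        w[i] -= lr * (err * xi + lam * w[i])
        for f in range(len(s)):
            grad = xi * s[f] - V[i][f] * xi * xi  # uses the precomputed sum s[f]
            V[i][f] -= lr * (err * grad + lam * V[i][f])
    return new_w0


# Hypothetical single training example with target 3.0.
x, y = {0: 1.0, 2: 1.0}, 3.0
w0, w = 0.0, [0.0, 0.0, 0.0]
V = [[0.1, 0.1], [0.1, 0.1], [0.1, 0.1]]

before = (predict(x, w0, w, V)[0] - y) ** 2
for _ in range(50):
    w0 = sgd_step(x, y, w0, w, V)
after = (predict(x, w0, w, V)[0] - y) ** 2
print(before, after)  # the squared error shrinks over the 50 steps
```

Parameters of features that are zero in x (here feature 1) are never touched, which is what keeps each update proportional to the number of non-zero entries.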

D. d-way Factorization Machine

The 2-way FM can easily be generalized to a d-way FM.

The interaction parameters for the l-th order interaction are factorized with the PARAFAC (parallel factor analysis) model.
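The d-way model equation can be written as follows. This is a reconstruction in the notation of this write-up, with a separate factor matrix V^{(l)} ∈ R^{n × k_l} for each interaction order l.

```latex
\hat{y}(x) := w_0 + \sum_{i=1}^{n} w_i x_i
+ \sum_{l=2}^{d} \sum_{i_1=1}^{n} \cdots \sum_{i_l = i_{l-1}+1}^{n}
\left( \prod_{j=1}^{l} x_{i_j} \right)
\left( \sum_{f=1}^{k_l} \prod_{j=1}^{l} v^{(l)}_{i_j,f} \right)
```

For d = 2 the inner sum over f reduces to the dot product ⟨v_{i_1}, v_{i_2}⟩, which recovers the 2-way model.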

A straightforward computation of equation (5) has complexity O(k_d n^d). With the same arguments as in Lemma 3.1, however, it can also be computed in linear time.

E. Summary

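FMs model all possible interactions between the values in the feature vector x using factorized interactions instead of fully parametrized ones.

This has two main advantages:

- Interactions between values can be estimated even under high sparsity. In particular, FMs can generalize to unobserved interactions.

- The number of parameters, as well as the time for prediction and learning, is linear. This makes direct optimization with SGD feasible and allows optimizing against a variety of loss functions.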