Variational Auto-Encoder (VAE)

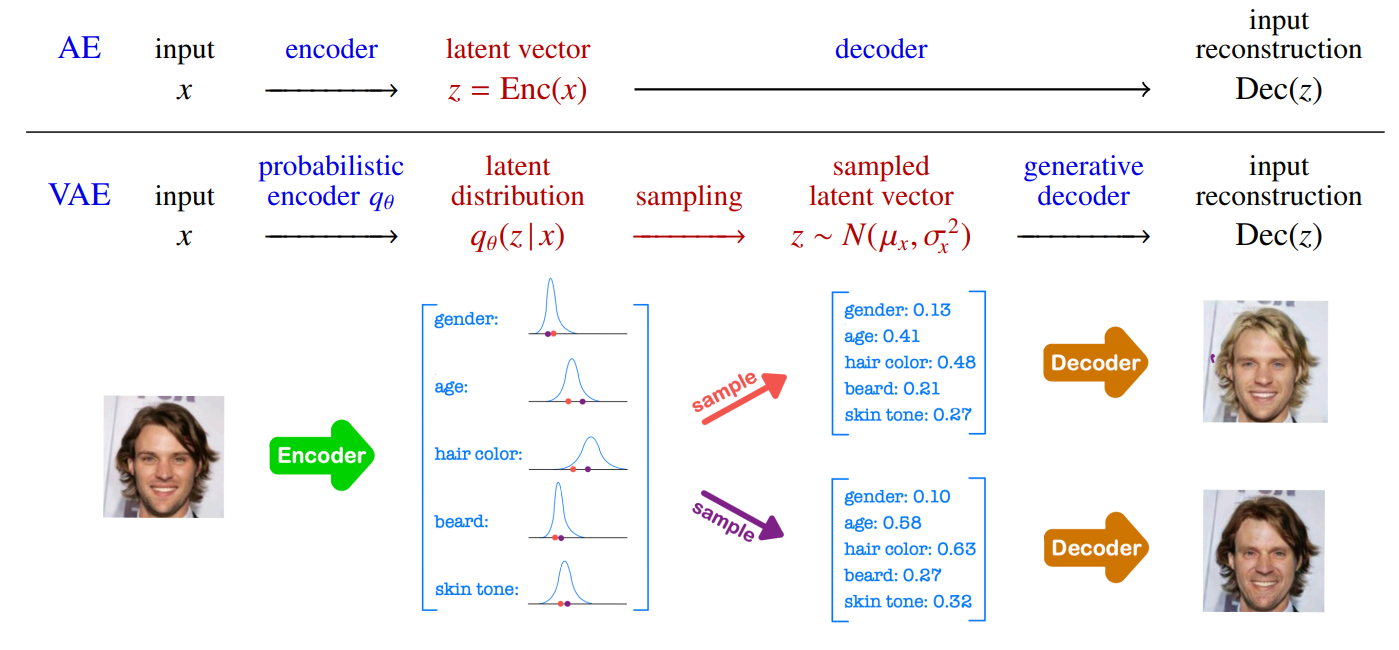

• AE encoder directly produces a latent vector (single value for each attribute).

Then, AE decoder takes these values to reconstruct the original input.

Goal is getting a compressed latent vector of the input.
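
The encode/decode path above can be sketched in a few lines. This is a minimal illustration only, assuming untrained random linear layers and hypothetical names (`make_linear`, `apply_linear`, `encode`, `decode`, `input_dim`, `latent_dim`); a real AE would use trained nonlinear networks.

```python
import random

def make_linear(n_in, n_out, rng):
    """Return an (n_out x n_in) weight matrix with small random values."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]

def apply_linear(weights, vec):
    """Multiply the weight matrix by the vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]

rng = random.Random(0)
input_dim, latent_dim = 6, 2  # hypothetical sizes, chosen for illustration

enc_w = make_linear(input_dim, latent_dim, rng)  # encoder weights (untrained)
dec_w = make_linear(latent_dim, input_dim, rng)  # decoder weights (untrained)

def encode(x):
    # Deterministic: one value per latent attribute.
    return apply_linear(enc_w, x)

def decode(z):
    # Maps the latent vector back to input space.
    return apply_linear(dec_w, z)

x = [0.2, 0.9, 0.1, 0.4, 0.7, 0.3]
z = encode(x)        # compressed latent vector (length 2)
x_hat = decode(z)    # reconstruction (length 6)
```

Because the encoder is deterministic, the same input always maps to the same latent point.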

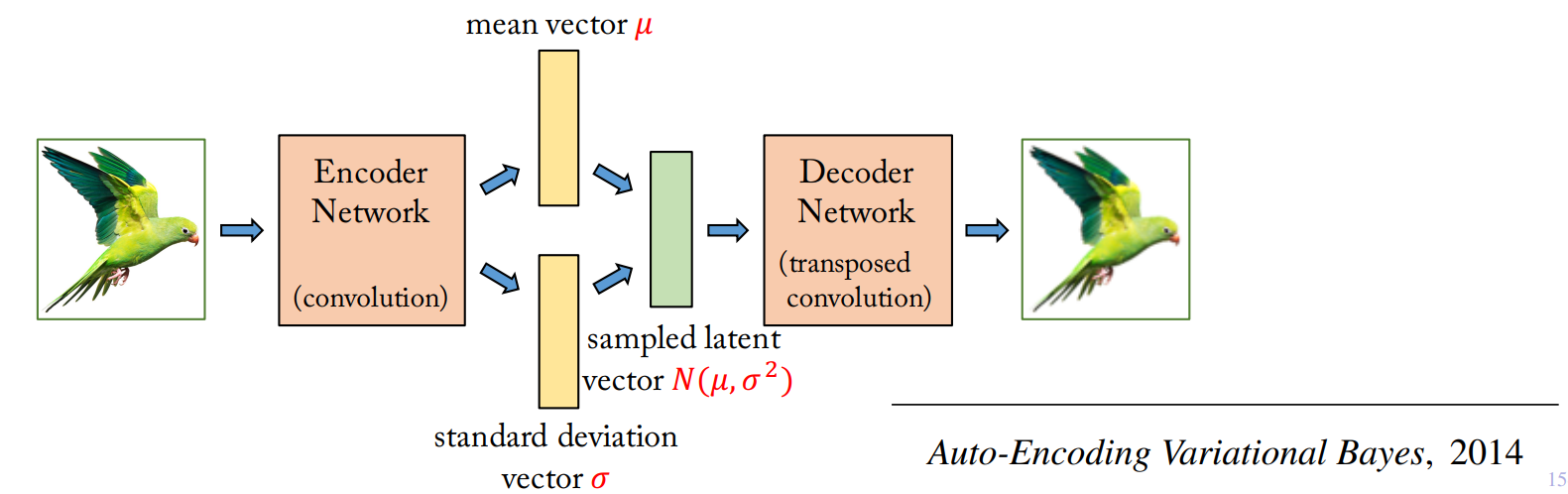

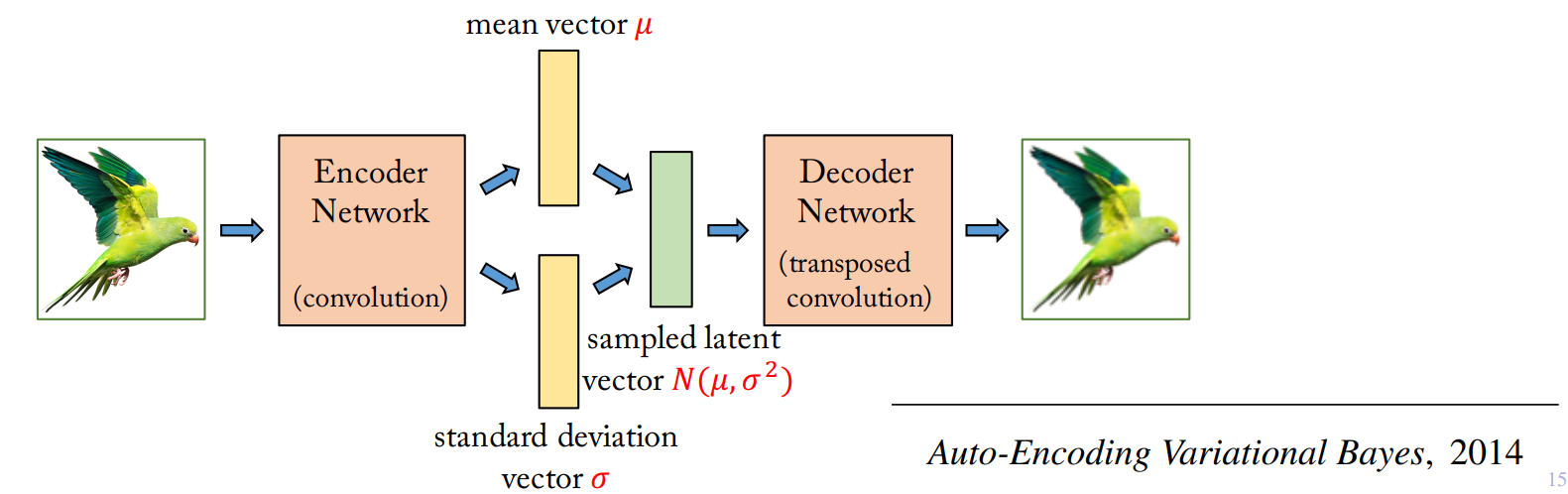

• VAE encoder produces 2 latent vectors, the mean µ and the standard deviation σ of a Gaussian N(µ, σ²) that approximates the posterior distribution p(z|x) of each attribute (variational inference).

Then, using sampled latent vectors from the distributions, the decoder reconstructs the input.

Goal is generating many variations of the input (probabilistic encoder + generative decoder).
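
The probabilistic encoder and generative decoder can be sketched as follows. This is a rough illustration under stated assumptions: untrained random linear layers, hypothetical names (`mu_w`, `log_sigma_w`, `sample_latent`), and an exponential applied to the second encoder head purely to keep σ positive. It draws samples directly with `random.gauss` and does not show training.

```python
import math
import random

def make_linear(n_in, n_out, rng):
    """Return an (n_out x n_in) weight matrix with small random values."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]

def apply_linear(weights, vec):
    """Multiply the weight matrix by the vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]

rng = random.Random(0)
input_dim, latent_dim = 6, 2  # hypothetical sizes, chosen for illustration

mu_w = make_linear(input_dim, latent_dim, rng)         # head producing the mean
log_sigma_w = make_linear(input_dim, latent_dim, rng)  # head producing log(sigma)
dec_w = make_linear(latent_dim, input_dim, rng)        # decoder weights

def encode(x):
    # Two latent vectors: mean and standard deviation per attribute.
    mu = apply_linear(mu_w, x)
    sigma = [math.exp(s) for s in apply_linear(log_sigma_w, x)]  # exp keeps sigma > 0
    return mu, sigma

def sample_latent(mu, sigma):
    # Draw one latent vector z with z_i ~ N(mu_i, sigma_i^2).
    return [rng.gauss(m, s) for m, s in zip(mu, sigma)]

def decode(z):
    return apply_linear(dec_w, z)

x = [0.2, 0.9, 0.1, 0.4, 0.7, 0.3]
mu, sigma = encode(x)
# Each sampled z decodes to a different variation of the same input.
variations = [decode(sample_latent(mu, sigma)) for _ in range(3)]
```

Unlike the AE, the same input yields a distribution over latent vectors, so repeated sampling gives different outputs.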

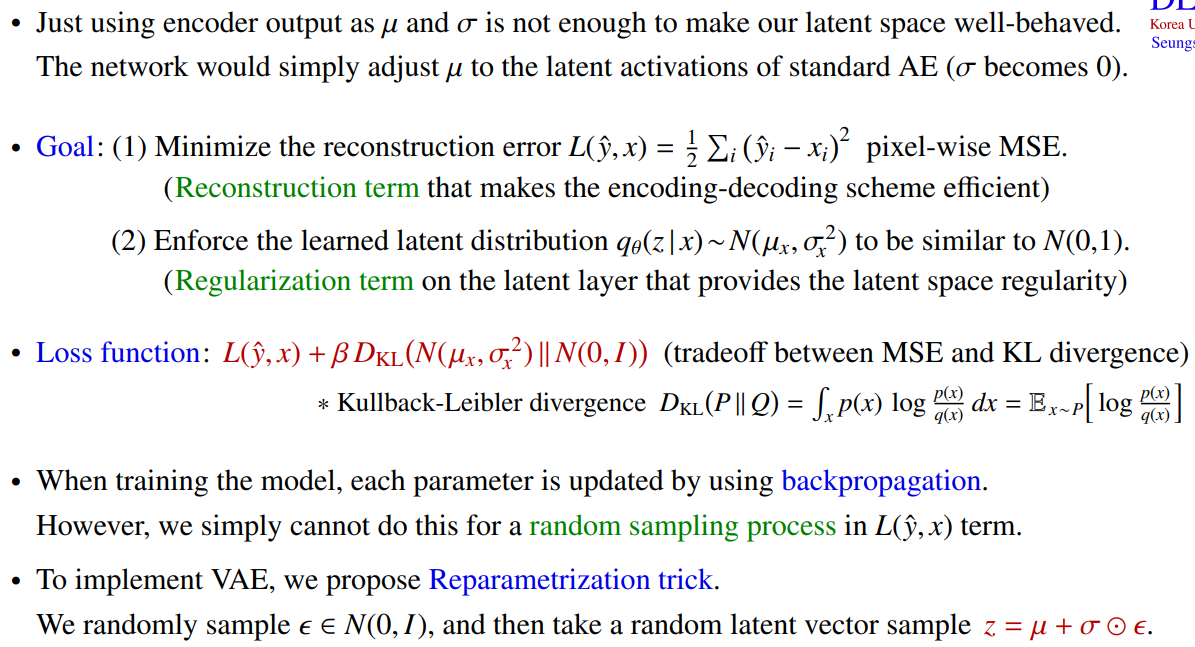

VAE encoder would like to produce the posterior p(z|x) instead of a single point z, but because p(z|x) is intractable, we approximate it by a variational posterior q(z|x), which is Gaussian, so that the encoder network is forced to produce µx and σx.
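
The source of the intractability and the Gaussian approximation can be written out as below. The notation is an assumption for illustration: q(z|x) denotes the variational posterior, p(z) the prior over latents, and the diagonal covariance reflects one σ per latent attribute.

```latex
% Exact posterior via Bayes' rule; the evidence p(x) needs an integral over all z,
% which generally has no closed form when the decoder is a neural network.
p(z \mid x) = \frac{p(x \mid z)\, p(z)}{p(x)},
\qquad
p(x) = \int p(x \mid z)\, p(z)\, dz

% Gaussian variational posterior whose parameters are the encoder outputs.
q(z \mid x) = \mathcal{N}\!\left(z;\ \mu_x,\ \operatorname{diag}(\sigma_x^2)\right) \approx p(z \mid x)
```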

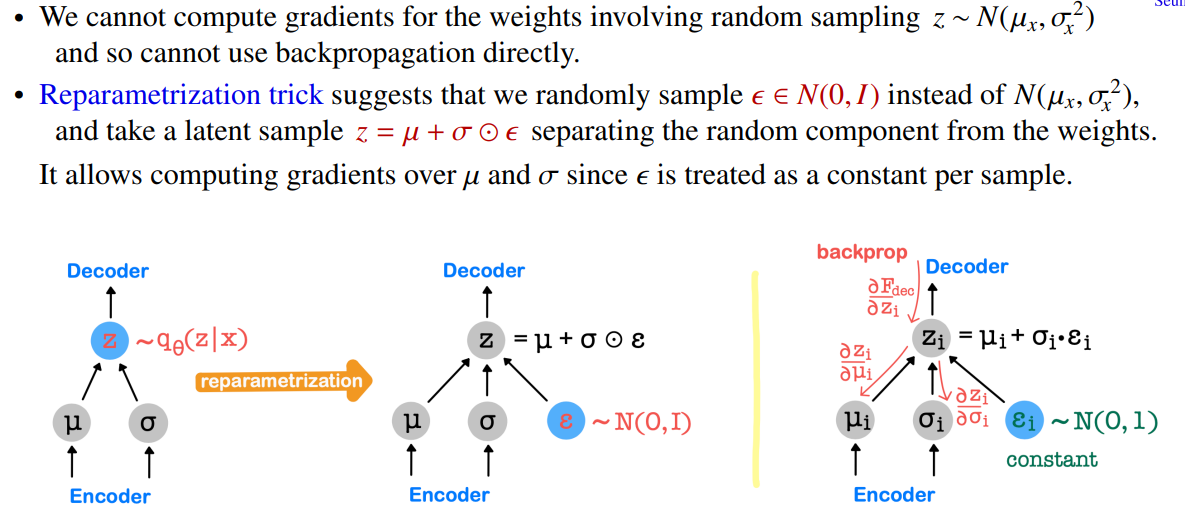

Reparametrization trick