🌈 Crawling Practice

🔥 Understanding GET vs POST

🔥 Sending POST requests

🔥 Cine21 crawling

🔥 Crawling and data preprocessing

🔥 Storing crawled data in MongoDB

1. Understanding GET vs POST

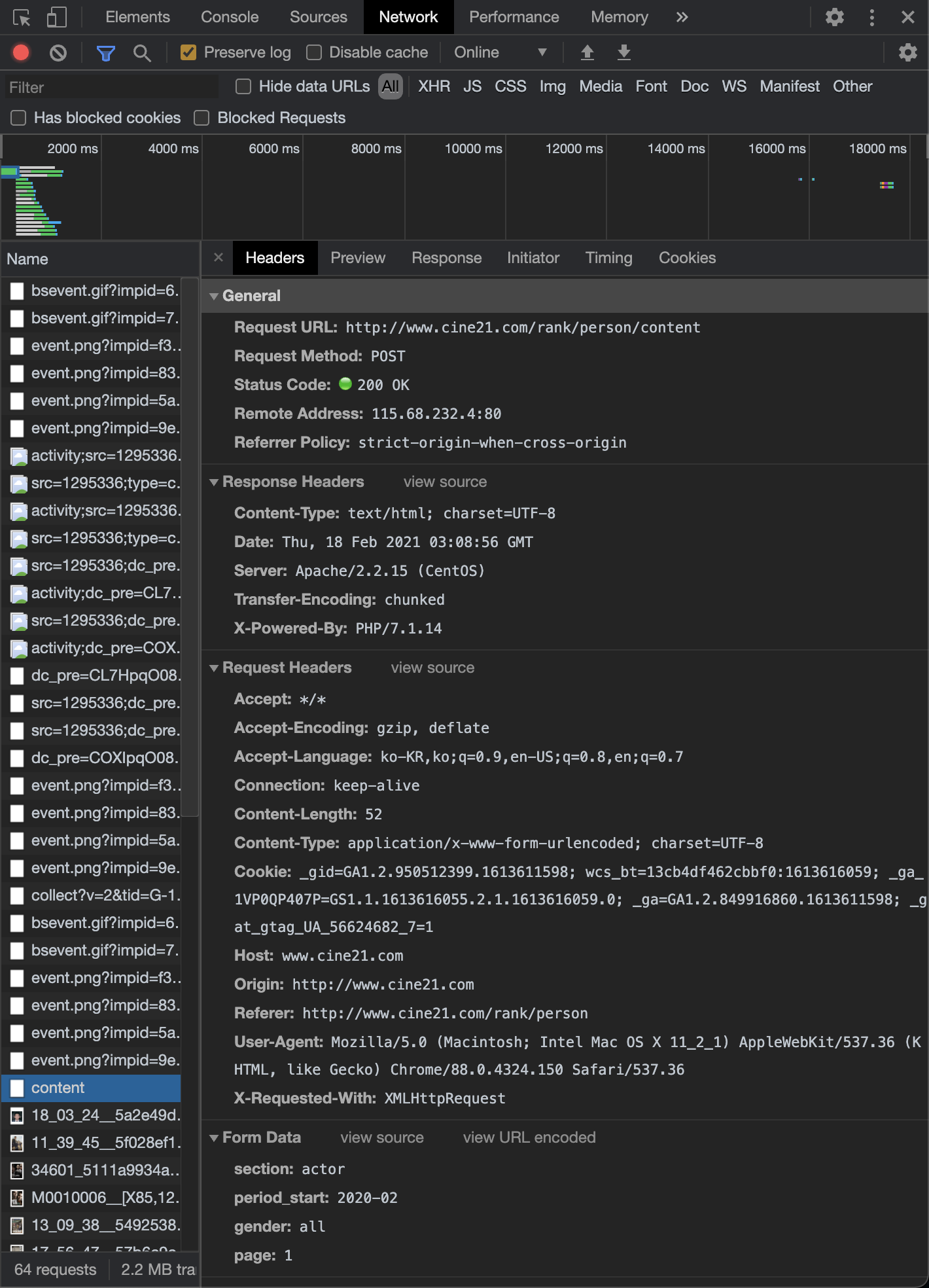

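- Before sending a request to a page for crawling, check which request method the page uses.

- On a GET-based page, the URL in the address bar changes every time the page changes, so you can analyze that URL pattern.

- On a POST-based page, the URL in the address bar stays the same even when the page changes.

- To crawl a POST-based page, you therefore have to look up the request details in [Developer Tools] ⇢ [Network].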
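The sketch below shows where the parameters end up in each method without sending anything over the network. It uses only Python's standard library (urllib) rather than requests, and it only builds the two request objects. The form fields reuse the values from the Cine21 example later in this post.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Form fields (same values as the Cine21 example later in this post)
form = {'section': 'actor', 'period_start': '2020-02', 'gender': 'all', 'page': 1}
base_url = 'http://www.cine21.com/rank/person/content'

# GET: the parameters are appended to the URL as a query string
get_req = Request(base_url + '?' + urlencode(form))

# POST: the URL stays the same and the parameters travel in the request body
post_req = Request(base_url, data=urlencode(form).encode('utf-8'), method='POST')

print(get_req.get_method(), get_req.full_url)
print(post_req.get_method(), post_req.full_url)
print(post_req.data)
```

With GET, the page number shows up in the address. With POST, only the body changes, and that is why you have to read it from the Network tab.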

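1) How to inspect the requests of a POST-based page

- Setup: check Preserve log and make sure the filter is set to All.

- Each time the page changes, you can see the Form Data values change.

- Check the General information (Request URL, Request Method) and the Form Data.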

2. Sending POST requests

- Use the Request URL shown in [Developer Tools] ⇢ [Network] as the address to request.

- Also pass the Form Data shown in [Developer Tools] ⇢ [Network] with the request, as a dictionary.

- The request is made with requests.post(url, data=[dictionary])

- 🔍 res = requests.post(url, data=post_data)

- 🔍 Compared with GET: res = requests.get(url)
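You may copy the Form Data from DevTools in its URL-encoded form, for example through the "view source" toggle of the Form Data section in Chrome. The standard library can then turn that string into the dictionary for data=. This is a minimal sketch that assumes the copied string looks like the one below.

```python
from urllib.parse import parse_qsl

# URL-encoded Form Data as copied from DevTools (assumed example string)
raw_form_data = 'section=actor&period_start=2020-02&gender=all&page=1'

# parse_qsl splits the string into (key, value) pairs; dict() builds the payload
post_data = dict(parse_qsl(raw_form_data))
print(post_data)
```

All values come back as strings, so page is '1' rather than 1. That makes no difference once requests form-encodes the dictionary.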

✍🏻 python

```python
# Step 1: import libraries
import pymongo
import requests
from bs4 import BeautifulSoup

# Step 2: MongoDB connection
conn = pymongo.MongoClient()  # connect to MongoDB with pymongo (localhost:27017 by default)
actor_db = conn.cine21  # cine21 database (MongoDB creates it on the first insert)
actor_collection = actor_db.actor_collection  # actor_collection collection (also created lazily)

# Step 3: request the crawling URL (the page seen in the browser is http://www.cine21.com/rank/person)
url = 'http://www.cine21.com/rank/person/content'
post_data = dict()  # Form Data passed as a dictionary
post_data['section'] = 'actor'
post_data['period_start'] = '2020-02'
post_data['gender'] = 'all'
post_data['page'] = 1
res = requests.post(url, data=post_data)  # send the POST request

# Step 4: parsing and data extraction
soup = BeautifulSoup(res.content, 'html.parser')  # parse the HTML
```

3. Cine21 crawling

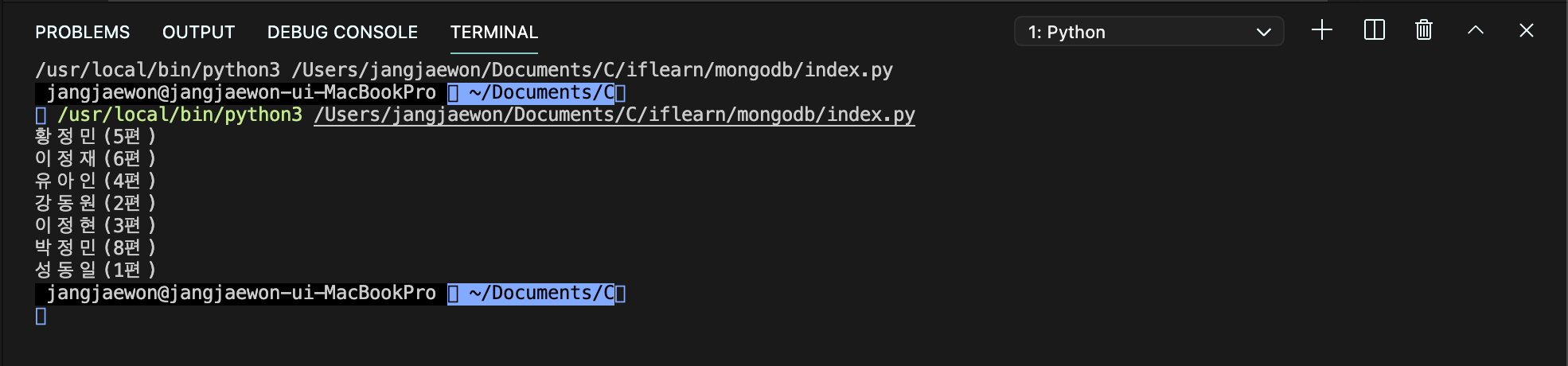

1) Crawling target 1: crawl only the actor names

✍🏻 python

```python
# Step 1: import libraries
import pymongo
import requests
from bs4 import BeautifulSoup

# Step 2: MongoDB connection
conn = pymongo.MongoClient()  # connect to MongoDB with pymongo (localhost:27017 by default)
actor_db = conn.cine21  # cine21 database (MongoDB creates it on the first insert)
actor_collection = actor_db.actor_collection  # actor_collection collection (also created lazily)

# Step 3: request the crawling URL (the page seen in the browser is http://www.cine21.com/rank/person)
url = 'http://www.cine21.com/rank/person/content'
post_data = dict()  # Form Data passed as a dictionary
post_data['section'] = 'actor'
post_data['period_start'] = '2020-02'
post_data['gender'] = 'all'
post_data['page'] = 1
res = requests.post(url, data=post_data)  # send the POST request

# Step 4: parsing and data extraction (CSS selectors)
soup = BeautifulSoup(res.content, 'html.parser')  # parse the HTML
actors = soup.select('li.people_li div.name')  # all actor name elements
for actor in actors:
    print(actor.text)
```

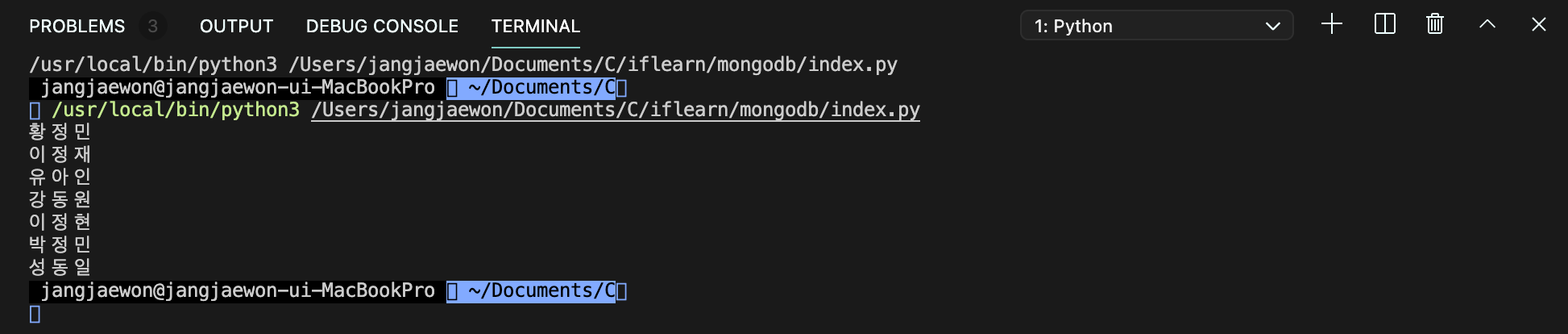

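2) Preprocessing data with regular expressions

- Import the regular expression module ⇢ import re

- re.sub([pattern], [replacement], [original]) ⇢ replaces every part of the original string that matches the pattern with the replacement

- 🔍 re.sub(r'\(\w*\)', '', [variable]) ⇢ removes the parentheses, together with the word characters (letters, digits, underscore) inside them, from the variable's value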
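Here is a small check of that pattern on a hypothetical string shaped like "name(extra)". The real text on the page may look different. It also shows why the parentheses must be escaped: unescaped, they form a capture group instead of matching literal "(" and ")".

```python
import re

# Hypothetical text in the shape "name(extra)"; the real page text may differ
raw_name = '홍길동(3편)'

# Escaped parentheses: remove "(...)" together with the word characters inside it
cleaned = re.sub(r'\(\w*\)', '', raw_name)

# Unescaped parentheses are a capture group, so only the word characters are removed
wrong = re.sub(r'(\w*)', '', raw_name)

print(cleaned)  # 홍길동
print(wrong)    # ()
```

In Python 3, \w in a str pattern also matches Hangul characters, which is why the Korean text inside the parentheses is removed too.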

✍🏻 python

```python
# Step 1: import libraries
import pymongo
import re
import requests
from bs4 import BeautifulSoup

# Step 2: MongoDB connection
conn = pymongo.MongoClient()  # connect to MongoDB with pymongo (localhost:27017 by default)
actor_db = conn.cine21  # cine21 database (MongoDB creates it on the first insert)
actor_collection = actor_db.actor_collection  # actor_collection collection (also created lazily)

# Step 3: request the crawling URL (the page seen in the browser is http://www.cine21.com/rank/person)
url = 'http://www.cine21.com/rank/person/content'
post_data = dict()  # Form Data passed as a dictionary
post_data['section'] = 'actor'
post_data['period_start'] = '2020-02'
post_data['gender'] = 'all'
post_data['page'] = 1
res = requests.post(url, data=post_data)  # send the POST request

# Step 4: parsing and data extraction (CSS selectors)
soup = BeautifulSoup(res.content, 'html.parser')  # parse the HTML
actors = soup.select('li.people_li div.name')  # all actor name elements
for actor in actors:
    print(re.sub(r'\(\w*\)', '', actor.text))  # drop the "(...)" part after the name
```

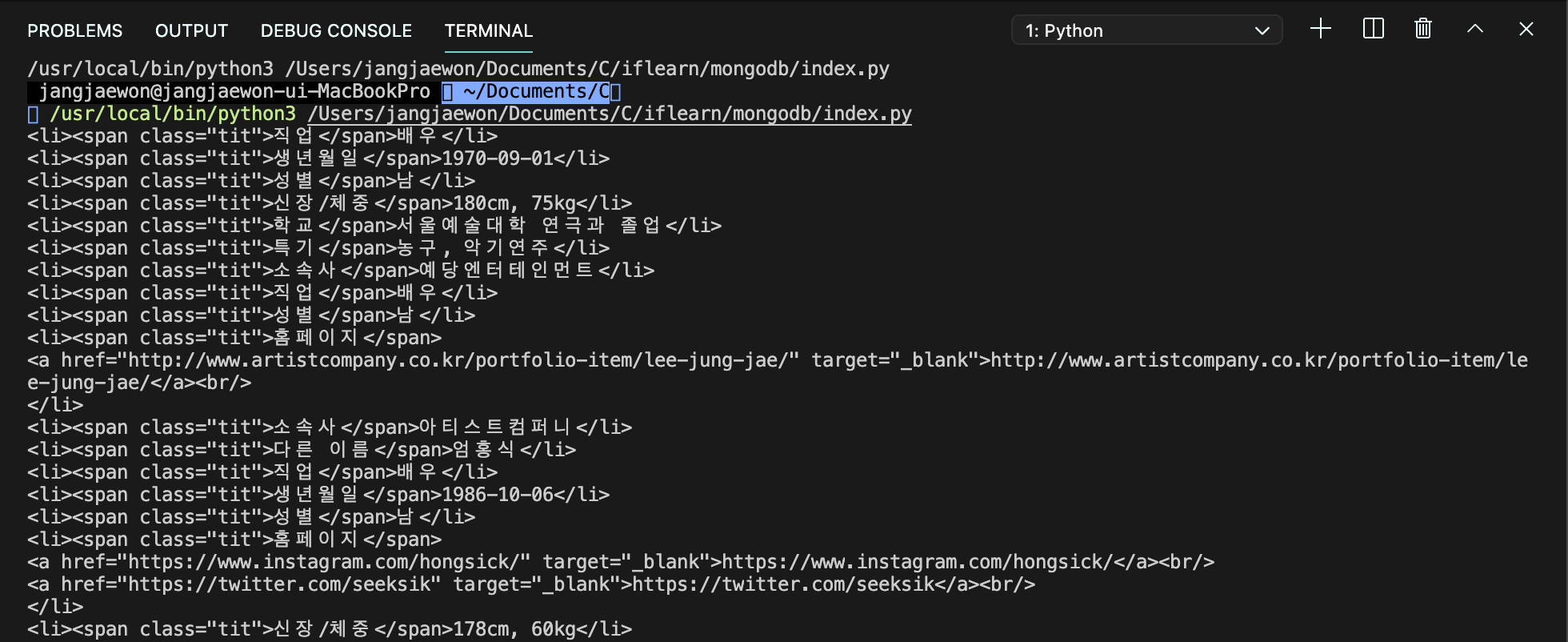

3) Crawling target 2: crawl actor information from each actor's own page

- Getting the link address: check each actor's link

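✍🏻 python

```python
# Continues from the code above: soup already holds the parsed ranking page
actors = soup.select('li.people_li div.name')
for actor in actors:
    # The href is a path on the site, so the domain is prepended
    print('http://www.cine21.com' + actor.select_one('a').attrs['href'])
```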
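Building the link by string concatenation assumes every href is a site-relative path. An alternative, not the method used in this post, is the standard library's urllib.parse.urljoin, which handles both relative and absolute hrefs. The href values below are hypothetical.

```python
from urllib.parse import urljoin

BASE_URL = 'http://www.cine21.com'

# Hypothetical href values; the real hrefs on the page may look different
relative_href = '/db/person/info/?person_id=12345'
absolute_href = 'http://www.cine21.com/db/person/info/?person_id=12345'

# urljoin resolves a relative path against the base and leaves absolute URLs unchanged
relative_link = urljoin(BASE_URL, relative_href)
absolute_link = urljoin(BASE_URL, absolute_href)
print(relative_link)
print(absolute_link)
```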

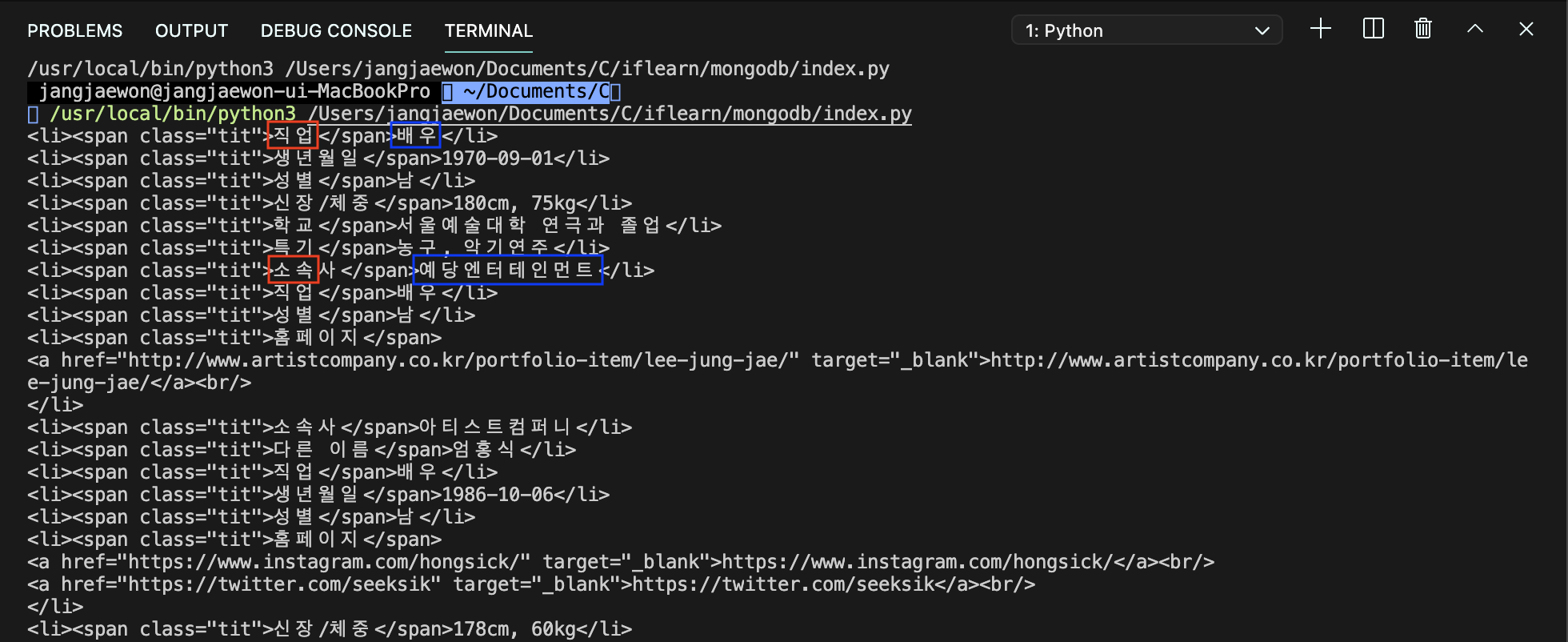

- Extracting actor details: request each actor's page and print the items of its ul.default_info list

✍🏻 python

```python
# Step 1: import libraries
import pymongo
import re
import requests
from bs4 import BeautifulSoup

# Step 2: MongoDB connection
conn = pymongo.MongoClient()  # connect to MongoDB with pymongo (localhost:27017 by default)
actor_db = conn.cine21  # cine21 database (MongoDB creates it on the first insert)
actor_collection = actor_db.actor_collection  # actor_collection collection (also created lazily)

# Step 3: request the crawling URL (the page seen in the browser is http://www.cine21.com/rank/person)
url = 'http://www.cine21.com/rank/person/content'
post_data = dict()  # Form Data passed as a dictionary
post_data['section'] = 'actor'
post_data['period_start'] = '2020-02'
post_data['gender'] = 'all'
post_data['page'] = 1
res = requests.post(url, data=post_data)  # send the POST request

# Step 4: parsing and data extraction (CSS selectors)
soup = BeautifulSoup(res.content, 'html.parser')  # parse the HTML
actors = soup.select('li.people_li div.name')  # all actor name elements
for actor in actors:
    actor_link = 'http://www.cine21.com' + actor.select_one('a').attrs['href']  # actor detail page
    res_actor = requests.get(actor_link)  # detail pages are fetched with GET
    soup_actor = BeautifulSoup(res_actor.content, 'html.parser')
    default_info = soup_actor.select_one('ul.default_info')  # basic info list
    actor_details = default_info.select('li')
    for actor_item in actor_details:
        print(actor_item)
```

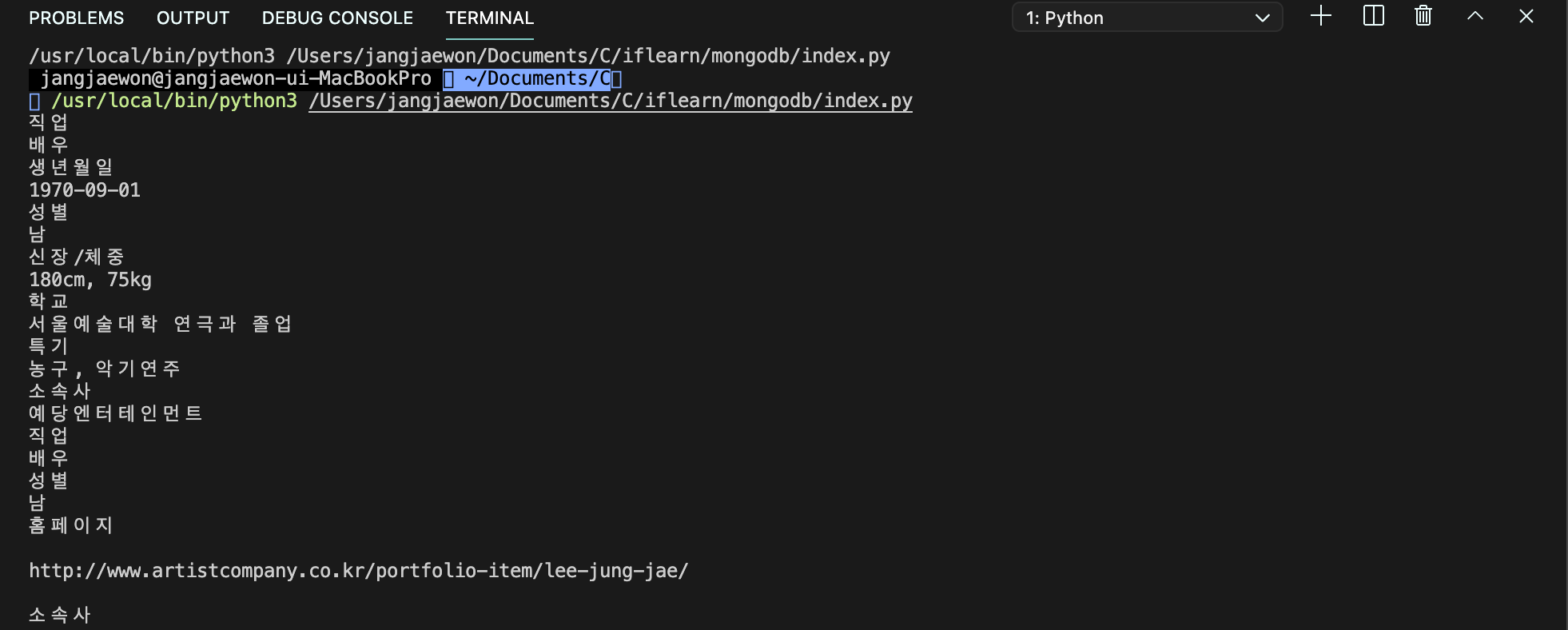

4. Crawling and data preprocessing

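- The text inside <span class="tit"> is easy to get, but the text that comes after it is hard to get.

- In a structure like this, the trailing text (the blue box) can be extracted by processing it with a regular expression.

- Regular expression practice site: https://regexr.com/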

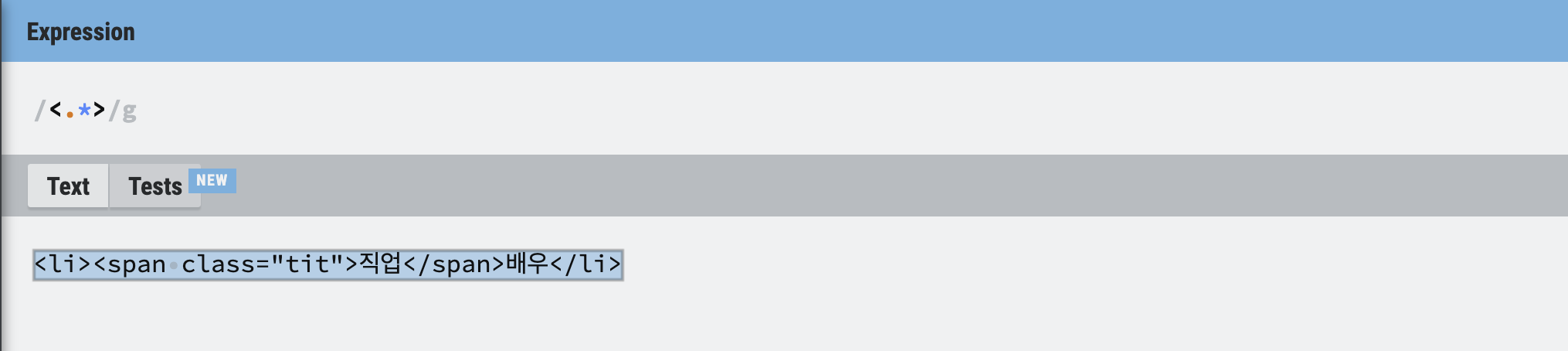

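1) Special regular expression: Greedy

(.*)

- In a regular expression, the dot (.) matches any single character except the newline character \n.

- The asterisk (*) means the preceding character repeats zero or more times.

- So .* matches zero or more characters other than \n (that is, any characters, symbols included). It is greedy: it takes as much text as possible and runs to the last position where the rest of the pattern can still match.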

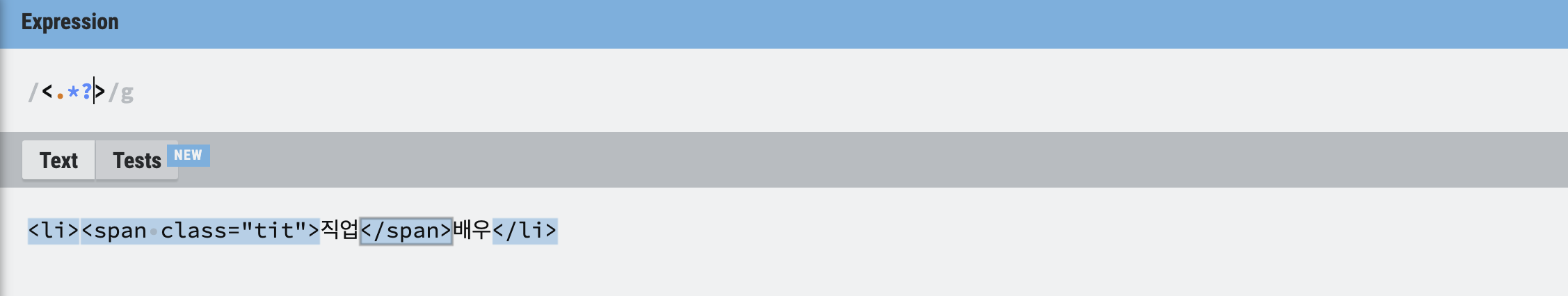
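Here is a quick check of greedy matching on hypothetical <li> markup shaped like the actor info items. Because .* is greedy, <.*> does not stop at the first ">". It runs to the last one.

```python
import re

# Hypothetical <li> markup with the same shape as the actor info items
li_html = '<li><span class="tit">직업</span>배우</li>'

# Greedy: <.*> starts at the first '<' and extends to the LAST '>' in the string
greedy_match = re.search(r'<.*>', li_html).group()
print(greedy_match)  # the whole string, text included
```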

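2) Special regular expression: Non-Greedy

(.*?)

- In contrast, the non-greedy (.*?) matches only up to the first position where the rest of the pattern matches.

- Non-greedy (.*?) is useful for stripping tags and extracting only the text.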

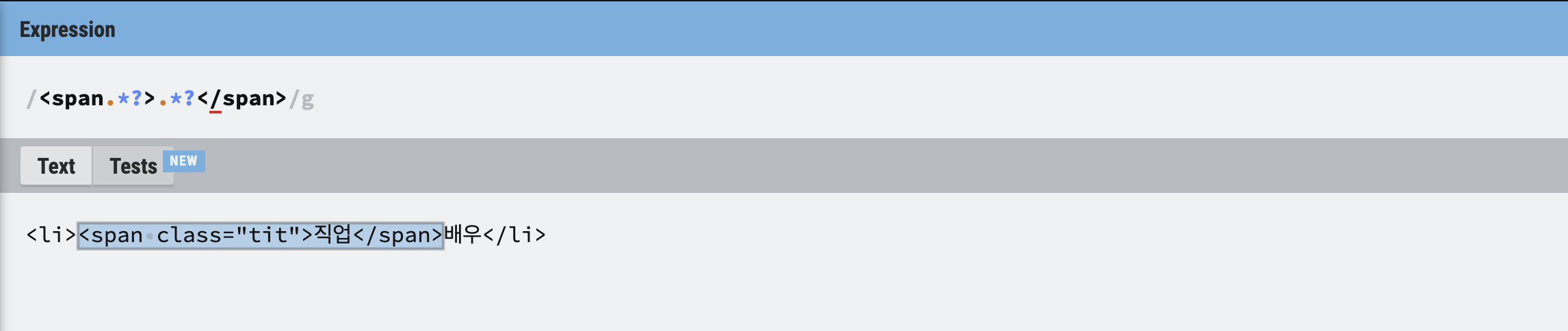

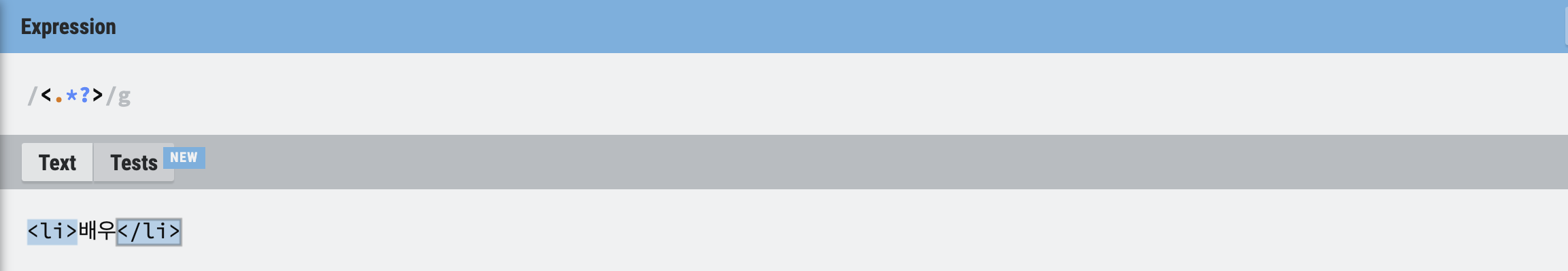
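This uses the same hypothetical markup as before. With the non-greedy .*?, each match stops at the nearest ">", so every tag is matched on its own.

```python
import re

# Hypothetical <li> markup with the same shape as the actor info items
li_html = '<li><span class="tit">직업</span>배우</li>'

# Non-greedy: <.*?> stops at the first '>' after each '<'
first_tag = re.search(r'<.*?>', li_html).group()
all_tags = re.findall(r'<.*?>', li_html)
print(first_tag)  # <li>
print(all_tags)   # ['<li>', '<span class="tit">', '</span>', '</li>']
```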

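- In other words, the pattern <span.*?>.*?</span> matches a span element: its opening tag, the text inside it, and its closing tag.

- Deleting that part with re.sub() leaves only <li>text</li>.

- Removing the <li></li> tags once more then leaves the trailing text we want.

- Processing the data with non-greedy regular expressions looks like this: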

✍🏻 python

```python
# Step 1: import libraries
import pymongo
import re
import requests
from bs4 import BeautifulSoup

# Step 2: MongoDB connection
conn = pymongo.MongoClient()  # connect to MongoDB with pymongo (localhost:27017 by default)
actor_db = conn.cine21  # cine21 database (MongoDB creates it on the first insert)
actor_collection = actor_db.actor_collection  # actor_collection collection (also created lazily)

# Step 3: request the crawling URL (the page seen in the browser is http://www.cine21.com/rank/person)
url = 'http://www.cine21.com/rank/person/content'
post_data = dict()  # Form Data passed as a dictionary
post_data['section'] = 'actor'
post_data['period_start'] = '2020-02'
post_data['gender'] = 'all'
post_data['page'] = 1
res = requests.post(url, data=post_data)  # send the POST request

# Step 4: parsing and data extraction (CSS selectors)
soup = BeautifulSoup(res.content, 'html.parser')  # parse the HTML
actors = soup.select('li.people_li div.name')  # all actor name elements
for actor in actors:
    actor_link = 'http://www.cine21.com' + actor.select_one('a').attrs['href']
    res_actor = requests.get(actor_link)
    soup_actor = BeautifulSoup(res_actor.content, 'html.parser')
    default_info = soup_actor.select_one('ul.default_info')
    actor_details = default_info.select('li')
    for actor_item in actor_details:
        print(actor_item.select_one('span.tit').text)  # text of <span class="tit">...</span>
        actor_item_value = re.sub('<span.*?>.*?</span>', '', str(actor_item))  # remove the span, leaving <li>text</li>
        actor_item_value = re.sub('<.*?>', '', actor_item_value)  # remove the remaining tags, leaving only the text
        print(actor_item_value)
```
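The two re.sub steps are easier to follow in isolation. This stdlib-only sketch applies the same patterns to a hypothetical <li> string with no network or bs4. Here the label is read with a regex capture group, which stands in for select_one('span.tit').text in the code above.

```python
import re

li_html = '<li><span class="tit">직업</span>배우</li>'  # hypothetical markup

# Step 1: delete the <span ...>...</span> element (non-greedy, so a match ends at the nearest </span>)
without_span = re.sub('<span.*?>.*?</span>', '', li_html)
# Step 2: delete the remaining tags (<li>, </li>), leaving only the trailing text
value = re.sub('<.*?>', '', without_span)
# Label: the text inside the span, captured with a group
label = re.search('<span.*?>(.*?)</span>', li_html).group(1)

print(without_span)    # <li>배우</li>
print({label: value})  # {'직업': '배우'}
```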

- To insert the data into MongoDB, each actor's information has to be converted into a dictionary (JSON-like format).

- To use insert_many(), collect those dictionaries in a list.

✍🏻 python

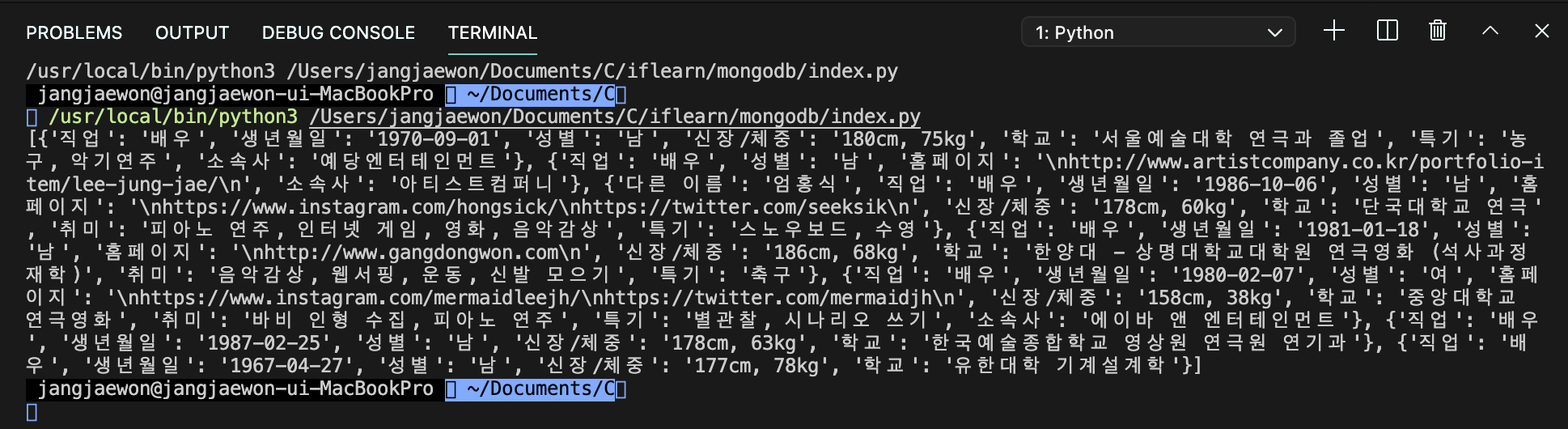

# 1단계 : 라이브러리 import import pymongo import re import requests from bs4 import BeautifulSoup # 2단계 : mongodb connection conn = pymongo.MongoClient() # pymongo로 mongodb 연결(localhost:27017) actor_db = conn.cine21 # database 생성(cine21) 후 객체(actor_db)에 담음 actor_collection = actor_db.actor_collection # collection 생성(actor_collection) 후 객체(actor_collection)에 담음 # 3단계 : crawling 주소 requests(http://www.cine21.com/rank/person) url = 'http://www.cine21.com/rank/person/content' post_data = dict() # Form data 부분을 딕셔너리 형태로 전달 post_data['section'] = 'actor' post_data['period_start'] = '2020-02' post_data['gender'] = 'all' post_data['page'] = 1 res = requests.post(url, data=post_data) # requests 요청 # 4단계 : parsing과 데이터 추출(CSS 셀럭터) soup = BeautifulSoup(res.content, 'html.parser') # parsing 방법 actors = soup.select('li.people_li div.name') # 배우 이름 모두 담기 actors_info_list = list() # 배우 전체의 상세정보가 담길 리스트 for actor in actors: actor_link = 'http://www.cine21.com' + actor.select_one('a').attrs['href'] res_actor = requests.get(actor_link) soup_actor = BeautifulSoup(res_actor.content, 'html.parser') default_info = soup_actor.select_one('ul.default_info') actor_details = default_info.select('li') actor_info_dict = dict() # 배우별 상세 정보가 JSON형식으로 담길 딕셔너리 for actor_item in actor_details: actor_item_key = actor_item.select_one('span.tit').text # <span class="tit">텍스트</span>의 텍스트 추출 actor_item_value = re.sub('<span.*?>.*?</span>', '', str(actor_item)) # <li>텍스트</li> 추출 actor_item_value = re.sub('<.*?>', '', actor_item_value) actor_info_dict[actor_item_key] = actor_item_value actors_info_list.append(actor_info_dict) # 딕셔너리를 리스트에 추가 print(actors_info_list)

- Also extract each actor's name (배우이름), filmography (출연영화), and box-office index (흥행지수).

✍🏻 python

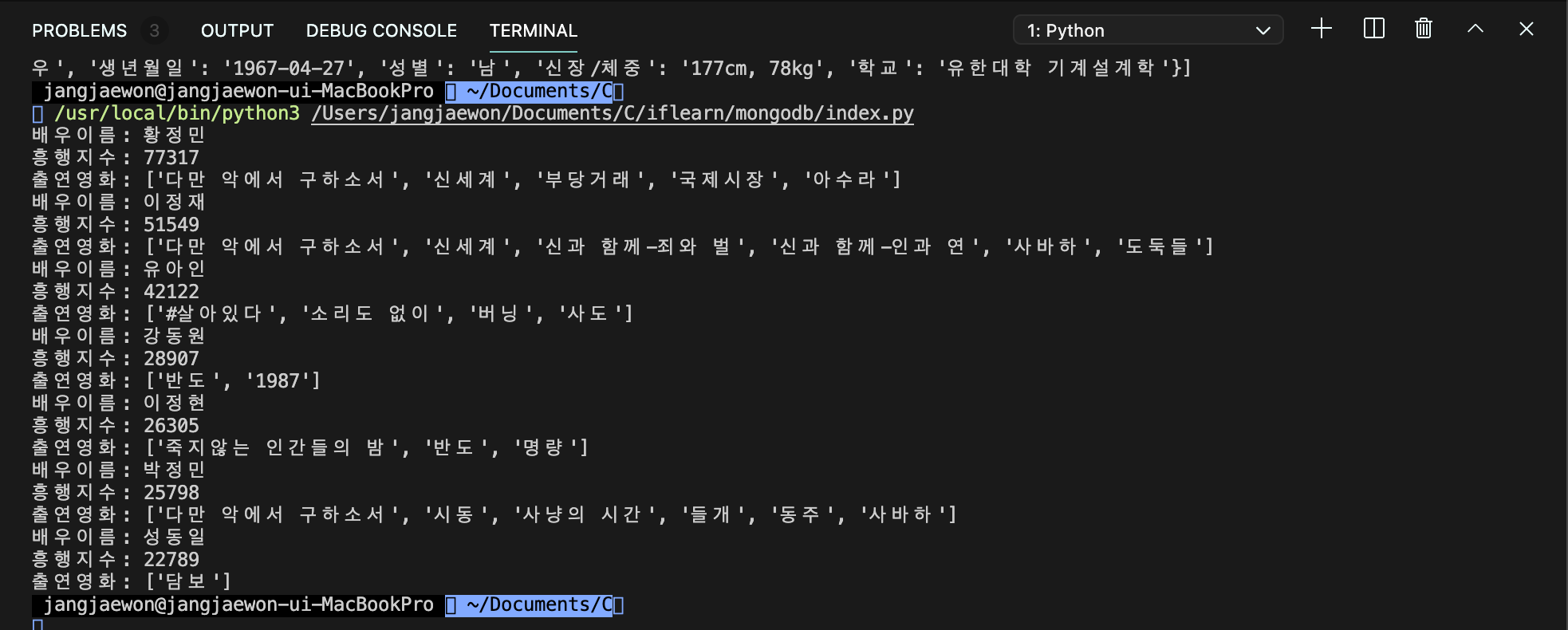

# 1단계 : 라이브러리 import import pymongo import re import requests from bs4 import BeautifulSoup # 2단계 : mongodb connection conn = pymongo.MongoClient() # pymongo로 mongodb 연결(localhost:27017) actor_db = conn.cine21 # database 생성(cine21) 후 객체(actor_db)에 담음 actor_collection = actor_db.actor_collection # collection 생성(actor_collection) 후 객체(actor_collection)에 담음 # 3단계 : crawling 주소 requests(http://www.cine21.com/rank/person) url = 'http://www.cine21.com/rank/person/content' post_data = dict() # Form data 부분을 딕셔너리 형태로 전달 post_data['section'] = 'actor' post_data['period_start'] = '2020-02' post_data['gender'] = 'all' post_data['page'] = 1 res = requests.post(url, data=post_data) # requests 요청 # 4단계 : parsing과 데이터 추출(CSS 셀럭터) soup = BeautifulSoup(res.content, 'html.parser') # parsing 방법 actors = soup.select('li.people_li div.name') # 배우 이름 모두 담기 actors_info_list = list() # 배우 전체의 상세정보가 담길 리스트 for actor in actors: actor_link = 'http://www.cine21.com' + actor.select_one('a').attrs['href'] res_actor = requests.get(actor_link) soup_actor = BeautifulSoup(res_actor.content, 'html.parser') default_info = soup_actor.select_one('ul.default_info') actor_details = default_info.select('li') actor_info_dict = dict() # 배우별 상세 정보가 JSON형식으로 담길 딕셔너리 for actor_item in actor_details: actor_item_key = actor_item.select_one('span.tit').text # <span class="tit">텍스트</span>의 텍스트 추출 actor_item_value = re.sub('<span.*?>.*?</span>', '', str(actor_item)) # <li>텍스트</li> 추출 actor_item_value = re.sub('<.*?>', '', actor_item_value) actor_info_dict[actor_item_key] = actor_item_value actors_info_list.append(actor_info_dict) # 딕셔너리를 리스트에 추가 # 배우 이름, 흥행지수, 출연영화 추출 # actors = soup.select('li.people_li div.name') # 위에 있어서 생략 hits = soup.select('ul.num_info > li > strong') movies = soup.select('ul.mov_list') for index, actor in enumerate(actors): print ("배우이름:", re.sub('\(\w*\)', '', actor.text)) print ("흥행지수:", int(hits[index].text.replace(',', ''))) movie_titles = movies[index].select('li a span') 
movie_title_list = list() for movie_title in movie_titles: movie_title_list.append(movie_title.text) print ("출연영화:", movie_title_list)
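The loop above pairs actors, hits and movies by position with enumerate and indexing. That relies on the three lists lining up one-to-one. The same pairing can also be written with zip. This is a minimal sketch on hypothetical, already-extracted text values, so it needs no network or bs4.

```python
import re

# Hypothetical values standing in for the .text of the selected elements
actor_texts = ['홍길동(3편)', '김철수(1편)']
hit_texts = ['12,345', '6,789']
movie_lists = [['영화A', '영화B'], ['영화C']]

rows = []
for name_text, hit_text, titles in zip(actor_texts, hit_texts, movie_lists):
    rows.append({
        '배우이름': re.sub(r'\(\w*\)', '', name_text),  # strip the "(...)" part
        '흥행지수': int(hit_text.replace(',', '')),      # "12,345" -> 12345
        '출연영화': titles,
    })
print(rows)
```

Note that zip stops at the shortest list. If one element is missing on the page, trailing actors are dropped silently instead of raising an IndexError. On Python 3.10+, zip(..., strict=True) raises an error in that case.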

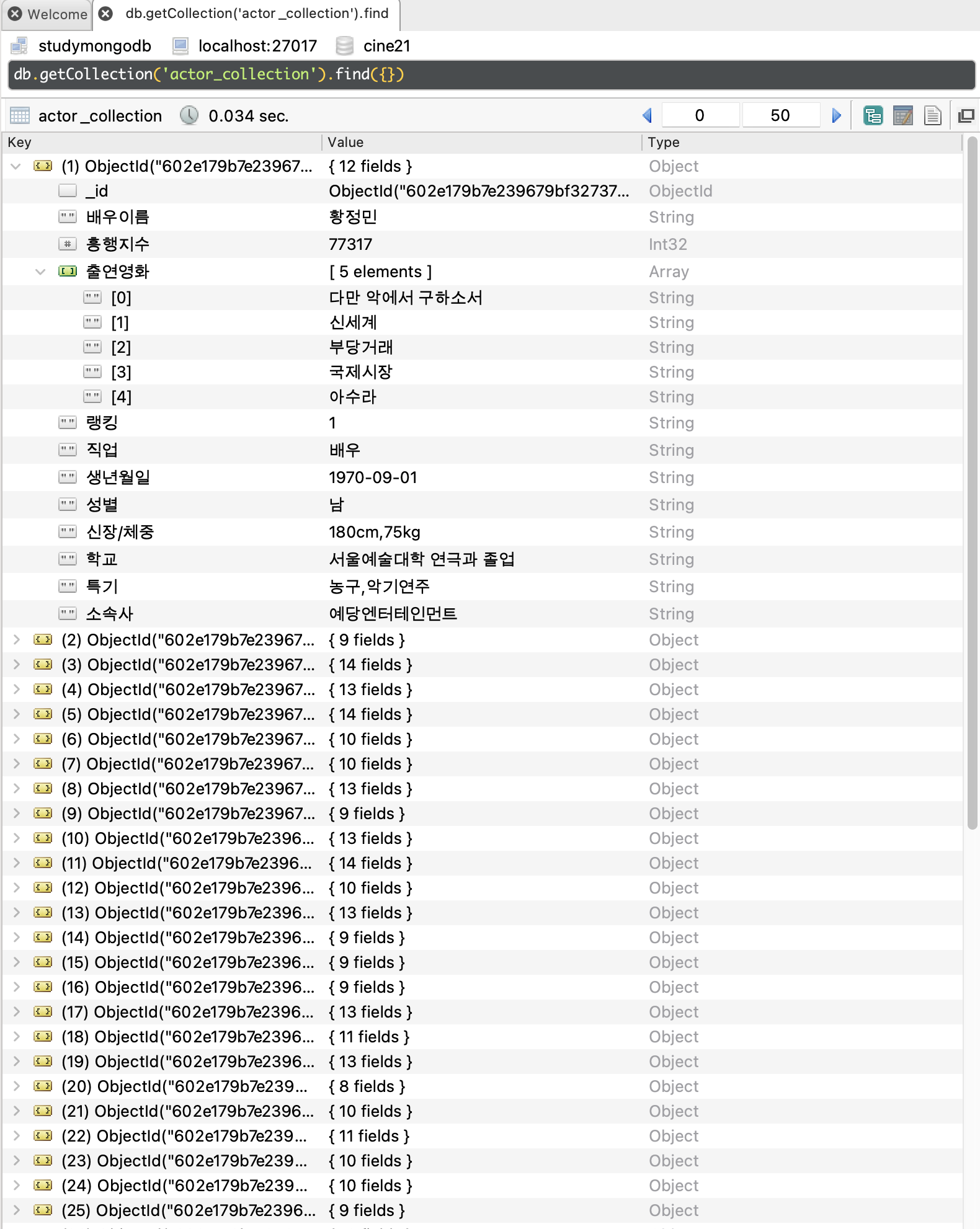

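5. Storing crawled data in MongoDB

- ✔️ Addition: extend the crawl to multiple pages with a loop

- ✔️ Addition: move actors_info_list = list() as far up as possible, above the page loop. Otherwise the list is re-initialized every time the page changes and the results crawled from earlier pages are lost.

- ✔️ Addition: add the ranking information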

✍🏻 python

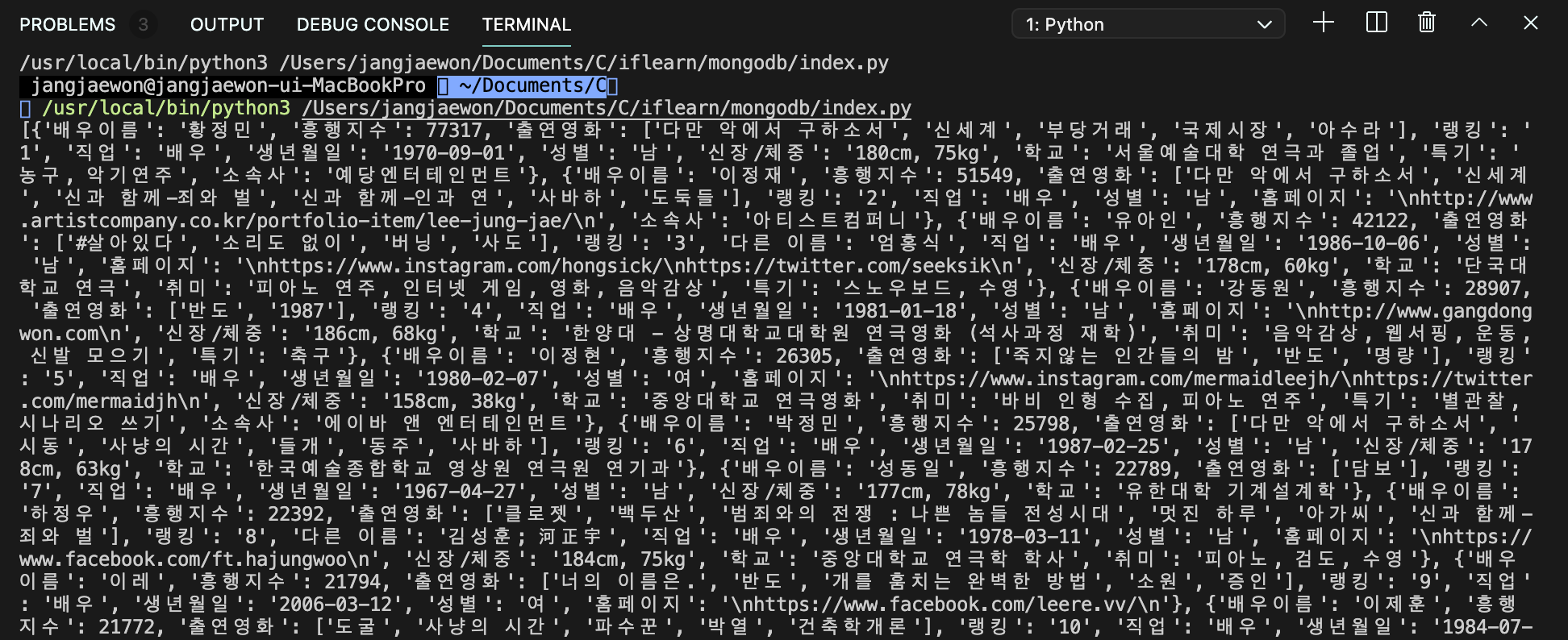

# 1단계 : 라이브러리 import import pymongo import re import requests from bs4 import BeautifulSoup actors_info_list = list() # 배우 전체의 상세정보가 담길 리스트 # 2단계 : mongodb connection conn = pymongo.MongoClient() # pymongo로 mongodb 연결(localhost:27017) actor_db = conn.cine21 # database 생성(cine21) 후 객체(actor_db)에 담음 actor_collection = actor_db.actor_collection # collection 생성(actor_collection) 후 객체(actor_collection)에 담음 # 3단계 : crawling 주소 requests(http://www.cine21.com/rank/person) url = 'http://www.cine21.com/rank/person/content' post_data = dict() # Form data 부분을 딕셔너리 형태로 전달 post_data['section'] = 'actor' post_data['period_start'] = '2020-02' post_data['gender'] = 'all' for index in range(1,21): post_data['page'] = index res = requests.post(url, data=post_data) # requests 요청 # 4단계 : parsing과 데이터 추출(CSS 셀럭터) soup = BeautifulSoup(res.content, 'html.parser') # parsing 방법 # 5단계 : 배우 이름, 흥행지수, 출연영화 soup에 담기 actors = soup.select('li.people_li div.name') # 배우이름 모두 담기 hits = soup.select('ul.num_info > li > strong') # 흥행지수 모두 담기 movies = soup.select('ul.mov_list') # 흥행영화 모두 담기 rankings = soup.select('li.people_li > span.grade') # 배우 랭킹정보 담기 # 6단계 : 부분 crawling for index, actor in enumerate(actors): actor_name = re.sub('\(\w*\)', '', actor.text) actor_hits = int(hits[index].text.replace(',', '')) movie_titles = movies[index].select('li a span') movie_title_list = list() for movie_title in movie_titles: movie_title_list.append(movie_title.text) # 배우이름, 흥행지수, 출연영화 dict형태(JSON)로 추가 actor_info_dict = dict() # 배우별 상세 정보가 JSON형식으로 담길 딕셔너리 actor_info_dict['배우이름'] = actor_name actor_info_dict['흥행지수'] = actor_hits actor_info_dict['출연영화'] = movie_title_list actor_info_dict['랭킹'] = rankings[index].text # 배우별 상세페이지에서 배우정보 가져와 dict에 추가 actor_link = 'http://www.cine21.com' + actor.select_one('a').attrs['href'] # 배우별 링크 res_actor = requests.get(actor_link) # 배우별 링크에 requests 날림 soup_actor = BeautifulSoup(res_actor.content, 'html.parser') # parsing default_info = soup_actor.select_one('ul.default_info') 
actor_details = default_info.select('li') for actor_item in actor_details: actor_item_key = actor_item.select_one('span.tit').text # <span class="tit">텍스트</span>의 텍스트 추출 actor_item_value = re.sub('<span.*?>.*?</span>', '', str(actor_item)) # <li>텍스트</li> 추출 actor_item_value = re.sub('<.*?>', '', actor_item_value) # <li><li> 제거 후 텍스트만 추출 actor_info_dict[actor_item_key] = actor_item_value actors_info_list.append(actor_info_dict) # 딕셔너리를 리스트에 추가 print(actors_info_list) # 콘솔에 데이터 출력해보기 # MongoDB에 데이터 삽입 actor_collection.insert_many(actors_info_list)
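The note about creating actors_info_list before the page loop can be checked in isolation. This sketch separates the page loop from the HTTP work. The fetch_page callable and the early stop on an empty page are hypothetical additions for illustration, not part of the code above. A fake fetcher stands in for the real requests.

```python
import time

def crawl_pages(fetch_page, last_page, delay_seconds=0.0):
    """Collect results from pages 1..last_page into ONE list.

    fetch_page is a hypothetical callable: page number -> list of dicts.
    """
    all_results = list()  # created once, before the loop, so earlier pages are kept
    for page in range(1, last_page + 1):
        page_results = fetch_page(page)
        if not page_results:  # stop early if a page comes back empty
            break
        all_results.extend(page_results)
        time.sleep(delay_seconds)  # optional pause between requests
    return all_results

# Usage with a fake fetcher instead of real HTTP requests: pages 1-3 have two items each
def fake_fetch(page):
    return [{'page': page, 'rank': i} for i in range(1, 3)] if page <= 3 else []

results = crawl_pages(fake_fetch, last_page=20)
print(len(results))  # 6
```

If all_results = list() were moved inside the for loop, only the last non-empty page would survive. That is the reset problem described in the note.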