Before operating systems existed

Computers in the 1940s and early 50s ran one program at a time.

- A programmer would write a program at their desk, for example on punch cards.

- They would then carry it to a room containing a room-sized computer and hand it to a dedicated computer operator (a person).

- The operator would then feed the program into the computer when it was next available.

- The computer would run it, spit out some output, and halt.

This manual process worked OK when computers were slow and running a program often took hours, days, or even weeks.

But computers became exponentially faster, to the point that having humans run around inserting programs into readers took longer than running the programs themselves.

We needed a way for computers to operate themselves, and so operating systems were born.

First operating systems

Operating systems (OSes) are just programs, but special privileges on the hardware let them run and manage other programs.

They are typically the first program to start when a computer is turned on, and all subsequent programs are launched by the OS.

They got their start in the 1950s, as computers became more widespread and more powerful.

The very first OSes augmented the mundane, manual task of loading programs by hand.

Instead of being given one program at a time, computers could be given batches; when the computer finished one, it would automatically and near-instantly start the next.

There was no downtime while someone scurried around an office to find the next program to run. This was called batch processing.
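To make the idea concrete, here is a minimal Python sketch of batch processing, assuming each "job" can be modeled as a function that runs to completion and returns its output. The names `Job` and `run_batch` are hypothetical, chosen for illustration; this is not how any historical batch system was actually written.

```python
from collections import deque
from typing import Callable, Iterable, List

# A "job" is modeled as a zero-argument function that returns its printed output.
Job = Callable[[], str]

def run_batch(jobs: Iterable[Job]) -> List[str]:
    """Run jobs back to back: as soon as one halts, the next one starts."""
    queue = deque(jobs)
    outputs: List[str] = []
    while queue:
        job = queue.popleft()   # take the next job; no human operator needed
        outputs.append(job())   # run it to completion and keep its output
    return outputs

if __name__ == "__main__":
    batch = [lambda: "payroll done", lambda: "census tabulated"]
    for line in run_batch(batch):
        print(line)
```

The key point is the loop: there is no gap between one job halting and the next one starting.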

As computers got faster, they also got cheaper.

They were popping up all over the world, especially in universities and government offices.

Soon, people started sharing software. However, there was a problem.

In the era of one-off computers, like the Harvard Mark I or ENIAC, programmers only had to write code for that one single machine.

The processor, punch card readers, and printers were known and unchanging.

But as computers became more widespread, their configurations were not always identical: two computers might have the same CPU but not the same printer. This was a huge pain for programmers.

Not only did they have to worry about writing their program, but also about how to interface with each and every model of printer, and with all the devices connected to a computer, which are called peripherals.

Interfacing with early peripherals was very low level, requiring programmers to know intimate hardware details about each device. On top of that, programmers rarely had access to every model of a peripheral to test their code on.

So they had to write code as best they could, often just by reading manuals, and hope (or pray) it worked when shared, which was terrible.

Abilities of operating systems

Device drivers

Operating systems stepped in as intermediaries between software programs and hardware peripherals.

More specifically, they provided software abstractions, exposed through APIs, called device drivers. These allow programmers to talk to common input and output hardware, or I/O for short, using standardized mechanisms.
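A rough Python sketch of the device-driver idea: the application talks to one standardized interface, and each driver hides the quirks of its own device. The printer models and their control characters below are entirely hypothetical, invented for illustration; each driver returns the string it would send to the device rather than driving real hardware.

```python
from abc import ABC, abstractmethod

class PrinterDriver(ABC):
    """Standardized interface the OS exposes; programs only ever call this."""

    @abstractmethod
    def print_line(self, text: str) -> str:
        """Return the raw data that would be sent to the device."""

class ModelAPrinter(PrinterDriver):
    # Hypothetical device: wants upper-case text ending in a carriage return.
    def print_line(self, text: str) -> str:
        return text.upper() + "\r"

class ModelBPrinter(PrinterDriver):
    # Hypothetical device: wants each line framed by start/end control bytes.
    def print_line(self, text: str) -> str:
        return "\x02" + text + "\x03"

def report(printer: PrinterDriver) -> str:
    # Application code: identical no matter which printer is attached.
    return printer.print_line("hello")

if __name__ == "__main__":
    print(repr(report(ModelAPrinter())))
    print(repr(report(ModelBPrinter())))
```

The programmer writes `report` once; supporting a new printer means writing a new driver, not changing every program.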

By the end of the 1950s, computers had gotten so fast that they were often idle, waiting for slow mechanical devices like printers and punch card readers. While programs were blocked on I/O, the expensive processor was just chillin'.

In the late 1950s, the University of Manchester started building a supercomputer called Atlas, one of the first in the world. They knew it would be so fast that they needed a clever way to make maximal use of the machine. Their solution was the Atlas Supervisor, an OS for Atlas.

Multitasking

Multitasking means allowing many programs to be in progress at once, sharing time on a single CPU.

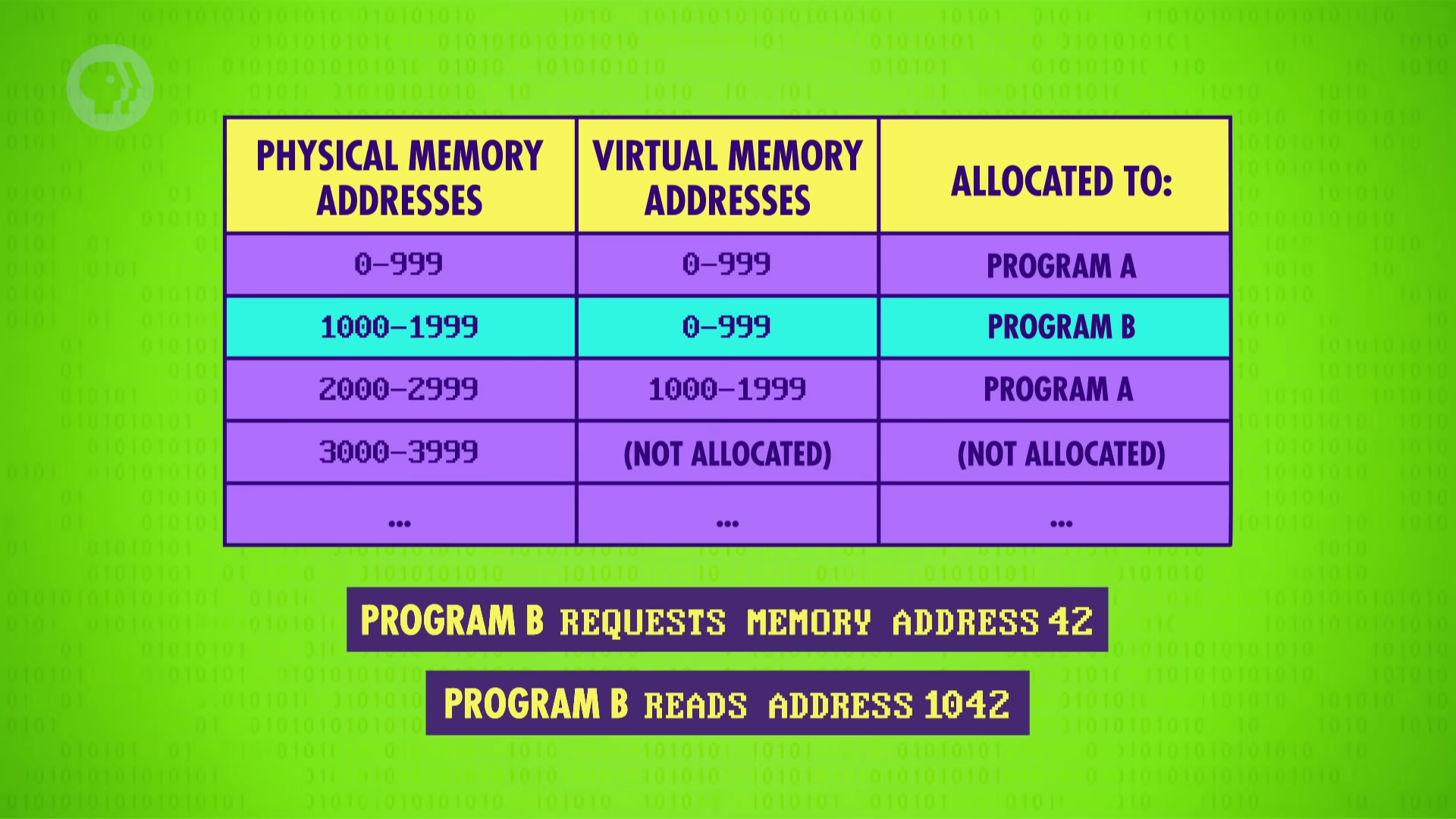
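Below is a toy Python sketch of multitasking on one CPU, using generators as stand-in "programs" and a round-robin scheduler. It assumes cooperative scheduling, where a program voluntarily hands the CPU back at each `yield` (think of it as pausing for I/O); real systems typically rely on hardware interrupts instead, and the names `program` and `round_robin` are hypothetical.

```python
from collections import deque
from typing import Iterator, List

def program(name: str, steps: int) -> Iterator[str]:
    """A toy program: each yield hands the CPU back to the OS."""
    for i in range(steps):
        yield f"{name}:{i}"

def round_robin(programs: List[Iterator[str]]) -> List[str]:
    """Give each in-progress program one turn on the single CPU, in rotation."""
    ready = deque(programs)
    trace: List[str] = []
    while ready:
        prog = ready.popleft()
        try:
            trace.append(next(prog))  # run the program until it yields
            ready.append(prog)        # it still has work: back of the line
        except StopIteration:
            pass                      # the program finished; drop it
    return trace

if __name__ == "__main__":
    print(round_robin([program("A", 2), program("B", 3)]))
    # ['A:0', 'B:0', 'A:1', 'B:1', 'B:2']
```

Both programs are "in progress" at the same time, even though only one runs at any instant.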

Virtualizing memory locations

With virtual memory, programs can assume their memory always starts at address 0, keeping things simple and consistent. However, the actual physical location in computer memory is hidden and abstracted away by the operating system.

This mechanism allows programs to have flexible memory sizes, called dynamic memory allocation, that appear contiguous to them. It simplifies everything and gives the OS tremendous flexibility in running multiple programs simultaneously.
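One common way to implement virtual memory is paging: the OS keeps a table that maps each virtual page of a program to some physical frame. The Python sketch below assumes paging with a toy page size of 1000 addresses; the text does not say which mechanism any particular OS used, and `AddressSpace`, `allocate_page`, and `PageFault` are hypothetical names.

```python
from typing import Dict

PAGE_SIZE = 1000  # toy page size so translated addresses are easy to read

class PageFault(Exception):
    """Raised when a program uses a virtual address the OS has not mapped."""

class AddressSpace:
    def __init__(self) -> None:
        # page_table[virtual_page] = physical_frame, chosen by the OS
        self.page_table: Dict[int, int] = {}

    def allocate_page(self, frame: int) -> None:
        """Dynamic allocation: extend the program's memory by one page."""
        self.page_table[len(self.page_table)] = frame

    def translate(self, virtual_addr: int) -> int:
        page, offset = divmod(virtual_addr, PAGE_SIZE)
        if virtual_addr < 0 or page not in self.page_table:
            raise PageFault(f"virtual address {virtual_addr} is not mapped")
        return self.page_table[page] * PAGE_SIZE + offset

if __name__ == "__main__":
    a = AddressSpace()
    a.allocate_page(5)  # virtual 0..999     -> physical 5000..5999
    a.allocate_page(2)  # virtual 1000..1999 -> physical 2000..2999
    print(a.translate(0), a.translate(1500))  # 5000 2500
```

The program sees one contiguous range starting at 0, while its pages actually sit in scattered, non-adjacent physical frames.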

Memory protection

Another upside of giving each program its own memory is that it isolates programs from one another. A program can only trash its own memory, not that of other programs, so even if a program goes awry, it will not affect other programs' memory. It also protects against malicious programs trying to read another program's memory (e.g., your email) and steal information.
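A minimal sketch of memory protection in Python, using the simple base-and-limit scheme: each program gets a region of physical memory, and every access is checked against the region's size before it happens. This scheme is swapped in for illustration and is not necessarily what any OS in the text used; `Memory`, `Process`, and `ProtectionFault` are hypothetical names.

```python
from typing import List

class ProtectionFault(Exception):
    """Raised when a program touches memory outside its own region."""

class Memory:
    def __init__(self, size: int) -> None:
        self.cells: List[int] = [0] * size

class Process:
    def __init__(self, memory: Memory, base: int, limit: int) -> None:
        # base: where this program's region starts in physical memory
        # limit: how many cells the program is allowed to use
        self.memory, self.base, self.limit = memory, base, limit

    def _check(self, addr: int) -> int:
        if not 0 <= addr < self.limit:
            raise ProtectionFault(f"address {addr} outside 0..{self.limit - 1}")
        return self.base + addr  # translate to a physical address

    def write(self, addr: int, value: int) -> None:
        self.memory.cells[self._check(addr)] = value

    def read(self, addr: int) -> int:
        return self.memory.cells[self._check(addr)]

if __name__ == "__main__":
    ram = Memory(200)
    email = Process(ram, base=0, limit=100)
    game = Process(ram, base=100, limit=100)
    email.write(0, 42)
    game.write(0, 7)  # lands at physical address 100, not 0
    try:
        game.read(150)  # would reach outside the game's region
    except ProtectionFault as err:
        print("blocked:", err)
```

Both programs use address 0, yet neither can see or overwrite the other's data.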

Evolution of operating systems

By the 1970s, computers were fast and cheap enough that they could not only multitask but also give several users simultaneous, interactive access.

- This was done through a terminal, which is a keyboard and screen that connects to a big computer but doesn't contain any processing power itself.

- A refrigerator-sized computer might have 50 terminals connected to it, allowing up to 50 users.

Time-sharing

With time-sharing, each individual user was only allowed to utilize a small fraction of the computer's processor, memory, and so on.
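To put numbers on "a small fraction", here is a Python sketch that hands out CPU time in fixed slices, cycling through users. It assumes a 10 ms slice and one second of CPU time, both made up for illustration, as is the function name `time_share`. With 50 terminals, each user ends up with 20 ms of every second, or 2% of the processor.

```python
from typing import Dict, List

def time_share(users: List[str], total_ms: int, quantum_ms: int) -> Dict[str, int]:
    """Cycle through users, giving each a fixed CPU slice; return ms per user."""
    if quantum_ms <= 0:
        raise ValueError("quantum_ms must be positive")
    if not users:
        return {}
    received = {u: 0 for u in users}
    elapsed, turn = 0, 0
    while elapsed < total_ms:
        slice_ms = min(quantum_ms, total_ms - elapsed)  # last slice may be shorter
        received[users[turn % len(users)]] += slice_ms
        elapsed += slice_ms
        turn += 1
    return received

if __name__ == "__main__":
    terminals = [f"user{i}" for i in range(50)]
    share = time_share(terminals, total_ms=1000, quantum_ms=10)
    print(share["user0"], share["user49"])  # 20 20
```

Because slices are short, each user's turns come around quickly enough that the machine still feels interactive.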

The most influential of the early time-sharing OSes was Multics, or Multiplexed Information and Computing Service, released in 1969. It was the first major OS designed to be secure from the outset.

Multics could block users without the proper privileges from accessing data. Features like this made it so complicated that it used around 1 megabit of memory, roughly half of the computer's memory, just to run the OS.

This led Dennis Ritchie and Ken Thompson, researchers who had worked on Multics, to build a new, lean operating system called Unix.

UNIX

They wanted to separate the OS into two parts:

The first was the core functionality of the OS, such as memory management, multitasking, and dealing with I/O, which is called the kernel.

The second was a wide array of useful tools that came bundled with, but were not part of, the kernel, such as programs and libraries.

Building a compact, lean kernel meant intentionally leaving some functionality out; Unix left out error recovery code. If there was an error, a routine called 'panic' was called, and the machine crashed.

This simplicity meant that Unix could be run on cheaper and more diverse hardware.

As more developers started using Unix to build and run their own programs, the number of contributed tools grew.

Soon after its release in 1971, it gained compilers for different programming languages, quickly making it one of the most popular OSes of the 1970s and 80s.

Modern operating systems

At the same time, by the early 1980s, the cost of a basic computer had fallen to the point where individual people could afford one, called a personal or home computer.

As these computers were much simpler than the big mainframes found at universities, corporations and governments, their OS had to be equally simple.

MS-DOS

For example, Microsoft's Disk Operating System, or MS-DOS, was just 160 kilobytes, allowing it to fit, as the name suggests, onto a single disk.

First released in 1981, it became the most popular OS for early home computers, even though it lacked multitasking and protected memory. This meant that programs could and would regularly crash the system.

While annoying, it was an acceptable tradeoff, as users could just turn their own computers on and off.

Even early versions of Windows, first released by Microsoft in 1985 and which dominated the OS scene throughout the 1990s, lacked strong memory protection.

When programs misbehaved, you could get the blue screen of death, a sign that a program had crashed so badly that it took down the whole operating system.

Modern versions of OSes (macOS, Windows 10, Linux, iOS, Android) have multitasking and virtual and protected memory.

Vocabulary

- subsequent - following, later (opposite: previous)

- augment - to increase, to enlarge.

- batch - a group of things or people handled together as a set (ex. a batch of graduating students).

- downtime - the time during which machinery or equipment is not operating.

- scurry - to move quickly and hurriedly, often out of fear.

- one-off - (BRIT) one of a kind; made or happening only once.

- peripherals - a computer's external devices; (adj.) on the edge, not central or important.

- intermediary - a person who acts as a link between people in order to try to bring about an agreement or reconciliation; a mediator.

- tiddlywinks - a game in which players flick small discs into a cup.

- awry - away from the appropriate, planned, or expected course; out of the normal or correct position.

- at/from the outset - at/from the beginning

- kernel - the inner part of a nut or seed; the core or essence of something.

Thoughts

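Summarizing this was very hard. While writing it up, I ended up transcribing nearly every line of the video. Still, it was fun and worthwhile to organize the history, concepts, and main functions of operating systems.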