In the early days of computing, processors were made faster by improving the switching time of the transistors inside the chip.

- This approach eventually hit its limits, so chip designers developed various other techniques to boost performance.

Division the classic way: keep subtracting the divisor until what is left is smaller than the divisor (zero, if it divides evenly).

- This takes many clock cycles and is inefficient.

- Processors today have division as one of the instructions that the ALU can perform in hardware.
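To make the cost of the classic approach concrete, here is a minimal Python sketch of division by repeated subtraction. The function name `divide_by_subtraction` is made up for illustration; the point is that the loop runs once per unit of the quotient, while a hardware divide instruction (or Python's built-in `divmod`) gets the answer in one operation.

```python
def divide_by_subtraction(dividend, divisor):
    """Divide non-negative integers by repeated subtraction.

    Returns (quotient, remainder). The loop runs once per unit of the
    quotient, so dividing a large number by a small one takes many steps.
    """
    if dividend < 0 or divisor <= 0:
        raise ValueError("expects dividend >= 0 and divisor > 0")
    quotient = 0
    remainder = dividend
    # Keep subtracting until what is left is smaller than the divisor.
    while remainder >= divisor:
        remainder -= divisor
        quotient += 1
    return quotient, remainder


print(divide_by_subtraction(1000, 7))   # (142, 6) after 142 loop iterations
print(divmod(1000, 7))                  # (142, 6) from a single built-in call
```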

The extra circuitry makes the ALU bigger and more complicated, but this complexity-for-speed tradeoff has been made many times in computing history: more complexity in exchange for faster speed.

Modern processors also have special circuits for operations that would take many more clock cycles if performed with standard instructions:

- graphics operations, decoding compressed video, encrypting files, ...

Once programs rely on an instruction, it is hard to remove it, so instruction sets keep growing over time for backward compatibility.

- Intel 4004 (the first single-chip CPU): 46 instructions

- Modern processors: thousands

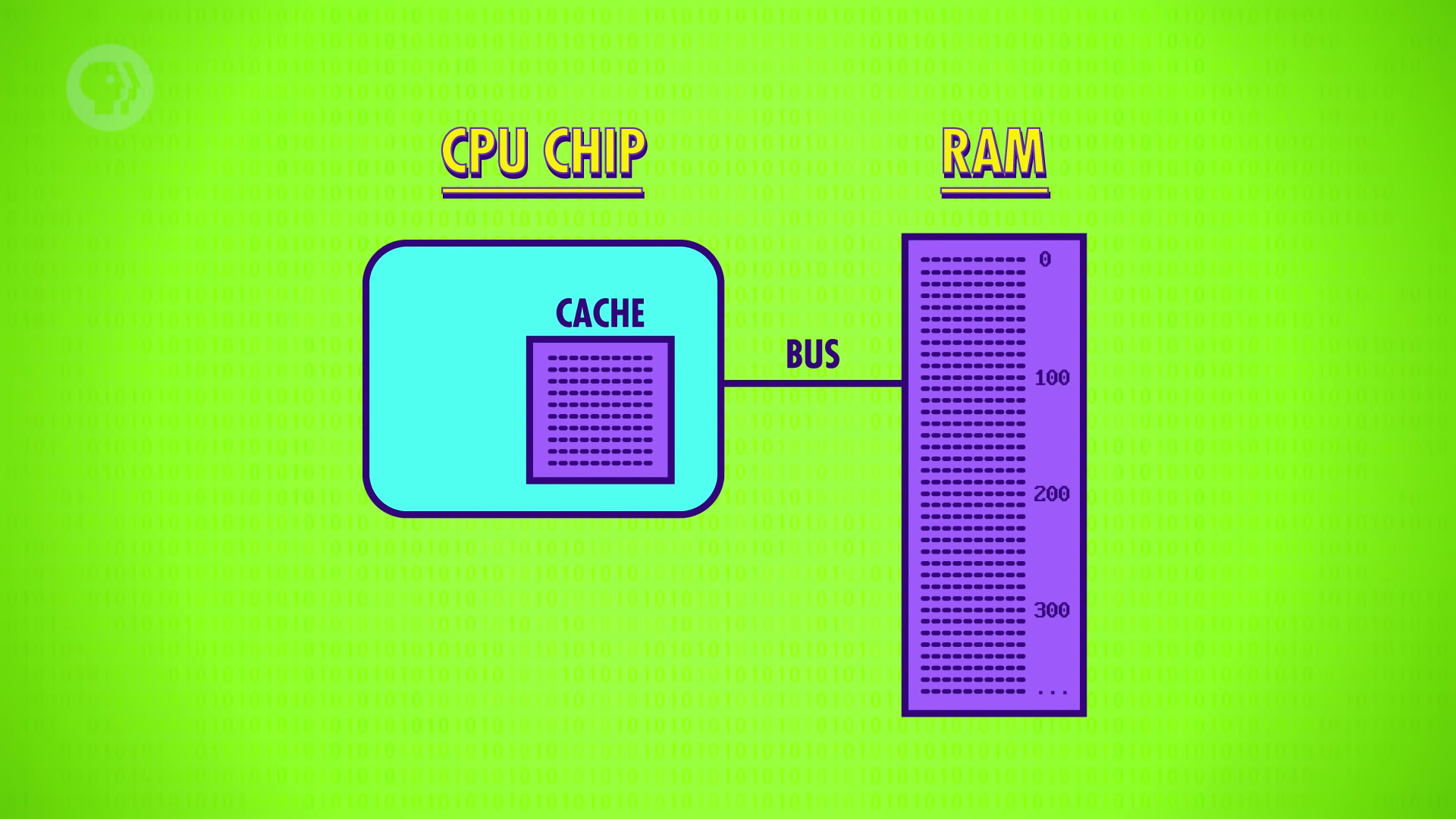

High clock speeds and fancy instruction sets lead to another problem: moving data between the CPU and RAM fast enough to keep the CPU busy.

Cache

To reduce the time spent transferring data between the CPU and RAM, CPUs contain a small amount of RAM on the chip itself: a cache.

- Because space on the chip is limited, a cache holds only kilobytes or megabytes of data.

- Instead of fetching one value at a time, the CPU copies a whole block of data from RAM into the cache.

- Later requests for data in that block can then be served from the cache, which is much faster than going back to RAM.

- This works because computer data is often arranged and processed sequentially (e.g., totaling up daily sales).

Cache Hit - when the data requested from RAM is already stored in the cache.

Cache Miss - when data requested is not stored in the cache.
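To see why copying whole blocks pays off, here is a minimal Python sketch of a cache that counts hits and misses. The block size (8 addresses), capacity (4 blocks), and least-recently-used eviction are assumptions chosen for illustration; real caches are organized differently and are much larger.

```python
from collections import OrderedDict

BLOCK_SIZE = 8      # hypothetical: addresses per block
NUM_BLOCKS = 4      # hypothetical: blocks the cache can hold


def simulate_reads(addresses):
    """Count (hits, misses) for a sequence of RAM addresses."""
    cache = OrderedDict()   # block number -> True, oldest-used block first
    hits = misses = 0
    for addr in addresses:
        block = addr // BLOCK_SIZE          # which block this address lives in
        if block in cache:
            hits += 1
            cache.move_to_end(block)        # mark as most recently used
        else:
            misses += 1
            if len(cache) >= NUM_BLOCKS:
                cache.popitem(last=False)   # evict the least recently used block
            cache[block] = True             # copy the whole block in from RAM
    return hits, misses


# Reading 64 consecutive addresses, like totaling up daily sales in order
print(simulate_reads(range(64)))            # (56, 8)
# Jumping a full block ahead every time defeats the cache
print(simulate_reads(range(0, 512, 8)))     # (0, 64)
```

Sequential access misses only once per block and hits on the other seven addresses, which is exactly the "data is processed sequentially" argument above.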

- The cache can also be used as scratch space (temporary storage), holding intermediate values while performing a longer or more complicated calculation.

Instead of saving a value at address 130 in RAM, the CPU can save it at address 15 of the data block in the cache, which is faster to write and to read back.

- In this situation, the data in the cache and the data in RAM differ, so they need to be synchronized at some point.

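The cache keeps a flag for each block of memory it stores, called the dirty bit, which records whether that block has been changed since it was copied from RAM.

Synchronization usually happens when the cache is full and the processor requests a new block of data from RAM, so an old block has to be evicted to make room.

- If the evicted block's dirty bit is set, the block is written back to RAM before the new block is loaded.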
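The dirty-bit idea can be sketched in Python as a tiny write-back cache. The `WriteBackCache` class, its 4-address blocks, 2-block capacity, and least-recently-used eviction are hypothetical choices for illustration: writes only touch the cached copy and set the dirty bit, and RAM is updated only when a dirty block is evicted.

```python
from collections import OrderedDict

BLOCK_SIZE = 4   # hypothetical: addresses per block
NUM_BLOCKS = 2   # hypothetical: tiny cache, so evictions happen quickly


class WriteBackCache:
    """Sketch of a cache that delays writes to RAM using a dirty bit per block."""

    def __init__(self, ram):
        self.ram = ram                    # list standing in for main memory
        self.blocks = OrderedDict()       # block number -> [data list, dirty flag]
        self.ram_writes = 0               # how many blocks were written back

    def _load(self, block):
        if block in self.blocks:
            self.blocks.move_to_end(block)        # mark as most recently used
            return self.blocks[block]
        if len(self.blocks) >= NUM_BLOCKS:
            old_block, (data, dirty) = self.blocks.popitem(last=False)
            if dirty:                             # sync only blocks that changed
                start = old_block * BLOCK_SIZE
                self.ram[start:start + BLOCK_SIZE] = data
                self.ram_writes += 1
        start = block * BLOCK_SIZE
        entry = [self.ram[start:start + BLOCK_SIZE], False]  # fresh copy is clean
        self.blocks[block] = entry
        return entry

    def read(self, addr):
        data, _ = self._load(addr // BLOCK_SIZE)
        return data[addr % BLOCK_SIZE]

    def write(self, addr, value):
        entry = self._load(addr // BLOCK_SIZE)
        entry[0][addr % BLOCK_SIZE] = value       # update only the cached copy
        entry[1] = True                           # set the dirty bit


ram = list(range(16))        # RAM holds the values 0..15
cache = WriteBackCache(ram)
cache.write(1, 99)           # block 0 is now dirty; RAM is untouched
print(ram[1])                # 1
cache.read(5)                # loads block 1
cache.read(9)                # loads block 2, evicting dirty block 0
print(ram[1], cache.ram_writes)   # 99 1
```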

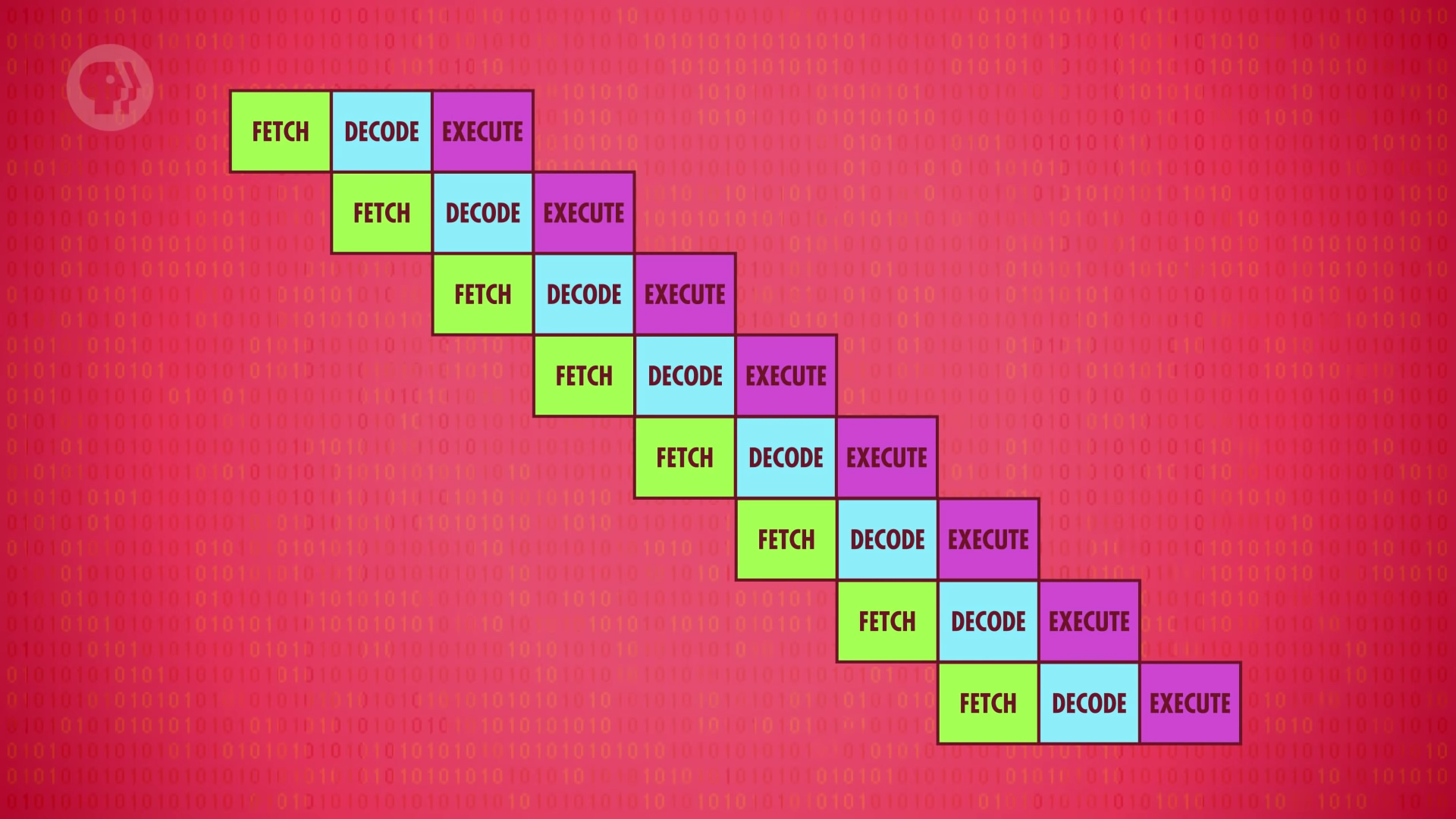

Instruction Pipelining

- Overlap the steps of different instructions (fetch, decode, execute) so that every part of the CPU is busy at the same time.
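A rough way to see the benefit is to count cycles. This Python sketch assumes a three-stage pipeline (fetch, decode, execute), one cycle per stage, and no hazards; the function names are made up for illustration.

```python
STAGES = ["fetch", "decode", "execute"]


def cycles_without_pipeline(n):
    # Each instruction runs all stages before the next one starts.
    return n * len(STAGES)


def cycles_with_pipeline(n):
    # The first instruction fills the pipeline; after that, one finishes per cycle.
    return len(STAGES) + (n - 1) if n > 0 else 0


def pipeline_table(n):
    """Return one row per cycle listing which instruction is in each stage."""
    rows = []
    for cycle in range(cycles_with_pipeline(n)):
        row = []
        for stage_index in range(len(STAGES)):
            instr = cycle - stage_index        # instruction occupying this stage
            row.append(f"I{instr}" if 0 <= instr < n else "--")
        rows.append(row)
    return rows


print(cycles_without_pipeline(4), cycles_with_pipeline(4))   # 12 6
for cycle, row in enumerate(pipeline_table(4)):
    print(cycle, row)
```

From cycle 2 onward every stage holds a different instruction, which is the "every part of the CPU busy at once" idea.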

Hazard 1 - dependencies between instructions

- An instruction might fetch data that an earlier instruction, still in the pipeline, is about to modify.

- To compensate for this, pipelined processors have to look ahead for data dependencies, and if necessary, stall their pipelines to avoid problems.

- High-end processors (like those in laptops and smartphones) use out-of-order execution:

- Dynamically reorder instructions with dependencies to minimize stalls and keep the pipeline moving.
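The stall-versus-reorder idea can be sketched in Python with a deliberately simplified rule, which is an assumption and not how any specific CPU works: if an instruction reads the register written by the instruction right before it, the pipeline loses one cycle. Out-of-order hardware does the reordering dynamically; here it is done by hand just to compare stall counts. The instructions are made-up pseudo-assembly.

```python
def count_stalls(program):
    """Count stall cycles under a hypothetical 1-cycle penalty.

    program: list of (text, dest_register, source_registers).
    Assumed rule: if an instruction reads the register written by the
    instruction right before it, the pipeline waits one extra cycle.
    """
    stalls = 0
    for prev, curr in zip(program, program[1:]):
        _, prev_dest, _ = prev
        _, _, curr_sources = curr
        if prev_dest in curr_sources:
            stalls += 1
    return stalls


in_order = [
    ("LOAD A <- mem[10]", "A", ()),
    ("ADD  A <- A + 1", "A", ("A",)),     # needs A from the LOAD just before it
    ("LOAD B <- mem[11]", "B", ()),
    ("ADD  B <- B + 1", "B", ("B",)),     # needs B from the LOAD just before it
]

# Same work, reordered so each dependent ADD is separated from its LOAD.
# Safe here because the A-instructions and B-instructions never touch each
# other's registers, so swapping them cannot change the results.
reordered = [in_order[0], in_order[2], in_order[1], in_order[3]]

print(count_stalls(in_order))    # 2
print(count_stalls(reordered))   # 0
```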

Hazard 2 - conditional jump instructions

- A simple processor stalls when it reaches a conditional jump, waiting for the condition's value to be finalized before it knows which instructions come next. This causes delays.

- High-end processors use speculative execution:

- Guess which way the jump will go and start filling the pipeline with instructions based on that guess.

- If the guess is wrong, discard all of the speculative results and perform a pipeline flush: a U-turn.

- Making a sophisticated guess about which way to go is called branch prediction.

- Modern processors can guess with over 90% accuracy.
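One classic branch-prediction scheme is a 2-bit saturating counter, which only flips its guess after two wrong predictions in a row. This Python sketch simulates it for a single branch; the starting state is an assumption, and modern processors use far more elaborate predictors than this.

```python
def predict_branches(outcomes):
    """Simulate a 2-bit saturating-counter predictor for one branch.

    outcomes: list of booleans, True meaning the jump was taken.
    Counter values 0-1 predict "not taken", 2-3 predict "taken".
    Returns the fraction of correct predictions.
    """
    if not outcomes:
        return 0.0
    counter = 2          # assumed start: weakly "taken"
    correct = 0
    for taken in outcomes:
        prediction = counter >= 2
        if prediction == taken:
            correct += 1
        # Nudge the counter toward what actually happened, staying in 0..3.
        if taken:
            counter = min(counter + 1, 3)
        else:
            counter = max(counter - 1, 0)
    return correct / len(outcomes)


# A loop of 10 iterations, run 10 times: the jump back to the top is taken
# 9 times, then not taken once when the loop exits.
loop_branch = ([True] * 9 + [False]) * 10
print(predict_branches(loop_branch))   # 0.9
```

The single loop exit does not flip the prediction, so the next run of the loop starts with correct guesses again.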

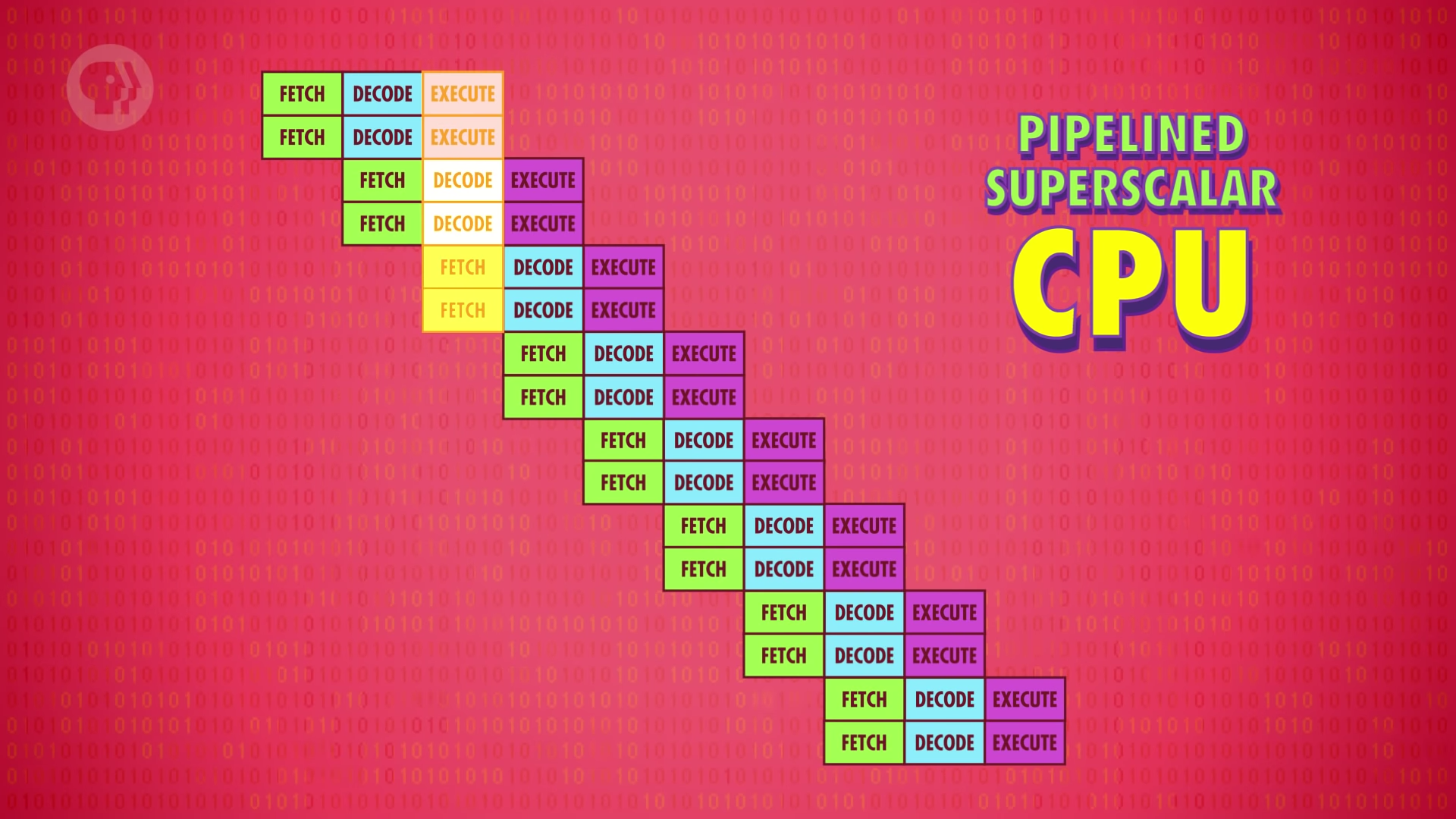

Superscalar Processor

- Can execute more than one instruction per cycle.

- Fetch and decode several instructions at once.

- Many processors have several identical ALUs so they can execute many mathematical instructions in parallel.
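A simplified Python sketch of superscalar issue, assuming in-order issue of up to `width` instructions per cycle (think of `width` as the number of identical ALUs) and a rule that an instruction cannot issue in the same cycle as one whose result it needs. The register names and the `issue_cycles` function are hypothetical.

```python
def issue_cycles(program, width):
    """Count cycles to issue a program on an in-order superscalar core.

    program: list of (dest_register, source_registers).
    Assumptions: up to `width` instructions issue per cycle, in program
    order, and an instruction cannot issue in the same cycle as one whose
    result it reads; it waits for the next cycle instead.
    """
    cycles = 0
    i = 0
    while i < len(program):
        cycles += 1
        written_this_cycle = set()
        issued = 0
        while i < len(program) and issued < width:
            dest, sources = program[i]
            if written_this_cycle & set(sources):
                break                  # dependency: wait for the next cycle
            written_this_cycle.add(dest)
            issued += 1
            i += 1
    return cycles


independent = [("A", ()), ("B", ()), ("C", ()), ("D", ())]
chain = [("A", ()), ("B", ("A",)), ("C", ("B",)), ("D", ("C",))]

print(issue_cycles(independent, 1), issue_cycles(independent, 2))   # 4 2
print(issue_cycles(chain, 2))   # 4
```

Extra ALUs only help when neighboring instructions are independent; a chain of dependent instructions gains nothing from the wider core.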

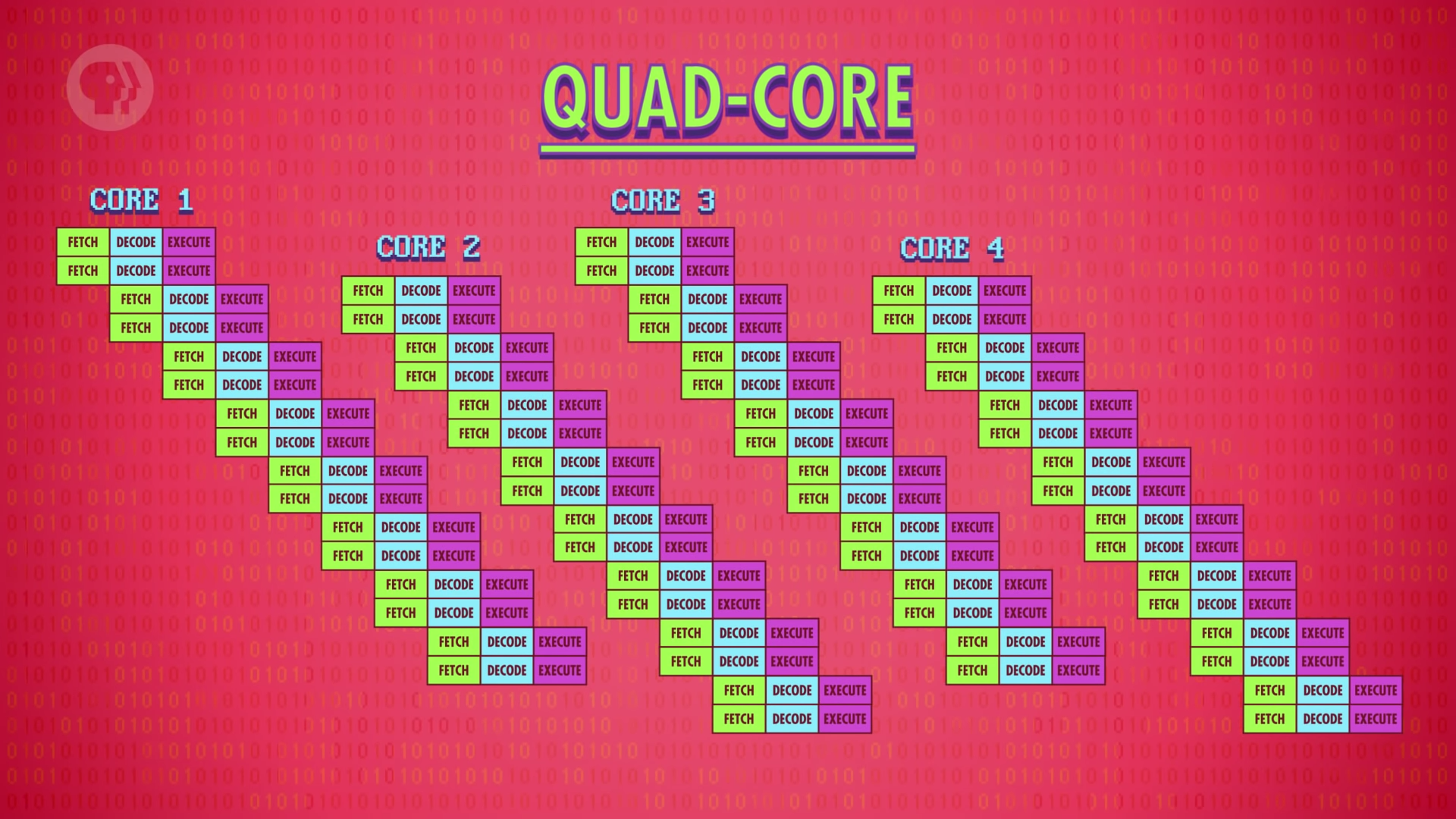

Multi-Core Processor

- Run several streams of instructions at once.

- Multiple independent processing units inside of a single CPU chip.

- Cores are tightly integrated, sharing resources like cache to work together on shared computations.
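Multi-core processors speed up work that can be split into independent streams whose results are combined at the end. This Python sketch uses `concurrent.futures.ThreadPoolExecutor` to show that structure only: in CPython, the global interpreter lock keeps threads running pure-Python code like this from executing on several cores at once, so for real CPU parallelism `ProcessPoolExecutor` (same interface) would be the usual choice. The chunking scheme and function names are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum(chunk):
    # Each worker handles its own independent slice of the data.
    return sum(chunk)


def parallel_sum(values, workers=4):
    """Split `values` into up to `workers` chunks, sum them concurrently, combine."""
    size = max(1, -(-len(values) // workers))   # ceiling division
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum, chunks))


daily_sales = list(range(1, 1001))
print(parallel_sum(daily_sales))   # 500500
```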

Multi-Processor

High-end computers, like YouTube's servers, need several CPUs to handle their traffic.

Supercomputer: Sunway TaihuLight

- 40,960 CPUs, each with 256 cores (over 10,000,000 cores in total).

- 93 quadrillion (9.3 × 10^16) floating-point math operations per second (FLOPS).
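A quick check of the figures above, using only simple arithmetic on the numbers as given (the per-core rate is an average derived here, not a published spec):

$$
40{,}960 \times 256 = 10{,}485{,}760 \text{ cores}
$$

$$
\frac{9.3 \times 10^{16}\ \text{FLOPS}}{10{,}485{,}760\ \text{cores}} \approx 8.9 \times 10^{9}\ \text{FLOPS per core}
$$

So on average each core contributes roughly 9 billion floating-point operations per second.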

English Expressions

- go so far - to reach an unexpected extent in doing something

- gobble up - to consume something rapidly

- cache - a quantity of things, such as weapons, that have been hidden (a hidden store)

- stall - avoid doing something until later

- out of order - broken

- fork (in a road) - a point where a road splits into two

- high-end - High-end products, especially electronic products, are the most expensive of their kind.

Thoughts

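- This episode is extremely dense. I had to split my notes across several sessions.

- CPUs keep getting more and more complex, because techniques have kept piling up over time.

- There was so much content that it took a long time, and I wonder if I'm trying to cram in too much. Maybe writing my summaries in English is making them a bit weaker? Then again, since every single topic was new to me, it feels like I had no choice but to include it all...

- The next episode is about programming. I can't wait...!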