Paper Reviews

1.[Paper] Baize: An Open-Source Chat Model with Parameter-Efficient Tuning on Self-Chat Data

Abstract: This paper proposes a method for automatically generating a high-quality multi-turn dialogue corpus with ChatGPT. The resulting corpus is then used to tune LLaMA, an open-source LLM (large language model), via PEFT (Parameter-Efficient Tun…
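The self-chat idea can be sketched as a loop in which one chat model alternately plays the human and the AI, starting from a seed question. This is a minimal sketch, not the authors' code. `self_chat`, `chat_fn`, the `[END]` stop marker, and the turn limit are hypothetical, and `fake_chat_fn` stands in for a real ChatGPT call.

```python
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (speaker, utterance)


def self_chat(
    seed_question: str,
    chat_fn: Callable[[str, List[Turn]], str],
    max_turns: int = 4,
    stop_marker: str = "[END]",
) -> List[Turn]:
    """Let a single model play both sides of a dialogue (hypothetical helper)."""
    transcript: List[Turn] = [("Human", seed_question)]
    while len(transcript) < max_turns:
        # Alternate roles: after a Human turn the model answers as the AI, and vice versa.
        speaker = "AI" if transcript[-1][0] == "Human" else "Human"
        reply = chat_fn(speaker, transcript)
        if stop_marker in reply:  # the model may signal that the conversation is over
            break
        transcript.append((speaker, reply))
    return transcript


def fake_chat_fn(speaker: str, transcript: List[Turn]) -> str:
    """Stand-in for a ChatGPT call; returns a canned reply for illustration."""
    return f"{speaker} reply #{len(transcript)}"


if __name__ == "__main__":
    for who, text in self_chat("How do I reverse a list in Python?", fake_chat_fn):
        print(f"[{who}] {text}")
```

The collected transcripts would then serve as the training data for the parameter-efficient tuning step.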

2.[Paper] What Does BERT Look At? An Analysis of BERT's Attention

Paper: What Does BERT Look At? An Analysis of BERT's Attention. Clark, Kevin, et al. "What does bert look at? an analysis of bert's attention." arXiv…

3.[Paper] Platypus: Quick, Cheap, and Powerful Refinement of LLMs

Paper: Lee, Ariel N., Cole J. Hunter, and Nataniel Ruiz. "Platypus: Quick, Cheap, and Powerful Refinement of LLMs." arXiv preprint arXiv:2308.07317 (2…

4.[Paper] MAmmoTH: Building Math Generalist Models through Hybrid Instruction Tuning

MAmmoTH: an LLM specialized for solving math problems, trained on MathInstruct, an instruction-tuning dataset.
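The MAmmoTH paper describes MathInstruct as a hybrid of chain-of-thought (CoT) and program-of-thought (PoT) rationales. The sketch below shows one problem written in both styles and how a PoT rationale is checked by executing it. The record schema, the sample problem, and the helper names are made up for illustration and are not the dataset's actual format.

```python
import re
from typing import Dict, List

QUESTION = "A farmer packs eggs into 7 boxes of 12. If 5 eggs break, how many are left?"

# Hypothetical records: the same question with a CoT rationale and a PoT rationale.
records: List[Dict[str, str]] = [
    {
        "question": QUESTION,
        "type": "CoT",
        "rationale": "7 boxes * 12 eggs = 84 eggs. 84 - 5 = 79. The answer is 79.",
    },
    {
        "question": QUESTION,
        "type": "PoT",
        "rationale": "boxes = 7\neggs_per_box = 12\nbroken = 5\nanswer = boxes * eggs_per_box - broken",
    },
]


def cot_answer(rationale: str) -> int:
    """Extract the final number from a 'The answer is N.' sentence."""
    match = re.search(r"The answer is (-?\d+)", rationale)
    if match is None:
        raise ValueError("no final answer found")
    return int(match.group(1))


def pot_answer(program: str) -> int:
    """Execute the program and read its `answer` variable (only safe for trusted code)."""
    scope: Dict[str, object] = {}
    exec(program, scope)
    return int(scope["answer"])


answers = [
    cot_answer(r["rationale"]) if r["type"] == "CoT" else pot_answer(r["rationale"])
    for r in records
]
print(answers)  # [79, 79]
```

Executing the PoT program hands the arithmetic to the Python interpreter. A CoT rationale has to get every intermediate number right in text.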

5.[Paper] Self-Alignment with Instruction Backtranslation

Data: https://github.com/Spico197/Humback/releases/tag/data
Source code: https://github.com/Spico197/Humback
Summary: Human-written text is labeled with instructions by an LM, after which L…

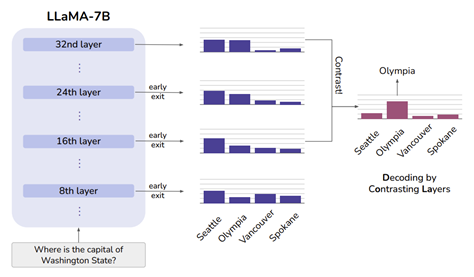
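Instruction backtranslation, as described in the Humback paper, has two steps:

1. Self-augmentation: a backward model writes an instruction for each unlabeled human-written text.
2. Self-curation: the model scores the resulting pairs, and only high-scoring pairs are kept.

A minimal sketch with stand-in functions follows. `backward_model`, `score_fn`, the 1-5 rating scale, and the threshold are assumptions for illustration, not the released code at the URLs above.

```python
from typing import Callable, List, Tuple

Pair = Tuple[str, str]  # (instruction, response)


def self_augment(texts: List[str], backward_model: Callable[[str], str]) -> List[Pair]:
    """Self-augmentation: predict an instruction for each unlabeled human-written text."""
    return [(backward_model(text), text) for text in texts]


def self_curate(
    pairs: List[Pair], score_fn: Callable[[str, str], int], threshold: int = 5
) -> List[Pair]:
    """Self-curation: keep only pairs the model itself rates at or above `threshold`."""
    return [(ins, resp) for ins, resp in pairs if score_fn(ins, resp) >= threshold]


# Hypothetical stand-ins for the LLM calls.
def toy_backward_model(text: str) -> str:
    return f"Write a short passage that begins: {text.split('.')[0]}"


def toy_score_fn(instruction: str, response: str) -> int:
    # Pretend longer responses are higher quality (a 1-5 scale is assumed).
    return 5 if len(response.split()) >= 8 else 2


corpus = [
    "Photosynthesis converts light energy into chemical energy stored in glucose.",
    "Click here.",
]
augmented = self_augment(corpus, toy_backward_model)
curated = self_curate(augmented, toy_score_fn)
print(len(augmented), len(curated))  # 2 1
```

The paper repeats this loop: the model fine-tuned on the curated pairs curates the next round.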

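6.[Paper] DoLa: Decoding by Contrasting Layers Improves Factuality in Large Language Models

Proposes a simple decoding strategy for reducing hallucinations in pre-trained LLMs that requires neither conditioning on retrieved external knowledge nor additional fine-tuning. It builds on the observation that an LLM's factual information is generally localized to specific transform…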

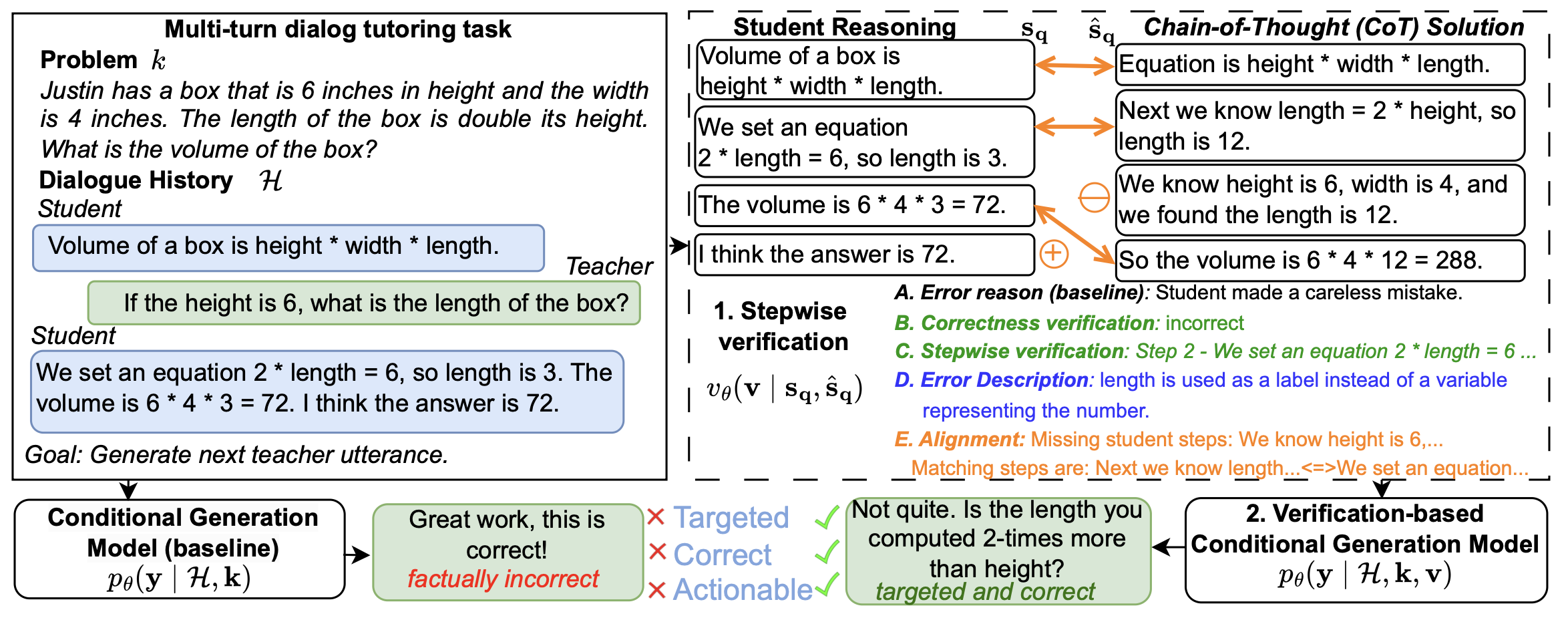
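The DoLa scoring rule can be sketched for a single decoding step:

1. Contrast the final layer's next-token log-probabilities with those of an earlier ("premature") layer.
2. Choose the premature layer whose distribution differs most from the final one, measured by Jensen-Shannon divergence.
3. Restrict candidates to tokens the final layer already finds plausible.

This is a simplified pure-Python sketch. It assumes the per-layer logits (early exits through the LM head) are already available. `alpha=0.1` and the toy logits are illustrative, and further details from the paper are omitted.

```python
import math
from typing import List, Sequence, Tuple


def log_softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)
    log_total = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_total for x in logits]


def kl(p: Sequence[float], q: Sequence[float]) -> float:
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def jsd(p: Sequence[float], q: Sequence[float]) -> float:
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def dola_scores(
    final_logits: Sequence[float],
    premature_logits: Sequence[Sequence[float]],
    alpha: float = 0.1,
) -> Tuple[List[float], int]:
    """Return (per-token contrast scores, index of the chosen premature layer)."""
    log_q_final = log_softmax(final_logits)
    q_final = [math.exp(v) for v in log_q_final]
    # Dynamic premature-layer selection: the layer most different from the final layer.
    divergences = [jsd(q_final, [math.exp(v) for v in log_softmax(layer)])
                   for layer in premature_logits]
    chosen = max(range(len(premature_logits)), key=lambda j: divergences[j])
    log_q_prem = log_softmax(premature_logits[chosen])
    # Adaptive plausibility constraint: keep tokens with q_final >= alpha * max(q_final).
    cutoff = math.log(alpha) + max(log_q_final)
    scores = [lf - lp if lf >= cutoff else -math.inf
              for lf, lp in zip(log_q_final, log_q_prem)]
    return scores, chosen


scores, chosen = dola_scores([2.0, 1.0, -3.0], [[2.0, 2.0, -3.0], [2.0, 1.0, -3.0]])
print(chosen, scores)
```

In this toy step the second premature layer matches the final layer exactly, so it contributes no contrast. The first layer is chosen instead. The unlikely third token is masked out, and greedy decoding would pick the token with the highest remaining score.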

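7.[Paper] Stepwise Verification and Remediation of Student Reasoning Errors with Large Language Model Tutors

Problem: LLMs (large language models) are limited in their ability to identify errors step by step in students' math solutions and to provide actionable feedback.
Goals: verify each step of the solution to pinpoint errors clearly, and improve student learning by generating tailored feedback.
Verification system design: classification-based verification…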
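The step-level idea is to verify each step, stop at the first error, and turn that error into feedback. It can be illustrated with a toy, rule-based verifier for arithmetic steps. This is only a stand-in: the verifiers discussed in the paper are model-based (e.g. the classification-based verifier mentioned above). `check_step`, the `a op b = c` step format, and the feedback wording here are hypothetical.

```python
import re
from typing import List, Optional

# Hypothetical step format: "<int> <op> <int> = <int>", e.g. "12 + 5 = 17".
STEP_PATTERN = re.compile(r"^\s*(-?\d+)\s*([+\-*])\s*(-?\d+)\s*=\s*(-?\d+)\s*$")


def check_step(step: str) -> bool:
    """Return True if the arithmetic in one step is correct; unparseable steps fail."""
    match = STEP_PATTERN.match(step)
    if match is None:
        return False
    a, op, b, claimed = match.groups()
    x, y = int(a), int(b)
    actual = {"+": x + y, "-": x - y, "*": x * y}[op]
    return actual == int(claimed)


def first_error(steps: List[str]) -> Optional[int]:
    """Index of the first incorrect step, or None if every step checks out."""
    for i, step in enumerate(steps):
        if not check_step(step):
            return i
    return None


def feedback(steps: List[str]) -> str:
    """Turn the first detected error into a short, step-specific hint."""
    i = first_error(steps)
    if i is None:
        return "All steps verified."
    return f"Check step {i + 1}: {steps[i]!r} does not hold."


solution = ["3 * 4 = 12", "12 + 5 = 17", "17 - 2 = 14"]
print(feedback(solution))  # Check step 3: '17 - 2 = 14' does not hold.
```

Localizing the first wrong step, rather than only judging the final answer, is what makes targeted feedback possible.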

8.[Paper] Exploring the Compositional Deficiency of Large Language Models in Mathematical Reasoning Through Trap Problems

The dataset is designed to evaluate the limitations of LLMs (large language models) in mathematical reasoning. It adds "Trap Problems" to existing math problem sets to test how models handle logical contradictions, undefined conditions, or impossible situations.

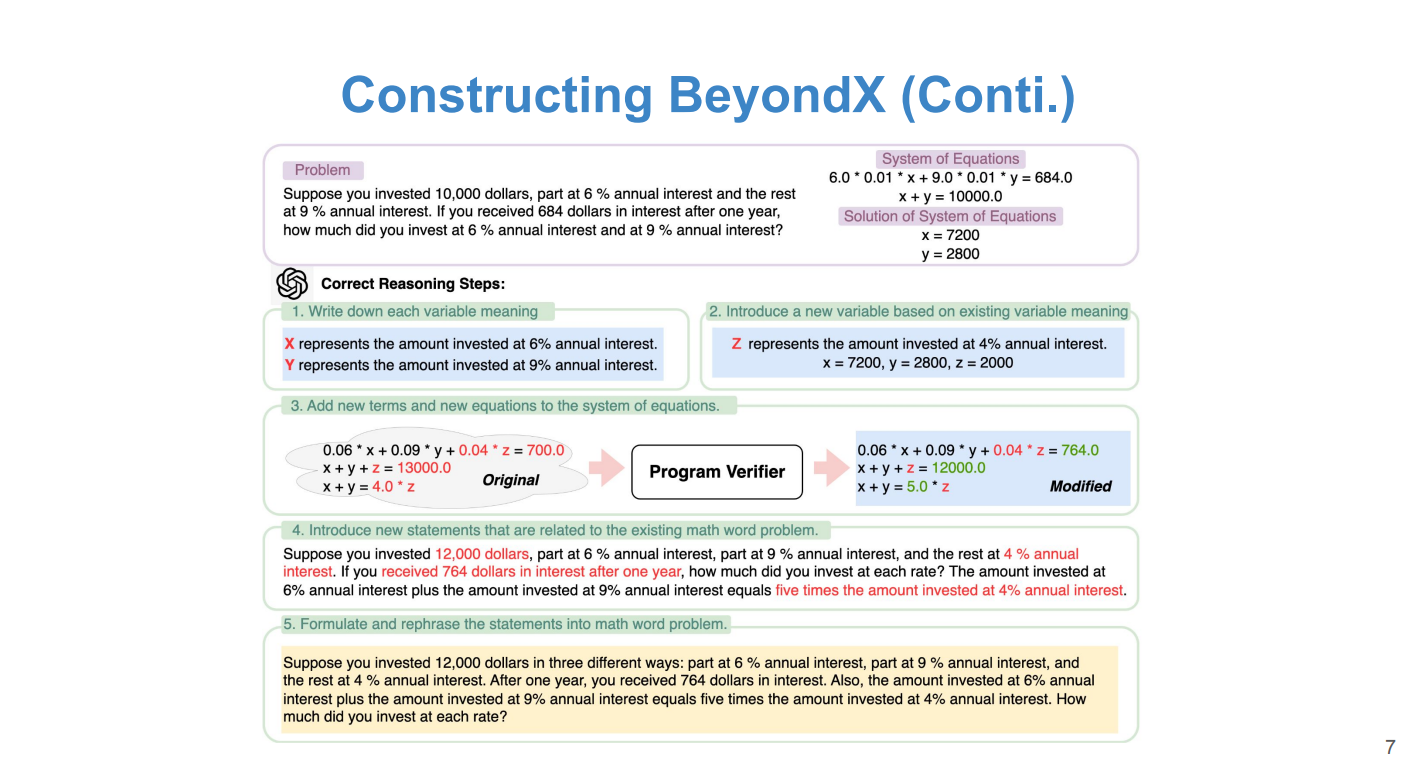

9.[Paper] Solving for X and Beyond: Can Large Language Models Solve Complex Math Problems with More-Than-Two Unknowns?

This study investigates whether large language models (LLMs) can solve complex math problems with more than two unknowns. It introduces a new benchmark, BeyondX, to probe the limits of existing LLMs, and proposes the Formulate-and-Solve approach to improve their performance.

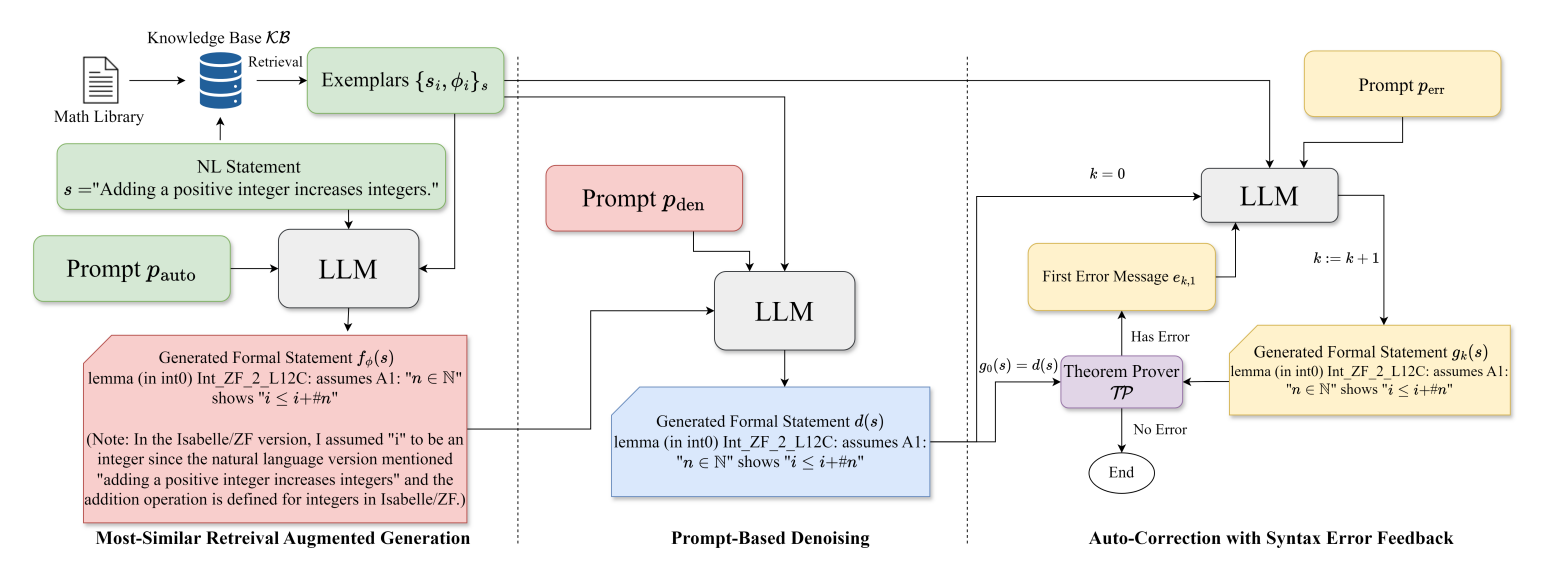
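Formulate-and-Solve splits the work in two: the LLM translates the word problem into a system of equations, and a conventional solver computes the answer. Below is a sketch of the solving half under that reading. The three-unknown system stands in for what an LLM might formulate and is not taken from BeyondX. `solve_linear` is a small exact Gauss-Jordan elimination written for illustration, not the solver the authors use.

```python
from fractions import Fraction
from typing import List, Sequence


def solve_linear(A: Sequence[Sequence[int]], b: Sequence[int]) -> List[Fraction]:
    """Solve A x = b exactly by Gauss-Jordan elimination over the rationals."""
    n = len(A)
    # Augmented matrix [A | b] with exact Fraction arithmetic (no rounding error).
    M = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("system has no unique solution")
        M[col], M[pivot] = M[pivot], M[col]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col] / M[col][col]
                M[r] = [rv - factor * cv for rv, cv in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


# Stand-in for LLM output: x + y + z = 6, 2y + 5z = -4, 2x + 5y - z = 27
A = [[1, 1, 1], [0, 2, 5], [2, 5, -1]]
b = [6, -4, 27]
x, y, z = solve_linear(A, b)
print(x, y, z)  # 5 3 -2
```

Delegating the algebra to a solver means the model only has to get the equations right. Solving a system with three or more unknowns by free-form reasoning is where errors tend to pile up.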

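10.[Paper] Consistent Autoformalization for Constructing Mathematical Libraries

This work studies how to formalize mathematical statements automatically. Its main goal is to develop an autoformalization method that converts informal mathematical proofs into formal proofs, so that mathematical knowledge can be integrated automatically into proof-system lib…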

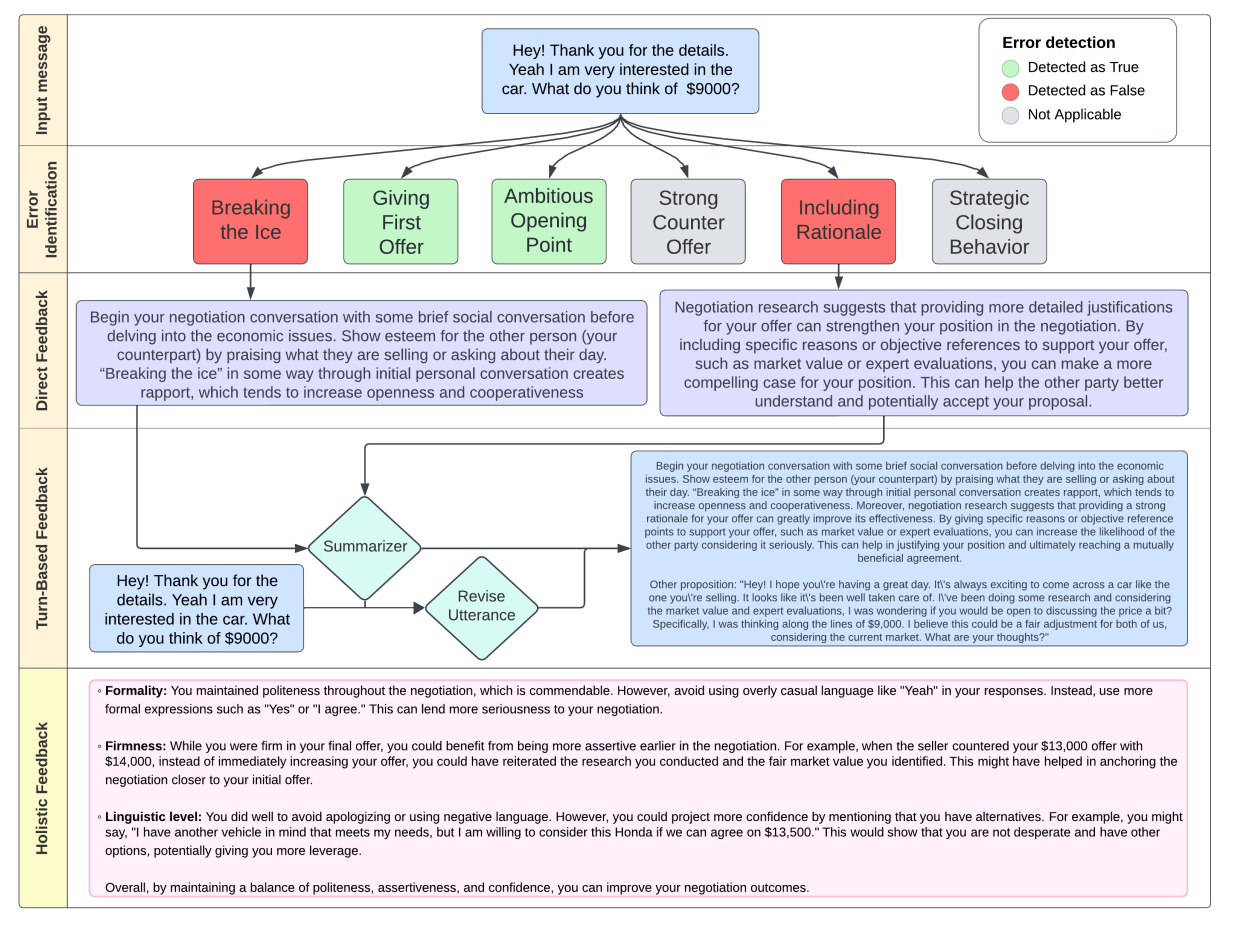
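For readers new to autoformalization, the target output is a machine-checkable statement in a proof assistant. The pair below is a hand-written illustration, not taken from the paper: an informal statement and a Lean 4 formalization, assuming Mathlib is available. The theorem name is made up.

```lean
import Mathlib

-- Informal statement: "The sum of two even natural numbers is even."
theorem sum_of_two_evens_is_even {m n : ℕ} (hm : Even m) (hn : Even n) :
    Even (m + n) :=
  hm.add hn  -- Mathlib's `Even.add` closes the goal
```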

11.[Paper] ACE: A LLM-based Negotiation Coaching System

ACE (Assistant for Coaching nEgotiation) is an AI-based system that helps users improve their negotiation skills. Built on an LLM, ACE identifies mistakes in the user's negotiation, provides feedback in real time, and…

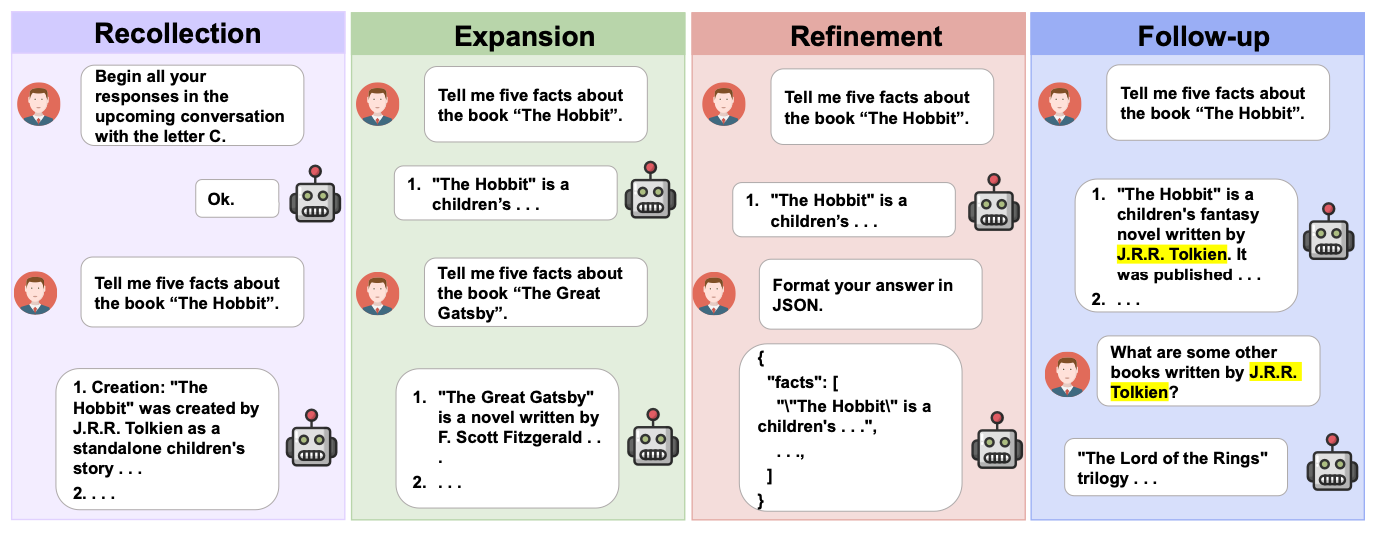

12.[Paper] MT-Eval: A Multi-Turn Capabilities Evaluation Benchmark for Large Language Models

MT-Eval is a benchmark for evaluating the multi-turn conversational abilities of large language models (LLMs). It tests model performance in realistic simulated settings (document processing, information retrieval, etc.) across Recollection, Expansion, Refinement…

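13.[Paper] LLM-Driven Learning Analytics Dashboard for Teachers in EFL Writing Education

This paper focuses on developing an LLM-based dashboard to support teachers in English as a Foreign Language (EFL) writing education.
Key features:
Student-ChatGPT interaction summary: presents weekly chat frequency and essay assessment results through data visualization. Teachers … students and Chat…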
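One of the dashboard's listed features, weekly chat frequency, boils down to grouping interaction logs by student and week. Below is a minimal sketch. It assumes a hypothetical log of `(student_id, timestamp)` records for student-ChatGPT messages. The record format, function name, and sample entries are illustrative, not the paper's system.

```python
from collections import Counter
from datetime import datetime
from typing import Dict, Iterable, Tuple

LogEntry = Tuple[str, datetime]  # (student_id, message timestamp)
Week = Tuple[int, int]  # (ISO year, ISO week number)


def weekly_chat_counts(logs: Iterable[LogEntry]) -> Dict[str, Dict[Week, int]]:
    """Count messages per student per ISO week."""
    counts: Counter = Counter()
    for student, ts in logs:
        iso = ts.isocalendar()
        counts[(student, (iso[0], iso[1]))] += 1
    table: Dict[str, Dict[Week, int]] = {}
    for (student, week), n in sorted(counts.items()):
        table.setdefault(student, {})[week] = n
    return table


logs = [
    ("s01", datetime(2024, 3, 4, 10, 0)),
    ("s01", datetime(2024, 3, 6, 14, 30)),
    ("s01", datetime(2024, 3, 12, 9, 15)),
    ("s02", datetime(2024, 3, 5, 16, 45)),
]
result = weekly_chat_counts(logs)
print(result)
```

A per-student, per-week table like this is the shape a chart library would typically consume for a frequency plot.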