Continuing from my last post, this time I'm going to generate the training data.

Training a deep-learning AI for hand gesture recognition (손 제스처 인식 딥러닝 인공지능 학습시키기): the video I followed along with.

Development environment setup

- Install mediapipe
  - In cmd: `pip install mediapipe`


- Install tensorflow-gpu: the blog I referenced
  - GPU: NVIDIA GeForce GTX 1660 SUPER
  - Install Python (3.8)
  - Set the Python environment variables
  - Install CUDA (11.5)
  - Install cuDNN (8.3.2)
  - Install TensorFlow (2.4.0)
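After installing all of this, it's worth confirming which versions the Python that runs the script actually sees, and whether TensorFlow can use the GPU at all. Here's a minimal sketch of my own (not from the video or the blog); `installed_versions` and `visible_gpus` are hypothetical helper names. `tf.config.list_physical_devices("GPU")` is the TensorFlow 2.x call for listing GPUs. An empty list means TensorFlow is running on the CPU only, which usually means the CUDA/cuDNN libraries could not be loaded or the installed build has no GPU support.

```python
import importlib

# Import names of the packages used in this post ("cv2" is the import name of OpenCV)
PACKAGES = ("mediapipe", "cv2", "numpy", "tensorflow")


def installed_versions(names=PACKAGES):
    """Map each import name to its __version__ ("unknown" if absent), or None if not importable."""
    versions = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            versions[name] = None
        else:
            versions[name] = getattr(module, "__version__", "unknown")
    return versions


def visible_gpus():
    """Names of the GPUs TensorFlow can use; empty if TensorFlow is missing or CPU-only."""
    try:
        import tensorflow as tf
    except ImportError:
        return []
    return [gpu.name for gpu in tf.config.list_physical_devices("GPU")]


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name}: {version or 'not installed'}")
    print("GPUs:", visible_gpus() or "none visible to TensorFlow")
```

Running this in the same cmd window you use for the project avoids the classic trap of checking one Python install while running the script with another.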

Generating the data (create_dataset.py)

import cv2

import mediapipe as mp

import numpy as np

import time, os

actions = ['a', 'b', 'c']

seq_length = 30

secs_for_action = 30

# MediaPipe hands model

mp_hands = mp.solutions.hands

mp_drawing = mp.solutions.drawing_utils

hands = mp_hands.Hands(

max_num_hands=1,

min_detection_confidence=0.5,

min_tracking_confidence=0.5)

cap = cv2.VideoCapture(0)

created_time = int(time.time())

os.makedirs('dataset', exist_ok=True)

while cap.isOpened():

for idx, action in enumerate(actions):

data = []

ret, img = cap.read()

img = cv2.flip(img, 1)

cv2.putText(img, f'Waiting for collecting {action.upper()} action...', org=(10, 30), fontFace=cv2.FONT_HERSHEY_SIMPLEX, fontScale=1, color=(255, 255, 255), thickness=2)

cv2.imshow('img', img)

cv2.waitKey(3000)

start_time = time.time()

while time.time() - start_time < secs_for_action:

ret, img = cap.read()

img = cv2.flip(img, 1)

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

result = hands.process(img)

img = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)

if result.multi_hand_landmarks is not None:

for res in result.multi_hand_landmarks:

joint = np.zeros((21, 4))

for j, lm in enumerate(res.landmark):

joint[j] = [lm.x, lm.y, lm.z, lm.visibility]

# Compute angles between joints

v1 = joint[[0,1,2,3,0,5,6,7,0,9,10,11,0,13,14,15,0,17,18,19], :3] # Parent joint

v2 = joint[[1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20], :3] # Child joint

v = v2 - v1 # [20, 3]

# Normalize v

v = v / np.linalg.norm(v, axis=1)[:, np.newaxis]

# Get angle using arcos of dot product

angle = np.arccos(np.einsum('nt,nt->n',

v[[0,1,2,4,5,6,8,9,10,12,13,14,16,17,18],:],

v[[1,2,3,5,6,7,9,10,11,13,14,15,17,18,19],:])) # [15,]

angle = np.degrees(angle) # Convert radian to degree

angle_label = np.array([angle], dtype=np.float32)

angle_label = np.append(angle_label, idx)

d = np.concatenate([joint.flatten(), angle_label])

data.append(d)

mp_drawing.draw_landmarks(img, res, mp_hands.HAND_CONNECTIONS)

cv2.imshow('img', img)

if cv2.waitKey(1) == ord('q'):

break

data = np.array(data)

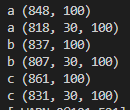

print(action, data.shape)

np.save(os.path.join('dataset', f'raw_{action}_{created_time}'), data)

# Create sequence data

full_seq_data = []

for seq in range(len(data) - seq_length):

full_seq_data.append(data[seq:seq + seq_length])

full_seq_data = np.array(full_seq_data)

print(action, full_seq_data.shape)

np.save(os.path.join('dataset', f'seq_{action}_{created_time}'), full_seq_data)

break- 코드 출처 (깃허브)

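- My original plan was to generate the coordinates from sign-language videos, but that looked like it would take longer, so I'm starting with the webcam and will switch to videos once this works.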

- This produced the data for ㄱ, ㄴ, and ㄷ (the `a`, `b`, `c` actions in the code).

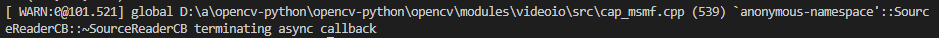

- Is this a warning I can safely ignore...?
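The numpy part of create_dataset.py (bone vectors, joint angles, the 100-value row, sequence windows) is easy to lose among the OpenCV calls, so here is a small standalone sketch of just that part, checked on a made-up hand instead of real MediaPipe landmarks. The index lists are copied from the script; `joint_angles`, `frame_features`, `sliding_windows`, `straight_hand` and `bent_index_hand` are hypothetical names of mine. Two deliberate differences from the script: I clip the dot products to [-1, 1] before `np.arccos`, since rounding can push the dot product of nearly parallel unit vectors just above 1 and turn the angle into `nan`, and the windowing uses `len(data) - seq_length + 1`, whereas the script's `range(len(data) - seq_length)` leaves out the last full window.

```python
import numpy as np

# Index lists copied from create_dataset.py
PARENT = [0,1,2,3,0,5,6,7,0,9,10,11,0,13,14,15,0,17,18,19]
CHILD = [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20]
BONE_A = [0,1,2,4,5,6,8,9,10,12,13,14,16,17,18]  # first bone of each measured pair
BONE_B = [1,2,3,5,6,7,9,10,11,13,14,15,17,18,19]  # the next bone in the same finger


def joint_angles(joint):
    """(21, 4) landmarks [x, y, z, visibility] -> (15,) bend angles in degrees."""
    v = joint[CHILD, :3] - joint[PARENT, :3]             # (20, 3) bone vectors
    v = v / np.linalg.norm(v, axis=1)[:, np.newaxis]      # unit length
    cos = np.einsum('nt,nt->n', v[BONE_A], v[BONE_B])     # row-wise dot products
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))


def frame_features(joint, label):
    """84 joint values + 15 angles + 1 label = 100 values per frame, as in the script."""
    return np.concatenate([joint.flatten(), joint_angles(joint), [label]])


def sliding_windows(data, seq_length):
    """Every run of seq_length consecutive frames: (N - seq_length + 1, seq_length, F)."""
    return np.array([data[i:i + seq_length] for i in range(len(data) - seq_length + 1)])


def straight_hand():
    """Made-up hand: wrist at the origin, each finger a straight line of 4 joints."""
    joint = np.zeros((21, 4))
    joint[:, 3] = 1.0                                    # visibility
    for f in range(5):                                   # thumb, index, middle, ring, pinky
        theta = np.radians(60 + 15 * f)                  # fan the fingers out
        d = np.array([np.cos(theta), np.sin(theta), 0.0])
        for k in range(4):
            joint[1 + 4 * f + k, :3] = (k + 1) * d
    return joint


def bent_index_hand():
    """Same hand, but the index fingertip (joint 8) is bent 90 degrees at joint 7."""
    joint = straight_hand()
    theta = np.radians(75)                               # index finger direction (f = 1)
    joint[8, :3] = joint[7, :3] + [-np.sin(theta), np.cos(theta), 0.0]
    return joint


if __name__ == "__main__":
    print(np.round(joint_angles(straight_hand()), 3))    # all (close to) 0
    print(round(float(joint_angles(bent_index_hand())[5]), 3))  # about 90, at joint 7
    data = np.stack([frame_features(straight_hand(), 0)] * 40)  # 40 identical frames
    print(data.shape, sliding_windows(data, 30).shape)   # (40, 100) (11, 30, 100)
```

With 40 frames and `seq_length = 30` this gives 11 windows; the loop in the script would produce 10 from the same data.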

Troubleshooting

- After writing the code and running it, the terminal printed only this line and nothing else happened:

`INFO: Created TensorFlow Lite XNNPACK delegate for CPU.`

- Running it from cmd prints the same INFO.

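- Double-clicking the .py file to run it prints the same INFO as well.

- The only Korean blog that came up when I searched for the INFO message: but it didn't give me anything useful either.

- mediapipe GitHub issue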

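According to the issue, INFO is not an error but just an informational log. The line above simply reports that a TensorFlow Lite XNNPACK delegate was created for the CPU. So why does nothing run after it?

- Suspecting the camera, I also tried changing the camera index in `cv2.VideoCapture(0)`, but it made no difference.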

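- I googled things like "running mediapipe in vscode", "mediapipe not running", "python mediapipe not running", and "mediapipe webcam not working", but couldn't find even a hint of a solution.

- Then it suddenly crossed my mind: is the webcam actually working?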

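Thinking "no way...", I opened Zoom by myself to check the camera, and sure enough, my camera wasn't working.... Once I fixed the webcam and ran the script again, the code ran, and despair and happiness hit me at the same time....

In hindsight this most likely explains the symptom: when the camera cannot be opened, `cap.isOpened()` returns False, so the `while cap.isOpened():` loop never runs and the script ends with nothing but MediaPipe's INFO line.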
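Since the whole problem turned out to be the webcam, a quick camera check before launching create_dataset.py would have saved a lot of googling. This is a minimal sketch of my own, not part of the original repository; `camera_ok` is a hypothetical helper that only relies on the `isOpened()` and `read()` methods of `cv2.VideoCapture`, so it can be tried with a stand-in object and no real camera. Probing indices 0 to 2 is an arbitrary choice.

```python
import importlib.util


def camera_ok(cap):
    """True if a VideoCapture-like object is open and returns at least one frame."""
    if not cap.isOpened():
        return False
    ret, frame = cap.read()
    return bool(ret) and frame is not None


if __name__ == "__main__":
    if importlib.util.find_spec("cv2") is None:
        print("OpenCV (cv2) is not installed")
    else:
        import cv2

        for index in range(3):  # indices 0-2 are an arbitrary range to probe
            cap = cv2.VideoCapture(index)
            print(f"camera {index}:", "OK" if camera_ok(cap) else "cannot open or no frames")
            cap.release()
```

If every index reports a failure, look at the camera itself (driver, OS privacy settings, or another program using it) before suspecting MediaPipe.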

Sources