23.11.6 (Mon), Day 33

Image Processing and Vision (C++-based)

How can we find where a registered image pattern (an identical sub-image) sits inside a larger image?

Slide the pattern over the image, compare at each position, and take the position that matches best.
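The comparison described above can be sketched without any library as a brute-force search. This is only an illustration under assumptions: the `GrayImage` and `Match` types and the `findPattern` function are hypothetical names made up for this sketch, and the score used is the sum of squared differences (lower is better). OpenCV offers the same idea through `cv::matchTemplate`.

```cpp
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical helper type for this sketch: a row-major 8-bit grayscale image.
struct GrayImage {
    int rows;
    int cols;
    std::vector<unsigned char> data;  // rows * cols values

    int at(int r, int c) const {
        return data[static_cast<std::size_t>(r) * static_cast<std::size_t>(cols) +
                    static_cast<std::size_t>(c)];
    }
};

// Best position found so far; row/col stay -1 if the pattern does not fit.
struct Match {
    int row;
    int col;
    long long score;  // sum of squared differences, lower is better
};

// Try every position where the pattern fits entirely inside the image and
// keep the one with the smallest sum of squared differences (0 = exact match).
Match findPattern(const GrayImage& image, const GrayImage& pattern) {
    Match best{-1, -1, std::numeric_limits<long long>::max()};
    for (int r = 0; r + pattern.rows <= image.rows; ++r) {
        for (int c = 0; c + pattern.cols <= image.cols; ++c) {
            long long ssd = 0;
            for (int pr = 0; pr < pattern.rows; ++pr) {
                for (int pc = 0; pc < pattern.cols; ++pc) {
                    const int diff = image.at(r + pr, c + pc) - pattern.at(pr, pc);
                    ssd += static_cast<long long>(diff) * diff;
                }
            }
            if (ssd < best.score) {
                best.row = r;
                best.col = c;
                best.score = ssd;
            }
        }
    }
    return best;
}
```

The returned `row` and `col` are the top-left corner of the best match. The cost grows with image size times pattern size, which is why real libraries use faster formulations.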

Apple Vision Pro

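Say Disney sells a character badge for 10,000 won and Apple sells a phone for 1,000,000 won. Building a single phone takes cooperation across many countries and many technologies, such as semiconductor fabrication.

Character goods, by contrast, can be stamped out over and over, which is what makes them price-competitive.

That is why the mix of industries is shifting.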

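- The screen sits much closer to the eye than other displays. Is that safe for the eyes?

A product has to pass international eye-safety standards before it can ship. Apple tends to hold itself to even stricter limits.

Apple's structured-light Face ID reads the face by projecting laser light onto it, so it drew a lot of concern when it first came out.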

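- Which market will this technology replace?

Something to think about.

What parts of an image do we actually notice?

The eyes, the feathers on the hat, the shoulder, the arch in the background, and so on.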

What we notice is whatever carries a lot of information.

=> In other words, we pick up on the high-frequency components.

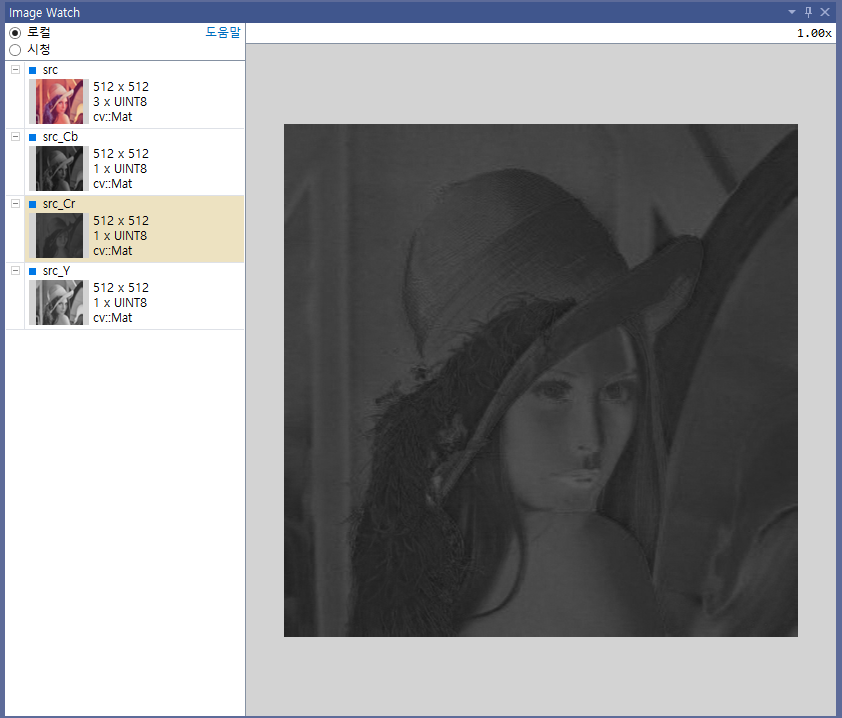
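One rough way to see what "high frequency" means in pixel terms is a Laplacian response: it is zero where the neighborhood is flat and large where brightness changes abruptly (edges, fine texture). The sketch below is an illustration only; `laplacianMagnitude` is a hypothetical helper, and it assumes a row-major 8-bit grayscale buffer and an interior pixel.

```cpp
#include <cstddef>
#include <cstdlib>
#include <vector>

// Absolute 4-neighbour Laplacian at interior pixel (r, c) of a row-major
// grayscale image with `cols` columns. The caller must keep (r, c) off the
// image border, because the four direct neighbours are read.
int laplacianMagnitude(const std::vector<unsigned char>& gray, int cols, int r, int c) {
    auto at = [&](int rr, int cc) {
        return static_cast<int>(gray[static_cast<std::size_t>(rr) * static_cast<std::size_t>(cols) +
                                     static_cast<std::size_t>(cc)]);
    };
    const int response =
        at(r - 1, c) + at(r + 1, c) + at(r, c - 1) + at(r, c + 1) - 4 * at(r, c);
    return std::abs(response);
}
```

On a uniform patch the result is 0, while a pixel next to a vertical step from 10 to 200 gives 190, which matches the intuition that edges are where the information is.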

Color Space

- RGB -> YCbCr
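For reference, the full-range BT.601 form of this conversion for 8-bit values, with the same rounded coefficients used in these notes, can be written as follows. The +128 offset is an assumption added here (the notes do not state it); it is the usual convention that keeps the signed chroma values inside 0..255.

```latex
\begin{aligned}
Y   &= 0.299\,R + 0.587\,G + 0.114\,B \\
C_b &= -0.169\,R - 0.331\,G + 0.500\,B + 128 \\
C_r &= 0.500\,R - 0.419\,G - 0.0813\,B + 128
\end{aligned}
```

The coefficients in each chroma row sum to roughly zero, so a gray pixel (R = G = B) gives Cb = Cr = 128.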

#include "Common.h"

int main()
{
    //std::string fileName = "../KCCImageNet/stop_img.png";
    std::string fileName = "../thirdparty/opencv_480/samples/data/lena.jpg";
    cv::Mat src = cv::imread(fileName, cv::ImreadModes::IMREAD_ANYCOLOR);
    if (src.empty())
    {
        return 1; // image could not be loaded
    }

    uchar* pData = src.data; // data array (imread returns a continuous buffer, BGR order)
    int channels = src.channels();

    cv::Mat src_Y = cv::Mat(src.rows, src.cols, CV_8UC1);
    cv::Mat src_Cb = cv::Mat(src.rows, src.cols, CV_8UC1);
    cv::Mat src_Cr = cv::Mat(src.rows, src.cols, CV_8UC1);

    for (int row = 0; row < src.rows; row++)
    {
        for (int col = 0; col < src.cols; col++)
        {
            // mono
            int index = row * src.cols + col;
            // color
            if (channels == 3)
            {
                int index_B = index * channels + 0;
                int index_G = index * channels + 1;
                int index_R = index * channels + 2;
                int val_B = pData[index_B];
                int val_G = pData[index_G];
                int val_R = pData[index_R];

                // BT.601 weights. Cb and Cr are signed, so shift them by 128 to fit in a uchar.
                double val_Y = 0.299 * val_R + 0.587 * val_G + 0.114 * val_B;
                double val_Cb = -0.169 * val_R - 0.331 * val_G + 0.500 * val_B + 128;
                double val_Cr = 0.500 * val_R - 0.419 * val_G - 0.0813 * val_B + 128;

                // saturate_cast rounds and clamps to 0..255 instead of wrapping
                src_Y.data[index] = cv::saturate_cast<uchar>(val_Y);
                src_Cb.data[index] = cv::saturate_cast<uchar>(val_Cb);
                src_Cr.data[index] = cv::saturate_cast<uchar>(val_Cr);
            }
        }
    }
    return 0;
}

- YCbCr -> RGB

#pragma once

#include "Common.h"

int main()

{

//std::string fileName = "../KCCImageNet/stop_img.png";

std::string fileName = "../thirdparty/opencv_480/samples/data/lena.jpg";

cv::Mat src = cv::imread(fileName, cv::ImreadModes::IMREAD_ANYCOLOR);

uchar* pData = src.data; // data array

int length = src.total(); // data length

int channels = src.channels();

Mat src_Y = Mat(src.rows, src.cols, CV_8UC1);

Mat src_Cb = Mat(src.rows, src.cols, CV_8UC1);

Mat src_Cr = Mat(src.rows, src.cols, CV_8UC1);

Mat src_New = Mat(src.rows, src.cols, CV_8UC3);

for (size_t row = 0; row < src.rows; row++)

{

for (size_t col = 0; col < src.cols; col++)

{

// mono

int index = (row)*src.cols + col;

// color

if (channels == 3)

{

int index_B = index * channels + 0;

int index_G = index * channels + 1;

int index_R = index * channels + 2;

int val_B = pData[index_B];

int val_G = pData[index_G];

int val_R = pData[index_R];

int val_Y = 0.299 * val_R + 0.587 * val_G + 0.114 * val_B;

int val_Cb = -0.169 * val_R - 0.331 * val_G + 0.500 * val_B;

int val_Cr = 0.500 * val_R - 0.419 * val_G - 0.0813 * val_B;

src_Y.data[index] = (uchar)val_Y;

src_Cb.data[index] = (uchar)val_Cb;

src_Cr.data[index] = (uchar)val_Cr;

int val_R2 = 1.000 * val_Y + 1.402 * val_Cr + 0.000 * val_Cb;

int val_G2 = 1.000 * val_Y - 0.714 * val_Cr - 0.344 * val_Cb;

int val_B2 = 1.000 * val_Y + 0.000 * val_Cr + 1.772 * val_Cb;

src_New.data[index_R] = (uchar)val_R2;

src_New.data[index_G] = (uchar)val_G2;

src_New.data[index_B] = (uchar)val_B2;

}

// mono

else

{

src_Y.data[index] = src.data[index];

src_Cb.data[index] = src.data[index];

src_Cr.data[index] = src.data[index];

}

}

}

return 1;

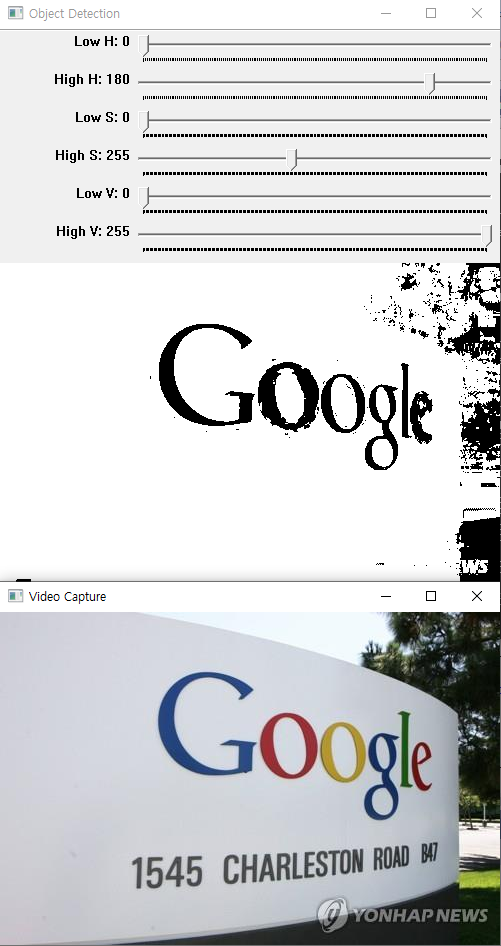

}Color HSV

Representing colors in HSV

(see the official documentation)
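To make the value ranges concrete, here is a minimal per-pixel sketch of an RGB to HSV conversion that follows the 8-bit convention OpenCV documents for `COLOR_BGR2HSV`: hue is stored as degrees divided by 2 (0..179), saturation and value as 0..255. The names `Hsv8`, `rgbToHsv8`, and `inHsvRange` are hypothetical, and the rounding here may differ from OpenCV's by one step. `inHsvRange` mimics the inclusive bounds check that `inRange` performs on each pixel.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical helper type: HSV in OpenCV's 8-bit layout.
struct Hsv8 {
    int h;  // 0..179 (hue in degrees divided by 2)
    int s;  // 0..255
    int v;  // 0..255
};

// Convert one 8-bit RGB pixel to HSV.
Hsv8 rgbToHsv8(int r, int g, int b) {
    const int maxC = std::max({r, g, b});
    const int minC = std::min({r, g, b});
    const double delta = maxC - minC;

    double hueDeg = 0.0;  // hue is undefined for gray pixels; 0 is used by convention
    if (delta > 0) {
        if (maxC == r) {
            hueDeg = 60.0 * (g - b) / delta;
        } else if (maxC == g) {
            hueDeg = 120.0 + 60.0 * (b - r) / delta;
        } else {
            hueDeg = 240.0 + 60.0 * (r - g) / delta;
        }
        if (hueDeg < 0) {
            hueDeg += 360.0;
        }
    }
    const double sat = (maxC == 0) ? 0.0 : 255.0 * delta / maxC;

    // Halve the hue so it fits in 8 bits; "% 180" wraps a rounded 180 back to 0.
    return {static_cast<int>(std::lround(hueDeg / 2.0)) % 180,
            static_cast<int>(std::lround(sat)),
            maxC};
}

// Inclusive per-channel range check, the same test inRange applies per pixel.
bool inHsvRange(const Hsv8& p, const Hsv8& low, const Hsv8& high) {
    return p.h >= low.h && p.h <= high.h &&
           p.s >= low.s && p.s <= high.s &&
           p.v >= low.v && p.v <= high.v;
}
```

Pure green maps to H = 60 and pure blue to H = 120. Red sits at both ends of the hue axis (near 0 and near 179), so selecting red usually takes two ranges.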

#include "Common.h"

//https://docs.opencv.org/3.4/da/d97/tutorial_threshold_inRange.html
using namespace cv;

const int max_value_H = 360 / 2; // 8-bit hue is stored as degrees / 2
const int max_value = 255;
const String window_capture_name = "Video Capture";
const String window_detection_name = "Object Detection";
int low_H = 0, low_S = 0, low_V = 0;
int high_H = max_value_H, high_S = max_value, high_V = max_value;

// Each callback keeps the low threshold strictly below the high one
static void on_low_H_thresh_trackbar(int, void*)
{
    low_H = min(high_H - 1, low_H);
    setTrackbarPos("Low H", window_detection_name, low_H);
}

static void on_high_H_thresh_trackbar(int, void*)
{
    high_H = max(high_H, low_H + 1);
    setTrackbarPos("High H", window_detection_name, high_H);
}

static void on_low_S_thresh_trackbar(int, void*)
{
    low_S = min(high_S - 1, low_S);
    setTrackbarPos("Low S", window_detection_name, low_S);
}

static void on_high_S_thresh_trackbar(int, void*)
{
    high_S = max(high_S, low_S + 1);
    setTrackbarPos("High S", window_detection_name, high_S);
}

static void on_low_V_thresh_trackbar(int, void*)
{
    low_V = min(high_V - 1, low_V);
    setTrackbarPos("Low V", window_detection_name, low_V);
}

static void on_high_V_thresh_trackbar(int, void*)
{
    high_V = max(high_V, low_V + 1);
    setTrackbarPos("High V", window_detection_name, high_V);
}

int main(int argc, char* argv[])
{
    //VideoCapture cap(argc > 1 ? atoi(argv[1]) : 0);
    namedWindow(window_capture_name);
    namedWindow(window_detection_name);

    // Trackbars to set thresholds for HSV values
    createTrackbar("Low H", window_detection_name, &low_H, max_value_H, on_low_H_thresh_trackbar);
    createTrackbar("High H", window_detection_name, &high_H, max_value_H, on_high_H_thresh_trackbar);
    createTrackbar("Low S", window_detection_name, &low_S, max_value, on_low_S_thresh_trackbar);
    createTrackbar("High S", window_detection_name, &high_S, max_value, on_high_S_thresh_trackbar);
    createTrackbar("Low V", window_detection_name, &low_V, max_value, on_low_V_thresh_trackbar);
    createTrackbar("High V", window_detection_name, &high_V, max_value, on_high_V_thresh_trackbar);

    // Load the still image once, forced to 3 channels because COLOR_BGR2HSV needs BGR input
    std::string fileName = "../KCCImageNet/find_google_area.png";
    Mat frame = imread(fileName, ImreadModes::IMREAD_COLOR);
    if (frame.empty())
    {
        return 1; // image could not be loaded
    }
    //resizeWindow(window_capture_name, frame.cols, frame.rows);
    //resizeWindow(window_detection_name, frame.cols, frame.rows);

    Mat frame_HSV, frame_threshold;
    while (true) {
        //cap >> frame;

        // Convert from BGR to HSV colorspace
        cvtColor(frame, frame_HSV, COLOR_BGR2HSV);

        // Detect the object based on HSV Range Values
        inRange(frame_HSV, Scalar(low_H, low_S, low_V), Scalar(high_H, high_S, high_V), frame_threshold);

        // Show the frames
        imshow(window_capture_name, frame);
        imshow(window_detection_name, frame_threshold);

        // Quit on 'q' or Esc
        char key = (char)waitKey(30);
        if (key == 'q' || key == 27)
        {
            break;
        }
    }
    return 0;
}