Source: https://amitness.com/2020/05/self-supervised-learning-nlp/

See also: https://lilianweng.github.io/lil-log/2019/11/10/self-supervised-learning.html

- Language models have existed since the 1990s, well before the phrase “self-supervised learning” was coined.

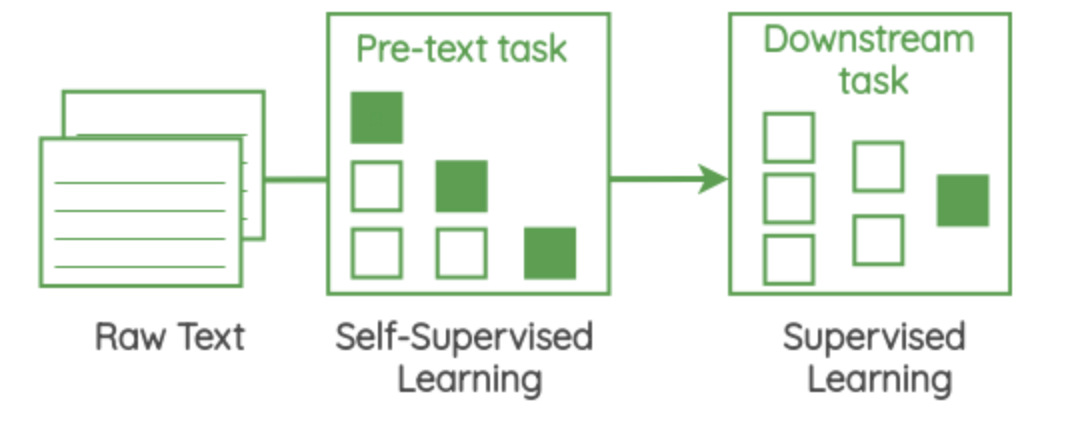

- At the core of these self-supervised methods lies a framing called the “pretext task”.

- Pretext task (also called auxiliary task or pre-training task): generate labels from the data itself, then apply supervised methods to what would otherwise be an unsupervised problem.

Self-Supervised Formulations

1. Center Word Prediction

In this formulation, we take a small chunk of text of a fixed window size, and the goal is to predict the center word given the surrounding words. This is the idea behind the Continuous Bag of Words (CBOW) approach in Word2Vec.

For example, with a window size of one, there is one word on each side of the center word. Using these two neighboring words, we need to predict the center word.
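As a rough illustration of how such training examples could be built from raw text, here is a minimal sketch. The function name `center_word_pairs` and the whitespace tokenization are assumptions made for this example, not something taken from the source article.

```python
def center_word_pairs(tokens, window=1):
    """Build (context_words, center_word) examples from a list of tokens.

    Hypothetical helper: only positions with a full window on both
    sides are used, so the first and last `window` tokens are skipped.
    """
    pairs = []
    for i in range(window, len(tokens) - window):
        # Words to the left and right of position i form the input.
        context = tokens[i - window:i] + tokens[i + 1:i + window + 1]
        # The word at position i is the label to predict.
        pairs.append((context, tokens[i]))
    return pairs


# Simple whitespace tokenization (an assumption; real pipelines differ).
cbow_tokens = "the quick brown fox jumps".split()
cbow_pairs = center_word_pairs(cbow_tokens, window=1)

for context, center in cbow_pairs:
    print(context, "->", center)
```

The labels come entirely from the text itself, which is what makes this a pretext task: no human annotation is involved.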

2. Neighbor Word Prediction

In this formulation, we take a span of text of a fixed window size, and the goal is to predict the surrounding words given the center word. This is the skip-gram approach in Word2Vec.
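The reverse direction can be sketched in the same way: each center word is paired with every neighbor inside the window. The name `neighbor_word_pairs` and the whitespace tokenization are again assumptions for illustration only.

```python
def neighbor_word_pairs(tokens, window=1):
    """Build (center_word, neighbor_word) examples from a list of tokens.

    Hypothetical helper: the window is clipped at the sequence edges,
    so edge words simply get fewer neighbors.
    """
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                # Input is the center word, label is one neighbor.
                pairs.append((center, tokens[j]))
    return pairs


skipgram_tokens = "the quick brown".split()
skipgram_pairs = neighbor_word_pairs(skipgram_tokens, window=1)

for center, neighbor in skipgram_pairs:
    print(center, "->", neighbor)
```

Note that one window produces several training examples here, one per neighbor, whereas the center word formulation produces a single example per window.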

3. Neighbor Sentence Prediction

In this formulation, we take three consecutive sentences and design a task in which, given the center sentence, we need to generate the previous sentence and the next sentence. It mirrors the skip-gram formulation above, applied to sentences instead of words.
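The data preparation for this task could look like the sketch below. It assumes the text has already been split into sentences, and the name `neighbor_sentence_triples` is hypothetical.

```python
def neighbor_sentence_triples(sentences):
    """Build (center, previous, next) examples from consecutive sentences.

    Hypothetical helper: the center sentence is the input, and the
    previous and next sentences are the two generation targets.
    """
    return [
        (sentences[i], sentences[i - 1], sentences[i + 1])
        for i in range(1, len(sentences) - 1)
    ]


# Sentences are assumed to be pre-split; sentence segmentation is out of scope.
doc_sentences = [
    "I got home.",
    "I was tired.",
    "I went to bed.",
    "I slept well.",
]
sentence_triples = neighbor_sentence_triples(doc_sentences)

for center, previous, following in sentence_triples:
    print(repr(center), "->", repr(previous), "and", repr(following))
```

Unlike the word-level tasks, the targets here are whole sentences, so a model for this task would need a decoder that generates text rather than a classifier over a vocabulary.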