[Paper Review] VATT: Transformers for Multimodal Self-Supervised Learning from Raw Video, Audio and Text

PREVIEW

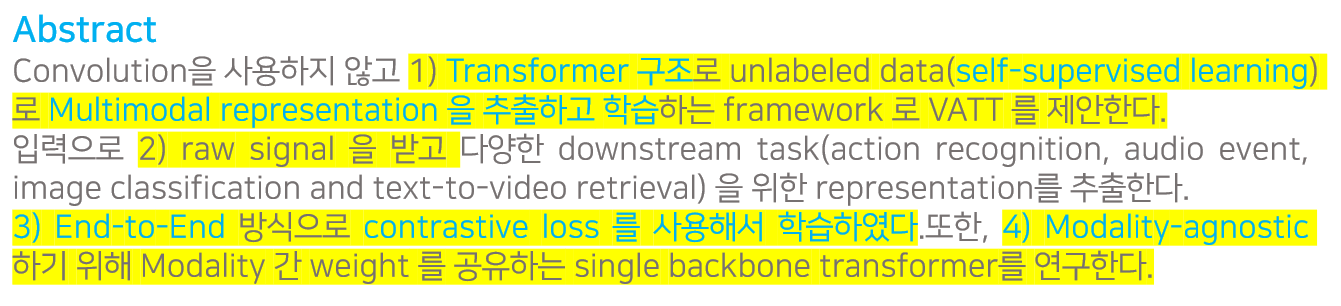

1) A framework for multimodal representation learning

2) Transformer backbone architecture

3) Self-supervised learning: trains on unlabeled data

4) End-to-end contrastive learning

5) DropToken
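
To make item 5 concrete: DropToken randomly discards part of the input token sequence during training, so the Transformer processes a shorter sequence. Below is a minimal sketch of that idea in plain Python. The function name `drop_tokens`, the `drop_rate` parameter, and the list-based token representation are assumptions made for illustration, not the paper's implementation.

```python
import random


def drop_tokens(tokens, drop_rate, rng=None):
    """Keep a random subset of tokens, preserving their original order.

    Hypothetical helper for illustration: `tokens` is any list of
    per-patch token embeddings (or ids), `drop_rate` is the fraction
    of tokens to discard during training.
    """
    if not 0.0 <= drop_rate < 1.0:
        raise ValueError("drop_rate must be in [0, 1)")
    if not tokens:
        return []
    rng = rng or random.Random()
    # Always keep at least one token so the sequence is never empty.
    num_keep = max(1, round(len(tokens) * (1.0 - drop_rate)))
    # Sample indices without replacement, then sort to keep sequence order.
    keep_idx = sorted(rng.sample(range(len(tokens)), num_keep))
    return [tokens[i] for i in keep_idx]


# Usage: drop half of 8 dummy tokens with a fixed seed.
tokens = list(range(8))
kept = drop_tokens(tokens, drop_rate=0.5, rng=random.Random(0))
print(len(kept), kept)
```

Because self-attention cost grows quadratically with sequence length, halving the number of tokens cuts the attention computation to roughly a quarter, which is why this kind of sampling helps with large-scale training.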

○ Weak inductive bias ▶ requires large-scale training

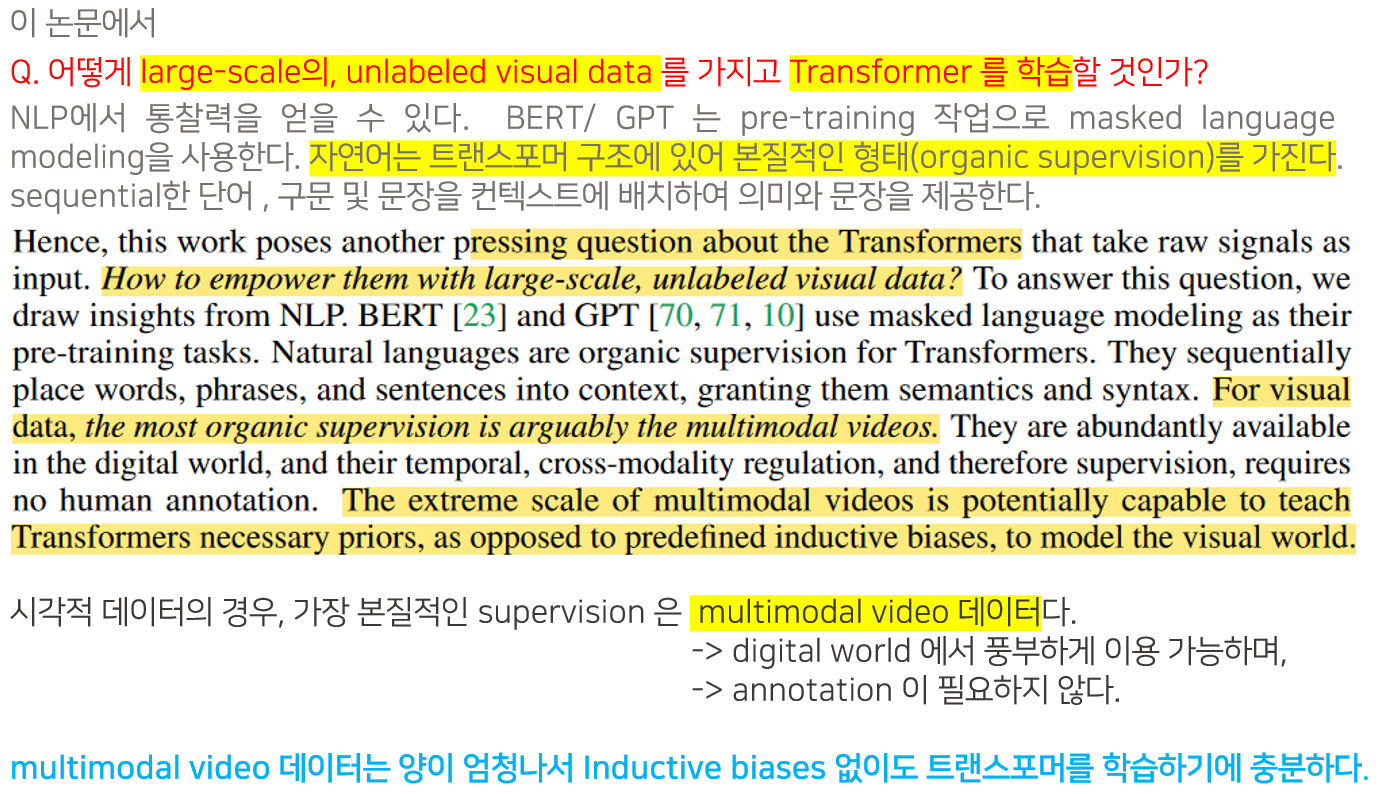

▼ Q. How can Transformers be trained on large-scale, unlabeled visual data?

▼ Research topic