This post is based on a Google Cloud course.

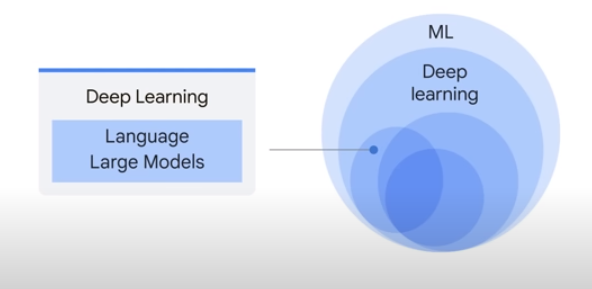

Define LLM

- a subset of deep learning (DL)

- LLM : large, general-purpose language models that can be pre-trained and then fine-tuned for specific purposes

trained for common language problems, and then tailored to solve specific problems

Large -> large training set and large number of parameters

General purpose -> one model is enough for common problems, because human languages share a lot in common

Pre-trained and fine-tuned

Benefits?

single model can be used for different tasks

fine-tuning requires only minimal field data (domain-specific training data)

continuously growing performance

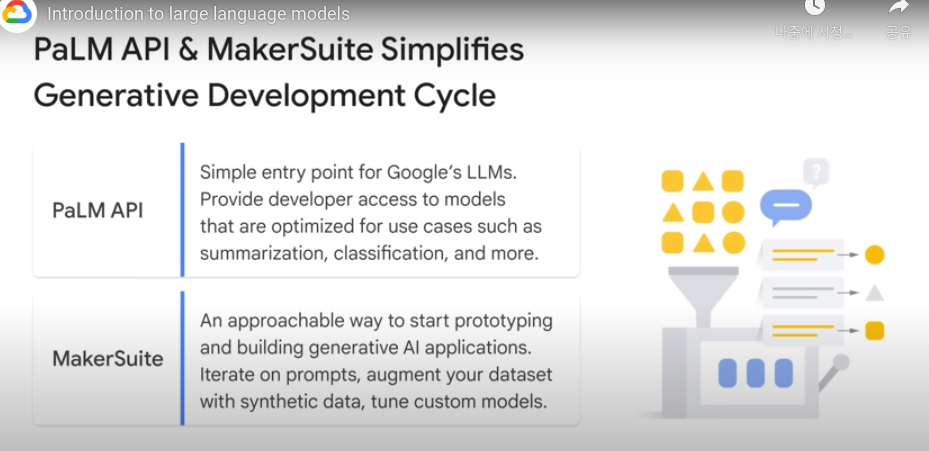

ex) PaLM, GPT, LaMDA

LLM use cases

- Question Answering (QA)

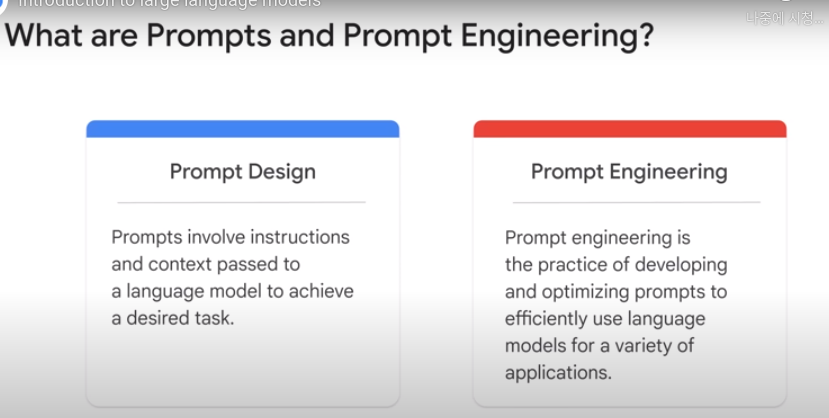

Prompt tuning

Tuning

the process of adapting a model to a new domain or a set of custom use cases by training it on new data

3 main kinds of LLM -> each requires a different way of prompting

- Generic language model : predicts the next word. ex) autocomplete, search

- Instruction tuned : predicts a response to the instructions given in the input. ex) summarization, writing (generating a poem...), keyword extraction

- Dialog tuned : holds a dialog by predicting the next response. ex) chat bot

==> task-specific tuning can make LLMs more reliable!
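To make the "different way of prompting" point concrete, here is a minimal sketch of how the input text might be shaped for each of the three kinds of model. The function names and the `User:` / `Assistant:` turn labels are my own assumptions for illustration; they are not from the course or from any specific API, and no model is actually called.

```python
def generic_prompt(text_so_far):
    """Generic language model: give the beginning, the model continues it."""
    return text_so_far


def instruction_prompt(instruction, content):
    """Instruction-tuned model: state the task first, then the input text."""
    return f"{instruction}\n\n{content}"


def dialog_prompt(turns, user_message):
    """Dialog-tuned model: send the conversation so far plus the new turn.

    `turns` is a list of (speaker, message) pairs. The speaker labels are
    a hypothetical convention chosen for this sketch.
    """
    lines = [f"{speaker}: {message}" for speaker, message in turns]
    lines.append(f"User: {user_message}")
    lines.append("Assistant:")  # the model is expected to fill in this turn
    return "\n".join(lines)


if __name__ == "__main__":
    print(generic_prompt("The cat sat on the"))
    print(instruction_prompt("Summarize the following text in one sentence.",
                             "Large language models are pre-trained and then fine-tuned."))
    print(dialog_prompt([("User", "Hi"), ("Assistant", "Hello!")],
                        "What is an LLM?"))
```

The only thing that changes between the three is the framing of the text: a bare prefix, a task plus input, or a running conversation.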

fine-tuning

retrain the pre-trained model by tuning every weight -> expensive

alternative?

PETM : parameter-efficient tuning methods

methods for tuning an LLM on your own data. The base model is not changed; only a small number of add-on layers are tuned. ex) Prompt Tuning
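The idea of keeping the base model frozen and training only a small add-on can be shown with a toy example. This is not prompt tuning itself and not a real LLM: the "base model" below is a made-up two-weight linear function, and the add-on is a hypothetical scale-and-shift layer stacked on its output. It only illustrates that the new data is fitted without touching the base weights.

```python
# Frozen weights that stand in for the pre-trained model (toy values).
BASE_WEIGHTS = (2.0, -1.0)


def base_model(x1, x2):
    """Frozen pre-trained model: never updated during tuning."""
    w1, w2 = BASE_WEIGHTS
    return w1 * x1 + w2 * x2


def tune_addon(data, steps=2000, lr=0.01):
    """Fit only the add-on parameters (scale, shift) with gradient descent."""
    scale, shift = 1.0, 0.0  # the add-on starts as the identity
    n = len(data)
    for _ in range(steps):
        grad_scale = 0.0
        grad_shift = 0.0
        for (x1, x2), target in data:
            h = base_model(x1, x2)              # frozen forward pass
            error = scale * h + shift - target  # add-on output minus target
            grad_scale += 2 * error * h / n     # d(MSE)/d(scale)
            grad_shift += 2 * error / n         # d(MSE)/d(shift)
        scale -= lr * grad_scale
        shift -= lr * grad_shift
    return scale, shift


# Hypothetical "own data": targets follow 3 * base_model(x) + 1.
inputs = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1)]
data = [((x1, x2), 3 * base_model(x1, x2) + 1) for x1, x2 in inputs]

scale, shift = tune_addon(data)
print(round(scale, 3), round(shift, 3))
print(BASE_WEIGHTS)  # unchanged by tuning
```

Full fine-tuning would update `BASE_WEIGHTS` as well. Here only two add-on numbers are learned, which is why this family of methods is cheaper.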

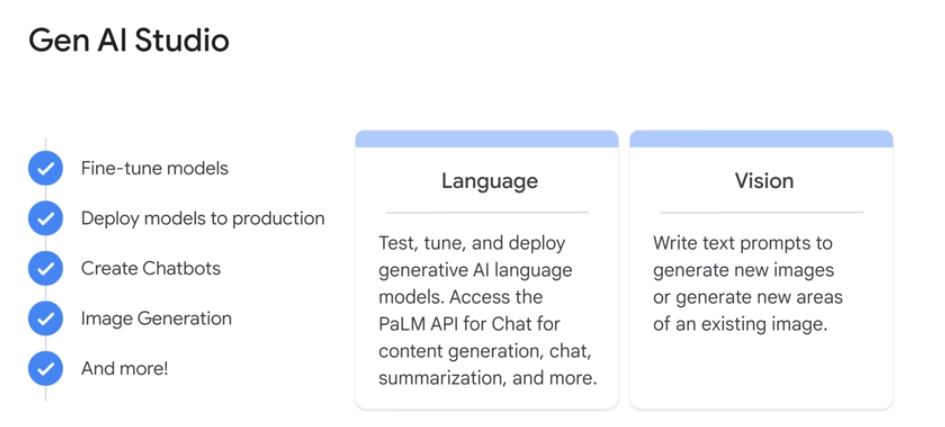

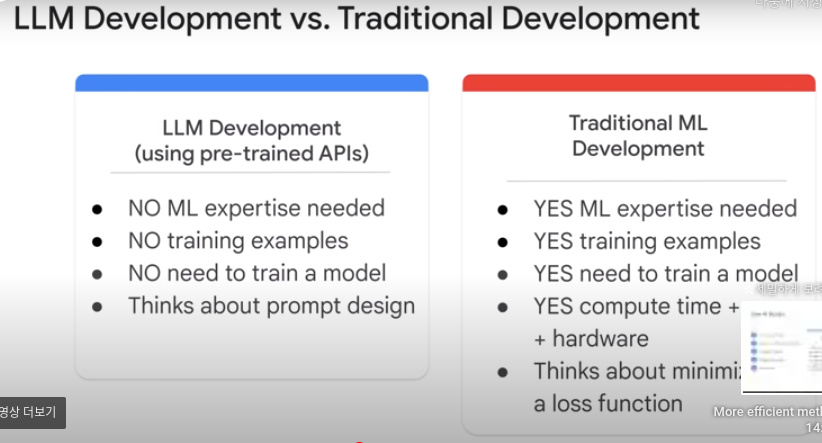

Gen AI development tools