1. Planning and defining objects

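Before writing any code, the most important step is to think carefully about what you will collect and store from each target website, and to design the data model around that.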
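As a minimal illustration, assuming the only fields worth keeping are the URL, title and body (the same three that the `Content` class below stores), the data model can be pinned down as a dataclass before any scraping code exists. The sample values are made up.

```python
from dataclasses import dataclass


# A minimal data model for one scraped page; the fields are decided
# up front, before any scraping code is written.
@dataclass
class Content:
    url: str
    title: str
    body: str


page = Content(url='https://example.com/post', title='Example', body='Hello')
print(page)
```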

2. Dealing with different website layouts

```python
import requests
from bs4 import BeautifulSoup


class Content:
    def __init__(self, url, title, body):
        self.url = url
        self.title = title
        self.body = body


def getPage(url):
    req = requests.get(url)
    return BeautifulSoup(req.text, 'html.parser')


def scrapeNYTimes(url):
    bs = getPage(url)
    title = bs.find('h1').text
    lines = bs.select('div.StoryBodyCompanionColumn div p')
    body = '\n'.join([line.text for line in lines])
    return Content(url, title, body)


def scrapeBrookings(url):
    bs = getPage(url)
    title = bs.find('h1').text
    # attrs is a dict mapping attribute name to value
    body = bs.find('div', {'class': 'post-body'}).text
    return Content(url, title, body)


url = 'https://www.brookings.edu/blog/future-development/2018/01/26/delivering-inclusive-urban-access-3-uncomfortable-truths/'
content = scrapeBrookings(url)
print('Title: {}'.format(content.title))
print('URL: {}\n'.format(content.url))
print(content.body)

url = 'https://www.nytimes.com/2018/01/25/opinion/sunday/silicon-valley-immortality.html'
content = scrapeNYTimes(url)
print('Title: {}'.format(content.title))
print('URL: {}\n'.format(content.url))
print(content.body)
```

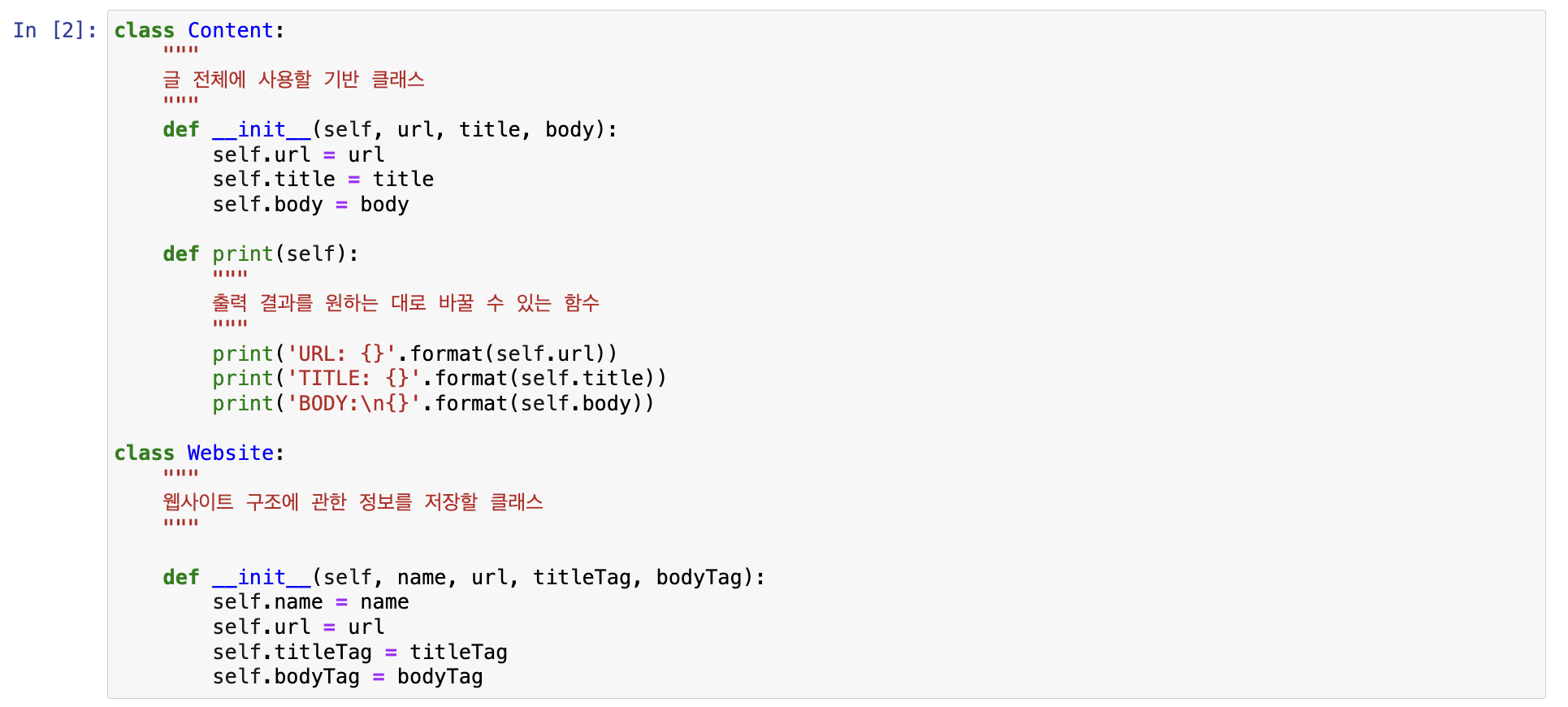

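The Content class represents the content of these websites.

The CSS selectors differ from site to site -> it is convenient to write the CSS selector for each piece of information to collect as a single string, gather these strings in a dictionary, and pass them to BeautifulSoup's select function.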
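A minimal sketch of this idea, assuming BeautifulSoup's `select` method; `SELECTORS` and `extract` are hypothetical names, and the selectors are copied from the scrapers above, so they may no longer match the live sites. The HTML is a made-up sample so the example runs offline.

```python
from bs4 import BeautifulSoup

# Hypothetical per-site selector table: one CSS selector string per field.
SELECTORS = {
    'nytimes': {'title': 'h1', 'body': 'div.StoryBodyCompanionColumn div p'},
    'brookings': {'title': 'h1', 'body': 'div.post-body'},
}


def extract(html, site):
    """Return {field: text} for every field defined for `site`."""
    bs = BeautifulSoup(html, 'html.parser')
    result = {}
    for field, selector in SELECTORS[site].items():
        # select() returns a list of matching tags; join their text
        result[field] = '\n'.join(tag.get_text() for tag in bs.select(selector))
    return result


sample = '<h1>Hello</h1><div class="post-body">First line</div>'
print(extract(sample, 'brookings'))
```

Adding a new site then means adding one dictionary entry rather than writing a new scrape function.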

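The Website class stores not the data itself but instructions on how to collect it.

e.g. it stores the string 'h1', which tells the crawler where the title can be found.

The Content class stores the actual scraped information.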

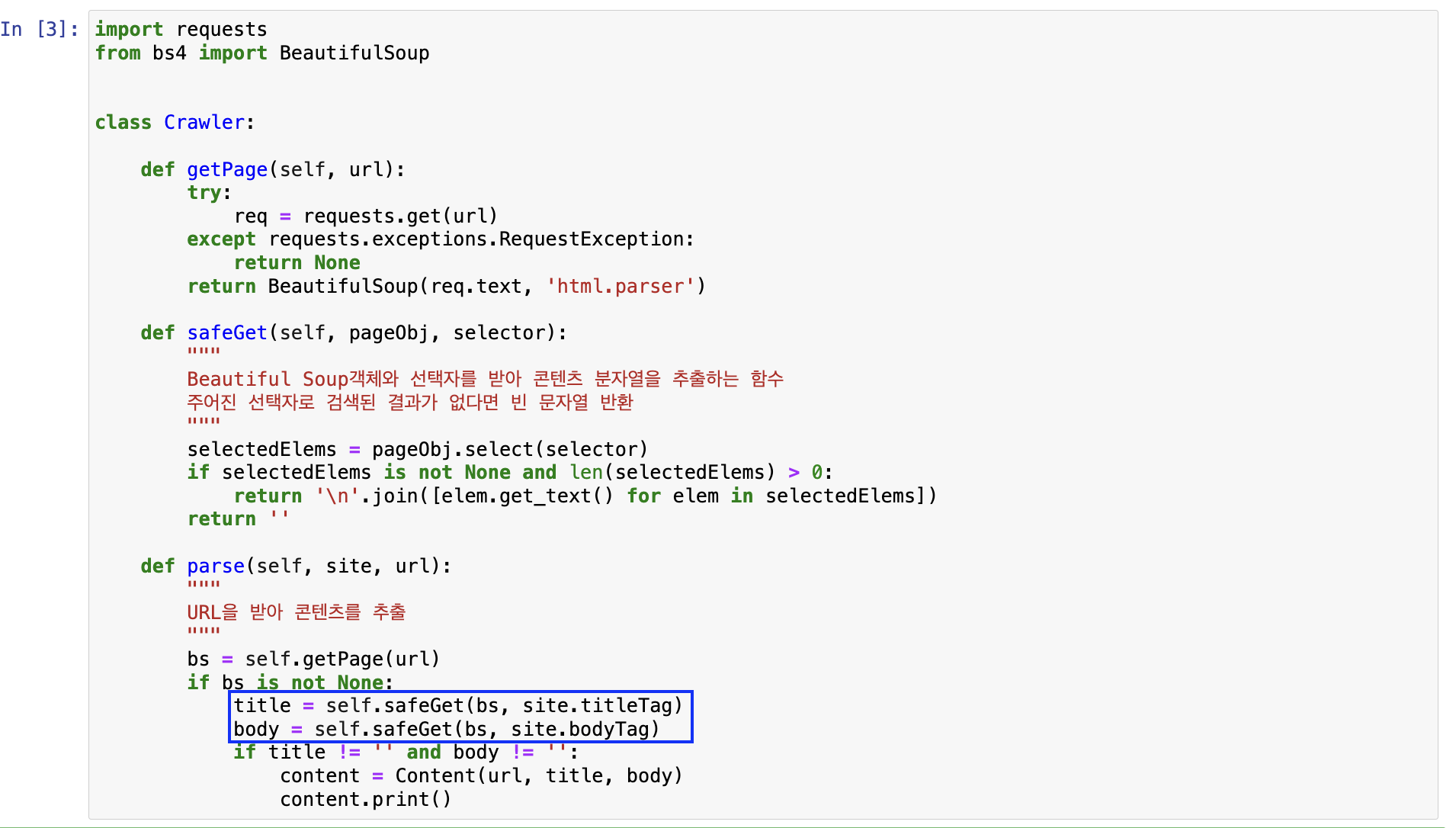

=> A Crawler that scrapes both the title and the body of the page at each URL

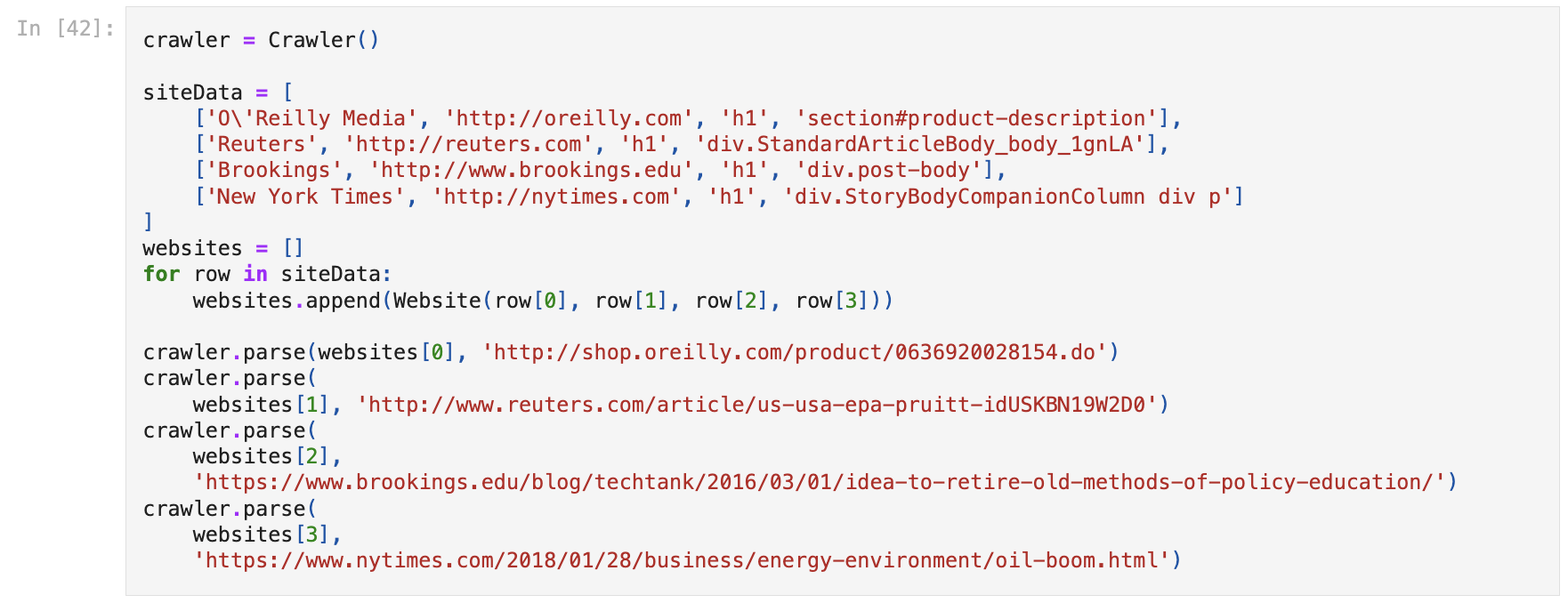

Define the Website objects and start the process.
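The code in images 2 and 3 is not available as text, so the following is only a sketch of how the Website/Content/Crawler split could look, assuming BeautifulSoup. To keep it runnable offline, the hypothetical `parse` method takes the HTML as an argument instead of fetching `url`; the sample HTML and post URL are made up.

```python
from bs4 import BeautifulSoup


class Content:
    """Holds the actual data scraped from one page."""
    def __init__(self, url, title, body):
        self.url = url
        self.title = title
        self.body = body


class Website:
    """Holds instructions (CSS selectors) for scraping a site, not the data."""
    def __init__(self, name, url, titleTag, bodyTag):
        self.name = name
        self.url = url
        self.titleTag = titleTag
        self.bodyTag = bodyTag


class Crawler:
    def safeGet(self, pageObj, selector):
        # Join the text of all matches; '' when nothing matches
        return '\n'.join(elem.get_text() for elem in pageObj.select(selector))

    def parse(self, site, url, html):
        # A real crawler would fetch `html` from `url` (e.g. with requests);
        # here it is passed in so the sketch runs without network access.
        bs = BeautifulSoup(html, 'html.parser')
        title = self.safeGet(bs, site.titleTag)
        body = self.safeGet(bs, site.bodyTag)
        if title and body:
            return Content(url, title, body)
        return None


brookings = Website('Brookings', 'https://www.brookings.edu', 'h1', 'div.post-body')
html = '<h1>Three truths</h1><div class="post-body">Body text</div>'
content = Crawler().parse(brookings, 'https://www.brookings.edu/blog/example-post/', html)
print(content.title, '|', content.body)
```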

3. Structuring crawlers

1) Crawling sites through search

Most sites let you search by inserting the search term into a URL of the form http://example.com?search=myTopic.
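A topic such as 'data science' contains a space, and others may contain characters like '+'. A small sketch using the standard library's `urllib.parse.quote_plus` to percent-encode the search term before appending it; `buildSearchUrl` is a hypothetical helper, not part of the crawler code.

```python
from urllib.parse import quote_plus


def buildSearchUrl(searchUrl, topic):
    # Encode spaces as '+' and escape other special characters
    return searchUrl + quote_plus(topic)


print(buildSearchUrl('http://example.com?search=', 'data science'))
```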

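For each term in the list of topics, run a search on every site using that site's Website data.

Each result link is handled differently depending on whether it is an absolute or a relative URL.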
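The crawler code handles this with a per-site `absoluteUrl` flag and string concatenation. As an alternative (a swapped-in technique, not the original approach), the standard library's `urllib.parse.urljoin` resolves both cases without a flag; `resolveUrl` is a hypothetical wrapper.

```python
from urllib.parse import urljoin


def resolveUrl(siteUrl, href):
    # Absolute hrefs are returned unchanged; relative ones are
    # resolved against siteUrl.
    return urljoin(siteUrl, href)


print(resolveUrl('http://www.brookings.edu', '/blog/post'))
print(resolveUrl('http://reuters.com', 'https://www.reuters.com/article/x'))
```

Unlike plain concatenation, `urljoin` also copes with relative links that lack a leading slash, where `'http://reuters.com' + 'article/x'` would produce a broken URL.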

```python
import requests
from bs4 import BeautifulSoup


class Content:
    """Common base class for all articles/pages"""

    def __init__(self, topic, url, title, body):
        self.topic = topic
        self.title = title
        self.body = body
        self.url = url

    def print(self):
        """
        Flexible printing function controls output
        """
        print('New article found for topic: {}'.format(self.topic))
        print('URL: {}'.format(self.url))
        print('TITLE: {}'.format(self.title))
        print('BODY:\n{}'.format(self.body))


class Website:
    """Contains information about website structure"""

    def __init__(self, name, url, searchUrl, resultListing, resultUrl, absoluteUrl, titleTag, bodyTag):
        self.name = name
        self.url = url
        self.searchUrl = searchUrl
        self.resultListing = resultListing
        self.resultUrl = resultUrl
        self.absoluteUrl = absoluteUrl
        self.titleTag = titleTag
        self.bodyTag = bodyTag


class Crawler:
    def getPage(self, url):
        try:
            req = requests.get(url)
        except requests.exceptions.RequestException:
            return None
        return BeautifulSoup(req.text, 'html.parser')

    def safeGet(self, pageObj, selector):
        childObj = pageObj.select(selector)
        if childObj is not None and len(childObj) > 0:
            return childObj[0].get_text()
        return ''

    def search(self, topic, site):
        """
        Searches a given website for a given topic and records all pages found
        """
        bs = self.getPage(site.searchUrl + topic)
        if bs is None:
            return  # the search page itself could not be fetched
        searchResults = bs.select(site.resultListing)
        for result in searchResults:
            links = result.select(site.resultUrl)
            if not links:
                continue  # this result has no link matching the selector
            url = links[0].attrs['href']
            # Check to see whether it's a relative or an absolute URL
            if site.absoluteUrl:
                bs = self.getPage(url)
            else:
                bs = self.getPage(site.url + url)
            if bs is None:
                print('Something was wrong with that page or URL. Skipping!')
                continue  # skip only this result, not the whole search
            title = self.safeGet(bs, site.titleTag)
            body = self.safeGet(bs, site.bodyTag)
            if title != '' and body != '':
                # Argument order must match Content(topic, url, title, body)
                content = Content(topic, url, title, body)
                content.print()


crawler = Crawler()

siteData = [
    ['O\'Reilly Media', 'http://oreilly.com', 'https://ssearch.oreilly.com/?q=',
     'article.product-result', 'p.title a', True, 'h1', 'section#product-description'],
    ['Reuters', 'http://reuters.com', 'http://www.reuters.com/search/news?blob=', 'div.search-result-content',
     'h3.search-result-title a', False, 'h1', 'div.StandardArticleBody_body_1gnLA'],
    ['Brookings', 'http://www.brookings.edu', 'https://www.brookings.edu/search/?s=',
     'div.list-content article', 'h4.title a', True, 'h1', 'div.post-body']
]

sites = []
for row in siteData:
    sites.append(Website(row[0], row[1], row[2],
                         row[3], row[4], row[5], row[6], row[7]))

topics = ['python', 'data science']
for topic in topics:
    print('GETTING INFO ABOUT: ' + topic)
    for targetSite in sites:
        crawler.search(topic, targetSite)
```

2) Crawling sites through links

Follow every link whose URL matches a specific pattern (a regular expression).
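According to the BeautifulSoup documentation, a compiled regular expression passed as an attribute filter is applied with its `search()` method, so the link-filtering step comes down to a regex check on each `href`. A stdlib-only sketch using the Reuters pattern `'^(/article/)'` from the crawler code and a made-up list of hrefs, with a set to skip links already seen:

```python
import re

# Keep only hrefs that start with /article/ (the ^ anchors the match)
targetPattern = re.compile('^(/article/)')

# Made-up sample hrefs, as they might appear on a home page
hrefs = ['/article/us-markets-1', '/video/clip', 'https://www.reuters.com/article/x',
         '/article/us-markets-1', '/article/world-2']

visited = set()
toCrawl = []
for href in hrefs:
    if targetPattern.search(href) and href not in visited:
        visited.add(href)
        toCrawl.append(href)

print(toCrawl)
```

Note that with this pattern, absolute links such as `https://www.reuters.com/article/x` are not followed, which is consistent with the Reuters site being configured with `absoluteUrl=False`.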

```python
import re

import requests
from bs4 import BeautifulSoup


class Website:
    def __init__(self, name, url, targetPattern, absoluteUrl, titleTag, bodyTag):
        self.name = name
        self.url = url
        self.targetPattern = targetPattern
        self.absoluteUrl = absoluteUrl
        self.titleTag = titleTag
        self.bodyTag = bodyTag


class Content:
    def __init__(self, url, title, body):
        self.url = url
        self.title = title
        self.body = body

    def print(self):
        print('URL: {}'.format(self.url))
        print('TITLE: {}'.format(self.title))
        print('BODY:\n{}'.format(self.body))


class Crawler:
    def __init__(self, site):
        self.site = site
        self.visited = set()  # hrefs already followed, so no page is parsed twice

    def getPage(self, url):
        try:
            req = requests.get(url)
        except requests.exceptions.RequestException:
            return None
        return BeautifulSoup(req.text, 'html.parser')

    def safeGet(self, pageObj, selector):
        selectedElems = pageObj.select(selector)
        if selectedElems is not None and len(selectedElems) > 0:
            return '\n'.join([elem.get_text() for elem in selectedElems])
        return ''

    def parse(self, url):
        bs = self.getPage(url)
        if bs is not None:
            title = self.safeGet(bs, self.site.titleTag)
            body = self.safeGet(bs, self.site.bodyTag)
            if title != '' and body != '':
                content = Content(url, title, body)
                content.print()

    def crawl(self):
        """
        Get pages from website home page
        """
        bs = self.getPage(self.site.url)
        if bs is None:
            return  # home page could not be fetched
        # Every <a> whose href matches the site's URL pattern
        targetPages = bs.find_all('a', href=re.compile(self.site.targetPattern))
        for targetPage in targetPages:
            targetPage = targetPage.attrs['href']
            if targetPage not in self.visited:
                self.visited.add(targetPage)
                if not self.site.absoluteUrl:
                    targetPage = '{}{}'.format(self.site.url, targetPage)
                self.parse(targetPage)


reuters = Website('Reuters', 'https://www.reuters.com', '^(/article/)',
                  False, 'h1', 'div.StandardArticleBody_body_1gnLA')
crawler = Crawler(reuters)
crawler.crawl()
```

3) Crawling multiple page types

A page's type can be identified:

By its URL

By whether certain fields are present

By whether a specific tag that identifies the page is present
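A minimal sketch that tries the three checks in order, assuming BeautifulSoup. The `/product/` URL pattern, the `span.price` field and the `<article>` tag are hypothetical rules chosen for illustration, and `pageType` is a hypothetical helper; a real site needs its own rules.

```python
import re

from bs4 import BeautifulSoup


def pageType(url, bs):
    # 1) By URL pattern (hypothetical rule)
    if re.search(r'/product/', url):
        return 'product'
    # 2) By whether a specific field is present, e.g. a price (hypothetical rule)
    if bs.select_one('span.price') is not None:
        return 'product'
    # 3) By a tag that identifies the page type (hypothetical rule)
    if bs.find('article') is not None:
        return 'article'
    return 'unknown'


page = BeautifulSoup('<article><h1>Hi</h1></article>', 'html.parser')
print(pageType('https://example.com/news/1', page))
```

Checking the URL first is cheapest because it needs no parsing; the field and tag checks only run when the URL is inconclusive.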