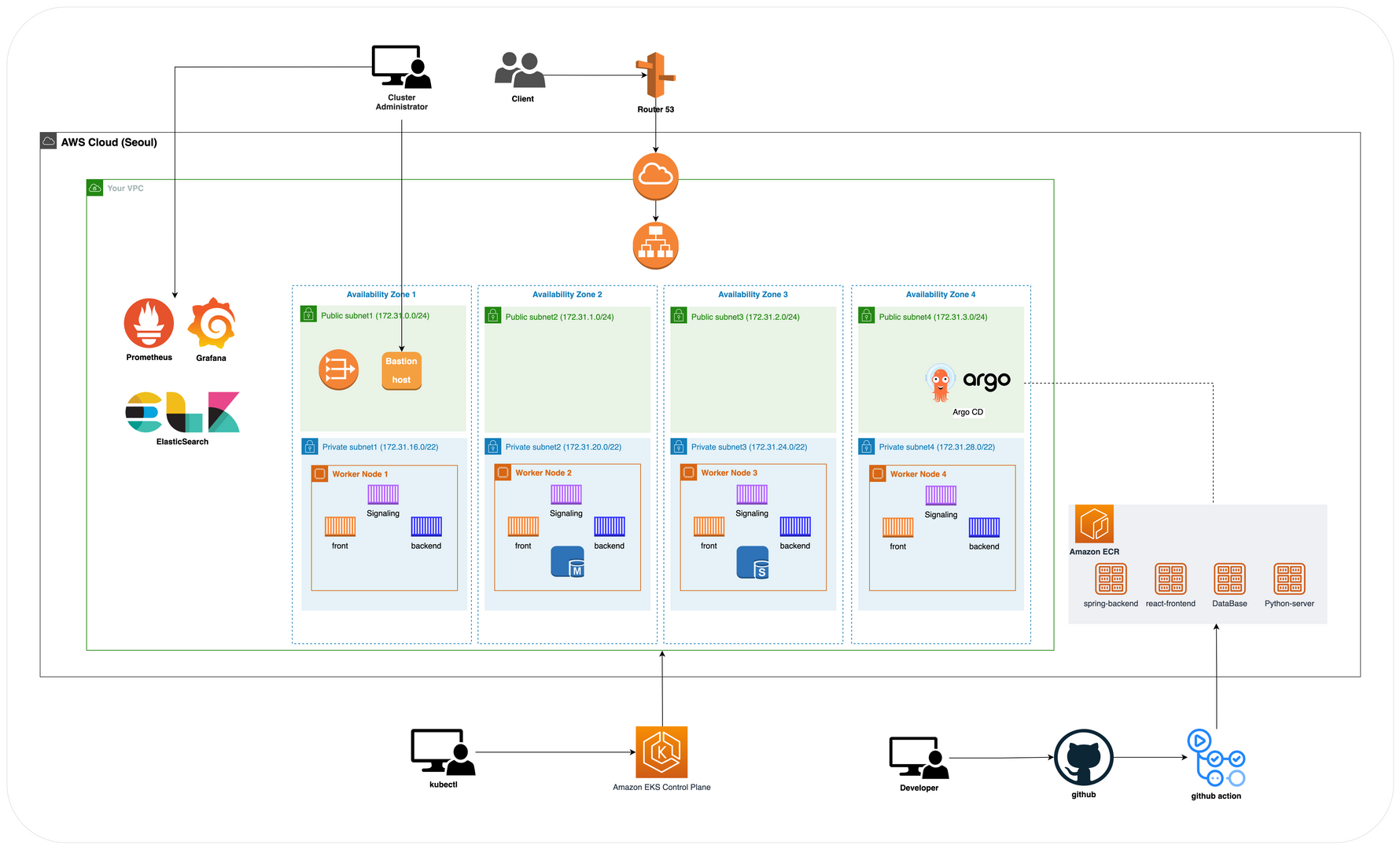

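Last time, we built an EKS cluster using kubectl commands.

That setup created three public subnets, but it was only for testing. Now we need to set up EKS according to the architecture our team designed: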

- Region : ap-northeast-2 (서울)

- AZ

ap-northeast-2a

ap-northeast-2b

ap-northeast-2c

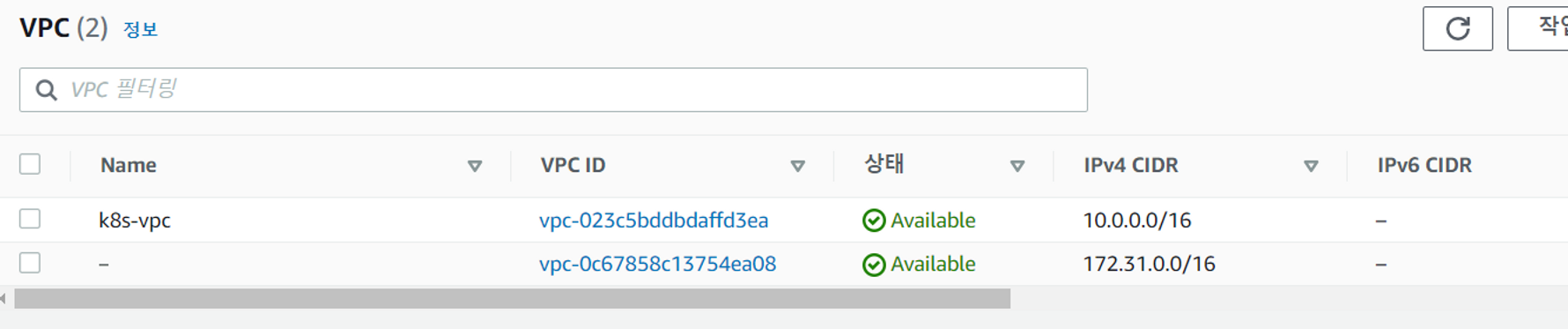

ap-northeast-2d- VPC CIDR 대역

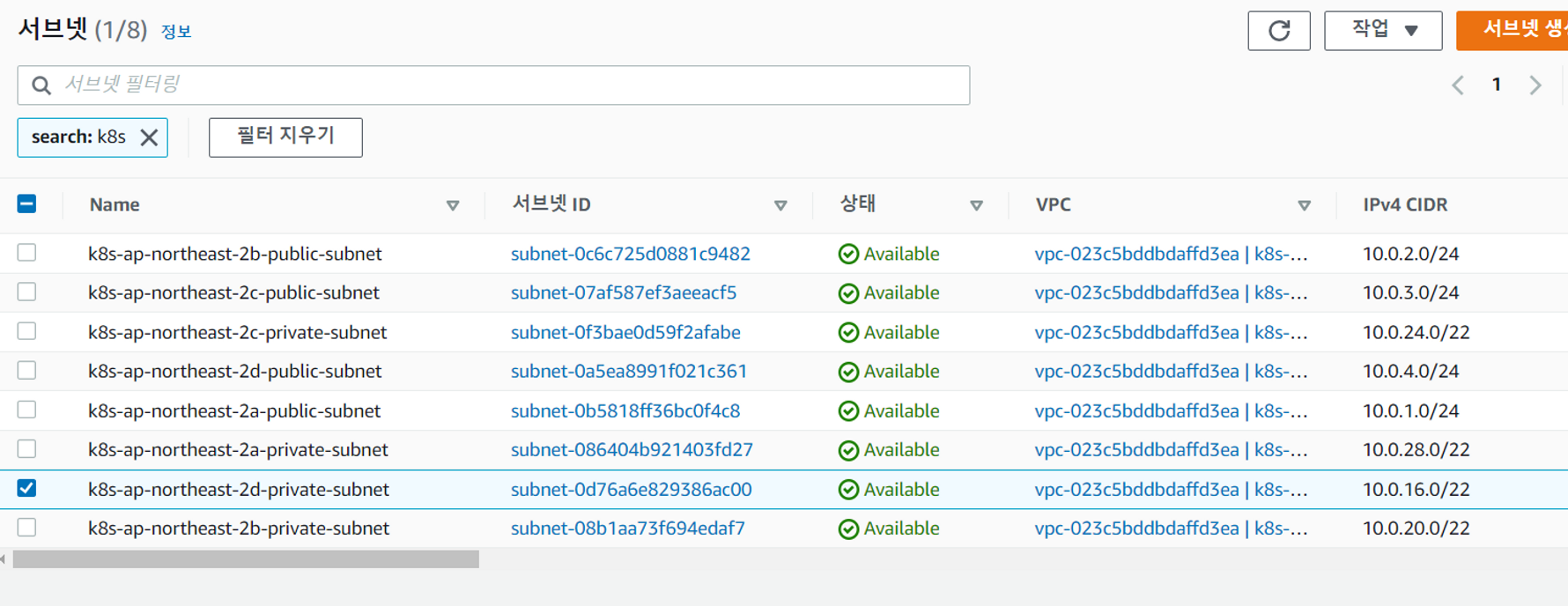

10.0.0.0/16/16- Worker Public Subnet CIDR 대역 - 각 가용영역에 하나씩 배치

10.0.1.0/24

10.0.2.0/24

10.0.3.0/24

10.0.4.0/24- Worker Private Subnet CIDR 대역 - 각 가용영역에 하나씩 배치

10.0.16.0/22

10.0.20.0/22

10.0.24.0/22

10.0.28.0/22
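To double-check the address plan above, the following Bash sketch converts each CIDR block to an integer range, confirms that every subnet lies inside the VPC, checks that no two subnets overlap, and prints the usable address count per subnet. It assumes AWS's rule of reserving five addresses in every subnet; `ip_to_int` and `cidr_range` are hypothetical helpers, not part of any AWS tool.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check the subnet plan (assumes bash 4+ and AWS's
# rule that 5 addresses are reserved in every subnet).

# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print the first and last address (as integers) covered by a CIDR block.
cidr_range() {
  local ip=${1%/*} prefix=${1#*/} base
  base=$(ip_to_int "$ip")
  echo "$base $(( base + (1 << (32 - prefix)) - 1 ))"
}

VPC_CIDR="10.0.0.0/16"
# Public /24 blocks first, then private /22 blocks (one of each per AZ).
SUBNETS=(10.0.1.0/24 10.0.2.0/24 10.0.3.0/24 10.0.4.0/24
         10.0.16.0/22 10.0.20.0/22 10.0.24.0/22 10.0.28.0/22)

read -r vpc_start vpc_end <<< "$(cidr_range "$VPC_CIDR")"

for ((i = 0; i < ${#SUBNETS[@]}; i++)); do
  read -r s1 e1 <<< "$(cidr_range "${SUBNETS[i]}")"
  # Every subnet must sit inside the VPC block.
  if (( s1 < vpc_start || e1 > vpc_end )); then
    echo "outside VPC: ${SUBNETS[i]}"
  fi
  # Two ranges overlap when each one starts before the other ends.
  for ((j = i + 1; j < ${#SUBNETS[@]}; j++)); do
    read -r s2 e2 <<< "$(cidr_range "${SUBNETS[j]}")"
    if (( s1 <= e2 && s2 <= e1 )); then
      echo "overlap: ${SUBNETS[i]} ${SUBNETS[j]}"
    fi
  done
  # AWS reserves 5 addresses in every subnet.
  echo "${SUBNETS[i]}: $(( e1 - s1 + 1 - 5 )) usable IPs"
done
```

For this plan the script reports no overlaps, 251 usable IPs for each /24 public subnet and 1019 for each /22 private subnet, which is why the worker nodes go into the larger private blocks.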

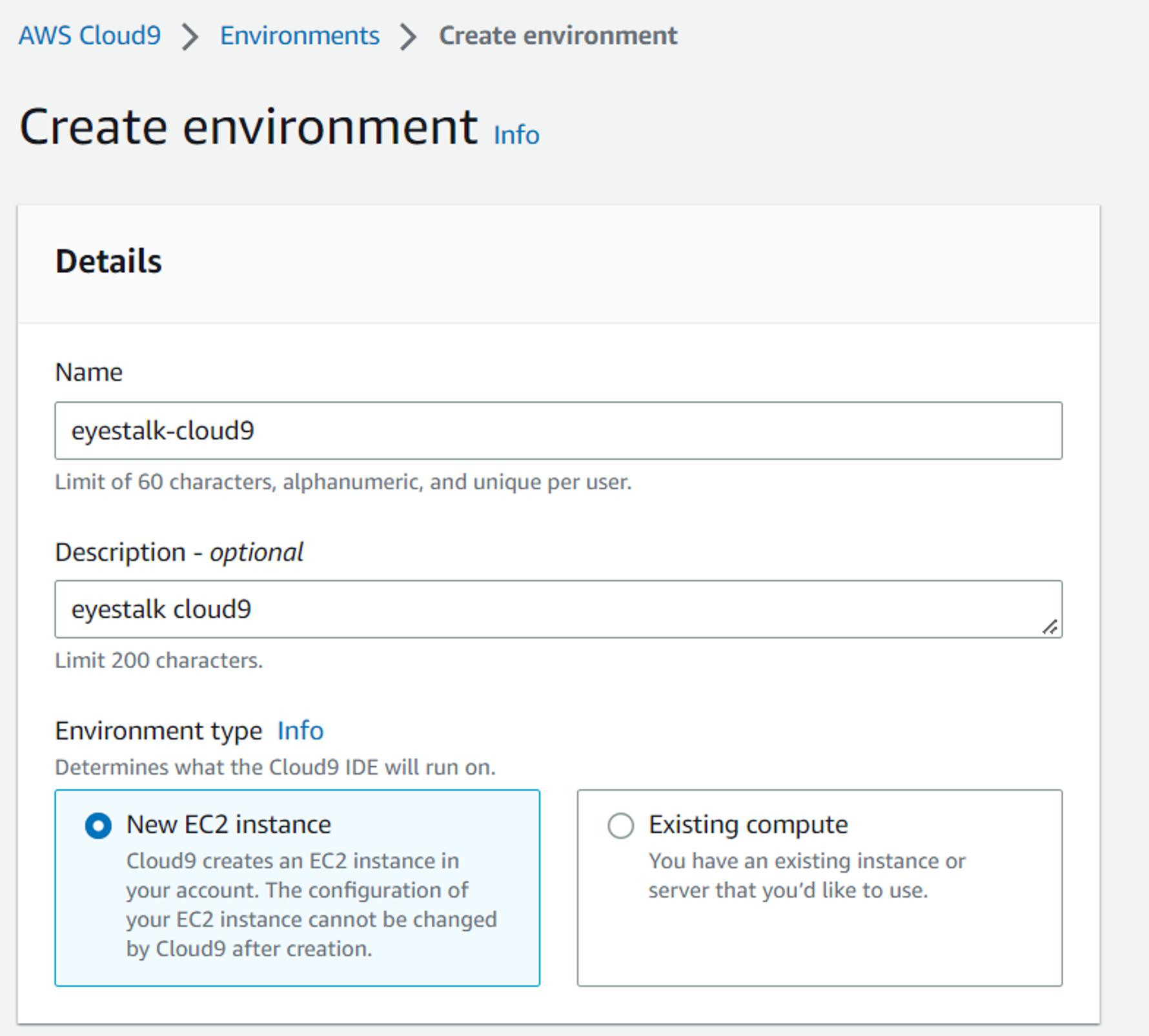

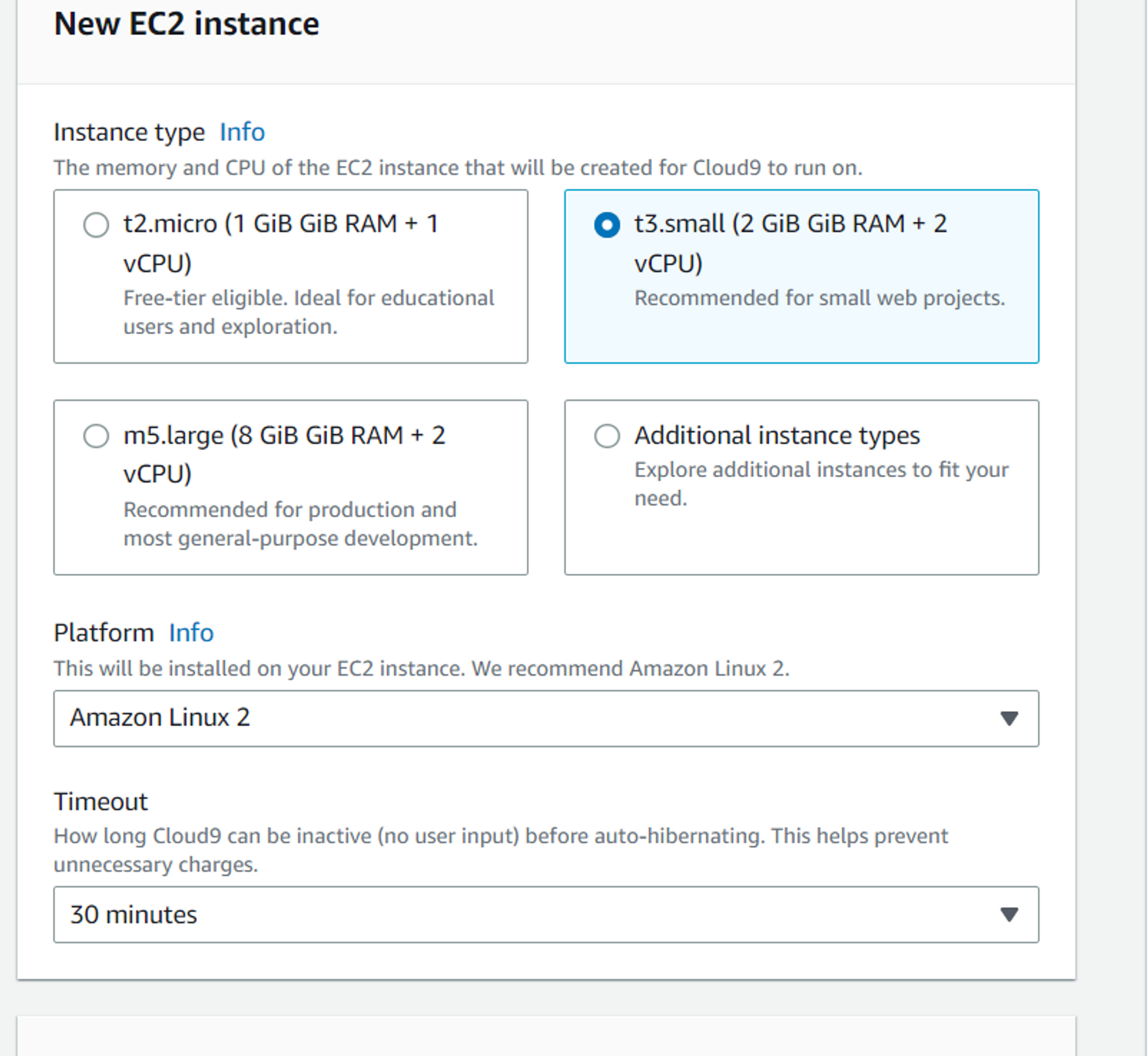

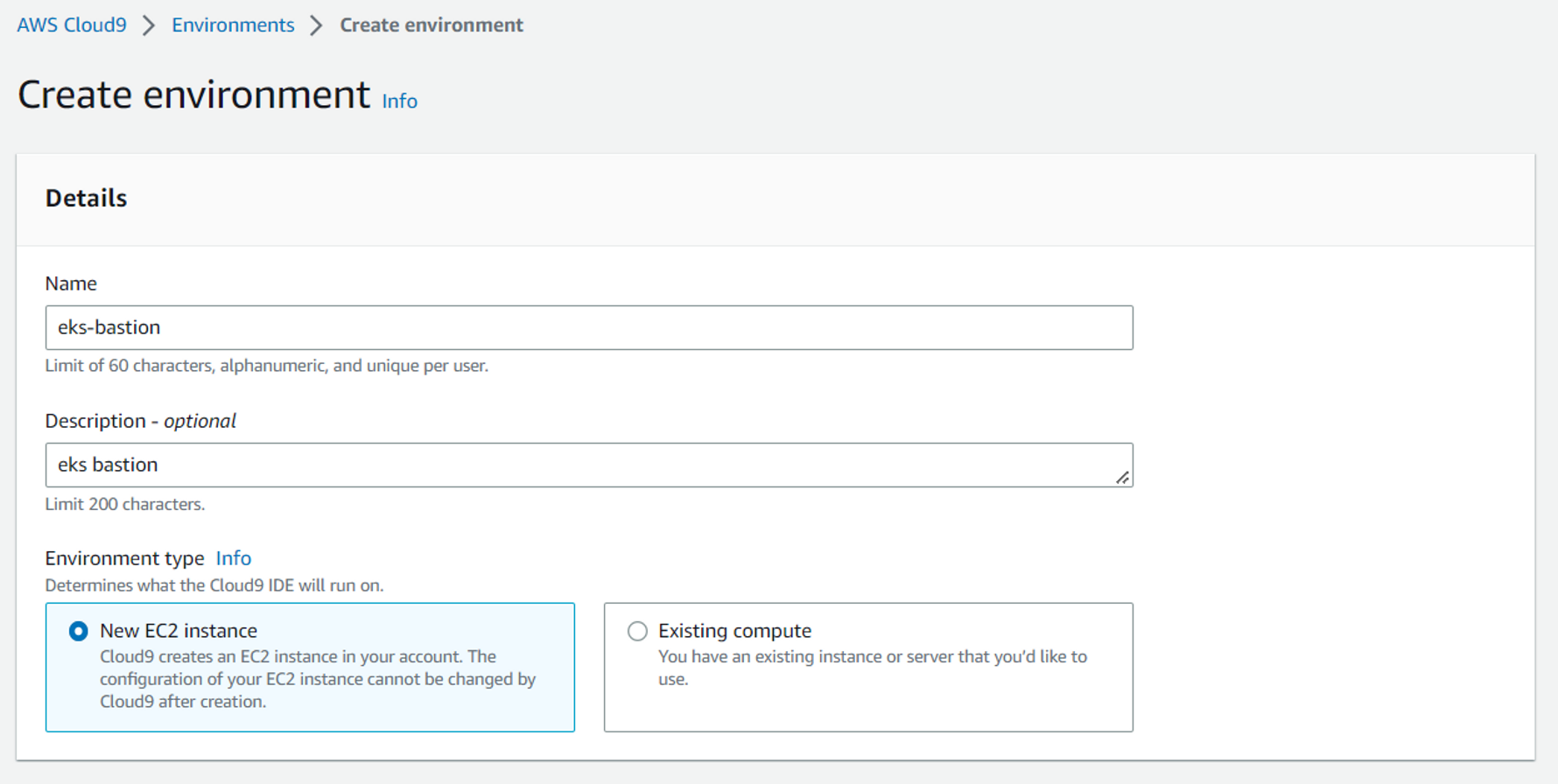

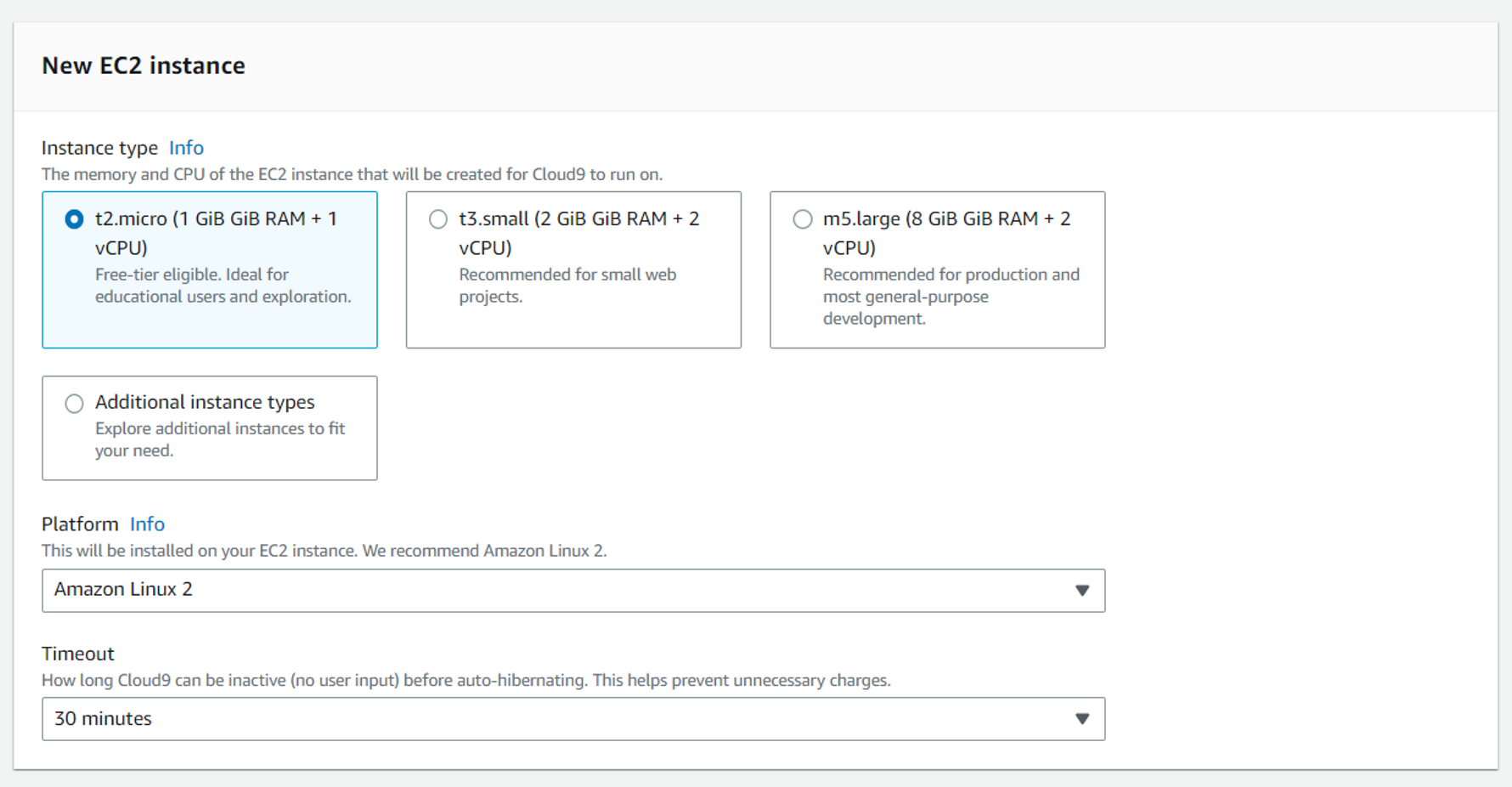

1. Cloud9 세팅

- cloud9 environment create

- cloud9은 최소 t3.small로 세팅해야 한다. 과금 주의

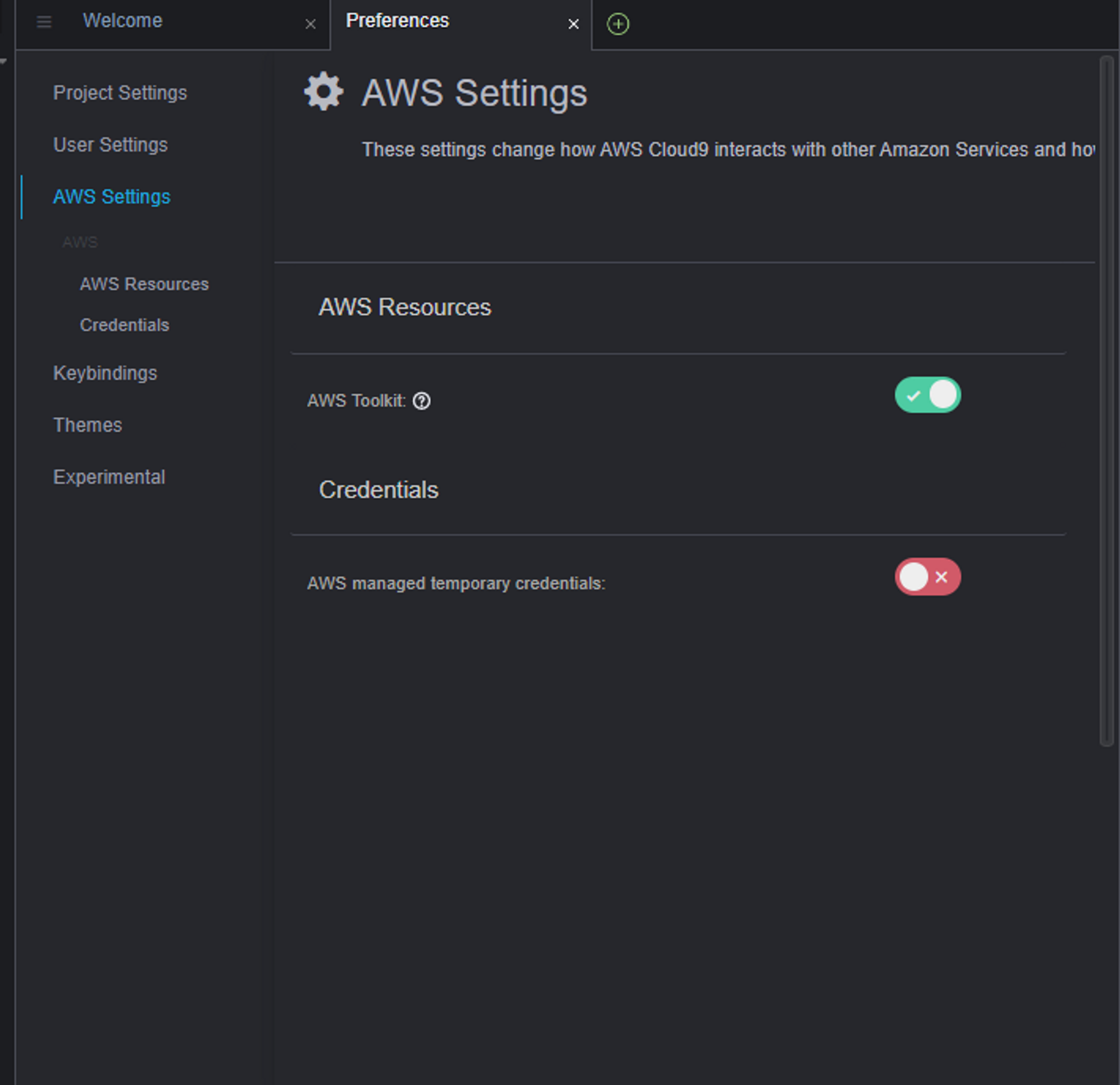

- cloud9 접속후 AWS setting 변경

2. Download the Tools

Connect to Cloud9, then download and configure the basic tools:

- Update the AWS CLI

- Run `aws configure`

- Download eksctl

- Download kubectl

- Download jq for JSON parsing

```
rapa0005:~/environment $ curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
rapa0005:~/environment $ unzip awscliv2.zip
rapa0005:~/environment $ sudo ./aws/install --update
You can now run: /usr/local/bin/aws --version
rapa0005:~/environment $ aws --version
aws-cli/2.9.1 Python/3.9.11 Linux/4.14.296-222.539.amzn2.x86_64 exe/x86_64.amzn.2 prompt/off
```

Enter the credentials:

```
rapa0005:~/environment $ aws configure
AWS Access Key ID [None]: <YOUR_ACCESS_KEY_ID>
AWS Secret Access Key [None]: <YOUR_SECRET_ACCESS_KEY>
Default region name [None]: ap-northeast-2
Default output format [None]: json
```

Also set the credentials as environment variables (needed to manage the cluster):

```
rapa0005:~/environment $ export AWS_DEFAULT_REGION=ap-northeast-2
rapa0005:~/environment $ export AWS_ACCESS_KEY_ID=<YOUR_ACCESS_KEY_ID>
rapa0005:~/environment $ export AWS_SECRET_ACCESS_KEY=<YOUR_SECRET_ACCESS_KEY>
```

Download eksctl

```
rapa0005:~/environment $ curl --silent --location "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp
rapa0005:~/environment $ sudo mv /tmp/eksctl /usr/local/bin
```

Download kubectl

```
rapa0005:~/environment $ curl -LO https://dl.k8s.io/release/v1.23.9/bin/linux/amd64/kubectl
```
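Before moving on, a small Bash sketch like the following can confirm that the CLI tools are on the `PATH` and that the credential variables exported above are non-empty. `require_cmds` and `require_env` are hypothetical helpers. At this point `kubectl` has only been downloaded into the current directory with `curl -LO` (it is made executable and put on the `PATH` in the later kubectl installation step), and jq is also installed later, so both are expected to be reported as missing here.

```bash
#!/usr/bin/env bash
# Sketch: report missing CLI tools and unset environment variables.

# Print each command that is not found on PATH; return 1 if any are missing.
require_cmds() {
  local cmd missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" > /dev/null 2>&1; then
      echo "missing command: $cmd"
      missing=1
    fi
  done
  return "$missing"
}

# Print each variable name that is unset or empty; return 1 if any are.
require_env() {
  local name missing=0
  for name in "$@"; do
    if [ -z "${!name:-}" ]; then   # indirect expansion: value of $name
      echo "missing variable: $name"
      missing=1
    fi
  done
  return "$missing"
}

# Usage on Cloud9 (terraform is needed in the next step):
#   require_cmds aws eksctl kubectl jq terraform
#   require_env AWS_DEFAULT_REGION AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY
```

If both checks pass, `aws sts get-caller-identity` is a quick way to confirm that the keys are actually accepted. Because environment variables take precedence over the file written by `aws configure`, the exported keys are the ones that command will use.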

3. Terraform Network Setting

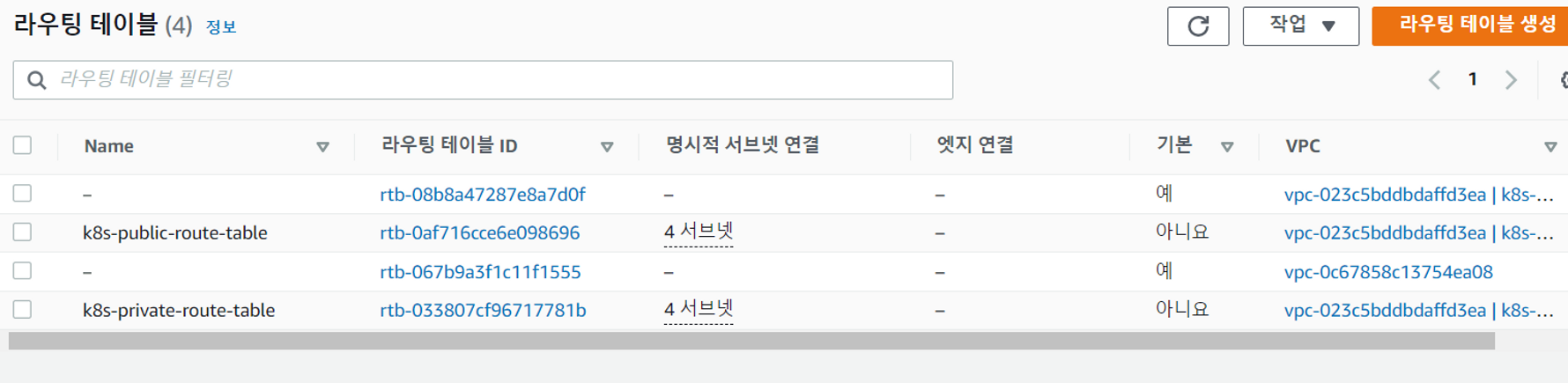

Create the VPC, AZs, and subnets with Terraform.

```
rapa0005:~/environment $ git clone https://github.com/leonli/terraform-vpc-networking
Cloning into 'terraform-vpc-networking'...
remote: Enumerating objects: 27, done.
remote: Counting objects: 100% (27/27), done.
remote: Compressing objects: 100% (19/19), done.
remote: Total 27 (delta 9), reused 23 (delta 5), pack-reused 0
Receiving objects: 100% (27/27), 15.49 KiB | 15.49 MiB/s, done.
Resolving deltas: 100% (9/9), done.
rapa0005:~/environment $ cd terraform-vpc-networking
```


```
rapa0005:~/environment/terraform-vpc-networking (master) $ terraform init
Initializing modules...
Initializing the backend...
Initializing provider plugins...
- Reusing previous version of hashicorp/aws from the dependency lock file
- Using previously-installed hashicorp/aws v4.40.0

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.
```

Write terraform.tfvars (VPC and subnet settings)

```
cat << EOF > terraform.tfvars
//AWS
region = "ap-northeast-2"
environment = "k8s"

/* module networking */
vpc_cidr = "10.0.0.0/16"
public_subnets_cidr = ["10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24", "10.0.4.0/24"] //List of Public subnet cidr range
private_subnets_cidr = ["10.0.16.0/22", "10.0.20.0/22", "10.0.24.0/22", "10.0.28.0/22"] //List of private subnet cidr range
EOF
rapa0005:~/environment/terraform-vpc-networking (master) $ terraform plan
```


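```
rapa0005:~/environment/terraform-vpc-networking (master) $ terraform apply
```

- Check the result

Error

Each AZ should get exactly one private and one public subnet, but with the configuration above the subnets end up in AZs (a, a, b, c). Delete the duplicated subnets, then create the subnets for AZ d manually.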
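To see how the subnets are actually spread across AZs (and to spot the a, a, b, c layout described above), the AWS CLI can list every subnet in the VPC together with its AZ. The sketch below wraps that call in a hypothetical `list_vpc_subnets` function and counts subnets per AZ with awk in a hypothetical `subnets_per_az` function. It assumes `VPC_ID` holds the VPC's ID and that the VPC contains only the eight subnets from `terraform.tfvars`.

```bash
#!/usr/bin/env bash
# Sketch: count how many subnets each AZ holds in a VPC.

# Print "AZ<TAB>SUBNET_ID<TAB>CIDR" for every subnet in the VPC given as $1.
list_vpc_subnets() {
  aws ec2 describe-subnets \
    --filters "Name=vpc-id,Values=$1" \
    --query 'Subnets[].[AvailabilityZone,SubnetId,CidrBlock]' \
    --output text
}

# Read lines whose first field is an AZ and print "AZ COUNT", sorted by AZ.
subnets_per_az() {
  awk '{ count[$1]++ } END { for (az in count) print az, count[az] }' | sort
}

# Usage (requires AWS credentials and VPC_ID):
#   list_vpc_subnets "$VPC_ID" | subnets_per_az
```

With the intended layout, each of ap-northeast-2a to ap-northeast-2d should report 2 (one public and one private subnet). An AZ reporting more than 2, or ap-northeast-2d missing from the list, points at the duplicated subnets that need to be removed.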

Install jq for JSON parsing

```
rapa0005:~/environment/terraform-vpc-networking (master) $ sudo yum install -y jq
Loaded plugins: extras_suggestions, langpacks, priorities, update-motd
amzn2-core | 3.7 kB 00:00:00
253 packages excluded due to repository priority protections
Resolving Dependencies
--> Running transaction check
---> Package jq.x86_64 0:1.5-1.amzn2.0.2 will be installed
--> Processing Dependency: libonig.so.2()(64bit) for package: jq-1.5-1.amzn2.0.2.x86_64
--> Running transaction check
---> Package oniguruma.x86_64 0:5.9.6-1.amzn2.0.4 will be installed
--> Finished Dependency Resolution

Dependencies Resolved

===================================================================================================
Package Arch Version Repository Size
===================================================================================================
Installing:
jq x86_64 1.5-1.amzn2.0.2 amzn2-core 154 k
Installing for dependencies:
oniguruma x86_64 5.9.6-1.amzn2.0.4 amzn2-core 127 k

Transaction Summary
===================================================================================================
Install 1 Package (+1 Dependent package)

Total download size: 281 k
Installed size: 890 k
Downloading packages:
(1/2): jq-1.5-1.amzn2.0.2.x86_64.rpm | 154 kB 00:00:00
(2/2): oniguruma-5.9.6-1.amzn2.0.4.x86_64.rpm | 127 kB 00:00:00
---------------------------------------------------------------------------------------------------
Total 2.4 MB/s | 281 kB 00:00:00
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
Installing : oniguruma-5.9.6-1.amzn2.0.4.x86_64 1/2
Installing : jq-1.5-1.amzn2.0.2.x86_64 2/2
Verifying : jq-1.5-1.amzn2.0.2.x86_64 1/2
Verifying : oniguruma-5.9.6-1.amzn2.0.4.x86_64 2/2

Installed:
jq.x86_64 0:1.5-1.amzn2.0.2

Dependency Installed:
oniguruma.x86_64 0:5.9.6-1.amzn2.0.4

Complete!
```
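With jq available, the next step reads the subnet IDs from `terraform output` by list position (`value[0]` to `value[3]`) and labels them A to D. The Error above shows that list position does not necessarily match the AZ, so a safer sketch is to ask EC2 which AZ each subnet is in and derive the A to D suffix from that. `export_by_az` and `load_subnet_ids` are hypothetical helpers; `load_subnet_ids` assumes the module outputs are named `private_subnets_id` and `public_subnets_id` (as in the commands that follow) and must run inside the Terraform directory with AWS credentials set.

```bash
#!/usr/bin/env bash
# Sketch: export <PREFIX>_<AZ letter>=<subnet id> based on each subnet's real AZ.

# Read "SUBNET_ID AZ" lines on stdin, e.g. "subnet-0abc ap-northeast-2d",
# and export PREFIX_D=subnet-0abc. If two subnets share an AZ, the later wins.
export_by_az() {
  local prefix=$1 id az letter
  while read -r id az; do
    [ -n "$id" ] || continue
    letter=${az: -1}                      # last character of the AZ name
    export "${prefix}_${letter^^}=${id}"  # upper-case it: a -> A
  done
}

# Look up the AZ of every subnet in a Terraform output and export the IDs.
# Uses process substitution (not a pipe) so the exports stay in this shell.
load_subnet_ids() {
  local kind ids
  for kind in private public; do
    ids=$(terraform output -json | jq -r ".${kind}_subnets_id.value[]")
    # $ids is intentionally unquoted so each ID becomes a separate argument.
    export_by_az "${kind^^}_SUBNETS_ID" < <(
      aws ec2 describe-subnets --subnet-ids $ids \
        --query 'Subnets[].[SubnetId,AvailabilityZone]' --output text)
  done
}

# Usage (inside terraform-vpc-networking, after terraform apply):
#   load_subnet_ids
#   echo "$PRIVATE_SUBNETS_ID_D $PUBLIC_SUBNETS_ID_D"
```

If two subnets still share an AZ, one of them overwrites the other and the variable for the missing AZ stays empty, which makes the (a, a, b, c) problem visible before eksctl runs.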

- Store the values in environment variables

```
rapa0005:~/environment/terraform-vpc-networking (master) $ export VPC_ID=$(terraform output -json | jq -r '.vpc_id.value')  # assumption: the module exposes a vpc_id output; VPC_ID is used by the check below and by eks-cluster.yaml
rapa0005:~/environment/terraform-vpc-networking (master) $ export PRIVATE_SUBNETS_ID_A=$(terraform output -json | jq -r '.private_subnets_id.value[0]')
rapa0005:~/environment/terraform-vpc-networking (master) $ export PRIVATE_SUBNETS_ID_B=$(terraform output -json | jq -r '.private_subnets_id.value[1]')
rapa0005:~/environment/terraform-vpc-networking (master) $ export PRIVATE_SUBNETS_ID_C=$(terraform output -json | jq -r '.private_subnets_id.value[2]')
rapa0005:~/environment/terraform-vpc-networking (master) $ export PRIVATE_SUBNETS_ID_D=$(terraform output -json | jq -r '.private_subnets_id.value[3]')
rapa0005:~/environment/terraform-vpc-networking (master) $ export PUBLIC_SUBNETS_ID_A=$(terraform output -json | jq -r '.public_subnets_id.value[0]')
rapa0005:~/environment/terraform-vpc-networking (master) $ export PUBLIC_SUBNETS_ID_B=$(terraform output -json | jq -r '.public_subnets_id.value[1]')
rapa0005:~/environment/terraform-vpc-networking (master) $ export PUBLIC_SUBNETS_ID_C=$(terraform output -json | jq -r '.public_subnets_id.value[2]')
rapa0005:~/environment/terraform-vpc-networking (master) $ export PUBLIC_SUBNETS_ID_D=$(terraform output -json | jq -r '.public_subnets_id.value[3]')
```

- Verify

```
rapa0005:~/environment/terraform-vpc-networking (master) $ echo "VPC_ID=$VPC_ID, \
> PRIVATE_SUBNETS_ID_A=$PRIVATE_SUBNETS_ID_A, \
> PRIVATE_SUBNETS_ID_B=$PRIVATE_SUBNETS_ID_B, \
> PRIVATE_SUBNETS_ID_C=$PRIVATE_SUBNETS_ID_C, \
> PRIVATE_SUBNETS_ID_D=$PRIVATE_SUBNETS_ID_D, \
> PUBLIC_SUBNETS_ID_A=$PUBLIC_SUBNETS_ID_A, \
> PUBLIC_SUBNETS_ID_B=$PUBLIC_SUBNETS_ID_B, \
> PUBLIC_SUBNETS_ID_C=$PUBLIC_SUBNETS_ID_C, \
> PUBLIC_SUBNETS_ID_D=$PUBLIC_SUBNETS_ID_D"
```

- Before running the `eksctl` command, generate a default SSH key in the Cloud9 environment

```
rapa0005:~/environment/terraform-vpc-networking (master) $ ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/home/ec2-user/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/ec2-user/.ssh/id_rsa.
Your public key has been saved in /home/ec2-user/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:9FyP4cqXXEzZ4yN055BCs/9xhapEXUXleKYcqVAyCEE ec2-user@ip-172-31-19-115.ap-northeast-2.compute.internal
```
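`ssh-keygen` prompts for a path and a passphrase, which were accepted with Enter above. To script the same step, a sketch that creates the key only when none exists yet, with an empty passphrase, could look like this. `ensure_ssh_key` is a hypothetical helper; its default path matches the `publicKeyPath` (`~/.ssh/id_rsa.pub`) used in eks-cluster.yaml.

```bash
#!/usr/bin/env bash
# Sketch: create an RSA key pair non-interactively unless one already exists.

ensure_ssh_key() {
  local key=${1:-$HOME/.ssh/id_rsa}
  if [ -f "$key" ]; then
    echo "key already exists: $key"
    return 0
  fi
  mkdir -p "$(dirname "$key")"
  chmod 700 "$(dirname "$key")"
  # -N "" sets an empty passphrase, -f sets the output file, -q silences output.
  ssh-keygen -t rsa -b 2048 -N "" -f "$key" -q
  echo "created: $key and $key.pub"
}

# Usage:
#   ensure_ssh_key            # same default path as the interactive run above
```

Skipping an existing key matters here because overwriting `~/.ssh/id_rsa` would lock out any node group that was already created with the old public key.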

4. Create the EKS Cluster Configuration File

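To provision the cluster inside this VPC, create an EKS configuration file named `eks-cluster.yaml` that passes in the corresponding parameters. The heredoc delimiter `EOF` is unquoted, so `${VPC_ID}` and the `${PRIVATE_SUBNETS_ID_*}` references are replaced with the values exported above at the moment the file is written; run it in the same shell session.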

cat << EOF > /home/ec2-user/environment/eks-cluster.yaml

---

apiVersion: eksctl.io/v1alpha5

kind: ClusterConfig

metadata:

name: web-host-on-eks

region: ap-northeast-2 # 서울로변경

version: "1.23" # 버전변경

privateCluster: # Allows configuring a fully-private cluster in which no node has outbound internet access, and private access to AWS services is enabled via VPC endpoints

enabled: true

additionalEndpointServices: # specifies additional endpoint services that must be enabled for private access. Valid entries are: "cloudformation", "autoscaling", "logs".

- cloudformation

- logs

- autoscaling

vpc:

id: "${VPC_ID}" # Specify the VPC_ID to the eksctl command

subnets: # Creating the EKS master nodes to a completely private environment

private:

private-ap-northeast-2a:

id: "${PRIVATE_SUBNETS_ID_A}"

private-ap-northeast-2b:

id: "${PRIVATE_SUBNETS_ID_B}"

private-ap-northeast-2c:

id: "${PRIVATE_SUBNETS_ID_C}"

private-ap-northeast-2d:

id: "${PRIVATE_SUBNETS_ID_D}"

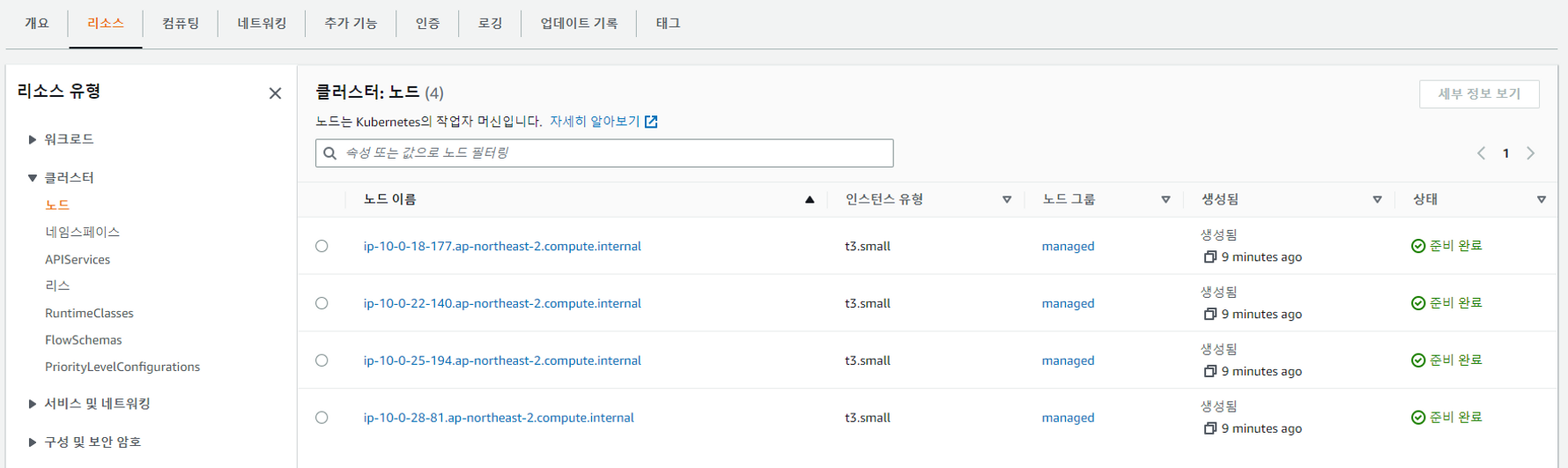

managedNodeGroups: # Create a managed node group in private subnets

- name: managed

labels:

role: worker

instanceType: t3.small

minSize: 4

desiredCapacity: 4

maxSize: 10

privateNetworking: true

volumeSize: 50

volumeType: gp2

volumeEncrypted: true

iam:

withAddonPolicies:

autoScaler: true # enables IAM policy for cluster-autoscaler

albIngress: true

cloudWatch: true

# securityGroups:

# attachIDs: ["sg-1", "sg-2"]

ssh:

allow: true

publicKeyPath: ~/.ssh/id_rsa.pub

# new feature for restricting SSH access to certain AWS security group IDs

subnets:

- private-ap-northeast-2a

- private-ap-northeast-2b

- private-ap-northeast-2c

- private-ap-northeast-2d

cloudWatch:

clusterLogging:

# enable specific types of cluster control plane logs

enableTypes: ["all"]

# all supported types: "api", "audit", "authenticator", "controllerManager", "scheduler"

# supported special values: "*" and "all"

addons: # explore more on doc about EKS addons: https://docs.aws.amazon.com/eks/latest/userguide/eks-add-ons.html

- name: vpc-cni # no version is specified so it deploys the default version

attachPolicyARNs:

- arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy

- name: coredns

version: latest # auto discovers the latest available

- name: kube-proxy

version: latest

iam:

withOIDC: true # Enable OIDC identity provider for plugins, explore more on doc: https://docs.aws.amazon.com/eks/latest/userguide/authenticate-oidc-identity-provider.html

serviceAccounts: # create k8s service accounts(https://kubernetes.io/docs/reference/access-authn-authz/service-accounts-admin/) and associate with IAM policy, see more on: https://docs.aws.amazon.com/eks/latest/userguide/iam-roles-for-service-accounts.html

- metadata:

name: aws-load-balancer-controller # create the needed service account for aws-load-balancer-controller while provisioning ALB/ELB by k8s ingress api

namespace: kube-system

wellKnownPolicies:

awsLoadBalancerController: true

- metadata:

name: cluster-autoscaler # create the CA needed service account and its IAM policy

namespace: kube-system

labels: {aws-usage: "cluster-ops"}

wellKnownPolicies:

autoScaler: true

EOF- kubectl 설치

```
rapa0005:~/environment $ curl -o kubectl https://s3.us-west-2.amazonaws.com/amazon-eks/1.23.7/2022-06-29/bin/linux/amd64/kubectl
rapa0005:~/environment $ openssl sha1 -sha256 kubectl
SHA256(kubectl)= bb262ba21f5c0f67100140d49e7d09712b4c711498782e652628a692a0582c3e
rapa0005:~/environment $ chmod +x ./kubectl
rapa0005:~/environment $ mkdir -p $HOME/bin && cp ./kubectl $HOME/bin/kubectl && export PATH=$PATH:$HOME/bin
rapa0005:~/environment $ kubectl version --short --client
Client Version: v1.23.7-eks-4721010
```

Create the cluster

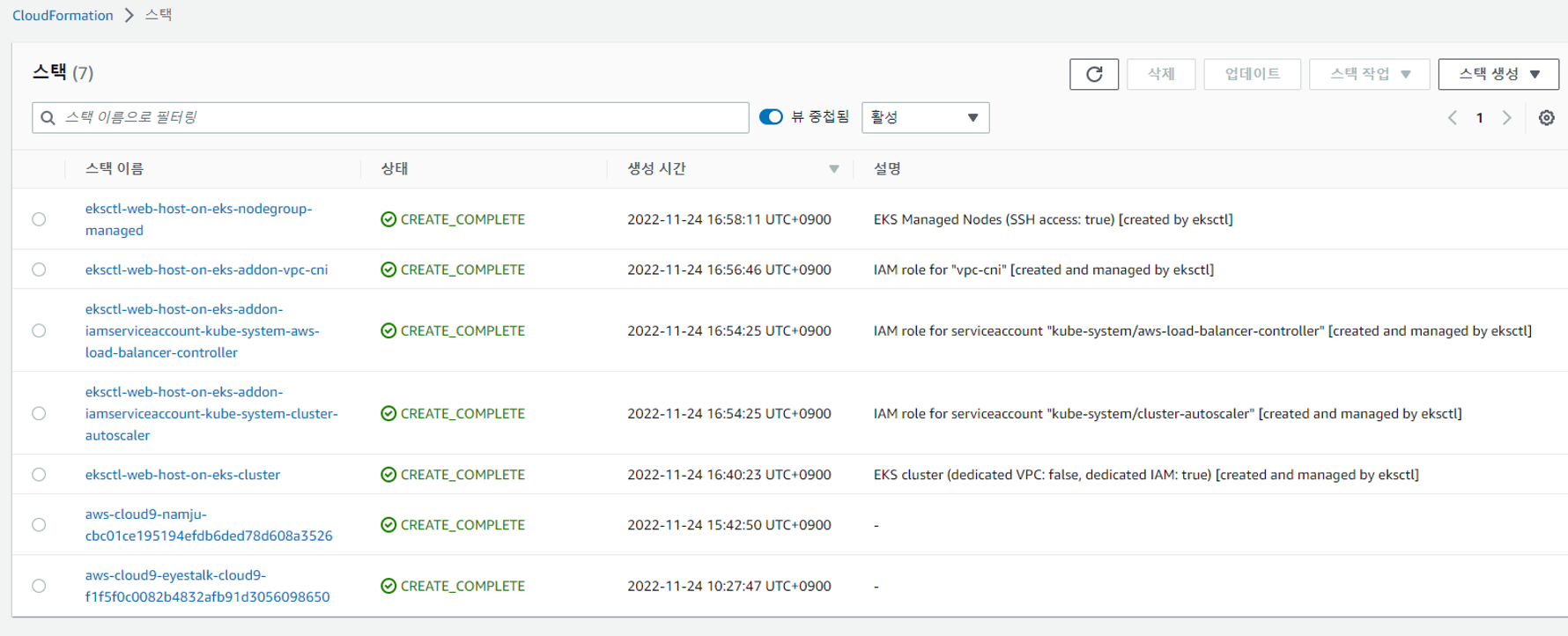

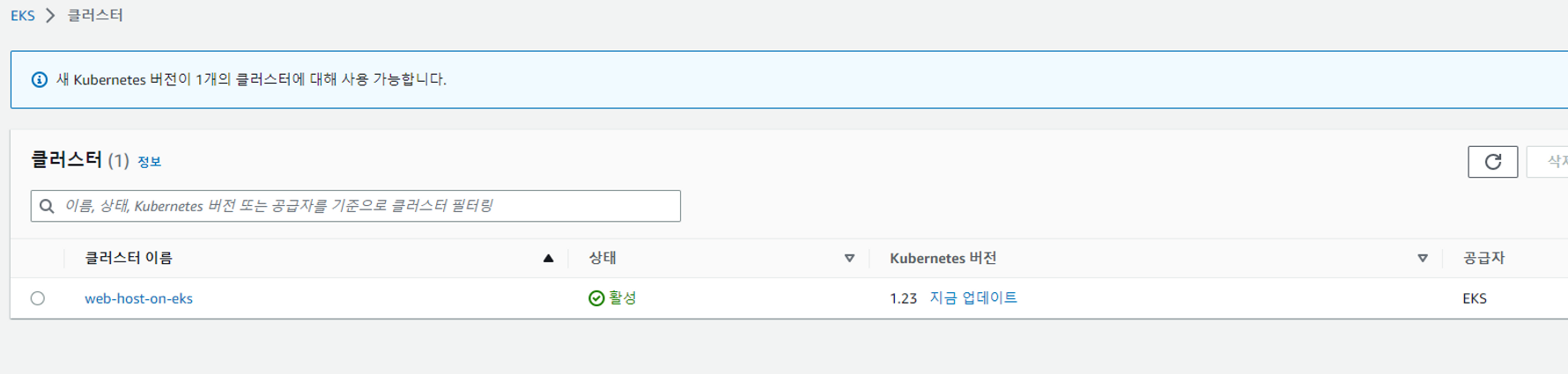
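Because the heredoc that writes eks-cluster.yaml substitutes `${VPC_ID}` and the `PRIVATE_SUBNETS_ID_*` variables at write time, any variable that was empty when the file was generated silently becomes `id: ""`. A quick grep before running eksctl catches that; `check_empty_ids` below is a hypothetical helper, sketched under the assumption that the file sits at the path used above.

```bash
#!/usr/bin/env bash
# Sketch: fail if the generated eksctl config contains empty id fields.

check_empty_ids() {
  local file=${1:-/home/ec2-user/environment/eks-cluster.yaml}
  # Matches lines such as `id: ""` (any indentation) left by an empty variable.
  if grep -nE '^[[:space:]]*id:[[:space:]]*""' "$file"; then
    echo "empty id found in $file; re-export the variables and regenerate it" >&2
    return 1
  fi
  echo "no empty ids in $file"
}

# Usage:
#   check_empty_ids && eksctl create cluster -f /home/ec2-user/environment/eks-cluster.yaml
```

grep prints the offending line numbers, so it is easy to see whether the VPC ID or one of the four subnet IDs is the one that is missing.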

```
rapa0005:~/environment $ eksctl create cluster -f /home/ec2-user/environment/eks-cluster.yaml
```

The cluster has been created.
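The configuration sets `desiredCapacity: 4`, so once creation finishes, four worker nodes should eventually report `Ready`. The sketch below counts them from `kubectl get nodes --no-headers` output, where the second column is the node STATUS; `count_ready_nodes` is a hypothetical helper. Note that eksctl's fully-private mode (`privateCluster.enabled: true`) should leave the API endpoint reachable only from inside the VPC, so kubectl presumably has to run from a host that can reach it, such as the bastion set up below.

```bash
#!/usr/bin/env bash
# Sketch: count nodes whose STATUS column is exactly "Ready".

count_ready_nodes() {
  # Reads `kubectl get nodes --no-headers` output on stdin; column 2 is STATUS.
  # "NotReady" and "Ready,SchedulingDisabled" are deliberately not counted.
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage (from a host that can reach the cluster endpoint):
#   ready=$(kubectl get nodes --no-headers | count_ready_nodes)
#   [ "$ready" -ge 4 ] && echo "all 4 worker nodes are Ready"
# kubectl can also block until every node is Ready:
#   kubectl wait --for=condition=Ready nodes --all --timeout=600s
```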

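Create the Bastion

The bastion is the access point through which developers and other employees in the company connect to the cluster. On it, write the cluster's kubeconfig to `~/.kube/config`: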

```
mkdir ~/.kube
cat > ~/.kube/config << EOF
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUMvakNDQWVhZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeU1URXlOREEzTkRZeE1sb1hEVE15TVRFeU1UQTNORFl4TWxvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTGYzCmFhRGwyZmFXSWIrdG0xdnpaVkZEQ0M3Nm5rb013Z2VPV1pGc0RJOVFJOXMxMFhISEgwOU90N3pOd1pzTGVXanQKTkRjS1JzZDdXOGJZU1ZOSGJRbk15RzJQYnRHWkM4RThEcE1ibC9qamRCQzdHbm9xV2RoMCsvQ2c5dnFWaVJhYQp4enJQdUE0TVptc3JqQTRmSlBvVk13WXNhcDhnZlNacUhRR2hnajdVaGtEQSt1WFA3cTdxTW9CdnZQZjZiYmRwCjFJczRzTUxkc3dXeWFzYzREdFNiVjJLNDBBZ0JITVByUXFSZktTQjJtMldWeFRuOW11cFQ2cUxzcXUvSFJYZXcKNitvUTFvYlJ2blVXaWMybTFxVE10dkFpRnlxMFpEOVlUNC9OTmVNQytRWVFZZHBoaVUzd3FLRXUvQjhSV3JJaQpCK3NKNHY5b3dtV051Y3I4WnFzQ0F3RUFBYU5aTUZjd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZDR3FOTXVKK2VNclJVUCswaG9FMHJVKzVrNUxNQlVHQTFVZEVRUU8KTUF5Q0NtdDFZbVZ5Ym1WMFpYTXdEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBRDFyWGcvYnE3c3l1ek9iMTNBbApvcGEzT1RjZ2JkZThDa0hLT2phNDBubDJqeldlL1plVjBpb1dUb0ZML1o4Y09XaGdSa3lPMm03dWlqWlVVTzMvCnRvTThPNEpreHNnRjZOL1orTVU1UnFzc1J0cFZDUndiYmkrVm1GdytDdTN6dHJva0NTcmlsUnIxRysrSEsxZTYKbms4NVRVTm1pMkdhOENTYjNmaHVnWXBNSzFOVWtocDBKc0VBblVTQVIzdXBkVVZGb3dHeEdFL3JXWXlwNjZweQpld2lNQkpaQ0hiQ1lNN3JUQjJGem9ZQTgyZTdPRldsc0IrTEtxcDNNcVk5VUJscTlVZDFYK3psTGtuVUFNTlZuClNTOXROVWVxTy9taDJLVEx5MnBXUHJ5MFVHb0FKN3pDTTluTlZFUzlaTjJ2bndCMWg5cXdHOTFJSVpja2N4dVoKWEJRPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==
    server: https://5110F02873554BAE215A6D160750B814.gr7.ap-northeast-2.eks.amazonaws.com
  name: web-host-on-eks.ap-northeast-2.eksctl.io
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUMvakNDQWVhZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeU1URXlOREEzTkRZeE1sb1hEVE15TVRFeU1UQTNORFl4TWxvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTGYzCmFhRGwyZmFXSWIrdG0xdnpaVkZEQ0M3Nm5rb013Z2VPV1pGc0RJOVFJOXMxMFhISEgwOU90N3pOd1pzTGVXanQKTkRjS1JzZDdXOGJZU1ZOSGJRbk15RzJQYnRHWkM4RThEcE1ibC9qamRCQzdHbm9xV2RoMCsvQ2c5dnFWaVJhYQp4enJQdUE0TVptc3JqQTRmSlBvVk13WXNhcDhnZlNacUhRR2hnajdVaGtEQSt1WFA3cTdxTW9CdnZQZjZiYmRwCjFJczRzTUxkc3dXeWFzYzREdFNiVjJLNDBBZ0JITVByUXFSZktTQjJtMldWeFRuOW11cFQ2cUxzcXUvSFJYZXcKNitvUTFvYlJ2blVXaWMybTFxVE10dkFpRnlxMFpEOVlUNC9OTmVNQytRWVFZZHBoaVUzd3FLRXUvQjhSV3JJaQpCK3NKNHY5b3dtV051Y3I4WnFzQ0F3RUFBYU5aTUZjd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZDR3FOTXVKK2VNclJVUCswaG9FMHJVKzVrNUxNQlVHQTFVZEVRUU8KTUF5Q0NtdDFZbVZ5Ym1WMFpYTXdEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBRDFyWGcvYnE3c3l1ek9iMTNBbApvcGEzT1RjZ2JkZThDa0hLT2phNDBubDJqeldlL1plVjBpb1dUb0ZML1o4Y09XaGdSa3lPMm03dWlqWlVVTzMvCnRvTThPNEpreHNnRjZOL1orTVU1UnFzc1J0cFZDUndiYmkrVm1GdytDdTN6dHJva0NTcmlsUnIxRysrSEsxZTYKbms4NVRVTm1pMkdhOENTYjNmaHVnWXBNSzFOVWtocDBKc0VBblVTQVIzdXBkVVZGb3dHeEdFL3JXWXlwNjZweQpld2lNQkpaQ0hiQ1lNN3JUQjJGem9ZQTgyZTdPRldsc0IrTEtxcDNNcVk5VUJscTlVZDFYK3psTGtuVUFNTlZuClNTOXROVWVxTy9taDJLVEx5MnBXUHJ5MFVHb0FKN3pDTTluTlZFUzlaTjJ2bndCMWg5cXdHOTFJSVpja2N4dVoKWEJRPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==
    server: https://5110F02873554BAE215A6D160750B814.gr7.ap-northeast-2.eks.amazonaws.com
  name: arn:aws:eks:ap-northeast-2:675694928506:cluster/web-host-on-eks
contexts:
- context:
    cluster: web-host-on-eks.ap-northeast-2.eksctl.io
    user: rapa0005@web-host-on-eks.ap-northeast-2.eksctl.io
  name: rapa0005@web-host-on-eks.ap-northeast-2.eksctl.io
- context:
    cluster: arn:aws:eks:ap-northeast-2:675694928506:cluster/web-host-on-eks
    user: arn:aws:eks:ap-northeast-2:675694928506:cluster/web-host-on-eks
  name: arn:aws:eks:ap-northeast-2:675694928506:cluster/web-host-on-eks
current-context: arn:aws:eks:ap-northeast-2:675694928506:cluster/web-host-on-eks
kind: Config
preferences: {}
users:
- name: rapa0005@web-host-on-eks.ap-northeast-2.eksctl.io
  user:
    exec:
      apiVersion: client.authentication.k8s.io/v1beta1
      args:
      - eks
      - get-token
      - --cluster-name
      - web-host-on-eks
      - --region
      - ap-northeast-2
      command: aws
      env:
      - name: AWS_STS_REGIONAL_ENDPOINTS
        value: regional
      provideClusterInfo: false
- name: arn:aws:eks:ap-northeast-2:675694928506:cluster/web-host-on-eks
  user:
    exec:
      apiVersion: client.authentication.k8s.io/v1beta1
      args:
      - --region
      - ap-northeast-2
      - eks
      - get-token
      - --cluster-name
      - web-host-on-eks
      command: aws
EOF
chmod 600 ~/.kube/config
```
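Pasting the kubeconfig by hand works, but the AWS CLI can also write an equivalent entry on the bastion with `aws eks update-kubeconfig`, provided the bastion has AWS credentials and network access to the private endpoint. The sketch below wraps that command, restores the 600 permissions set above, and prints the active context. `setup_kubeconfig` and `check_mode_600` are hypothetical helpers; `stat -c` assumes GNU coreutils (as on Amazon Linux), and the path assumes `KUBECONFIG` is unset so the default `~/.kube/config` is used.

```bash
#!/usr/bin/env bash
# Sketch: generate ~/.kube/config with the AWS CLI instead of pasting it.

# Return 0 if the file's permission bits are exactly 600 (GNU stat assumed).
check_mode_600() {
  [ "$(stat -c '%a' "$1")" = "600" ]
}

setup_kubeconfig() {
  local cluster=${1:-web-host-on-eks} region=${2:-ap-northeast-2}
  local config=$HOME/.kube/config   # default target when KUBECONFIG is unset
  mkdir -p "$(dirname "$config")"
  aws eks update-kubeconfig --name "$cluster" --region "$region" || return 1
  chmod 600 "$config"
  check_mode_600 "$config" && kubectl config current-context
}

# Usage on the bastion:
#   setup_kubeconfig web-host-on-eks ap-northeast-2
#   kubectl get nodes
```

Generating the file this way avoids copying the certificate data and endpoint by hand, so it stays correct if the cluster is ever recreated with a new endpoint.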