Overview

- An activation function built by combining two others, Swish and GLU

- Adopted as the feed-forward activation in many recent LLMs such as LLaMA (Gemma uses the closely related GeGLU variant)

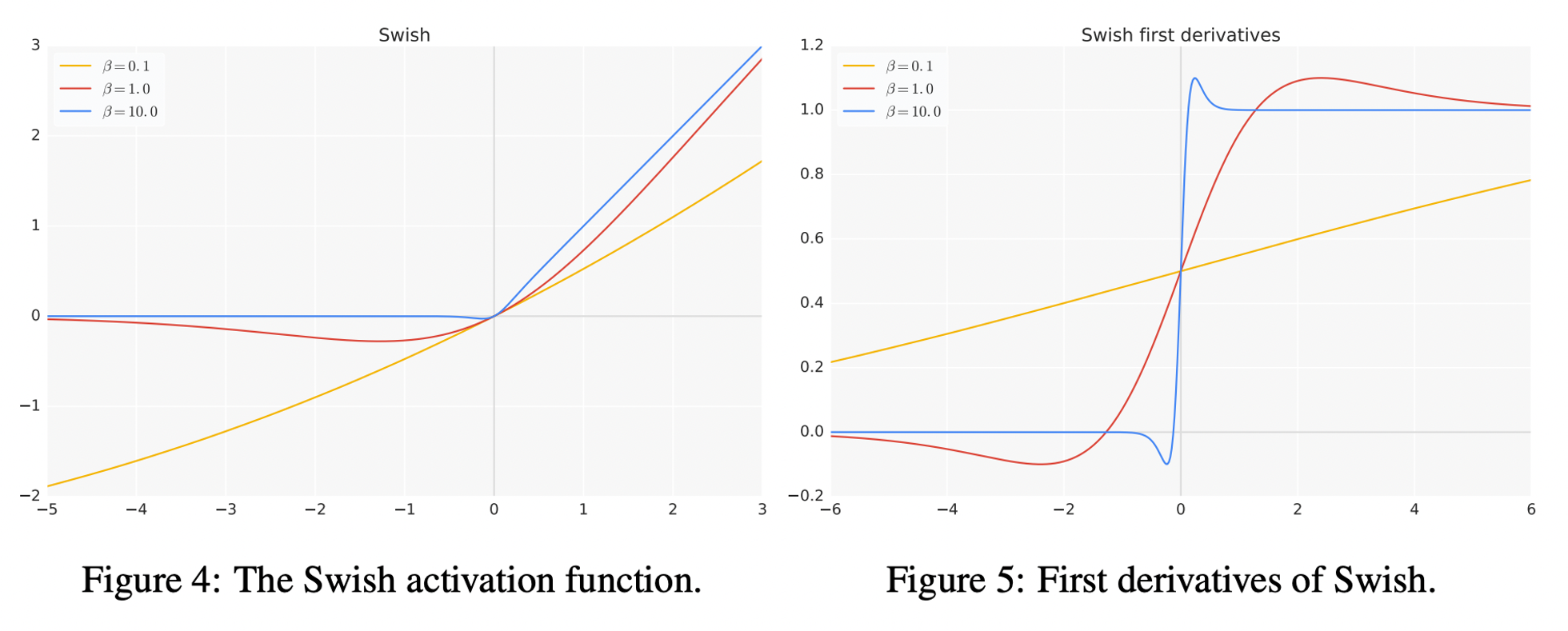

Swish

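- A sigmoid-based activation, Swish_β(x) = x · σ(βx). Where σ(βx) is close to 1 the output is close to x, as on ReLU's positive branch; β = 1 gives SiLU, and a larger β makes the whole function more ReLU-like

- β can be a trainable parameter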

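- Properties

  - Unbounded above for x ≥ 0: there is no upper bound on the non-negative side, so positive values of any size pass through and their information is preserved

  - Bounded below for x < 0: the output has a finite lower bound and converges to 0 as x → −∞. Small negative inputs keep a little signal and a nonzero gradient, which mitigates the dying-node issue, while large negative inputs are squashed toward 0, which acts as a form of regularization

  - Differentiability & smoothness: the derivative is continuous everywhere; training oscillates less, which can speed up convergence

  - Non-monotonicity: the function is not monotonically increasing, so the derivative is negative on part of the negative range

  - Self-gated: a gate controls how much of the input passes through (LSTM-like). The gate σ(βx) lies in (0, 1), but its value is determined by the input x itself → generalization & overfitting prevention

  - Computationally expensive: the sigmoid contains an exponential, so it costs more to compute than ReLU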
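The properties listed above can be checked numerically. The following is a minimal sketch using only the Python standard library; the helper names `sigmoid`, `swish`, and the grid resolution are illustrative choices, not part of any library.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def swish(x: float, beta: float = 1.0) -> float:
    """Swish_beta(x) = x * sigmoid(beta * x); beta = 1 gives SiLU."""
    return x * sigmoid(beta * x)


# Non-monotonic: the output dips below 0 and then rises back toward 0
# as x becomes more negative.
print(swish(-1.0), swish(-5.0))

# Bounded below: scan a grid on [-10, 0] for the smallest output (beta = 1).
grid = [i / 1000.0 for i in range(-10000, 1)]
x_min = min(grid, key=swish)
print(x_min, swish(x_min))

# A large beta approaches ReLU; beta = 0 gives the linear function x / 2.
print(swish(2.0, beta=50.0), swish(-2.0, beta=50.0), swish(2.0, beta=0.0))
```

With β = 1 the scan should locate the minimum near x ≈ −1.28, where the output is roughly −0.28; everything further to the left is pulled back toward 0.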

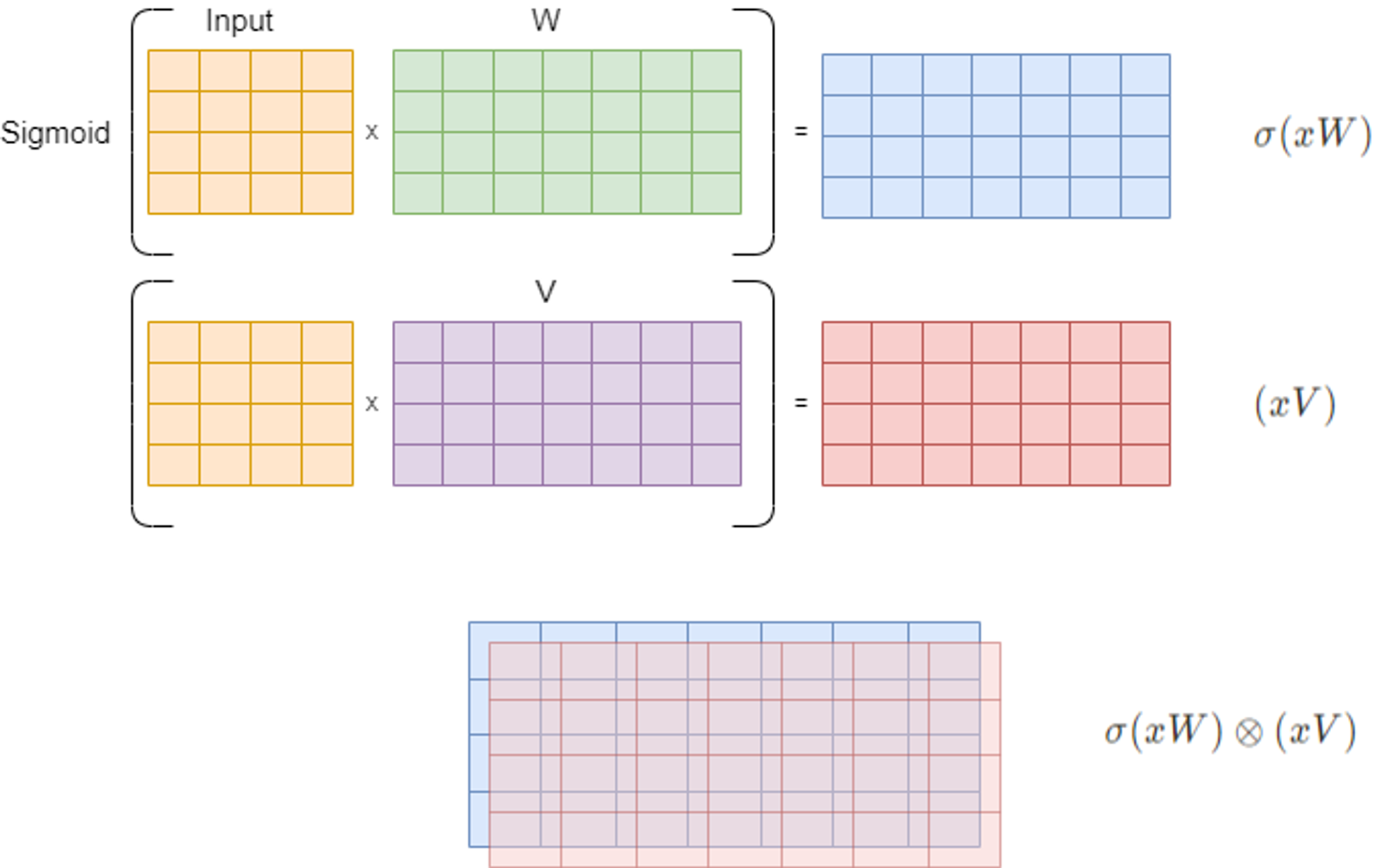

GLU (Gated Linear Units)

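- Computed with a sigmoid, much like Swish: GLU(x) = σ(xW + b) ⊗ (xV + c)

- As with Swish's self-gating, σ(xW + b) acts as an element-wise filter on xV + c; here, however, the gate and the gated value come from separate projections of x

- W, V: trainable tensors

- b, c: trainable biases

- ⊗: element-wise multiplication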
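To make the gating concrete, here is a small pure-Python sketch of a GLU on a single input vector. It assumes the convention GLU(x) = σ(xW + b) ⊗ (xV + c), with W, V as weight matrices and b, c as biases; the helper `linear` and the toy parameter values are hypothetical and chosen only so the effect of the gate is easy to see.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def linear(x, weight, bias):
    """Row vector times matrix plus bias: x @ weight + bias."""
    return [
        sum(x_i * weight[i][j] for i, x_i in enumerate(x)) + bias[j]
        for j in range(len(bias))
    ]


def glu(x, W, b, V, c):
    """GLU(x) = sigmoid(xW + b) * (xV + c), multiplied element-wise."""
    gate = [sigmoid(g) for g in linear(x, W, b)]  # each entry lies in (0, 1)
    value = linear(x, V, c)                       # plain linear branch
    return [g * v for g, v in zip(gate, value)]


# Hypothetical toy parameters: 2 inputs -> 2 outputs.
x = [1.0, -2.0]
W = [[10.0, 0.0], [0.0, 10.0]]  # gate pre-activations become [10, -20]
V = [[1.0, 0.0], [0.0, 1.0]]    # value branch passes x through unchanged
b = c = [0.0, 0.0]

out = glu(x, W, b, V, c)
print(out)
```

The first gate entry is close to 1, so the first value passes almost unchanged; the second gate entry is close to 0, so the second value is suppressed almost entirely.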

Swish + GLU = SwiGLU

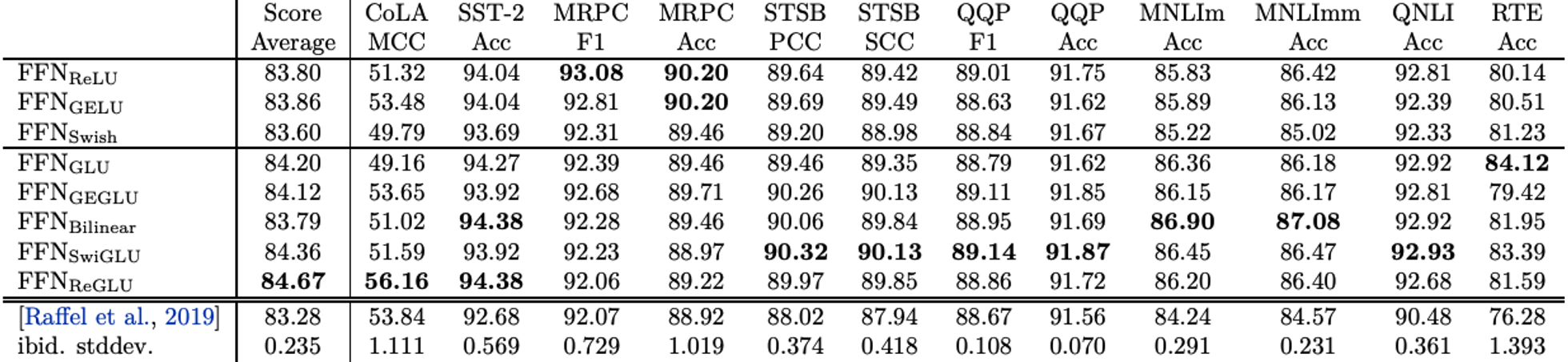

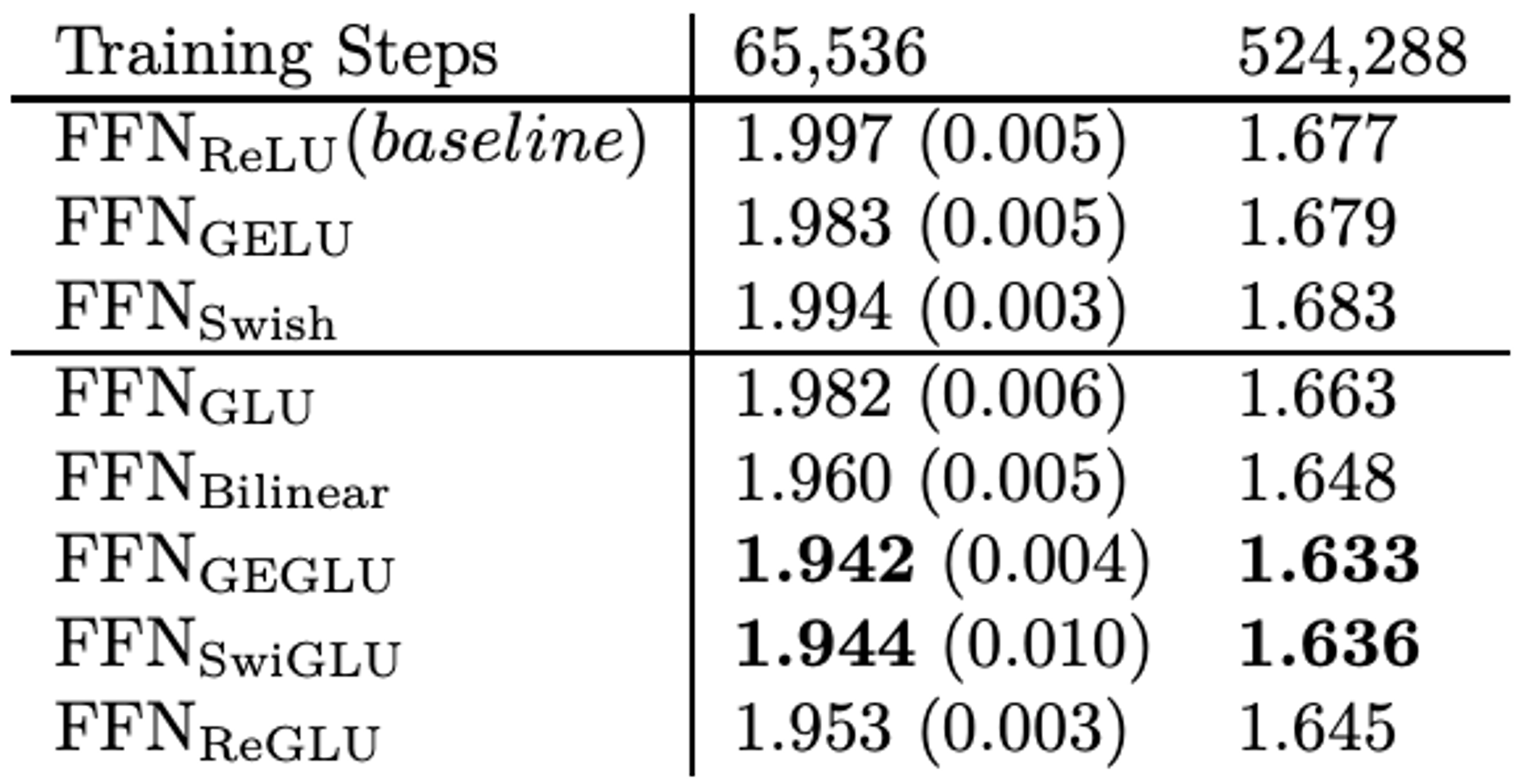
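For readers who cannot see the figures, the three definitions can be written out in text form. This is a sketch in the notation commonly used for GLU variants; the symbols (input x, weights W and V, biases b and c, scale β) are assumptions here, since the formulas in these notes are only available as images.

```latex
\begin{aligned}
\mathrm{Swish}_\beta(x) &= x \cdot \sigma(\beta x) \\
\mathrm{GLU}(x, W, V, b, c) &= \sigma(xW + b) \otimes (xV + c) \\
\mathrm{SwiGLU}(x, W, V, b, c, \beta) &= \mathrm{Swish}_\beta(xW + b) \otimes (xV + c)
\end{aligned}
```

In other words, SwiGLU keeps the two-branch structure of GLU and replaces the sigmoid on the gate branch with Swish; setting β = 1 gives the SiLU-gated form.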

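- Even the paper that first proposed SwiGLU offers no real mathematical analysis of why it works better; the evidence is empirical

- It remains computationally expensive, but it achieves better training performance than either Swish or GLU

- Python (PyTorch) implementation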

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SwiGLU(nn.Module):
    def forward(self, x):
        # Split the last dimension into a value half and a gate half.
        x, gate = x.chunk(2, dim=-1)
        return F.silu(gate) * x  # SiLU: Swish with beta=1


class Swish(nn.Module):
    def __init__(self):
        super().__init__()
        self.beta = nn.Parameter(torch.tensor([1.0]))  # trainable beta

    def forward(self, x):
        return x * torch.sigmoid(self.beta * x)  # Swish with a learnable beta
```