📌 AWS Spark 클러스터 론치

-

AWS에서 Spark을 실행하기위한 준비

- EMR(Elastic MapReduce )위에서 실행하는 것이 일반적이다.

-

EMR

-

AWS의 Hadoop Service(On-demand Hadoop)

->Hadoop(YARN), Spark, Hive, Notebook 등등이 설치되어 제공되는 서비스이다. -

EC2 Server들을 worker node로 사용하고 S3를 HDFS로 사용한다.

-

AWS내의 다른 Service들과 연동이 쉽다.(Kinesis, DynamoDB, Redshift, ..)

-

-

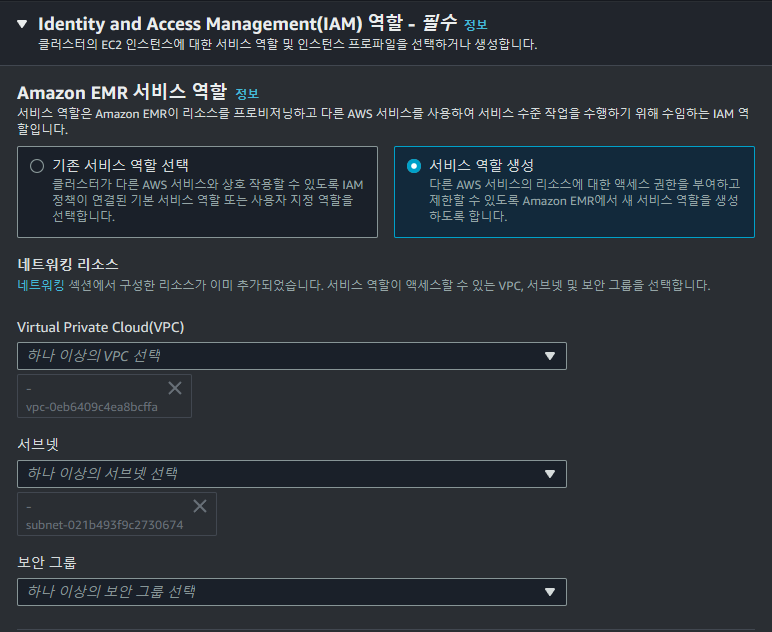

Spark on EMR 실행 및 사용 과정

- AWS의 EMR 클러스터 생성

- EMR 생성시 Spark을 실행(Option으로 선택)

-> S3를 기본 파일 시스템으로 사용 - EMR의 마스터 노드를 드라이버 노드로 사용

- 마스터 노드를 SSH로 로그인

-> spark-submit를 사용 - Spark의 Cluster 모드에 해당한다.

- 마스터 노드를 SSH로 로그인

-

Spark Cluster Manager와 실행 Model 요약

| Cluster manager | Deployed mode | Program Run Method |

|---|---|---|

| loacl[n] | Client | Spark Shell, IDE, Notebook |

| YARN | Client | Spark Shell, Notebook |

| YARN | Client | spark-submit |

📌 시나리오

Step 1

-

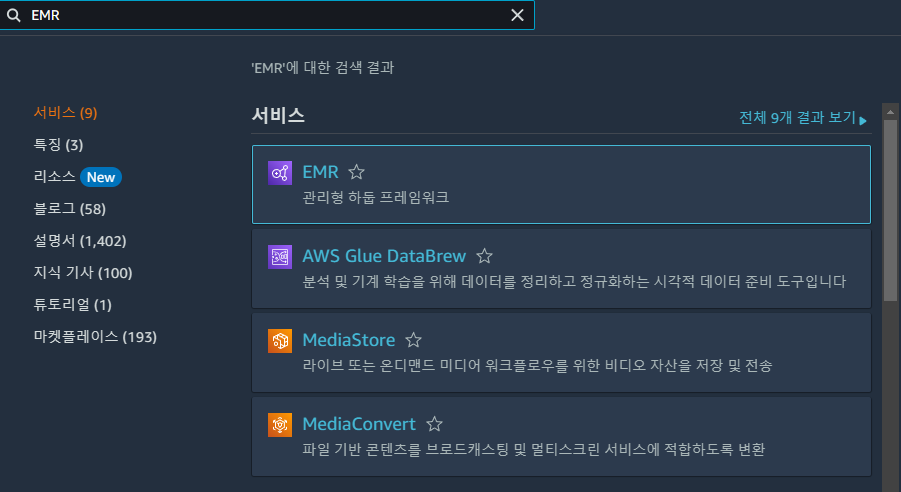

EMR Service Page로 이동 & AWS Console에서 EMR 선택

-

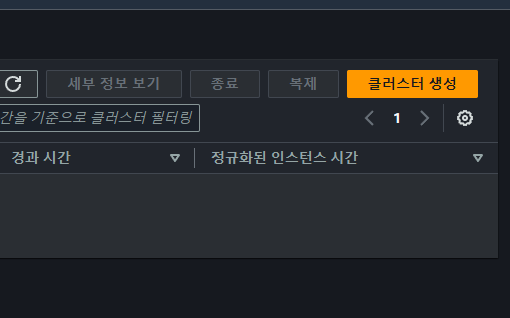

Create Cluster 선택

Step 2

-

EMR Cluster 생성 -> 이름과 기술 스택 선택

-

Software configuration

- Spark이 들어간 옵션 선택

- Zeppelin

-

Zeppelin이란

-> Notebook -> Spark, SQL, Python

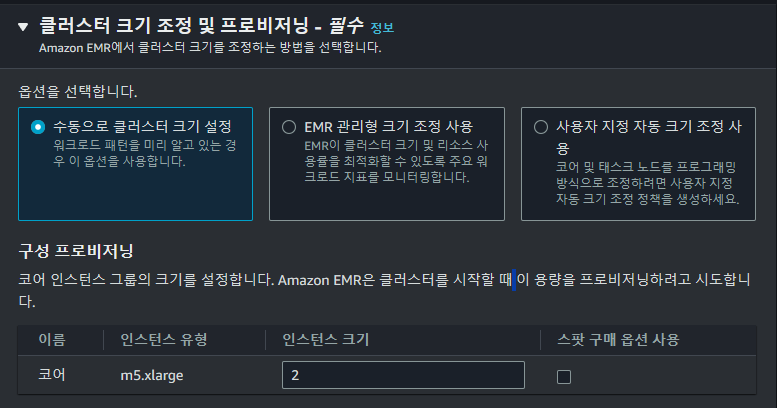

Step 3

- Cluster 사양 선택 후 생성

-

m5.xlarge node 3개 선택 -> 하루 $35 비용 발생

- 4 CPU * 2

- 16 GB * 2

-

Create Cluster 선택

Step 4

-

EMR Cluster 생성까지 대기

-

Cluster가 Waiting으로 변할 때까지 대기

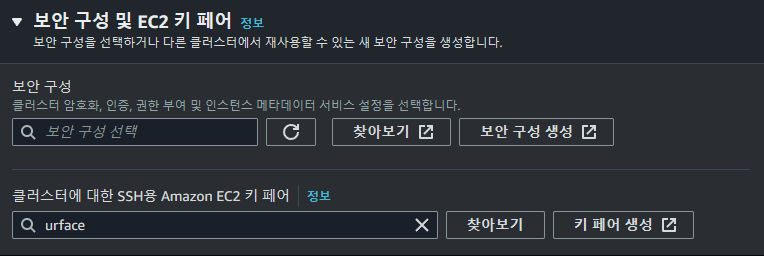

Step 5

-

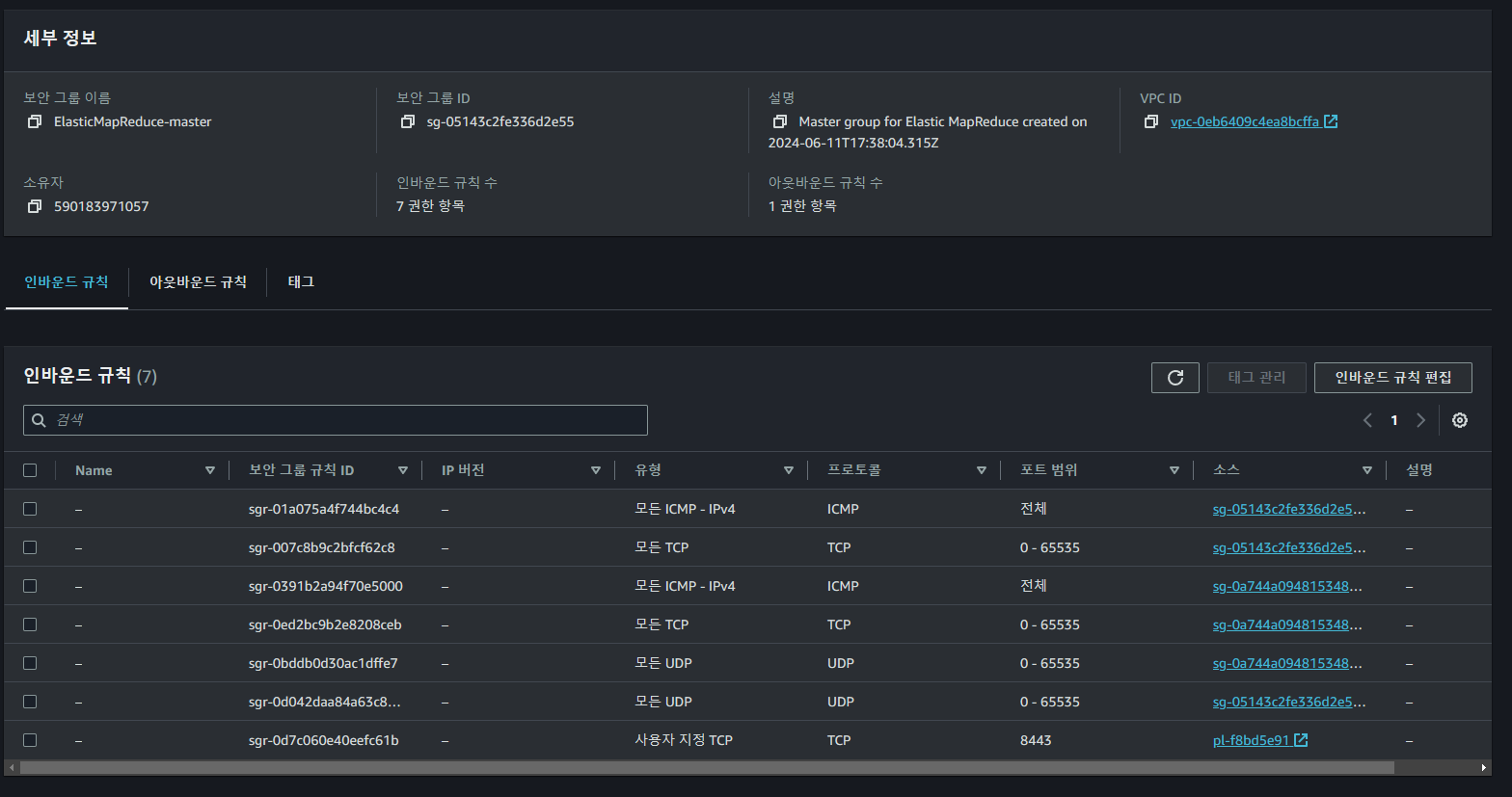

마스터 노드의 포트번호 22번으로 열기

-> 네트워크 및 보안 -> EC2 보안 그룹(방화벽) -> 프라이머리 노드 -> 인바운드 규칙 -> 인바운드 규칙 편집(SSH가 없다면) -

EMR Cluster Summary Tab 선택

-

Security Groups for Master 링크 선택

-

Security Groups 페이지에서 마스터 노드의 security group ID를 클릭

-

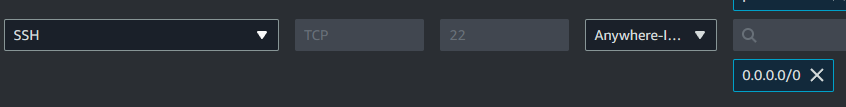

Edit inbound rules 버튼 클릭 후 Add rule 버튼 선택

-

Port 번호로 22를 입력

-

Anywhere IP v4 선택

-

Save rules 버튼 선택

Step 6

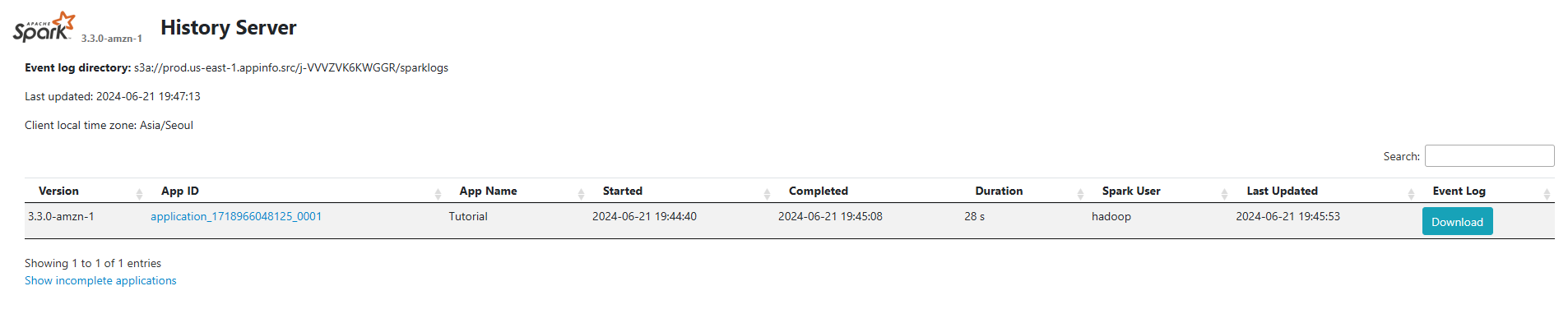

- Spark History Server 보기

Step 7

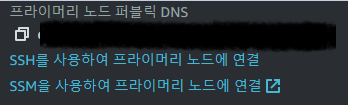

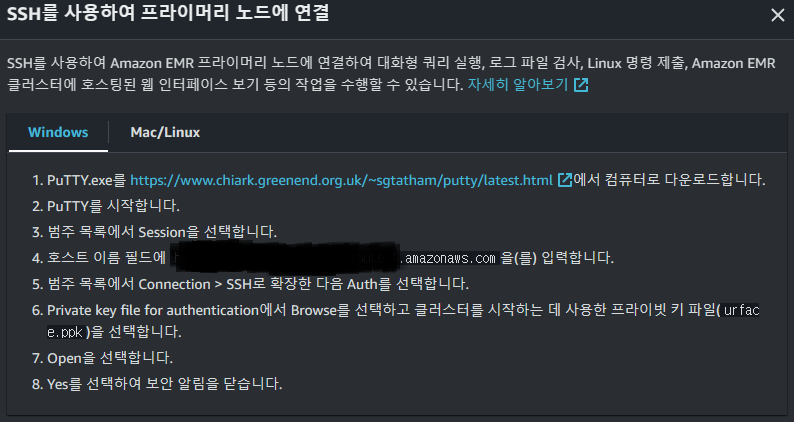

- Spark master node에 SSH로 로그인

-> 이를 위해서 Master node의 TCP Port Number 22번을 오픈해야한다.

-

spark-submit을 이용하여 실행하면서 디버깅한다.

-

두 개의 Job을 AWS EMR 상에서 실행해 볼 예정이다.

Step 8

-

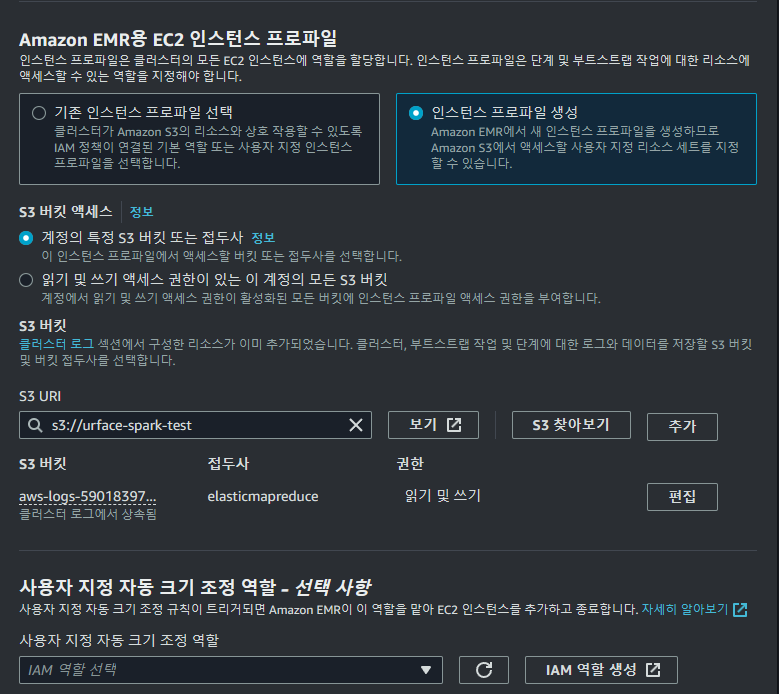

입력 데이터를 S3로 로딩

-

Stackoverflow 2022년 개발자 Survey CSV 파일을 S3 Bucket으로 업로드

-> 익명화된 83,339개의 Survey 응답 -

s3://spark-tutorial-dataset/survey_results_public.csv

Step 9

-

PySpark Job Code

-

입력 CSV 파일을 분석하여 그 결과를 S3에 다시 저장(stackoverflow.py)

-

미국 개발자만 대상으로 코딩을 처음 어떻게 배웠는지를 카운트 하여 S3에 저장

📙 stackoverflow.py

from pyspark.sql import SparkSession

from pyspark.sql.functions import col

S3_DATA_INPUT_PATH = 's3://spark-tutorial-dataset/survey_results_public.csv'

S3_DATA_OUTPUT_PATH = 's3://spark-tutorial-dataset/data-output'

spark = SparkSession.builder.appName('Tutorial').getOrCreate()

df = spark.read.csv(S3_DATA_INPUT_PATH, header=True)

print('# of records {}'.format(df.count()))

learnCodeUS = df.where((col('Country')=='United States of America')).groupby('LearnCode').count()

learnCodeUS.write.mode('overwrite').csv(S3_DATA_OUTPUT_PATH) # parquet

learnCodeUS.show()

print('Selected data is successfully saved to S3: {}'.format(S3_DATA_OUTPUT_PATH))Step 10

-

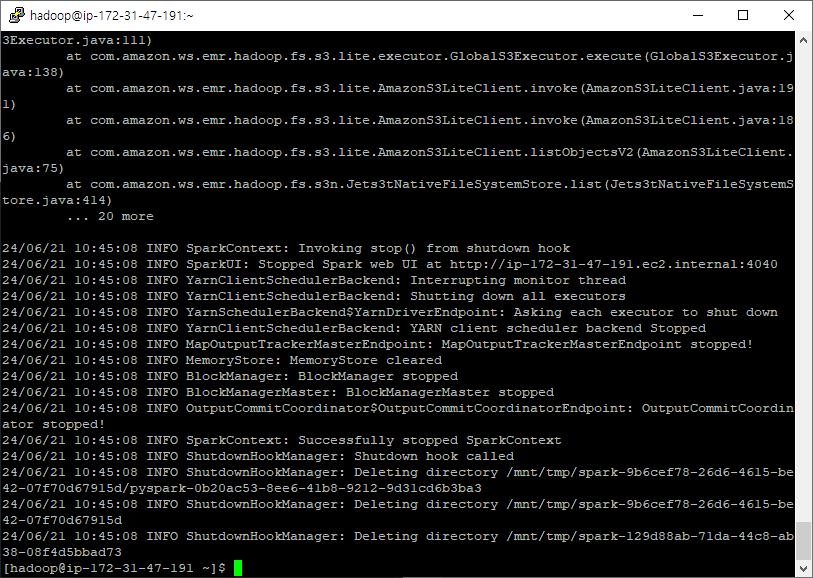

PySpark Job Run

-

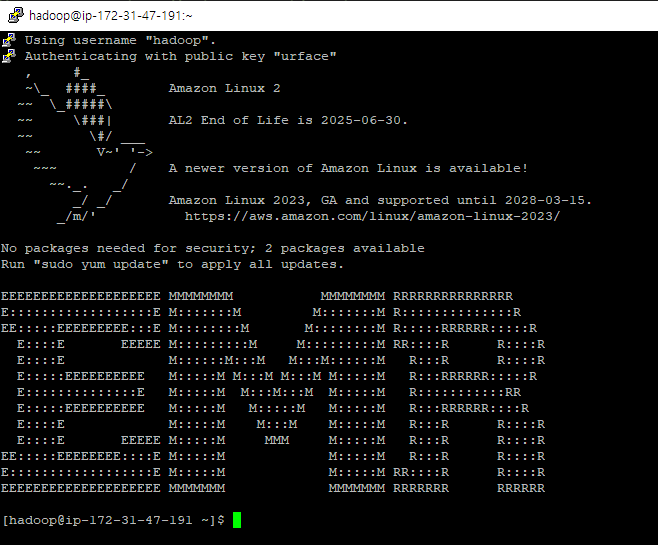

Spark Master node에 SSH로 로그인 하여 spark-submit을 통해 실행

-

앞서 다운로드 받은 .ppk 파일을 사용하여 SSH 로그인

-

Code Run

- spark-submit -- master yarn stackoverflow.py

[hadoop@ip-172-31-47-191 ~]$ nano stackoverflow.py

[hadoop@ip-172-31-47-191 ~]$ spark-submit --master yarn stackoverflow.py

Final

- PySpark Job 실행 결과를 S3에서 확인하기