0901 Practice

💡 Sigmoid is mainly used in the output layer

- Output layer

When the output layer uses sigmoid, use flatten to convert the (n, 1) predictions to 1-D

📌 Model prediction

# Compile the model

model.compile(optimizer='adam',
              loss='binary_crossentropy',
              metrics=['accuracy'])

# Predict

# Store the predictions in y_pred so they can be reused.

y_pred = model.predict(X_test)

y_pred.shape

# Output: one probability like the values below for each of the 154 rows of X_test

array([[0.62700105],
       [0.01058297],
       [0.3780695 ],
       [0.5054825 ],
       [0.2762504 ],
       ...

🤔 If it's binary, shouldn't there be two outputs?

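A softmax output layer with 2 units would give two values per row, but this model ends in a single sigmoid unit (matched by binary_crossentropy at compile time), so predict returns one value per row. That value is a probability between 0 and 1 (the sigmoid output).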
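A minimal NumPy sketch of what happens to that single column, reusing the five probabilities printed above as a stand-in for `model.predict(X_test)`. The 0.5 cut-off is an assumption (the usual default threshold), not something the model decides.

```python
import numpy as np

# The first five sigmoid outputs shown above, in the (n_samples, 1) shape predict returns
y_pred = np.array([[0.62700105], [0.01058297], [0.3780695], [0.5054825], [0.2762504]])

# flatten() turns the (n, 1) column into a 1-D array of length n
y_prob = y_pred.flatten()

# Assumed threshold of 0.5: probabilities at or above it become class 1
y_label = (y_prob >= 0.5).astype(int)

print(y_pred.shape, y_prob.shape)  # (5, 1) (5,)
print(y_label)                     # [1 0 0 1 0]
```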

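argmax(): returns the indices of the largest values along a given axis of a multi-dimensional array

- When the output layer uses softmax, the predictions are class probabilities, so argmax is used to find the index (class) with the highest probability

e.g. y_predict = np.argmax(y_pred, axis=1)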
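To see what `axis=1` does, here is a small sketch with a hypothetical 3-sample, 2-class softmax output (the numbers are made up for illustration):

```python
import numpy as np

# Hypothetical softmax output: 3 samples, 2 classes, each row sums to 1
y_pred = np.array([[0.8, 0.2],
                   [0.3, 0.7],
                   [0.6, 0.4]])

# axis=1: for each row (sample), return the column index of the largest probability
y_predict = np.argmax(y_pred, axis=1)
print(y_predict)  # [0 1 0]
```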

📌 Improving performance

You can build different networks by changing the number of units, and try to improve performance by adjusting settings such as the number of epochs in fit.

Predicting car fuel efficiency (regression)

https://www.tensorflow.org/tutorials/keras/regression

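💡 In regression, the output layer usually has a single unit.

📌 Normalization

# You can normalize with scikit-learn's preprocessing tools or by computing it yourself.

# TF also provides normalization functionality.

# horsepower_normalizer: takes the Horsepower variable and normalizes only that variable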

horsepower = np.array(train_features['Horsepower'])

horsepower_normalizer = layers.Normalization(input_shape=[1,], axis=None)

horsepower_normalizer.adapt(horsepower)

⬆️ Input layer ⬆️
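Roughly, `adapt()` learns a mean and variance from the data, and the layer then applies z-score normalization. The NumPy sketch below reproduces that computation for `axis=None` (one mean and variance over all values); the horsepower values are hypothetical, and the real layer additionally guards against division by zero.

```python
import numpy as np

# Hypothetical horsepower values standing in for train_features['Horsepower']
horsepower = np.array([130.0, 165.0, 150.0, 150.0, 140.0])

# What adapt() learns with axis=None: a single mean and variance over all values
mean = horsepower.mean()
variance = horsepower.var()

# What the layer then applies to its input: (x - mean) / std
normalized = (horsepower - mean) / np.sqrt(variance)
print(normalized.mean(), normalized.std())  # approximately 0.0 and 1.0
```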

---------------------------------------------------------------------------

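# By adding a preprocessing layer, you can build preprocessing into the model itself.

# The advantage is that the abstraction lets you normalize easily even without knowing how normalization works,

# but the downside of an abstraction is that you can't tell exactly what it does until you open the source code or docs.

# This is similar to scikit-learn's Pipeline.

horsepower_model = tf.keras.Sequential([
    horsepower_normalizer,
    layers.Dense(units=1)  # same as activation='linear' (the default): the identity function, which outputs its input unchanged
])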

horsepower_model.summary()

Output:

Model: "sequential"
_________________________________________________________________
 Layer (type)                     Output Shape         Param #
=================================================================
 normalization_1 (Normalization)  (None, 1)            3
 dense (Dense)                    (None, 1)            2
=================================================================
Total params: 5
Trainable params: 2
Non-trainable params: 3
_________________________________________________________________
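The parameter counts in the summary can be checked by hand. This sketch assumes a Dense layer has one weight per input per unit plus one bias per unit, and that the Normalization layer's 3 non-trainable values are its stored mean, variance, and sample count for the single feature (updated by `adapt()`, not by gradient descent); `dense_params` is a hypothetical helper.

```python
def dense_params(n_inputs, units):
    """Dense layer parameters: one weight per input per unit, plus one bias per unit."""
    return n_inputs * units + units

# Assumed: mean, variance and count for the single Horsepower feature (non-trainable)
normalization_params = 3

dense = dense_params(n_inputs=1, units=1)
print(dense, normalization_params + dense)  # 2 5
```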

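🤔 How does the output layer differ between classification and regression?

- Classification: Dense(n, activation='softmax') or Dense(1, activation='sigmoid')

- Regression: the identity function (outputs its input unchanged)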
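A small NumPy sketch of the three output activations mentioned above (the function names are illustrative, not Keras APIs):

```python
import numpy as np

def identity(z):
    # Regression output: Dense(units=1) with no activation returns z unchanged
    return z

def sigmoid(z):
    # Binary classification: squashes one value into a probability in (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    # Multi-class classification: n values -> n probabilities that sum to 1
    e = np.exp(z - np.max(z))  # subtract the max for numerical stability
    return e / e.sum()

z = np.array([2.0, -1.0, 0.5])
print(identity(z))
print(sigmoid(0.0))      # 0.5
print(softmax(z).sum())  # 1.0 up to floating-point rounding
```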

💡 For regression with a single target value, the output layer always takes the form

layers.Dense(units=1)

🤔 How does the loss differ between the classification practice and regression?

- Classification: cross-entropy

- Regression: mae, mse, etc.
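For intuition, here are NumPy versions of the losses listed above. They are simplified sketches (the clipping `eps` in the cross-entropy is an assumption to avoid `log(0)`), not the Keras implementations:

```python
import numpy as np

def mae(y_true, y_pred):
    # Mean absolute error: average size of the errors
    return np.mean(np.abs(y_true - y_pred))

def mse(y_true, y_pred):
    # Mean squared error: squares each error, so large errors dominate
    return np.mean((y_true - y_pred) ** 2)

def binary_crossentropy(y_true, p, eps=1e-7):
    # Clip probabilities so log(0) never occurs
    p = np.clip(p, eps, 1 - eps)
    return -np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))

y_true = np.array([3.0, 5.0, 2.0])
y_pred = np.array([2.0, 5.0, 4.0])
print(mae(y_true, y_pred))  # 1.0
print(mse(y_true, y_pred))  # 1.6666666666666667
```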

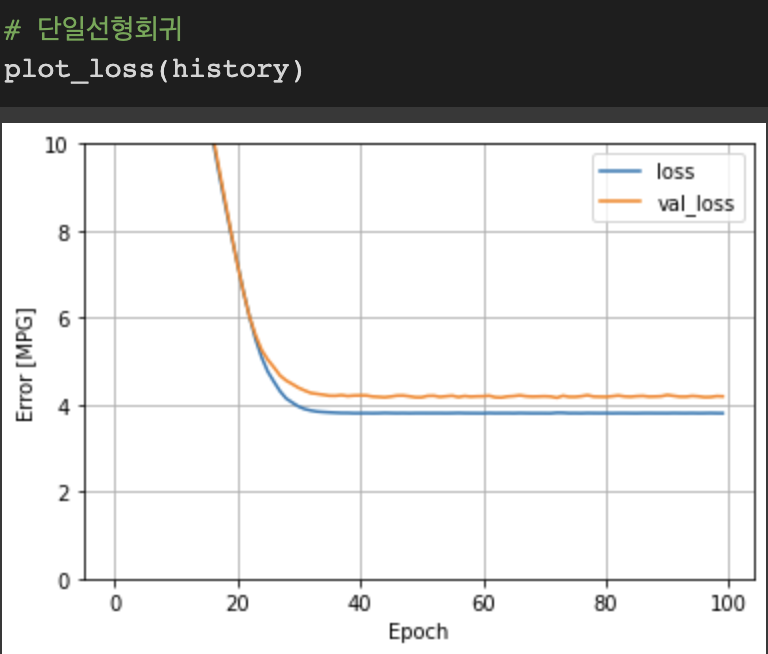

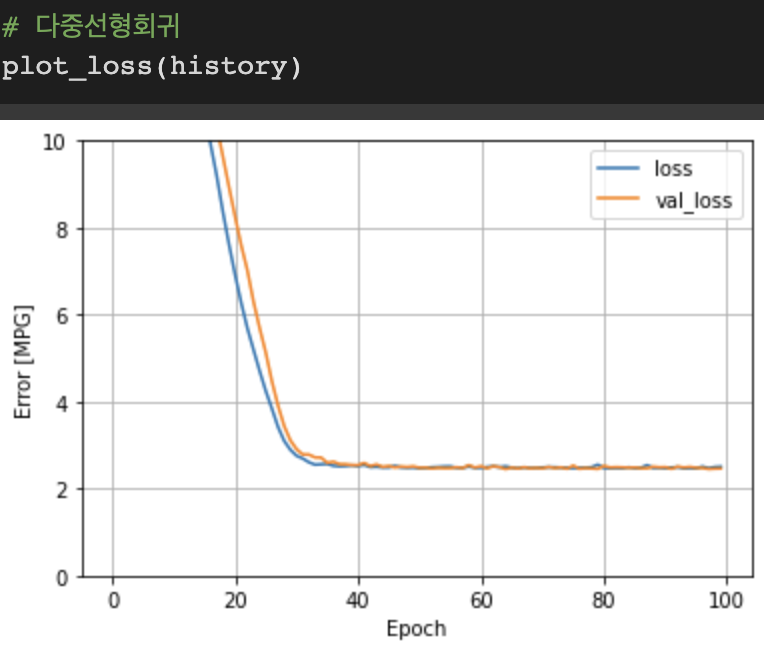

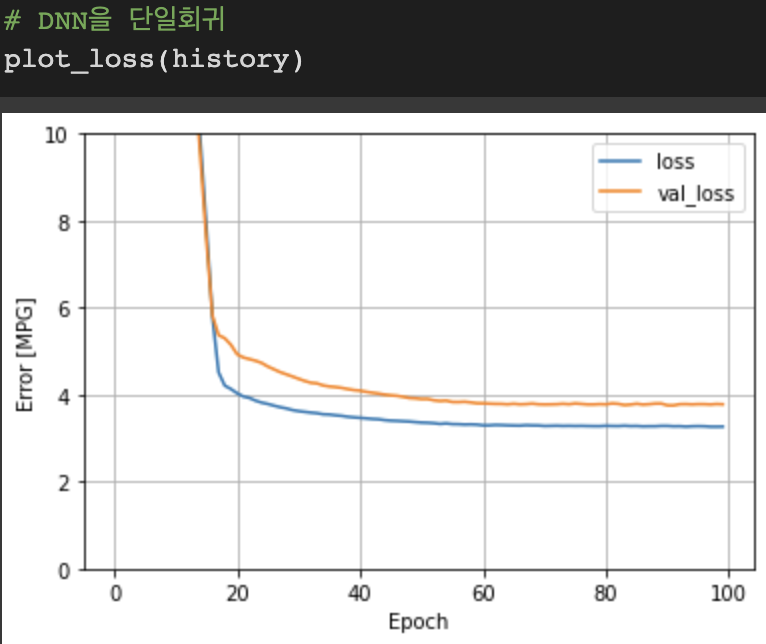

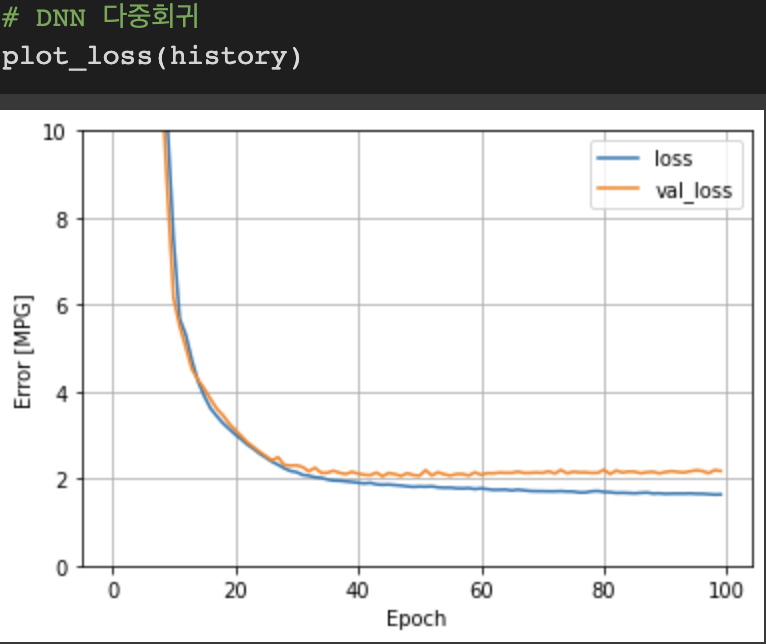

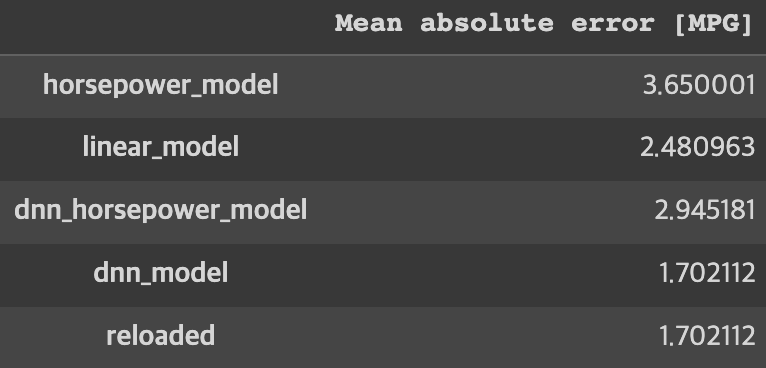

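✅ Difference in loss values between linear regression and a DNN

🤔 What's the difference between loss and val_loss?

loss is the training loss; val_loss is the validation loss

🤔 How do you get validation results?

Set validation_split at training time

model.fit(X_train, y_train, epochs=100, validation_split=0.2)  # example values: 20% of the training data is held out for validation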
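Keras takes the validation set from the last samples of `X_train`/`y_train`, before shuffling, so data sorted by some column should be shuffled first. The helper below is a hypothetical sketch that mimics that split with NumPy:

```python
import numpy as np

def split_like_validation_split(X, y, validation_split):
    # Hypothetical helper: holds out the LAST fraction of the samples
    # (no shuffling) as the validation set, like Keras's validation_split
    split_at = int(np.floor(len(X) * (1.0 - validation_split)))
    return (X[:split_at], y[:split_at]), (X[split_at:], y[split_at:])

X = np.arange(10).reshape(10, 1)
y = np.arange(10)
(X_tr, y_tr), (X_val, y_val) = split_like_validation_split(X, y, 0.2)
print(len(X_tr), len(X_val))  # 8 2
print(y_val)                  # [8 9]
```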

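🤔 Does flatten() only turn 2-D data into 1-D?

A: It turns n-D data into 1-D.

💡 ndim: gives the number of dimensions of the data (the array attribute arr.ndim, or the function np.ndim(arr))

💡 n levels of brackets == n dimensions

e.g. [[[ ]]] == 3-D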
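A quick NumPy check of `ndim`, the bracket rule, and `flatten()` on a made-up 3-D array:

```python
import numpy as np

a = np.array([[[1, 2], [3, 4]],
              [[5, 6], [7, 8]]])  # three opening brackets -> 3-D

print(a.ndim)            # 3
print(np.ndim(a))        # 3 (function form)
print(a.shape)           # (2, 2, 2)
print(a.flatten())       # [1 2 3 4 5 6 7 8]: n-D -> 1-D copy
print(a.flatten().ndim)  # 1
```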

👀 Useful reference: https://numpy.org/doc/stable/user/absolute_beginners.html

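📌 Regression practice summary

1) Input layer for tabular data: input_shape

2) Using a normalization layer: you can also normalize the data yourself.

3) The output layer is built differently from classification.

4) The loss setting differs between classification and regression.

5) Performance improved when using several input variables (features) instead of just one.

💡 Using more variables does not always improve performance, but this shows that it is hard to build a good predictive model from too few variables.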

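0902 Practice

📌 Compiling and training the model

# Compile the model.

# With several metrics, the model is evaluated in several ways. A single-output model
# optimizes one loss, so the loss is set to mae here (matching the output below, where loss == mae).

model.compile(loss='mae',
              optimizer=tf.keras.optimizers.Adam(0.001),
              metrics=['mae', 'mse'])

# Train the model.

# Stop training when val_mae has not improved for 50 consecutive epochs
early_stop = tf.keras.callbacks.EarlyStopping(monitor='val_mae',
                                              patience=50)

# Store the training results in the history variable.

# validation_split: automatically holds out the given fraction of the training data
# (the last samples, taken before shuffling) as a validation set

history = model.fit(X_train, y_train, epochs=1000, verbose=0,
                    callbacks=[early_stop], validation_split=0.2)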
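`patience=50` means training stops once `val_mae` has gone 50 epochs without improving. The function below is a simplified, hypothetical sketch of that rule for a lower-is-better metric (assuming `min_delta=0`); it is not Keras's own code, and the example uses a tiny `patience` and made-up scores:

```python
def early_stopping_epoch(val_scores, patience):
    """Return the index of the epoch where training would stop.

    Simplified sketch of EarlyStopping for a metric where lower is better
    (such as val_mae), assuming min_delta=0.
    """
    best = float("inf")
    wait = 0
    for epoch, score in enumerate(val_scores):
        if score < best:
            best = score
            wait = 0      # improvement: reset the counter
        else:
            wait += 1     # no improvement this epoch
            if wait >= patience:
                return epoch
    return len(val_scores) - 1  # ran out of epochs without stopping early

# Made-up val_mae curve: improves for 3 epochs, then stalls
val_mae_curve = [5.0, 4.0, 3.5, 3.6, 3.7, 3.55, 3.8]
print(early_stopping_epoch(val_mae_curve, patience=3))  # 5
```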

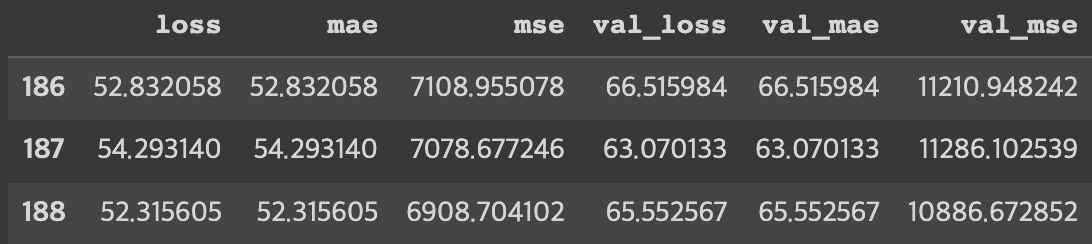

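# Convert history into a DataFrame.

df_hist = pd.DataFrame(history.history)

df_hist.tail(3)

Output:

loss comes first, followed by the metrics in the order specified at compile time; the validation columns (val_loss, val_mae, ...) follow in the same order.

🤔 Why is loss equal to mae, and val_loss equal to val_mae?

Because the loss was set to mae, the same values are reported.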

💡 The choice of optimizer and learning_rate affects how fast the model trains.

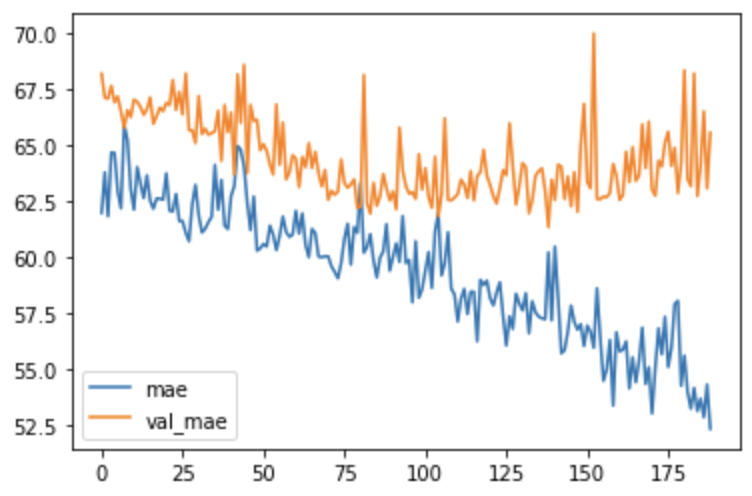

🤔 What does it mean when mae and val_mae drift apart?

Overfitting

📌 Prediction and evaluation

# Predict

y_pred = model.predict(X_test)

y_pred.shape

Output:

(79, 1)

# Convert the predictions to 1-D with flatten

y_predict = y_pred.flatten()

y_predict.shape

Output:

(79,)

# Evaluate with evaluate

# returns loss, mae, mse

loss, mae, mse = model.evaluate(X_test, y_test)

print("Test set mean absolute error: {:5.2f}".format(mae))

print("Test set mean squared error: {:5.2f}".format(mse))

Output:

- loss: 63.7553 - mae: 63.7553 - mse: 11125.1436

Test set mean absolute error: 63.76

Test set mean squared error: 11125.14

📌 0902 practice summary

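1) We adapted a deep learning model into a regression model!

(Unlike a classification model, the Dense output layer has 1 unit, and the loss and metrics in compile are changed)

2) To save resources and time, set EarlyStopping so training stops once performance stops improving!

(tf.keras.callbacks.EarlyStopping: if performance has plateaued but many epochs remain, running them is a waste of time)

3) Set validation_split during training to create a validation set as well!

(to check whether the model is overfitting, underfitting, or performing as it should)

4) Train the deep learning model.

(model.fit)

5) Measure the trained model's performance by looking at the history table!

(Check whether the model predicts well using the loss, mae, and mse metrics we learned earlier, then compare them with the validation metrics (val_loss, val_mae, val_mse, etc.) to see whether the model is overfitting or underfitting.)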

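🙋🏻‍♀️ Questions

Q: How is the sigmoid curve used for binary classification?

A: By choosing a threshold and deciding True or False depending on whether the output is above or below it. The threshold is usually 0.5, but other values are sometimes used.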

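Q: What's the difference between loss and metric?

A: The loss is what training minimizes; its gradients are used to update W and b. A metric is used only to evaluate the model's performance (on training and validation data) and does not affect the updates.

Q: What kinds of loss are used for classification?

A: Cross entropy.

The loss setting reflects what form the labels are in, so check whether the labels are binary, one-hot encoded, or ordinal (integer) encoded before choosing the loss.

- Binary classification: binary_crossentropy

- Multi-class, one-hot labels: categorical_crossentropy

- Multi-class, ordinal (integer) labels: sparse_categorical_crossentropy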
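The two multi-class losses compute the same quantity; they only expect different label formats. A NumPy sketch with made-up probabilities (the function names mirror the Keras loss names but are simplified re-implementations):

```python
import numpy as np

# Made-up softmax outputs for 2 samples and 3 classes
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.3, 0.6]])

labels_int = np.array([0, 2])          # ordinal (integer) labels
labels_onehot = np.eye(3)[labels_int]  # the same labels, one-hot encoded

def categorical_crossentropy(onehot, p):
    # Sum over classes; only the true class has a nonzero weight
    return -np.mean(np.sum(onehot * np.log(p), axis=1))

def sparse_categorical_crossentropy(labels, p):
    # Pick the probability of the true class directly by index
    return -np.mean(np.log(p[np.arange(len(labels)), labels]))

print(categorical_crossentropy(labels_onehot, probs))
print(sparse_categorical_crossentropy(labels_int, probs))  # same value
```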

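Q: How does performance compare between machine learning and deep learning?

A: On tabular data, classic machine learning generally tends to outperform deep learning, although deep learning sometimes does better. Above all, data preprocessing and feature engineering have a bigger impact on performance than the choice of model.

Tabular data: the "big three" boosting models (XGBoost, LightGBM, CatBoost)

Unstructured data: deep learning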

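Q: Describe the characteristics of standard, min-max, and robust scaling!

A:

- Z-score (Standard) scaling transforms the data to mean 0 and standard deviation 1. Because it is computed from the mean and standard deviation, it is sensitive to outliers.

- Min-Max scaling, as the name suggests, maps the minimum to 0 and the maximum to 1. It works well when the data is not normally distributed or the standard deviation is small. However, outliers distort the range, so it is sensitive to outliers.

- Robust scaling maps the median to 0 and divides by the interquartile range (IQR). Because it uses the median and quartiles, it is more robust to outliers than the two methods above.

💡 standard, min-max, robust: they only change the scale; the shape of the distribution stays the same.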
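A NumPy sketch of what the three scalers compute, assumed to match the default behavior of scikit-learn's StandardScaler, MinMaxScaler, and RobustScaler, on hypothetical data with one outlier:

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0, 4.0, 100.0])  # hypothetical data with one outlier

# Standard: mean 0, std 1 (the outlier pulls the mean and inflates the std)
standard = (x - x.mean()) / x.std()

# Min-Max: min -> 0, max -> 1 (the outlier squeezes the other points near 0)
minmax = (x - x.min()) / (x.max() - x.min())

# Robust: median -> 0, divide by the IQR (the normal points keep a sensible spread)
q1, median, q3 = np.percentile(x, [25, 50, 75])
robust = (x - median) / (q3 - q1)

print(np.round(minmax, 3))
print(robust)
```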

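Q: In deep learning, why is the result of model.fit called history?

A: Training over the epochs logs the results of each epoch, which is why the API returns them as history.

Q: Multi-input linear regression had lower error than single-input DNN. Does that mean whether we use multiple inputs matters more than whether we use a DNN?

A: It shows that even a DNN has trouble learning properly when its inputs are insufficient (= underfitting).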

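🦁 Questions

Q: Do layer sizes have to be powers of 2?

A: Powers of 2 are commonly used, but other sizes work fine too.

Q: Does a single line of dropout code apply to every layer?

A: No. It applies only to the output of the layer immediately before it.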
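Keras's `Dropout(rate)` acts on the tensor passed into it, i.e. the output of the previous layer, and during training scales the kept values by `1 / (1 - rate)` ("inverted dropout"). A NumPy sketch of that behavior; the `dropout` function and its inputs are hypothetical:

```python
import numpy as np

def dropout(x, rate, rng, training=True):
    """Inverted dropout: zero out a fraction `rate` of the inputs during training
    and scale the survivors by 1 / (1 - rate) so the expected sum is unchanged."""
    if not training or rate == 0.0:
        return x  # at inference time the input passes through unchanged
    keep = rng.random(x.shape) >= rate
    return np.where(keep, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(0)
prev_layer_output = np.ones((2, 8))  # hypothetical output of the layer before Dropout
out = dropout(prev_layer_output, rate=0.5, rng=rng)
print(out)  # each entry is either 0.0 or 2.0
```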

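Q: I don't really get the concept of an identity function.

A: It's a function that outputs its input unchanged.

Q: So the regression output layer always takes the form layers.Dense(units=1)?

A: Yes, as long as the model predicts a single target value.

Q: Is a neural network model designed to produce 2-D results? Getting 2-D results is a bit confusing.

A: Yes. predict returns an array of shape (number of samples, number of output units), so even a single output unit gives a 2-D result such as (79, 1). This differs from scikit-learn, whose predict returns a 1-D array. Also, just as scikit-learn includes tools for preprocessing tabular data, TensorFlow includes preprocessing tools for tabular data, images, text, and more.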

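✏️ TIL

- Fact: I practiced classification and regression problems with deep learning.

- Feeling: Some parts are still confusing and unclear, but expecting to understand everything on day 3 is asking too much.

- Finding: Keep reviewing and build a solid foundation.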