Introduction

Recently, I had a responsibility to maintain old service , "The Pol" which is first service of our company and have been operated from 2020, made by python (django).

While understanding the source code, I faced a confused function handling async process.

I had used a javascript and it is not that hard to deal with the async process, just adding asycn & await, but it seem different on django.

For processing the async, I have to install Celery and Rabbitmq to detach the process from the main thread and deal with it.

How does it work?

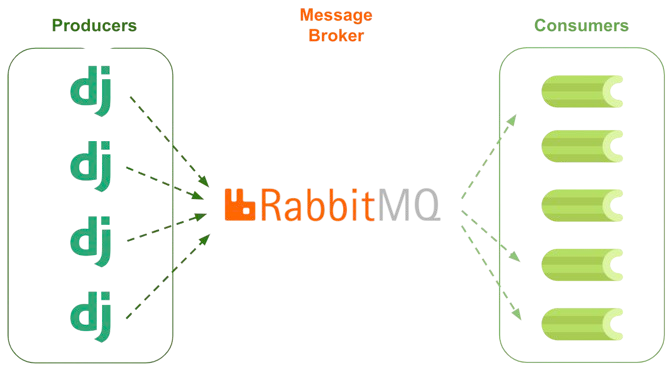

1. Producers

Producer is the application that requires background task processing or deferred execution

2. Message Broker

Message Broker is the component for exchange messages and tasks distribution between workers, popular implementation are RabbitMQ and Redis;

3. Consumers

Consumer is the application that receives the messages and processes them. And the Celery manages workers which is the calculation unit that execute task, the tasks are the unit of execution in celery, a task is nothing but a function that will be processed asynchronously

result backends (result storage) —> place where we can store results after deferred execution or background task processing, popular implementation are SQLAlchemy/Django ORM, Memcached, Redis, AMQP (RabbitMQ), and MongoDB;

Setup

1. Installation

First of all, I install the Celery and Rabbitmq. I installed them, you can use the Rabbitmq, Redis and Amazon SQS, Zookeeper (experimental) whatever you want, as a message broker.

pip3 install celery

brew install rabbitmq2. Setup

Creating a file celery.py in your project directory with the following code:

from thepolbackend import settings

# set the default Django settings module for the 'celery' program.

# from django_celery_beat.models import PeriodicTask

os.environ.setdefault("DJANGO_SETTINGS_MODULE", "project_name.settings")

app = Celery('project_name', )

# Using a string here means the worker doesn't have to serialize

# the configuration object to child processes.

# - namespace='CELERY' means all celery-related configuration keys

# should have a `CELERY_` prefix.

app.config_from_object("django.conf:settings", namespace="CELERY")

# Load task modules from all registered Django app configs.

app.autodiscover_tasks()

if __name__ == '__main__':

app.start()

After Setting the celery.py, let’s now set up RabbitMQ as the message broker.

Add the following code to your settings.py file to configure RabbitMQ as the message broker for Celery:

BROKER_URL = f"amqps://{user_name}:{user_passoword}@{host}:{port}/{virtual_host}"

3. Define tasks

With the environment set up, it’s now time to define our tasks that Celery will process in the background.

To define a task, simply create a function and decorate it with @app.task. For example:

from celery import shared_task

// with task decorator such as

@app.task(), @task.task, @shared_task and etc.

def sample_code ():

// ... codes

Defining as a class, For example :

from celery import Task

class CustomTask (Task):

abstract = True

// ... do what you want

def __call__(self, *args, **kwargs):

_task_stack.push(self)

self.push_request(args=args, kwargs=kwargs)

try:

return self.run(*args, **kwargs)

finally:

self.pop_request()

_task_stack.pop()

4. Running the Celery Worker

With our tasks defined, let’s now run the Celery worker to process the tasks.

In your terminal, run the following command:

celery -A project_name worker -l info

This will start the Celery worker and run it in the foreground. The -l info argument sets the logging level to info, which will show detailed information about the tasks being processed.

5. Triggering Tasks

If the worker is running properly, it is time to trigger the tasks.

To trigger a task, simply call the task function as you would any other function, and the task will be added to the Celery queue for processing. For example:

task = sample_code.delay()

Task will work and return result detached from main process. Now I can avoid the overloaded data process.

Reference :

First-Steps-With-Celery

Django-Celery-Rabbitmq