Data Augmentation 기법 고찰

일단 우리가 대회에서 사용하는 데이터셋은 face image dataset + mask 데이터셋이라고 볼 수 있기 때문에

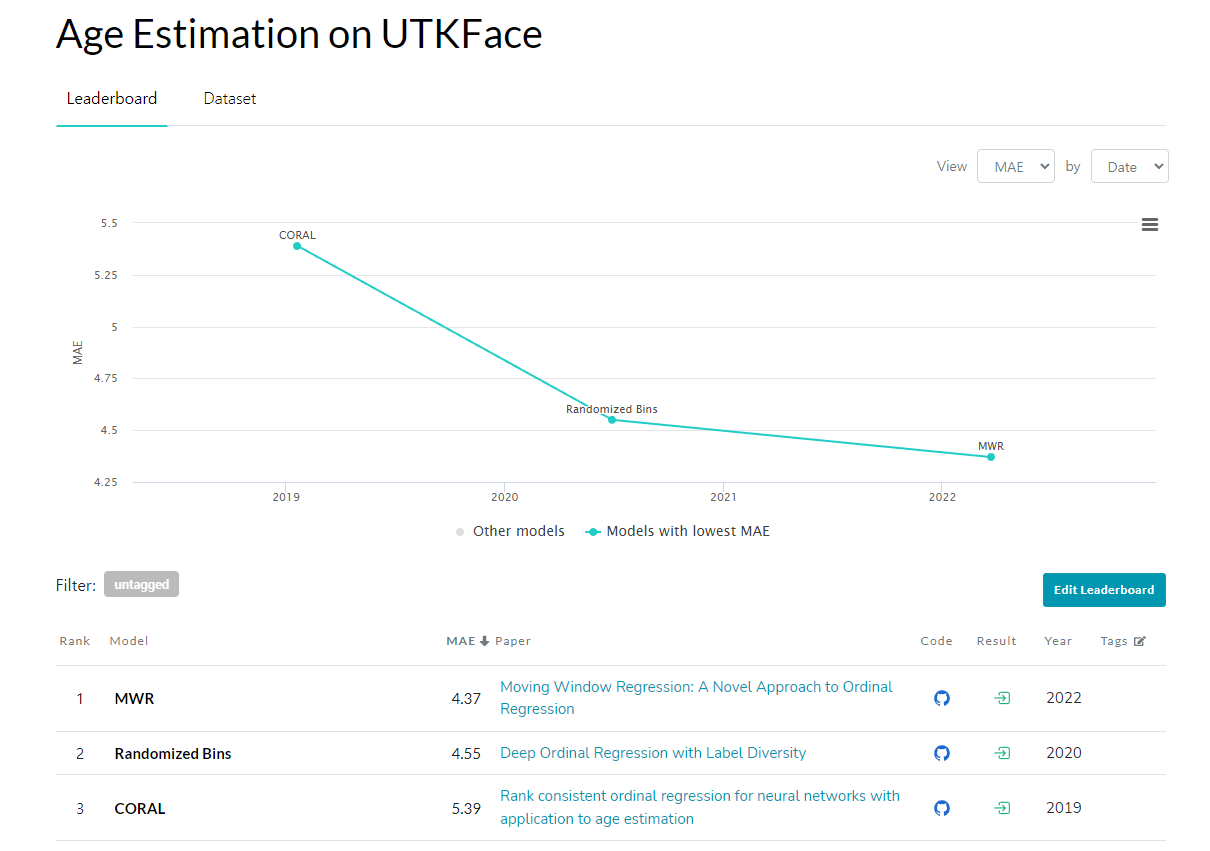

우리가 사용하는 데이터셋과 비슷한 데이터셋, 그리고 Task가 비슷한 UTKFace 데이터셋에 대한 Reference를 찾아 보았다.

Papers with Code에서 관련 내용을 찾아보다가, UTK Face 데이터셋에 대한 Age Estimation 리더보드가 있어서 해당 리더보드의 논문에서 어떤 Data Augmentation을 적용했는지 확인해보았다.

MWR

In facial age estimation, we do random horizontal flipping and random cropping

to the image size of 224 × 224 for data augmentation. In HCI classification, we

do random horizontal flipping only. We use the Adam optimizer [36] with a

learning rate of 10−4. The minibatch size is 18.→ RandomCrop, Horizontal flip 사용

논문 공식 깃허브에서 해당 코드를 찾을 수 있었다.

from imgaug import augmenters as iaa

(생략)

############################################ Img Aug ############################################

def ImgAugTransform(img):

aug = iaa.Sequential([

iaa.CropToFixedSize(width=224, height=224),

iaa.Fliplr(0.5)

])

img = np.array(img)

img = aug(image=img)

return img

def ImgAugTransform_Test(img):

aug = iaa.Sequential([

iaa.CropToFixedSize(width=224, height=224, position="center")

])

img = np.array(img)

img = aug(image=img)

return img

def ImgAugTransform_Test_Aug(img):

sometimes = lambda aug: iaa.Sometimes(0.3, aug)

aug = iaa.Sequential([

iaa.CropToFixedSize(width=224, height=224, position="center"),

iaa.Fliplr(0.5),

sometimes(iaa.LogContrast(gain=(0.8, 1.2))),

sometimes(iaa.AdditiveGaussianNoise(scale=(0, 0.05*255))),

])

img = np.array(img)

img = aug(image=img)

return imgRandomized Bin

For all datasets, we train the network for 30 epochs using the ADAM optimizer

[33] with a mini-batch size of 32, a learning rate of 0.0005 that is decreased

by a factor of 0.1 every 10th epoch, and an L2-regularization factor of 0.001

on the weights

For data augmentation, we use random horizontal flipping of the images and

apply a uniformly distributed random translation and scaling between [-20, 20]

pixels and [0.7, 1.4] respectively. All results are averaged over 10 trials

with different random initializations of the last fully connected layers.→ Horizontal flip, translation, scaling

translation, scaling에 어떤 기법을 쓴지 알기위해 깃허브 코드 확인

aug = imageDataAugmenter('RandXReflection', true, 'RandXTranslation', [-20,20], ...

'RandYTranslation', [-20,20], 'RandRotation', [0, 0], 'RandScale', [0.7, 1.4]);

trainDs = augmentedImageDatastore(outputSize, train, 'ColorPreprocessing', 'gray2rgb', ...

'DataAugmentation', aug);

testDs = augmentedImageDatastore(outputSize, test, 'ColorPreprocessing', 'gray2rgb');사실 matlab 코드라 잘 모르지만 대강 영어 해석하듯이 해석을 해보면

이미지 scale 랜덤 조정, XY방향 Random Translation,

RandXreflection은 Horizontal flip 역할로 예상

RandRotation은 [0, 0] 인걸로 봐서 전 범위 랜덤Rotate 혹은 Rotate 하지 않음 인것같은데

논문에 Rotation에 대한 언급이 없었으므로 쓰지않은것으로 예상된다.

CORAL

Following the procedure described in Niu et al. (2016), each image database was

randomly divided into 80% training data and 20% test data. All images were

resized to 128×128×3 pixels and then randomly cropped to 120×120×3 pixels to

augment the model training. During model evaluation, the 128×128×3 RGB face

images were center-cropped to a model input size of 120×120×3→ Resize, Random Crop, Test셋에 대해 Center Crop 사용

custom_transform = transforms.Compose([transforms.CenterCrop((140, 140)),

transforms.Resize((128, 128)),

transforms.RandomCrop((120, 120)),

transforms.ToTensor()])

train_dataset = Morph2Dataset(csv_path=TRAIN_CSV_PATH,

img_dir=IMAGE_PATH,

transform=custom_transform)

custom_transform2 = transforms.Compose([transforms.CenterCrop((140, 140)),

transforms.Resize((128, 128)),

transforms.CenterCrop((120, 120)),

transforms.ToTensor()])

test_dataset = Morph2Dataset(csv_path=TEST_CSV_PATH,

img_dir=IMAGE_PATH,

transform=custom_transform2)

valid_dataset = Morph2Dataset(csv_path=VALID_CSV_PATH,

img_dir=IMAGE_PATH,

transform=custom_transform2)논문 내용과 같은 내용을 확인했다.

결론

- 의외로 Augmentation 기법을 많이 사용하진 않는다?

- 이유는 충분히 큰 Dataset의 크기, Age 분포가 균등할 것?

- 논문에서 사용하는 데이터 셋, 그리고 우리가 사용하는 데이터셋은 분포가 많이 다를 것으로 예상된다.

- 우리가 사용하는 데이터셋은 mask, age, gender에 대한 imbalance가 중첩되어 class간 데이터 수가 많이 차이나는 상황.

- 논문의 dataset(UTKFace)을 분석해보지 않았지만 overfitting에 강하고 general한 모델을 만들기 위해 나이, 성별 분포가 비교적 균등한 데이터셋일거라 예상.

우리의 분류 대회에 무조건 적으로 적용되는 부분은 아닌듯 하다.