
🌞 Morning pop quiz

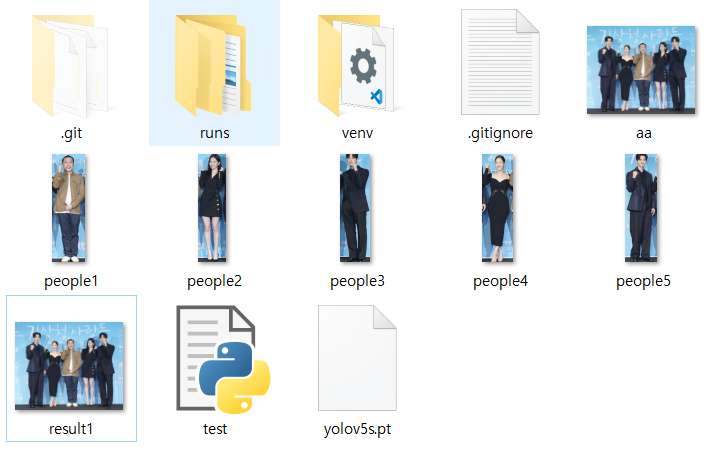

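- Download and save the image above.

- Read the image with OpenCV and find its width and height in pixels.

- img.shape is ordered (height, width)

- Find the people in the image, draw a white rectangle around each one, and save the result as result1.png.

- Crop each person out of the image and save the crops as people1.png, people2.png, …

- Upload the code and images to git and share the repository.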

- python -m venv venv

- venv/scripts/activate

- pip install -qr https://raw.githubusercontent.com/ultralytics/yolov5/master/requirements.txt

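import torch
import cv2

# Load the pretrained YOLOv5s model from the Ultralytics hub
model = torch.hub.load('ultralytics/yolov5', 'yolov5s', pretrained=True)

img = cv2.imread('aa.jpeg')
print(img.shape)  # (height, width, channels)

# Note: cv2.imread returns BGR while YOLOv5 expects RGB arrays;
# the output recorded below came from passing the BGR image as-is.
results = model(img)
results.save()

# Each row: xmin, ymin, xmax, ymax, confidence, class, name
result = results.pandas().xyxy[0].to_numpy()
result = [item for item in result if item[6] == 'person']

print(result)       # see the output below
print(len(result))  # 5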

tmp_img = cv2.imread('aa.jpeg')

# Draw a white box around every detected person.
# Use the filtered list (result) rather than results.xyxy, so the boxes always belong to people.
for xmin, ymin, xmax, ymax, *_ in result:
    cv2.rectangle(tmp_img, (int(xmin), int(ymin)), (int(xmax), int(ymax)), (255, 255, 255))

cv2.imwrite('result1.png', tmp_img)

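# Crop each person from the original image (img), so the white boxes drawn on tmp_img are not included.
for i, (xmin, ymin, xmax, ymax, *_) in enumerate(result, start=1):
    cropped = img[int(ymin):int(ymax),  # ymin:ymax
                  int(xmin):int(xmax)]  # xmin:xmax
    filename = 'people%i.png' % i  # %i is replaced by the number
    cv2.imwrite(filename, cropped)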

Output

(837, 1024, 3)

[

array([432.42706298828125, 155.5716552734375, 631.0242919921875, 804.8375854492188, 0.9132900238037109, 0, 'person'], dtype=object),

array([620.9993896484375, 154.4466552734375, 790.0960693359375, 806.7845458984375, 0.9080604910850525, 0, 'person'], dtype=object),

array([762.9304809570312, 127.33479309082031, 951.8087768554688, 813.9844970703125, 0.8943580985069275, 0, 'person'], dtype=object),

array([274.86883544921875, 174.7275390625, 457.47015380859375, 805.7642822265625, 0.8802736401557922, 0, 'person'], dtype=object),

array([98.14337158203125, 109.3960189819336, 310.7022399902344, 816.4161987304688, 0.8792229890823364, 0, 'person'], dtype=object)

]

5

git init

git remote add origin https://github.com/hyojine/1013test.git

git remote -v

➜

origin https://github.com/hyojine/1013test.git (fetch)

origin https://github.com/hyojine/1013test.git (push)

git add .

git commit -m'1013test'

Author identity unknown

*** Please tell me who you are.

Run

git config --global user.email "you@example.com"

git config --global user.name "Your Name"

to set your account's default identity.

➜ Copy both commands, put your own email and name inside the quotes, and run them; a GitHub sign-in window pops up and the Git connection is done!

⚙️ Machine Learning, Week 4

🤖 What we learn in week 4: Neural Network & Transfer Learning

perceptron

Deep Feed Forward Network: RNN and LSTM are derived from it

Deep Convolutional Network (DCN): specialized for image processing

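🤖 Convolutional Neural Networks (CNN)

- Convolution: an image-processing operation widely used in computer vision (also used for face recognition and object recognition)

The filter (weights) is laid over the input, each filter element is multiplied element-wise with the input cell beneath it, and the products are summed; the filter slides left to right, top to bottom

- filter (= kernel): applied to the input to extract a feature map

- stride: the step size by which the filter moves

- padding (= margin): keeps the result of the convolution (the feature map) from shrinking

Using several filters can improve the performance of a CNN

An image is 3-dimensional (width, height, channels)

ex) input image size (10,10,3) - filter size (4,4,3) - number of filters 2 - output (feature map) size (10,10,2), assuming stride 1 and padding that preserves the size (without padding it would be (7,7,2))

➜ The number of filters equals the number of output (feature map) channels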
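To make the shape rule concrete, here is a minimal NumPy sketch of a naive convolution. The function name conv2d and the random inputs are made up for illustration, and it uses symmetric zero padding only, so it is not how a framework implements the operation. It shows that the output channel count follows the number of filters and that padding decides whether the spatial size shrinks.

```python
import numpy as np

def conv2d(image, filters, stride=1, padding=0):
    """Naive convolution (cross-correlation, as deep learning libraries compute it).

    image:   (height, width, channels)
    filters: (num_filters, k, k, channels)
    returns: (out_height, out_width, num_filters)
    """
    num_filters, k = filters.shape[0], filters.shape[1]
    # Zero-pad the two spatial dimensions only.
    padded = np.pad(image, ((padding, padding), (padding, padding), (0, 0)))
    out_h = (padded.shape[0] - k) // stride + 1
    out_w = (padded.shape[1] - k) // stride + 1
    out = np.zeros((out_h, out_w, num_filters))
    for f in range(num_filters):
        for i in range(out_h):
            for j in range(out_w):
                r, c = i * stride, j * stride
                patch = padded[r:r + k, c:c + k, :]
                # Element-wise multiply, then sum over the whole patch.
                out[i, j, f] = np.sum(patch * filters[f])
    return out

rng = np.random.default_rng(0)
image = rng.random((10, 10, 3))

no_padding = conv2d(image, rng.random((2, 4, 4, 3)))               # 4x4 filters, no padding
with_padding = conv2d(image, rng.random((2, 3, 3, 3)), padding=1)  # 3x3 filters, padding 1
print(no_padding.shape, with_padding.shape)
```

With no padding the 4x4 filters shrink the 10x10 input to 7x7, while the 3x3 filters with one pixel of padding keep it at 10x10; in both cases the last dimension is 2, the number of filters.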

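🤖 Characteristics of CNNs

- Convolution layers and fully connected (= dense) layers are used together

- A convolution layer (activation: ReLU) outputs a feature map; repeating convolution + pooling (= subsampling) shrinks the feature map while extracting the key features

- pooling: extracts and keeps the important parts of the feature map

- max pooling: moves in stride-sized steps and takes the largest value inside each pool_size window of the feature map

- avg pooling: moves in stride-sized steps and takes the average of the values inside each pool_size window of the feature map

- flatten layer: after the last pooling layer the feature map has to be connected to the fully connected layer (Dense), but the pooled feature map is multi-dimensional (height, width, channels) while the FC layer takes a 1-D vector, so it is converted to 1-D (flattening)

- The flattened vector is matrix-multiplied with the FC layer's weights; repeating FC layers and activation functions then reduces the number of nodes, and a final softmax activation produces the result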
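The pooling and flatten steps above can be sketched in a few lines of NumPy. This is only an illustration on a single 4x4 feature map with made-up values; pool2d is a hypothetical helper, not a library function.

```python
import numpy as np

def pool2d(feature_map, pool_size=2, stride=2, mode="max"):
    """Pool a (height, width) feature map; leftover rows/columns are dropped."""
    h, w = feature_map.shape
    out_h = (h - pool_size) // stride + 1
    out_w = (w - pool_size) // stride + 1
    reduce_fn = np.max if mode == "max" else np.mean
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            out[i, j] = reduce_fn(feature_map[r:r + pool_size, c:c + pool_size])
    return out

fmap = np.array([[1, 3, 2, 0],
                 [4, 6, 1, 1],
                 [0, 2, 9, 5],
                 [1, 1, 7, 3]], dtype=float)

max_pooled = pool2d(fmap, mode="max")  # [[6, 2], [2, 9]]
avg_pooled = pool2d(fmap, mode="avg")  # [[3.5, 1.0], [1.0, 6.0]]
flat = max_pooled.flatten()            # [6, 2, 2, 9], ready for a dense layer
print(max_pooled, avg_pooled, flat, sep="\n")
```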

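🤖 Examples of CNN applications

- Object detection: finds objects as bounding boxes ➜ YOLOv5 (the one we tried yesterday) is a representative computer vision algorithm

Reported to be more accurate than other real-time detectors

- Image segmentation: separates the pixels that belong to each object, recognizing the object's outline fairly precisely, like cutting a subject out of its background

Shows the size and location of a tumor in a CT scan using color

Blurs only the background in portrait mode

ex) object recognition for autonomous driving, pose estimation (Just Dance), image quality enhancement (super-resolution), style transfer (applying a filter to a photo)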

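🤖 Types of CNNs

- AlexNet (2012): the first CNN to achieve meaningful performance; it contributed a lot to deep learning by applying Dropout and image augmentation effectively

- VGGNet (2014): a deep model (many parameters and many layers)

Often the first model to test, for example via transfer learning, when starting to design a model

- GoogLeNet (Inception v1, 2014; refined as Inception V3 in 2015): its complex structure kept it from being widely used, but the structure itself is worth noting!

Within a single layer it uses several kinds of filters and pooling, making each individual layer wider

(Previously, convolution layers with a single kind of filter were simply stacked deeper)

1x1 convolution layers for dimension (channel) reduction

The idea of splitting into several branches and merging them again creates forks that let the model find more diverse features, giving it a structure closer to how people see.

Thanks to this structure, the network is deeper than VGGNet yet uses less than half the parameters

- ResNet (2015): as layers get deeper, the backpropagated gradient gradually vanishes and training stalls (vanishing gradient) ➜ proposes the residual block: a shortcut (= skip connection) that lets gradients flow well, so the block is designed to learn the difference (residual) between its input and output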
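The shortcut idea can be shown with a deliberately simplified sketch. Real ResNet blocks use convolutions and batch normalization; here two small dense layers stand in for F(x), and the names residual_block, w1 and w2 are assumptions made for the example. The point is only that the block computes F(x) + x, so when F learns nothing (all-zero weights) the input still passes through unchanged.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """y = relu(F(x) + x), where F is two small dense layers (illustrative only)."""
    fx = relu(x @ w1) @ w2  # F(x): the residual the block has to learn
    return relu(fx + x)     # the shortcut adds the input back

x = np.array([1.0, 2.0, 3.0])
zeros = np.zeros((3, 3))

# With all-zero weights F(x) = 0, so the block behaves like the identity for x >= 0.
identity_out = residual_block(x, zeros, zeros)
print(identity_out)  # [1. 2. 3.]
```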

🤖 Transfer Learning

- Retraining pretrained models on a new dataset

Training performance often improves meaningfully even when the new dataset is quite different from the original one
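A rough sketch of the mechanics, under heavy assumptions: the "pretrained" base below is just a fixed random projection standing in for real pretrained layers, and the dataset is random toy data, so the numbers mean nothing. What it illustrates is one common way of doing transfer learning (feature extraction): the base weights stay frozen and only a small new head is trained on the new data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for pretrained layers: fixed weights that are never updated.
w_base = rng.normal(size=(20, 8)) / np.sqrt(20)
w_base_before = w_base.copy()

def extract_features(x):
    return np.maximum(x @ w_base, 0.0)  # frozen base layer with ReLU

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce(p, y):
    p = np.clip(p, 1e-7, 1 - 1e-7)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

# Toy "new dataset" (random values, for illustration only).
x_new = rng.normal(size=(200, 20))
y_new = (x_new[:, 0] > 0).astype(float)

features = extract_features(x_new)  # computed once, since the base is frozen
w_head = np.zeros(8)                # only the new head is trained
b_head = 0.0

loss_start = bce(sigmoid(features @ w_head + b_head), y_new)
for _ in range(200):
    p = sigmoid(features @ w_head + b_head)
    grad = p - y_new                                 # gradient of BCE w.r.t. the logits
    w_head -= 0.1 * features.T @ grad / len(y_new)   # update the head only
    b_head -= 0.1 * grad.mean()
loss_end = bce(sigmoid(features @ w_head + b_head), y_new)

print(loss_start, loss_end)
```

In Keras the same idea is usually expressed by setting trainable = False on the pretrained layers before compiling the model.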

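🤖 Recurrent Neural Networks (RNN)

- A model suited to data that arrives sequentially, such as speech and text

- Its structure can accept inputs and produce outputs of any length

- Can be used to build a variety of models, such as stock or cryptocurrency price prediction and chatbots that talk with people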
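A minimal sketch of why an RNN does not care about sequence length: the same weights are reused at every time step, and only the hidden state is carried forward. This is a plain (vanilla) RNN cell with made-up sizes and random weights, not an LSTM and not a trained model.

```python
import numpy as np

def rnn_forward(inputs, w_x, w_h, b):
    """Run a vanilla RNN over a sequence and return the final hidden state.

    inputs: (sequence_length, input_dim); any sequence length works.
    """
    h = np.zeros(w_h.shape[0])
    for x_t in inputs:
        h = np.tanh(x_t @ w_x + h @ w_h + b)  # same weights at every step
    return h

rng = np.random.default_rng(0)
input_dim, hidden_dim = 4, 5
w_x = rng.normal(scale=0.5, size=(input_dim, hidden_dim))
w_h = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b = np.zeros(hidden_dim)

h_short = rnn_forward(rng.normal(size=(3, input_dim)), w_x, w_h, b)  # 3 time steps
h_long = rnn_forward(rng.normal(size=(7, input_dim)), w_x, w_h, b)   # 7 time steps
print(h_short.shape, h_long.shape)  # (5,) (5,)
```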

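🤖 Generative Adversarial Network (GAN)

- A technique that uses two models in an adversarial relationship (a generator and a discriminator) at the same time

- The counterfeiter (Generator) forges more and more skillfully and the police (Discriminator) get better and better at telling fakes apart, so the two push each other forward until fake images that are hard to distinguish from the originals are produced.

- How a GAN works

- Generator (counterfeiter): its images should be judged as real (1) ➜ it tries to build an ever more refined model so that the target comes out as 1. To make fakes look like real (1), it adjusts its weights with backpropagation to reduce the loss, the gap between the target 1 and the prediction

- Discriminator (police): must judge real images as 1 and fake images as 0. It trains on both the fake images from the generator and real images, reducing the loss between its predictions and the targets

➜ The two models improve by opposing each other (adversarial), so with every epoch the images, which start out random, gradually become more refined animals

ex) deep fake, beautyGAN, Toonify Yourself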
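The two targets described above (1 for the generator, 1 and 0 for the discriminator) can be written as binary cross-entropy losses. The sketch below only evaluates the losses for some hypothetical discriminator outputs; there is no network or training loop, and the probabilities are made up.

```python
import numpy as np

def bce(pred, target):
    """Binary cross-entropy between predicted probabilities and 0/1 targets."""
    pred = np.clip(pred, 1e-7, 1 - 1e-7)
    return float(-np.mean(target * np.log(pred) + (1 - target) * np.log(1 - pred)))

# Hypothetical discriminator outputs: probability that an image is real.
d_on_real = np.array([0.9, 0.8])  # real images
d_on_fake = np.array([0.3, 0.1])  # images produced by the generator

# Discriminator: real images should score 1, fake images 0.
d_loss = bce(d_on_real, np.ones(2)) + bce(d_on_fake, np.zeros(2))

# Generator: wants its fakes to be judged as real, so its target is 1.
g_loss = bce(d_on_fake, np.ones(2))

print(d_loss, g_loss)
```

Here the discriminator is winning: its loss is small, while the generator's loss is large because its fakes score far from the target 1.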

🤖 Hands-on

🐱🚀 CNN practice - Sign Language MNIST

(the one we did last time with a deep neural network, MLP)

https://colab.research.google.com/drive/1x2SRHEAdRqNHTMKvVn8oUSwF9KV9Vi4C?usp=sharing#scrollTo=I_strLH75R_x

Runtime - Change runtime type - select GPU (to speed up computation)

import os

os.environ['KAGGLE_USERNAME'] = 'username' # username
os.environ['KAGGLE_KEY'] = 'key' # key

!kaggle datasets download -d datamunge/sign-language-mnist
!unzip sign-language-mnist.zip

from tensorflow.keras.models import Model
from tensorflow.keras.layers import Input, Dense, Conv2D, MaxPooling2D, Flatten, Dropout
from tensorflow.keras.optimizers import Adam, SGD
from tensorflow.keras.preprocessing.image import ImageDataGenerator
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.preprocessing import OneHotEncoder

# Load the dataset
train_df = pd.read_csv('sign_mnist_train.csv')
test_df = pd.read_csv('sign_mnist_test.csv')

# Check the label distribution (24 classes in total)
plt.figure(figsize=(16, 10))
sns.countplot(x=train_df['label'])  # recent seaborn versions require the x= keyword
plt.show()

# Preprocessing
# Split into inputs and outputs

# Training dataset
train_df = train_df.astype(np.float32)  # cast to 32-bit floats for the network
x_train = train_df.drop(columns=['label']).values  # everything except the label is x
# (batch size, image height, image width, grayscale channel)
# Height, width, and color channel make each image 3-dimensional
# -> reshape the x data into images of size (28, 28, 1)
x_train = x_train.reshape((-1, 28, 28, 1))
y_train = train_df[['label']].values

# Validation dataset
test_df = test_df.astype(np.float32)
x_test = test_df.drop(columns=['label']).values
x_test = x_test.reshape((-1, 28, 28, 1))
y_test = test_df[['label']].values

print(x_train.shape, y_train.shape) # (27455, 28, 28, 1) (27455, 1)
print(x_test.shape, y_test.shape) # (7172, 28, 28, 1) (7172, 1)

# Preview the data
index = 1
plt.title(str(y_train[index]))
plt.imshow(x_train[index].reshape((28, 28)), cmap='gray')
plt.show()

# One-hot encode the labels
encoder = OneHotEncoder()
y_train = encoder.fit_transform(y_train).toarray()
y_test = encoder.transform(y_test).toarray()  # reuse the encoder fitted on the training labels

print(y_train.shape) # (27455, 24)

# Normalization
# Divide the image data by 255 to get decimal values between 0 and 1 (floating point 32-bit = float32)
# Done with ImageDataGenerator()
train_image_datagen = ImageDataGenerator(
    rescale=1./255, # normalization
)

# flow feeds the data to the model batch by batch
train_datagen = train_image_datagen.flow(
    x=x_train,
    y=y_train,
    batch_size=256,
    shuffle=True
)

test_image_datagen = ImageDataGenerator(
    rescale=1./255
)

test_datagen = test_image_datagen.flow(
    x=x_test,
    y=y_test,
    batch_size=256,
    shuffle=False # no randomness for validation
)

index = 1

preview_img = train_datagen.__getitem__(0)[0][index]

# __getitem__

preview_label = train_datagen.__getitem__(0)[1][index]

plt.imshow(preview_img.reshape((28, 28)))

plt.title(str(preview_label))

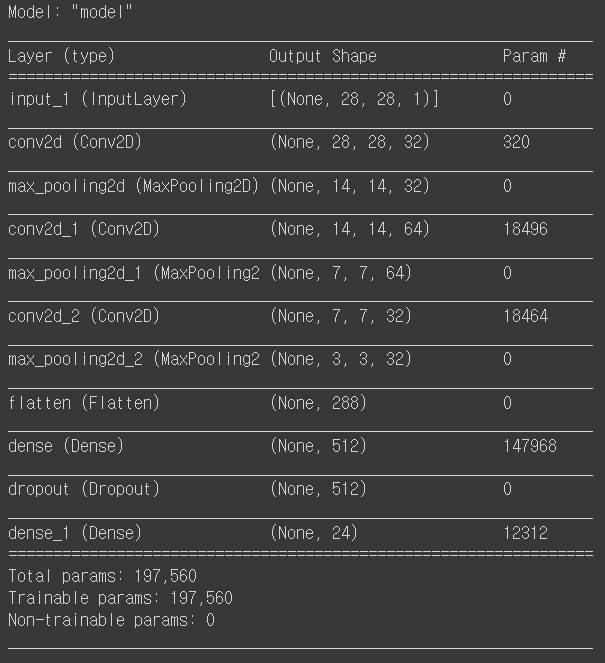

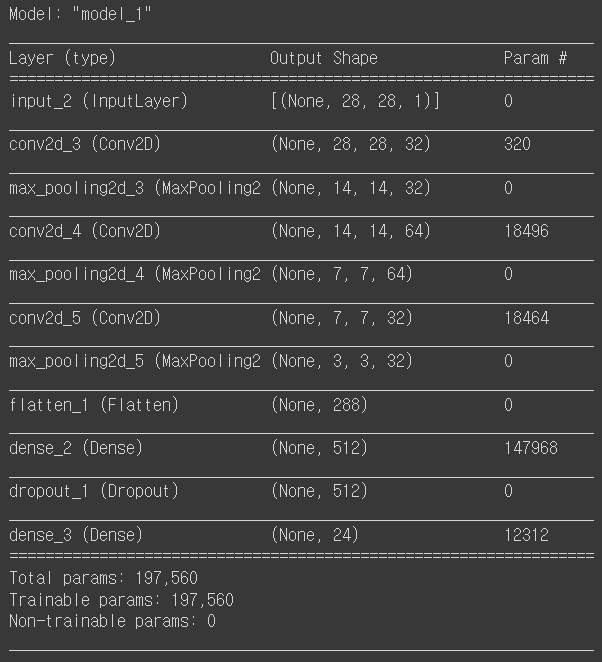

plt.show()⭐⭐ 이어서 네트워크 구성 ⭐⭐

inputs = Input(shape=(28, 28, 1))

# One conv layer plus one pooling layer make up one block
hidden = Conv2D(filters=32, kernel_size=3, strides=1, padding='same', activation='relu')(inputs)
hidden = MaxPooling2D(pool_size=2, strides=2)(hidden)

hidden = Conv2D(filters=64, kernel_size=3, strides=1, padding='same', activation='relu')(hidden)
hidden = MaxPooling2D(pool_size=2, strides=2)(hidden)

hidden = Conv2D(filters=32, kernel_size=3, strides=1, padding='same', activation='relu')(hidden)
hidden = MaxPooling2D(pool_size=2, strides=2)(hidden)

# After the last pooling layer the feature map must be
# flattened to 1-D before it can be fed to a dense layer
hidden = Flatten()(hidden)

# From here on it is the same as a deep neural network (number of nodes, activation function)
hidden = Dense(512, activation='relu')(hidden)
hidden = Dropout(rate=0.3)(hidden)  # randomly drops 30% of the nodes during training

output = Dense(24, activation='softmax')(hidden)

model = Model(inputs=inputs, outputs=output)

# learning_rate replaces the deprecated lr argument
model.compile(loss='categorical_crossentropy', optimizer=Adam(learning_rate=0.001), metrics=['acc'])

model.summary()

➜ Sizes must be integers, so when pooling shrinks 7 -> 3 the leftover row and column are dropped
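The sizes reported by model.summary() can be traced by hand. The sketch below assumes the layer settings used in these notes (padding='same' with stride 1 for Conv2D, pool size 2 with stride 2 and no padding for MaxPooling2D); the helper names are made up.

```python
def conv_same(size):
    # padding='same' with stride 1 keeps the spatial size unchanged
    return size

def pool(size, pool_size=2, stride=2):
    # pooling without padding: integer division drops the remainder
    return (size - pool_size) // stride + 1

size = 28
sizes = []
for _ in range(3):  # three Conv2D + MaxPooling2D blocks
    size = pool(conv_same(size))
    sizes.append(size)

flatten_units = size * size * 32          # the last Conv2D has 32 filters
dense_params = flatten_units * 512 + 512  # weights + biases of Dense(512)

print(sizes, flatten_units, dense_params)  # [14, 7, 3] 288 147968
```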

# Train the model
history = model.fit(
    train_datagen,
    validation_data=test_datagen, # with validation data, the model is validated automatically at the end of every epoch
    epochs=20 # note the plural: epochs
)

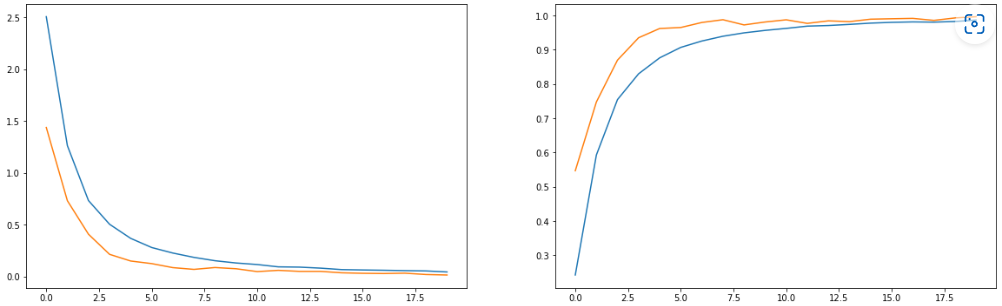

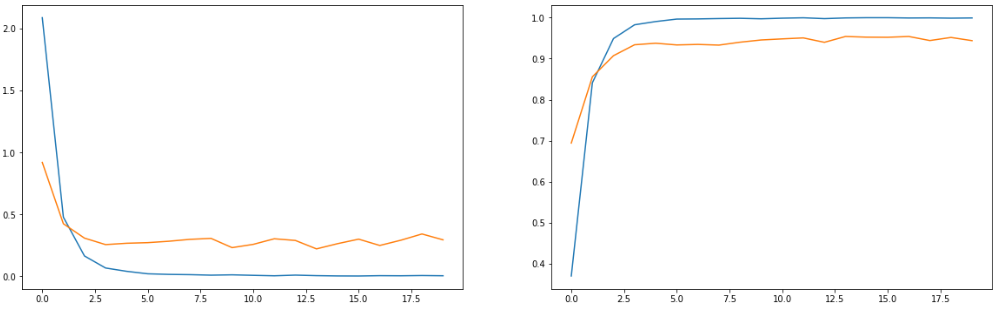

# Plot the training results
fig, axes = plt.subplots(1, 2, figsize=(20, 6))

axes[0].plot(history.history['loss'])
axes[0].plot(history.history['val_loss'])

axes[1].plot(history.history['acc'])
axes[1].plot(history.history['val_acc'])

⭐ Trying out image augmentation (data augmentation) ⭐

# ImageDataGenerator makes image augmentation easy
train_image_datagen = ImageDataGenerator(
    rescale=1./255, # normalization (previously this was the only option used)
    rotation_range=10, # randomly rotate the image (in degrees, 0-180)
    zoom_range=0.1, # randomly zoom the image (fraction)
    width_shift_range=0.1, # randomly shift the image horizontally (fraction of the width)
    height_shift_range=0.1, # randomly shift the image vertically (fraction of the height)
)

train_datagen = train_image_datagen.flow(
    x=x_train,
    y=y_train,
    batch_size=256,
    shuffle=True
)

# Validation/test data does not need augmentation; with it the result would
# change on every evaluation and lose its objectivity
test_image_datagen = ImageDataGenerator(
    rescale=1./255
)

test_datagen = test_image_datagen.flow(
    x=x_test,
    y=y_test,
    batch_size=256,
    shuffle=False
)

index = 1

# Fetch the first batch once so the image and label belong together
batch_x, batch_y = train_datagen[0]
preview_img = batch_x[index]
preview_label = batch_y[index]

plt.imshow(preview_img.reshape((28, 28)))
plt.title(str(preview_label))
plt.show()

# Same network as before, now trained on the augmented data
inputs = Input(shape=(28, 28, 1))

hidden = Conv2D(filters=32, kernel_size=3, strides=1, padding='same', activation='relu')(inputs)
hidden = MaxPooling2D(pool_size=2, strides=2)(hidden)

hidden = Conv2D(filters=64, kernel_size=3, strides=1, padding='same', activation='relu')(hidden)
hidden = MaxPooling2D(pool_size=2, strides=2)(hidden)

hidden = Conv2D(filters=32, kernel_size=3, strides=1, padding='same', activation='relu')(hidden)
hidden = MaxPooling2D(pool_size=2, strides=2)(hidden)

hidden = Flatten()(hidden)

hidden = Dense(512, activation='relu')(hidden)
hidden = Dropout(rate=0.3)(hidden)

output = Dense(24, activation='softmax')(hidden)

model = Model(inputs=inputs, outputs=output)

# learning_rate replaces the deprecated lr argument
model.compile(loss='categorical_crossentropy', optimizer=Adam(learning_rate=0.001), metrics=['acc'])

model.summary()

history = model.fit(
    train_datagen,
    validation_data=test_datagen,
    epochs=20
)

fig, axes = plt.subplots(1, 2, figsize=(20, 6))

axes[0].plot(history.history['loss'])
axes[0].plot(history.history['val_loss'])

axes[1].plot(history.history['acc'])
axes[1].plot(history.history['val_acc'])
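As a rough picture of what a setting like width_shift_range=0.1 means for a 28-pixel-wide image, here is a simplified NumPy sketch of a random horizontal shift. It is an assumption-laden stand-in, not Keras's implementation: it moves by whole pixels and fills the gap with zeros, whereas ImageDataGenerator interpolates and by default fills with the nearest pixel values. The function random_shift is made up for this example.

```python
import numpy as np

def random_shift(image, max_fraction, rng):
    """Shift a (height, width) image horizontally by up to max_fraction of its width."""
    h, w = image.shape
    max_px = int(max_fraction * w)               # 0.1 * 28 -> at most 2 whole pixels
    dx = int(rng.integers(-max_px, max_px + 1))  # negative = left, positive = right
    shifted = np.zeros_like(image)
    if dx > 0:
        shifted[:, dx:] = image[:, :w - dx]
    elif dx < 0:
        shifted[:, :w + dx] = image[:, -dx:]
    else:
        shifted[:] = image
    return shifted, dx

rng = np.random.default_rng(0)
image = np.zeros((28, 28))
image[14, 10] = 1.0  # a single bright pixel at column 10

shifted, dx = random_shift(image, 0.1, rng)
print(dx, np.argwhere(shifted == 1.0))
```

Each call produces a slightly different copy of the same image, which is why augmentation is applied to the training data only.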